1. Introduction

Many complex real world problems can be formulated as combinatorial optimization (CO) problems. Technically, CO problems require a proper solution from a discrete finite set of feasible solutions in order to simultaneously achieve the minimization (or maximization) of a cost function and the satisfaction of the problem’s given constraints. One such problem is finding the shortest path (or a path “close” to the shortest with respect to some appropriate metric) for a global positioning system (GPS) in a short period of time. The above real-world problem can be perfectly modeled by the travelling salesman problem (TSP).

The travelling salesman problem (TSP) is one of the most widely studied combinatorial optimization problems. Solving the TSP means finding the minimum-cost route along which the salesman (the person or entity who travels along a route containing many nodes) starts from a point of origin, passes through every given node exactly once, and returns to the origin. The first use of the term “travelling salesman problem” appeared around 1931–1932. Remarkably, a century earlier, in 1832, a book was printed in Germany [1], which, although dedicated to other issues, in the last chapter deals with the essence of the TSP: “With a suitable choice and route planning, you can often save so much time [making] suggestions [...]. The most important aspect is to cover as many locations [as possible], without visiting a location [a] second time.” In that book, the TSP is expressed for the first time using some examples of routes through Germany and Switzerland. However, an in-depth study of the problem is not attempted in this book. The TSP was expressed mathematically for the first time in the 19th century by Hamilton and Kirkman [1]. A cycle in a graph is a closed path that starts and ends at the same node and visits no other node more than once. A cycle containing all vertices of the graph is called Hamiltonian.

In short, the TSP is the problem of finding the shortest Hamiltonian cycle. The Hamiltonian cycle problem, i.e., deciding whether a graph has a Hamiltonian cycle, is reducible to the TSP. One can see this by assigning zero length to the edges of the graph and adding a new edge of length one for each missing edge. If the optimal TSP tour of the resulting complete graph has length zero, then the original graph contains a Hamiltonian cycle; if it has positive length, then the original graph contains no Hamiltonian cycle [2]. The TSP is NP-hard and is of great significance in various fields, such as operations research and theoretical computer science. In practice, the TSP amounts to finding the best way to visit all the cities and return to the starting point while minimizing the cost of the tour.
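The reduction described above can be illustrated with a small Python sketch. The function names and the two example graphs are hypothetical, and the brute-force solver is only meant for very small graphs; it is not a practical TSP method.

```python
from itertools import permutations

def tsp_brute_force(weights):
    """Return the minimum tour cost over all Hamiltonian cycles (tiny n only)."""
    n = len(weights)
    best = float("inf")
    for perm in permutations(range(1, n)):
        tour = (0,) + perm  # fix node 0 as the start to avoid counting rotations
        cost = sum(weights[tour[k]][tour[(k + 1) % n]] for k in range(n))
        best = min(best, cost)
    return best

def has_hamiltonian_cycle(n, edges):
    """Decide Hamiltonicity via the reduction: existing edges cost 0, missing edges cost 1."""
    edge_set = {frozenset(e) for e in edges}
    weights = [[0 if frozenset((i, j)) in edge_set else 1 for j in range(n)]
               for i in range(n)]
    return tsp_brute_force(weights) == 0

square = [(0, 1), (1, 2), (2, 3), (3, 0)]  # a 4-cycle, which is Hamiltonian
star = [(0, 1), (0, 2), (0, 3)]            # a star, which has no Hamiltonian cycle
print(has_hamiltonian_cycle(4, square), has_hamiltonian_cycle(4, star))
```

A zero-cost optimal tour uses only original edges, which is exactly a Hamiltonian cycle of the input graph.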

Typically, the TSP is represented by a graph. Specifically, the problem is stated in terms of a complete graph $G = (V, A)$, in which $V$ is the set of nodes and $A$ is the set of the directed edges or arcs. Each arc $(i, j)$ is associated with a weight $c_{ij}$ representing the cost (or the distance) of moving from node $i$ to node $j$. If $c_{ij}$ is equal to $c_{ji}$ for every pair of nodes, the TSP is symmetric (sTSP); otherwise, it is called asymmetric (aTSP). The fact that the TSP is NP-hard implies that there is no known polynomial-time algorithm that finds an optimal solution for every problem instance, regardless of its size [3].
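As a minimal sketch of this graph formulation, the following Python fragment stores the weight of the arc from node i to node j in `cost[i][j]`, evaluates the cost of a closed tour, and checks whether an instance is symmetric. The two small matrices and the function names are illustrative assumptions, not data from this paper.

```python
def tour_cost(cost, tour):
    """Sum the arc weights along the tour, including the arc back to the start."""
    n = len(tour)
    return sum(cost[tour[k]][tour[(k + 1) % n]] for k in range(n))

def is_symmetric(cost):
    """An instance is symmetric when both directions of every arc have equal weight."""
    n = len(cost)
    return all(cost[i][j] == cost[j][i] for i in range(n) for j in range(i + 1, n))

sym = [[0, 2, 9], [2, 0, 6], [9, 6, 0]]    # symmetric instance (sTSP)
asym = [[0, 2, 9], [1, 0, 6], [4, 7, 0]]   # asymmetric instance (aTSP)

print(is_symmetric(sym), is_symmetric(asym))
print(tour_cost(asym, [0, 1, 2]), tour_cost(asym, [0, 2, 1]))
```

In the asymmetric case, traversing the same cycle in the opposite direction generally changes its cost, which is why the two variants are treated separately.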

Real-world problems, such as those related to GPS, can be formulated as instances of the TSP. This class of routing problems requires good solutions computed in a short amount of time. In order to improve the computational time, it is common practice to sacrifice some of the solution’s quality by adopting heuristic and metaheuristic approaches [4,5,6]. Heuristics are fast approximate computational methods divided into construction and improvement heuristics. Construction heuristics are used to build feasible initial solutions, and improvement heuristics are applied to achieve better solutions. It is customary to apply improvement heuristics iteratively. Metaheuristics are general optimization frameworks whose individual components can be appropriately modified in order to generate efficient methods for solving specific classes of optimization problems.

The main contribution of this paper is the application of a novel extension of the general variable neighborhood search (GVNS for short) metaheuristic to a GPS problem. We achieve efficient solutions in a short period of time for a GPS application used for garbage trucks, which is modeled as an instance of the TSP. In our experiments, the proposed method produced optimal or near-optimal solutions for a real-life routing application. GPS applications typically use the nearest neighbor (NN) heuristic or one of its variations in order to achieve good results for the routing section. The routing section is a critical part of a typical GPS application because, if the provided routes are optimal or near-optimal, then the end result will also be near-optimal. The routing solutions produced by the GVNS were compared to the ones obtained by the well-known NN algorithm and some of its most widely used variations. The computational results reveal that GVNS, with either the first or the best improvement strategy, provides efficient routes that are either optimal or near-optimal and outperforms by a wide margin classic, widely used methods, such as NN and its modifications. This novel GVNS is a quantum-inspired extension of the conventional GVNS in which the shaking function is based on complex unit vectors.

This paper is organized as follows. In Section 2 we present related works, in Section 3 we explain metaheuristics and the variable neighborhood search, and we describe our algorithm in detail. A GPS application and the specific problem we tackle with our algorithm are presented in Section 4. In Section 5, the experimental results of our implementation are presented in a series of tables and figures that demonstrate that our method outperforms standard approaches like the NN algorithm. Finally, conclusions and ideas for future work are described in Section 6.

2. Related Work

The research community has shown great interest in solving tangible, real-world problems via methods applicable to CO problems. Recently, many authors have been actively trying to enhance conventional optimization methods by introducing principles and techniques originating from unconventional methods of computation in the hope that they would prove superior to traditional approaches. For example, Sandip et al. [7] proposed several novel techniques, which they called quantum-inspired ant colony optimization, quantum-inspired differential evolution, and quantum-inspired particle swarm optimization, for multi-level color image thresholding. These techniques find optimal threshold values at different levels of thresholding for color images.

A new quantum-inspired social evolution (QSE) algorithm was proposed by Pavithr and Gursaran, who hybridized a well-known social evolution algorithm with an emerging quantum-inspired evolutionary one. The proposed QSE algorithm was applied to the 0–1 knapsack problem, and its performance was compared to various evolutionary, swarm, and quantum-inspired evolutionary algorithmic variants. The authors claim that the performance of the QSE algorithm is better than or at least comparable to the different evolutionary algorithmic variants it was tested against [8].

Wei Fang et al. proposed a decentralized form of quantum-inspired particle swarm optimization with a cellular structured population for maintaining population diversity and balancing global and local search [9]. Zheng et al. conducted an interesting study by applying a novel hybrid quantum-inspired evolutionary algorithm to a permutation flow-shop scheduling problem. They proposed a simple representation method for the determination of job sequence in the permutation flow-shop scheduling problem based on the probability amplitude of qubits [10].

Lu et al. designed a quantum-inspired space search algorithm in order to solve numerical optimization problems. In their algorithm, the feasible solution is decomposed into regions in terms of quantum representation. The search progresses from one generation to the next, while the quantum bits evolve gradually to increase the probability of region selection [11]. Wu et al. [12] proposed a novel approach using a quantum-inspired algorithm based on game-theoretic principles. In particular, they reduced the problem they studied to choosing strategies in evolutionary games. Quantum games and their strategies seem very promising, offering enhanced capabilities over classic ones [13].

Solutions based on variable neighborhood search (VNS) have been applied to route planning problems. Sze et al. proposed a hybrid adaptive variable neighborhood search algorithm for solving the capacitated vehicle routing problem (VRP) [14]. A two-level VNS heuristic was developed by Defryn and Sorensen in order to tackle the clustered VRP [15]. In [16], a VNS approach for the solution of the recently introduced swap-body VRP is proposed. Curtin et al. made an extensive comparative study of well-known methods and ready-to-use software, and they concluded that no software or classic method can guarantee an optimal solution to TSP instances that model GIS problems with more than 25 nodes [17]. Papalitsas et al. proposed a GVNS approach for the TSP with time windows [4] and a quantum-inspired GVNS (qGVNS) for solving the TSP with time windows [18].

Moreover, the proposed method can also be applied to other classes of real-world problems, such as the optimization of localization in hospitals, smart cities, and smart parking systems. Tsiropoulou et al. applied RFID technologies to tag-to-tag communication paradigms in order to achieve improved energy efficiency and operational effectiveness [19]. Liebig et al. presented a system for trip planning that takes future traffic hazards into account [20]. Specifically, this system estimates traffic flow in areas with low sensor coverage by using Gaussian process regression. Many studies also deal with the optimization of localization and positioning of doctors and nurses in hospitals and health care organizations [21,22].

In this work we demonstrate experimentally that, for small problems, our method achieves the optimal value; for larger problems, it achieves a close-to-optimal solution with a deviation of 1–3%.

3. Novel Variable Neighborhood Search

In this section, we describe the proposed version of the VNS method. For completeness, we state the necessary background notions and formal definitions (such as those of metaheuristics and VNS).

3.1. Metaheuristics

A metaheuristic is a high-level heuristic, designed to find, create, or select a lower-level heuristic (for example, a local search algorithm), which can provide an adequate solution to an optimization problem. It is particularly useful for instances with missing or incomplete information, or when the computing capacity is limited. According to the literature on metaheuristic optimization [23], the word “metaheuristics” was devised and proposed by Glover. Metaheuristic algorithms make few assumptions about the optimization problem to be solved and thus can be used for a wide variety of problems. Unlike exact methods, however, metaheuristic procedures do not guarantee a globally optimal solution for each category of problems [24].

Many metaheuristic algorithms apply some form of stochastic optimization. This implies that the generated solution depends on a set of random variables. By searching in a large set of feasible solutions, the metaheuristic procedures can often find good solutions with less computational effort than exact algorithms, iterative methods, or simple heuristic procedures. Therefore, metaheuristic procedures are useful approaches for optimization problems in many practical situations.

3.2. Variable Neighborhood Search (VNS)

VNS is a metaheuristic for solving combinatorial and global optimization problems, proposed by Mladenovic and Hansen [25,26]. The main idea of this framework is the systematic neighborhood change in order to achieve an optimal (or a close-to-optimal) solution [27]. VNS and its extensions have proven their efficiency in solving many combinatorial and global optimization problems [28].

Each VNS heuristic consists of three parts. The first one is a shaking procedure (diversification phase) used to escape local optimal solutions. The next one is the neighborhood change move, in which the following neighborhood structure that will be searched is determined; during this part, an approval or rejection criterion is also applied on the last solution found. The third part is the improvement phase (intensification) achieved through the exploration of neighborhood structures through the application of different local search moves. Variable neighborhood descent (VND) is a method in which the neighborhood change procedure is performed deterministically.

GVNS is a VNS variant where the VND method is used as the improvement procedure. GVNS has been successfully tested in many applications, as several recent works have demonstrated [29,30].

3.3. Description of Our Algorithm

As does the original GVNS, our version of GVNS consists of a VND local search, a diversification procedure, and a neighborhood change step. In our method, the pipe-VND (exploitation in the same neighborhood while improvements are also being made) is used during the improvement phase. During the improvement phase of the pipe-VND, two classic local search strategies are applied: the relocate and the 2-opt. In the relocate, the solutions are obtained by removing a node and inserting it in a different position of the current route. In the 2-opt, the solutions are obtained by breaking two edges and reconnecting them in a different order.
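The two local search moves can be sketched in Python as follows. This is only an illustration of the moves as described above, not the Fortran 90 code used in the experiments; the function names, the position-based indexing, and the four-point example are assumptions.

```python
import math

def tour_cost(dist, tour):
    """Total length of the closed tour."""
    n = len(tour)
    return sum(dist[tour[k]][tour[(k + 1) % n]] for k in range(n))

def two_opt_move(tour, i, j):
    """Break the edges entering position i and leaving position j, then
    reconnect by reversing the segment tour[i..j]."""
    return tour[:i] + tour[i:j + 1][::-1] + tour[j + 1:]

def relocate_move(tour, i, j):
    """Remove the node at position i and reinsert it at position j."""
    rest = tour[:i] + tour[i + 1:]
    return rest[:j] + [tour[i]] + rest[j:]

# Four corners of a unit square; the tour 0-2-1-3 crosses itself.
points = [(0, 0), (1, 0), (1, 1), (0, 1)]
dist = [[math.dist(p, q) for q in points] for p in points]
crossing = [0, 2, 1, 3]
uncrossed = two_opt_move(crossing, 1, 2)

print(uncrossed, tour_cost(dist, crossing), tour_cost(dist, uncrossed))
print(relocate_move([0, 1, 2, 3, 4], 1, 3))
```

In the square example, a single 2-opt move removes the crossing and shortens the tour, which is the typical effect of this neighborhood on Euclidean instances.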

The biggest difference between our approach and the classic GVNS is in the diversification phase. The main use of a shaking function is to resolve local minima traps within a VNS procedure. In our approach, perturbation is achieved by adopting techniques from the field of quantum computation.

Quantum-inspired procedures are not actual quantum algorithms designed to run on future quantum computers, but conventional, classical algorithms that utilize principles and ideas from the field of quantum computing. Quantum computing was envisioned by Feynman [31,32], who was the first to observe that it is not possible to efficiently simulate an actual quantum system using a classical computer. More in-depth information regarding quantum computation and its principles can be found in [33,34].

In our case, during each shaking call, a simulated quantum n-qubit register generates a complex n-dimensional unit vector. A quantum register is the quantum analogue of a classical processor register. The dimension n of the complex unit vector is greater than or equal to the dimension of the problem. Our algorithm takes as input the complex n-dimensional vector and produces a real n-dimensional vector. The i-th component, $r_i$, of the real vector is equal to the modulus squared of the i-th component of the complex vector. Obviously, the components of the real vector are real numbers in the interval $[0, 1]$.

Each node of the current solution is associated with precisely one of the components of the real n-dimensional vector. In effect, the vector components are used as a flag for each node in the current solution. Under this correspondence between vector components and nodes, sorting the components of the real vector induces an identical ordering among the nodes in the solution. Thus, the reordered route produced by this shaking move drives our exploration effort into another region of the search space.
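A minimal Python sketch of such a shaking move is given below. Several details are assumptions made for illustration only: the complex vector is obtained by normalizing independent Gaussian samples, the vector dimension equals the number of non-depot nodes, the depot stays in the first position, and the components are sorted in ascending order.

```python
import random

def random_complex_unit_vector(n, rng):
    """Sample a complex vector and normalize it to unit length (a simulated register state)."""
    raw = [complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(n)]
    norm = sum(abs(z) ** 2 for z in raw) ** 0.5
    return [z / norm for z in raw]

def quantum_inspired_shake(tour, rng):
    """Reorder the non-depot nodes by the squared moduli of a random complex unit vector."""
    depot, rest = tour[0], tour[1:]
    amplitudes = random_complex_unit_vector(len(rest), rng)
    keys = [abs(z) ** 2 for z in amplitudes]  # real values in [0, 1] that sum to 1
    order = sorted(range(len(rest)), key=lambda k: keys[k])
    return [depot] + [rest[k] for k in order]

rng = random.Random(0)
tour = list(range(8))
print(quantum_inspired_shake(tour, rng))
```

Because the squared moduli of a unit vector sum to one, each key lies in the unit interval, and sorting the keys yields a new permutation of the nodes.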

At this point, it should be mentioned that the NN heuristic is used in order to produce an initial feasible solution (the first node is set as a depot). From an algorithmic perspective, the procedure is summarized in the next pseudocode fragment [35]. The solution method is provided in Algorithm 1:

Algorithm 1: Pseudocode of the novel general variable neighborhood search (GVNS) [pseudocode figure not available]
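As a rough illustration of how the components described in this section could fit together, the following Python sketch combines a pipe-VND over the relocate and 2-opt neighborhoods with a quantum-inspired shaking step. It is not the authors' Fortran 90 implementation. The iteration budget (instead of a time limit), the first-improvement acceptance rule, the Gaussian sampling of the complex vector, and all function names are assumptions.

```python
import math
import random

def tour_cost(dist, tour):
    n = len(tour)
    return sum(dist[tour[k]][tour[(k + 1) % n]] for k in range(n))

def two_opt_neighbors(tour):
    """All tours obtained by reversing a segment that excludes the depot."""
    n = len(tour)
    for i in range(1, n - 1):
        for j in range(i + 1, n):
            yield tour[:i] + tour[i:j + 1][::-1] + tour[j + 1:]

def relocate_neighbors(tour):
    """All tours obtained by moving one non-depot node to another position."""
    n = len(tour)
    for i in range(1, n):
        rest = tour[:i] + tour[i + 1:]
        for j in range(1, n):
            if j != i:
                yield rest[:j] + [tour[i]] + rest[j:]

def pipe_vnd(dist, tour):
    """Stay in the same neighborhood while it improves, then move to the next one."""
    best, best_cost = tour, tour_cost(dist, tour)
    for neighbors in (relocate_neighbors, two_opt_neighbors):
        improved = True
        while improved:
            improved = False
            for cand in neighbors(best):
                c = tour_cost(dist, cand)
                if c < best_cost - 1e-12:
                    best, best_cost, improved = cand, c, True
                    break  # first improvement: restart the scan from the new tour
    return best, best_cost

def shake(tour, rng):
    """Reorder non-depot nodes by the squared moduli of a random complex unit vector."""
    depot, rest = tour[0], tour[1:]
    raw = [complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in rest]
    norm = math.sqrt(sum(abs(z) ** 2 for z in raw))
    keys = [abs(z / norm) ** 2 for z in raw]
    order = sorted(range(len(rest)), key=keys.__getitem__)
    return [depot] + [rest[k] for k in order]

def gvns(dist, start, iterations=50, seed=0):
    rng = random.Random(seed)
    best, best_cost = pipe_vnd(dist, list(start))
    for _ in range(iterations):
        cand, cand_cost = pipe_vnd(dist, shake(best, rng))
        if cand_cost < best_cost:  # neighborhood change: keep only improving tours
            best, best_cost = cand, cand_cost
    return best, best_cost

# Eight points on a circle, visited in a scrambled order.
points = [(math.cos(2 * math.pi * k / 8), math.sin(2 * math.pi * k / 8)) for k in range(8)]
dist = [[math.dist(p, q) for q in points] for p in points]
start = [0, 4, 2, 6, 1, 5, 3, 7]
best, best_cost = gvns(dist, start)
print(best, round(best_cost, 4))
```

The sketch recomputes the full tour cost for every candidate, which keeps it short but is far slower than the incremental cost updates a production implementation would use.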

4. GPS Application for Garbage Trucks Modeled as a Travelling Salesman Problem

An operation of substantial importance for the everyday scheduling of a city’s traffic is the routing of garbage trucks from their depots to every dustbin on their routes and back to their depots. The optimal routes correspond to minimum required transportation time and minimum distance. Finding optimal routes typically proves to be time-consuming, especially in the case of metropolitan cities with very dense road networks. However, by exploiting recent advances from the field of metaheuristics, it is possible to attain efficient, near-optimal solutions in a short amount of time for many practical cases. We take advantage of the performance improvement brought by a novel metaheuristic procedure based on VNS. Our novel GVNS, in combination with the minimal required computational time, can provide a significantly enhanced solution for this kind of problem. The incorporation of the enhanced VNS procedure within the geographic information system (GIS) will lower the system’s response time and provide close-to-optimal solutions.

GIS technology integrates common database operations such as query and statistical analysis with the unique visualization and geographic analysis benefits offered by maps [36,37]. Among other things, a GIS facilitates the modeling of spatial networks (e.g., road networks), offering algorithms to query and analyze them. Spatial networks are modeled with graphs. In the case of road networks, the graph’s arcs correspond to street segments, whereas the nodes correspond to street segment intersections. Each arc has a weight associated with it, representing the cost of traversing it.

A GIS usually provides a number of tools for the analysis of spatial networks. It generally offers tools to find the shortest or minimum-cost route through a network and heuristic procedures to find the most efficient route to a series of locations. Such a problem is typically modeled as an instance of the TSP. Our implementation solves the TSP efficiently, finding near-optimal solutions for a range of small, medium, and large benchmark problems. Distance matrix calculation can be used to calculate distances between pairs of nodes representing origins and destinations, whereas location-allocation functions determine site locations and assign demand to sites. These capabilities of GIS for analyzing spatial networks enable them to be used as decision support systems for the districting and routing of vehicles [38,39].

Routing a garbage truck from its depot to each dustbin and back is modeled as an instance of the travelling salesman problem. The GIS will be used to find the optimal routes corresponding to minimum required transportation time. The GIS can also present the driver with directions corresponding to the routes generated. These directions will be transmitted to the garbage truck. In a real-time system, the time performance of the routing function is of vital significance. Metaheuristics, like the implementation presented here, can meet this requirement.

Our Approach

In this paper, we describe a system offering a solution to the problem of garbage truck routing management. It is based on quantum-inspired metaheuristics applied to the TSP and integrated with GIS/GPS technologies. Our approach is an integrated waste management solution. Based on the functional requirements and some case studies [40], the components of our application are designed and decomposed into subsystems and smaller functional units. Operations and relationships between subsystems are defined for each subsystem:

Bin Sensors: equipment that estimates the waste bin fill level and collects, stores, and transmits bin data. This helps our main system take specific bins into account and exclude empty bins from the final route computation.

Data Gathering from Bins: a unit that communicates with the bin sensors and delivers the collected information to the central system. It can be installed on passing vehicles and consists of three components:

a communicator, which implements the communication with the bin;

a storage procedure, which temporarily stores the data until transferred to the central system;

a transfer procedure, which implements the data transfer to the central system.

All these operations will be applied via the Global System for Mobile Communications (GSM) network.

GPS Navigation Applications Integrated in Trucks: a classic GPS-based navigation application that provides navigation guidance to the truck driver and instructions regarding which bins should be collected.

A Central System: the back-end system of our application. Its main part is data storage: bin data, vehicle data, and all data needed to compute the most efficient routes. Furthermore, the data store keeps any information retrieved from other subsystems, particularly from the data mining subsystem and the routing optimization subsystem. All generated spatial information for the current route is stored locally in a spatial database.

A Map Substructure: a RESTful API based on maps that provides all the required functionality for creating rich web applications based on geographic and descriptive data.

A Data Mining Subsystem: a system mainly used to estimate the fullness of bins when up-to-date information is not available.

A Routing Optimization Structure: Our main contribution is the implementation of efficient routing algorithms for this application. We propose a novel metaheuristic, which provides optimal or close-to-optimal solutions in a short period of time. Our implementation of the routing functionality has an overall advantage over other implementations for two main reasons:

We can compute efficient solutions in a short period of time. This makes the application efficient because we can compute and re-compute, live and continuously, many times for the same route and feed the results to the application.

Our routing algorithm outperforms classic methods, such as the NN, that are used to find routes on GPS, and the solutions for every class of problem are near-optimal.

A real-time system like ours must be able to give prompt replies to such queries because, in these situations, the response time is of vital importance. By using efficient local search structures and the novel GVNS for the problem tour, the metaheuristic algorithm can provide better results.

Most GIS/GPS implementations use either the NN or one of its variations to compute routes. NN is a well-known and widely used construction heuristic in network design problems. Initially, in NN, an arbitrary node is inserted in the route as the starting node; then, iteratively, the unvisited node nearest to the last added node is selected and appended to the route. The procedure terminates when all the nodes have been added to the route. Because of the popularity of NN, when we test the performance of our algorithm, we compare its results both with the optimal solution and with the solutions achieved by NN. This comparison demonstrates that GVNS produces results that, in all cases, are near-optimal and significantly superior to those of the NN algorithm, which is widely used by most GIS/GPS software. As a result of these better routing solutions, the total amount of fuel consumed can be substantially reduced. Thus, our method is environmentally friendly, since fuel consumption has a direct impact on the environment.
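The NN construction heuristic described above can be sketched in a few lines of Python. The function name and the small distance matrix are illustrative assumptions.

```python
def nearest_neighbor(dist, start=0):
    """Build a tour by repeatedly appending the unvisited node nearest to the last one."""
    n = len(dist)
    tour, visited = [start], {start}
    while len(tour) < n:
        last = tour[-1]
        nxt = min((j for j in range(n) if j not in visited),
                  key=lambda j: dist[last][j])
        tour.append(nxt)
        visited.add(nxt)
    return tour

dist = [
    [0, 1, 4, 3],
    [1, 0, 2, 5],
    [4, 2, 0, 1],
    [3, 5, 1, 0],
]
print(nearest_neighbor(dist))
```

The heuristic is greedy: each choice is locally cheapest, but the final edge back to the starting node is forced, which is one reason NN tours can be far from optimal on larger instances.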

5. Experimental Results

This section is devoted to the presentation of the experimental results that showcase the strengths of the novel GVNS. The experimental tests were implemented in Fortran 90 and run on a laptop PC with an Intel Core i7-6700HQ processor at 2.59 GHz and 16 GB of memory. We ran each benchmark at least five times and imposed a limit of 60 s per run. We chose this threshold for two reasons. First, it is adequate to compute an optimal or near-optimal solution, and, second, it is short enough to allow for repeated experiments. We then kept the best solution found and computed the average of all runs in order to derive the final cost. The algorithms were tested on 48 benchmark instances from the TSPLIB. TSPLIB is a library that contains a collection of benchmarks for the TSP. These benchmarks are characterized by their diversity and their variation with respect to the dimension of the problem. It is precisely for these qualities that they are widely used by researchers for comparing results [41].

5.1. Novel GVNS versus Nearest Neighbor

In this work, we propose a novel GVNS version based on quantum computing principles and techniques, and we apply it to a garbage collection application, an actual GPS-based problem. Below we present the computational results of our approach. As already mentioned, NN and its variants are widely used in GPS/GIS applications in order to construct the tour of the underlying graph. In particular, we compare our method against the NN heuristic and two of its most well-known variants: the repeated NN and the improved NN. This section presents the comparative analysis among NN, GVNS, and the optimal value (OV), showing the results in a series of figures and tables. It is important to point out that we chose to examine the NN because of its widespread use in GPS applications.

Table 1 contains the aggregated experimental results. Specifically, it contains the benchmark name, the NN cost, the average for first improvement (FI) for each problem, the gap between GVNS using FI vs. OV, the average for best improvement (BI) for each problem, the gap between GVNS using BI vs. OV, and the OV. Given the outcome $x$, its gap from the optimal value $OV$ is computed by the formula $(OV - x)/OV$, so a tour costlier than the optimum has a negative gap. The gap is widely used in the field of optimization to measure how close to the optimal a particular solution is. The data demonstrate that GVNS is consistently very close to the optimal value both with FI and BI, and outperforms NN in all cases.
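As a small worked example, the following Python fragment computes gaps under the assumption that the gap is defined as (OV − x)/OV, which matches the negative values reported in this section. The tour costs are the eil101 figures quoted in Section 5.2; the function name is hypothetical.

```python
def gap(x, optimal):
    """Relative gap of cost x from the optimal value; negative when x is worse (larger)."""
    return (optimal - x) / optimal

optimal_value = 629  # eil101 optimal tour cost
costs = {
    "improved NN": 823,
    "repeated NN": 746,
    "GVNS (FI)": 649,
    "GVNS (BI)": 647,
}
for method, cost in costs.items():
    print(f"{method}: {gap(cost, optimal_value):.4f}")
```

Under this definition, a gap of zero means the optimal value was reached, and values closer to zero indicate better tours.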

The shaded lines in Table 1 emphasize the superiority of GVNS for certain instances of the benchmarks. Specifically, we highlight the instances where GVNS has a gap of 2% or less from the optimal value. For example, the NN’s gap from the optimal value for att48 is −0.2101, whereas GVNS’s BI search strategy achieves −0.0016, and GVNS using FI achieves −0.0024. Moreover, GVNS achieves the optimal value (0.0000 gap from OV) for bayg29, bays29, eil51, fri26, gr17, gr21, and gr24. Another characteristic case is the benchmark lin105, where NN has a −0.4156 gap from the optimal, while GVNS with the BI strategy achieves −0.0163, and GVNS using FI achieves −0.0159.

5.2. GVNS versus Nearest Neighbor Variants

In addition to the previous setup, we ran additional tests using the two most popular variants of the NN heuristic. These are the repeated NN and the improved NN. The improved NN is a variant of the classic NN in which the starting pair of the route is the shortest edge in the distance matrix [42]. The remaining nodes are added to the route in a way identical to the simple NN method. Repeated or repetitive NN is another modification in which the NN algorithm is applied starting from every node. Finally, the route with the minimum total cost is selected as the best one.
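The two variants can be sketched in Python as follows. This is a minimal illustration of the descriptions above; the function names, the tie-breaking behavior (the first minimum encountered wins), and the small distance matrix are assumptions.

```python
def tour_cost(dist, tour):
    n = len(tour)
    return sum(dist[tour[k]][tour[(k + 1) % n]] for k in range(n))

def nearest_neighbor_from(dist, prefix):
    """Extend a partial route by repeatedly appending the nearest unvisited node."""
    n = len(dist)
    tour, visited = list(prefix), set(prefix)
    while len(tour) < n:
        last = tour[-1]
        nxt = min((j for j in range(n) if j not in visited),
                  key=lambda j: dist[last][j])
        tour.append(nxt)
        visited.add(nxt)
    return tour

def improved_nn(dist):
    """Start from the shortest edge in the distance matrix, then continue as in NN."""
    n = len(dist)
    i, j = min(((a, b) for a in range(n) for b in range(n) if a != b),
               key=lambda e: dist[e[0]][e[1]])
    return nearest_neighbor_from(dist, [i, j])

def repeated_nn(dist):
    """Run NN from every starting node and keep the cheapest tour."""
    n = len(dist)
    return min((nearest_neighbor_from(dist, [s]) for s in range(n)),
               key=lambda t: tour_cost(dist, t))

dist = [
    [0, 1, 4, 3],
    [1, 0, 2, 5],
    [4, 2, 0, 1],
    [3, 5, 1, 0],
]
print(improved_nn(dist), tour_cost(dist, improved_nn(dist)))
print(repeated_nn(dist), tour_cost(dist, repeated_nn(dist)))
```

By construction, the repeated NN is never worse than a single NN run from any fixed starting node, at the price of running the heuristic once per node.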

We compared GVNS with the improved NN and the repeated NN, and the experimental results are contained in Table 2 and Table 3. These tables show the benchmark name, the improved NN tour cost, the repeated NN tour cost, the GVNS using BI tour cost, the GVNS using FI tour cost, and the optimal value (OV). For consistency, we ran the same experiments as before, but instead of the simple NN, we used the improved NN and the repeated NN, and we compared the results with GVNS and the OV. The improved NN and repeated NN achieve better results compared to the simple NN for each benchmark. However, the fact remains that GVNS using FI or using BI still outperforms these improved NN-based methods.

Table 2 and Table 3 confirm that GVNS using either BI or FI outperforms the improved NN and the repeated NN. Let us consider, for example, the benchmark eil101, which consists of 101 nodes. The computational results show that the improved NN has a tour cost of 823, the repeated NN has a tour cost of 746, GVNS with first improvement has 649, and GVNS with best improvement has 647, while the optimal tour cost is 629. It is clear that GVNS is very close to the OV and that the gap between both versions of GVNS and both NN variants is relatively large. Other examples of benchmarks that highlight the superiority of GVNS are bayg29, d198, and ch130.

Table 3 contains computational data that corroborate that GVNS performs better than the improved and the repeated NN. Characteristic examples are gr229, lin105, and pr136, which are benchmark instances of 229, 105, and 136 nodes, respectively. We can therefore conclude that GVNS is always close to the optimal value and clearly outperforms the NN variants.

5.3. Novel GVNS Versus Conventional GVNS

The data in Table 4 reveal that the novel GVNS is indeed an improvement over the classic GVNS. From the data one may conclude that in all cases this new version of GVNS is at least as good as GVNS and in many cases outperforms classical GVNS. This is in accordance with the work in [35], which, through a comparative analysis, also showed that the quantum-inspired GVNS achieves better results than the conventional GVNS.

Table 5 contains the experimental results of the novel GVNS on some asymmetric TSP benchmarks.

In order to further test the feasibility of our method, we applied the novel GVNS to some national TSPs (nTSPs) in order to test the behavior on much bigger instances. Table 6 contains the computational results of the novel GVNS on nTSPs.

Our aim in this paper was the implementation of a method based on a well-known metaheuristic that would be efficient enough to solve real-world problems, such as the garbage collection challenge studied here. We wanted to develop an algorithm that would be able to find near-optimal solutions in a relatively short period of time. To achieve our goals, we chose to implement this novel version of GVNS, since we expected it to outperform the conventional GVNS. This was indeed confirmed experimentally, as the results in Table 4 show. The bulk of our experiments were meant to determine how GVNS fares against well-established methods that are widely applied for GPS, like the NN and its most important variations. Table 1, Table 2 and Table 3 contain experimental evidence suggesting that GVNS outperforms the NN, the repeated NN, and the improved NN heuristics in all cases.

5.4. Graphical Representation of the Results

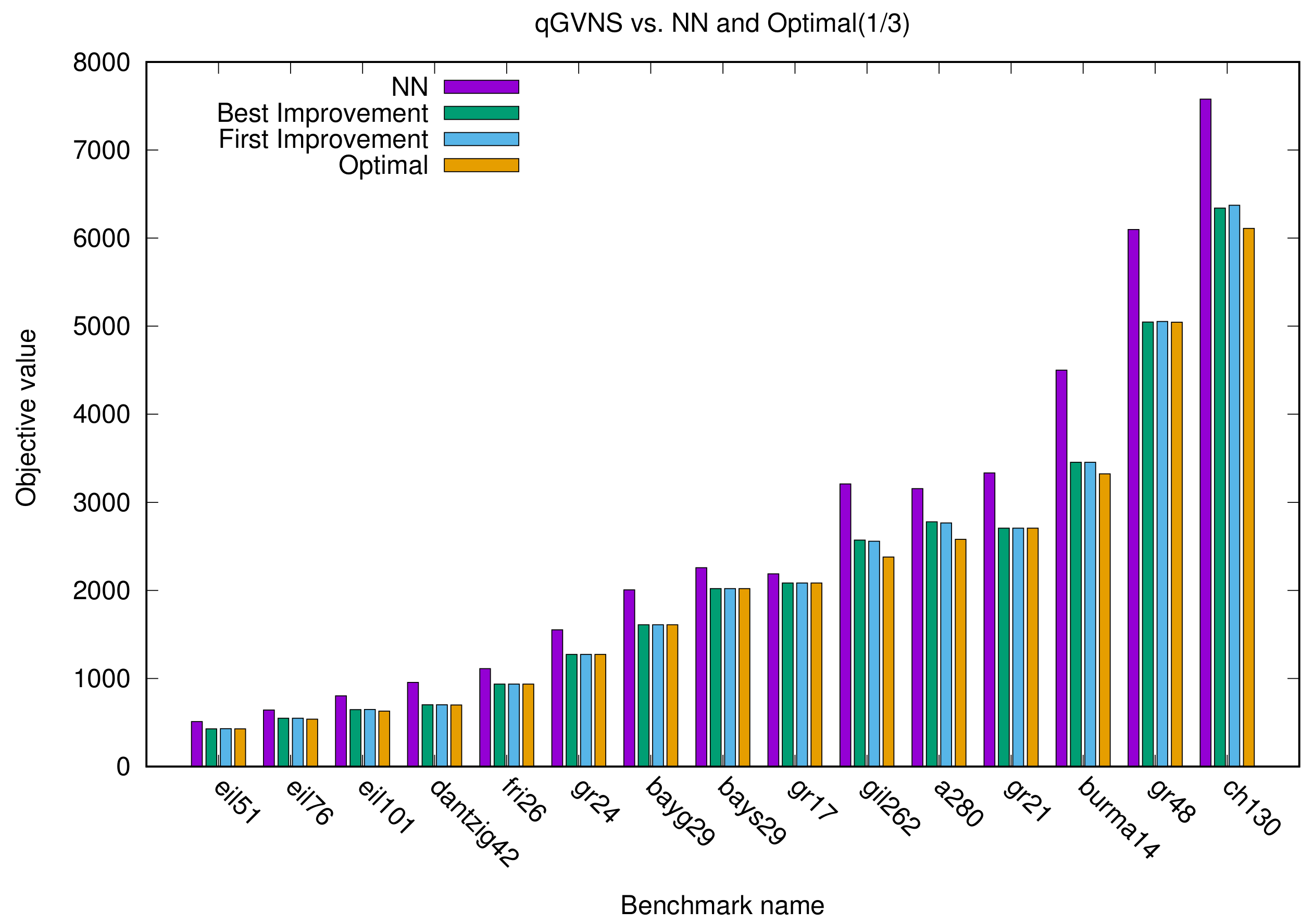

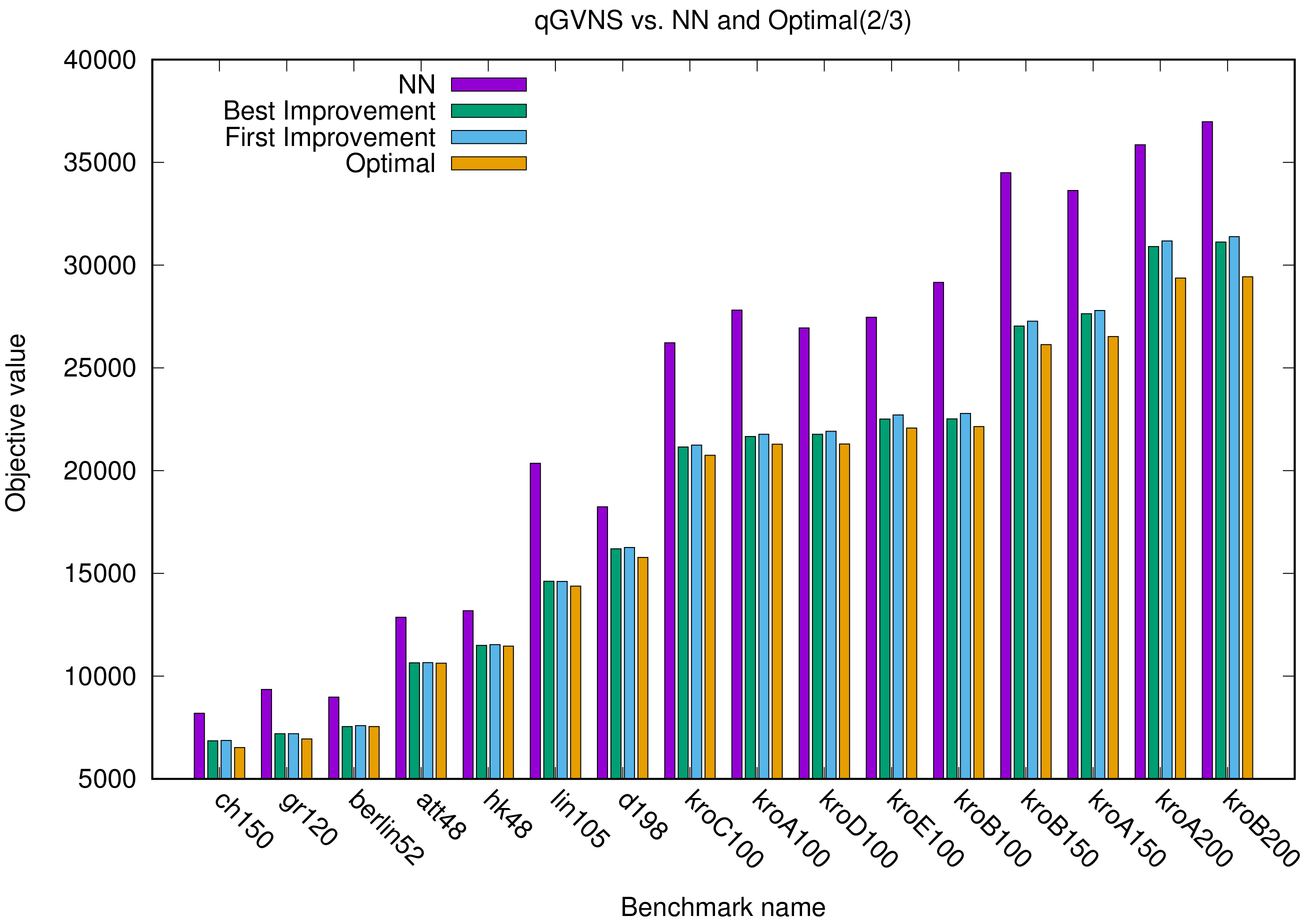

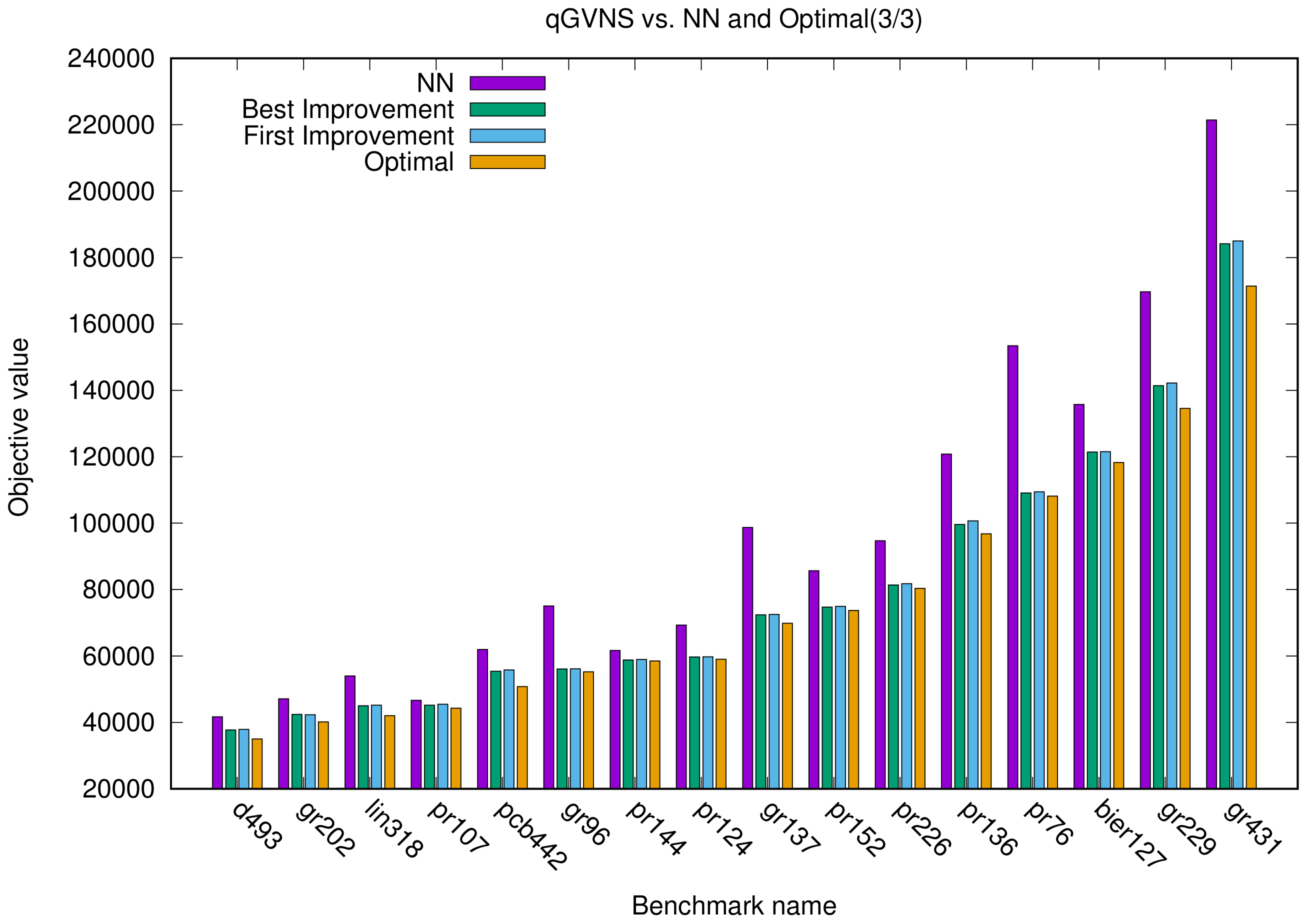

In this section, we present two different sets of figures with three different bar charts for each set. Each figure concerns a different subset of the total experiments, sorted according to the optimal value. In the first set (Figure 1, Figure 2 and Figure 3), each benchmark problem is represented with four different bars, one for each method. Specifically, one represents the NN algorithm, one the optimal value, and another two depict GVNS (best and first improvement). It can be easily seen that our implementation achieves results very close to optimal, unlike the NN algorithm, which does not provide efficient solutions.

A notable example in Figure 1 is ch130. We can see that GVNS (using either FI or BI) is much closer to the optimal value than NN.

It is clear from Figure 2 that, in kroC100, kroA100, kroD100, kroE100, kroB100, kroB150, kroA150, kroA200, and kroB200, the gap between the NN method and both variants of the GVNS is very large. We can also observe some cases in Figure 2 where the GVNS achieves the optimal value. These are berlin52, hk48, and att48.

We observe that, in most benchmarks in Figure 3, GVNS using FI or using BI comes close to the optimal value, while the NN bar is further away from the optimal value. Examples include lin318, pr124, gr137, pr76, and bier127.

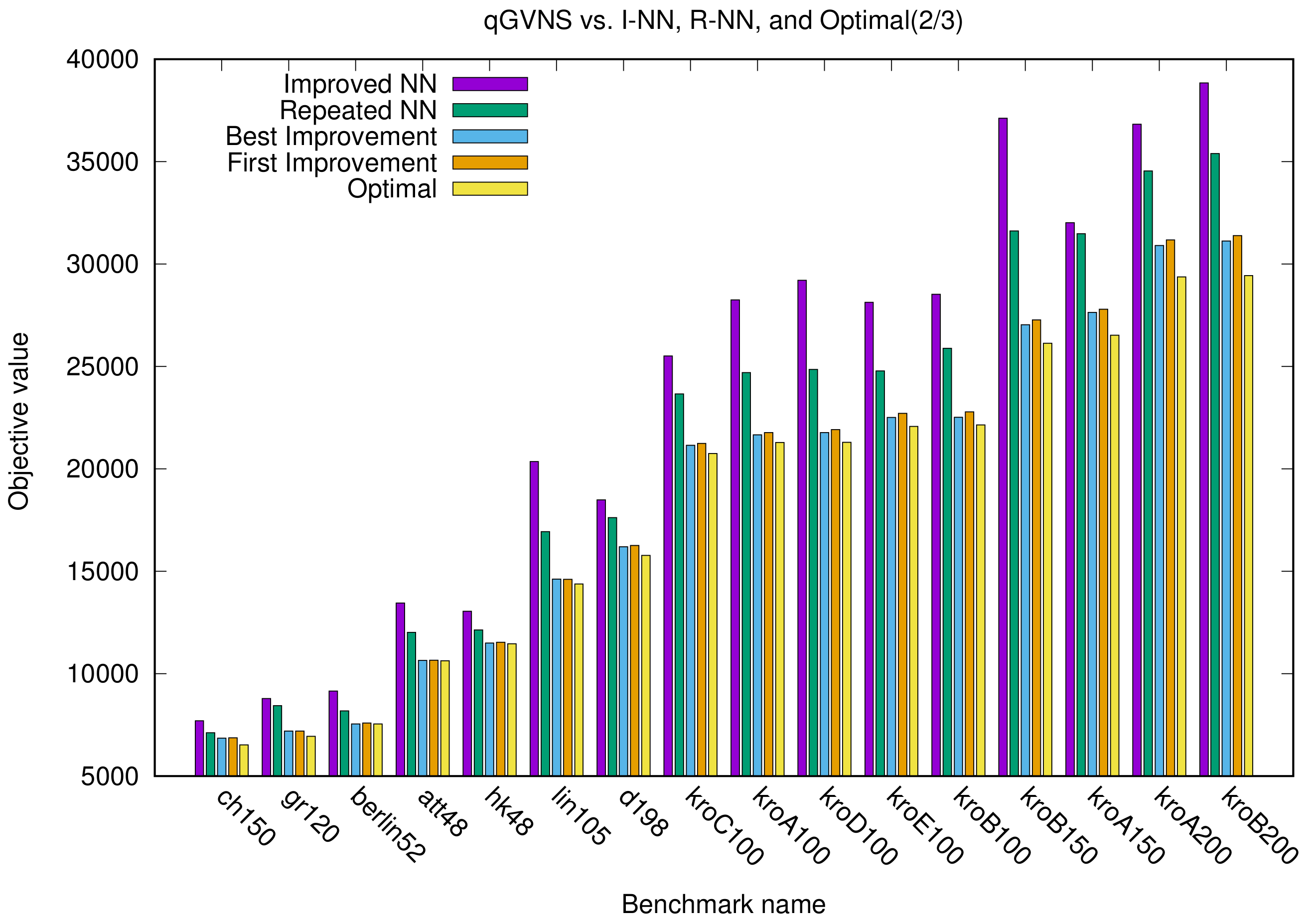

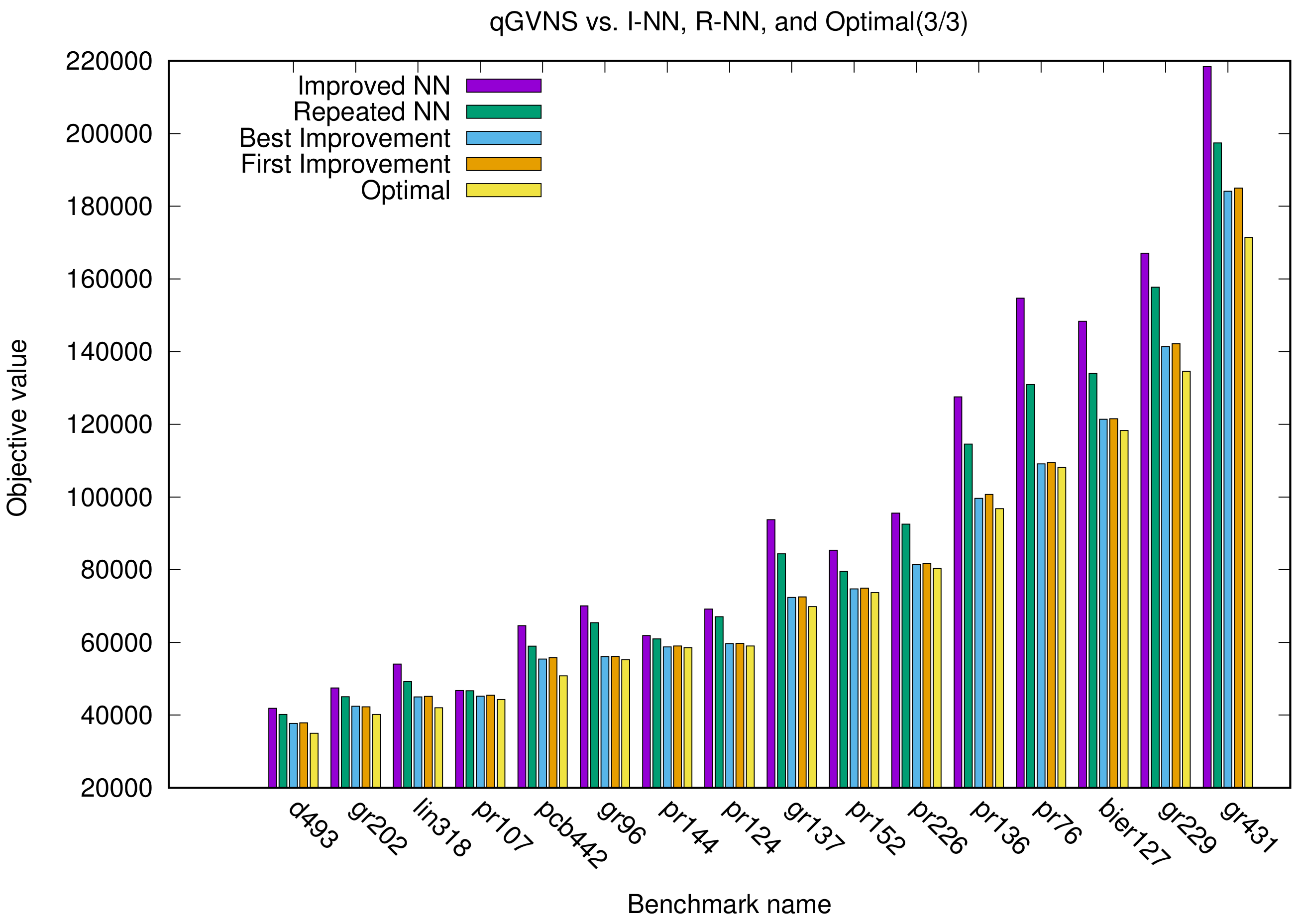

In the next set of figures (i.e., Figure 4, Figure 5 and Figure 6), each benchmark is represented with five different bars: one for the improved NN algorithm, one for the repeated NN algorithm, one for the optimal value, and another two for our implementation (best and first improvement). A close examination reveals that GVNS is once again very close to optimal values, whereas the improved and repeated NN algorithms fail to provide equally good solutions.

Looking at Figure 4, we infer that, when it comes to medium benchmark problems, GVNS closely approximates the optimal value, unlike both variants of NN, which are far from the optimal. We can particularly see this with kroB100, lin105, kroB150, and kroB200.

In general, Figure 4, Figure 5 and Figure 6 show that the improved NN appears to be a better method than repeated NN. However, in all cases, both GVNS variants outperform the two NN variants.

Figure 6 demonstrates once again the superiority of GVNS. Let us consider benchmark pr76, where GVNS reaches the optimal value, while both the repeated and improved NN have a much lower performance. In addition, in Figure 4, Figure 5 and Figure 6, we can see for a different set of benchmarks that GVNS again outperforms the NN variants and is significantly closer to the optimal value in each case.

To sum up, the graphical results allow us to conclude that GVNS with BI or FI produces results that are appreciably close to the OV in most benchmark tests, whereas the NN algorithm is far from being close (for every case). Previously, we provided the analytical results in tabular form. The graphical representation of these results makes it easy to see the efficiency of the proposed algorithm, since one can immediately see that the divergence from optimal is almost negligible.

6. Conclusions and Future Work

This work studied an application of garbage truck routing using a new metaheuristic method based on a novel version of GVNS. We modeled the underlying problem as a TSP instance and went on to solve it using GVNS. Our GVNS algorithm differs from conventional approaches due to its inspiration from principles of quantum computing. Our study was focused on quick and efficient transitions to different areas of the search space. This enabled us to find efficient routes in a short period of time. To assess the efficiency of our approach, we performed extensive experimental tests using well-known benchmarks from TSPLIB. The results were quite encouraging, as they confirmed that the novel GVNS outperforms methods that are widely used in practice, such as NN, repeated NN, and improved NN.

A direction for future work could be the investigation of alternative neighborhood structures and neighborhood change moves in VND (variable neighborhood descent) under the GVNS framework. In the same vein, one could study modifications during the perturbation phase in order to achieve even closer to optimal solutions, particularly on bigger asymmetric benchmarks. Yet another direction could be the use of a multi-improvement strategy [43]. In any event, we plan to apply, adapt if necessary, and assess GVNS on other TSP variants and real-life routing optimization problems.