Microarray images contain noise, which is sometimes considerable. It arises from imperfections in the experiment and in the image digitization process. Reducing noise can help to extract more accurate genetic information and to obtain better compression results. However, denoising-based compression is not lossless, because denoising is effectively an irreversible transform applied before the image is stored. There has been much research on denoising, but most of it focuses on the microarray analysis process rather than on compression.

In 2006, Adjeroh et al. published their approach [7] based on the translation invariant (TI) transform. They argue that wavelet-based denoising techniques that are not translation invariant suffer from an adverse pseudo-Gibbs phenomenon at discontinuities. This is especially important in microarray images since the edge of each spot can be considered a discontinuity. In their work, the original image is shifted horizontally, vertically and diagonally, and each of these shifted images plus the original one is denoised separately. The resulting images are then shifted back and combined to obtain the denoised image. Adjeroh et al. proposed three different approaches for combining the deshifted images: TI-hard, TI-median and TI-median2. The TI-hard method consists of outputting the average value of each pixel in the four deshifted images. The TI-median technique outputs the median instead of the average. The TI-median2 technique works by applying the TI-median technique twice: first, the TI-median is applied on the original image and an auxiliary image is produced; then the TI-median technique is applied again on the auxiliary image to obtain the denoised output image.
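
The shift, denoise, deshift and combine steps described above can be sketched as follows. This is only an illustrative sketch, not the authors' implementation: the one-pixel circular shifts, the helper names (`roll2d`, `ti_combine`) and the toy `pair_threshold` denoiser are assumptions introduced here, and any real wavelet denoiser could be passed in instead.

```python
from statistics import mean, median

def roll2d(img, dy, dx):
    """Circularly shift a 2-D list by dy rows and dx columns (assumed shift model)."""
    h, w = len(img), len(img[0])
    return [[img[(r - dy) % h][(c - dx) % w] for c in range(w)]
            for r in range(h)]

# Original image plus horizontal, vertical and diagonal shifts.
# The shift size of one pixel is an assumption made for this sketch.
SHIFTS = [(0, 0), (0, 1), (1, 0), (1, 1)]

def ti_combine(img, denoise, reducer):
    """Denoise every shifted copy, shift it back, then combine pixel by pixel."""
    h, w = len(img), len(img[0])
    restored = [roll2d(denoise(roll2d(img, dy, dx)), -dy, -dx)
                for dy, dx in SHIFTS]
    return [[reducer([im[r][c] for im in restored]) for c in range(w)]
            for r in range(h)]

def ti_hard(img, denoise):
    """Average of the four deshifted images."""
    return ti_combine(img, denoise, mean)

def ti_median(img, denoise):
    """Median of the four deshifted images."""
    return ti_combine(img, denoise, median)

def ti_median2(img, denoise):
    """TI-median applied to the original, then again to the auxiliary image."""
    return ti_median(ti_median(img, denoise), denoise)

def pair_threshold(img, thresh=5):
    """Toy shift-dependent denoiser (hypothetical): flatten horizontal pixel
    pairs whose values differ by less than thresh."""
    out = [row[:] for row in img]
    for row in out:
        for c in range(0, len(row) - 1, 2):
            if abs(row[c] - row[c + 1]) < thresh:
                row[c] = row[c + 1] = (row[c] + row[c + 1]) / 2
    return out
```

Because the toy denoiser treats pixel pairs at fixed positions, its output depends on how the image is aligned, which is exactly the kind of dependence that combining the deshifted results is meant to smooth out.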

Many more authors have covered microarray image denoising for genetic information extraction, but only some of the most recent and relevant are referenced here. In 2005, Lukac proposed a method [8] based on fuzzy logic and local statistics for noise removal. Also in 2005, Smolka et al. discussed the peer group concept [9] as a means to remove impulsive noise. In 2007, Chen and Duan presented a simple method for denoising microarray images [10] based on comparing the edge features of the red and green channels. In 2010, Zifan et al. designed multiwavelet transformations [11] to denoise images.

2.1.2. Segmentation

Segmentation, also known as spot finding or addressing, consists of determining which of the image pixels belong to spots (i.e., the foreground), as opposed to those that do not (i.e., the background). As will be discussed in Section 2.4, this can be very useful in later stages of the compression process. For example, it is possible to code foreground and background separately or to exploit differences in pixel intensity distributions between the two sets. We discuss here only specific approaches oriented towards image compression. However, for the sake of completeness, some segmentation techniques not directly focused on compression and some general segmentation quality metrics are described at the end of this subsection.

In 2003, Faramarzpour et al. proposed a lossless coder whose segmentation stage consists of two steps [12]. First, spot regions are located by studying the period of the signal obtained from summing the intensity by rows and by columns, and studying its minima. After that, spot centers are estimated based on the region centroid, which is needed for their spiral scanning procedure, as explained in Section 2.4. Simpler versions of this spot region location idea had already been used in microarray image analysis and also in Jörnsten and Yu’s work of 2002 [13], where they proposed a lossy-to-lossless compression scheme. In their technique, a seeded region growing algorithm is used to obtain a coarse mask for the spot pixels, which is then refined.
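
The row and column summation idea behind spot region location can be illustrated with a short sketch. It covers only the projection profiles and their local minima, which mark candidate boundaries between spot regions. The period estimation and centroid steps are omitted, and the function names are hypothetical rather than taken from the cited work.

```python
def projections(img):
    """Sum pixel intensities by rows and by columns of a 2-D list."""
    rows = [sum(r) for r in img]
    cols = [sum(c) for c in zip(*img)]
    return rows, cols

def local_minima(profile):
    """Interior indices lower than the left neighbour and not higher than the
    right one; each marks a candidate boundary between spot regions."""
    return [i for i in range(1, len(profile) - 1)
            if profile[i] < profile[i - 1] and profile[i] <= profile[i + 1]]

# Synthetic 7x7 image with four 2x2 spots separated by dark gaps.
img = [[0] * 7 for _ in range(7)]
for r in (1, 2, 4, 5):
    for c in (1, 2, 4, 5):
        img[r][c] = 100

rows, cols = projections(img)
row_cuts = local_minima(rows)  # [3]: the dark row between the two spot rows
col_cuts = local_minima(cols)  # [3]: the dark column between the two spot columns
```

On real images the profiles are noisy, so some smoothing or a use of the estimated period would likely be needed before the minima are reliable.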

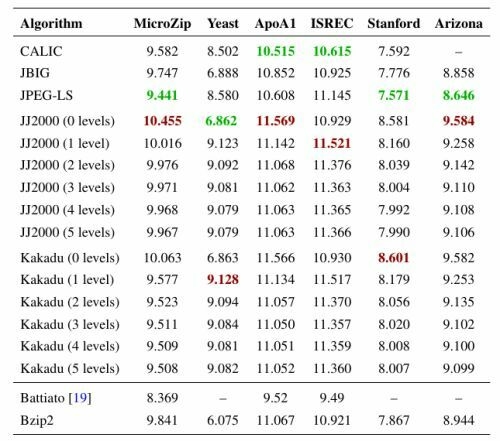

Later, in 2004, Lonardi and Luo presented their MicroZip lossy or lossless compression software [14]. They used a variation of Faramarzpour’s spot region finding idea, but they considered the existence of subgrids, which are located before the spot regions. Four subgrids can be seen in Figure 1, but other images may contain more.

In 2004, Hua et al. proposed a lossy or lossless scheme [15] with a segmentation technique based on the Mann–Whitney U test, which helps in deciding whether two independent sets of samples have equally large values. Once a region containing a spot has been located, a default mask is applied to obtain an initial set of pixels classified as spot pixels, and then the test is applied to add or remove pixels from this mask. This had already been used in microarray image segmentation [16], but the authors proposed a variation that speeds up the algorithm by up to 50 times.
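
For reference, the statistic underlying the Mann–Whitney U test can be computed directly from its definition. The sketch below shows only that statistic, not the mask refinement procedure or the speed-up of the cited scheme, and the function names and sample values are illustrative assumptions.

```python
def mann_whitney_u(xs, ys):
    """U statistic for xs: number of (x, y) pairs with x > y, ties counting 1/2."""
    u = 0.0
    for x in xs:
        for y in ys:
            if x > y:
                u += 1.0
            elif x == y:
                u += 0.5
    return u

def prob_greater(xs, ys):
    """U divided by the number of pairs: an estimate of P(x > y), with ties
    counted as 1/2. Values near 0.5 suggest the two sets are similarly large."""
    return mann_whitney_u(xs, ys) / (len(xs) * len(ys))

# Hypothetical intensities: candidate spot pixels versus background pixels.
spot_pixels = [520, 610, 580]
background_pixels = [90, 120, 105]
u = mann_whitney_u(spot_pixels, background_pixels)  # every spot pixel is brighter
```

A full test would compare U against its null distribution to obtain a significance level. This quadratic-time loop is meant only to make the definition concrete.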

In 2006, Bierman et al. described a purely lossless compression scheme [17] with a simple threshold method for dividing microarray images into low and high intensities. It consists of determining the lowest of the threshold values 2⁸, 2⁹, 2¹⁰ or 2¹¹ such that approximately 90% of the pixels fall below it.
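
The threshold selection rule can be written in a few lines, reading the candidate thresholds as the powers of two from 2^8 to 2^11. In this sketch "approximately 90%" is interpreted as "at least 90% of the pixels strictly below the threshold", and the largest candidate is used as a fallback. Both choices, and the function name, are assumptions rather than details from the cited work.

```python
def power_of_two_threshold(pixels, exponents=(8, 9, 10, 11), fraction=0.9):
    """Return the lowest 2**e such that at least `fraction` of the pixels lie
    strictly below it; fall back to the largest candidate otherwise."""
    n = len(pixels)
    for e in exponents:
        t = 2 ** e
        below = sum(1 for p in pixels if p < t)
        if below >= fraction * n:
            return t
    return 2 ** exponents[-1]

# Hypothetical 16-bit intensities: mostly dark background plus a few bright spots.
pixels = [100] * 95 + [5000] * 5
threshold = power_of_two_threshold(pixels)  # 256, since 95% of pixels are below 2**8
low = [p for p in pixels if p < threshold]
high = [p for p in pixels if p >= threshold]
```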

In 2007, Neekabadi et al. proposed another threshold-based technique for segmentation [18], this time into three subsets: background, edge and spot pixels. Their lossless proposal performs segmentation in two steps. First, they determine the optimal threshold value by minimizing the total standard deviation of pixels above and below it. Then they segment the image into the mentioned subsets. To do so, they first determine the spot pixels by eroding the mask formed with pixels above the selected threshold. Edge pixels are the ones surrounding the spot pixels, and background pixels are all the others.
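
A rough sketch of this two-step segmentation is given below. Several details are assumptions made for illustration, not taken from the cited paper: the "total standard deviation" is read as the sum of the population standard deviations of the two groups, erosion uses a 3x3 structuring element, and edge pixels are taken to be the non-spot pixels in the 8-neighbourhood of a spot pixel.

```python
from statistics import pstdev

OFFSETS = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1)]

def best_threshold(pixels):
    """Threshold minimising pstdev(below) + pstdev(at or above); None if the
    image is constant and no split exists."""
    best_t, best_cost = None, float("inf")
    for t in sorted(set(pixels))[1:]:
        low = [p for p in pixels if p < t]
        high = [p for p in pixels if p >= t]
        cost = pstdev(low) + pstdev(high)
        if cost < best_cost:
            best_t, best_cost = t, cost
    return best_t

def erode(mask):
    """3x3 binary erosion: keep a pixel only if its whole neighbourhood is set."""
    h, w = len(mask), len(mask[0])
    return [[all(0 <= r + dr < h and 0 <= c + dc < w and mask[r + dr][c + dc]
                 for dr, dc in OFFSETS)
             for c in range(w)] for r in range(h)]

def segment(img):
    """Label each pixel 'S' (spot), 'E' (edge) or 'B' (background)."""
    h, w = len(img), len(img[0])
    t = best_threshold([p for row in img for p in row])
    if t is None:
        return [["B"] * w for _ in range(h)]
    spot = erode([[p >= t for p in row] for row in img])

    def near_spot(r, c):
        return any(0 <= r + dr < h and 0 <= c + dc < w and spot[r + dr][c + dc]
                   for dr, dc in OFFSETS)

    return [["S" if spot[r][c] else "E" if near_spot(r, c) else "B"
             for c in range(w)] for r in range(h)]

# Synthetic 7x7 image: a bright 5x5 square on a dark background.
img = [[200 if 1 <= r <= 5 and 1 <= c <= 5 else 10 for c in range(7)]
       for r in range(7)]
labels = segment(img)
```

The exhaustive threshold search is quadratic in the number of distinct intensities, so a practical implementation would more likely work from a histogram.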

Finally, in 2009, Battiato and Rundo published an approach [19] based on Cellular Neural Networks (CNNs). They define two layers for their lossless system, each with as many cells as the image has pixels. The input and state of the first layer are the pixels of the original image. Its output is the input and state of the second layer. By defining the cloning templates that drive the whole CNN dynamic, the second layer tends to its saturation equilibrium state and the resulting output tends to a “local binary image” where spot pixels tend to 1 and background pixels to 0.

Apart from the compression-oriented segmentation techniques already described, many others have been proposed in the more general context of DNA microarray image analysis. Some of the most recent are referenced next. In 2006, Battiato et al. proposed a microarray segmentation pipeline called MISP [20], where they used statistical region merging, γLUT and k-means clustering. In 2007, they improved the pipeline by adding advanced image rotation and gridding modules [21]. These two publications comprehensively describe previous well-known segmentation techniques. In 2008, Battiato et al. proposed a neurofuzzy segmentation strategy based on a Kohonen self-organizing map followed by a fuzzy k-means classifier [22]. In 2010, Karimi et al. described a new approach using an adaptive graph-based method [23]. Uslan et al. in 2010 [24] and Li et al. in 2011 [25] proposed two methods based on fuzzy c-means clustering. From the image-compression point of view, segmentation performance is generally measured by the compression rates obtained after segmenting. However, in the DNA microarray image analysis context, other quality metrics might be applicable. In 2007, Battiato et al. defined a quality measure based on a previous technique for general DNA microarray image quality assessment, and compared the overall segmentation performance of their MISP technique with that of other previous approaches [21]. More information about microarray image quality assessment and information uncertainty can be found in Subsection 2.6.