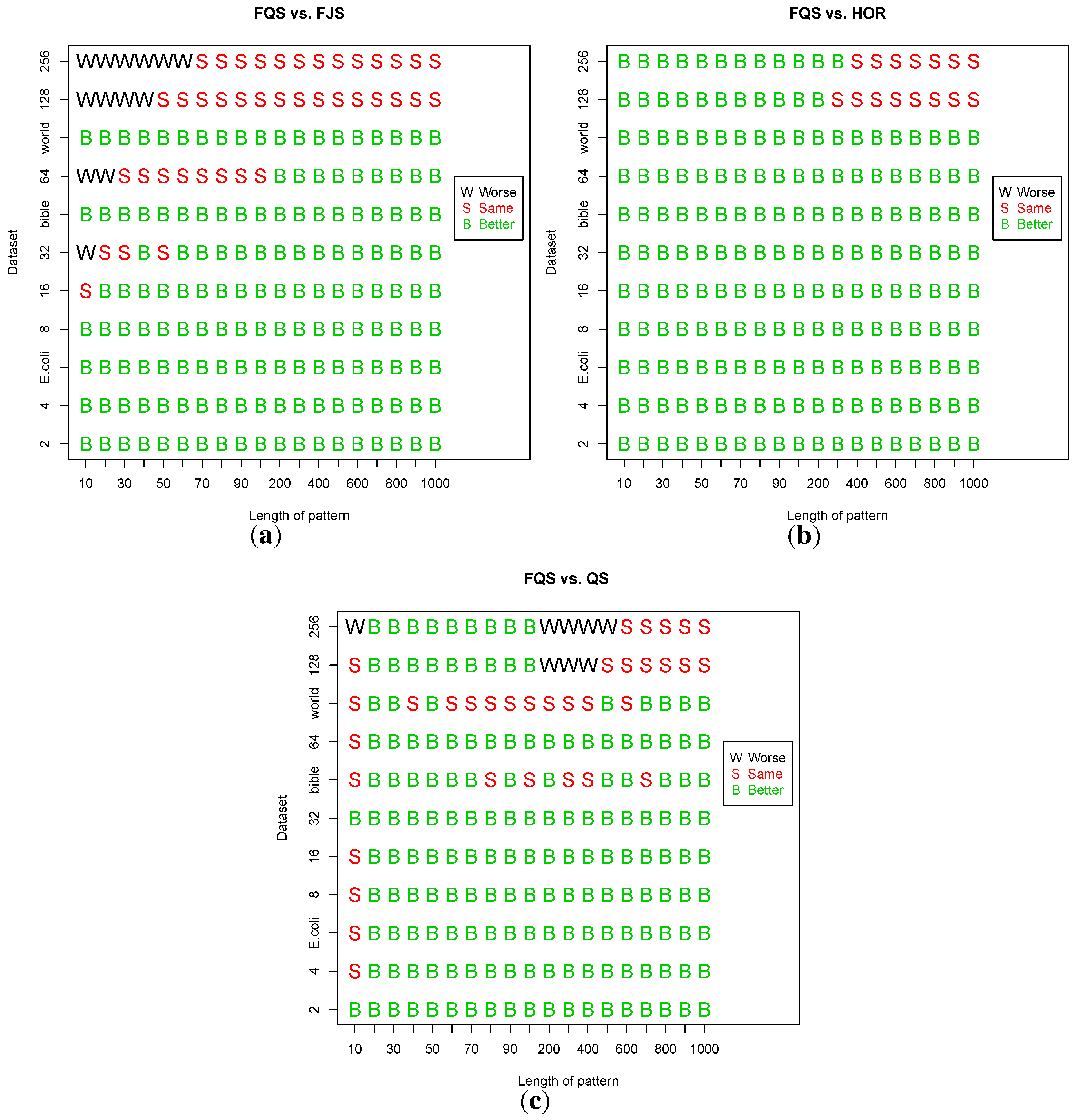

4.1. Randomized Text Files

We generated eight random text files with different alphabet sizes, namely, = 2, 4, 8, 16, 32, 64, 128 and 256. The size of each random text file was fixed at 100 MB. Patterns were randomly chosen from these files with 19 varying lengths: m = 10, 20, 30, 40, 50, 60, 70, 80, 90, 100, 200, 300, 400, 500, 600, 700, 800, 900 and 1,000, respectively. For a given pattern length, 50 different patterns were randomly chosen to search in each text file. The average running times were then calculated from these 50 runs.

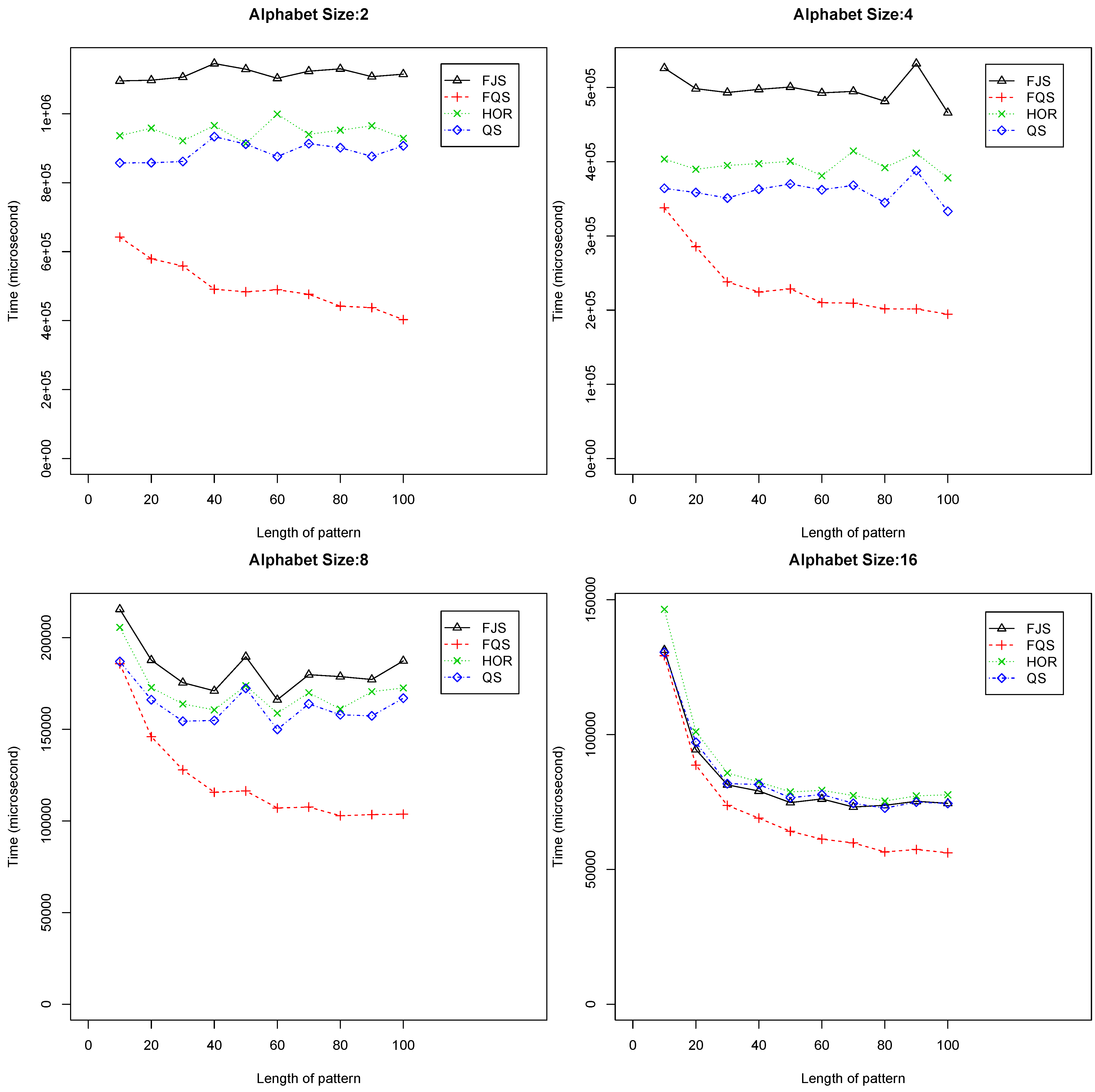

The experimental results are shown separately for two cases: (1) the pattern length is less than or equal to 100 (

); and (2) the pattern length is greater or equal to 100 (

). The results show the following:

When

and

, FQS is much faster than the others.

Figure 6 shows the performance of the algorithms in these cases. When

, the trends are similar: FQS is the fastest algorithm among the four. The QS is the second best, which is slightly better than Horspool (denoted as HOR in the figures);

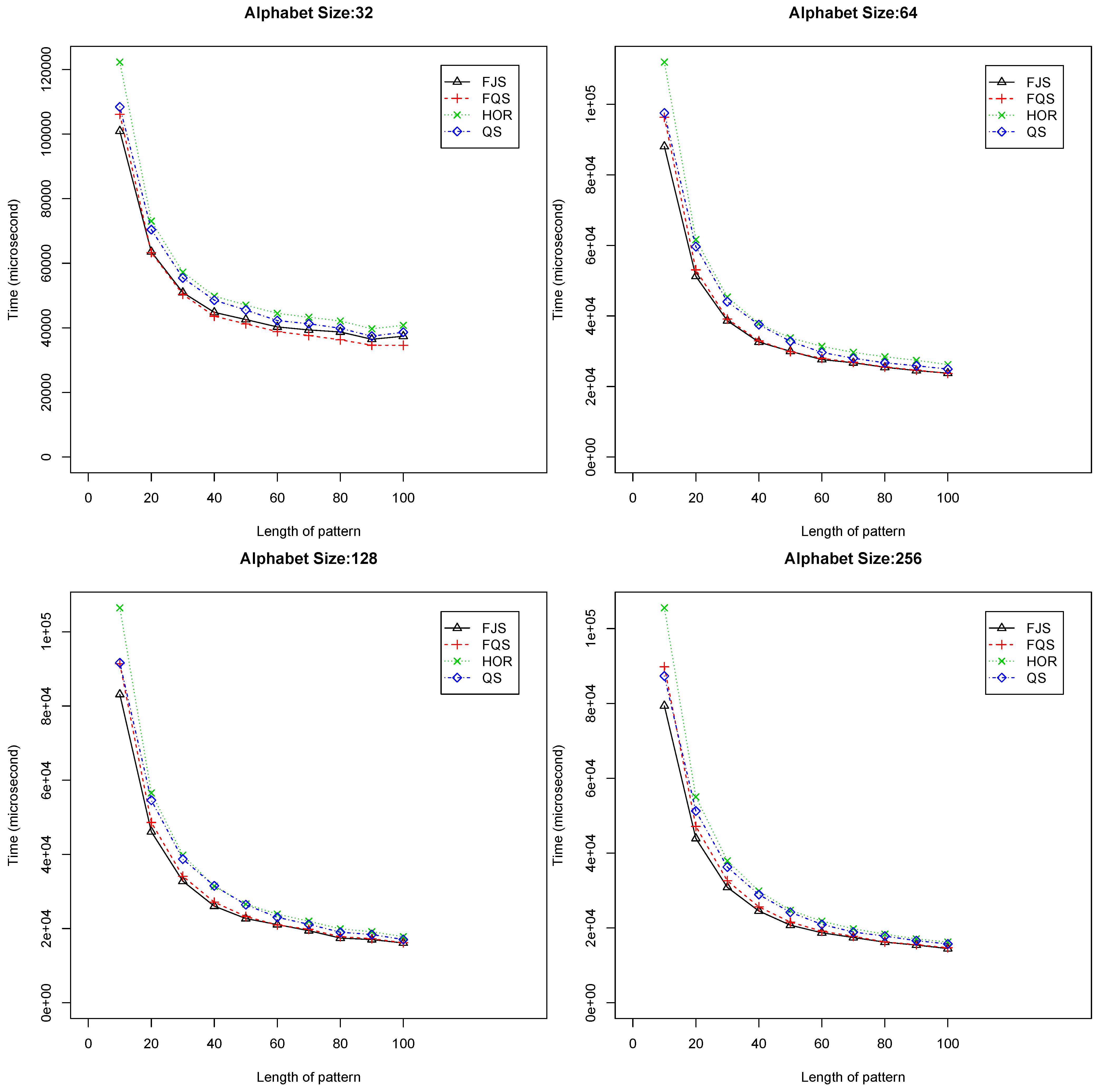

When

and

, FQS and FJS demonstrate a competitive performance, which is better than the QS and HOR.

Figure 7 shows the performances of the four algorithms in these situations. With the increasing of the alphabet size, the performance of the four algorithms tends to be similar. Although FQS is still among the best, the performance advantage over the others is less obvious. From

Figure 7, we can observe that, when the pattern length is small (e.g., with

), FJS provided the best performance among the four algorithms;

When

and

, FQS provides the best results among the four algorithms.

Figure 8 shows the comparative results. When the alphabet size is two, four and eight, respectively, QS is the second best. When the alphabet size is 32, and 64, FJS is ranked as the second best, only inferior to FQS;

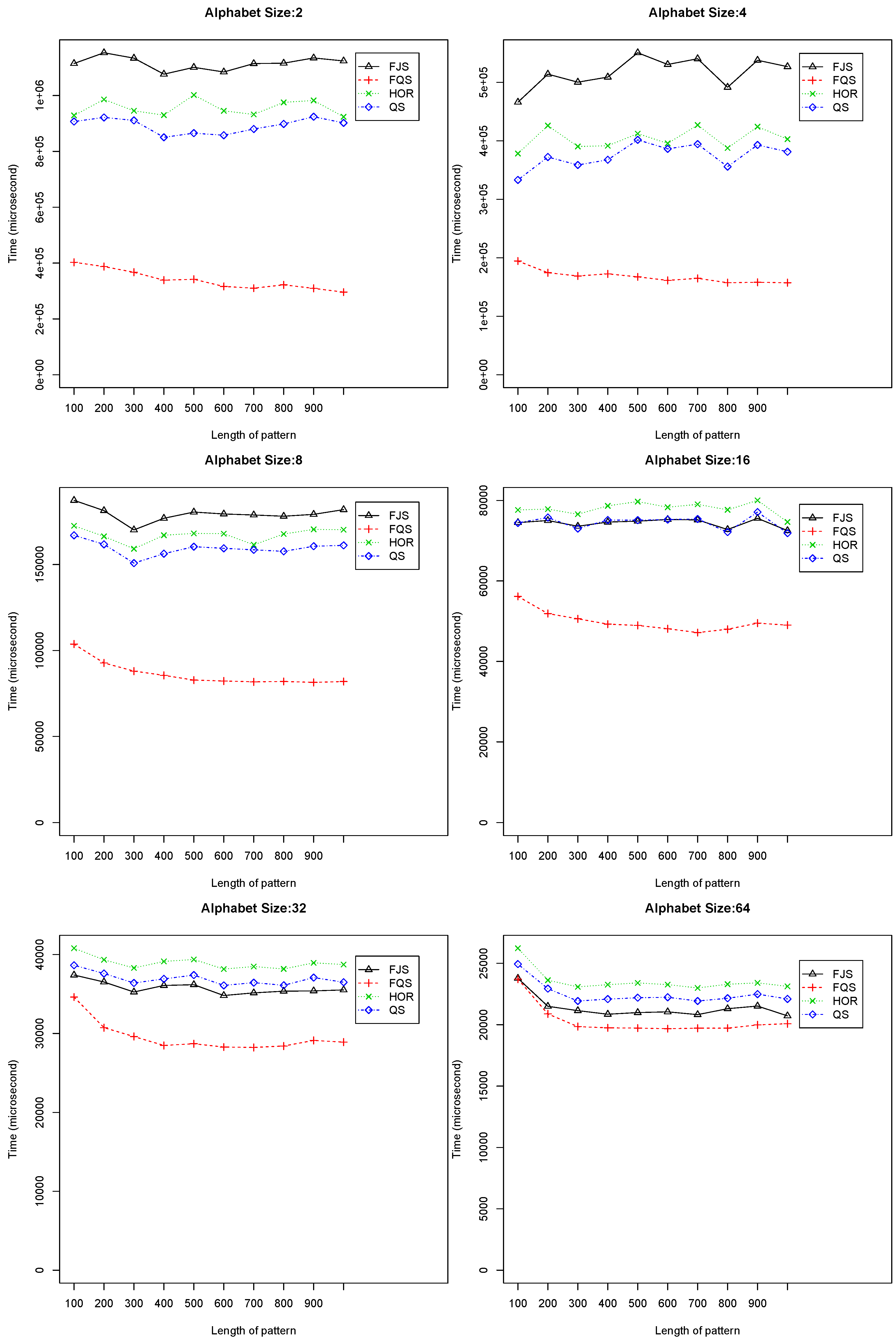

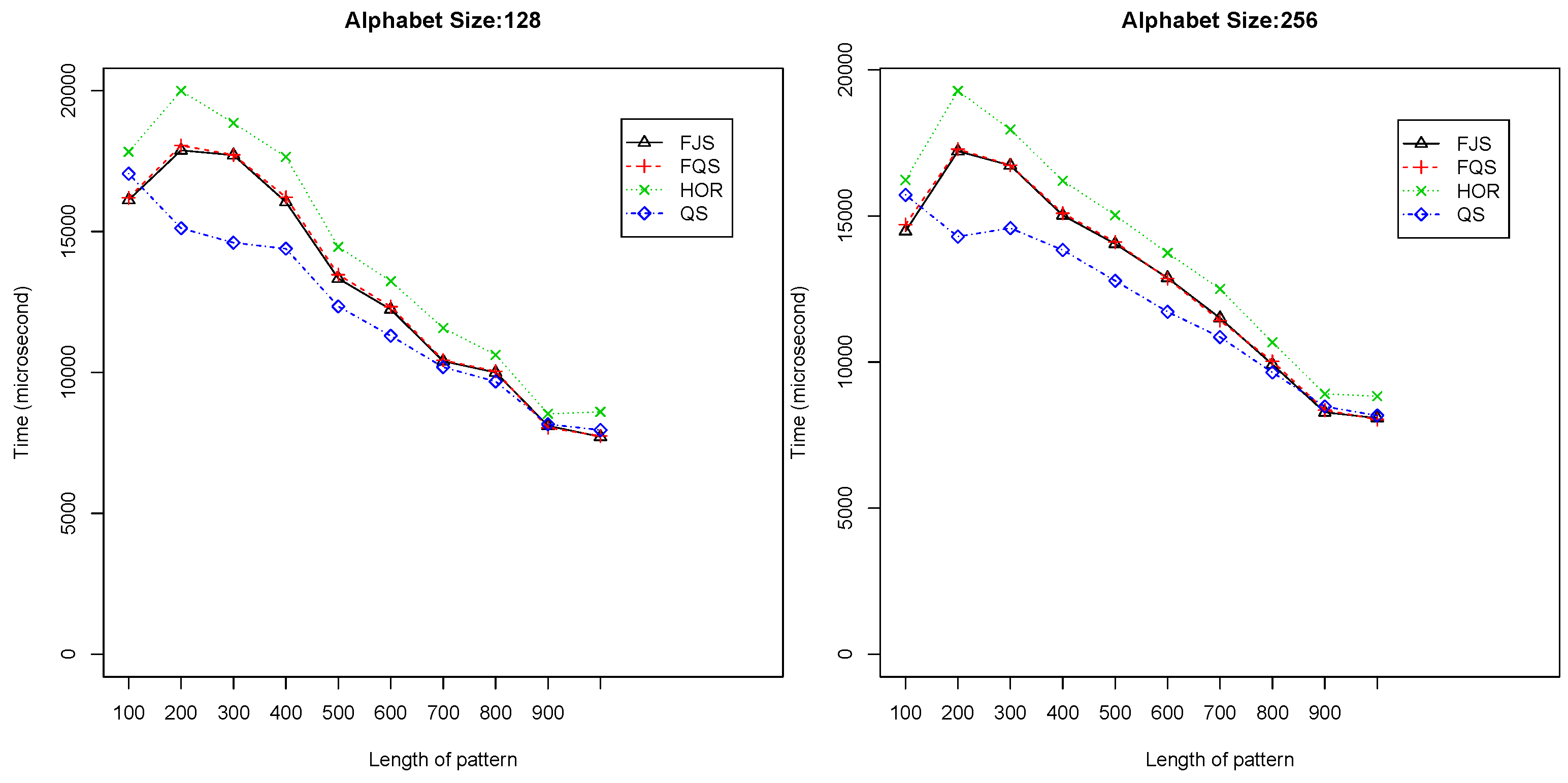

When

and

, QS is the best algorithm; FQS is similar to FJS, ranked as the second.

Figure 9 shows the experimental results. When the length of the pattern is longer than 800, QS, FJS and FQS all have a very similar performance.

Figure 6.

Execution time versus pattern length, m (), using randomized text files when .

Figure 6.

Execution time versus pattern length, m (), using randomized text files when .

Figure 7.

Execution time versus pattern length, m (), using randomized text files when . FJS, Franek–Jennings–Smyth; FQS, faster quick search; HOR, Horspool.

Figure 7.

Execution time versus pattern length, m (), using randomized text files when . FJS, Franek–Jennings–Smyth; FQS, faster quick search; HOR, Horspool.

Figure 8.

Execution time versus pattern length, m (), using randomized text files when .

Figure 8.

Execution time versus pattern length, m (), using randomized text files when .

When the alphabet size is small or medium ( = 2, 4, 8, 16, 32 and 64, respectively), the performance of FQS is significantly better than others. When the alphabet size is large ( = 128 or 256), FQS still has a competitive running performance. FQS is suitable to be used with a small or medium alphabet size (not more than 128). The longer the pattern is, the better FQS performs.

Figure 9.

Execution time versus pattern length, m (), using randomized text files when .

Figure 9.

Execution time versus pattern length, m (), using randomized text files when .

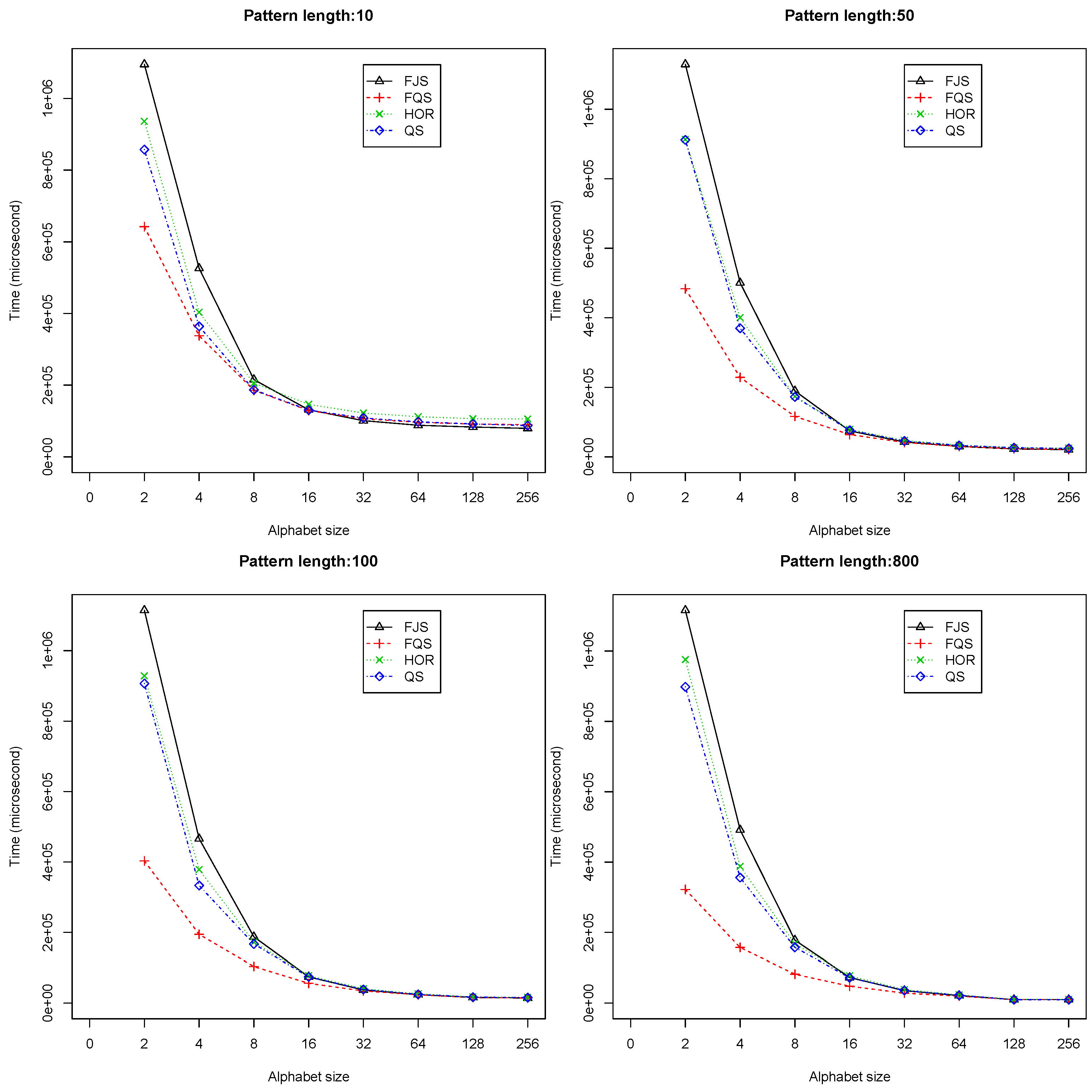

We took a closer look at the impact of alphabet sizes on the performance.

Figure 10 shows the average execution time plotted against alphabet size when the pattern length is fixed, for the cases with

,

,

and

, respectively.

Figure 10.

The variation of execution time with alphabet size Σ, () using randomized text files, when m = 10, 50, 100 and 800.

Figure 10.

The variation of execution time with alphabet size Σ, () using randomized text files, when m = 10, 50, 100 and 800.

From the figure, we can observe the overall trend for all of the algorithms: with increasing alphabet size

, the execution time decreases. FQS has better performance when

is small, especially for cases of long patterns (see

in

Figure 10, for example). This suggests that FQS will have important potential applications in the analysis of a genomic database, since the alphabet size is usually very small, typically four (for DNA or RNA sequences) or 20 (for protein sequences).

We summarize our observations on random texts as follows.

The longer a pattern is, the faster the FQS algorithm runs;

When the alphabet size is small or medium ( = 2, 4, 8, 16, 32 and 64), FQS outperforms the other QS variants: Horspool (abbreviated as HOR), FJS and the classic QS;

When , FQS is competitive with the other QS variants: HOR, FJS and classic QS.

4.2. Practical Text Files

The algorithms were also compared using the following three practical text files downloaded from the Large Canterbury Corpus:

- (1)

E. coli: the sequence of the Escherichia coli genome consisting of 4,638,690 base pairs with ;

- (2)

The Bible: The King James version of the Bible consisting of 4,047,392 characters with ;

- (3)

World192: A CIA World Fact Book consisting of 2,473,400 characters with .

The experiments were carried out the same way as in the case of randomized text files. The same 19 varying pattern lengths are used, namely, m = 10, 20, 30, 40, 50, 60, 70, 80, 90, 100, 200, 300, 400, 500, 600, 700, 800, 900 and 1,000, respectively. For a given pattern length, 50 different patterns are randomly chosen to search in each text file, and the average running time is recorded.

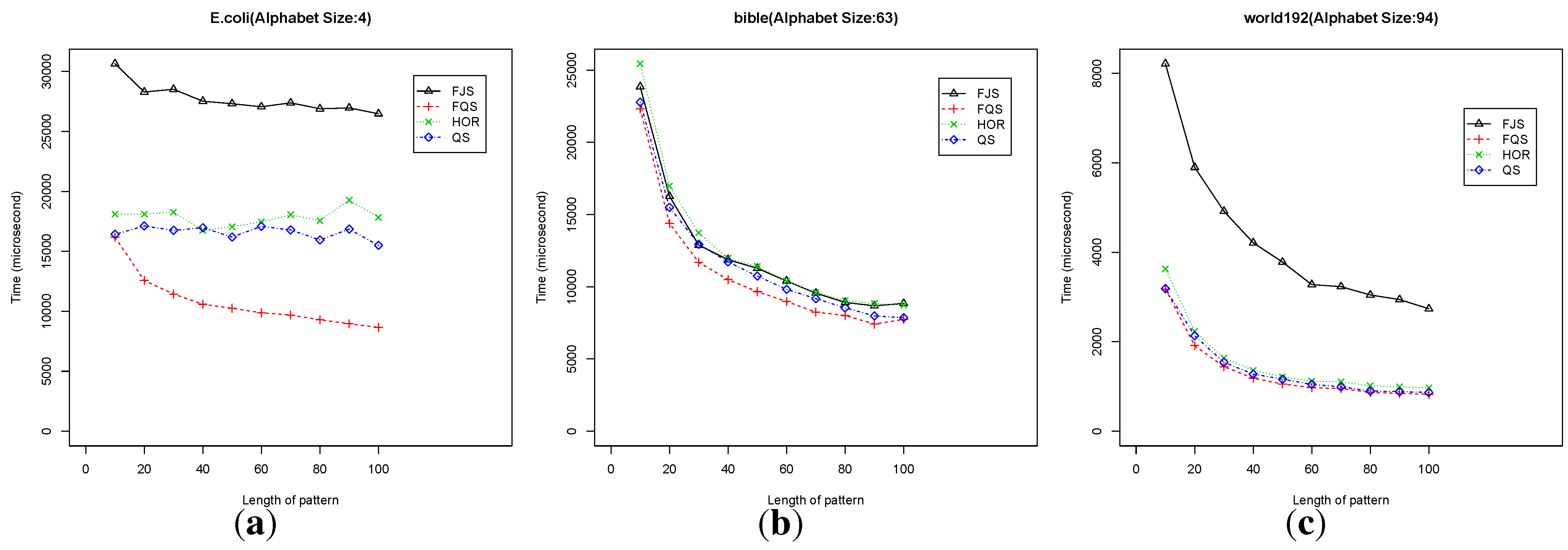

Figure 11 shows the execution time

versus pattern length from 10 to 100 in the three practical text files.

Figure 12 shows the results for pattern length from 100 to 1,000. In all of these cases, FQS outperforms the others.

Figure 11.

Execution time versus pattern length, m, for short to medium patterns () using practical text files.

Figure 11.

Execution time versus pattern length, m, for short to medium patterns () using practical text files.

Figure 12.

Execution time versus pattern length, m, for large patterns () using practical text files.

Figure 12.

Execution time versus pattern length, m, for large patterns () using practical text files.

For

E. coli, FQS is much better than the other algorithms. The QS and HOR are in the second group rank (

Figure 11a and

Figure 12a). For the Bible, the four algorithms have a similar performance. FQS is slightly better than the others (

Figure 11b and

Figure 12b). For World192, QS, FQS and HOR have a similar performance, with FQS showing a slightly better performance (

Figure 11c and

Figure 12c).

For these practical files, FQS is the overall best algorithm among the four. Each of the three practical files has a symbol alphabet with size . This suggests that FQS might be the algorithm of choice for practical use, especially for searching genomic databases with typically smaller alphabets.

4.3. Number of Symbol Comparisons

To put the practical running times presented above in context, we also investigated the number of comparisons required by the algorithms and the number of pattern shifts performed during the match. These two parameters are the basic determinants of the running time of the algorithms. Below, we report on the performance of the two best algorithms, QS and FQS.

Table 2 shows the number of comparisons, their corresponding standard deviation (STD) and statistical significance (

p-value) from algorithms QS and FQS, for pattern lengths

m = 10, 100, 500 and 1,000, respectively. From the table, we can observe that, in all cases, the number of comparisons used by FQS is less than that of QS. The Student’s

t-test compares whether there is a statistical difference between these two algorithms by using

p-value = 0.05 as the threshold. The

p-value is shown in bold where there is a significant difference. For Bible and

E. coli, there are significant differences in all cases. For World192, there is a statistically significant difference when pattern length

m = 1,000; the other three cases (

m = 10, 100, 500) do not show any statistically significant difference.

Table 3 shows the corresponding results for the number of pattern shifts, the corresponding standard deviation (STD) and statistical difference (

p-value) from algorithm QS and FQS, for the pattern lengths

m = 10, 100, 500, 1,000. Again, the results show that in all cases, the number of pattern shifts performed by FQS is less than the number for QS. From a statistical point of view, in seven out of 12 cases, there are statistically significant difference in the performance of FQS over QS. Taken together, these two tables provide an explanation for the superior performance of FQS on the practical files when compared with the other QS variants. More importantly, the results show the effectiveness of the innovative use of an intelligent pre-testing stage before embarking on the more time-consuming pattern matching. In our FQS algorithm, this pre-testing is performed using

, the location with the maximal expected shift in our FQS algorithm.

Table 2.

The number of symbol comparisons used by QS and FQS.

Table 2.

The number of symbol comparisons used by QS and FQS.

| Dataset | m | QS | | FQS | p_value |

|---|

| Mean | STD | | Mean | STD |

|---|

| Bible | 10 | 2,509,581 | 349,838 | | 2,233,411 | 124,671 | 0.0029 |

| Bible | 100 | 762,316 | 75,952 | | 646,298 | 54,656 | <0.01 |

| Bible | 500 | 436,243 | 69,142 | | 366,246 | 52,643 | 0.0010 |

| Bible | 1,000 | 371,849 | 47,702 | | 311,520 | 45,886 | 0.0002 |

| E. coli | 10 | 1,595,760 | 345,988 | | 1,197,866 | 265,086 | 0.0002 |

| E. coli | 100 | 1,634,972 | 548,912 | | 657,987 | 128,279 | <0.01 |

| E. coli | 500 | 1,563,532 | 435,567 | | 541,158 | 75,234 | <0.01 |

| E. coli | 1,000 | 1,777,232 | 505,260 | | 538,972 | 87,332 | <0.01 |

| World192 | 10 | 314,182 | 44,298 | | 307,453 | 30,050 | 0.5777 |

| World192 | 100 | 75,189 | 11,321 | | 70,636 | 11,953 | 0.2238 |

| World192 | 500 | 33,607 | 6,834 | | 30,483 | 6,272 | 0.1403 |

| World192 | 1,000 | 26,898 | 4,649 | | 23,800 | 3,675 | 0.0250 |

Table 3.

The number of pattern shifts used by QS and FQS.

Table 3.

The number of pattern shifts used by QS and FQS.

| Dataset | m | QS | | FQS | p_value |

|---|

| Mean | STD | | Mean | STD |

|---|

| Bible | 10 | 2,091,864 | 88,154 | | 1,981,448 | 170,460 | 0.0156 |

| Bible | 100 | 668,353 | 53,648 | | 638,690 | 54,324 | 0.0904 |

| Bible | 500 | 395,943 | 45,934 | | 361,286 | 52,932 | 0.0332 |

| Bible | 1,000 | 336,126 | 48,988 | | 304,023 | 46,194 | 0.0395 |

| E. coli | 10 | 1,173,807 | 274,324 | | 1,060,892 | 235,219 | 0.1706 |

| E. coli | 100 | 1,220,728 | 410,989 | | 603,276 | 119,927 | <0.01 |

| E. coli | 500 | 1,167,201 | 326,151 | | 497,990 | 69,480 | <0.01 |

| E. coli | 1,000 | 1,326,734 | 378,141 | | 495,055 | 79,672 | <0.01 |

| World192 | 10 | 285,185 | 29,089 | | 265,368 | 29,591 | 0.0392 |

| World192 | 100 | 71,759 | 11,011 | | 70,235 | 12,103 | 0.6794 |

| World192 | 500 | 31,265 | 6,038 | | 29,869 | 6,252 | 0.4768 |

| World192 | 1,000 | 24,299 | 4,146 | | 22,650 | 3,672 | 0.1910 |