Estimation of Tree Stem Attributes Using Terrestrial Photogrammetry with a Camera Rig

Abstract

1. Introduction

2. Materials and Methods

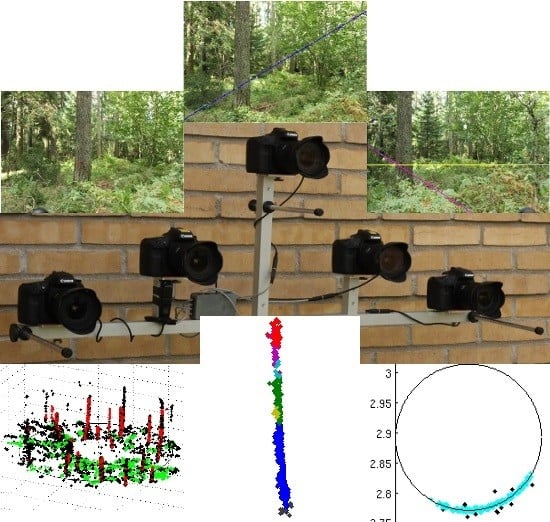

2.1. Rig and Image Protocol Design

2.2. Calibration

2.3. Field Work

2.4. Reduction of the Dataset

2.5. Data Processing

2.5.1. Calibration

2.5.2. Point Cloud Construction

- The keypoints should be similar, as judged by the normalized similarity () of the keypoint descriptors.

- The keypoints should correspond to the same 3D area, indicated by a similarity in keypoint size (within ±10%) and dominant direction (within ±30°). This requirement follows indirectly from the calibrated geometry of the rig, as an area at a distance of, e.g., 8 m should have roughly the same size and dominant orientation when viewed from the different cameras of the rig.

- The keypoints should correspond to the same 3D coordinate, as measured by the distance to the epipolar lines ( times the keypoint size) of the keypoints of the other images [23] (Chapter 9.1). This requirement follows directly from the calibrated geometry of the rig.
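The three matching criteria above can be sketched as a filter on candidate keypoint pairs. This is a minimal Python sketch under stated assumptions, not the authors' implementation. "Normalized similarity" is assumed to be the cosine similarity of the descriptors. The size criterion is read as a size ratio between 0.9 and 1.1. The fundamental matrix `F` for a camera pair is assumed to be known, since it can be derived from the calibrated relative orientation of the two cameras [23]. The descriptor threshold `sim_min` and the epipolar multiplier `k_epi` are hypothetical parameters left for the caller to choose, and the `Keypoint` class is likewise illustrative.

```python
import numpy as np
from dataclasses import dataclass


@dataclass
class Keypoint:  # hypothetical container, not from the original code
    x: float            # column coordinate (pixels)
    y: float            # row coordinate (pixels)
    size: float         # keypoint size/scale (pixels)
    angle: float        # dominant orientation (degrees)
    desc: np.ndarray    # descriptor vector


def normalized_similarity(d1: np.ndarray, d2: np.ndarray) -> float:
    """Cosine similarity of two descriptors (assumed similarity measure)."""
    return float(np.dot(d1, d2) / (np.linalg.norm(d1) * np.linalg.norm(d2)))


def angle_difference(a: float, b: float) -> float:
    """Smallest absolute difference between two angles, in degrees (0 to 180)."""
    return abs((a - b + 180.0) % 360.0 - 180.0)


def epipolar_distance(F: np.ndarray, p1: Keypoint, p2: Keypoint) -> float:
    """Distance (pixels) from p2 to the epipolar line of p1, l = F @ [x1, y1, 1]."""
    line = F @ np.array([p1.x, p1.y, 1.0])
    return float(abs(line @ np.array([p2.x, p2.y, 1.0])) / np.hypot(line[0], line[1]))


def is_candidate_match(p1, p2, F, sim_min, k_epi, size_tol=0.10, angle_tol=30.0):
    """Apply the three criteria; sim_min and k_epi are hypothetical parameters."""
    # 1. Similar descriptors.
    if normalized_similarity(p1.desc, p2.desc) < sim_min:
        return False
    # 2. Same 3D area: size within +/-10 % and dominant direction within +/-30 degrees.
    if abs(p2.size / p1.size - 1.0) > size_tol:
        return False
    if angle_difference(p1.angle, p2.angle) > angle_tol:
        return False
    # 3. Same 3D coordinate: close to the epipolar line, scaled by the keypoint size.
    return epipolar_distance(F, p1, p2) <= k_epi * p2.size


# Illustrative F for a rectified pair with a horizontal baseline: epipolar lines are image rows.
F = np.array([[0.0, 0.0, 0.0], [0.0, 0.0, -1.0], [0.0, 1.0, 0.0]])
d = np.array([1.0, 2.0, 3.0])
a = Keypoint(100.0, 50.0, 8.0, 10.0, d)
b = Keypoint(80.0, 51.0, 8.4, 20.0, 2 * d)
print(is_candidate_match(a, b, F, sim_min=0.9, k_epi=0.5))  # True
```

With this illustrative `F`, the epipolar distance reduces to the difference in row coordinate, so a candidate 1 pixel off the row passes while one 10 pixels off is rejected. The size and orientation checks are cheap and can be used to prune candidates before the epipolar test.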

2.5.3. Tree Detection

2.5.4. Diameter Estimation

2.5.5. Circle Estimation

2.5.6. Presentation of Results

3. Results

- Correctly estimated tree: a tree whose estimated diameter had an absolute relative error of less than 20%.

- Incorrectly estimated tree: a tree whose estimated diameter had an absolute relative error of at least 20%.

- Detected tree: a tree for which a stem was segmented, but either too few points lay close to breast height for circle fitting or the circle fitting code reported an error. For detected trees, the position was the only reported attribute.

- Invisible tree: a tree present in the ground truth for which no corresponding segment was detected in the point cloud.
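The four classes above can be expressed as a small decision function. In this sketch, which is an illustration rather than the authors' code, the 20% threshold is applied to the absolute diameter error relative to the ground-truth diameter; that normalization is an assumption. The function and argument names are hypothetical. `segmented` marks whether a point-cloud stem segment was matched to the tree, and `estimated_dbh=None` stands for a segmented stem for which no circle could be fitted.

```python
from typing import Optional


def classify_tree(reference_dbh: float, estimated_dbh: Optional[float],
                  segmented: bool, threshold: float = 0.20) -> str:
    """Assign a ground-truth tree to one of the four result classes.

    reference_dbh: field-measured diameter at breast height (> 0)
    estimated_dbh: diameter from circle fitting, or None if no circle was fitted
    segmented: True if a stem segment in the point cloud was matched to the tree
    """
    if not segmented:
        return "invisible"   # no corresponding segment in the point cloud
    if estimated_dbh is None:
        return "detected"    # stem found, but only its position is reported
    rel_error = abs(estimated_dbh - reference_dbh) / reference_dbh
    return "correct" if rel_error < threshold else "incorrect"


print(classify_tree(0.30, 0.33, True))   # correct (10 % error)
print(classify_tree(0.30, 0.40, True))   # incorrect (about 33 % error)
print(classify_tree(0.30, None, True))   # detected
print(classify_tree(0.30, None, False))  # invisible
```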

4. Discussion

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Riksskogstaxeringen. Available online: http://www.slu.se/sv/centrumbildningar-och-projekt/riksskogstaxeringen/hur-vi-jobbar/ (accessed on 1 February 2016).

- Olofsson, K.; Lindberg, E.; Holmgren, J. A Method for Linking Field-surveyed and Aerial-detected Single Trees using Cross Correlation of Position Images and the Optimization of Weighted Tree List Graphs. In Proceedings of the SilviLaser, Edinburgh, UK, 17–19 September 2008; pp. 95–104.

- Lindberg, E.; Holmgren, J.; Olofsson, K.; Olsson, H. Estimation of Stem Attributes using a Combination of Terrestrial and Airborne Laser Scanning. In Proceedings of the SilviLaser, Freiburg, Germany, 14–17 September 2010; pp. 1917–1031.

- Vauhkonen, J.; Maltamo, M.; McRoberts, R.E.; Næsset, E. Forestry Applications of Airborne Laser Scanning; Springer: Dordrecht, The Netherlands, 2014; Chapter 4.3.3; p. 74.

- Clark, N.A.; Wynne, R.H.; Schmoldt, D.L. A Review of Past Research on Dendrometers. For. Sci. 2000, 46, 570–576.

- Liang, X.; Litkey, P.; Hyyppä, J.; Kaartinen, H.; Vastaranta, M.; Holopainen, M. Automatic Stem Mapping Using Single-Scan Terrestrial Laser Scanning. IEEE Trans. Geosci. Remote Sens. 2012, 50, 661–670.

- Olofsson, K.; Holmgren, J.; Olsson, H. Tree Stem and Height Measurements using Terrestrial Laser Scanning and the RANSAC Algorithm. Remote Sens. 2014, 6, 4323–4344.

- Ducey, M.J.; Astrup, R. Adjusting for nondetection in forest inventories derived from terrestrial laser scanning. Can. J. Remote Sens. 2013, 39, 410–425.

- Liang, X.; Hyyppä, J. Automatic stem mapping by merging several terrestrial laser scans at the feature and decision levels. Sensors 2013, 13, 1614–1634.

- Liang, X.; Wang, Y.; Jaakkola, A.; Kukko, A.; Kaartinen, H.; Hyyppä, J.; Honkavaara, E.; Liu, J. Forest Data Collection Using Terrestrial Image-Based Point Clouds From a Handheld Camera Compared to Terrestrial and Personal Laser Scanning. IEEE Trans. Geosci. Remote Sens. 2015, 53, 5117–5132.

- Reidelstürz, P. Forstliches Anwendungspotential der terrestrisch-analytischen Stereophotogrammetrie. Ph.D. Thesis, Albert-Ludwigs-Universität Freiburg, Freiburg im Breisgau, Germany, 1997.

- Fürst, C.; Nepveu, G. Assessment of the assortment potential of the growing stock—A photogrammetry based approach for an automatized grading of sample trees. Ann. For. Sci. 2006, 63, 951–960.

- Dick, A.R.; Kershaw, J.A.; MacLean, D.A. Spatial Tree Mapping Using Photography. N. J. Appl. For. 2010, 27, 68–74.

- Berveglieri, A.; Oliveira, R.A.; Tommaselli, A.M.G. A feasibility study on the measurement of tree trunks in forests using multi-scale vertical images. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, XL-5, 87–92.

- Liang, X.; Jaakkola, A.; Wang, Y.; Hyyppä, J.; Honkavaara, E.; Liu, J.; Kaartinen, H. The Use of a Hand-Held Camera for Individual Tree 3D Mapping in Forest Sample Plots. Remote Sens. 2014, 6, 6587–6603.

- Gatziolis, D.; Lienard, J.F.; Vogs, A.; Strigul, N.S. 3D Tree Dimensionality Assessment Using Photogrammetry and Small Unmanned Aerial Vehicles. PLoS ONE 2015, 10, e0137765.

- Rodríguez-García, C.; Montes, F.; Ruiz, F.; Cañellas, I.; Pita, P. Stem mapping and estimating standing volume from stereoscopic hemispherical images. Eur. J. For. Res. 2014, 133, 895–904.

- Forsman, M.; Börlin, N.; Holmgren, J. Estimation of tree stem attributes using terrestrial photogrammetry. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, XXXIX-B5, 261–265.

- Easyrig 2.5. Available online: http://www.easyrig.se/product/easyrig-2-5.

- Börlin, N.; Grussenmeyer, P. Camera Calibration using the Damped Bundle Adjustment Toolbox. In Proceedings of the ISPRS Technical Commission V Symposium, Riva del Garda, Italy, 23–25 June 2014; Volume II-5, pp. 89–96.

- Börlin, N.; Grussenmeyer, P. Bundle Adjustment With and Without Damping. Photogramm. Rec. 2013, 28, 396–415.

- Vedaldi, A.; Fulkerson, B. VLFeat: An Open and Portable Library of Computer Vision Algorithms. Available online: http://www.vlfeat.org/ (accessed on 3 March 2016).

- Hartley, R.I.; Zisserman, A. Multiple View Geometry in Computer Vision, 2nd ed.; Cambridge University Press: Cambridge, UK, 2003; ISBN 0521540518.

- McGlone, J.C.; Mikhail, E.M.; Bethel, J.S. Manual of Photogrammetry; American Society for Photogrammetry and Remote Sensing: Bethesda, MD, USA, 2004.

- Fischler, M.A.; Bolles, R.C. Random Sample Consensus: A Paradigm for Model Fitting with Applications to Image Analysis and Automated Cartography. Commun. ACM 1981, 24, 381–395.

- Söderkvist, I.; Wedin, P.A. Determining the Movements of the Skeleton Using Well-Configured Markers. J. Biomech. 1993, 26, 1473–1477.

- Pfeifer, N.; Gorte, B.; Winterhalder, D. Automatic Reconstruction of Single Trees from Terrestrial Laser Scanner Data. In Proceedings of the 20th ISPRS Congress, Istanbul, Turkey, 12–23 July 2004.

- The CGAL Project. CGAL User and Reference Manual, 4.7 ed.; CGAL Editorial Board, 2015.

- Rabbani, T.; van den Heuvel, F.; Vosselman, G. Segmentation of point clouds using smoothness constraint. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2006, 36, 248–253.

- Gander, W.; Golub, G.H.; Strebel, R. Least-squares fitting of circles and ellipses. BIT 1994, 34, 558–578.

- Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Liang, X.; Litkey, P.; Hyyppä, J.; Kaartinen, H.; Kukko, A.; Holopainen, M. Automatic plot-wise tree location mapping using single-scan terrestrial laser scanning. Photogramm. J. Finland 2011, 22, 37–48.

- Hessenmöller, D.; Elsenhans, A.S.; Schulze, E.D. Sampling forest tree regeneration with a transect approach. Ann. For. Res. 2013, 56, 3–14.

| Plot | Trees | Trees/m² | Mean DBH (cm) | Min DBH (cm) | Max DBH (cm) |
|---|---|---|---|---|---|
| 32 | 29 | 0.09 | 23.1 | 11.9 | 35.7 |
| 87 | 38 | 0.12 | 22.8 | 5.0 | 35.7 |
| 165 | 25 | 0.08 | 24.1 | 10.5 | 34.5 |
| 167 | 22 | 0.07 | 19.2 | 5.2 | 30.8 |
| 203 | 34 | 0.11 | 21.0 | 3.8 | 37.0 |
| 343 | 12 | 0.04 | 19.3 | 7.1 | 29.9 |

| Plot No. | No. of Trees | Correct | Incorrect | Detected | Invisible |
|---|---|---|---|---|---|
| 165 | 25 | 7 | 2 | 8 | 8 |
| 167 | 22 | 8 | 0 | 10 | 4 |
| 343 | 12 | 7 | 1 | 2 | 2 |
| 203 | 34 | 2 | 0 | 9 | 23 |
| 32 | 29 | 2 | 2 | 7 | 18 |
| 87 | 38 | 1 | 1 | 13 | 23 |
| All plots | 160 | 27 | 6 | 49 | 78 |
| Suitable plots | 59 | 22 | 3 | 20 | 14 |
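The summary rows of the classification table are sums over plots, which allows a quick consistency check. Each plot row sums to its number of trees, the "All plots" row is the total over all six plots, and the "Suitable plots" row equals the sum of plots 165, 167 and 343. The short Python check below uses only the values in the table; the names `COUNTS` and `summarize` are illustrative.

```python
# Per-plot counts from the table: (trees, correct, incorrect, detected, invisible)
COUNTS = {
    165: (25, 7, 2, 8, 8),
    167: (22, 8, 0, 10, 4),
    343: (12, 7, 1, 2, 2),
    203: (34, 2, 0, 9, 23),
    32: (29, 2, 2, 7, 18),
    87: (38, 1, 1, 13, 23),
}


def summarize(plots):
    """Column-wise sum of the counts for the given plots."""
    return tuple(sum(COUNTS[p][i] for p in plots) for i in range(5))


# Every tree falls into exactly one of the four classes.
for plot, (trees, *classes) in COUNTS.items():
    assert trees == sum(classes), plot

all_plots = summarize(COUNTS)
suitable = summarize((165, 167, 343))
print(all_plots)                             # (160, 27, 6, 49, 78)
print(suitable)                              # (59, 22, 3, 20, 14)
print(round(suitable[1] / suitable[0], 2))   # 0.37
```

The last line shows that, in the suitable plots, 22 of 59 trees (about 37%) had their diameter correctly estimated.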

| Plot No. | Diameter bias (m) | Diameter RMSE (m) | Position mean diff. (m) | Position std. (m) |
|---|---|---|---|---|
| 165 | 0.012 | 0.095 | 0.39 | 0.13 |
| 167 | −0.005 | 0.028 | 0.31 | 0.11 |
| 343 | 0.023 | 0.067 | 0.54 | 0.25 |
| 203 | 0.024 | 0.026 | 0.40 | 0.1 |
| 32 | 0.040 | 0.054 | 0.41 | 0.08 |
| 87 | 0.110 | 0.138 | 0.78 | 0.01 |
| All plots | 0.020 | 0.074 | - | - |
| Suitable plots | 0.010 | 0.072 | - | - |
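The accuracy measures in the table above can be sketched as follows, assuming the usual definitions rather than any formula confirmed by the source. Bias is taken as the mean difference between estimated and reference diameters, with the sign convention of estimated minus reference assumed here. RMSE is the root of the mean squared difference. The position columns are taken as the mean and sample standard deviation of the horizontal distance between estimated and reference stem positions. Because RMSE² equals bias² plus the population variance of the differences, RMSE can never be smaller than |bias|, and this holds for every row of the table. The function names are hypothetical.

```python
import math
import statistics


def bias_and_rmse(estimated, reference):
    """Bias and RMSE of (estimated - reference), in the units of the input."""
    if len(estimated) != len(reference) or not estimated:
        raise ValueError("need two non-empty sequences of equal length")
    diffs = [e - r for e, r in zip(estimated, reference)]
    n = len(diffs)
    bias = sum(diffs) / n
    rmse = math.sqrt(sum(d * d for d in diffs) / n)
    return bias, rmse


def position_error_stats(estimated_xy, reference_xy):
    """Mean and sample standard deviation of horizontal position errors."""
    dists = [math.dist(e, r) for e, r in zip(estimated_xy, reference_xy)]
    return statistics.mean(dists), statistics.stdev(dists)


# Hypothetical diameters (m), not data from the study.
bias, rmse = bias_and_rmse([0.32, 0.20, 0.25], [0.30, 0.21, 0.25])
print(round(bias, 4), round(rmse, 4))  # 0.0033 0.0129
```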

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Forsman, M.; Börlin, N.; Holmgren, J. Estimation of Tree Stem Attributes Using Terrestrial Photogrammetry with a Camera Rig. Forests 2016, 7, 61. https://doi.org/10.3390/f7030061
