Forest Structure Estimation from a UAV-Based Photogrammetric Point Cloud in Managed Temperate Coniferous Forests

Abstract

1. Introduction

2. Materials and Methods

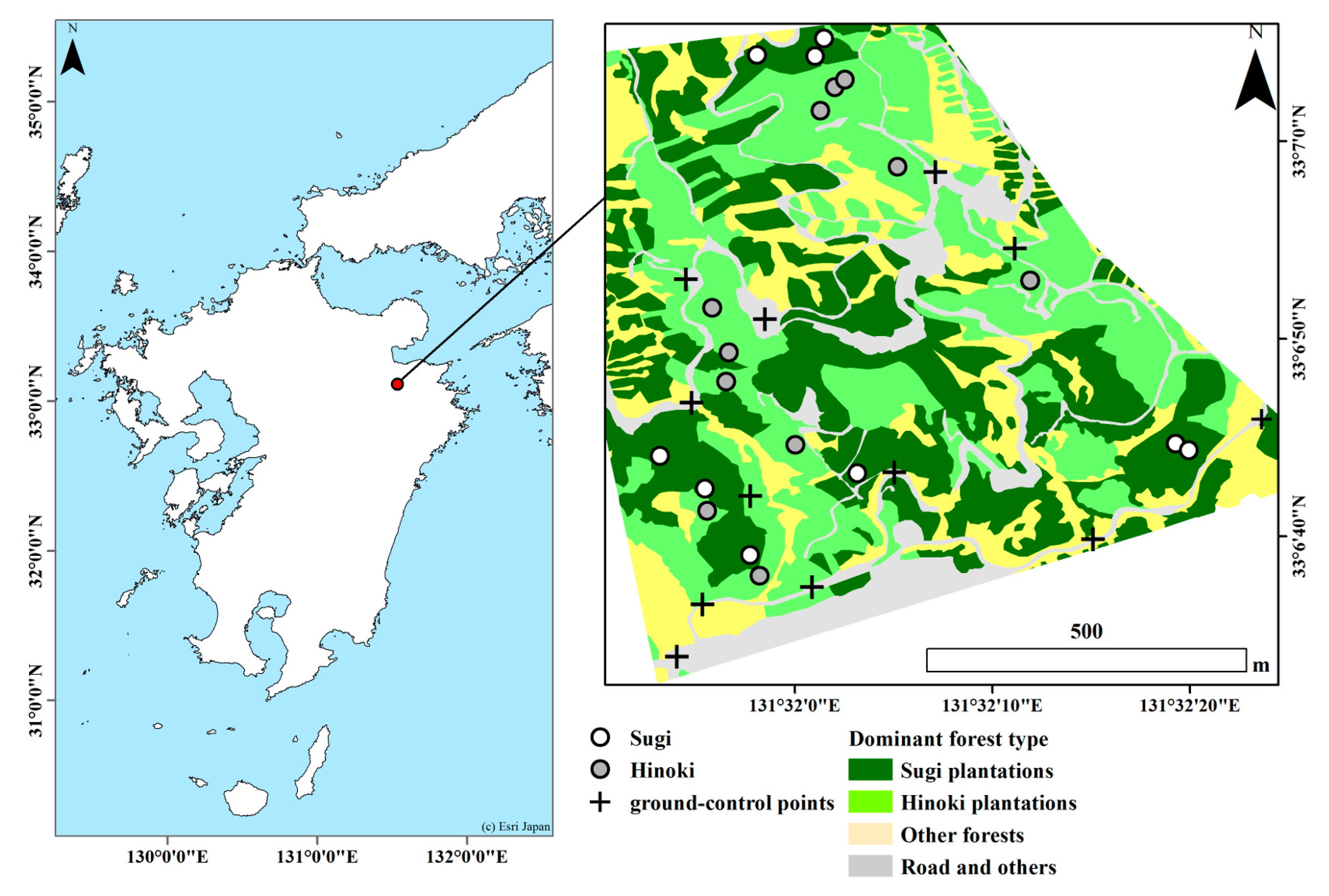

2.1. Study Area

2.2. Field Measurements

2.3. Remote Sensing Data

2.4. Data Analysis

2.4.1. Processing of the UAV Photographs

2.4.2. Calculation of a Canopy Height Model (CHM) and Variable Extractions

2.4.3. Statistical Analysis

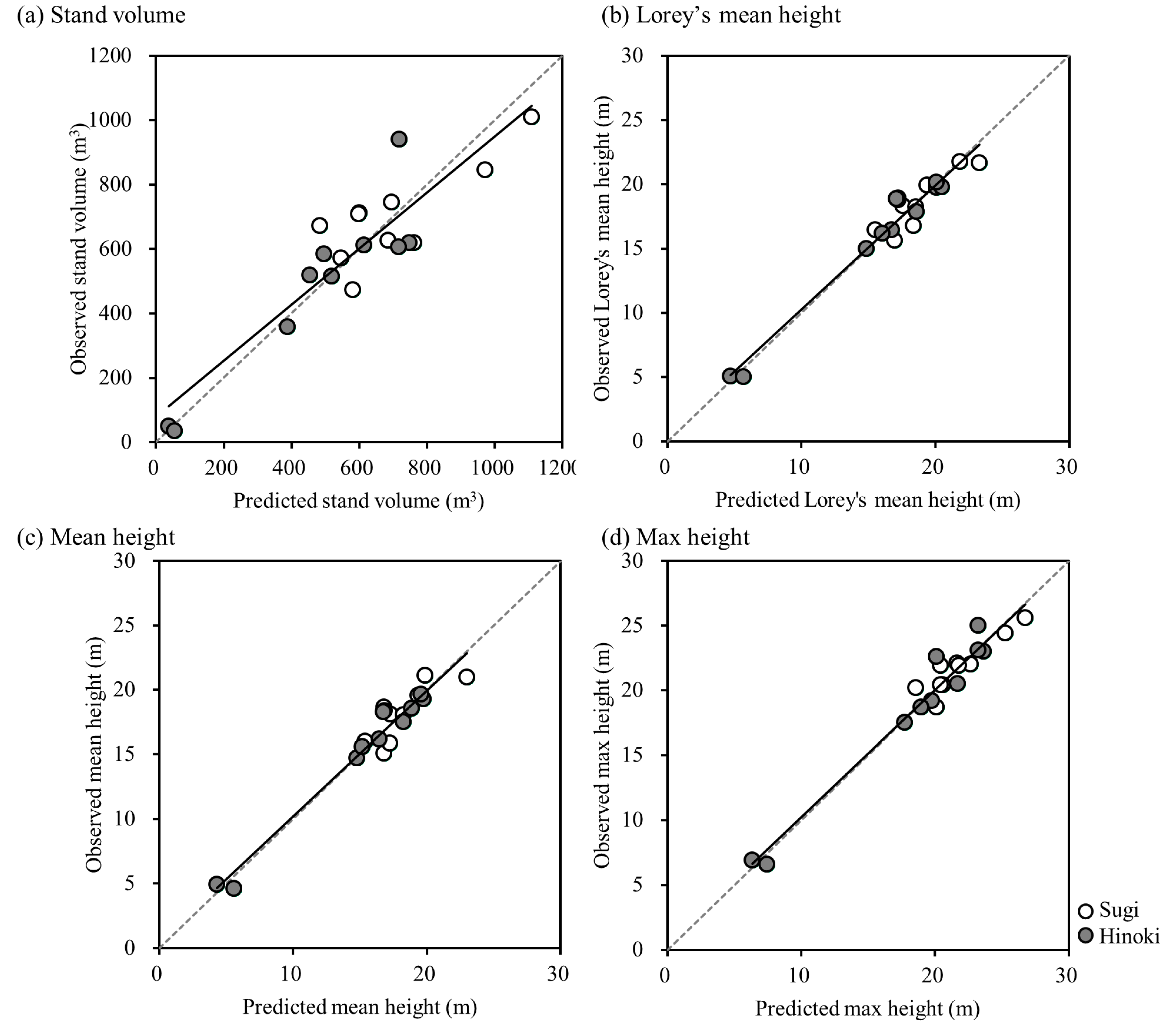

3. Results

4. Discussion

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

| Dominant Tree Type | Number of Plots | V Mean (m³/ha) | V SD (m³/ha) | HL Mean (m) | HL SD (m) | HA Mean (m) | HA SD (m) | HM Mean (m) | HM SD (m) |
|---|---|---|---|---|---|---|---|---|---|
| Sugi | 9 | 712.37 | 142.20 | 18.67 | 2.06 | 18.17 | 2.07 | 21.91 | 1.98 |
| Hinoki | 11 | 491.75 | 249.36 | 15.67 | 5.25 | 15.21 | 5.13 | 18.50 | 5.92 |

| Ground-Control Point | X Error (m) | Y Error (m) | Z Error (m) | Total Error (m) |
|---|---|---|---|---|
| 1 | 1.15 | 5.19 | −1.42 | 5.50 |
| 2 | 0.48 | 0.26 | −0.07 | 0.55 |
| 3 | −3.26 | −1.08 | −3.87 | 5.17 |
| 4 | 0.92 | −0.15 | 0.44 | 1.03 |
| 5 | 2.19 | −0.54 | −0.26 | 2.27 |
| 6 | −1.01 | −0.81 | 0.15 | 1.30 |
| 7 | 0.81 | −1.89 | −0.85 | 2.23 |
| 8 | −0.48 | −0.63 | 0.90 | 1.20 |
| 9 | 1.16 | −0.30 | −0.01 | 1.20 |
| 10 | −1.53 | 1.68 | 1.87 | 2.94 |
| 11 | −0.49 | −1.77 | 3.14 | 3.64 |
| RMSE | 1.47 | 1.89 | 1.71 | 2.94 |
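
The total error and RMSE figures for the ground-control points can be reproduced from the per-axis errors alone. The following is a minimal sketch, assuming the total error is the Euclidean norm of the X, Y, and Z errors and that each RMSE is the root of the mean squared error over the 11 points; the variable and function names are illustrative and not taken from the original processing software.

```python
import math

# Per-axis errors (m) at the 11 ground-control points, copied from the table.
GCP_ERRORS = [
    (1.15, 5.19, -1.42),
    (0.48, 0.26, -0.07),
    (-3.26, -1.08, -3.87),
    (0.92, -0.15, 0.44),
    (2.19, -0.54, -0.26),
    (-1.01, -0.81, 0.15),
    (0.81, -1.89, -0.85),
    (-0.48, -0.63, 0.90),
    (1.16, -0.30, -0.01),
    (-1.53, 1.68, 1.87),
    (-0.49, -1.77, 3.14),
]


def total_error(dx, dy, dz):
    """Assumed definition: 3D Euclidean norm of the per-axis errors."""
    return math.sqrt(dx**2 + dy**2 + dz**2)


def rmse(values):
    """Root mean squared value of a sequence of errors."""
    values = list(values)
    return math.sqrt(sum(v * v for v in values) / len(values))


# Total error per point, then RMSE per axis and for the total error.
totals = [total_error(*e) for e in GCP_ERRORS]
rmse_x = rmse(e[0] for e in GCP_ERRORS)
rmse_y = rmse(e[1] for e in GCP_ERRORS)
rmse_z = rmse(e[2] for e in GCP_ERRORS)
rmse_total = rmse(totals)

print([round(t, 2) for t in totals])
print(round(rmse_x, 2), round(rmse_y, 2), round(rmse_z, 2), round(rmse_total, 2))
```

Under these assumptions the computed values agree with the tabulated ones to two decimal places, which suggests the table uses the same definitions.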

| Dependent Variable | Independent Variables | Selected Variables | R² | Adj. R² | RMSE | Relative RMSE (%) | BIC |
|---|---|---|---|---|---|---|---|
| V | h | $h_{90}$ | 0.71 | 0.70 | 131.74 | 22.29 | 7.14 |
| V | RGB | $RGB_{G,sd}$ | 0.26 | 0.21 | 291.80 | 49.37 | 58.99 |
| V | h + RGB | $h_{90}$, $RGB_{B,sd}$ | 0.68 | 0.64 | 143.15 | 24.22 | 9.95 |
| V | h + dtype | $h_{90}$, dtype | 0.78 | 0.75 | 118.30 | 20.02 | 2.99 |
| V | RGB + dtype | $RGB_{B,sd}$, dtype | 0.20 | 0.11 | 303.16 | 51.29 | 53.01 |
| V | h + RGB + dtype | $h_{90}$, $RGB_{R,sd}$, dtype | 0.80 | 0.76 | 112.97 | 19.11 | 3.83 |
| HL | h | $h_{90}$ | 0.93 | 0.92 | 1.21 | 7.08 | −41.14 |
| HL | RGB | $RGB_{B,sd}$ | 0.23 | 0.19 | 4.31 | 25.29 | 19.73 |
| HL | h + RGB | $h_{90}$, $RGB_{B,mean}$ | 0.94 | 0.92 | 1.19 | 7.00 | −39.41 |
| HL | h + dtype | $h_{90}$, dtype | 0.92 | 0.93 | 1.13 | 6.65 | −42.56 |
| HL | RGB + dtype | $RGB_{B,sd}$, dtype | 0.90 | 0.10 | 4.69 | 27.57 | 22.73 |
| HL | h + RGB + dtype | $h_{90}$, $RGB_{G,mean}$, dtype | 0.93 | 0.92 | 1.15 | 6.7 | −39.71 |
| HA | h | $h_{90}$ | 0.91 | 0.91 | 1.31 | 7.92 | −35.96 |
| HA | RGB | $RGB_{B,sd}$ | 0.21 | 0.16 | 4.30 | 25.97 | 21.12 |
| HA | h + RGB | $h_{90}$, $RGB_{B,mean}$ | 0.92 | 0.91 | 1.25 | 7.53 | −35.26 |
| HA | h + dtype | $h_{70}$, dtype | 0.90 | 0.91 | 1.24 | 7.50 | −34.12 |
| HA | RGB + dtype | $RGB_{B,sd}$, dtype | 0.89 | 0.08 | 4.62 | 27.96 | 24.11 |
| HA | h + RGB + dtype | $h_{90}$, $RGB_{B,mean}$, dtype | 0.92 | 0.91 | 1.24 | 7.51 | −34.29 |
| HM | h | $h_{90}$ | 0.93 | 0.92 | 1.32 | 6.61 | −42.74 |
| HM | RGB | $RGB_{B,sd}$ | 0.26 | 0.22 | 4.62 | 23.05 | 14.92 |
| HM | h + RGB | $h_{90}$, $RGB_{R,mean}$ | 0.94 | 0.92 | 1.32 | 6.57 | −40.37 |
| HM | h + dtype | $h_{90}$, dtype | 0.92 | 0.93 | 1.24 | 6.17 | −44.89 |
| HM | RGB + dtype | $RGB_{B,sd}$, dtype | 0.90 | 0.13 | 5.04 | 25.17 | 17.91 |
| HM | h + RGB + dtype | $h_{90}$, $RGB_{R,mean}$, dtype | 0.93 | 0.94 | 1.13 | 5.63 | −44.30 |
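
Two of the accuracy columns in the regression table can be related to the other reported numbers. The sketch below assumes that relative RMSE is the RMSE expressed as a percentage of the mean field-measured value pooled over all 20 plots, and that adjusted R² follows the standard formula with the number of selected variables as the predictor count. The plot counts and mean volumes come from the field-measurement table; the function names are illustrative.

```python
# (number of plots, mean stand volume in m3/ha) per dominant tree type,
# copied from the field-measurement table.
VOLUME_BY_TYPE = {"Sugi": (9, 712.37), "Hinoki": (11, 491.75)}


def pooled_mean(groups):
    """Plot-weighted mean over groups given as (n, mean) pairs."""
    total_n = sum(n for n, _ in groups)
    return sum(n * mean for n, mean in groups) / total_n


def relative_rmse(rmse, mean_observed):
    """Assumed definition: RMSE as a percentage of the observed mean."""
    return 100.0 * rmse / mean_observed


def adjusted_r2(r2, n_obs, n_predictors):
    """Standard adjusted R-squared for a linear model with an intercept."""
    return 1.0 - (1.0 - r2) * (n_obs - 1) / (n_obs - n_predictors - 1)


n_plots = sum(n for n, _ in VOLUME_BY_TYPE.values())
mean_volume = pooled_mean(VOLUME_BY_TYPE.values())

# Model for V using height variables only: reported RMSE is 131.74 m3/ha.
rel_rmse_v_h = relative_rmse(131.74, mean_volume)

# Model for V using h + dtype: reported R2 is 0.78 with two selected variables.
adj_r2_v_h_dtype = adjusted_r2(0.78, n_plots, 2)

print(round(mean_volume, 2), round(rel_rmse_v_h, 2), round(adj_r2_v_h_dtype, 2))
```

For these two stand-volume rows the assumed definitions give 22.29% and 0.75, matching the tabulated values. Because the inputs are themselves rounded to two decimals, other rows may differ from the table in the last digit.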

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ota, T.; Ogawa, M.; Mizoue, N.; Fukumoto, K.; Yoshida, S. Forest Structure Estimation from a UAV-Based Photogrammetric Point Cloud in Managed Temperate Coniferous Forests. Forests 2017, 8, 343. https://doi.org/10.3390/f8090343
