The

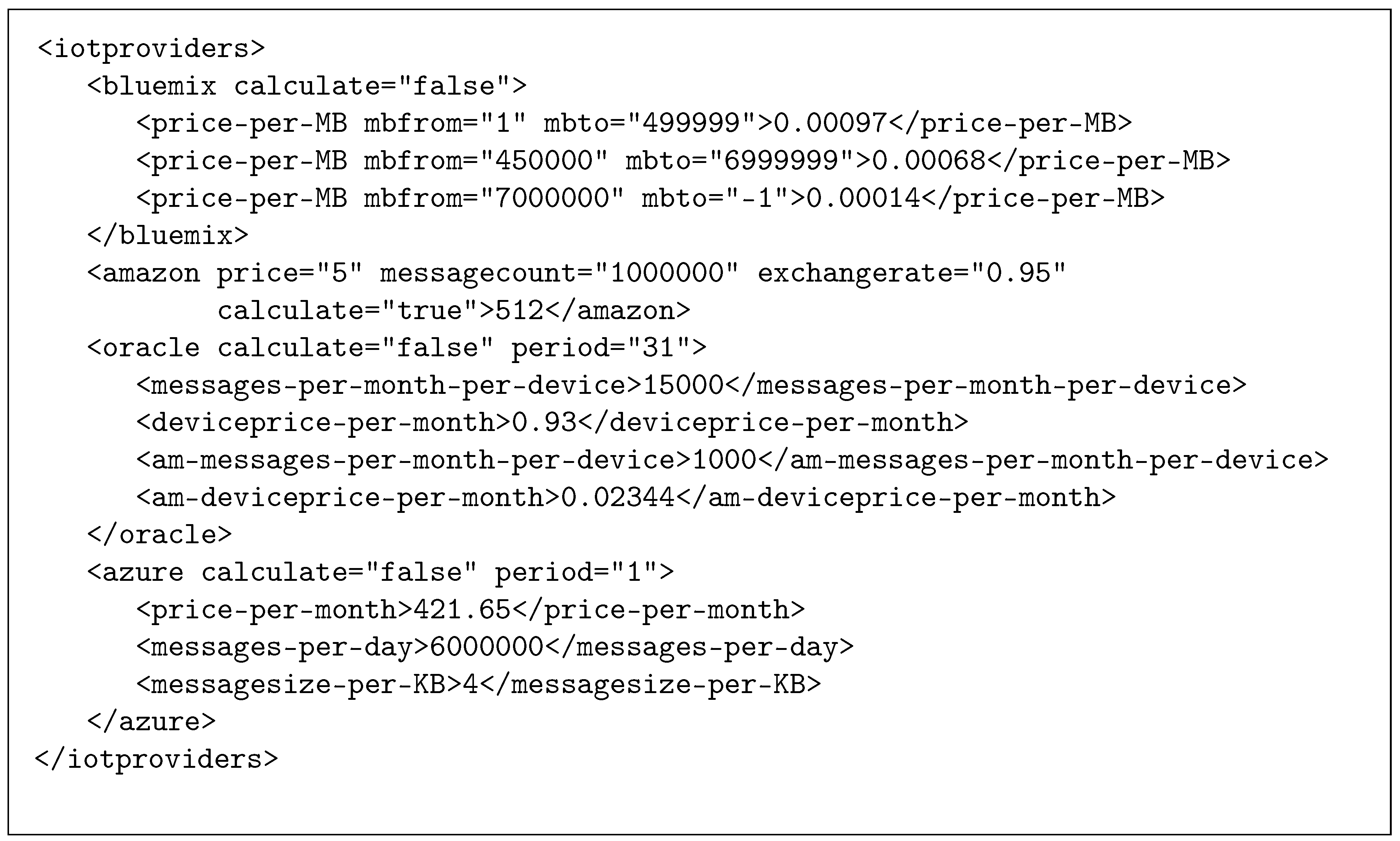

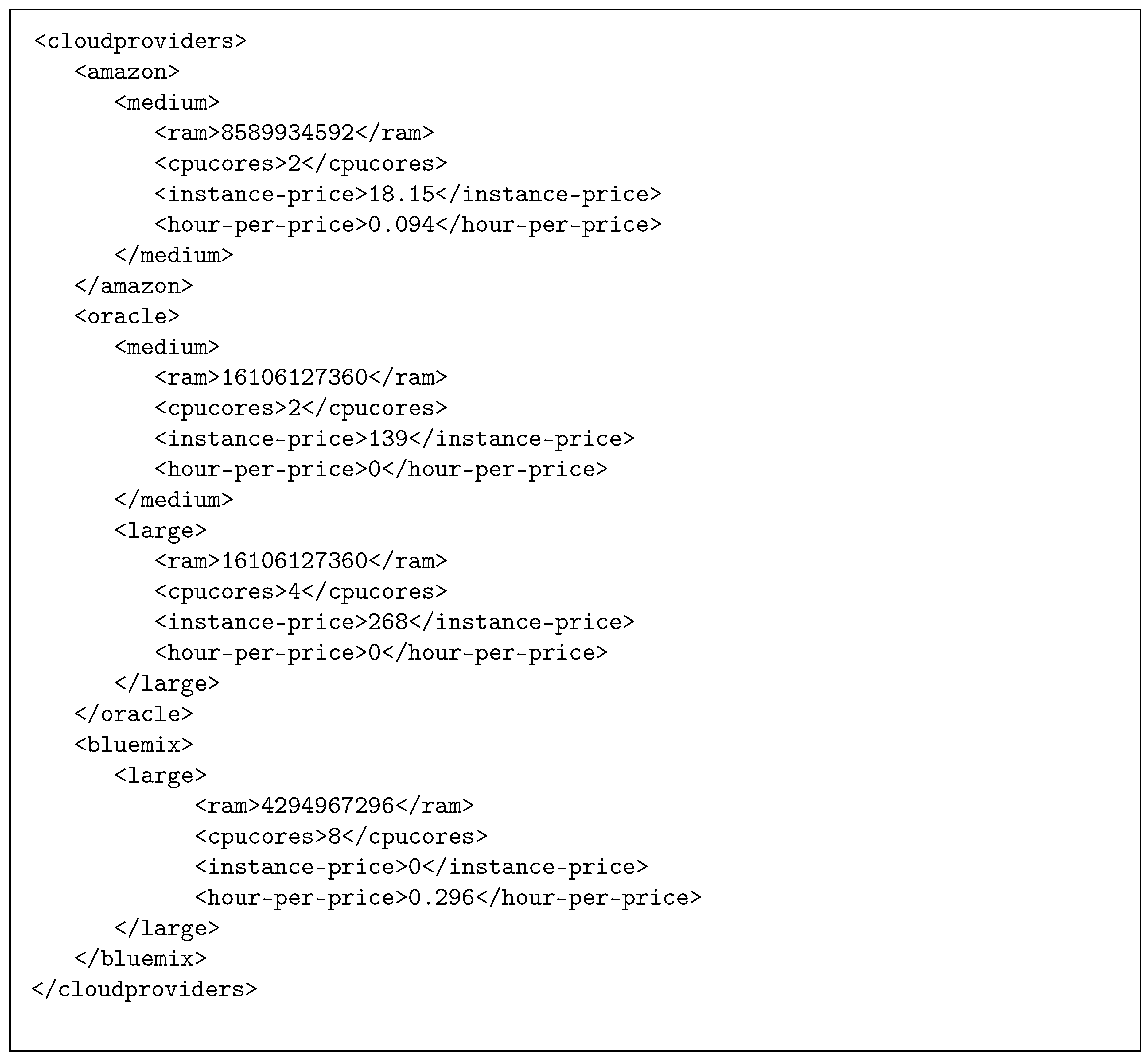

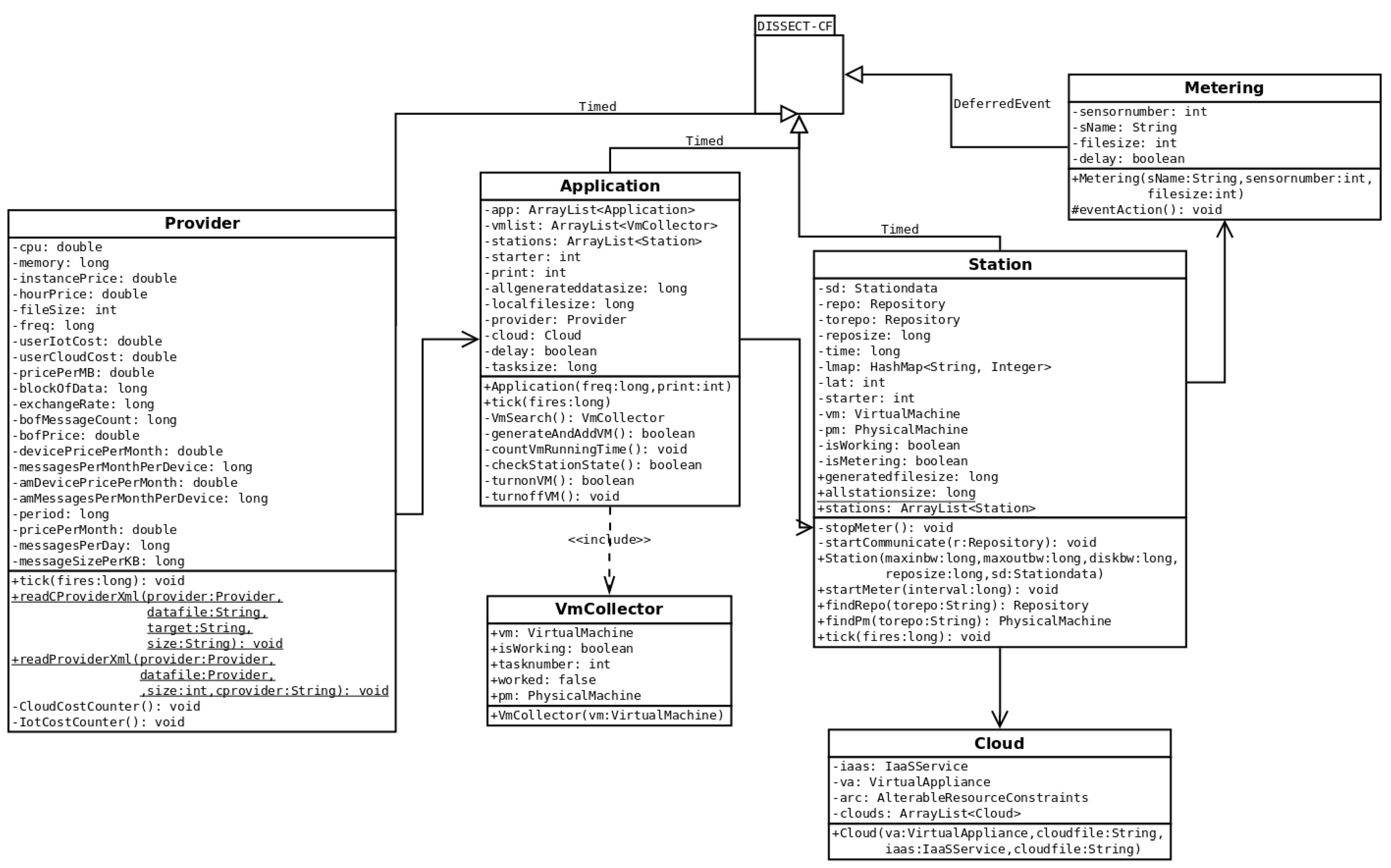

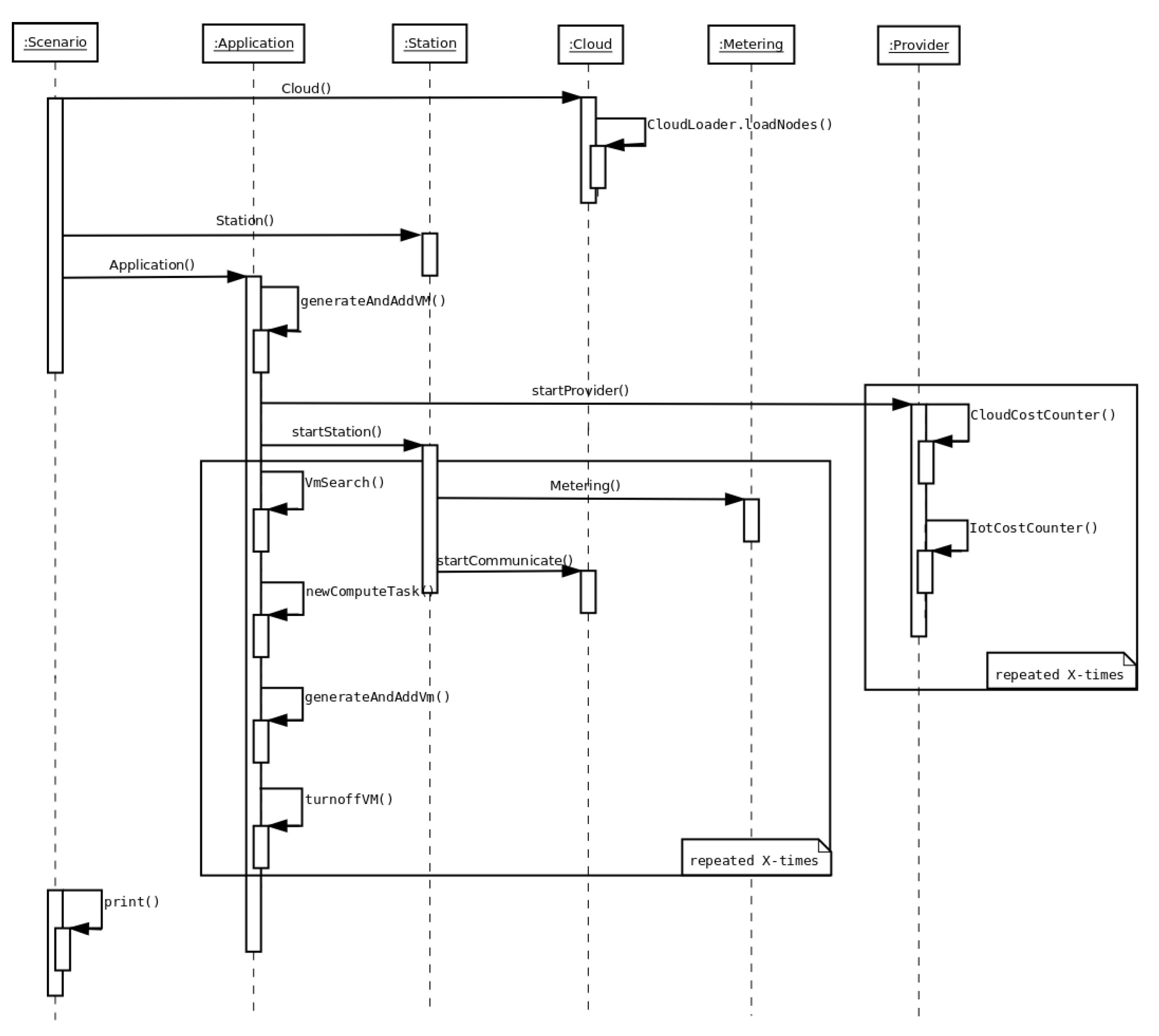

Provider class is the implementation of the IoT Pricing and Cloud Pricing components of the architecture shown in

Figure 1). In the previous section, we defined our proposed cost model with two XML-based, declarative modelling language schemes for representing and configuring IoT and cloud side costs of certain providers. These samples can be used to assign pricing to the corresponding entities within the simulation, and the

Provider class is responsible to load (i.e., read) and manage these values for cost calculations. It can be specified for each simulated IoT cloud system and which provider (thus pricing scheme) to be used for the calculation. If one needs to define a new IoT provider (other than the ones introduced), then the abstract class of

Provider needs to be extended with an overridden method called:

IoTCostCounter(). This extension can use the existing elements of the XML to define its new scheme. If a completely new method is needed for cost calculation, then the relevant XML parsing must also be done by the extension. To define a new cloud provider, one has to change the target and the size attributes (defined in the IoT provider XML file) and keep it in sync with the cloud provider XML file. This could entail the overriding of the

CloudCostCounter() method.
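The extension mechanism described above can be illustrated with a minimal Python sketch (DISSECT-CF itself is written in Java). It assumes a hypothetical XML pricing fragment with `messagesPerBlock` and `blockPrice` elements and a hypothetical per-block charging rule. The method names `iot_cost_counter` and `cloud_cost_counter` only mirror `IoTCostCounter()` and `CloudCostCounter()`; they are not the simulator's actual API.

```python
import math
import xml.etree.ElementTree as ET
from abc import ABC, abstractmethod


class Provider(ABC):
    """Holds one provider's pricing scheme, read from an XML description."""

    def __init__(self, xml_text: str) -> None:
        # Load (i.e., read) the pricing values once, at construction time.
        self.root = ET.fromstring(xml_text)

    def value(self, tag: str) -> float:
        # Look up a numeric child element, e.g. <blockPrice>0.5</blockPrice>.
        text = self.root.findtext(tag)
        if text is None:
            raise KeyError(f"missing pricing element <{tag}>")
        return float(text)

    @abstractmethod
    def iot_cost_counter(self, messages: int, devices: int) -> float:
        """IoT-side cost; every concrete provider must override this."""

    def cloud_cost_counter(self, vm_instances: int, price_per_instance: float) -> float:
        # Default cloud-side rule (assumption): a flat price per VM instance.
        return vm_instances * price_per_instance


class BlockPricedProvider(Provider):
    """Hypothetical IoT provider that charges per started block of messages."""

    def iot_cost_counter(self, messages: int, devices: int) -> float:
        per_block = int(self.value("messagesPerBlock"))
        blocks = math.ceil(messages / per_block)  # a started block is charged fully
        return blocks * self.value("blockPrice")
```

For example, with `messagesPerBlock` set to 1000 and `blockPrice` to 0.5, 2500 messages start three blocks and cost 1.5. A provider with a completely different rule would read its own XML elements inside its override, as the text notes.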

Evaluation with Five Scenarios

In this sub-section, we present five scenarios addressing questions that are likely to be investigated with the help of the extended DISSECT-CF [26]. Namely, our scenarios mainly focus on how resource utilization and management patterns change with sensor behaviour, and how these changes affect the costs of operating the IoT system (e.g., how different sensor data sizes and varying numbers of stations and sensors affect the operation of the simulated IoT system). Note that these scenarios are solely meant to validate our proposed IoT extensions; thus, they are mostly underdeveloped in terms of how a weather service would behave internally.

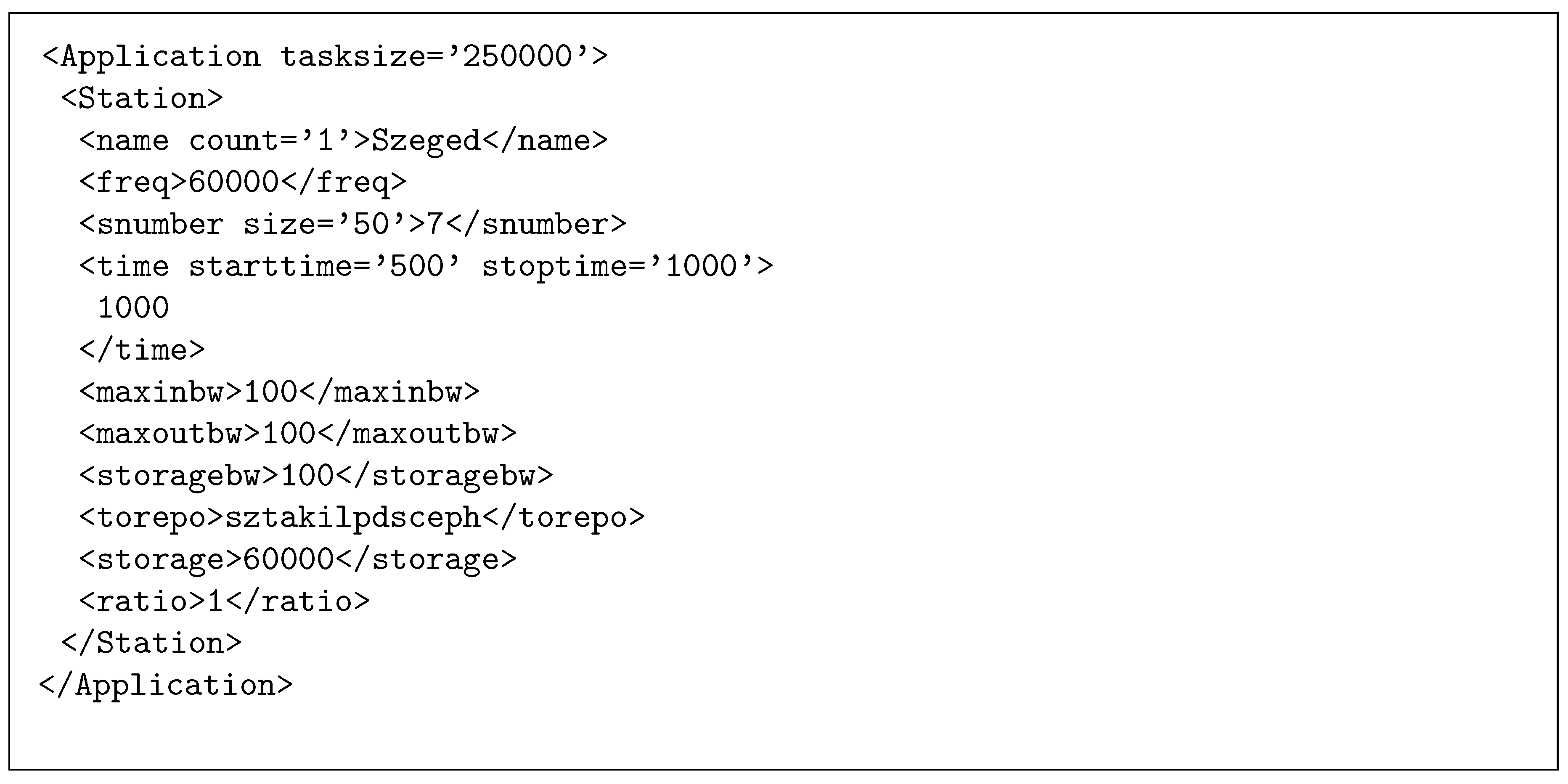

Before getting into the details, we clarify the common behavioural patterns that we used in all of the scenarios below (these were the common starting points for all scenarios unless stated otherwise). First of all, to limit the simulation runtime, all of our experiments limited the station lifetimes to a single day. The start-up period of the stations was selected randomly between 0 and 20 min. The task creator daemon service of our `Application` implementation spawned tasks after the cloud storage had received more than 250 KiB of metering data (see the `tasksize` attribute in Figure 5). This threshold ensured an estimated processing time of 5 min per task. The daemon always emptied the cloud storage completely: the last spawned task was started with less than 250 KiB to process, which scaled down its execution time. The application mainly used Bluemix Large VMs (see Figure 3). Finally, we disabled the dynamic VM decommissioning feature of the application (see step 8 in Section 4).
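The task creation rule above (spawn tasks once more than 250 KiB has accumulated, then empty the storage, with the last task scaled down) can be sketched as follows. The function name and the linear scaling of execution time with data size are our assumptions for illustration, derived from the estimate of 5 min per 250 KiB.

```python
from typing import List, Tuple

TASK_SIZE_KIB = 250        # tasksize threshold from the configuration
MINUTES_PER_FULL_TASK = 5  # estimated processing time of a full task


def spawn_tasks(stored_kib: int) -> List[Tuple[int, float]]:
    """Return (data_kib, est_minutes) for each spawned task; empty if below threshold."""
    if stored_kib <= TASK_SIZE_KIB:
        return []  # not more than 250 KiB yet: wait for more metering data
    tasks = []
    remaining = stored_kib
    while remaining > 0:
        # Full tasks take 250 KiB; the last one takes whatever is left,
        # and its estimated execution time shrinks proportionally.
        chunk = min(TASK_SIZE_KIB, remaining)
        minutes = MINUTES_PER_FULL_TASK * chunk / TASK_SIZE_KIB
        tasks.append((chunk, minutes))
        remaining -= chunk
    return tasks
```

For instance, 600 KiB of stored data yields two full 5 min tasks and one 100 KiB task estimated at 2 min, after which the storage is empty.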

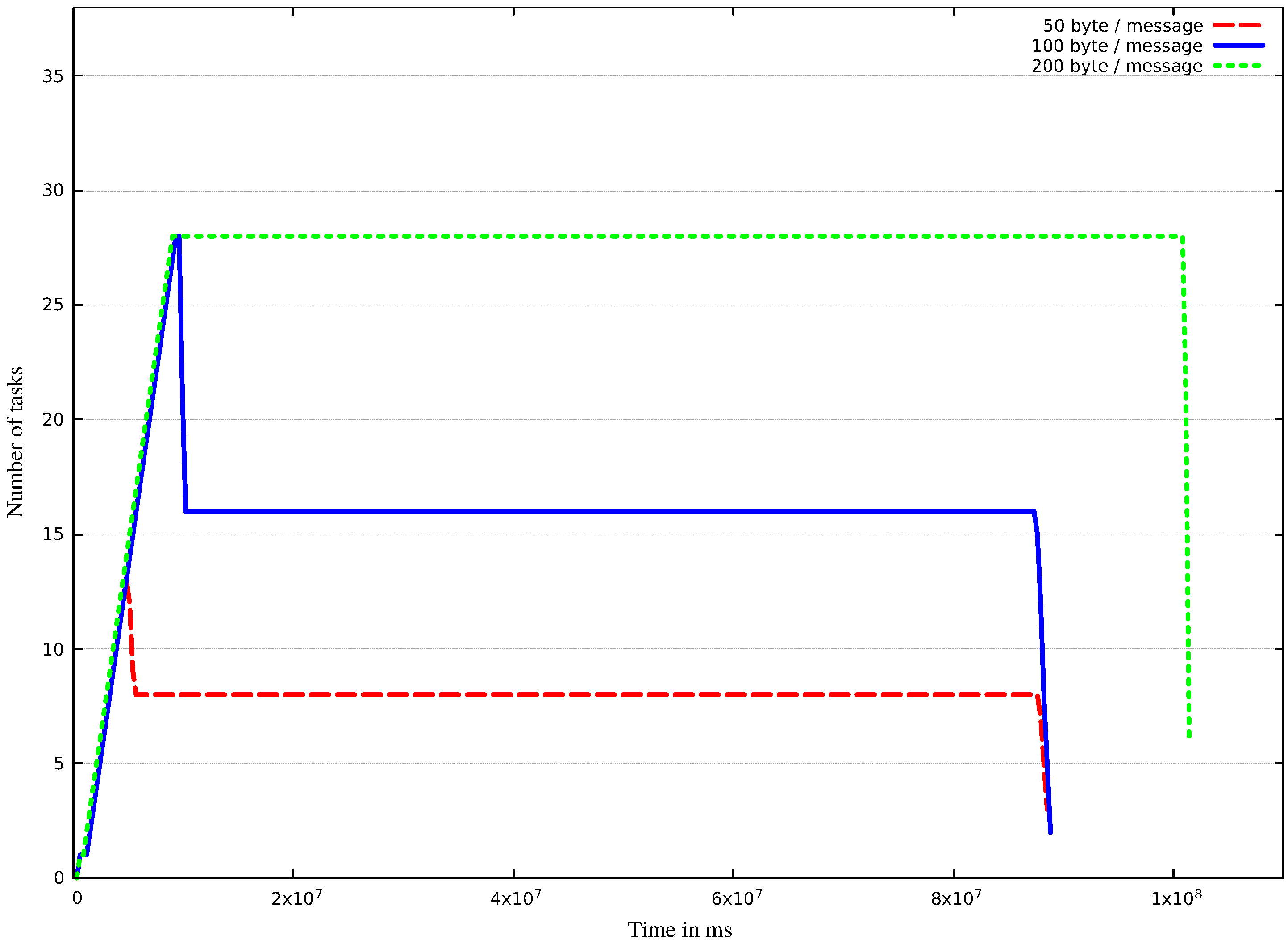

In scenario N

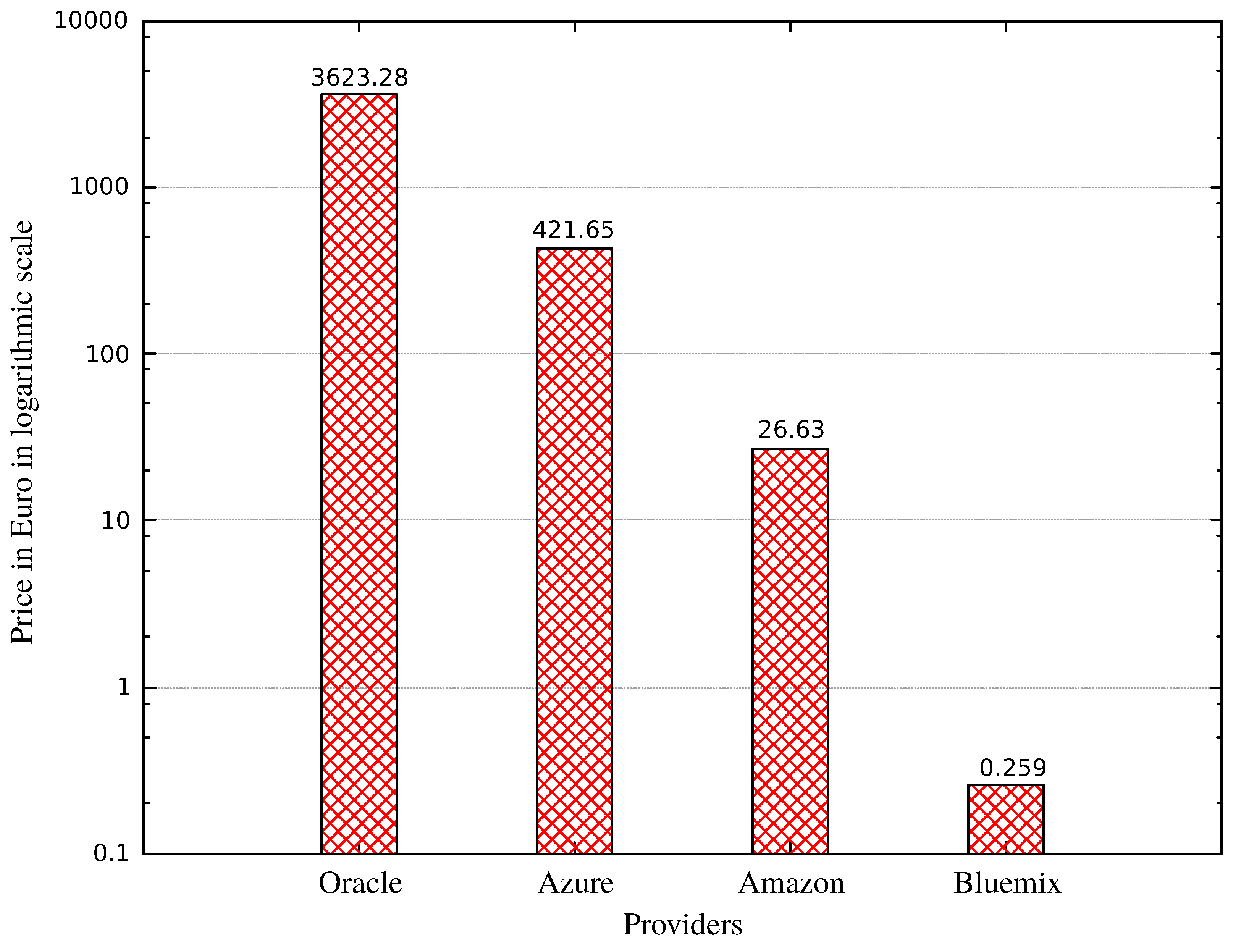

, we varied the amount of data produced by the sensors: we set 50, 100 and 200 bytes for different cases (allowing overheads for storage, network transfer, different data formats and secure encoding etc.). We simulated all stations of the weather service for 24 h. We also investigated how the costs of the IoT side changed, if we would use one of the four IoT providers defined before. The measurement results can be seen in

Figure 7 and

Figure 8, and

Table 1. For the first case with 50 bytes of sensor data, we measured 0.261 MiB of produced data in total, while in the second case of 100 bytes we measured 0.522 MiB, and in the third of 200 bytes we measured 1.044 GBs (showing linear scale up). In the three cases, we needed 12, 27 and 28 VMs to process all tasks, respectively. With the preloaded cloud parameters, the system is allowed to start maximum 28 virtual machines; therefore, in the first case of 50 bytes, our cloud cost was

Euros, in the second case of 100 bytes, the cloud cost was

, and finally, in the last case, our cloud cost was

Euros. The lesson learnt from this scenario is that if we use more than 200 bytes per message, we need stronger virtual machines (and a larger cloud with stronger physical resources) to manage our application, because in the third case the simulation ran for more than 24 h (even though the sensors produced data for only a single day), which increased our costs for time-dependent cloud services. Finally, Table 1 shows how many virtual machines were needed to process all of the generated data in each test case, and how many tasks were generated for the produced data.
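The linear scale-up reported above follows from a simple volume model. In the sketch below, the symbols are our own notation, not taken from the simulator: $N_{\mathrm{st}}$ stations, $n_s$ sensors per station, message size $s$, a simulated period $T$ and a measurement interval $\Delta t$. The lower bound on the task count additionally assumes the 250 KiB task size, since partial tasks can only add to it.

```latex
D = N_{\mathrm{st}} \cdot n_{s} \cdot s \cdot \frac{T}{\Delta t},
\qquad
N_{\mathrm{tasks}} \ge \left\lceil \frac{D}{250\ \mathrm{KiB}} \right\rceil
```

With everything except $s$ held fixed, doubling $s$ doubles $D$, which matches $0.261 \times 2 = 0.522$ and $0.522 \times 2 = 1.044$.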

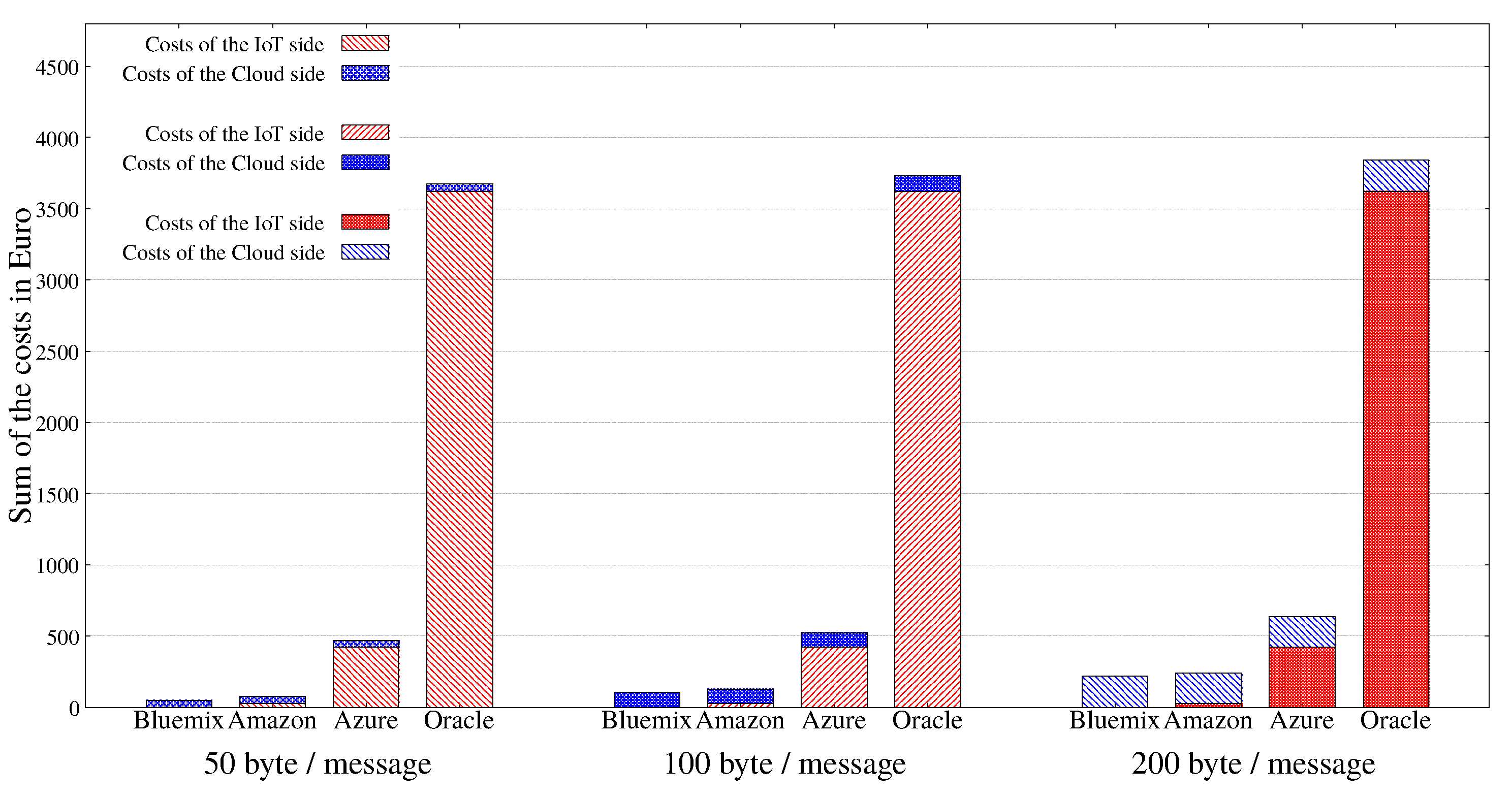

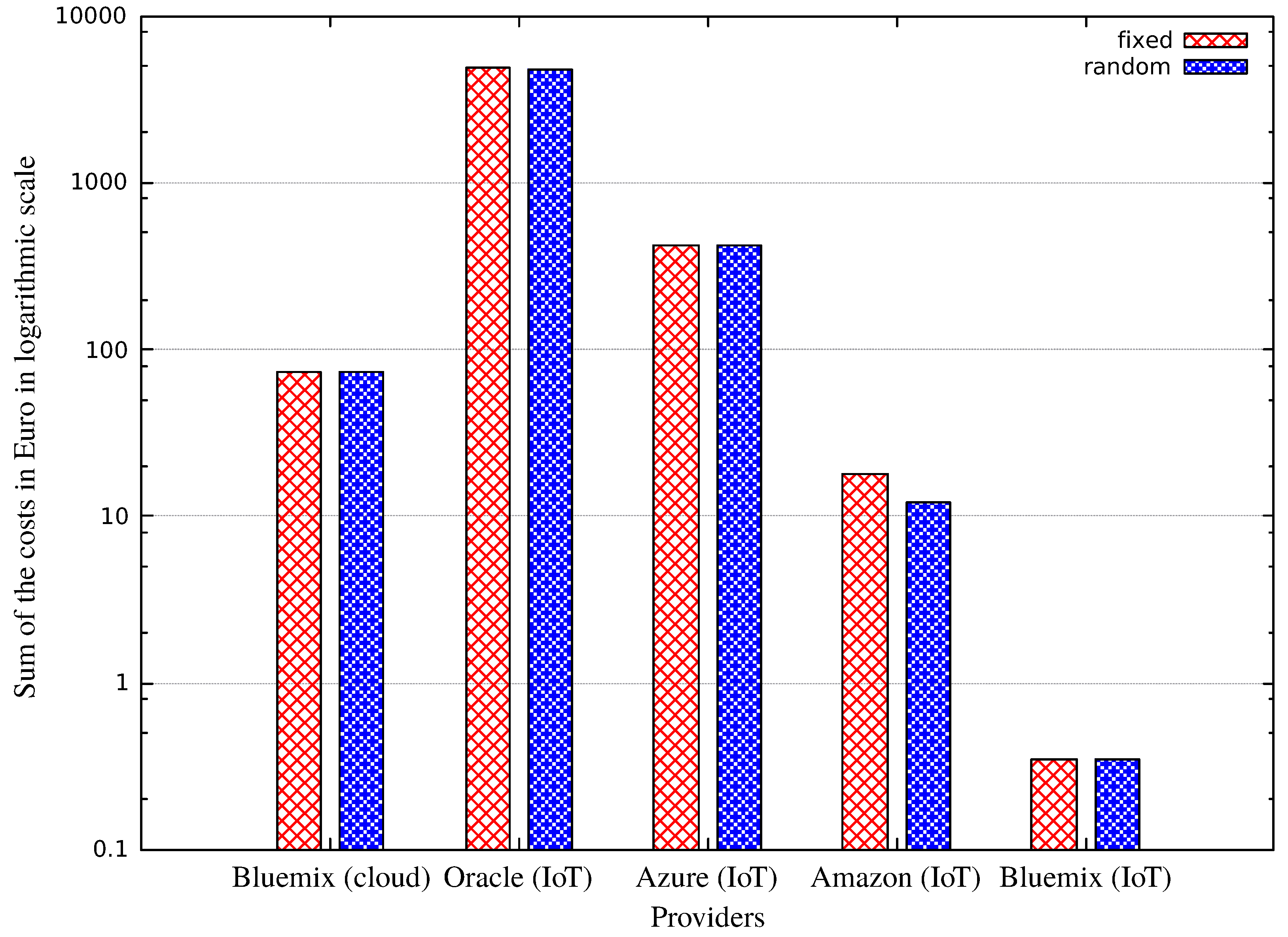

Figure 8 presents a cost comparison of all considered providers. We can see that Oracle's costs are much higher than those of the other three providers in all cases (50-, 100- and 200-byte messages). The main reason is that Oracle charges for each utilized device, which is not the case for the other providers. Our initial estimates show that operating an IoT cloud system with Oracle is only beneficial if the system has at most 200 devices and transfers 1–2 messages per minute per device.
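Why a per-device charge penalizes deployments with many devices can be seen by comparing two pricing rules side by side. The sketch below uses made-up tariffs, not Oracle's or any other provider's real prices: a hypothetical volume-only fee per million messages, and the same fee plus a hypothetical charge for every utilized device. Only the shape of the comparison matters.

```python
def message_priced(total_messages: int, fee_per_million: float) -> float:
    """Hypothetical volume-only tariff: depends on traffic alone."""
    return total_messages / 1_000_000 * fee_per_million


def device_priced(devices: int, total_messages: int,
                  fee_per_device: float, fee_per_million: float) -> float:
    """Hypothetical tariff with an extra charge for every utilized device."""
    return devices * fee_per_device + message_priced(total_messages, fee_per_million)
```

For a fixed total number of messages, the volume-only cost stays flat, while the device-based cost grows linearly with the number of devices. A crowdsourced service with many low-traffic devices is therefore exposed to the per-device term.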

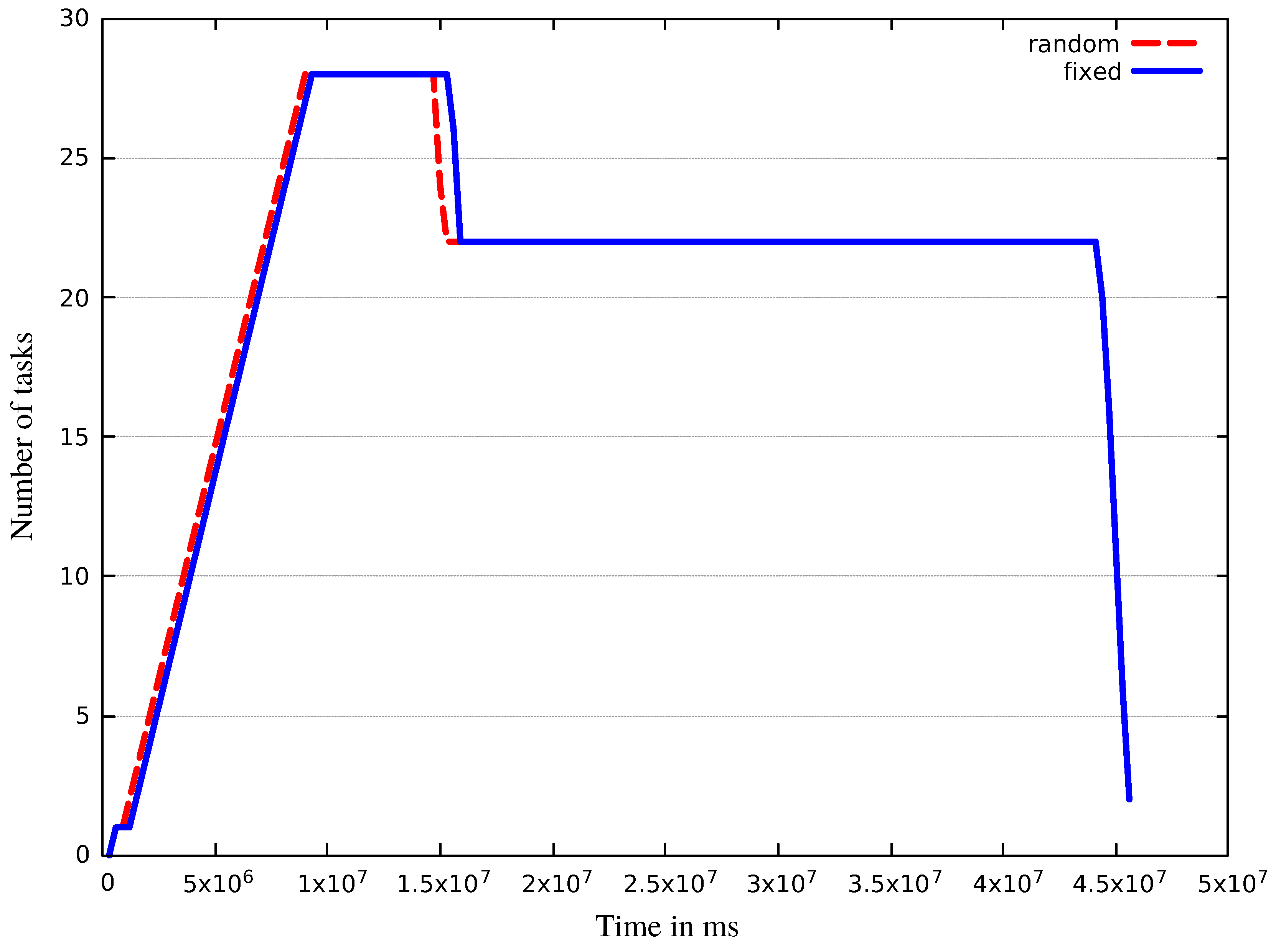

In scenario No. 2, we wanted to examine the effects of varying sensor numbers and sensor data sizes per station to better mimic real-world systems. Therefore, we defined a fixed case using 744 stations with seven sensors each, producing 100 bytes of sensor data per measurement, and a random case with the same 744 stations, each having a randomly sized sensor set (6–8 sensors) and a random sensor data size (50, 100 or 200 bytes/sensor). The results can be seen in Figure 9, Figure 10 and Table 2. As we can see, the differences were minimal; the random case resulted in slightly more tasks. Furthermore, there are only minimal differences between the costs of the IoT providers, but even small configuration differences can cause larger cost variations, as in the case of Amazon.

Table 2 shows how many virtual machines were needed to process all generated data, and how many tasks were generated from the produced data in the fixed and random cases.
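The two station configurations can be sketched as follows. We assume that the sensor count and the data size are drawn independently, once per station; the names `StationConfig`, `fixed_case`, `random_case` and `bytes_per_round` are hypothetical and only serve this illustration.

```python
import random
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class StationConfig:
    sensors: int           # number of sensors on the station
    bytes_per_sensor: int  # size of one measurement of one sensor


def fixed_case(stations: int = 744) -> List[StationConfig]:
    # Every station: seven sensors, 100 bytes per measurement.
    return [StationConfig(7, 100) for _ in range(stations)]


def random_case(stations: int = 744, seed: Optional[int] = None) -> List[StationConfig]:
    # Assumption: sensor count and data size are drawn independently per station.
    rng = random.Random(seed)
    return [StationConfig(rng.randint(6, 8), rng.choice((50, 100, 200)))
            for _ in range(stations)]


def bytes_per_round(config: List[StationConfig]) -> int:
    # Data produced when every sensor of every station measures once.
    return sum(s.sensors * s.bytes_per_sensor for s in config)
```

Under this independence assumption, a random station produces on average 7 × (50 + 100 + 200) / 3 ≈ 817 bytes per round versus 700 bytes in the fixed case, which is one possible explanation for the slightly higher task count of the random case.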

In scenario N

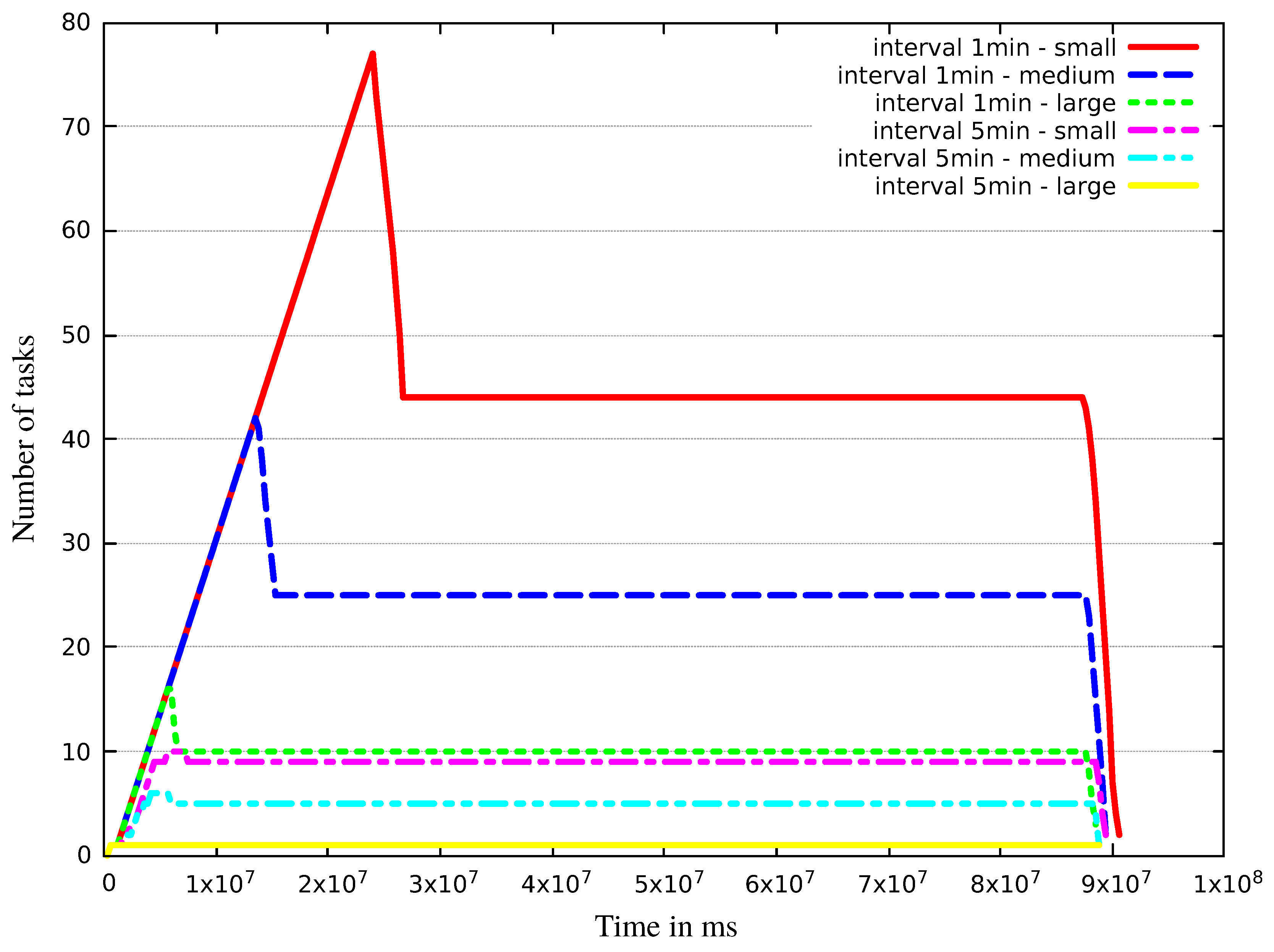

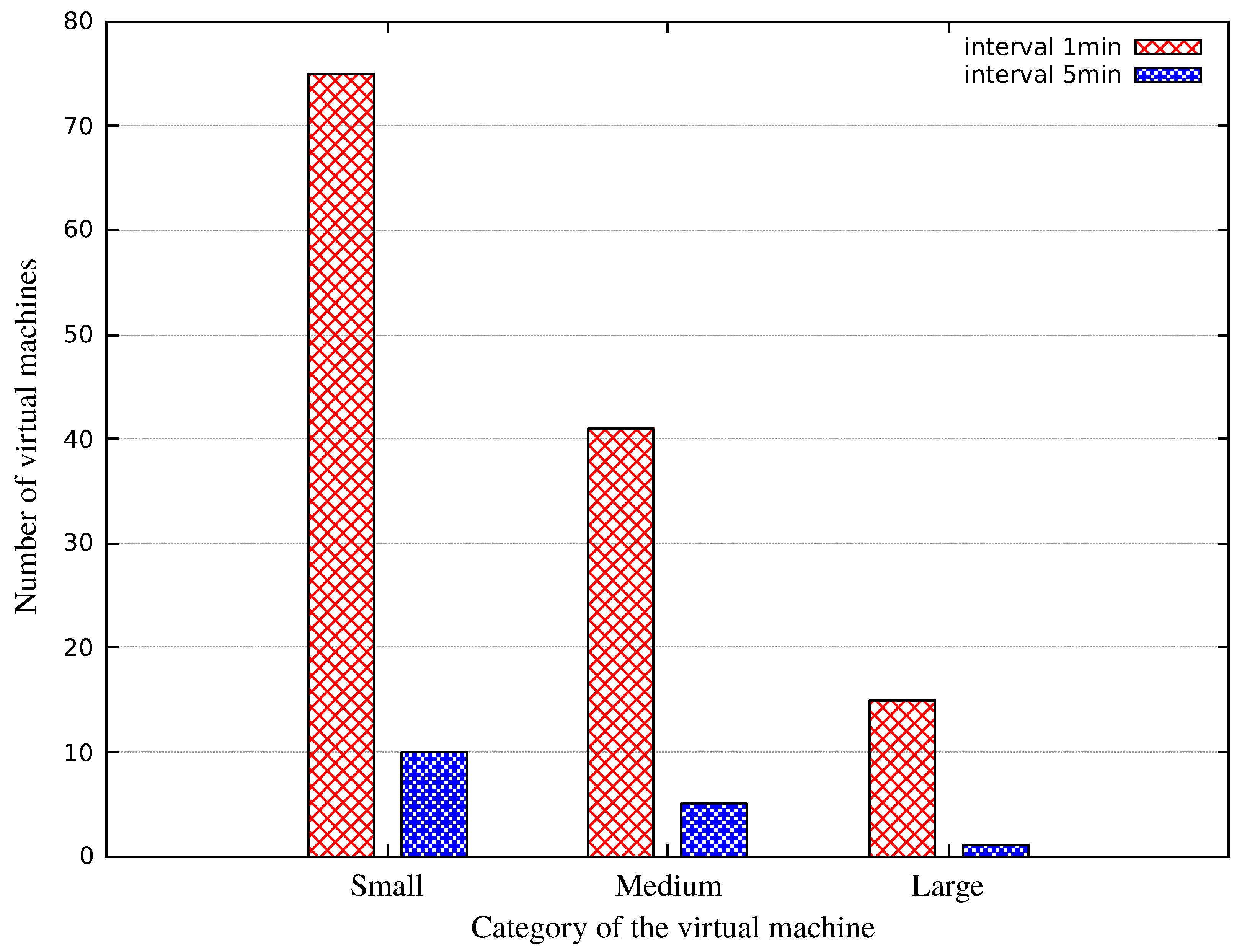

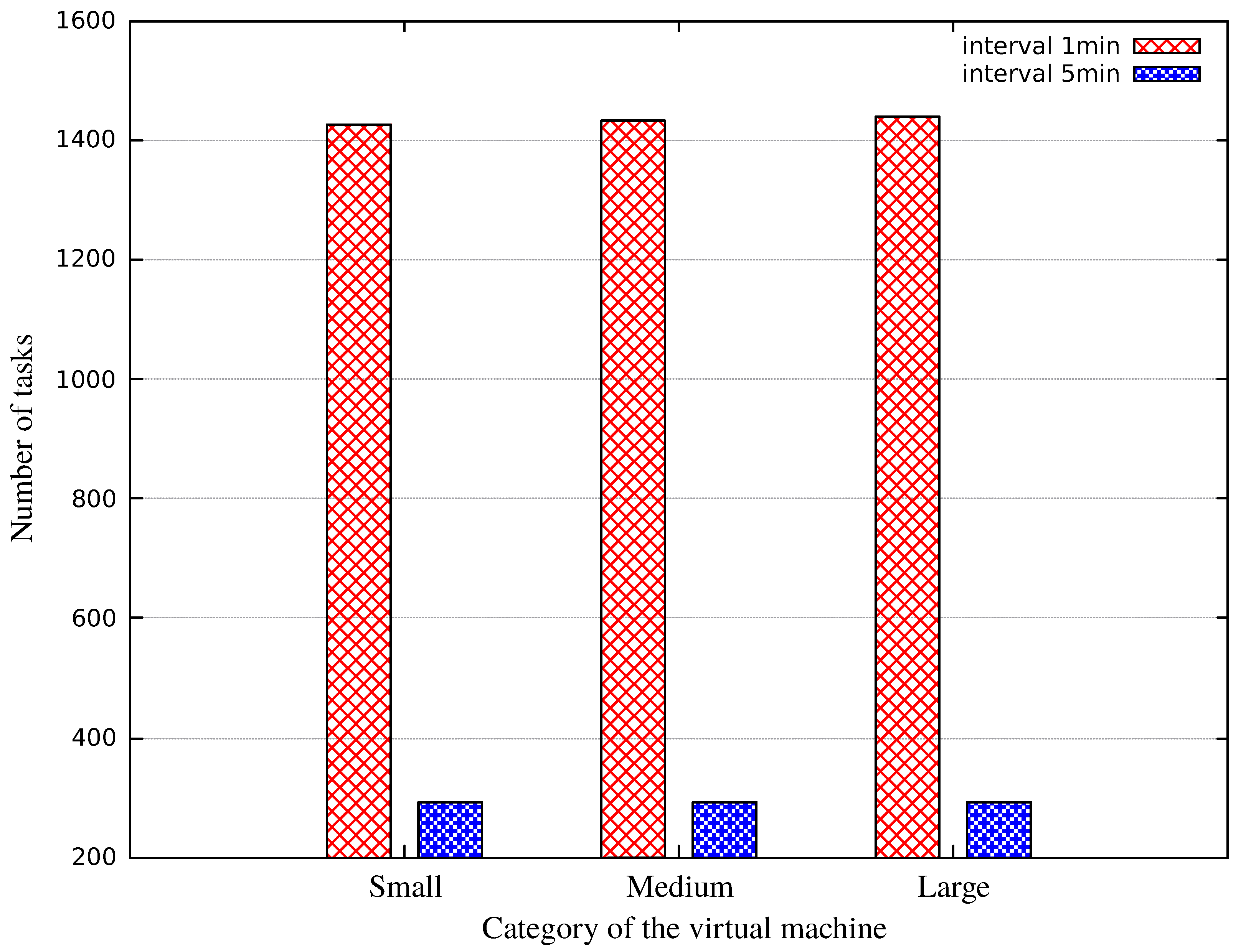

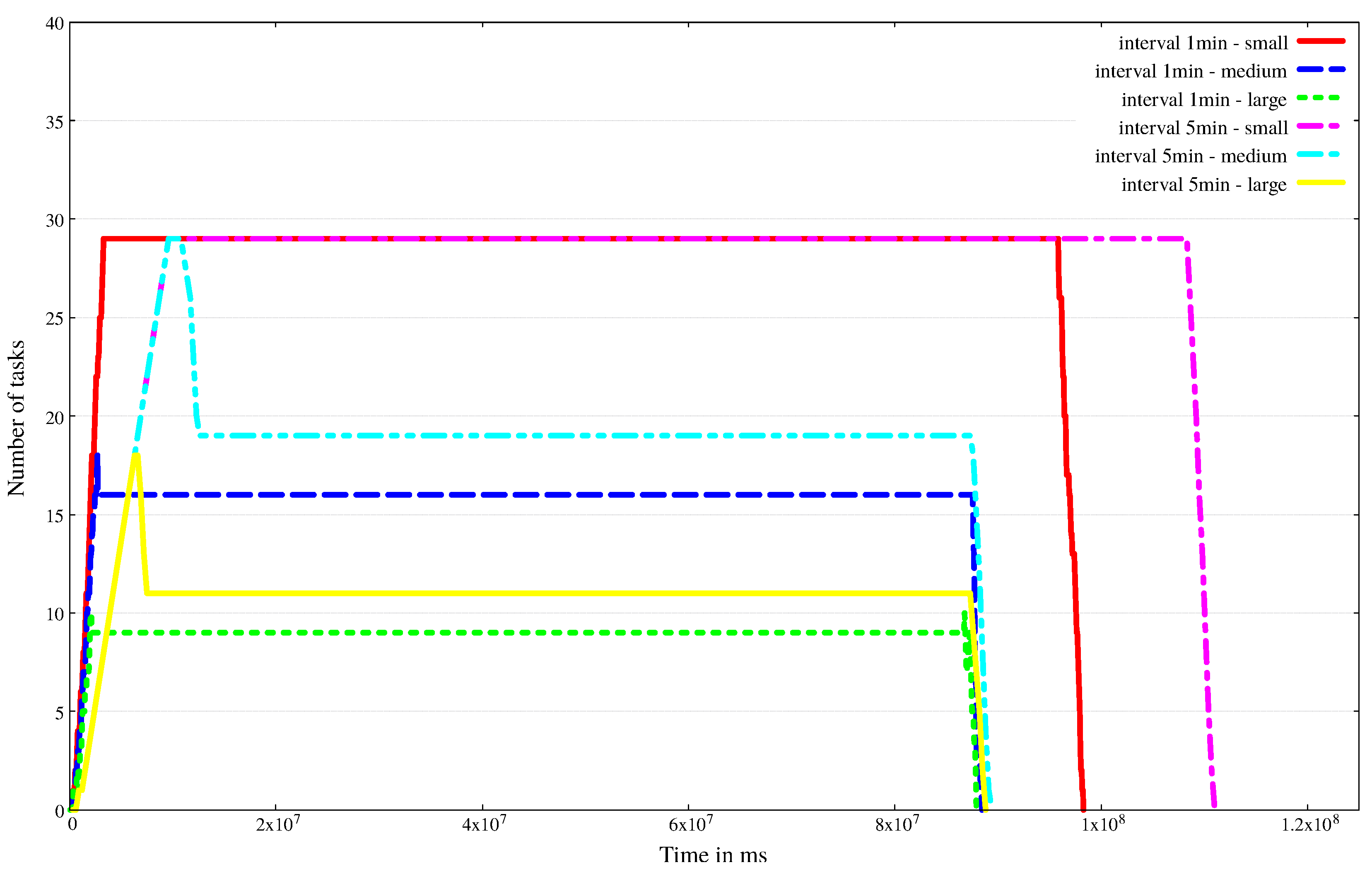

, we examined two sensor data generation frequencies. We set up 600 stations and defined cases for two static frequencies (1 and 5 min). In real life, the varying weather conditions may call for (or result in) such changes. In both cases, the sensors generated our previously estimated 50 bytes. The results can be seen in

Figure 11,

Figure 12 and

Figure 13 and in

Table 3,

Table 4 and

Table 5. The generated data in total: 0.321 GiB for 1 min frequency, 0.064 GiB for 5 min frequency. In this scenario, we used the three categories (small, medium and large) of the Azure cloud provider for both data generation frequencies. The first category contains 1 core and 1.75 GiB memory, the second contains two cores and 3.5 GiB memory, and finally we defined a virtual machine with eight cores and 14 GiB memory for the third category. The application needs more than 1400 tasks to process all generated data by the stations with 1 min frequency, while the data generated by stations with 5 min frequencies needed almost 300 tasks to process them. For this work, the application deployed 75 VMs with the small category by the Azure cloud provider in the 1 min case, and 10 VMs in the 5 min case. For medium category VMs, we needed 41 in the 1 min case, and five VMs in the 5 min case. Finally, 15 large category VMs needed to process in time for the 1 min case, but only one virtual machine was necessary to process all data generated in the 5 min case. As we can see from the results, the cheapest choice for operating the IoT side is Bluemix.
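The task counts above can be sanity-checked against the data volumes. Since a full task processes 250 KiB, ⌈D / 250 KiB⌉ is a lower bound on the number of tasks; the partial tasks created whenever the daemon empties the storage can only add to it. The helper below is our own illustration, not part of the simulator.

```python
import math

KIB_PER_GIB = 1024 * 1024


def min_task_count(data_gib: float, tasksize_kib: int = 250) -> int:
    """Lower bound on tasks: every task processes at most tasksize_kib of data."""
    return math.ceil(data_gib * KIB_PER_GIB / tasksize_kib)


for label, gib in (("1 min", 0.321), ("5 min", 0.064)):
    print(label, min_task_count(gib))
```

The bounds are 1347 and 269 tasks, consistent with the reported "more than 1400" and "almost 300", and the data ratio 0.321 / 0.064 ≈ 5 matches the fivefold difference in generation frequency.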

To summarize, with this scenario we have shown how small changes in the system parameters can affect the number of virtual machines needed for sensor data processing, and the measurements also reflected how these parameters affect the final usage prices.

Table 3, Table 4 and Table 5 show the detailed costs of the three test cases. The IoT costs are the same in all cases, but the cloud-side costs show that the stronger the virtual machines we use, the more we have to pay to operate the system.

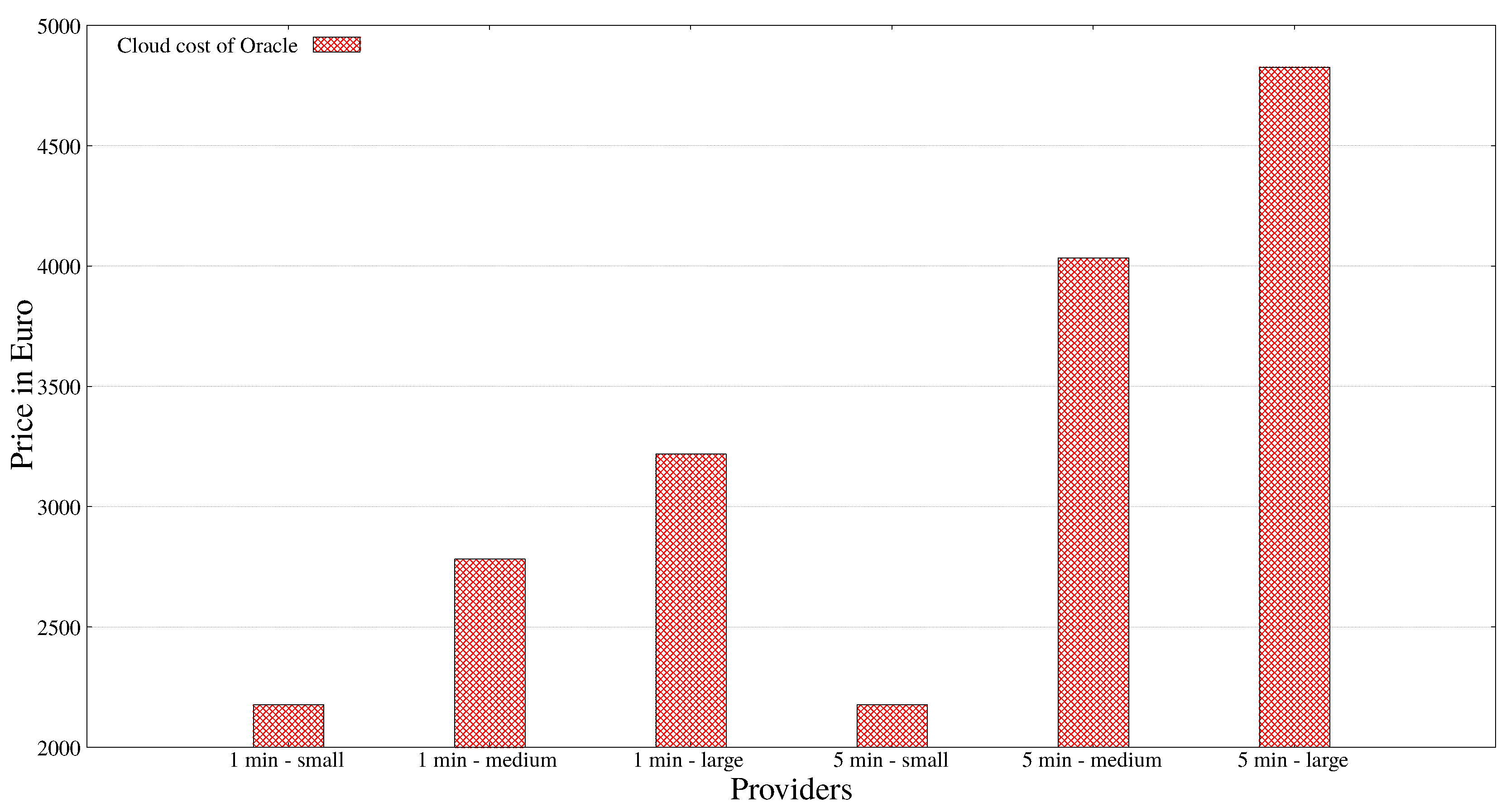

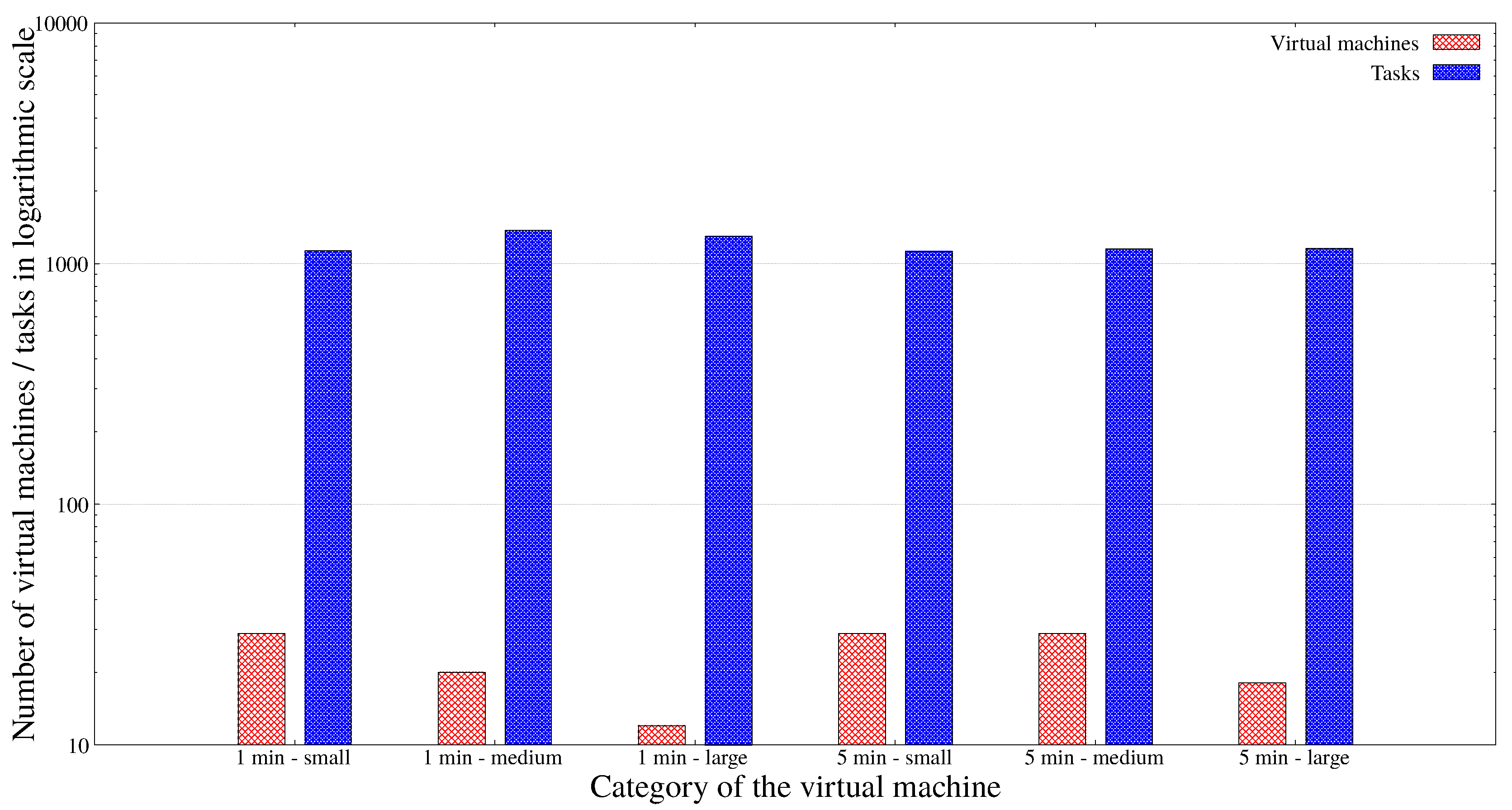

In the three scenarios executed so far, the main application was responsible for processing the sensor data in the cloud and checked the repository for new transfers every minute. In some cases, we observed that only a small amount of data had arrived within this interval (i.e., the task creation frequency). Therefore, in scenario No. 4, we examined what happens if we widen this interval to 5 min, using 487 stations. The results can be seen in Figure 14, Figure 15, Figure 16 and Figure 17. In this scenario, we used the Oracle cloud provider pricing to calculate the cloud-side costs of the running virtual machines. In this simulation, we had three VM categories priced at 75 (small), 139 (medium) and 268 Euros (large) per instance, and we wanted to know which provider offers the cheapest prices when using a 1 min or a 5 min task generation frequency. In all six test cases, we had similar IoT costs, as shown in Figure 14, and the best provider was Bluemix with 0.259 Euros.

Figure 16 shows that we can save money if we choose the small category with either the 1 min or the 5 min interval, because these have the cheapest cloud costs with

Euros. The disadvantage of these categories (shown in Figure 15) is that we needed more time to process all generated data than with the other categories. Finally, in Figure 17, we can see that the number of tasks is almost equal, but we needed different numbers of virtual machines to process these tasks. To summarize, increasing the processing time is not always the best solution for application management and for saving money.
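The effect of the check interval on task creation can be explored with a toy model. The sketch assumes a constant, hypothetical arrival rate in KiB per minute and a daemon that, at every check, spawns tasks only if more than 250 KiB is stored and then empties the storage; real arrival patterns are irregular, which is why the simulator is needed for actual numbers.

```python
from typing import List

TASK_SIZE_KIB = 250


def simulate_checks(rate_kib_per_min: int, interval_min: int,
                    duration_min: int) -> List[int]:
    """Return the sizes (KiB) of all tasks spawned during the simulated period."""
    stored = 0
    tasks: List[int] = []
    for _ in range(interval_min, duration_min + 1, interval_min):
        stored += rate_kib_per_min * interval_min  # data arrived since the last check
        if stored > TASK_SIZE_KIB:
            # Run the storage empty: full tasks first, then one partial task.
            while stored > 0:
                chunk = min(TASK_SIZE_KIB, stored)
                tasks.append(chunk)
                stored -= chunk
    return tasks
```

With an assumed 100 KiB/min over 10 min, checking every minute yields six tasks, three of them small 50 KiB tasks, while checking every 5 min yields four full 250 KiB tasks.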

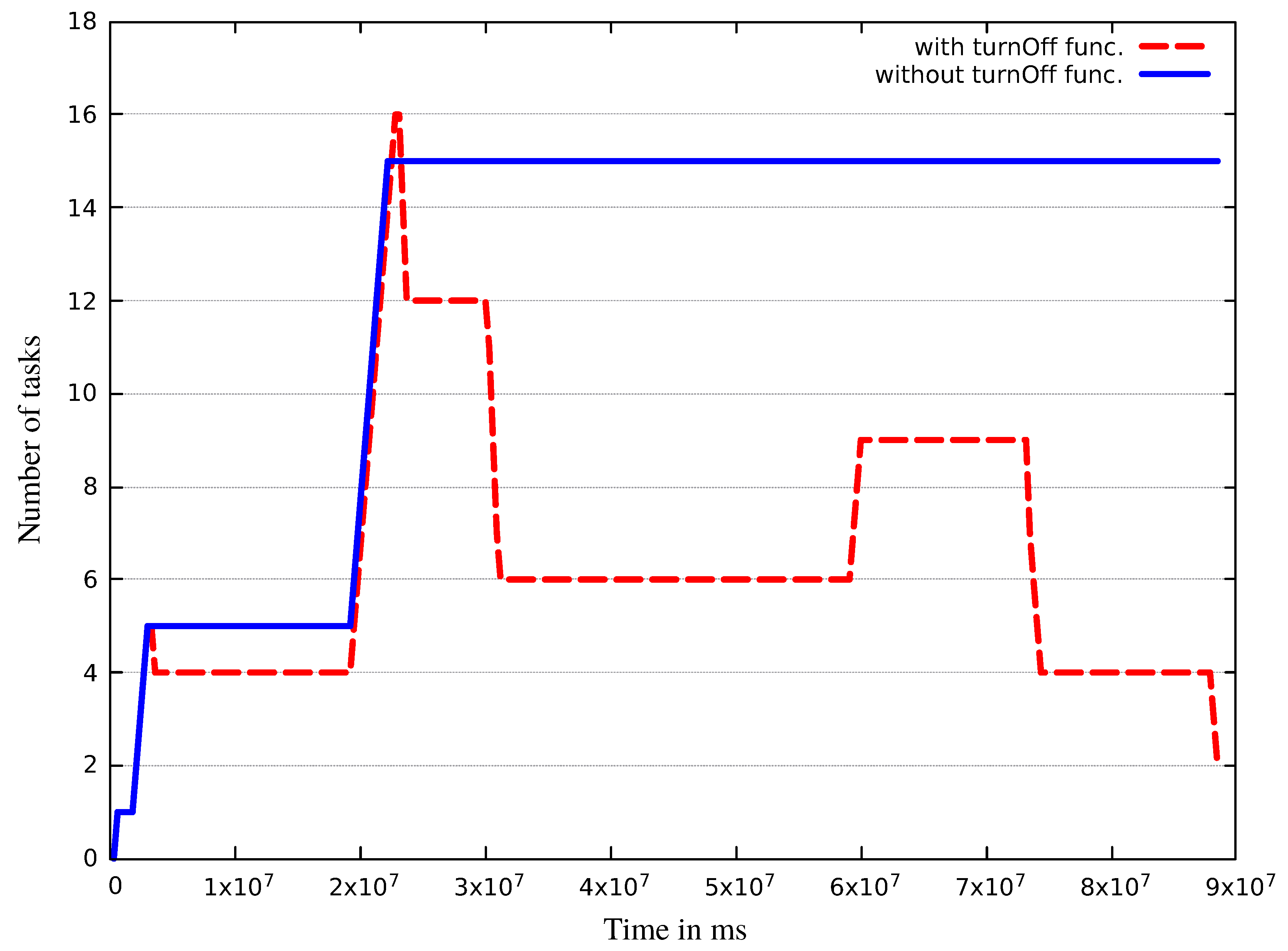

As we model a crowdsourced service, we expect to see more dynamic behaviour regarding the number of active stations. In the previous cases, we used a static number of stations per experiment, while in our final scenario, No. 5, we made the number of stations change dynamically. Such changes may occur due to station or sensor failures, or even sensor replacement. In this scenario, we performed these changes at specific hours of the day: from 12:00 a.m. to 5:00 a.m., we started 200 stations; from 5:00 a.m. to 8:00 a.m., we operated 700 stations; from 8:00 a.m. to 4:00 p.m., we scaled them down to 300; from 4:00 p.m. to 8:00 p.m., we scaled them up to 500; and finally, in the last round from 8:00 p.m. to 12:00 a.m., we set the number back to 200. In this experiment, we also wanted to examine the effects of VM decommissioning; therefore, we executed two cases, one with and one without turning off unused VMs. In both cases, we set the `tasksize` attribute to the usual 250 KiB. The results can be seen in Figure 18. We can see that without turning off the unused VMs, we kept 15 VMs alive from 6:00 p.m. (resulting in more over-provisioning), while in the other case the number of running VMs changed dynamically to match the number of tasks to be processed.
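The station schedule and the two decommissioning policies can be sketched as below. The hourly granularity, the function names and the assumption that, without decommissioning, the VM pool never shrinks (it keeps the largest count reached so far) are ours, for illustration only.

```python
from itertools import accumulate
from typing import List

# (start_hour, end_hour, active_stations) for the final scenario
SCHEDULE = [(0, 5, 200), (5, 8, 700), (8, 16, 300), (16, 20, 500), (20, 24, 200)]


def stations_at(hour: int) -> int:
    """Number of active stations during the given hour of the day (0-23)."""
    for start, end, count in SCHEDULE:
        if start <= hour < end:
            return count
    raise ValueError("hour must be in 0..23")


def running_vms(required: List[int], turn_off: bool) -> List[int]:
    """VMs alive per step: with turn-off they follow demand, else they never shrink."""
    if turn_off:
        return list(required)
    return list(accumulate(required, max))
```

Summed over the day, the schedule amounts to 8300 station-hours. Without turn-off, a demand sequence such as 2, 5, 3, 4 VMs keeps 5 VMs running after the peak, which mirrors the over-provisioning visible in Figure 18.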

Table 6 shows what happens to the application operating costs if we do not turn off the unused, but still running, virtual machines. The cheapest IoT provider is Bluemix with

Euros, and we can save almost 38 Euros using the VM turnOff function. If we used Oracle as the cloud provider, we would pay 75 Euros per virtual machine instance of the smallest category, resulting in 1125 Euros for operating the cloud side of our application.
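The Oracle figure above can be checked against the 15 VMs kept alive without decommissioning (linking these two numbers is our reading of the text, assuming one flat smallest-category price per instance):

```latex
C_{\mathrm{cloud}} = n_{\mathrm{VM}} \cdot p_{\mathrm{small}} = 15 \times 75\ \mathrm{EUR} = 1125\ \mathrm{EUR}
```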

In summary, in this section we presented five scenarios focusing on various properties of IoT cloud systems. We have shown that with our extended simulator we can investigate the behaviour and operating costs of these systems, and thereby contribute to the development of better design and management solutions in this research field.