A Combinational Buffer Management Scheme in Mobile Opportunistic Network

Abstract

1. Introduction

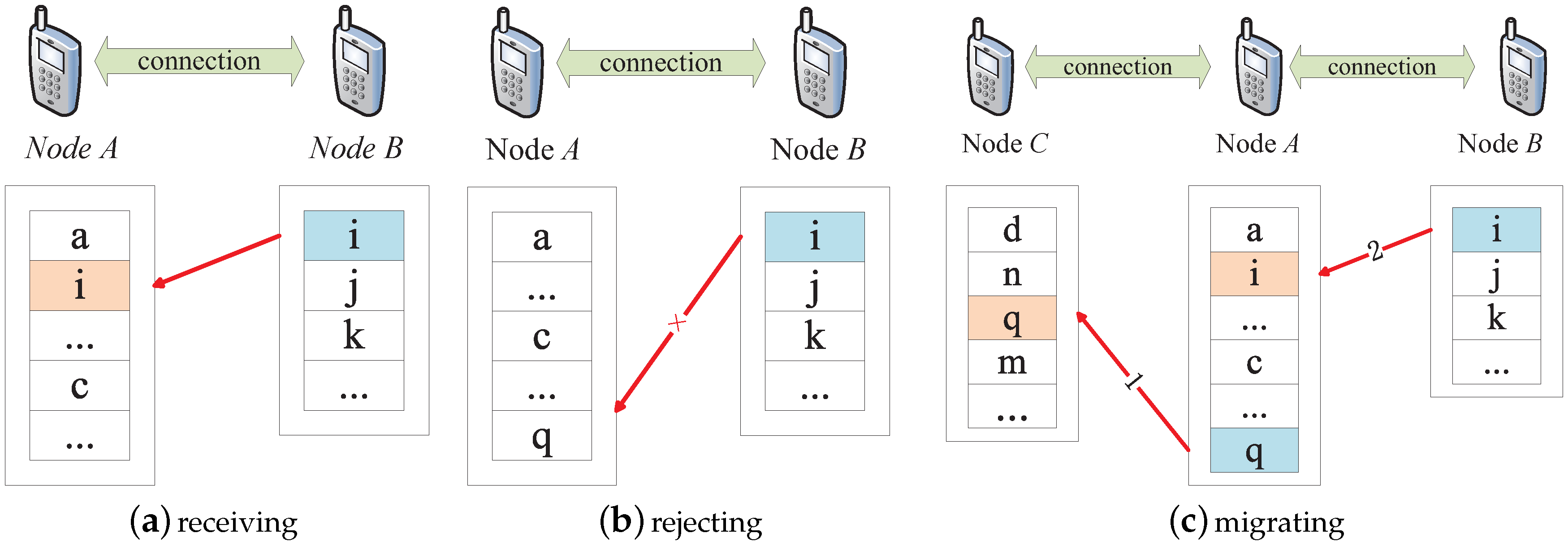

- We integrate the attributes of nodes into the utility value of messages and decide whether to receive a new message based on the utility value of the message and that of the node.

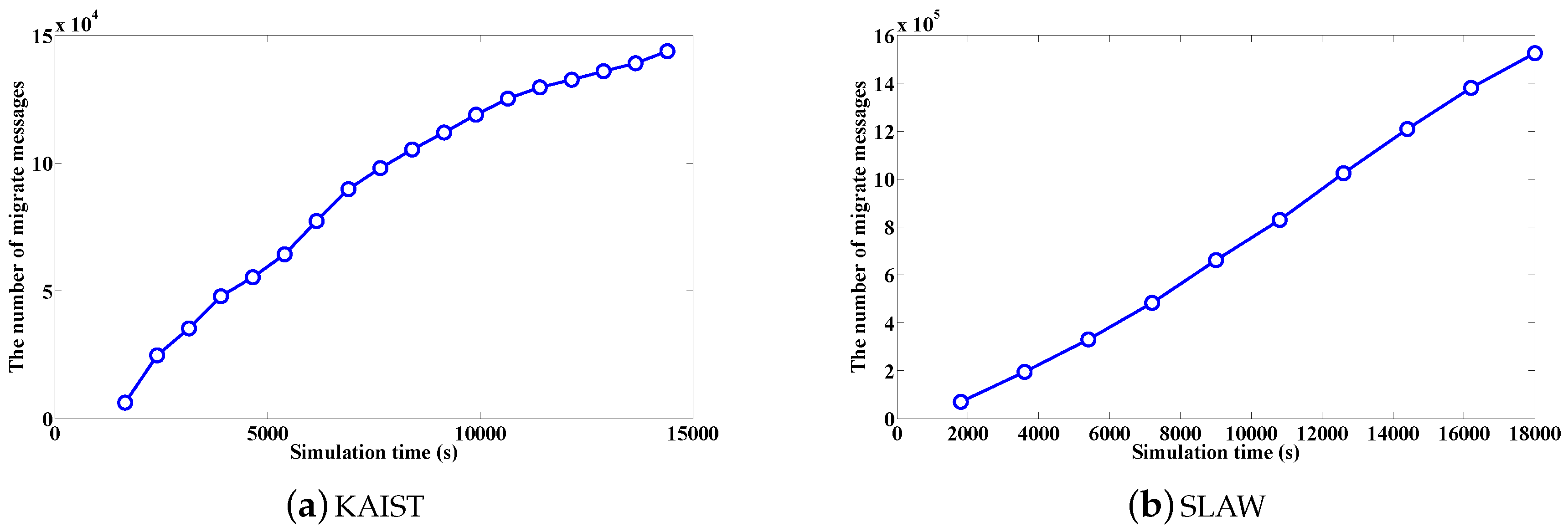

- When a node's buffer is full, we migrate messages to a neighboring node rather than deleting them.
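The first contribution describes an admission decision. The minimal sketch below shows the shape of such a check. It does not reproduce CBM's utility formulas. `Message`, `should_accept`, and the rule "accept when the buffer has room, otherwise accept only if the message's utility exceeds a node-level value `node_utility`" are hypothetical stand-ins for the paper's actual comparison.

```python
from dataclasses import dataclass


@dataclass
class Message:
    msg_id: str
    size_mb: float
    utility: float  # hypothetical: a utility value that already folds in node attributes


def should_accept(new_msg: Message, buffered: list[Message],
                  capacity_mb: float, node_utility: float) -> bool:
    """Hypothetical admission rule, not CBM's exact criterion.

    Accept the message outright if it fits in the remaining buffer space;
    otherwise accept it only when its utility exceeds the node-level value.
    """
    used_mb = sum(m.size_mb for m in buffered)
    if used_mb + new_msg.size_mb <= capacity_mb:
        return True
    return new_msg.utility > node_utility
```

In a full buffer, accepting a message means some buffered message must leave. The second contribution addresses that case.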

2. Related Works

2.1. Single Standard

2.2. Multiple Standards

2.3. Migration Strategy

3. Buffer Management

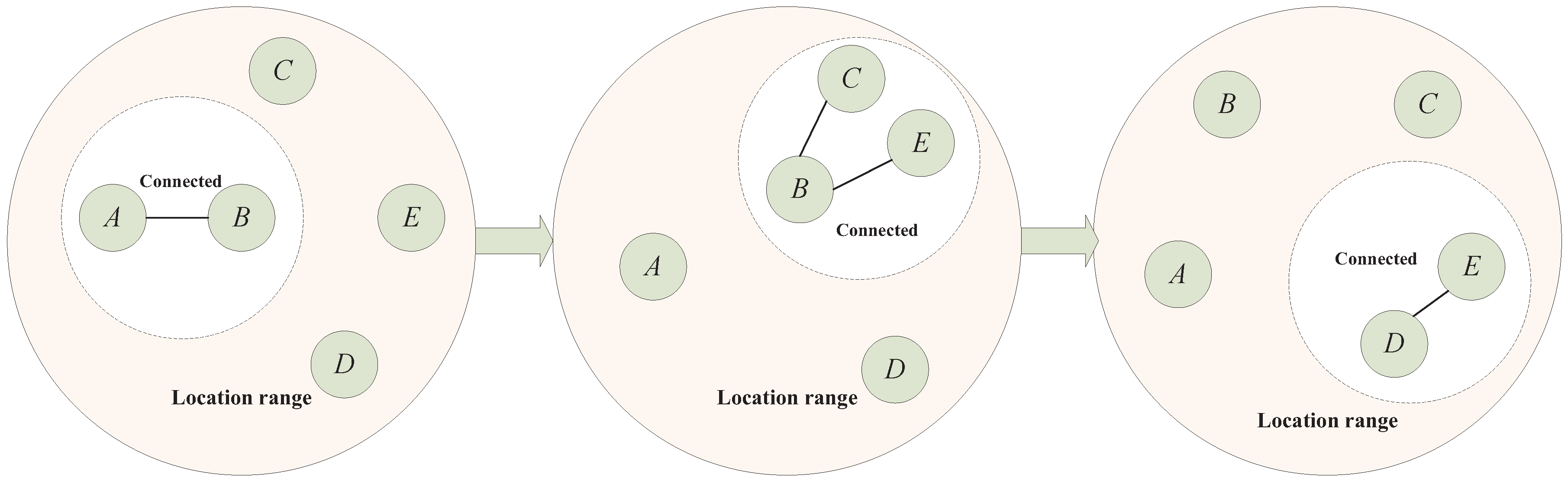

3.1. Preliminaries

- Each node has a limited buffer.

- Nodes move independently of one another, and different nodes have different contact rates.

- All links have the same bandwidth.

- If a contact is too short or the data rate too low, a message transmission cannot be completed.
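The third and fourth assumptions together reduce to a simple feasibility check: a transfer finishes only if the contact lasts at least as long as the message takes to send at the common link rate. This is a minimal sketch that measures sizes in megabytes and rates in megabits per second. The name `LINK_BANDWIDTH_MBPS` and its value of 2 Mbit/s are hypothetical, because the link rate is not listed among the simulation parameters.

```python
# Hypothetical common rate shared by every link (third assumption), in Mbit/s.
LINK_BANDWIDTH_MBPS = 2.0


def transfer_completes(size_mb: float, contact_duration_s: float,
                       bandwidth_mbps: float = LINK_BANDWIDTH_MBPS) -> bool:
    """Return True if a message of size_mb can be fully sent during one contact."""
    # Convert megabytes to megabits (x8), then divide by the link rate.
    required_s = size_mb * 8 / bandwidth_mbps
    return contact_duration_s >= required_s
```

For example, at 2 Mbit/s a 1 MB message needs 4 s of contact, so a 3.9 s encounter leaves it incomplete.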

3.2. Queuing Strategy

3.3. Utility Value Calculation

3.4. Evaluation Method

Algorithm 1. Message forwarding and migration strategy of CBM.
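The following sketch illustrates only the migration idea behind Algorithm 1 and is not the algorithm itself. It frees buffer space by removing the lowest-utility message first and moving it to a connected neighbor. It deletes the message only when no neighbor can hold it. The `Node` class, `make_room`, the per-message utility values, and the rule of choosing the neighbor with the most free space are all hypothetical. Transfer time over the link is also ignored.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    node_id: str
    capacity_mb: float
    # msg_id -> (size_mb, utility); utility values are hypothetical inputs
    buffer: dict[str, tuple[float, float]] = field(default_factory=dict)

    def free_mb(self) -> float:
        return self.capacity_mb - sum(size for size, _ in self.buffer.values())


def make_room(node: Node, needed_mb: float, neighbors: list[Node]) -> list[str]:
    """Free at least needed_mb on node, migrating before deleting.

    Returns the IDs of messages that had to be dropped because no
    neighbor had enough free space to take them.
    """
    dropped: list[str] = []
    while node.free_mb() < needed_mb and node.buffer:
        # Evict the lowest-utility message first.
        victim = min(node.buffer, key=lambda mid: node.buffer[mid][1])
        size, utility = node.buffer.pop(victim)
        # Hypothetical neighbor choice: the one with the most free space that fits it.
        candidates = [n for n in neighbors if n.free_mb() >= size]
        if candidates:
            target = max(candidates, key=lambda n: n.free_mb())
            target.buffer[victim] = (size, utility)
        else:
            dropped.append(victim)
    return dropped
```

Migration keeps the evicted copy in the network, so it can still reach its destination. Deletion removes it for good.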

4. Simulation

4.1. Network Model and Simulation Environment

- KAIST is a real-world dataset that records the daily activities of 32 students living in a campus dormitory in Daejeon, Korea, who carried Garmin GPS 60CSx handheld receivers from 26 September 2006 to 3 October 2007. The receivers recorded the current position every 10 s with an accuracy of 3 m. Participants walked most of the time during the experiment but occasionally traveled by bus, trolley, car, or subway train. A total of 92 daily trajectories were collected.

- SLAW is a mobility model built from GPS traces of human walks: 226 daily traces collected from 101 volunteers at five different outdoor sites over five hours. Participants at each site share similar characteristics, for example students on the same university campus or visitors to the same theme park. Through the common places participants visit and their walking patterns, SLAW can represent the social context among them.

- Delivery ratio. The ratio of the number of messages successfully delivered to their destinations to the total number of messages generated.

- Overhead ratio. The total number of messages and message copies in the network divided by the number of original messages.

- Average delay. The average delay of all messages successfully delivered to their destination nodes.

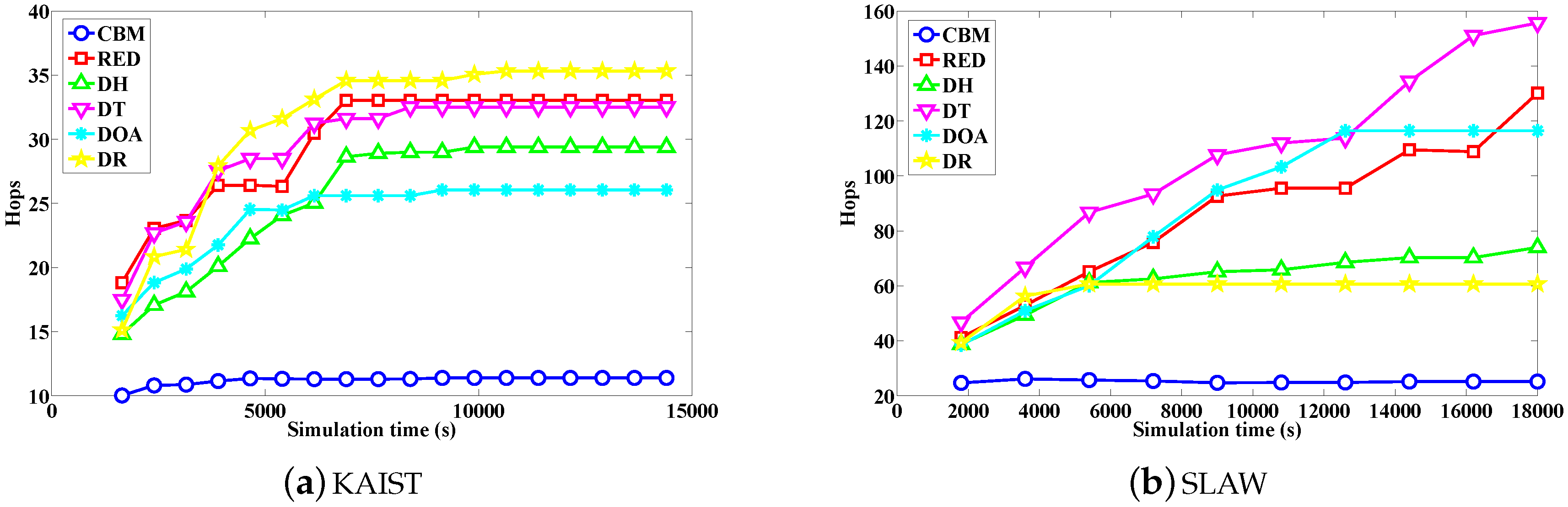

- Hops. The average number of forwarding hops taken by messages from the source node to the destination node.
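The four metrics can be computed directly from a per-message simulation log. This is a minimal sketch that assumes a hypothetical `MessageRecord` format. In it, `copies` counts every copy of a message that existed in the network, including the original. Delay and hops are averaged over delivered messages only, since undelivered messages have neither.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class MessageRecord:
    created_s: float
    copies: int                          # hypothetical: all copies ever in the network, original included
    delivered_s: Optional[float] = None  # None if the message never reached its destination
    hops: Optional[int] = None           # hop count of the delivered copy


def metrics(records: list[MessageRecord]) -> dict[str, float]:
    """Compute delivery ratio, overhead ratio, average delay, and average hops."""
    delivered = [r for r in records if r.delivered_s is not None]
    result = {
        # Delivered messages over all generated messages.
        "delivery_ratio": len(delivered) / len(records),
        # All messages and their copies over the number of original messages.
        "overhead_ratio": sum(r.copies for r in records) / len(records),
    }
    if delivered:
        result["average_delay_s"] = mean(r.delivered_s - r.created_s for r in delivered)
        result["average_hops"] = mean(r.hops for r in delivered)
    return result
```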

4.2. Simulation Results and Discussion

4.2.1. Overall Performance

4.2.2. Analysis of Message Migration

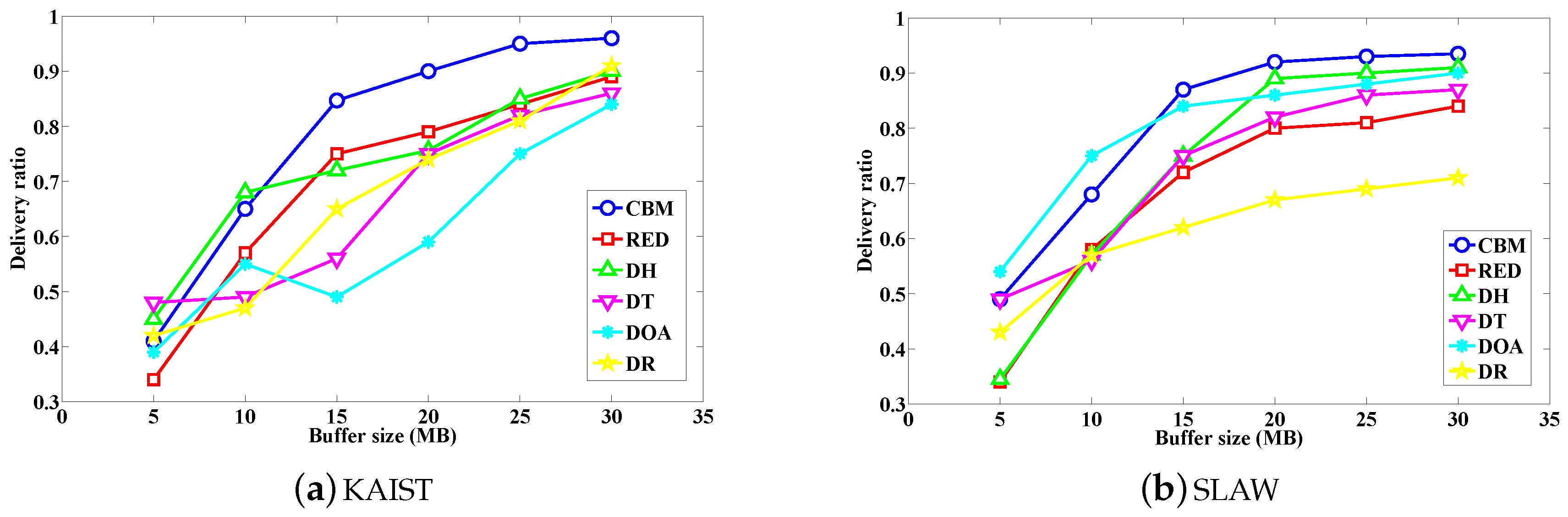

4.2.3. Impact of Buffer Size on the Delivery Ratio

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Parameter | Value |
|---|---|
| Simulation field size | 600 × 600 m |
| Simulation time (KAIST/SLAW) | 15 × s/18 × s |
| Number of nodes (KAIST/SLAW) | 90/500 |
| Transmission range | 25 m |
| Node buffer size | 20 MB |
| Message size | [0.5, 1] MB |
| Message TTL | 300 s |
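A short calculation from the parameter table shows how quickly buffers fill. A 20 MB buffer holds between 20 and 40 messages, depending on whether every message is 1 MB or 0.5 MB. The function name below is illustrative only.

```python
import math

NODE_BUFFER_MB = 20.0               # node buffer size from the parameter table
MESSAGE_SIZE_RANGE_MB = (0.5, 1.0)  # message size range from the parameter table


def buffer_capacity_in_messages(buffer_mb: float,
                                size_range_mb: tuple[float, float]) -> tuple[int, int]:
    """Return (fewest, most) whole messages that fit in the buffer."""
    smallest, largest = size_range_mb
    # Fewest fit when every message is the largest size, most when every one is the smallest.
    return math.floor(buffer_mb / largest), math.floor(buffer_mb / smallest)


print(buffer_capacity_in_messages(NODE_BUFFER_MB, MESSAGE_SIZE_RANGE_MB))  # (20, 40)
```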

| Scheme | KAIST Overhead Ratio | KAIST Average Delay (s) | SLAW Overhead Ratio | SLAW Average Delay (s) |
|---|---|---|---|---|
| CBM | 1340.55 | 1101.56 | 15,305.41 | 1292.61 |
| RED | 4009.45 | 1442.41 | 40,731.24 | 1487.67 |
| DH | 2372.80 | 1920.67 | 26,255.48 | 1316.42 |
| DT | 3972.88 | 1190.45 | 40,674.63 | 1494.95 |
| DOA | 3691.13 | 1324.83 | 42,903.37 | 1467.42 |
| DR | 4075.63 | 1691.94 | 54,101.54 | 1445.23 |
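Both metrics in the results table are lower-is-better. A small script makes CBM's margins explicit by computing the percentage reduction against each baseline. The values are copied from the table, and `reduction_vs` is an illustrative helper.

```python
# (KAIST overhead, KAIST delay s, SLAW overhead, SLAW delay s), copied from the results table
RESULTS = {
    "CBM": (1340.55, 1101.56, 15305.41, 1292.61),
    "RED": (4009.45, 1442.41, 40731.24, 1487.67),
    "DH": (2372.80, 1920.67, 26255.48, 1316.42),
    "DT": (3972.88, 1190.45, 40674.63, 1494.95),
    "DOA": (3691.13, 1324.83, 42903.37, 1467.42),
    "DR": (4075.63, 1691.94, 54101.54, 1445.23),
}
COLUMNS = ("KAIST overhead", "KAIST delay", "SLAW overhead", "SLAW delay")


def reduction_vs(baseline: str, scheme: str = "CBM") -> dict[str, float]:
    """Percentage by which `scheme` lowers each metric relative to `baseline`."""
    return {
        col: 100.0 * (b - s) / b
        for col, b, s in zip(COLUMNS, RESULTS[baseline], RESULTS[scheme])
    }


for name in RESULTS:
    if name != "CBM":
        print(name, {k: round(v, 1) for k, v in reduction_vs(name).items()})
```

Every reduction is positive, so CBM has the lowest value in all four columns. For example, CBM's KAIST overhead ratio is about 43.5% below DH's. The narrowest margin is SLAW average delay against DH, at about 1.8%.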

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Yuan, P.; Yu, H. A Combinational Buffer Management Scheme in Mobile Opportunistic Network. Future Internet 2017, 9, 82. https://doi.org/10.3390/fi9040082
