Correction of Pushbroom Satellite Imagery Interior Distortions Independent of Ground Control Points

Abstract

1. Introduction

2. Materials and Methods

2.1. Fundamental Theory

2.2. Distortion Detection Method

2.2.1. Calibration Model

2.2.2. Distortion Detection Method

2.2.3. Solution of Error Equations

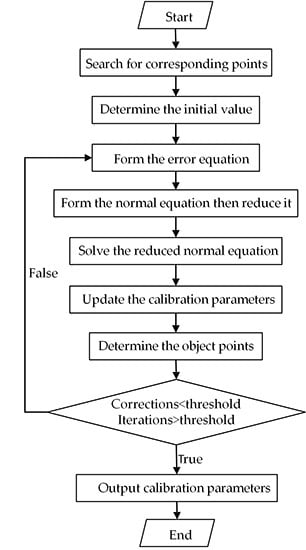

2.3. Processing Procedure

- (1)

- (2) Determine the initial values of the unknown parameters. The calibration parameters can be initialized with the laboratory calibration values measured before launch. Although these values change during launch owing to factors such as stress release, they are still suitable as initial values of the calibration parameters. On this basis, the corrections to the calibration parameters are initialized to zero, and the unknown object coordinates are determined by forward intersection of corresponding image points.

- (3) Form the error equations point by point. Construct the linearized calibration equation for each point according to Equation (6), and assemble the equations of all points into the error equation given in Equation (9).

- (4) Form the normal equation and reduce it. The normal equation is formed according to Equation (11); reducing it yields Equation (12), the reduced normal equation in the corrections to the calibration parameters.

- (5) Solve the reduced normal equation with the iteration by correcting characteristic value (ICCV) method to obtain the corrections to the calibration parameters.

- (6) Update the calibration parameters by adding the corrections.

- (7) Determine the object point coordinates by forward intersection with the updated calibration parameters.

- (8) Repeat steps (3)–(7) until the calibration parameters converge and stabilize; the procedure then outputs the updated calibration parameters and terminates. Empirical thresholds on the number of iterations and on the magnitude of the corrections can be used to stop the iteration.
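
The iterative procedure can be sketched in Python as below. This is a minimal sketch under stated assumptions, not the authors' implementation: Equations (6), (9), (11) and (12) are not reproduced in this section, so the sketch assumes a generic linearized error equation v = A x + B t - l with weight matrix P, where x holds the corrections to the calibration parameters and t the corrections to the object coordinates. The object-coordinate block is eliminated to give the reduced normal equation, which is then solved with the ICCV update (M + I) x_{k+1} = W + x_k. The function names and the `linearize` callback (which would wrap the calibration model and the forward intersection) are hypothetical.

```python
import numpy as np


def reduced_normal_equation(A, B, l, P):
    """Step (4): normal equation of v = A x + B t - l, reduced to x only.

    x: corrections to the calibration parameters (kept)
    t: corrections to the object coordinates (eliminated)
    """
    N11 = A.T @ P @ A
    N12 = A.T @ P @ B          # N21 = N12.T because P is symmetric
    N22 = B.T @ P @ B          # block-diagonal (one block per point) in practice
    W1 = A.T @ P @ l
    W2 = B.T @ P @ l
    N22_inv = np.linalg.inv(N22)
    M = N11 - N12 @ N22_inv @ N12.T
    W = W1 - N12 @ N22_inv @ W2
    return M, W


def iccv_solve(M, W, tol=1e-12, max_iter=10_000):
    """Step (5): iteration by correcting characteristic value (ICCV).

    Iterates (M + I) x_{k+1} = W + x_k; its fixed point satisfies M x = W.
    """
    n = M.shape[0]
    K = np.linalg.inv(M + np.eye(n))
    x = np.zeros(n)
    for _ in range(max_iter):
        x_new = K @ (W + x)
        if np.max(np.abs(x_new - x)) < tol:
            return x_new
        x = x_new
    return x


def calibrate(linearize, x0, eps=1e-8, max_outer=20):
    """Steps (3)-(8): outer loop with empirical stopping thresholds.

    `linearize(x)` is a hypothetical callback that redoes the forward
    intersection (step 7) and returns (A, B, l, P) at calibration parameters x.
    """
    x = np.asarray(x0, dtype=float)
    for _ in range(max_outer):                  # threshold on iterations
        A, B, l, P = linearize(x)
        dx = iccv_solve(*reduced_normal_equation(A, B, l, P))
        x = x + dx                              # step (6)
        if np.max(np.abs(dx)) < eps:            # threshold on corrections
            break
    return x


if __name__ == "__main__":
    # Synthetic linear toy problem (not GF-1 data): 3 calibration and 4 object unknowns.
    rng = np.random.default_rng(0)
    A, B = rng.normal(size=(40, 3)), rng.normal(size=(40, 4))
    y = A @ np.array([0.5, -1.0, 2.0]) + B @ rng.normal(size=4) + 1e-3 * rng.normal(size=40)
    print(calibrate(lambda x: (A, B, y - A @ x, np.eye(40)), np.zeros(3)))
```

Adding the identity matrix in each ICCV step keeps every solve well conditioned even if the reduced normal matrix is nearly singular, which is a plausible concern for an over-parameterized calibration model; the cost is slower convergence when its smallest eigenvalue approaches zero.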

3. Results and Discussion

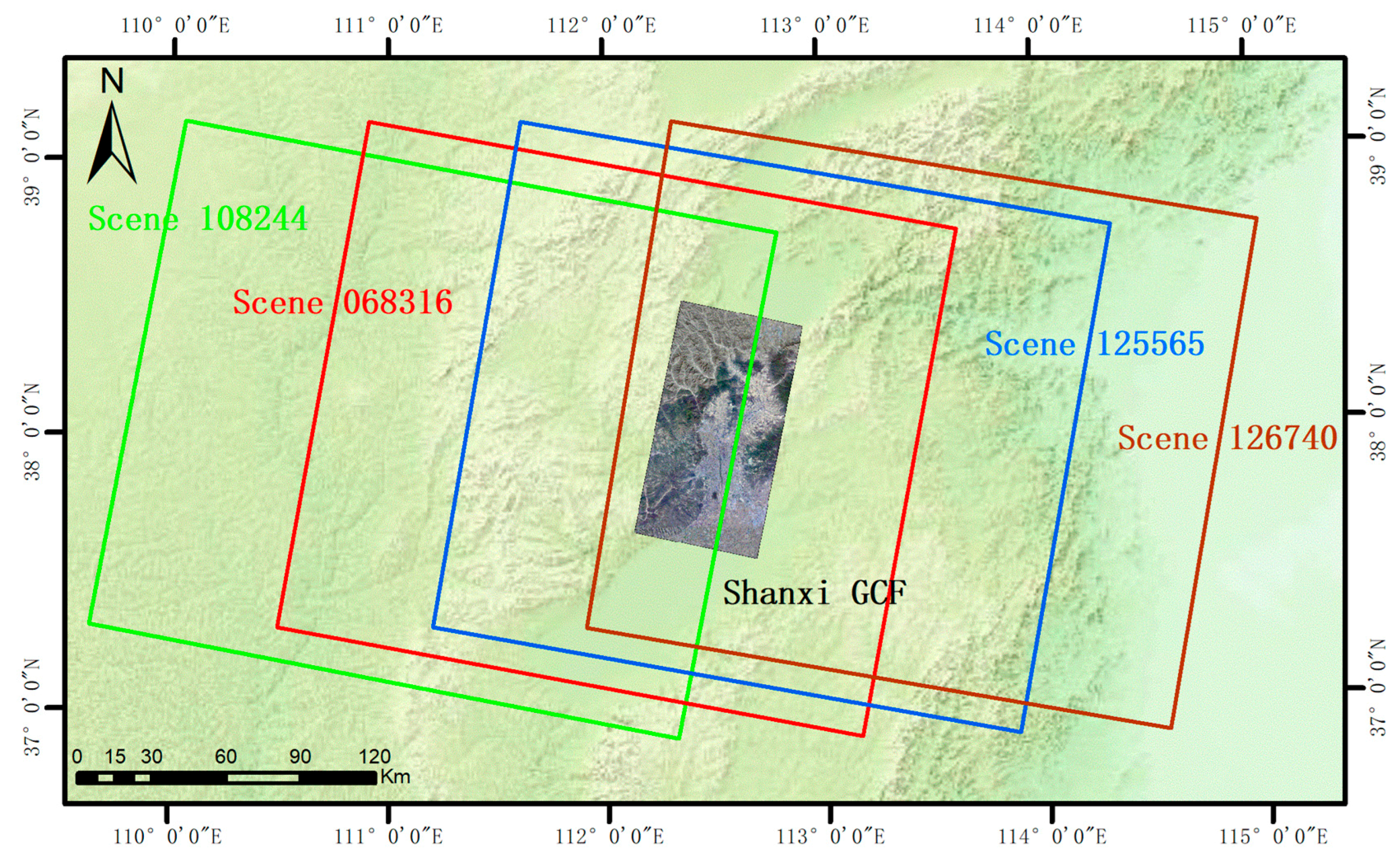

3.1. Datasets

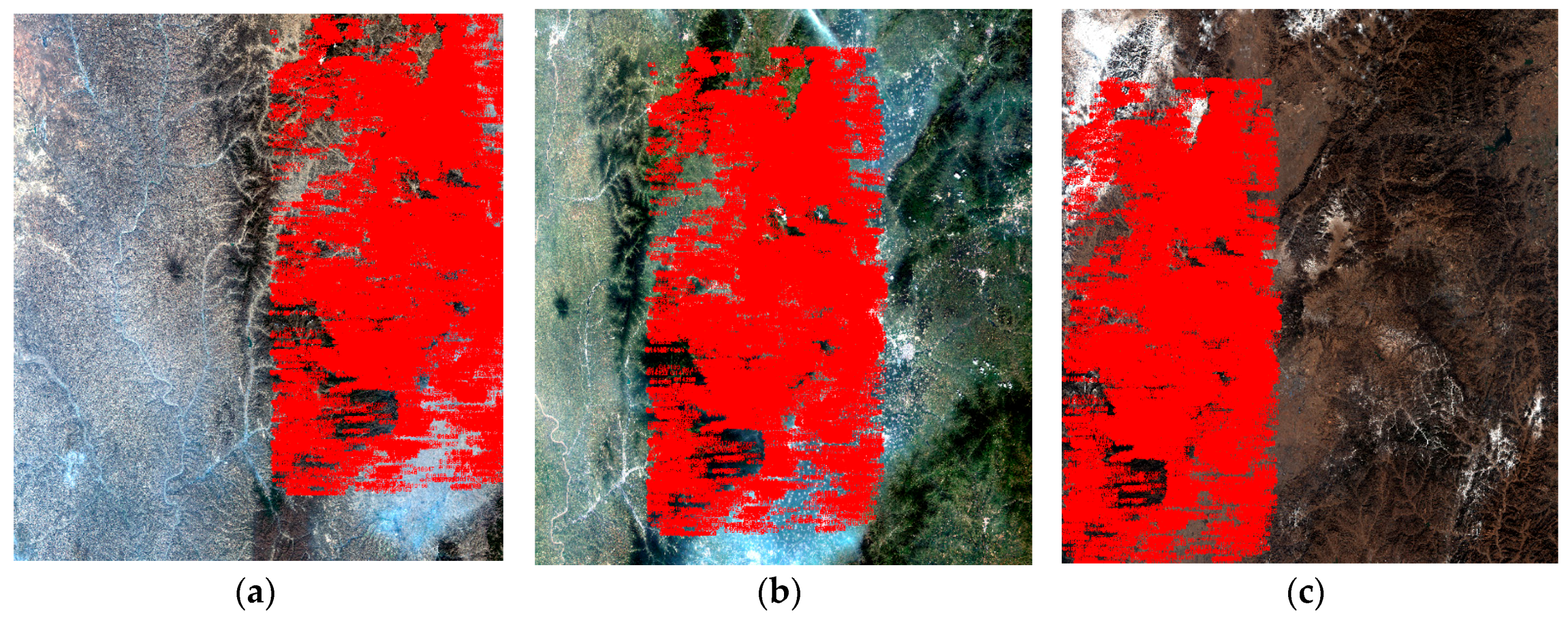

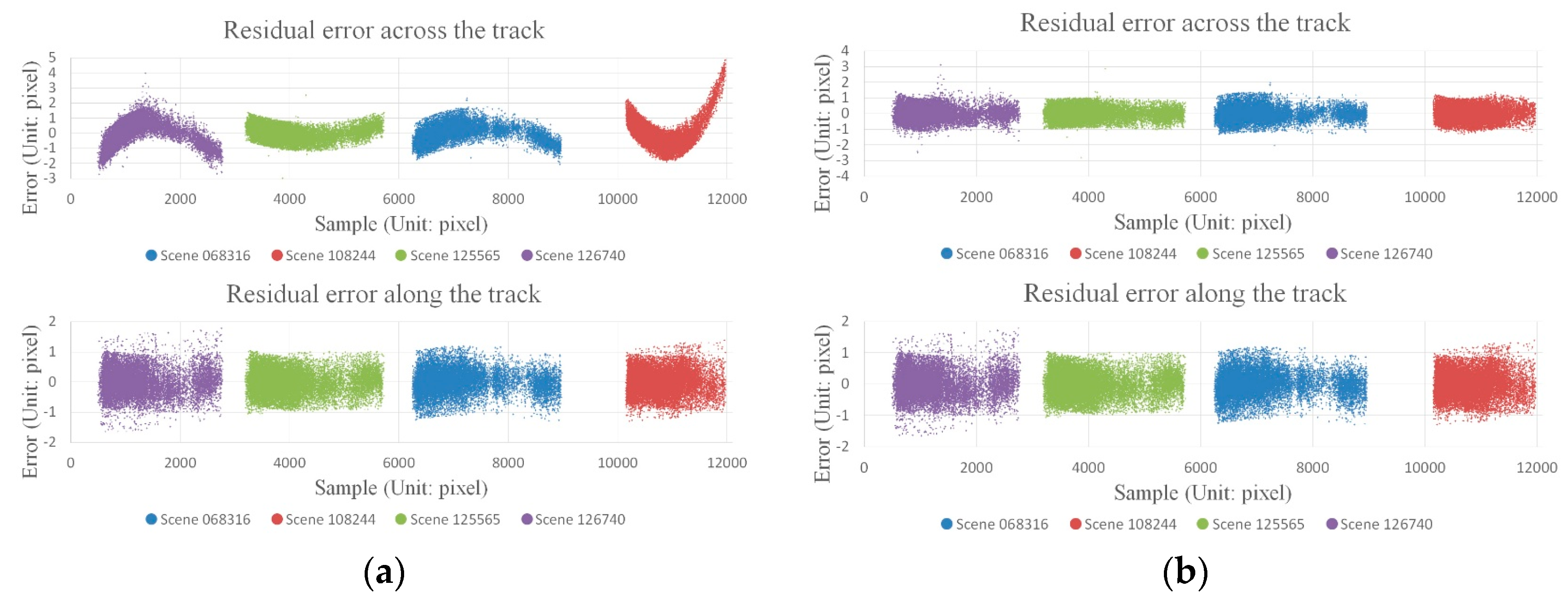

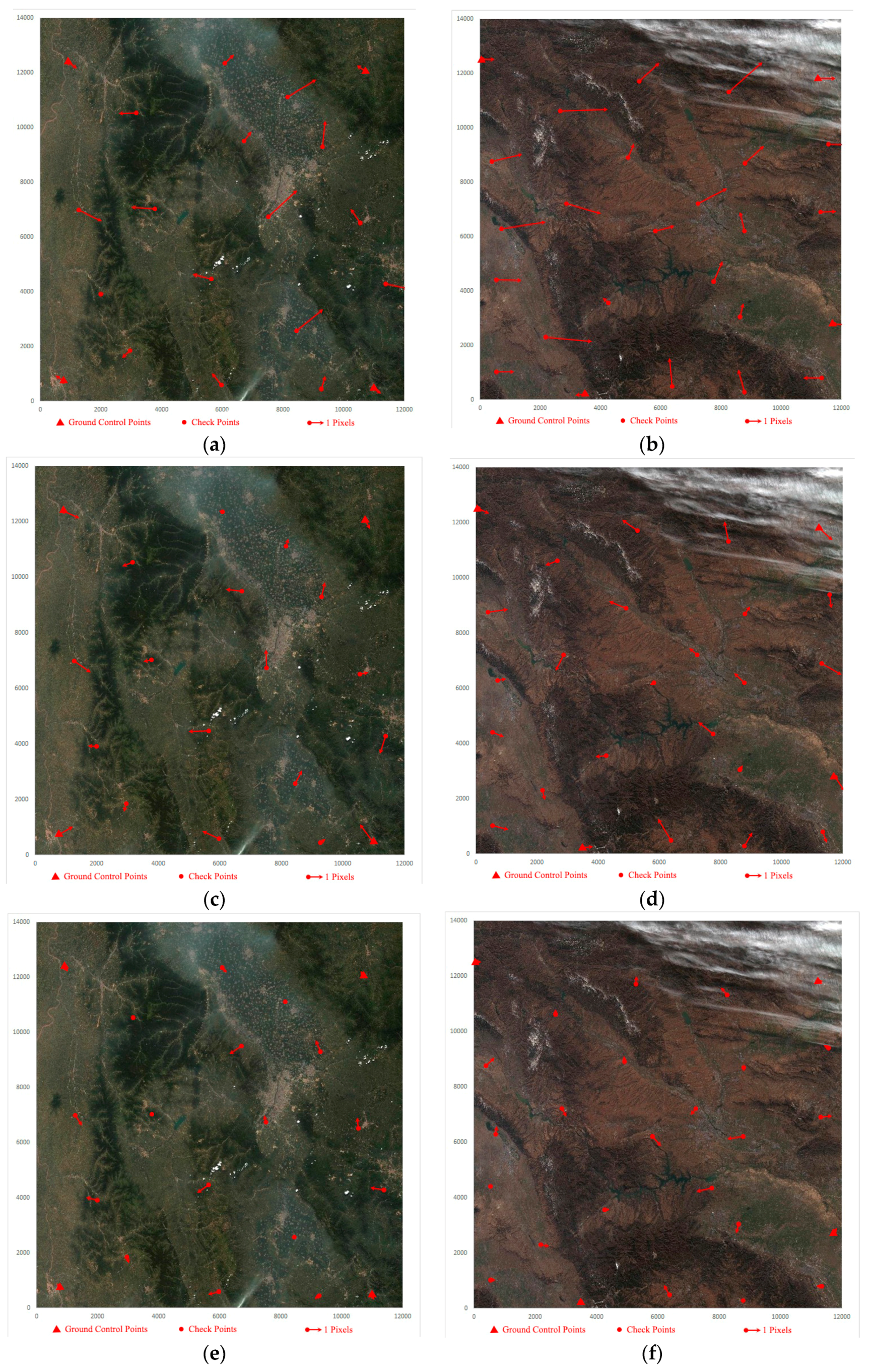

3.2. Distortion Detection

3.3. Accuracy Validation

4. Conclusions

- The proposed method can compensate for interior distortions and effectively improve the internal accuracy of pushbroom satellite imagery. After the calibration parameters acquired by the proposed method are applied, image orientation accuracies evaluated with the Ground Control Field (GCF) are within 0.6 pixel, and the residual errors behave as random errors. Validation with Google Earth check points (CPs) further confirms that the proposed method improves orientation accuracy to within 1 pixel and that, compared with the result without calibration-parameter compensation, the entire scene is free of distortion.

- Because the proposed method is affected by unfavorable factors such as the lack of absolute references, over-parameterization of the calibration model, and the quality of the original images, its results are slightly inferior to those of the traditional GCF method, with a maximum difference of approximately 0.4 pixel.

Acknowledgments

Author Contributions

Conflicts of Interest

| Area | GSD of DOM (m) | Plane Accuracy of DOM, RMS (m) | Height Accuracy of DEM, RMS (m) | Range (km, Across Track × Along Track) | Center (Latitude and Longitude) |
|---|---|---|---|---|---|
| Shanxi | 0.5 | 1 | 1.5 | 50 × 95 | 38.00°N, 112.52°E |
| Songshan | 0.5 | 1 | 1.5 | 50 × 41 | 34.65°N, 113.55°E |
| Dengfeng | 0.2 | 0.4 | 0.7 | 54 × 84 | 34.45°N, 113.07°E |
| Tianjin | 0.2 | 0.4 | 0.7 | 72 × 54 | 39.17°N, 117.35°E |
| Northeast | 0.5 | 1 | 1.5 | 100 × 600 | 45.50°N, 125.63°E |

| Items | Values |
|---|---|
| Swath | 200 km |
| Resolution | 16 m |
| Charge-coupled device (CCD) size | 0.0065 mm |
| Principal distance | 270 mm |
| Field of view (FOV) | 16.44 degrees |
| Image size | 12,000 × 13,400 pixels |
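
The tabulated field of view can be cross-checked against the detector size and principal distance. The short sketch below assumes the 12,000-pixel dimension of the image is the across-track width of the linear array and that the array is centred on the principal point; the function and constant names are illustrative only.

```python
import math

# Values taken from the camera table above.
PIXEL_SIZE_MM = 0.0065          # CCD detector size
PRINCIPAL_DISTANCE_MM = 270.0   # principal distance
N_SAMPLES = 12_000              # assumed across-track width of the 12,000 x 13,400 image


def full_fov_deg(n_pixels: int, pixel_mm: float, focal_mm: float) -> float:
    """Full field of view of a linear array centred on the principal point."""
    half_width_mm = n_pixels * pixel_mm / 2.0
    return math.degrees(2.0 * math.atan(half_width_mm / focal_mm))


def ifov_urad(pixel_mm: float, focal_mm: float) -> float:
    """Instantaneous field of view of one detector, in microradians."""
    return 1e6 * pixel_mm / focal_mm


print(f"FOV  = {full_fov_deg(N_SAMPLES, PIXEL_SIZE_MM, PRINCIPAL_DISTANCE_MM):.2f} deg")  # FOV  = 16.44 deg
print(f"IFOV = {ifov_urad(PIXEL_SIZE_MM, PRINCIPAL_DISTANCE_MM):.2f} urad")              # IFOV = 24.07 urad
```

The 78 mm array half-width of 39 mm at a 270 mm principal distance reproduces the listed 16.44 degrees, which supports reading 12,000 as the across-track sample count.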

| Scene ID | Area | Image Date | No. of CPs | Sample Range (Pixel) | Function |
|---|---|---|---|---|---|
| 068316 | Shanxi | 10 August 2013 | 15,800 | 6300–9000 | Detection/Validation |
| 108244 | Shanxi | 7 November 2013 | 18,057 | 10,200–12,000 | Detection/Validation |
| 125565 | Shanxi | 27 November 2013 | 19,459 | 3200–5700 | Detection/Validation |
| 126740 | Shanxi | 5 December 2013 | 14,551 | 500–2700 | Validation |
| 079476 | Henan | 3 September 2013 | —— | —— | Validation |
| 125567 | Henan | 27 November 2013 | —— | —— | Validation |
| 132279 | Henan | 13 December 2013 | —— | —— | Validation |

| Scene ID | No. GCPs/CPs | Sample Range (Pixel) | | Line (along the Track) | Sample (across the Track) | Max | Min | RMS |
|---|---|---|---|---|---|---|---|---|
| 068316 | 4/15,796 | 6300–9000 | Ori.¹ | 0.383 | 0.537 | 2.345 | 0.005 | 0.660 |
| | | | Com.² | 0.384 | 0.416 | 2.022 | 0.005 | 0.566 |
| 108244 | 4/18,053 | 10,200–12,000 | Ori. | 0.382 | 0.864 | 4.863 | 0.005 | 0.945 |
| | | | Com. | 0.382 | 0.412 | 1.656 | 0.004 | 0.562 |
| 125565 | 4/19,455 | 3200–5700 | Ori. | 0.374 | 0.428 | 3.045 | 0.005 | 0.569 |
| | | | Com. | 0.374 | 0.375 | 3.015 | 0.007 | 0.530 |
| 126740 | 4/14,547 | 500–2700 | Ori. | 0.432 | 0.813 | 3.973 | 0.009 | 0.920 |
| | | | Com. | 0.432 | 0.439 | 3.117 | 0.008 | 0.616 |
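
The RMS column of the GCF table appears consistent with combining the line and sample RMS values as a planar RMS, sqrt(line² + sample²). This is a reading of the table, not a definition stated in this section; the sketch below recomputes it from the tabulated components.

```python
import math

# (scene, scheme, line RMS, sample RMS, tabulated RMS), copied from the table above.
ROWS = [
    ("068316", "Ori.", 0.383, 0.537, 0.660),
    ("068316", "Com.", 0.384, 0.416, 0.566),
    ("108244", "Ori.", 0.382, 0.864, 0.945),
    ("108244", "Com.", 0.382, 0.412, 0.562),
    ("125565", "Ori.", 0.374, 0.428, 0.569),
    ("125565", "Com.", 0.374, 0.375, 0.530),
    ("126740", "Ori.", 0.432, 0.813, 0.920),
    ("126740", "Com.", 0.432, 0.439, 0.616),
]


def planar_rms(line_rms: float, sample_rms: float) -> float:
    """Combine the along-track (line) and across-track (sample) RMS."""
    return math.hypot(line_rms, sample_rms)


for scene, scheme, line, sample, rms in ROWS:
    print(f"{scene} {scheme:4} computed {planar_rms(line, sample):.3f}  tabulated {rms:.3f}")
```

Every recomputed value agrees with the tabulated RMS to within about 0.001 pixel, which is what rounding of the underlying values would produce.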

| Scene ID | No. GCPs/CPs | | Line (along the Track) | Sample (across the Track) | Max | Min | RMS |
|---|---|---|---|---|---|---|---|
| 068316 | 4/16 | Ori.¹ | 0.916 | 1.069 | 2.692 | 0.207 | 1.410 |
| | | Pro.² | 0.701 | 0.701 | 1.529 | 0.215 | 0.991 |
| | | Cla.³ | 0.430 | 0.437 | 0.991 | 0.130 | 0.613 |
| 079476 | 4/24 | Ori. | 0.840 | 1.921 | 5.538 | 0.512 | 2.097 |
| | | Pro. | 0.846 | 0.780 | 2.543 | 0.119 | 1.164 |
| | | Cla. | 0.646 | 0.635 | 1.788 | 0.088 | 0.906 |
| 125567 | 4/22 | Ori. | 0.966 | 1.721 | 3.173 | 0.541 | 1.973 |
| | | Pro. | 0.760 | 0.748 | 1.803 | 0.305 | 1.067 |
| | | Cla. | 0.384 | 0.433 | 1.072 | 0.079 | 0.579 |
| 132279 | 4/22 | Ori. | 0.790 | 1.991 | 4.922 | 0.249 | 2.142 |
| | | Pro. | 0.798 | 0.779 | 2.050 | 0.145 | 1.115 |
| | | Cla. | 0.525 | 0.505 | 1.198 | 0.054 | 0.728 |
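
The conclusions quote accuracy "within 1 pixel" for the Google Earth validation and a maximum difference of "approximately 0.4 pixel" relative to the traditional GCF method. The sketch below checks these figures against the table, assuming Pro. denotes the proposed method and Cla. the classical GCF-based calibration (an interpretation of the abbreviations, whose footnotes are not part of this section). The check suggests both figures refer to the separate line and sample components rather than to the combined RMS column.

```python
# scene -> scheme -> (line RMS, sample RMS, RMS), copied from the table above.
SCENES = {
    "068316": {"Pro.": (0.701, 0.701, 0.991), "Cla.": (0.430, 0.437, 0.613)},
    "079476": {"Pro.": (0.846, 0.780, 1.164), "Cla.": (0.646, 0.635, 0.906)},
    "125567": {"Pro.": (0.760, 0.748, 1.067), "Cla.": (0.384, 0.433, 0.579)},
    "132279": {"Pro.": (0.798, 0.779, 1.115), "Cla.": (0.525, 0.505, 0.728)},
}


def axis_gap(scene: str) -> float:
    """Largest per-axis (line or sample) RMS gap between Pro. and Cla."""
    pro, cla = SCENES[scene]["Pro."], SCENES[scene]["Cla."]
    return max(pro[0] - cla[0], pro[1] - cla[1])


max_axis_gap = max(axis_gap(s) for s in SCENES)
max_planar_gap = max(SCENES[s]["Pro."][2] - SCENES[s]["Cla."][2] for s in SCENES)
max_axis_pro = max(max(SCENES[s]["Pro."][:2]) for s in SCENES)
max_planar_pro = max(SCENES[s]["Pro."][2] for s in SCENES)

print(f"largest per-axis Pro./Cla. gap: {max_axis_gap:.3f} pixel")
print(f"largest combined-RMS Pro./Cla. gap: {max_planar_gap:.3f} pixel")
print(f"largest per-axis Pro. RMS: {max_axis_pro:.3f}; largest combined Pro. RMS: {max_planar_pro:.3f}")
```

Per axis, the proposed method stays below 1 pixel (at most 0.846) and trails the classical method by at most 0.376 pixel; the combined RMS values exceed 1 pixel for three scenes and differ by up to 0.488 pixel.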

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhang, G.; Xu, K.; Zhang, Q.; Li, D. Correction of Pushbroom Satellite Imagery Interior Distortions Independent of Ground Control Points. Remote Sens. 2018, 10, 98. https://doi.org/10.3390/rs10010098
