1. Introduction

In the western US, snowmelt from mountains is the main source of water within a complex ecosystem that is rapidly changing due to frequent natural and socio-economic pressures over the past few decades [

1,

2]. In particular, mountainous ecosystems in the Sierra Nevada (California) are currently experiencing drastic disturbance and regeneration events such as tree mortality, fire, insect damage, disease, and drought [

3]. The dynamic impacts of these processes are still not well known mainly due to the lack of long-term forest measurements characterizing 3D forest structure (e.g., forest height, vegetation vertical profile) changes over time and space. For instance, the uncertainty associated with the poor understanding of forest dynamics in terms of carbon fluxes in North America is nearly 50% [

4].

Field inventory techniques are constrained by the spatial and temporal frequency of sampling and are not optimal for characterizing disturbance and regeneration processes, namely over mountain environments where the complex topography enhances heterogeneity on forest structure and creates access difficulties. Remote sensing techniques have been used to produce time-series of maps at regional- and landscape-level using medium- and high-resolution products such as Landsat and QuickBird (e.g., [

5,

6,

7]). However, they are not adapted to characterize forest with the detail required to study many ecological processes such as shrubby vegetation fuel loads estimation, forest vertical profile characterization, and tree recruitment. Airborne lidar is the best tool to measure large swaths of forest structure at high spatial resolution in both vertical and horizontal dimensions. Its ability to characterize fine scale features such as tree counting or low vegetation density have been demonstrated over different ecosystems [

8,

9]. However, the high cost of airborne lidar acquisition has been a limitation to surveying large areas with reasonable temporal resolution in order to study forest disturbance and regeneration [

10,

11,

12,

13,

14,

15,

16].

In this work, we investigate the reliability of fusing lower resolution but frequent lidar datasets acquired by the NASA-JPL Airborne Snow Observatory (ASO) to characterize the forest 3D structure at a fine scale. The ASO focus is the mountain snowpack and as such does not require high point densities that are needed to characterize forest canopies [

17]. Instead, ASO offers multiple acquisitions over mountain systems each winter and spring in, at present, 12 mountain watersheds (

Figure 1a) that are populated by vegetated areas of great ecological interest over the Sierra Nevada (California, since 2013), the Rocky Mountains (Colorado, since 2013), Mount Jefferson (Oregon, since 2016), and Mount Olympus (Washington, since 2014). With the multiple acquisitions each year, temporal sampling may be converted to an effective higher point density by the fusion of point clouds. Indeed, these datasets have the potential to be an important asset for the forest community in addition to its main purpose of mapping snowpack and snow melting.

Low point cloud densities (~1 pt/m

2) have been successfully used in the framework of many applications (e.g., biomass estimates), but they require extensive field work to calibrate the signal [

18,

19]. On the contrary, high density point clouds (>8 pt/m

2) allow direct extraction of forest metrics alleviating the need for costly and time-consuming field work [

20,

21], reduce uncertainty in forest metrics estimation (e.g., maximum and mean height of canopy) [

22], and better represent understory and near-surface vegetation [

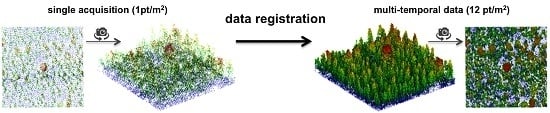

23]. Therefore, we have the opportunity to markedly improve the forest structure characterization by coherently fusing the weekly ASO measurements (~1 pt/m

2) in order to significantly increase the point density (up to ~12 pt/m

2) and provide a multi-year dataset that is then optimal for any type of lidar-based assessment of vegetation. The multi-year ASO datasets can be readily compared and fused with other airborne and satellite datasets (e.g., hyperspectral imagery) in the framework of many applications such as species mapping or canopy water loss studies. Below, we present the methodology to compute a spatially coherent high-resolution point cloud from multiple lower-resolution surveys. We assess the spatial geolocation accuracy of individual lidar acquisitions in order to compensate for systematic bias to provide a final point cloud that meets the requirements in term of both geometry coherence and geolocation accuracy for fine-scale forestry studies.

Registering Lidar 3D Point Clouds

Lidar systems provide 3D point clouds with increasing accuracy and reliability. When the same area is measured multiple times, those point clouds can be fused to increase effective point density. However, the individual lidar point clouds must be co-registered to compensate for 3D shifts between the different acquisitions. Although ASO acquisition parameters do not change appreciably for a given study basin (e.g., flight lines path and flight altitude), lidar datasets can have planimetric shifts due to inaccuracies in platform trajectory estimation introduced by the GPS (Global Position System) receiver and drifts of the IMU (Inertial Measurement Unit) that are coupled to the lidar sensor.

Most registration methods for converting misaligned 3D point clouds to a single spatial geometry consist of two main steps: (1) the identification of corresponding objects in the different datasets, called “tie objects”, to calculate the spatial shift between point clouds, and (2) the establishment of a coordinate transformation model composed by a translation, a rotation, or by a combination of both to adjust the coordinates of the datasets according to the estimated spatial shift. Registration methods can be divided into four main families based on the approach used to identify tie objects: artificial reflective targets-based, features-based, surface-based, and point-based approaches. The first is the least sophisticated and involves artificial reflective targets that are placed along the study site. These targets are regarded as tie objects in two different datasets to calculate the transformation model parameters [

24]. In the features-based approach, a collection of tie objects is first identified in each point cloud using automatic or semi-automatic methods. They rely heavily on the ability of the extraction method to accurately identify tie objects, which can be defined as (a) key points (e.g., electric poles location, [

25]), (b) building corners [

26], or specific patterns such as (c) spheres and cylinders [

27] or even (d) segments and curves [

28]. The surface-based methods extract tie objects using surface models (typically a mesh or a grid raster product such as a Delaunay Triangulation or a DTM, respectively) instead of using the original 3D point cloud. They usually focus on objects easily recognized such as depressions or cliff edges [

29]. Finally, the point-based method does not rely on the extraction of tie objects. Instead, the parameters of the transformation model are calculated from the original point cloud. The most common approach for conducting this transformation is the Iterative Closest Point (ICP) algorithm that aligns two lidar point clouds by minimizing the distance between the corresponding closest neighborhoods [

30].

Unfortunately, these existing registration methods are not immediately adaptable to fusing the ASO data due to three main reasons: (1) the lower spatial resolution of single ASO acquisitions, (2) the fact that the ASO covers natural environments where tie objects are difficult to extract, and (3) the dynamics of the landscape that change with snowfall, snow melt, and occurrence of ice at the surface. The lower point density of the ASO data limits the identification of well-defined key points that could be used as tie objects (e.g., top of the crowns or artificial reflecting targets) and limits the ICP method because the probability of a laser pulse hitting the same object (e.g., a tree crown apex or edge) over every acquisition is significantly low. In addition, the fact that we are working in a natural environment with very few human structures prevents us from applying feature-based approaches that use standard patterns (e.g., building corners) as tie points. Finally, the surface-based methods are not appropriate because distinctive landscape features may not be visible depending on snow and ice occurrence (such as terrain depressions, road intersections, and river outlines).

In this work, we (1) assess the quality of ASO lidar data in terms of geolocation accuracy, and (2) demonstrate a new method to register low-resolution point cloud data in order to calculate a spatially coherent, high-resolution point cloud. We implement the end-to-end processing approach on ASO acquisitions over a sample area in the Tuolumne River Basin, Sierra Nevada (California) and provide assessments of the performance of the approach for 3D characteristics of forest structure.

3. Methods

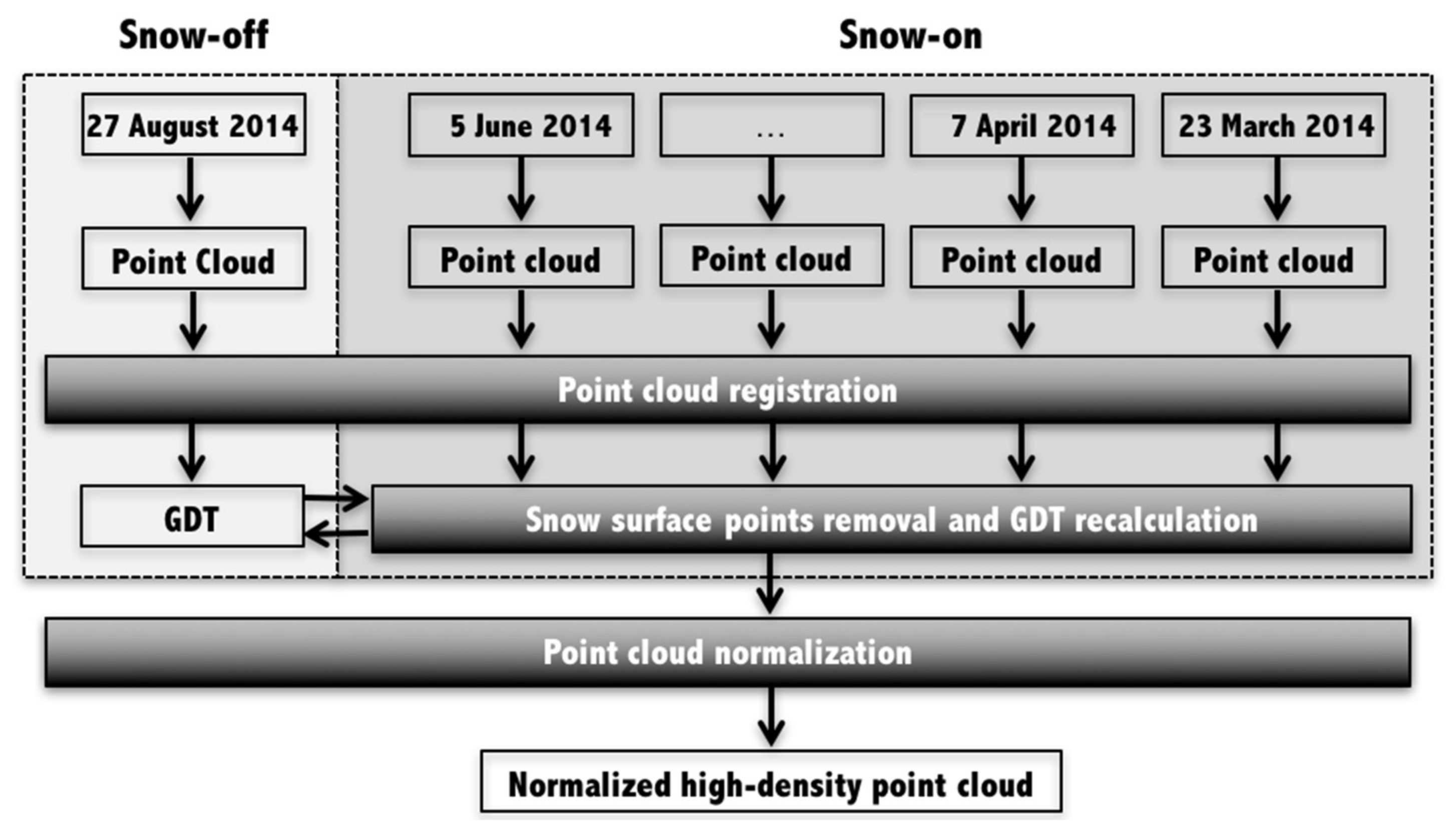

We developed a method to compute a normalized high-density point cloud data by coherently merging multiple lower-density lidar acquisitions. The method workflow is composed of three major processing steps, namely (1) point cloud registration, (2) snow surface points removal and GDT (Ground Delaunay Triangulation) recalculation, and (3) point cloud normalization (

Figure 2). In the first step, we develop a new registration method to coherently merge individual point clouds. The method estimates both planimetric and altimetric discrepancies between individual acquisitions in order to establish a set of coordinate transformation models that are then applied to each point cloud to adjust their location. The registration method is explained in detail in

Section 3.1.

The purpose of the second step is to iteratively produce a robust GDT to characterize the complex topography of the mountainous environment. To do this, we remove low lidar points from the snow-on acquisitions because they might correspond to the snowpack surface and would be confused with vegetation because they show higher elevation compared with the true GDT. First, we compute a GDT using the points classified as ground within the snow-off dataset. Then, we examine each of the snow-on acquisitions to remove points corresponding to the snowpack surface. In practice, we iteratively consider each triangle of the reference GDT in order to select the lidar points classified as ground which planimetric coordinates are located within the boundaries the triangle. Those points are removed from the dataset if their averaged vertical distance to the GDT is greater than 5 cm as this suggests that the area of the triangle is covered by snow. The remaining points are merged to the former point cloud and its GDT recalculated using the MCC algorithm [

35]. We start this process with the lidar acquisitions that are more likely to contain the largest number of points representing the bare soil (e.g., 5 June 2014,

Figure 2) in order to produce a denser and more accurate GDT in the earlier iterations. Note that we consider the existence of ground points within the datasets collected during snow-on conditions because the snowpack does not melt uniformly throughout the study site, leaving exposed patches with valid ground points. Finally, we remove the effect of topography from the high-resolution lidar data by interpolating each lidar point in the GDT in order to calculate its distance to the ground and calculate a normalized point cloud (Point cloud normalization step,

Figure 2). The latter is a standard product in the framework of vegetation analysis because it allows direct retrieval of the height of vegetation from the point cloud.

3.1. Point Cloud Registration

The registration method is a feature-based approach that uses coniferous tree apexes as tie objects, as they are the most constant feature in the study area across time due to their conical shape and lack of snow accumulation. The tie objects are then used to establish a set of coordinate transformation models that apply to individual point clouds in order to compensate for geolocation inaccuracies between acquisitions. This allows us to coherently merge the individual acquisitions to create a high-density point cloud with a single geometry (Point cloud registration step,

Figure 2). The transformation models follow

where

and

are the coordinates of the original and adjusted lidar point clouds, respectively;

and

where

is the number of points in a given point cloud and

is the number of lidar acquisitions;

is a translation vector and

a 3D rotation matrix defined by the axis angles

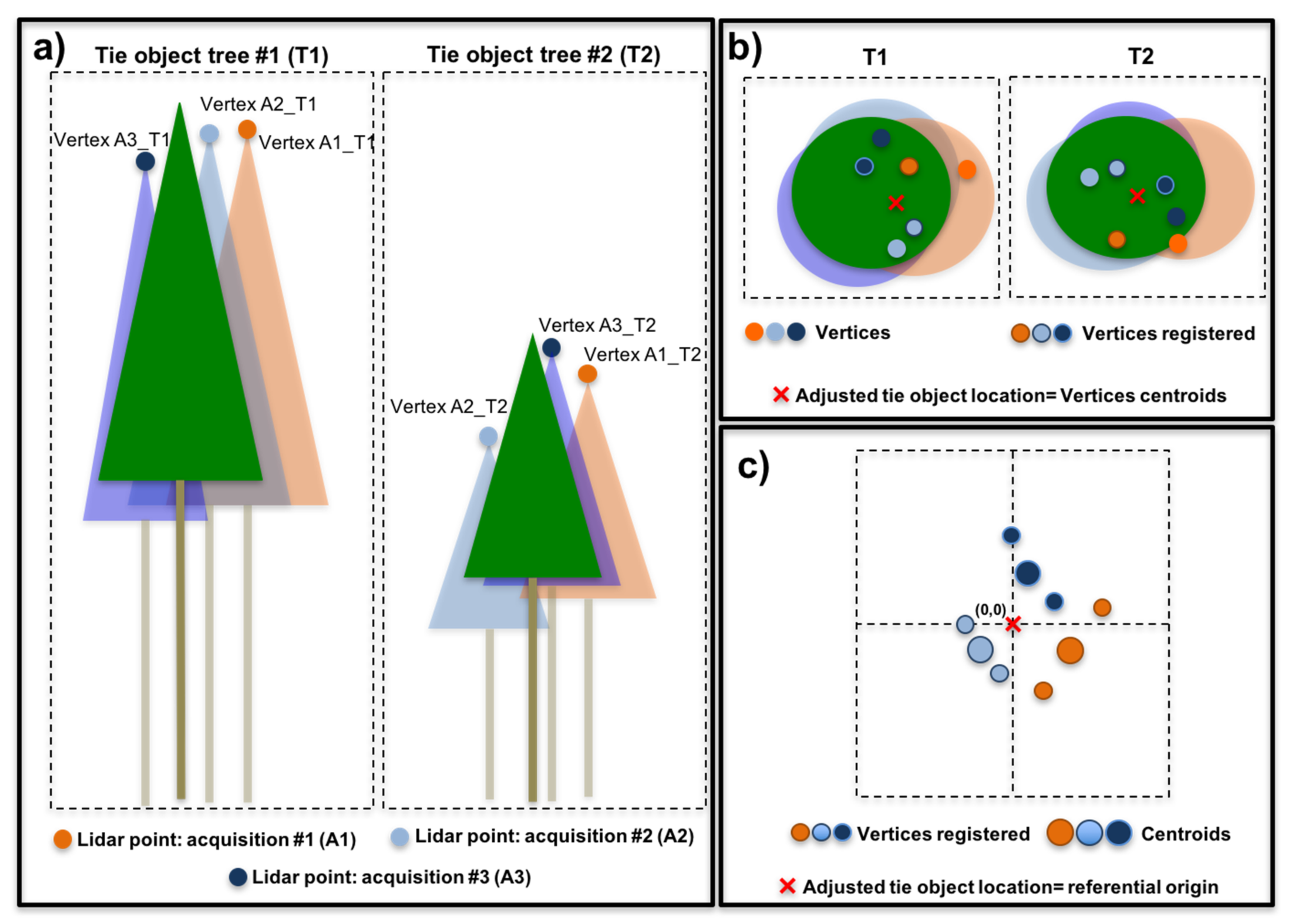

. Our method is composed of three main steps: (1) definition of a set of tie objects (

Figure 3a), (2) adjustment of the location of tie objects (

Figure 3b), and (3) calculation of transformation models for each lidar acquisition (Equation (1)).

In the first step, we manually define a dataset of tie objects by identifying isolated tree crowns with well-defined apexes. To do this, we generate a 1 m resolution Digital Surface Model (DSM) by selecting the highest lidar point within each pixel from a point cloud composite computed by merging the lidar individual acquisitions before registration. This preliminary DSM identifies isolated tree crowns with reasonable accuracy despite the fact that it may have spatial inaccuracies due to misalignments between lidar acquisitions. Then, we define a circular neighborhood located over each tie object to select the corresponding highest lidar point from each acquisition. The later points are hereafter called vertices and they are defined by

with

, where s is the number of tie objects for a given study site. In

Figure 3a, we show a dummy example of three lidar acquisitions and two tie objects (i.e.,

j = 1, 2, 3 and

k = 1, 2; respectively). We set the maximum radius of the circular neighborhood to 5 m, as the spatial misalignment between acquisitions is expected to be less than 5 m. Furthermore, the use of a maximum 5 m neighborhood over isolated trees avoids the risk of selecting vertices that correspond to different tree crowns. Due to the lower density of ASO single acquisitions, it is likely that none of the vertices would correspond to the actual apex of the tie objects. Indeed, when considering lower density lidar acquisitions, we expect errors on the estimation of important forest metrics such as the location of trees and their maximum height (orange, cyan, and blue trees in

Figure 3a).

In the second step, we adjust the location of the tie objects by assigning their positions to the centroid of the corresponding vertices. The tie objects location is here defined by

, which is represented in

Figure 3b the red cross. This allows us to calculate the locations of the adjusted tie objects as a function of each acquisition in order to ensure that the removal of outliers introduced by misalignment between flight lines are performed automatically without user decision in selecting the locations.

In the third step, we estimate a transformation model for each lidar acquisition defined as a function of the corresponding vertices (

) and centroids (

). To do this, we set

and

in Equation (1) to estimate both the translation vector (

) and the rotation matrix (

) using a commonly used least-square fitting approach based on the singular value decomposition [

36]. Note that we need at least four tie objects, i.e.,

, to solve the three-dimensional rigid model shown in Equation (1). However, a higher number of tie points would produce more robust transformation models.

Finally, we use and calculated for each lidar acquisition () in the previous step to compensate for location inaccuracies and calculate the adjusted point cloud coordinates as a function of the original coordinates (Equation (1)). Then, we merge the individual lidar acquisitions to obtain a high-density point cloud that contains points from all available acquisitions.

In this work, we selected twenty isolated trees for use as tie objects using a preliminary DSM distributed over the Tuolumne River basin study site (

Figure 4).

3.2. Accuracy Assessment

The nature of the translation vectors (

) and rotation matrices (

) calculated for each lidar acquisition are indicators of the magnitude and direction of the misalignment between point clouds in terms of their geolocation. For a more intuitive comparison, we normalize the coordinates of the vertices as a function of the corresponding tie objects to compute scatter plots that provide visual and quantitative measures of systematic bias for each acquisition date (

Figure 3c).

We start with

that are the locations of the adjusted vertices calculated by applying Equation (1) on the original vertices (

) using the corresponding

and

. Then, we define

and

to reduce the differences in coordinates of the vertices (for both original and adjusted point clouds, respectively) by using the coordinates of the corresponding tie object. Finally, we calculate the centroid of the adjusted vertices

to estimate the magnitude of the bias of each lidar acquisition.

Figure 3c provides schematics that allow identification and quantification of systematic bias by comparing different lidar acquisitions. The magnitude of the bias can be interpreted directly from the coordinates of the centroids of the vertices as the coordinates of the corresponding tie objects have been transformed to match the origin of the graph (

Figure 3c shows

and not the original

).

4. Results and Discussion

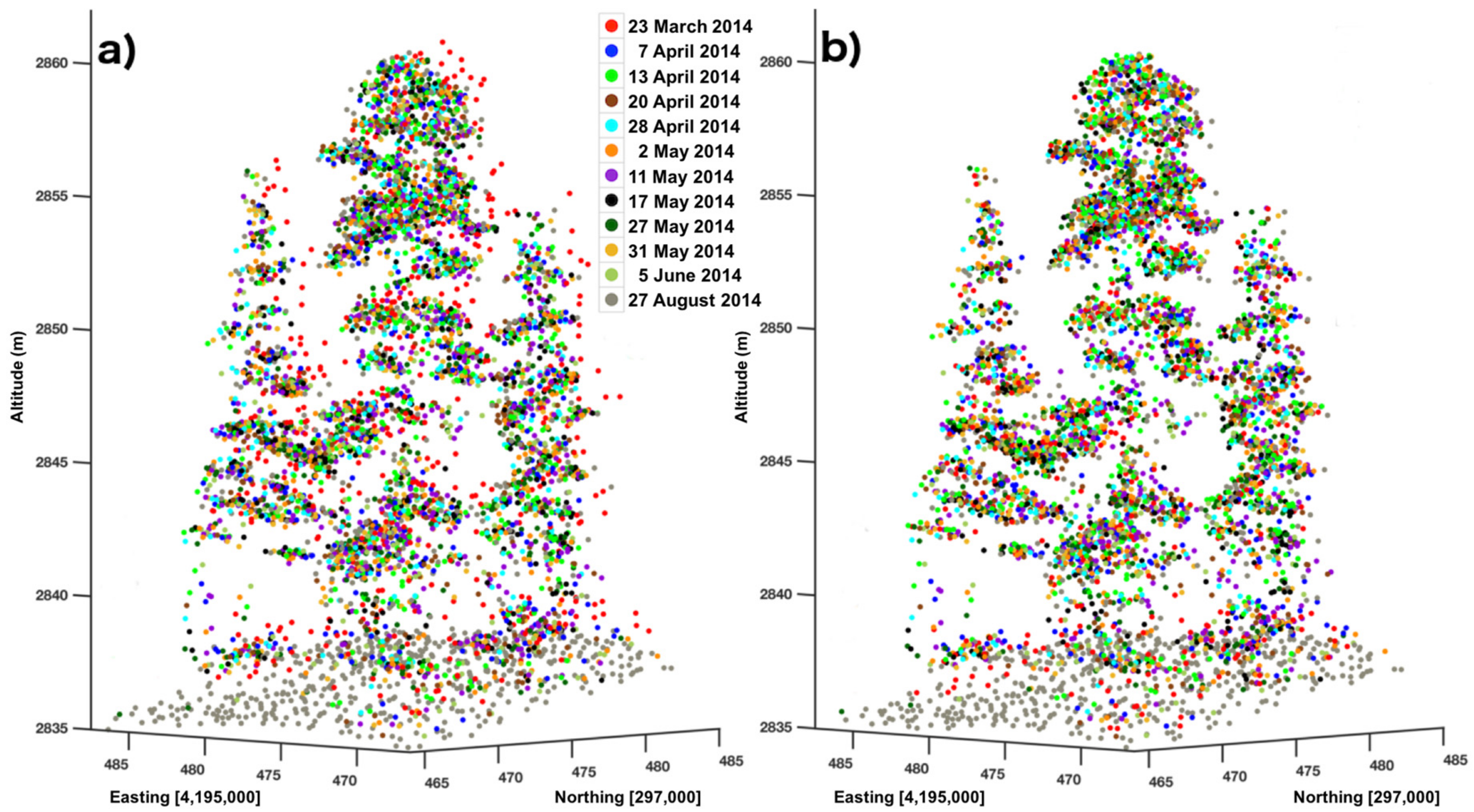

Figure 5a shows the multiple lidar point clouds in the surroundings of tie object T1 (

Figure 4a) before registration. In general, the point clouds from individual acquisitions are spatially consistent but as expected some forest features (e.g., the tree apexes) are not well defined in single acquisitions due to geolocation issues and low-density characteristics of the surveys that make the point cloud blurred. A demonstration of the adjustment method on the 3D point cloud composite is shown in

Figure 5b when compared to

Figure 5a, which shows the data after applying our registration approach. The improvements in terms of geometrical consistency are clear, particularly regarding the lidar acquisition that showed the largest bias (red dots). In addition, the main forest features such as the tree apexes are also markedly better defined in

Figure 5b, suggesting that the location of remaining acquisitions have also been appropriately adjusted.

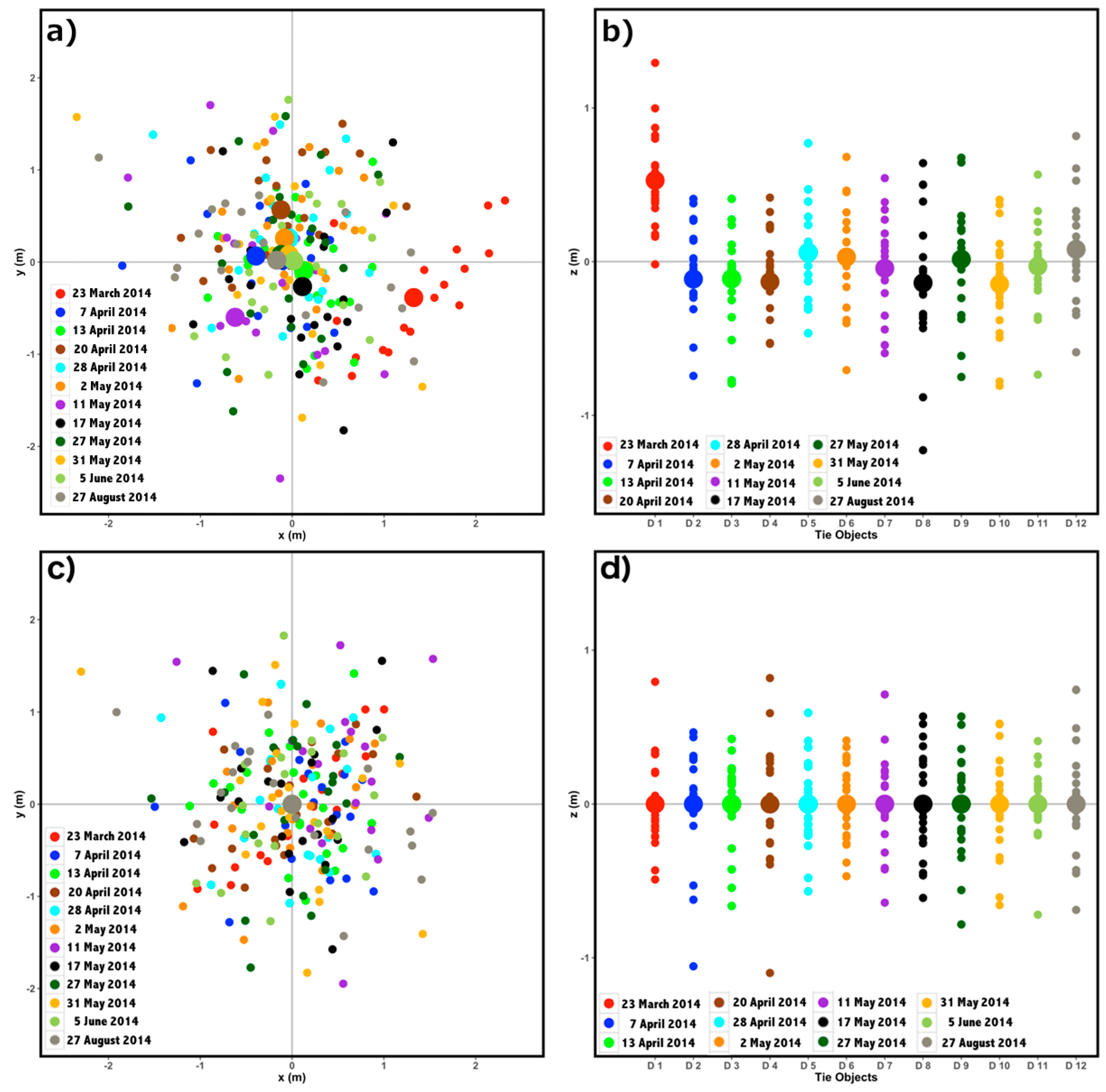

To quantify the misalignment between the lidar acquisitions and identify systematic bias, we normalize the multiple vertices using the corresponding tie object coordinates to draw a scatter plot similar to the one shown in

Figure 3c. Results are show in

Figure 6a,b, and supporting main statistics are summarized in

Table 1. The distances between the vertices and the tie objects range between 0.02 m and 2.24 m with an average of 0.97 m in planimetry (horizontal displacement), whereas with respect to the altimetry (vertical displacement), they are within a smaller interval (0.00–1.52 m) and have a lower average (0.28 m). The accuracy of the original location of vertex-based metrics is not expected to be higher than these values due to the nature of the point cloud accumulation from lower density discrete returns that is subject to a low probability of the laser beam intersecting exactly the same feature of trees (e.g., tree apex). Despite the fact that the tree growth is small in severe climate conditions, the vertical errors might also be associated with the tree growth during Spring.

As discussed in the methods, the main goal of this work is not to compensate for local bias at the vertex level, which is not possible due to the lower resolution of the data. Instead, we aim to compensate for systematic biases at the individual acquisition level by means of the centroids locations calculated from the vertices (larger dots in

Figure 6) and create an effective high-density characterization of the forest canopy.

Figure 6a,b shows that the lidar acquisition on 23 March (represented by the red dots) has a larger geolocation systematic bias compared with the remaining ones in both horizontal and vertical dimensions (~1.3 m and ~0.53 m, respectively). The misalignment of the red dots corresponding to that particular acquisition is clearly visible in

Figure 5a. The acquisition of May 11th (purple dots in

Figure 6) has a moderate bias of 0.8 m in the horizontal dimension. The remaining centroids are relatively close to the origin of the graph even if the spread of the vertices is large (

Figure 6a,b). Indeed, the average distance between the vertices and the tie objects (0.97 m and 0.28 m in the horizontal and vertical dimension, respectively) is larger than the average distance of the centroids (0.38 m and 0.12 m, respectively;

Table 1). This means that although the mean distance between the vertices and the tie objects is high due to the fact the laser beam most likely missed the treetops, the centroid of the vertices is a reliable unbiased estimate of the tie object locations.

Figure 5c,d as well as

Table 1 quantifies the improvements introduced by our method in terms of spatial consistency. As expected, all centroids overlap at the origin of the reference plane and confirm that our method is successful in removing the bias visible in the original measurements, which was of 0.38 m and 0.12 m in the horizontal and vertical domain, respectively (“centroid-based” columns in

Table 1). While the centroid of the point cloud was modified, the spread of vertices changed little with respect to the original data. The average horizontal distance between the vertices and the corresponding tie objects decreased only from 0.97 m to 0.88 m when we compared the original and adjusted point clouds, (“vertex-based” columns in

Table 1). Similarly, the averaged vertical distance decreased from 0.28 m to 0.25 m. Therefore, our approach does not force the vertices to individually converge toward the tie objects. Instead, it seeks a global solution that minimizes the distance between the entire number of vertices for a given acquisition—i.e., its centroid—and the corresponding tie object locations and, as such, preserves the original relative spatial geometry.

Figure 7a,b provide a perspective view of a single acquisition dataset and the high-density point cloud calculated by merging the registered individual acquisitions, respectively. The latter represents the forest structure at a finer resolution in both horizontal and vertical dimensions and enables the lidar forest inventory at the individual tree level, which is crucial for the monitoring of tree mortality as well as for tree biomass estimates over areas with non-existent field sampling [

20,

21]. Furthermore, the merging of multiple acquisitions improves mapping of the surface vegetation characteristics that highly impact the wildfire hazard and behavior [

37,

38].

Our methodology for the removal of snow points may eliminate some low vegetation points due to the fact that it is a conservative approach which is based only on the geometric and topological relationship between the lidar points and the reference ground Delaunay triangulation. We had considered to use additional information such as the lidar intensity to differentiate snowpack from low vegetation points [

39]. However, it is difficult to isolate and normalize the energy reflected by the vegetation and the snow (or ground) in laser beams that give rise to multiple returns [

40]. Moreover, the lidar intensity reflected by the snowpack is driven by many factors (e.g., content in impurities and liquid water) that change significantly across space and time [

41] and, the incidence angle of the laser beam on the snowpack changes significantly that also introduces randomness in the lidar return intensity. Finally, the intensity would need to be calibrated for each lidar acquisition (e.g., using reflective reference targets) taking into account many factors such as the atmospheric conditions. On the one hand, our approach is readily transferable to different locations experiencing different weather conditions. On the other hand, the high temporal resolution of the ASO lidar measurements ensures that there are enough points to characterize the low vegetation properly, namely over areas of lower altitude where the snow melts earlier in spring and the shrubby vegetation is more luxurious due to milder climate conditions.

The improvements of the ASO multi-temporal data over single acquisitions can be readily shown in the calculation of the most commonly used product in forestry studies that is the canopy height model (CHM). In

Figure 7, we show two CHM products derived from the ASO point cloud data with different point densities, providing clear distinctions in how the forest canopy is characterized with high point density data acquired from multiple acquisitions (

Figure 7b,d) compared to a lower point density data from a single acquisition (

Figure 7a,c). The results show that the successive ASO lidar acquisitions provide spatially consistent CHM to improve the characterization of the forest structure at fine resolution.

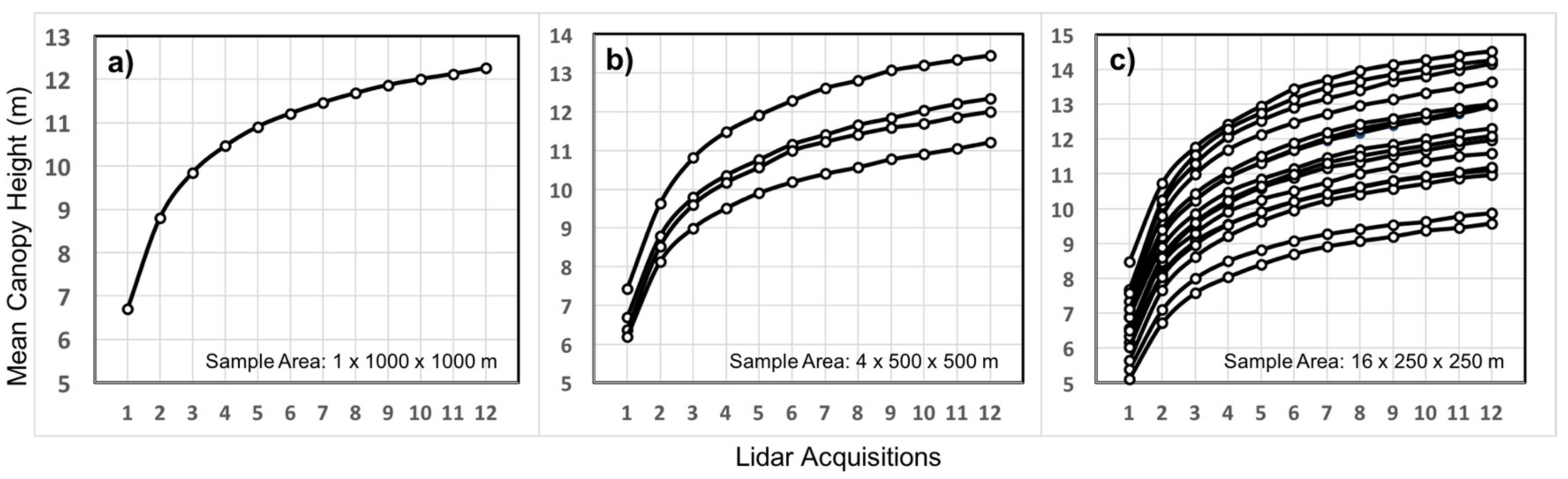

The impact of point density on the mean canopy height (MCH) was calculated using the CHM over an area of 1 km

2 (1000 m × 1000 m,

Figure 8a). On average at the 1 km

2 scale, the mean canopy height increases by approximately a factor of 2 by adding 3–4 lidar acquisitions (

Figure 8a: 1–5). Merging more acquisitions continues to improve MCH asymptotically with approximately 1 m increase by adding 6 more acquisitions (

Figure 8a: 6–12). The results also suggest that the difference in point density introduces a significant bias (about 6 m) in height estimation that can be asymptotically reduced by adding more acquisitions. The bias in MCH can introduce a much larger bias when used in lidar-biomass allometric relations [

42]. The improvement of MCH will also depend on the scale of analysis and may not follow the same asymptotic model as in

Figure 8. To examine this, we performed the analysis at finer scales by dividing the 1 km

2 sample area into four 500 m × 500 m (

Figure 8b) and 16 250 m × 250 m subsample areas (

Figure 8c). The results suggest that, depending on the landscape variations of forest structure and surface topography, the magnitude of bias and the asymptotic improvement with increasing point density may vary significantly.

Further detailed studies in mountain forest ecosystems with sparse vegetation are required to understand and quantify the impacts of point density and the number of acquisitions required to reach asymptotic improvements on forest structure and aboveground biomass estimation. However, our methodology for merging point density from different airborne lidar acquisitions has the potential to improve forest characteristics at scales of a single tree to landscape scales [

21] and leverage the snowpack-motivated acquisitions to create an effective higher point density acquisition that can be used for analysis between actual higher density single acquisitions.

5. Conclusions

We provide a novel approach to combine a time series of snowpack-focused ASO lidar surveys to improve the characterization of ground surface and forest 3D structure. The nominal flights of the ASO for snowpack monitoring provide lower resolution datasets with limited capabilities for vegetation sampling, but its high temporal resolution (through multiple overpasses) enables the calculation of high-resolution point clouds by merging individual acquisitions annually. Our methodology first provides an assessment of the ASO lidar system reliability in terms of geolocation because it directly impacts the consistency of the high-density products. By combining 12 lidar acquisitions over a 7 × 4 km area in the Tuolumne River basin, we concluded that the ASO lidar system procedure that is used to georeference the data produces point clouds with reasonable geolocation accuracy. On average, we found an estimated bias of 0.38 m and 0.12 m with respect to the horizontal and vertical dimensions, respectively. However, certain single acquisitions show a systematic bias up to 1.38 m in planimetry (horizontal) and 0.53 m in altimetry (vertical). We show that our registration method is capable of identifying and compensating for systematic errors in order to improve the geometrical coherence of the point clouds composites.

Our point cloud registration method improved the location accuracy significantly by compensating for erroneous GPS/IMU measurements by averaging geolocation estimates of 12 lidar acquisitions. Our method was designed for application in dynamic natural landscapes with frequent snowfalls that make the selection of tie points very limited and is a unique aspect of the study. The most stable features in these landscapes are the top of tree crowns that play the role of tie objects in the methodology. Unlike former methods that offer a pair-wise solution (e.g., keeping one point cloud fixed as the reference geolocation data and the remaining point clouds are transformed to best match the reference), our approach uses tie objects that are calculated as the centroid using the multi-temporal data.

As a result, we register the point clouds as a function of an averaged location that can be used as a better proxy for the tree apex true location than a single GPS/IMU measurement. As such, our method applies an absolute registration (best compromise between a collection of objects and its UTM/WGS84 coordinates) rather than a relative registration (best match between corresponding points of two datasets). Therefore, the absolute co-registration has a clear advantage for analyzing multi-year point clouds because they can be directly compared. Moreover, our datasets can be directly compared with other sources of information such as aerial or satellite imagery. For instance, the merging of the ASO 3D forest characterization with airborne and satellite multispectral imagery enable the mapping of species, estimation of canopy water loss, and tree stress [

43,

44]. ASO offers a unique high-resolution lidar dataset to monitor forest and surface change over mountain ecosystems with complex ecological and hydrological processes while attending to its primary focus of characterizing mountain snowpack across large mountain basins.