1. Introduction

Forest canopy structure has many components but often refers to the size and spatial arrangement of overstory trees as described by the vertical and horizontal distributions of overstory foliage [1,2]. Because of the strong allometric relationship between the forest canopy and other aspects of forest structure, it has been intensively studied as a surrogate for overall forest structure. In addition, the forest canopy plays an important role as a biotic habitat [3], an area of high photosynthetic capacity [4], an indicator of biodiversity [2,5,6], and a gauge of forest health [7]. However, our understanding of forest canopy structure may be constrained in some important ways (e.g., structural and spatial complexity of the canopy at the landscape level) because most existing studies of the forest canopy are based on data collected through field surveys within a small set of sample plots that are often selected subjectively [8,9]. In addition, characterisation of the three-dimensional (3D) structure of the forest canopy using conventional field survey data is challenging because of physical access and resource requirements [10,11].

By providing varying spatial, spectral, and temporal resolution as well as effective means of 3D canopy reconstruction, the use of remote sensing (RS) technology addresses these issues. RS has also proven an effective means of studying forest canopy structure, as it often complements existing ground-based techniques by contributing reliable, detailed information on various aspects of the complex forest canopy [12,13,14]. In particular, recent advances in RS technology, such as airborne laser scanning (ALS), digital photogrammetry, and unmanned aerial vehicle (UAV) systems, have enabled efficient data collection and fully automated reconstruction of forest canopy surfaces over large spatial areas [15,16,17,18,19,20,21,22].

ALS is an active RS technique that uses a light detection and ranging (LiDAR) sensor, which emits a laser beam across the flight path at an operator-specified angle and receives the reflected energy. This technique allows users to determine the distance from the sensor to a target object using either discrete return (pulse ranging) or continuous wave systems. LiDAR measurements have proven to be more successful than other remote sensing options at reconstructing 3D forest canopy structure and more accurate at predicting structural attributes, particularly when acquired with satisfactory point densities [9,16,17]. In addition, this method provides otherwise unavailable scientific insights by allowing for detailed and novel structural measurements [14,23,24]. Therefore, the application of LiDAR measurements to analysing forest canopy structure has been researched intensively in terms of both the area-based approach (ABA) and individual tree-based methods [16,25,26,27,28]. Nonetheless, ALS has two main limitations in practice: the high acquisition cost, which limits its use in operational forest management, and the absence of spectral data, which could otherwise support tasks such as species identification.
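The pulse-ranging principle mentioned above can be illustrated with a minimal sketch: the sensor-to-target distance is half the round-trip travel time of the pulse multiplied by the speed of light. The function name and the example timing are illustrative assumptions and do not describe any particular sensor.

```python
# Speed of light in vacuum (m/s); the small difference in air is ignored in this sketch.
SPEED_OF_LIGHT_M_S = 299_792_458.0


def pulse_range_m(round_trip_time_s: float) -> float:
    """Return the sensor-to-target distance (m) for one laser pulse.

    The pulse travels to the target and back, so the one-way distance
    is half of the path length covered during the round trip.
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_S * round_trip_time_s / 2.0


# Hypothetical example: an echo received 4 microseconds after emission.
distance = pulse_range_m(4e-6)
print(f"{distance:.1f} m")
```

In a discrete-return system, each recorded echo of a pulse would yield one such range, which is then combined with the platform position and scan angle to place a point in 3D space.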

Digital photogrammetric techniques such as structure from motion (SfM) also facilitate 3D modelling of forest canopy structure. Following the principles of traditional stereoscopic photogrammetry, SfM uses multiple images from different angular viewpoints to identify well-defined geometric features and to generate a 3D point cloud [15,29]. Recently, many studies have taken advantage of digital photogrammetry (e.g., [30,31,32,33,34]), particularly for estimating the biophysical properties of individual trees and plot-level forest structural attributes with reasonable accuracy (e.g., [35,36,37,38,39,40]). Moreover, many previous studies have compared photogrammetric products (i.e., point clouds, canopy height models (CHMs), and structural metrics) with LiDAR data [19,39,40,41,42,43,44,45,46,47,48,49]. Recent advances in computer vision algorithms, such as the scale invariant feature transform (SIFT), and parallel bundle adjustments on graphics processing units (GPUs) [15] have enhanced the potential to match image features in many overlapping photographs (hundreds to thousands) acquired from different angles. Thus, SfM can be used effectively to process imagery acquired from UAV platforms (i.e., small multi-rotor or fixed-wing UAVs of less than 5 kg) [21,22,50,51,52].

The UAV system is a newly emerging method of fine-scale remote sensing that has the key advantages of (1) flexibility and decentralization of data acquisition; (2) potential for obtaining data with high spatial and temporal resolution; (3) insensitivity to cloud cover; and (4) low operational costs. High-resolution UAV imagery can be utilized to develop point clouds as well as to extract fundamental characteristics such as tone (color), texture, pattern, shape or association [18,21,22]. Of these characteristics, tone and texture are the most readily used in digital interpretation of the imagery. Overall, UAV platforms represent a low-cost remote sensing alternative to airborne and satellite platforms and enable the production of cost-effective data with an unrivalled combination of spatial and temporal resolution at local scales (e.g., for areas the size of traditional forest plots up to the size of forest compartments) [53]. These characteristics have created new possibilities for the utilization of UAV systems and the SfM technique in forest management to collect information on the spatial and structural variability of the forest canopy [18,22,38,45,51].

Currently, three types of small UAV platforms that are widely used for scientific research are available on the market: multi-rotor, single-rotor (similar in design and structure to a helicopter), and fixed-wing UAVs [54]. Compared to multi-rotor UAVs, single-rotor UAVs have the advantage of efficient power consumption, but they have limited agility and greater complexity, operational risk, and product cost. Fixed-wing models, in turn, are superior to multi-rotor UAVs in forestry applications because of several factors, including (1) faster flying speeds that allow them to cover large areas without being influenced by wind resistance or bad weather as easily as multi-rotors; (2) long endurance and an extended battery life that enable them to cover many miles in a single session; (3) an ability to carry heavier payloads; and (4) the capability to fly at higher altitudes, which permits a greater visual line of sight (VLOS) range. Thus, fixed-wing UAVs enable efficient data collection over larger areas and are a viable option for forestry applications, including operational forest management that requires geo-referenced imagery at comparatively large scales.

Although fixed-wing UAVs have great potential for use in forestry applications, very few studies have involved detailed analyses of point clouds or canopy surface models built from fixed-wing UAV imagery at comparatively large scales, such as the forest management compartment level, and even fewer studies have used fixed-wing UAV imagery to estimate forest structural attributes (e.g., [19,38,55]). Applications of fixed-wing UAV imagery and the robustness of digital photogrammetry have also not been studied intensively over a range of forest types, such as mixed conifer–broadleaf forests. In this study, we address these issues.

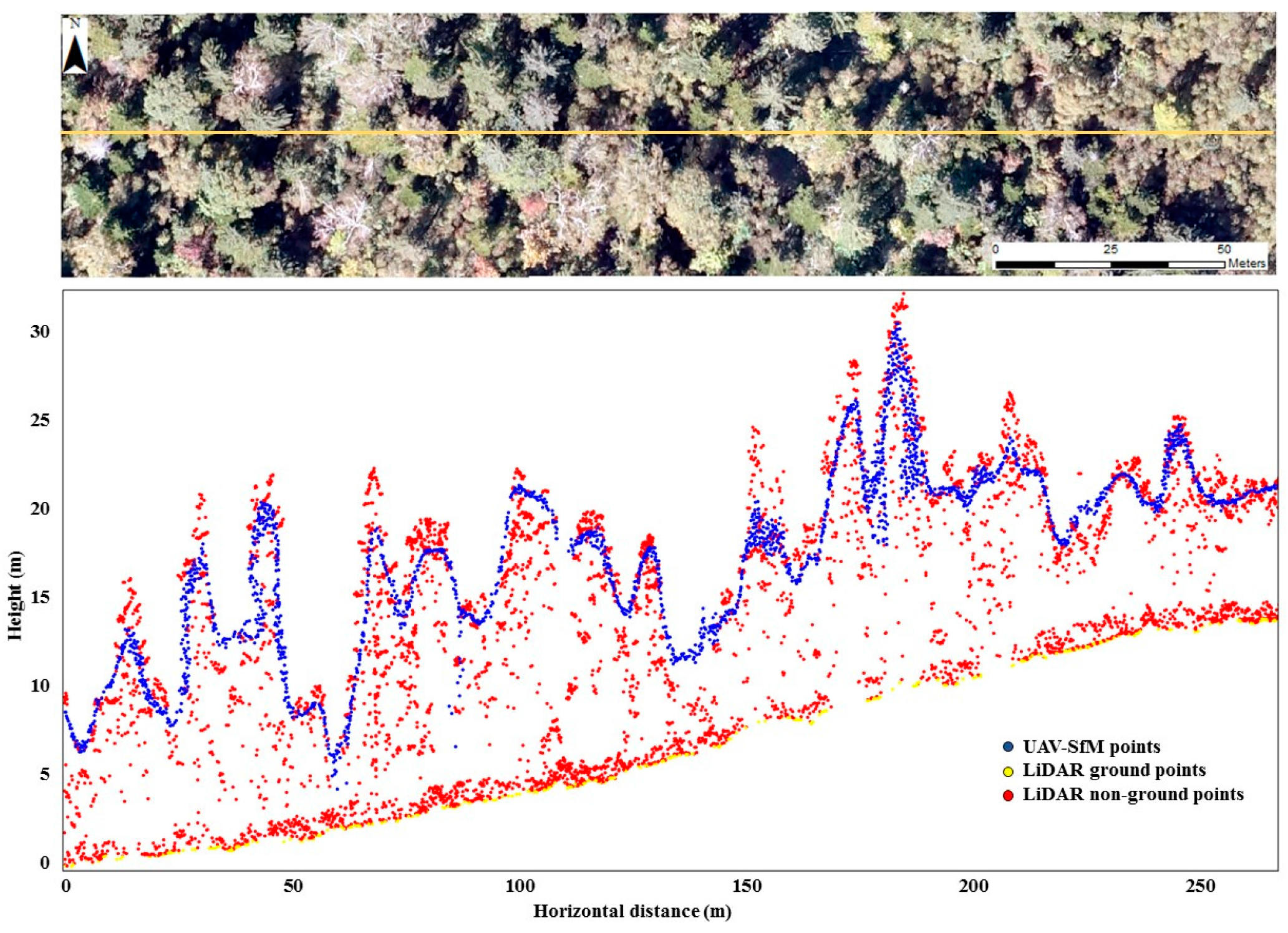

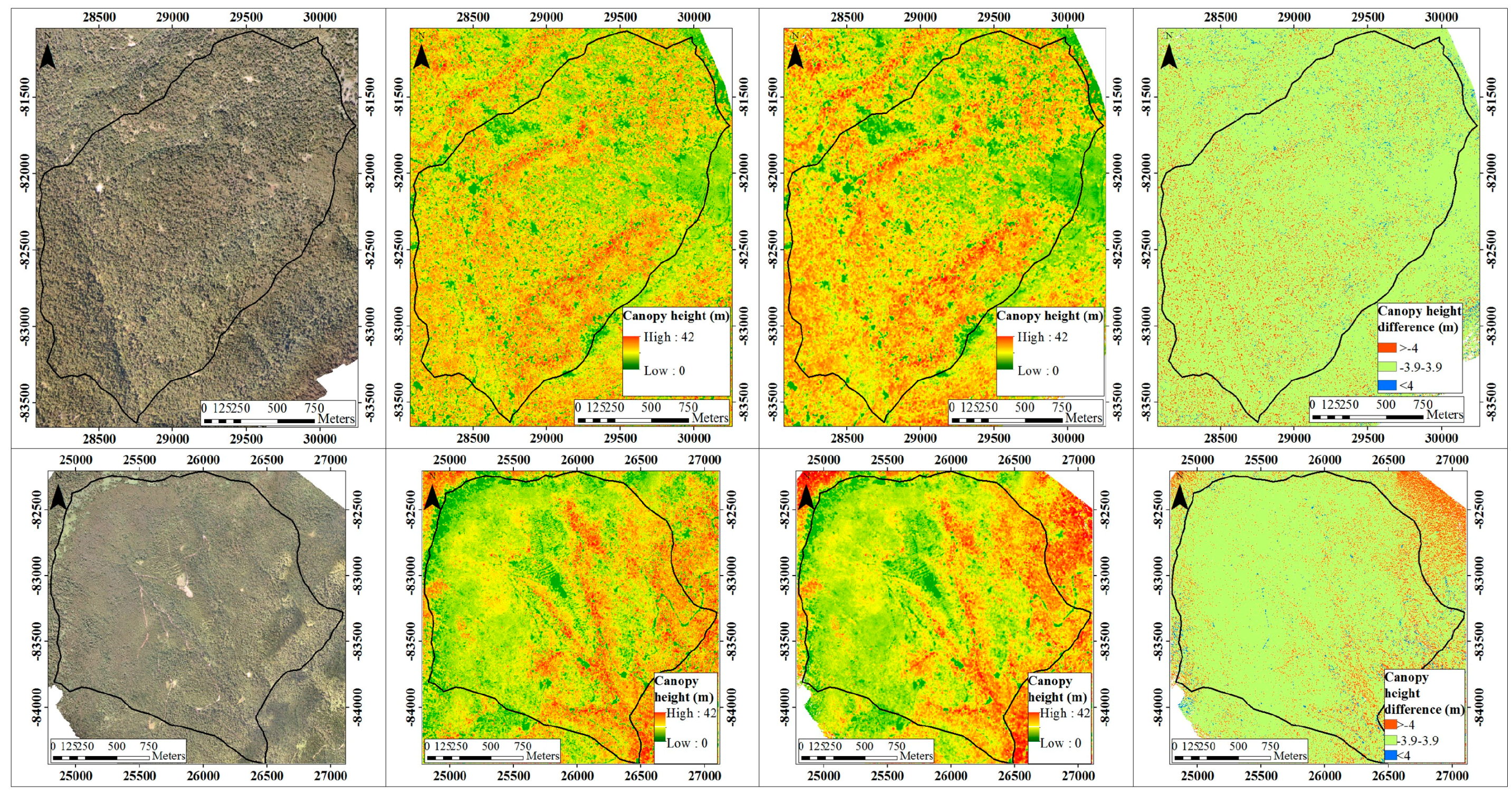

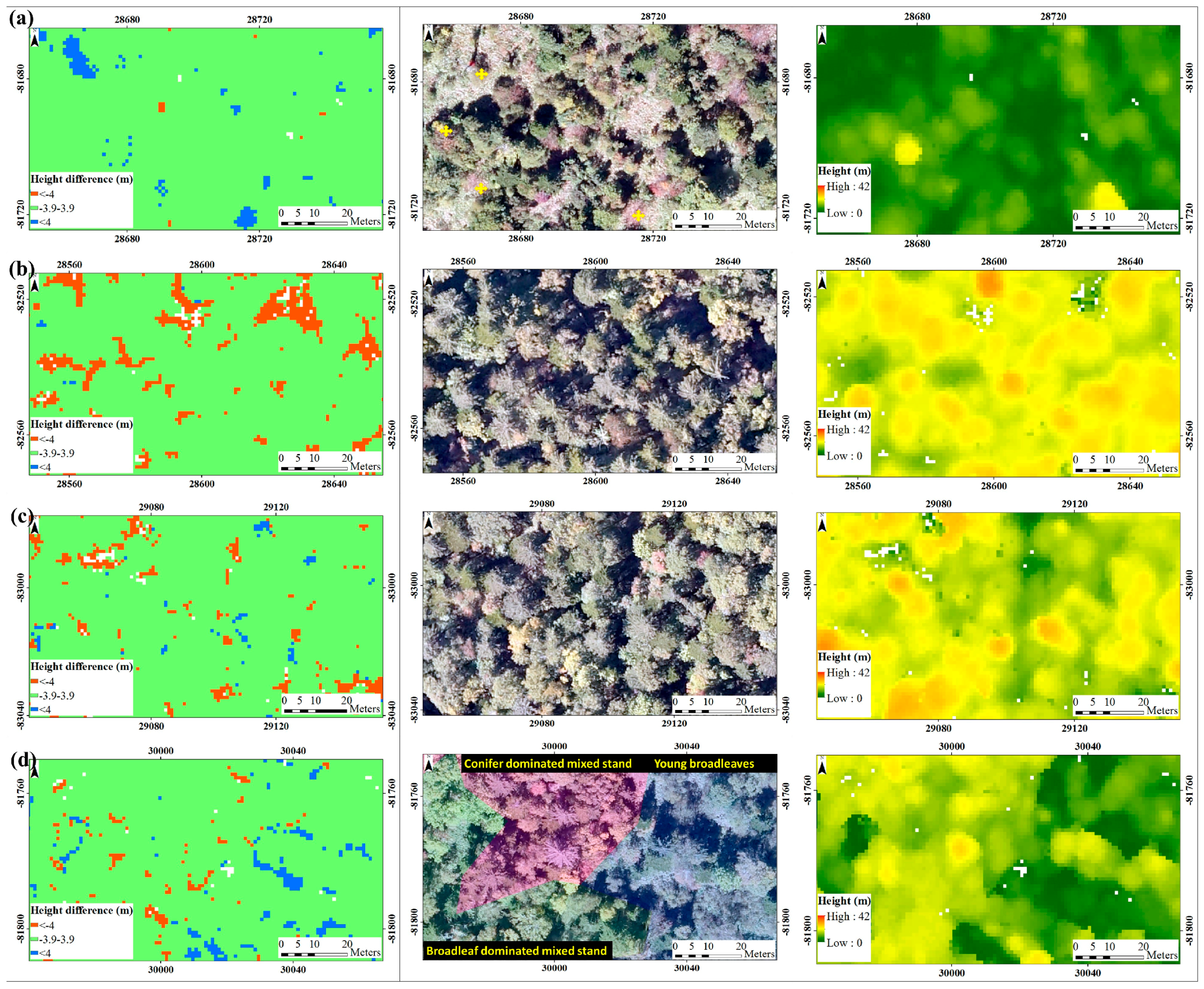

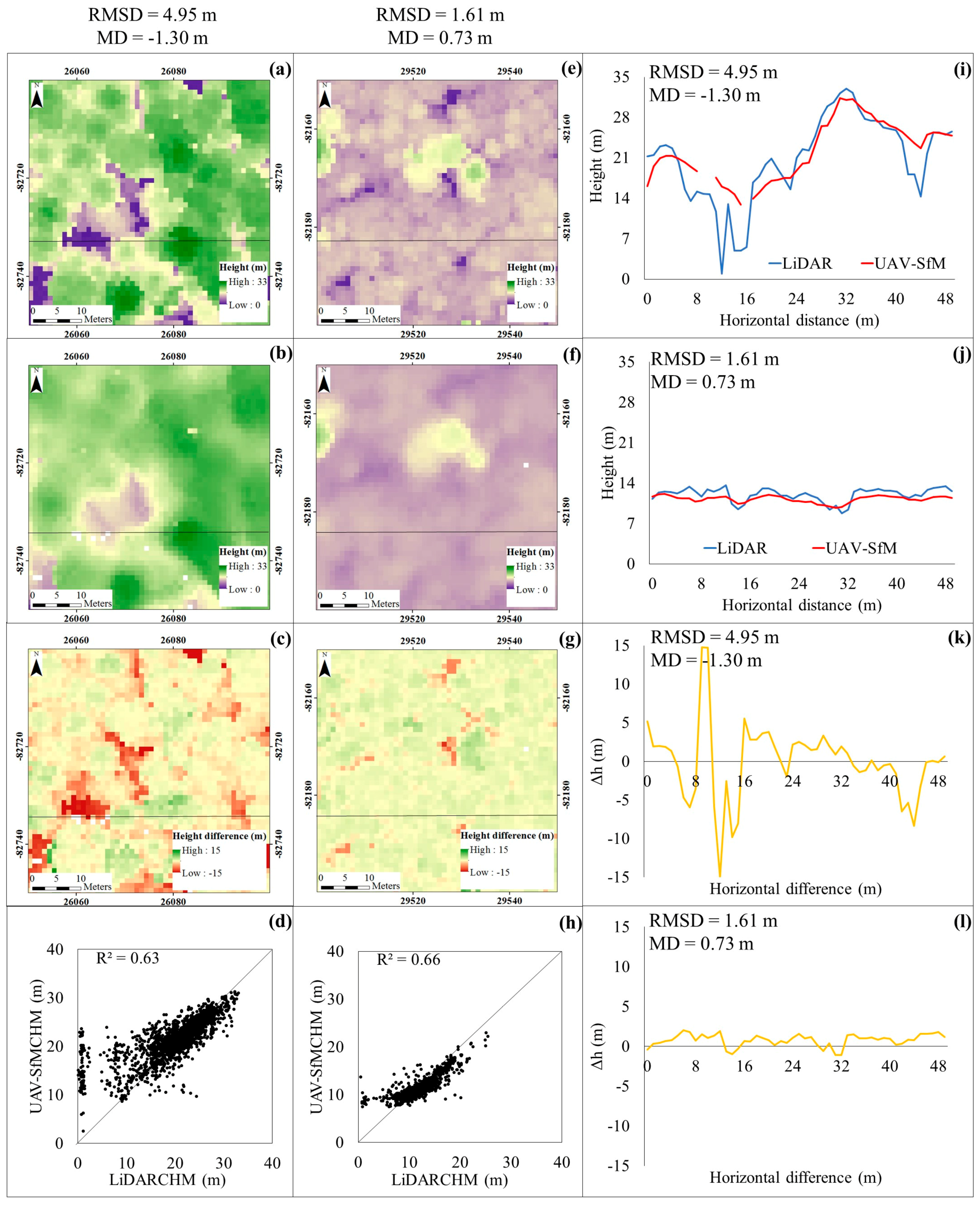

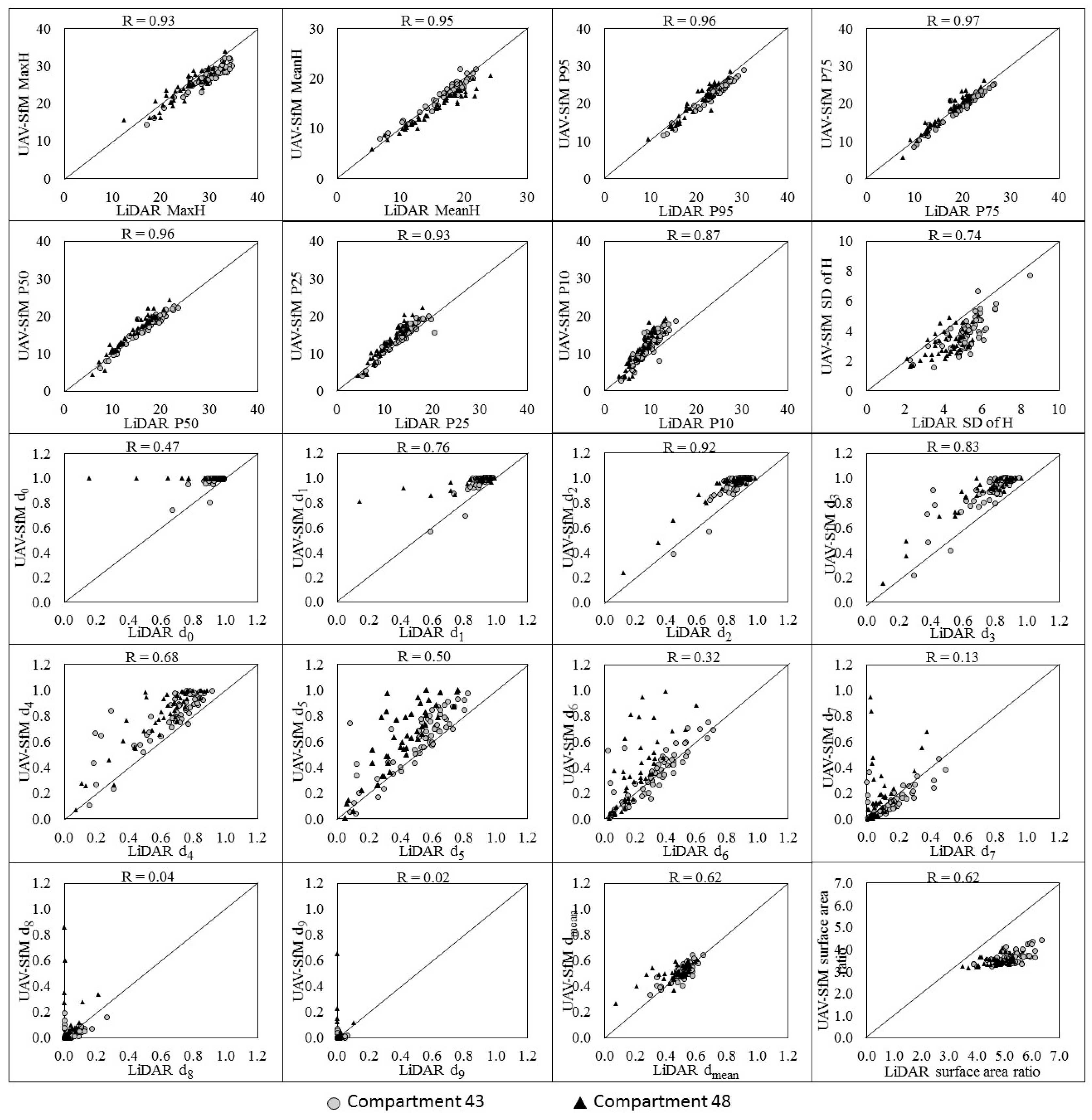

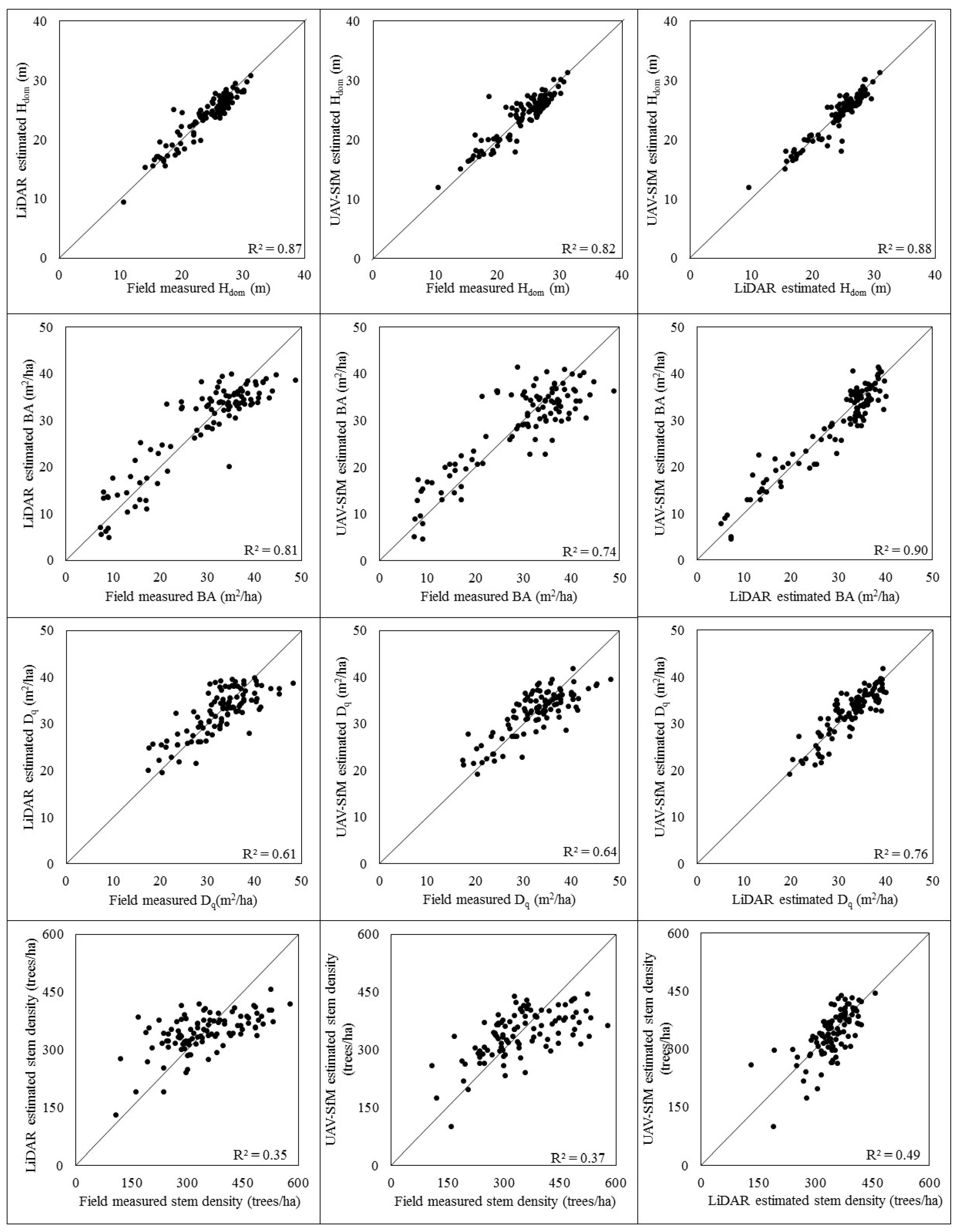

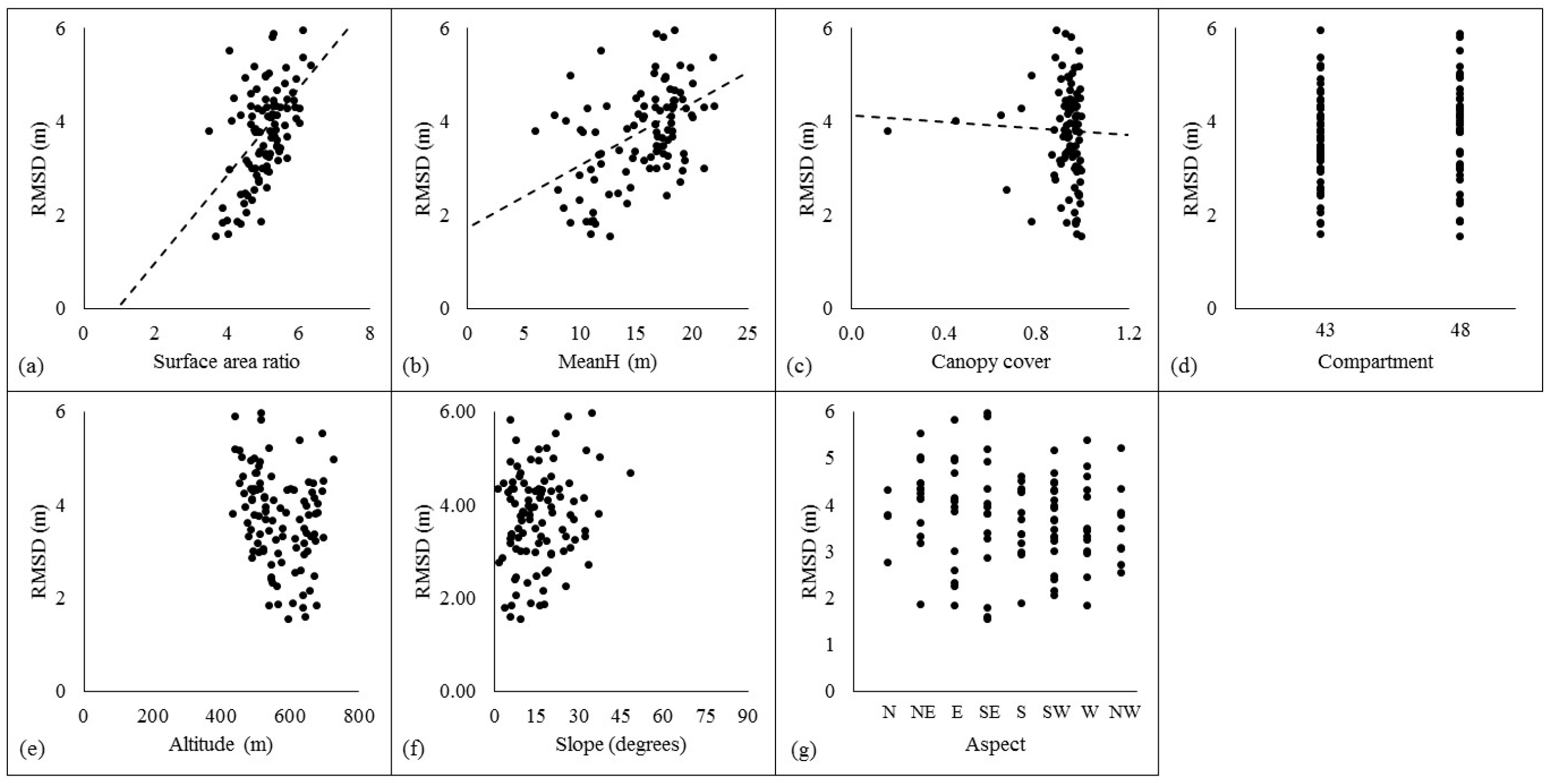

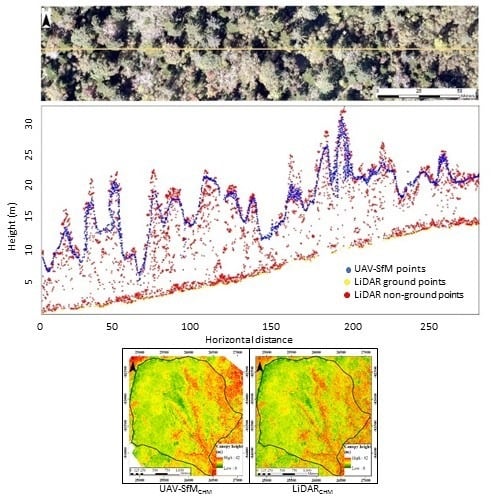

The aim of the present study was to assess the performance of image-based point clouds derived from fixed-wing UAV imagery captured over a mixed conifer–broadleaf forest with varying levels of canopy structural complexity. First, we conducted a detailed evaluation of UAV-SfM outputs by comparing UAV-SfM-derived canopy height models (CHMs) and structural metrics to LiDAR-derived CHMs and structural metrics. We used LiDAR data as a reference data set to assess the performance of UAV-SfM, as they are considered reliable for forestry applications for two main reasons: (1) the non-clustering effect of LiDAR data leads to accurate estimation of forest structural attributes and (2) the data have a proven ability to reconstruct 3D canopy structure with high accuracy for a variety of forest types [40,43,48,56]. Second, we assessed the utility of UAV-SfM-derived point clouds for estimating several forest structural attributes that are commonly used in forestry applications. Finally, we examined the effects of forest canopy structural metrics and terrain conditions on the performance of the UAV-SfM canopy model.
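To make the CHM comparison concrete, the sketch below shows one common way such an evaluation can be set up; it is an illustration under stated assumptions, not the procedure used in this study. A CHM is taken as the cell-by-cell difference between a digital surface model (DSM) and a digital terrain model (DTM), and agreement with a reference CHM is summarised by the mean difference (bias) and the root mean square error (RMSE). The function names, the choice of metrics, and the 2 × 2 grids are all hypothetical.

```python
import math


def canopy_height_model(dsm, dtm):
    """Subtract terrain elevation (DTM) from surface elevation (DSM), cell by cell."""
    if len(dsm) != len(dtm) or any(len(a) != len(b) for a, b in zip(dsm, dtm)):
        raise ValueError("DSM and DTM grids must have the same shape")
    return [[s - t for s, t in zip(s_row, t_row)] for s_row, t_row in zip(dsm, dtm)]


def agreement(test_chm, reference_chm):
    """Return (bias, RMSE) of test minus reference heights over all cells."""
    diffs = [
        t - r
        for t_row, r_row in zip(test_chm, reference_chm)
        for t, r in zip(t_row, r_row)
    ]
    if not diffs:
        raise ValueError("grids must contain at least one cell")
    bias = sum(diffs) / len(diffs)
    rmse = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    return bias, rmse


# Hypothetical elevations in metres on a shared 2 x 2 grid.
sfm_dsm = [[120.0, 125.0], [118.0, 130.0]]    # photogrammetric surface
lidar_dtm = [[100.0, 101.0], [99.0, 102.0]]   # terrain from LiDAR
lidar_chm = [[21.0, 24.0], [20.0, 27.0]]      # reference canopy heights

sfm_chm = canopy_height_model(sfm_dsm, lidar_dtm)
bias, rmse = agreement(sfm_chm, lidar_chm)
print(f"bias = {bias:.2f} m, RMSE = {rmse:.2f} m")
```

A negative bias in this sketch would indicate that the photogrammetric CHM is, on average, lower than the reference; real rasters would additionally need to be co-registered and resampled to a common grid before differencing.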

5. Conclusions

In this study, the UAV-SfM technique provided a fair characterisation of a mixed conifer–broadleaf forest canopy with varying levels of structural complexity, comparable to the results of high-cost airborne LiDAR observation. LiDAR and UAV-SfM data provided similar results in terms of area-based dominant height, basal area, and quadratic mean DBH estimations. Therefore, our results highlight that, although there are differences between airborne laser scanning and digital photogrammetry techniques, digital photogrammetric products developed using fixed-wing UAV imagery over the mixed conifer–broadleaf forests in northern Japan performed well in characterising forest canopy structure and predicting forest structural attributes that are commonly used in forestry applications. However, UAV-SfM CHMs are likely to be influenced by the structural complexity of the forest canopy. Furthermore, our study demonstrates that fixed-wing UAV imagery could be utilised efficiently in data collection at the local scale, that the SfM technique is capable of detailed automatic reconstruction of the 3D forest canopy surface of mixed conifer–broadleaf forests, and that UAV-SfM products are promising for providing reliable forest canopy structural measurements when combined with a LiDAR DTM.

A comparison of fixed-wing UAV-SfM data and LiDAR data for forest canopy analyses was the central focus of this study. However, for overall forest structural assessment studies (i.e., those that include the understorey and ground layers), UAV-SfM data could be utilised as a complement rather than an alternative to LiDAR data for two reasons. First, a photogrammetric point cloud does not provide the same level of penetration into the canopy as LiDAR and therefore cannot deliver the same level of information on vertical stratification, understorey vegetation layers, and ground cover. Second, the accuracy of forest canopy height measurements and the use of photogrammetric canopy height models in forest areas with dense canopy cover depend largely on the availability of an accurate DTM, preferably a LiDAR DTM.

We can expect several future improvements in the application of fixed-wing UAVs in the forestry sector given their potential to provide detailed information to forest managers and ecologists. For example, point clouds and CHMs with high accuracy can be used in multi-source forest resource assessment and forest structural dynamics monitoring at local scales, whereas high-resolution orthomosaics are better suited to stand delineation, mapping, and forest health monitoring. As shown in our study, fixed-wing UAVs offer key advantages for operational forest management. Therefore, future research should focus on broader and more relevant topics in forestry, such as testing how well the photogrammetric products can predict the actual forest structure and analyzing the structural complexity of the forest canopy and its dynamics using fixed-wing UAV imagery. In addition, research into improving the accuracy of photogrammetric products for various forest types would provide great benefit.
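For readers less familiar with the structural attributes named in these conclusions, the following sketch gives the standard field-based definitions of basal area and quadratic mean diameter at breast height (DBH) for a sample plot. It illustrates the definitions only; the function names, the DBH values, and the plot size are hypothetical and are not taken from this study.

```python
import math


def basal_area_m2(dbh_cm: float) -> float:
    """Cross-sectional stem area at breast height (m^2) for one tree."""
    radius_m = dbh_cm / 200.0  # diameter in cm -> radius in m
    return math.pi * radius_m ** 2


def quadratic_mean_dbh_cm(dbh_list_cm) -> float:
    """Quadratic mean DBH: the square root of the mean squared diameter."""
    if not dbh_list_cm:
        raise ValueError("at least one tree is required")
    return math.sqrt(sum(d * d for d in dbh_list_cm) / len(dbh_list_cm))


def plot_basal_area_m2_per_ha(dbh_list_cm, plot_area_ha: float) -> float:
    """Sum of individual basal areas scaled to a per-hectare value."""
    if plot_area_ha <= 0:
        raise ValueError("plot area must be positive")
    return sum(basal_area_m2(d) for d in dbh_list_cm) / plot_area_ha


# Hypothetical plot: four trees measured on a 0.04 ha plot.
dbh_cm = [10.0, 20.0, 30.0, 40.0]
print(f"quadratic mean DBH = {quadratic_mean_dbh_cm(dbh_cm):.1f} cm")
print(f"basal area = {plot_basal_area_m2_per_ha(dbh_cm, 0.04):.1f} m^2/ha")
```

Because the quadratic mean weights larger stems more heavily than the arithmetic mean, it corresponds to the diameter of the tree of average basal area, which is why the two attributes are often reported together.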