1. Introduction

Land cover change detection (LCCD), a classical problem in many remote sensing disciplines, has been extensively studied [1,2,3]. The development of remote sensing techniques has made multi-temporal images readily available, and LCCD has become an increasingly popular research topic because of its practical applications [4], such as forest defoliation monitoring [5], land cover database updating [6,7], and urban expansion analysis [8,9].

Over the past decades, many change detection techniques have been developed and applied to LCCD [10,11,12,13]. These approaches usually involve two main steps: calculating a change magnitude image and determining a binary threshold. The change magnitude image between bi-temporal images is mainly obtained by image differencing [14], image ratioing [15,16], change vector analysis (CVA) [17,18,19,20], and spectral gradient difference [21]. A binary threshold is then needed to divide the change magnitude image into a binary change detection map; the most popular threshold determination methods for change detection are expectation maximization (EM) [22,23], fuzzy c-means [24,25,26], and Otsu’s method [27,28,29]. In general, these methods measure the distance between the bi-temporal images and use a threshold to decide whether each pixel in the change magnitude image is changed or unchanged. Although these methods can provide a binary change detection map, considerable noise remains in the resulting map. Therefore, there is still room to improve the raw change detection map.
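To make the two-step pipeline concrete, the following is a minimal sketch, assuming two co-registered images stored as nested lists of per-band tuples. It computes a CVA change magnitude (the Euclidean norm of the per-pixel spectral difference) and binarizes it with Otsu's method. The function names and the 256-bin histogram are illustrative choices, not taken from the cited works.

```python
import math

def cva_magnitude(img_t1, img_t2):
    """CVA change magnitude: Euclidean norm of the per-pixel band difference.

    Each image is a list of rows; each pixel is a tuple of band values.
    """
    return [
        [math.sqrt(sum((b2 - b1) ** 2 for b1, b2 in zip(p1, p2)))
         for p1, p2 in zip(row1, row2)]
        for row1, row2 in zip(img_t1, img_t2)
    ]

def otsu_threshold(values, bins=256):
    """Threshold that maximizes the between-class variance of a histogram."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return lo
    width = (hi - lo) / bins
    hist = [0] * bins
    for v in values:
        hist[min(int((v - lo) / width), bins - 1)] += 1
    total = len(values)
    sum_all = sum(k * h for k, h in enumerate(hist))
    w0 = sum0 = 0
    best_k, best_var = 0, -1.0
    for k in range(bins):
        w0 += hist[k]            # pixels in bins 0..k (class 0)
        sum0 += k * hist[k]
        w1 = total - w0          # pixels in bins k+1.. (class 1)
        if w0 == 0 or w1 == 0:
            continue
        m0, m1 = sum0 / w0, (sum_all - sum0) / w1
        between = w0 * w1 * (m0 - m1) ** 2   # proportional to between-class variance
        if between > best_var:
            best_k, best_var = k, between
    return lo + (best_k + 1) * width         # upper edge of the best bin

def binary_change_map(img_t1, img_t2):
    """Step 1: change magnitude; step 2: Otsu threshold (1 = changed, 0 = unchanged)."""
    mag = cva_magnitude(img_t1, img_t2)
    t = otsu_threshold([v for row in mag for v in row])
    return [[1 if v > t else 0 for v in row] for row in mag]
```

Any other magnitude (differencing, ratioing) or threshold rule (EM, fuzzy c-means) can be swapped into the same two-step structure; the noisy output of such a pipeline is what a post-processing step would then refine.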

Very high resolution (VHR) remote sensing images provide detailed ground information. Contextual features of VHR images are usually adopted to complement the spectral information, which makes full use of spatial information and improves the detection result [30]. The most commonly used contextual features for LCCD include Gabor-wavelet-based difference (GWDM) [31,32,33], Markov random field (MRF) [14,34,35,36,37], and gray level co-occurrence matrix textures [38]. Several advanced hybrid change detection methods have also been developed. For example, T. Celik proposed a change detection approach based on principal component analysis and k-means clustering (PCA_Kmeans) [39]; Zhang et al. proposed an unsupervised LCCD method for remote sensing images that uses level set evolution with local uncertainty constraints (LSELUC) [40]; and Zhang et al. proposed an LCCD method that considers the local spectrum-trend similarity between bi-temporal images [41]. In addition, LCCD plays an important role in remote sensing applications such as global environmental change [42], urban growth detection [43,44], and sustainable urbanization [45]. Therefore, developing a novel approach that produces accurate, high-quality LCCD map products is important.

The aforementioned methods are preprocessing LCCD approaches: they generate a binary change detection map by measuring the change magnitude and applying a selected binary threshold. With VHR remote sensing images, spatial features can be derived from the original spectral space to improve LCCD accuracy. However, post-processing LCCD (P_LCCD) has not received sufficient attention. We define P_LCCD as the refinement of the labels of a raw change detection result to improve its original performance and accuracy.

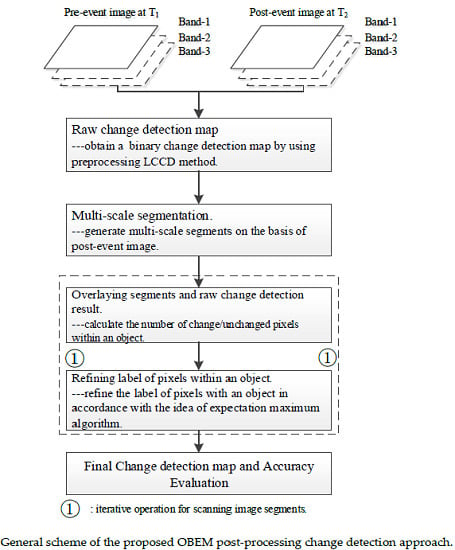

In this study, a P_LCCD method called object-based expectation maximum (OBEM) is proposed to improve raw change detection results. To the best of our knowledge, P_LCCD methods have not previously been applied to LCCD with remote sensing images, and a comprehensive P_LCCD framework is currently lacking. The proposed OBEM post-processing approach addresses this gap by refining raw change detection results to obtain improved detection performance.

The rest of the paper is organized as follows. Section 2 describes the proposed OBEM approach. Section 3 presents the experiments and analysis. Section 4 contains the discussion, and Section 5 presents the conclusions of the study.

2. OBEM Post-Processing LCCD Approach for VHR Images

Previous studies [1,7,15,16] have focused on preprocessing change detection over the past decades; however, P_LCCD has received little attention. A P_LCCD framework is therefore needed to improve the performance of raw change detection results. In this section, a P_LCCD method called OBEM is proposed. The flowchart of the proposed OBEM is shown in Figure 1.

As shown in Figure 1, the proposed OBEM consists of the following steps:

- (1) A raw binary change detection map (R_BCDM) is obtained by a traditional LCCD method (a preprocessing change detection method).

- (2) Multi-scale segmentation of the post-event image is conducted to exploit the spatial information of the detecting target. The widely used fractal net evolution approach (FNEA) [46] is employed for this purpose in OBEM, and FNEA is reviewed in more detail in the following section.

- (3) The multi-scale segmentation result of the post-event image is spatially overlaid on the R_BCDM, and the numbers of changed and unchanged pixels within each object are counted.

- (4) The labels of all pixels within the object are refined to the majority label, that is, the label held by the larger number of pixels in that object. Steps 3 and 4 are repeated object by object until the whole R_BCDM has been scanned and refined. This refinement assumes that the pixels within an object are homogeneous and belong to one class, an assumption shared by several existing object-based image processing methods [5,47,48,49].

When the scan is complete, every pixel label of the R_BCDM has been refined, and the refined result is defined as the final binary change detection map (F_BCDM). F_BCDM is then evaluated by comparing it with the ground reference map.
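The evaluation step can be sketched as follows. This section does not define the error measures, so the sketch assumes definitions commonly used in the LCCD literature: FA is the percentage of reference-unchanged pixels labeled changed, MA is the percentage of reference-changed pixels labeled unchanged, and TE is the percentage of all pixels labeled incorrectly. These definitions, and the function name `detection_errors`, are assumptions for illustration and may differ from those used in the experiments.

```python
def detection_errors(predicted, reference):
    """Return (FA, MA, TE) in percent for binary maps (1 = changed, 0 = unchanged).

    Assumed definitions (not given in this section):
      FA = false alarms / unchanged pixels in the reference
      MA = missed alarms / changed pixels in the reference
      TE = (false alarms + missed alarms) / all pixels
    """
    n_fa = n_ma = n_changed = n_unchanged = 0
    for pred_row, ref_row in zip(predicted, reference):
        for p, r in zip(pred_row, ref_row):
            if r == 1:
                n_changed += 1
                n_ma += int(p == 0)    # changed pixel labeled unchanged
            else:
                n_unchanged += 1
                n_fa += int(p == 1)    # unchanged pixel labeled changed
    fa = 100.0 * n_fa / n_unchanged if n_unchanged else 0.0
    ma = 100.0 * n_ma / n_changed if n_changed else 0.0
    te = 100.0 * (n_fa + n_ma) / (n_changed + n_unchanged)
    return fa, ma, te

# One false alarm among three unchanged pixels, no missed alarms:
print(detection_errors([[1, 0, 0, 1]], [[0, 0, 0, 1]]))  # FA ≈ 33.3, MA = 0.0, TE = 25.0
```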

2.1. Brief Review of Multi-Scale Segmentation and Expectation Maximization

Here, we briefly review the most commonly used multi-scale segmentation algorithm, namely the fractal net evolution approach (FNEA) [50]. The main goal of multi-scale segmentation is to partition an image into disjoint regions [47,48]. Image segmentation is important because of its fast-expanding range of applications, such as image processing [51], image classification [49], and object recognition [52]. Multi-scale segmentation also plays a key role in the proposed OBEM approach. FNEA, the key technique adopted in OBEM, is reviewed here to aid understanding of the method [46,53]. FNEA is a region-merging technique that starts with each pixel as a separate image object or region; the merging procedure minimizes the average heterogeneity, and thus maximizes the homogeneity, of the image objects for a given number of objects. Three parameters control the merging procedure: scale, shape, and compactness. Scale determines the maximum allowed heterogeneity of the resulting segments; shape defines the weight of the shape criterion relative to the spectral (color) criterion in the overall homogeneity criterion; and compactness optimizes image objects with regard to compactness, as opposed to boundary smoothness, within the shape criterion. Additional details on FNEA multi-scale segmentation can be found in [46,54]. In the proposed OBEM approach, we use the FNEA implementation in the commercial eCognition software. In practice, shape and compactness should be set to high values to obtain highly homogeneous segments.
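To clarify how scale, shape, and compactness interact, the following sketch computes the merge cost of two adjacent objects using the heterogeneity criterion usually described for FNEA (Baatz and Schäpe). The criterion is a weighted sum of spectral heterogeneity (per-band standard deviations weighted by object size) and shape heterogeneity (compactness and smoothness terms). A merge is allowed while the cost stays below the squared scale parameter. This is a simplified illustration under those assumptions, not the eCognition implementation. The defaults 0.8 and 0.9 mirror the shape and compactness values reported in the Discussion, and the unit band weights and all function names are hypothetical.

```python
import math
from statistics import pstdev

def _perimeter(obj):
    """Count pixel edges on the object border (4-neighbourhood)."""
    return sum(
        (r + dr, c + dc) not in obj
        for (r, c) in obj
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1))
    )

def _bbox_perimeter(obj):
    """Perimeter of the object's axis-aligned bounding box, in pixel edges."""
    rows = [r for r, _ in obj]
    cols = [c for _, c in obj]
    return 2 * ((max(rows) - min(rows) + 1) + (max(cols) - min(cols) + 1))

def _stats(obj):
    """Size n, per-band standard deviations, perimeter l, bounding-box perimeter b."""
    bands = list(zip(*obj.values()))
    return len(obj), [pstdev(b) for b in bands], _perimeter(obj), _bbox_perimeter(obj)

def merge_cost(obj1, obj2, w_shape=0.8, w_cmpct=0.9, band_weights=None):
    """Increase in heterogeneity caused by merging two disjoint objects.

    Objects map (row, col) -> tuple of band values.
    """
    n1, s1, l1, b1 = _stats(obj1)
    n2, s2, l2, b2 = _stats(obj2)
    nm, sm, lm, bm = _stats({**obj1, **obj2})
    weights = band_weights or [1.0] * len(sm)
    # Spectral (color) heterogeneity
    h_color = sum(w * (nm * sm_c - (n1 * s1_c + n2 * s2_c))
                  for w, sm_c, s1_c, s2_c in zip(weights, sm, s1, s2))
    # Shape heterogeneity: compactness and smoothness terms
    h_cmpct = nm * lm / math.sqrt(nm) - (n1 * l1 / math.sqrt(n1) + n2 * l2 / math.sqrt(n2))
    h_smooth = nm * lm / bm - (n1 * l1 / b1 + n2 * l2 / b2)
    h_shape = w_cmpct * h_cmpct + (1 - w_cmpct) * h_smooth
    return (1 - w_shape) * h_color + w_shape * h_shape

def can_merge(obj1, obj2, scale, **weights):
    """Merging is allowed while the cost stays below the squared scale parameter."""
    return merge_cost(obj1, obj2, **weights) < scale ** 2
```

In this formulation, a larger scale tolerates a larger heterogeneity increase per merge, so objects grow larger; with a high shape weight, the geometric terms dominate the cost, which favors compact, smooth segments.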

Apart from multi-scale segmentation, the expectation–maximization (EM) algorithm also plays a pivotal role in the proposed OBEM, so it is reviewed briefly here. The EM algorithm was formalized and named by Dempster et al. [55]; it is an iterative method for finding maximum likelihood or maximum a posteriori estimates of model parameters when the data are incomplete or depend on unobserved latent variables. Since its introduction, EM has been applied in many image processing fields, such as LCCD based on remote sensing images [22,56,57], image classification [58,59], image segmentation [60], and target recognition [61]. Inspired by these applications, OBEM employs EM as the rule for refining the labels of the pixels within an object. Details of this refinement are presented in the following section.
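A common way to apply EM to change detection thresholding is to model the change magnitude as a mixture of two Gaussians, one for unchanged and one for changed pixels, estimate the mixture parameters with EM, and label each pixel by the larger posterior probability. The sketch below implements that generic scheme under the Gaussian assumption. The initialization, tolerance, variance floor, and function names are illustrative choices, and the sketch is not OBEM's object-level rule.

```python
import math

def _log_weighted_gauss(x, prior, mean, var):
    """log(prior * N(x | mean, var)), computed in the log domain to avoid underflow."""
    return math.log(prior) - 0.5 * math.log(2 * math.pi * var) - (x - mean) ** 2 / (2 * var)

def em_two_class(values, n_iter=200, tol=1e-9, min_var=1e-6):
    """Fit a two-component Gaussian mixture (unchanged / changed) to change magnitudes.

    Returns (labels, params); params holds [prior, mean, variance] per component,
    and label 1 (changed) is the component initialized at the larger mean.
    """
    n = len(values)
    mu = sum(values) / n
    var0 = max(sum((v - mu) ** 2 for v in values) / n, min_var)
    low = [v for v in values if v <= mu]
    high = [v for v in values if v > mu] or low
    params = [[0.5, sum(low) / len(low), var0], [0.5, sum(high) / len(high), var0]]
    prev_ll = -math.inf
    for _ in range(n_iter):
        # E-step: posterior probability r that each value belongs to the changed class
        resp, ll = [], 0.0
        for v in values:
            a = _log_weighted_gauss(v, *params[0])
            b = _log_weighted_gauss(v, *params[1])
            d = b - a
            r = 1 / (1 + math.exp(-d)) if d >= 0 else math.exp(d) / (1 + math.exp(d))
            resp.append(r)
            ll += max(a, b) + math.log1p(math.exp(-abs(d)))  # log-likelihood of v
        # M-step: re-estimate prior, mean, and variance of each component
        n1 = sum(resp)
        n0 = n - n1
        if n0 < 1e-12 or n1 < 1e-12:
            break  # one component has collapsed
        for k, (nk, w) in enumerate(((n0, [1 - r for r in resp]), (n1, resp))):
            mean = sum(wi * v for wi, v in zip(w, values)) / nk
            var = max(sum(wi * (v - mean) ** 2 for wi, v in zip(w, values)) / nk, min_var)
            params[k] = [nk / n, mean, var]
        if ll - prev_ll < tol:
            break
        prev_ll = ll
    # Bayes decision: changed if the posterior of the changed class exceeds 0.5
    labels = [1 if r > 0.5 else 0 for r in resp]
    return labels, params
```

Working in the log domain keeps the posteriors well defined even when a value lies many standard deviations from both component means.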

2.2. Proposed OBEM Post-Processing LCCD Approach

Building on the multi-scale segmentation and EM algorithms, the proposed OBEM takes the spatial information of the detecting target into account through multi-scale segmentation and refines the R_BCDM object by object. Here, the multi-scale segmentation result of the post-event image is denoted by $O = \{O_1, O_2, \ldots, O_n\}$, where $n$ is the total number of objects. The R_BCDM is obtained by a preprocessing LCCD method.

On the basis of the above definitions, the refinement criterion of OBEM is

$$
L_i = \underset{l \in \{u,\, c\}}{\arg\max}\; N_l^{i}, \qquad i = 1, 2, \ldots, n,
$$

where $L_i$ is the refined label assigned to the pixels within object $O_i$, $u$ and $c$ denote the unchanged and changed classes, and $N_u^{i}$ and $N_c^{i}$ are the numbers of unchanged and changed pixels within $O_i$ in the R_BCDM, respectively. In other words, each pixel label within an object is refined by comparing the numbers of changed and unchanged pixels within that object, and every pixel receives the label held by the majority of the object's pixels. This refinement strategy is effective for smoothing and denoising, and to our knowledge it has not previously been used to optimize LCCD results.
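The refinement criterion can be sketched in a few lines, assuming the R_BCDM and the segmentation are equally sized nested lists, with the segmentation storing one object identifier per pixel. The function name `obem_refine` is hypothetical. The criterion does not specify what happens when an object has equal numbers of changed and unchanged pixels, so the sketch assigns ties to unchanged; that tie rule is an assumption.

```python
from collections import Counter, defaultdict

def obem_refine(raw_map, segments):
    """Assign every pixel of each object the majority label of that object.

    raw_map[r][c] is 0 (unchanged) or 1 (changed); segments[r][c] is an object id.
    """
    # Count unchanged (0) and changed (1) pixels per object
    votes = defaultdict(Counter)
    for label_row, seg_row in zip(raw_map, segments):
        for label, obj in zip(label_row, seg_row):
            votes[obj][label] += 1
    # Majority label per object; ties go to "unchanged" (assumption)
    refined = {obj: 1 if cnt[1] > cnt[0] else 0 for obj, cnt in votes.items()}
    return [[refined[obj] for obj in seg_row] for seg_row in segments]

# Two objects: the left two columns (id 0) and the right two columns (id 1)
raw = [[1, 1, 0, 0],
       [1, 0, 0, 1],
       [1, 1, 0, 0]]
segments = [[0, 0, 1, 1]] * 3
print(obem_refine(raw, segments))  # [[1, 1, 0, 0], [1, 1, 0, 0], [1, 1, 0, 0]]
```

The sketch processes all objects in one pass rather than scanning them one at a time; because objects do not overlap, each object's refinement is independent and the result is the same. In the usage example, the isolated 0 in the left object and the isolated 1 in the right object are both removed, while the boundary between the two objects is kept.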

The proposed OBEM post-processing approach has two advantages. (1) Because the objects inherit the spatial information of the detecting target from the post-event image, details of the target such as edges, shape, and size are preserved during smoothing. (2) In theory, an object consists of a single pure material, so the pixels within it should share the same label. Refining the pixel labels of an object to the majority label of that object therefore improves the homogeneity of the object, and noise pixels in the R_BCDM are removed.

A schematic example demonstrating the effectiveness of the proposed OBEM approach is presented in Figure 2. The blue, yellow, and black dotted regions in the figure are the objects $O_1$, $O_2$, and $O_3$, respectively, and “0” and “1” denote “unchanged” and “changed” pixels, respectively. A comparison of Figure 2a,c shows that OBEM effectively smooths the noise within each object while clearly preserving the edges between different objects.

4. Discussion

Compared with the six existing commonly used methods, the proposed OBEM approach achieves the best accuracy and performance in terms of false alarms (FA), missed alarms (MA), and total error (TE). The results in Tables 4–7 confirm that the proposed OBEM improves the raw detection accuracy of each preprocessing method.

To facilitate the practical application of the proposed OBEM approach, this section discusses how the parameters of the FNEA multi-scale segmentation algorithm influence the change detection accuracy of OBEM. As discussed in the previous section, the shape and compactness parameters of FNEA are fixed at the relatively high values of 0.8 and 0.9, respectively. Therefore, the refinement accuracy of OBEM depends only on the segmentation scale parameter, and the relationship between this scale and the detection accuracy of OBEM is investigated below.

Figure 11 shows the influence of the segmentation scale on the FA, MA, and TE of the proposed OBEM method for the first data set. Three observations can be made from Figure 11. (1) As shown in Figure 11a, FA gradually levels off as the scale increases from 5 to 50. (2) Figure 11b indicates that, as the scale increases from 5 to 50, the MA of the refined result first decreases and then increases; the lowest MA for the first data set is obtained at a scale of approximately 20. This behavior arises because, when the segmentation scale is very small, the noise in the raw change detection map cannot be removed sufficiently. As the scale increases, large amounts of salt-and-pepper noise are correctly removed from the raw map. However, if the scale is very large, many details of the changed areas are oversmoothed, and many changed pixels are missed or incorrectly removed during refinement. Therefore, selecting a suitable segmentation scale is key to obtaining an improved LCCD result with OBEM. (3) Figure 11c shows that TE gradually levels off as the scale increases.
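The scale trade-off described in observation (2) can be reproduced qualitatively on a toy example. The sketch below is not the experiment behind Figure 11. It replaces FNEA with a hypothetical square-block segmentation whose block size stands in for the scale parameter, uses a synthetic 8 x 8 map with four flipped pixels, and reports TE (assumed here to be the percentage of wrongly labeled pixels) for each block size. The majority vote follows the OBEM criterion, with ties assigned to unchanged as an assumption.

```python
def block_segments(height, width, size):
    """Hypothetical stand-in for FNEA: square blocks of side `size` as objects."""
    blocks_per_row = (width + size - 1) // size
    return [[(r // size) * blocks_per_row + c // size for c in range(width)]
            for r in range(height)]

def majority_refine(raw, segments):
    """Object-wise majority vote (ties -> unchanged, an assumption)."""
    votes = {}
    for label_row, seg_row in zip(raw, segments):
        for label, obj in zip(label_row, seg_row):
            votes.setdefault(obj, [0, 0])[label] += 1
    return [[1 if votes[o][1] > votes[o][0] else 0 for o in seg_row] for seg_row in segments]

def total_error(pred, ref):
    """Percentage of pixels whose label differs from the reference."""
    wrong = sum(p != q for prow, qrow in zip(pred, ref) for p, q in zip(prow, qrow))
    return 100.0 * wrong / sum(len(row) for row in ref)

# Synthetic reference: a 4x4 changed square in the top-left of an 8x8 scene
reference = [[1 if r < 4 and c < 4 else 0 for c in range(8)] for r in range(8)]
raw = [row[:] for row in reference]
for r, c in [(0, 6), (5, 1), (6, 6), (2, 2)]:  # salt-and-pepper noise
    raw[r][c] = 1 - raw[r][c]

te_by_size = {s: total_error(majority_refine(raw, block_segments(8, 8, s)), reference)
              for s in (1, 2, 4, 8)}
print(te_by_size)  # {1: 6.25, 2: 0.0, 4: 0.0, 8: 25.0}
```

With a block size of 1 the raw noise is untouched, blocks of 2 or 4 pixels remove it, and a single 8 x 8 block over-smooths the changed square away entirely. The blocks here align with the changed area by construction, so the example only mirrors the "too small, suitable, too large" reasoning qualitatively.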

The relationship between FA, MA, and TE and the segmentation scale of the proposed OBEM is also investigated with the second data set, and the results are shown in Figure 12. The conclusion is similar to that obtained for the first data set. To illustrate this conclusion intuitively, the influence of the scale parameter on the refinement performance of OBEM is also examined visually, and the results are shown in Figure 13. The figure shows that, compared with the raw change detection map, more noise is removed as the scale parameter increases. In addition, TE decreases from 19.2% to 4.46% as the scale parameter increases from 0 to 20. This observation supports the preceding quantitative investigation.

5. Conclusions

Most current change detection methods for VHR remote sensing images focus on preprocessing techniques, such as texture feature extraction and difference measurement [33], contextual feature extraction [40], and MRF-based methods [35,36]. In general, the basic principle of these preprocessing techniques is to use spatial information to complement the insufficient spectral information of VHR remote sensing images, thereby improving LCCD accuracy and performance. These methods have achieved great success in recent years and are gradually becoming the standard methods for LCCD of VHR remote sensing images. Against this background, we propose OBEM for post-processing LCCD, which has received far less attention than preprocessing. The proposed OBEM refines the raw change detection result and thereby improves change detection accuracy.

Four experiments are conducted on two pairs of VHR aerial images, one pair of SPOT-5 satellite images, and one pair of Landsat TM images with medium-low spatial resolution. Compared with widely used methods, such as GWDM_EM and GWDM_FCM [33], CVA_EM [62], LSELUC [40], MRF_EM [14], and PCA_Kmeans [39], the proposed OBEM more effectively refines the raw change detection results of these preprocessing methods and achieves higher detection accuracies. In addition, the relationship between the multi-scale segmentation parameter and the refined change detection accuracy of OBEM is investigated. FA and TE gradually stabilize as the segmentation scale increases, and this finding is helpful for the practical application of the proposed OBEM approach.

The contribution of this study is threefold: (1) to the best of our knowledge, this study is the first to propose a P_LCCD method for VHR bi-temporal remote sensing images; (2) the proposed OBEM demonstrates that raw change detection results can be enhanced in an object-based manner, and the experimental results show that it is an effective and succinct way to refine raw change detection results and improve change detection accuracy; (3) the investigation of the relationship between the segmentation parameters and the refined change detection accuracy can promote or inspire the design of related methods.

In future work, additional remote sensing images covering various land cover scenes, such as forest deformation, lake water level change, and building change in urban areas, will be collected to verify the adaptability and robustness of the proposed OBEM. Furthermore, additional P_LCCD methods will be developed to improve the accuracy of raw change detection.