1. Introduction

Deformation monitoring of engineering structures such as bridges, tunnels, dams, and tall buildings is a common application of engineering surveying [1]. As summarized in Mukupa et al. [2], deformation analysis can be based on different comparison objects, namely, point-to-point, point-to-surface, or surface-to-surface. The point-to-point-based analysis is a common approach to describe deformations that are captured by conventional point-wise surveying techniques. Examples of such techniques are the total station and the global navigation satellite system; however, in many cases, these have been surpassed by the use of LiDAR technology, especially terrestrial laser scanning (TLS) [3,4]. Although the single-point precision of TLS is in the sub-centimeter range (

to

mm), the high redundancy of the scanning observations facilitates a higher precision via the application of least-squares-based curve or surface estimation and, hence, an adequate precision of the estimated deformation parameters [5]. A point-to-surface-based analysis represents a deformation by the distance between the point cloud in one epoch and the surface estimated from measurements in another epoch. Such a surface can be constructed as a polygonal model (mesh) [6,7,8] or as a regression model (e.g., a polynomial or B-spline surface model) [9,10,11,12,13]. The procedure of a surface-to-surface-based deformation analysis, which is appropriate in certain situations, is to divide the point clouds into cells and to compare the parameters of planes fitted to the cell points in two epochs. This method is applied in Lindenbergh et al. [14], where the different positions of the laser scanner and strong wind contribute to the change of the coordinate system. The aforementioned three approaches to deformation analysis are complemented by the “point-cloud-based” approach, in which a deformation is reflected by the parameters of a coordinate transformation between sets of point clouds in various epochs. The common algorithm for determining the transformation matrix is the iterative closest point algorithm. Girardeau-Montaut et al. [15] presented three simple cloud-to-cloud comparison techniques for detecting changes in building sites or indoor facilities within a certain time.

For rigorous deformation detection from scattered point clouds, it is crucial to describe the geometrical features of the object accurately by an appropriate curve or surface regression model; this paper focuses on surface models. The purpose of surface fitting is to estimate the continuous model function from the scattered point samples, which can be implemented by approximation in the case of redundant measurements. There are many approximation approaches for working with surfaces based on an implicit, explicit, or parametric form. Parametric models are usually employed to fit point cloud data in applications such as deformation monitoring and reverse engineering. Different parametric models perform differently in terms of accuracy and number of coefficients when fitted to a dataset. Among the many methods utilized in various applications for approximating point clouds, polynomial model fitting is usually applied to smooth and regular objects because it is simple to carry out. In [16], the authors assumed a concrete arch to be regular and analyzed the deformation behavior by comparing fitted second-degree polynomial surfaces. The more involved fitting of B-splines and non-uniform rational basis splines is often preferred for modeling geometrically complicated objects. In this context, much research has focused on the optimization of the mathematical and stochastic models. Bureick et al. [17] optimized free-form curve approximation by means of an optimal selection of the knot vector. Furthermore, Harmening and Neuner [18] improved the parametrization process in B-spline surface fitting by using an object-oriented approach instead of focusing on a superior coordinate system, thereby enabling the generated parameters to reflect the features of the object realistically. Moreover, Zhao et al. [19] suggested a new stochastic model for TLS measurements and used the resulting covariance matrix within the least-squares estimation of a B-spline curve.
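To make the idea of polynomial surface approximation concrete, the following minimal sketch fits a second-degree polynomial surface z = f(x, y) to scattered points by ordinary least squares via the normal equations. It is an illustration only, not the implementation used in the cited works: the function names (`design_row`, `solve_linear`, `fit_quadratic_surface`), the explicit form z = f(x, y), and the assumption of equally weighted, uncorrelated observations are all choices made for this example.

```python
def design_row(x, y):
    """Basis functions of a second-degree polynomial surface z = f(x, y)."""
    return [1.0, x, y, x * x, x * y, y * y]


def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def fit_quadratic_surface(points):
    """Least-squares coefficients for points given as (x, y, z) tuples.

    Assumes equal weights (identity covariance matrix), which is a
    simplification made for this sketch.
    """
    rows = [design_row(x, y) for x, y, _ in points]
    k = len(rows[0])
    normal = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    rhs = [sum(r[i] * p[2] for r, p in zip(rows, points)) for i in range(k)]
    return solve_linear(normal, rhs)


# Usage with synthetic, noise-free points on a known surface (hypothetical data).
true_coeffs = [1.0, 2.0, -1.0, 0.5, 0.25, -0.1]
points = [
    (float(x), float(y), sum(c * b for c, b in zip(true_coeffs, design_row(x, y))))
    for x in range(5)
    for y in range(5)
]
estimated = fit_quadratic_surface(points)
print(estimated)
```

Solving the normal equations directly becomes numerically fragile as the polynomial degree grows, so an orthogonal decomposition would usually be preferred for higher-degree surfaces.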

The need for model selection and statistical validation was emphasized by Wunderlich et al. [20], who described the deficiencies in current areal deformation analysis and presented possible strategies to improve this situation. Typically, the selection of a surface model depends on the object features, for example, whether the surface is regular or irregular. However, in most cases, it is unclear whether the object is smooth enough to be described by a simple model (e.g., a low-order, global polynomial surface) or not. This limitation serves as the motivation for discriminating between estimated surface models in order to select the most appropriate one. In the context of model selection, Harmening and Neuner [21,22] investigated statistical methods based on information criteria and statistical learning theory for selecting the optimal number of control points within B-spline surface estimation. Another possibility is to compare the (log-)likelihoods of competing models directly by means of the general testing principle of D.R. Cox [23]. Williams [24] improved Cox’s test by using Monte Carlo simulation, which is straightforward to implement. This kind of test has already been used in Zhao et al. [19] to select the best-fitting stochastic model for B-spline curve estimation. Vuong [25] used likelihood-ratio-based statistics to discriminate between competing models based on the Kullback–Leibler information criterion. Such hypothesis tests offer the advantage that significant probabilistic differences between models can be detected, which is information that the methods mentioned previously do not provide.

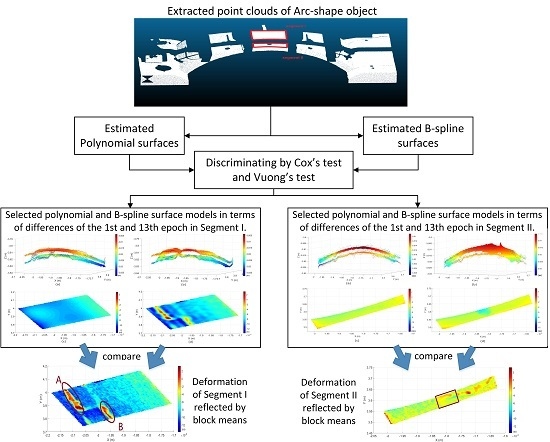

The motivation of this paper lies in the selection of the most parsimonious, yet sufficiently accurate, parametric description of a structure based on TLS measurements; the selected model is then used to reflect the surface-based deformation of the measured object. In contrast to standard model selection procedures based on information criteria, we introduce two likelihood-ratio tests from D.R. Cox and Q.H. Vuong, which are instantiated in numerical examples to discriminate statistically between widely used polynomial and B-spline surfaces as models of given TLS point clouds. The selected surface model’s performance in reflecting deformation is compared with the result of the block-means approach. The paper is organized as follows. In Section 2, the methodology of surface approximation and model selection is reviewed and explained. This methodology is instantiated in two numerical examples by discriminating between widely used polynomial and B-spline surfaces. The evaluation of the approximated surface models as well as their performance in deformation analysis are given as results in Section 3. The subsequent Section 4 provides a further discussion of the results and a comparison with results obtained by some well-known penalization information criterion approaches. Finally, conclusions are drawn in Section 5.

4. Discussion

In our numerical example of Segment I, the different results of the two hypothesis tests are caused by the penalty term on the number of parameters. In Table 2, for example, the two tests disagree for Pair III. In Cox’s test, the fourth-degree polynomial model is rejected because of its relatively poor accuracy, whereas Vuong’s test recommends neither model, because the improved accuracy is offset by the penalty for the additional parameters. Similarly, in Table 3, both tests initially agree that the B-spline models are better than the fourth-degree polynomial model. However, as the number of parameters increases, the improvement in model accuracy declines. Finally, in Vuong’s test, the advantage in model quality is again offset by the large penalty value, which leads to a decision that differs from that of Cox’s test in Pair 36.

Although Cox’s test, which has no penalty term, is limited to discriminating between models with similar numbers of parameters, it is very straightforward to implement in practice and is able to offer more reliable decisions by simulating the test distribution, especially when the sample size is small [33]. We expect to improve the simulation-based version of Cox’s test by adding a proper correction factor similar to that in Vuong’s non-nested hypothesis test; this is left for future research.

Since previous geodetic literature [21,22,28] has solved the model selection problem through well-known penalization information criteria, namely the AIC and BIC, it is necessary in this section to compare Vuong’s non-nested hypothesis test with this widely used approach. There are close connections between the AIC, the BIC, and Vuong’s test. Taking the BIC as an example, the value for Model 1 is calculated as

$$\mathrm{BIC}_1 = -2 \log L_1(\hat{\theta}_1) + p_1 \log N,$$

where $L_1(\hat{\theta}_1)$ is the maximum value of the likelihood function for Model 1, $p_1$ denotes the number of parameters, and $N$ is the number of measurements. The difference in BIC between two models is calculated as

$$\mathrm{BIC}_1 - \mathrm{BIC}_2 = -2 \log \frac{L_1(\hat{\theta}_1)}{L_2(\hat{\theta}_2)} + (p_1 - p_2) \log N,$$

where the first term on the right-hand side contains the logarithmized likelihood ratio $\log \left[ L_1(\hat{\theta}_1) / L_2(\hat{\theta}_2) \right]$ in Equation (16), so that $-\tfrac{1}{2}(\mathrm{BIC}_1 - \mathrm{BIC}_2)$ is equal to the (un-normalized) adjusted test statistic for Vuong’s test. The main difference is that Vuong’s test makes judgments in a framework of likelihood-ratio hypothesis testing, which offers the advantage that significant probabilistic differences between models can be detected; classical penalization information criterion methods do not provide this. We compared Vuong’s test results with both the AIC and the BIC to discriminate between B-spline surface models, and the result is shown in Figure 12. According to the BIC curve, the B-spline model with 361 parameters (n = 18, m = 18) is optimal, since it is associated with the smallest value. This result is quite consistent with the judgment of Vuong’s test, because the BIC penalty term is used in our adjusted test statistic. By contrast, the AIC tends to prefer much larger models.
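The connection between the BIC and Vuong’s adjusted statistic described above can be checked numerically. The sketch below is a simplified illustration and not the procedure of this paper: it assumes independent Gaussian residuals with a common variance estimated by maximum likelihood, uses the BIC-type correction in Vuong’s statistic, and all function names and residual values are hypothetical.

```python
import math


def gaussian_loglik_terms(residuals):
    """Pointwise Gaussian log-likelihoods using the ML variance estimate.

    Assumption for this sketch: i.i.d. residuals with a common variance.
    """
    n = len(residuals)
    var = sum(r * r for r in residuals) / n
    return [-0.5 * (math.log(2.0 * math.pi * var) + r * r / var) for r in residuals]


def aic(loglik, n_params):
    """Akaike information criterion."""
    return -2.0 * loglik + 2.0 * n_params


def bic(loglik, n_params, n_obs):
    """Bayesian information criterion."""
    return -2.0 * loglik + n_params * math.log(n_obs)


def vuong_adjusted(ll1, ll2, p1, p2):
    """Return (un-normalized adjusted LR, normalized Vuong statistic).

    ll1, ll2: pointwise log-likelihoods of Model 1 and Model 2.
    p1, p2: numbers of parameters; the correction term is BIC-type.
    """
    n = len(ll1)
    diffs = [a - b for a, b in zip(ll1, ll2)]
    lr = sum(diffs)
    adjusted_lr = lr - 0.5 * (p1 - p2) * math.log(n)
    mean = lr / n
    omega = math.sqrt(sum((d - mean) ** 2 for d in diffs) / n)
    return adjusted_lr, adjusted_lr / (math.sqrt(n) * omega)


# Hypothetical residuals of two competing surface models on the same points.
res1 = [0.10, -0.20, 0.05, 0.15, -0.10, 0.00, 0.20, -0.05]
res2 = [0.30, -0.40, 0.20, 0.35, -0.30, 0.10, 0.45, -0.25]
p1, p2 = 5, 3  # hypothetical parameter counts

ll1 = gaussian_loglik_terms(res1)
ll2 = gaussian_loglik_terms(res2)
bic1 = bic(sum(ll1), p1, len(res1))
bic2 = bic(sum(ll2), p2, len(res2))
adjusted_lr, z = vuong_adjusted(ll1, ll2, p1, p2)

# The un-normalized adjusted statistic equals -(BIC_1 - BIC_2) / 2.
print(adjusted_lr, -0.5 * (bic1 - bic2), z)
```

Under these assumptions, the normalized statistic would be compared with standard normal quantiles, which is what allows a statement about significance that a plain BIC comparison does not give.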

Furthermore, the performance of the best-fitting polynomial and B-spline surfaces in reflecting deformation was compared. The superior model was the one able to reflect the deformation details recorded by the point clouds. In order to obtain an exact mutual spatial referencing of points in the different epochs, we used the block-mean approach to approximate the point-wise changes. The comparison results for Segment I indicated that the selected B-spline surfaces can reflect the actual deformation, especially in Areas A and B of Figure 8, while the best-fitting polynomial model failed to offer this information because of its global smoothing effect. However, in the case of Segment II, the B-spline models failed to reflect the actual deformation values, especially at the edges of the data gap.
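The block-mean comparison mentioned above can be sketched as follows. This is a simplified illustration under assumptions that are not taken from the paper: blocks are square cells in the xy-plane, the deformation is measured along z, and the names `block_means` and `block_mean_differences` as well as the sample coordinates are hypothetical.

```python
import math
from collections import defaultdict


def block_means(points, block_size):
    """Mean z value of all (x, y, z) points falling into each square xy block."""
    sums = defaultdict(lambda: [0.0, 0])  # block index -> [sum of z, count]
    for x, y, z in points:
        key = (math.floor(x / block_size), math.floor(y / block_size))
        sums[key][0] += z
        sums[key][1] += 1
    return {key: total / count for key, (total, count) in sums.items()}


def block_mean_differences(epoch1, epoch2, block_size):
    """Per-block change in mean z for blocks observed in both epochs."""
    m1 = block_means(epoch1, block_size)
    m2 = block_means(epoch2, block_size)
    return {key: m2[key] - m1[key] for key in m1.keys() & m2.keys()}


# Hypothetical point clouds from two epochs.
epoch1 = [(0.1, 0.1, 1.0), (0.4, 0.3, 1.2), (1.2, 0.2, 2.0)]
epoch2 = [(0.2, 0.2, 1.3), (0.3, 0.4, 1.5), (1.5, 0.5, 2.0), (5.0, 5.0, 9.0)]
changes = block_mean_differences(epoch1, epoch2, block_size=1.0)
print(changes)
```

Blocks that contain points in only one epoch are dropped in this sketch, so a data gap simply produces no block-mean value there.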

5. Conclusions

In this paper, we approximated point cloud data of a surveyed arch structure by two common surface models: polynomials and B-splines. Subsequently, we compared different adjusted surface models via Cox’s test and Vuong’s test to select an appropriate parametric model, which was sufficient to describe the geometrical features of the two target segments.

Regarding Segment I, in the initial comparison between lower-degree polynomial and B-spline models, none of the B-spline models investigated was rejected, but only the polynomial model of degree 3 was found to be adequate in Cox’s test, while Vuong’s test indicated no significant difference in Pairs II and III. However, none of these models could reflect detailed geometrical features of the target segments. Since it was not possible to increase the degree of the polynomial approximation (due to numerical instability of the normal equations) for modeling geometrical details, B-splines were recommended in the field of applications presented. That motivated us to search for an optimal model that balances approximation quality against complexity. According to Vuong’s test decisions, the B-spline surface model with was considered as the optimal one in the specific numerical example.

The model selection test results for Segment II were quite different from those for Segment I. All the B-spline models were rejected by Cox’s test, while in Pairs II and III, the equivalent polynomial surfaces were preferred by Vuong’s test, as a consequence of the aforementioned numerical instabilities in the knot vector determination and the resulting oscillation effects. Such deficiencies were clearly reflected by the model selection tests, which rejected inadequate B-spline models.

A consistent model selection result was obtained by comparing Vuong’s test decision with the widely used BIC in discriminating between B-spline surface models. Thus, it is concluded that the alternative model selection methodology elaborated in this paper, in parallel with well-known penalization information criteria, can effectively guide practitioners in selecting a parsimonious and accurate model for structures such as the arch in the numerical example presented. The main difference is that Vuong’s test makes judgments in a framework of likelihood-ratio hypothesis testing, which can detect significant probabilistic differences between models. It was shown here that the models selected by the model selection tests perform well in reflecting actual deformation.

The model selection methodology is applicable not only to TLS data but also to point clouds obtained by other LiDAR technology, such as airborne laser scanning and mobile laser scanning. There are also distribution-free hypothesis tests, such as Clarke’s test [34], available for mixed distribution observations.