Salient Object Detection via Recursive Sparse Representation

Abstract

1. Introduction

- (1) Local contrast methods are designed around the local extremum operation, so only the most distinct part of the object tends to be highlighted, and they are unable to uniformly evaluate the saliency of the entire object region.

- (2) Global contrast methods aim to capture the holistic rarity of an image, which mitigates the deficiency of the local contrast methods described above to a certain extent. However, they remain ineffective in comparing different contrast values when detecting multiple objects, especially objects with large dissimilarity.

- (3) Boundary prior-based saliency computation may fail when the objects touch the image boundaries.

- (1) Both background and foreground dictionaries are generated, and the separate reconstructions based on them are combined to enhance the stability of the sparse representation.

- (2) The traditional eye-fixation results [5] are introduced to extract the initial background and foreground dictionaries. Compared with previous related methods such as [31], which use only the boundary prior, the proposed RSR method is expected to be more robust, especially for images whose salient objects touch the boundaries.

- (3) A recursive processing step is used to optimize the final detection results and to weaken the dependence on the initial saliency map obtained from the eye-fixation results.

1.1. Related Works

1.1.1. The Previous Saliency Detection Methods

1.1.2. Saliency Detection and Remote Sensing

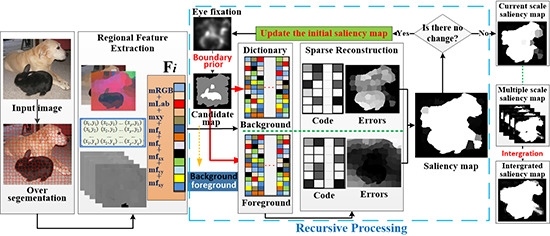

1.2. The Proposed Approach

2. Methodology

2.1. Regional Feature Extraction

2.2. Background and Foreground-Based Sparse Representation

2.3. Dictionary Building

- (1) Extracting the regions that touch the image boundaries as the boundary region set;

- (2) Calculating the regional fixation level by averaging the eye-fixation values of the pixels in each region, which gives one fixation level per region;

- (3) Setting a coefficient of proportionality, sorting the regional fixation levels, and taking that proportion of regions with the smallest levels as background candidates and the same proportion with the largest levels as foreground candidates.
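The three steps above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the authors' implementation: the function names (`regional_fixation`, `boundary_regions`, `rank_candidates`) are hypothetical, the superpixel label image is assumed to use integer labels 0..N-1, and the default ratio of 0.2 is taken from the parameter table.

```python
import numpy as np

def regional_fixation(fixation_map, labels):
    """Step 2: mean eye-fixation value of the pixels in each region."""
    flat = np.asarray(labels).ravel()
    n_regions = int(flat.max()) + 1
    weights = np.asarray(fixation_map, dtype=float).ravel()
    sums = np.bincount(flat, weights=weights, minlength=n_regions)
    counts = np.bincount(flat, minlength=n_regions)
    return sums / np.maximum(counts, 1)  # guard against unused labels

def boundary_regions(labels):
    """Step 1: labels of the regions that touch any image border."""
    labels = np.asarray(labels)
    border = np.concatenate(
        [labels[0, :], labels[-1, :], labels[:, 0], labels[:, -1]]
    )
    return np.unique(border)

def rank_candidates(levels, ratio=0.2):
    """Step 3: regions with the lowest and highest fixation levels.

    Returns (low_idx, high_idx), each holding round(ratio * N) region
    indices (at least one). Treating them as background and foreground
    candidates is an interpretation of the text, not a confirmed detail.
    """
    levels = np.asarray(levels, dtype=float)
    k = max(1, int(round(ratio * levels.size)))
    order = np.argsort(levels, kind="stable")  # ascending fixation level
    return order[:k], order[-k:]
```

How the boundary set is merged with the low-fixation candidates to form the final background dictionary is not recoverable from the text, so the sketch returns the pieces separately.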

2.4. Salient Object Detection by Sparse Representation

2.5. Recursive Processing and Integrated Saliency Map Generation

| Algorithm 1 | |
|---|---|
| 1. | Input: three-band color image I |
| 2. | Output: final saliency map FSM |
| 3. | S = super-pixel-segmentation(I) // over-segmentation |
| 4. | Regional features FS = {F1, F2, …, FN} // Fi = [R, G, B, L, a, b, x, y, fx, fy, fxx, fyy, fxy], regional mean |
| 5. | Initial saliency map ISM = IT eye-fixation result // regional mean, initialization |
| 6. | Repeat { |
| 7. | 1) Boundary prior + ISM ⇒ Db, Df // dictionary extraction, |
| 8. | // Db is the background dictionary and Df is the foreground dictionary |
| 9. | 2) Db, Df + FS → Errb & Errf // sparse representation |
| 10. | // Errb and Errf are the reconstruction errors based on Db and Df |
| 11. | 3) Errb/(Errf + a) → current saliency map CSM // a is a small positive decimal |
| 12. | 4) If CSM ≅ ISM (the similarity is compared to RPcorr) then break |
| 13. | else continue end |
| 14. | 5) If number of repeats < Threshold |
| 15. | then ISM = CSM and continue |
| 16. | else break end } |
| 17. | FSM = Last(CSM) |
| 18. | Return FSM |
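The loop in Algorithm 1 can be sketched as follows. This is a hedged illustration under several assumptions that the source does not confirm: ISTA is used as a stand-in sparse solver, the background dictionary is taken as the boundary regions plus the lowest-ranked regions minus the foreground atoms, each map is min-max normalized, the similarity test uses the Pearson correlation, and the lower iteration threshold is read as a minimum number of passes. All function and parameter names are hypothetical; the default values come from the parameter table.

```python
import numpy as np

def sparse_code(D, x, lam=0.01, n_iter=100):
    """ISTA for min_a 0.5*||x - D a||^2 + lam*||a||_1 (stand-in solver)."""
    step = 1.0 / (np.linalg.norm(D, 2) ** 2 + 1e-12)  # 1 / Lipschitz constant
    a = np.zeros(D.shape[1])
    for _ in range(n_iter):
        z = a - step * (D.T @ (D @ a - x))            # gradient step
        a = np.sign(z) * np.maximum(np.abs(z) - lam * step, 0.0)  # shrinkage
    return a

def reconstruction_errors(features, atom_idx, lam=0.01):
    """Squared reconstruction error of every region over the chosen atoms."""
    D = features[atom_idx].T                          # columns are atoms
    return np.array([np.sum((x - D @ sparse_code(D, x, lam)) ** 2)
                     for x in features])

def recursive_saliency(features, ism, touches_boundary, ratio=0.2, a=0.1,
                       corr_thresh=0.9999, min_iter=3, max_iter=10):
    """Return (saliency per region, number of passes performed)."""
    features = np.asarray(features, dtype=float)
    ism = np.asarray(ism, dtype=float)
    k = max(1, int(round(ratio * ism.size)))
    for it in range(1, max_iter + 1):
        order = np.argsort(ism, kind="stable")
        fg = order[-k:]                               # highest current saliency
        # Assumed combination rule for the background dictionary.
        bg = np.union1d(np.flatnonzero(touches_boundary), order[:k])
        bg = np.setdiff1d(bg, fg)
        err_b = reconstruction_errors(features, bg)
        err_f = reconstruction_errors(features, fg)
        csm = err_b / (err_f + a)                     # step 3 of Algorithm 1
        csm = (csm - csm.min()) / (csm.max() - csm.min() + 1e-12)
        corr = np.corrcoef(csm, ism)[0, 1]            # similarity to previous map
        if it >= min_iter and corr >= corr_thresh:
            break
        ism = csm
    return csm, it
```

A region that is poorly reconstructed from the background dictionary but well reconstructed from the foreground dictionary receives a large ratio, which is why the two errors are combined as a quotient rather than used separately.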

3. Experimental Results and Analysis

3.1. Datasets

3.2. Evaluation Measures

3.3. Experimental Parameter Settings

3.4. Visual Comparison on the Benchmark Datasets

3.5. Quantitative Comparison on the Benchmark Datasets

3.6. Comparison of Results on the Remote-Sensing Datasets

3.7. Limitations and Shortcomings

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Borji, A.; Itti, L. State-of-the-art in visual attention modeling. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 185–207.

2. Borji, A.; Sihite, D.N.; Itti, L. Quantitative analysis of human model agreement in visual saliency modeling: A comparative study. IEEE Trans. Image Process. 2013, 22, 55–69.

3. Hayhoe, M.; Ballard, D. Eye movements in natural behavior. Trends Cognit. Sci. 2005, 9, 188–194.

4. Itti, L.; Koch, C. Computational modelling of visual attention. Nature Rev. Neurosci. 2001, 2, 194–203.

5. Itti, L.; Koch, C.; Niebur, E. A model of saliency-based visual attention for rapid scene analysis. IEEE Trans. Pattern Anal. Mach. Intell. 1998, 20, 1254–1259.

6. Xiang, D.L.; Tang, T.; Ni, W.P.; Zhang, H.; Lei, W.T. Saliency Map Generation for SAR Images with Bayes Theory and Heterogeneous Clutter Model. Remote Sens. 2017, 9, 1290.

7. Dong, C.; Liu, J.H.; Xu, F. Ship Detection in Optical Remote Sensing Images Based on Saliency and a Rotation-Invariant Descriptor. Remote Sens. 2018, 10, 400.

8. Goferman, S.; Zelnik-Manor, L.; Tal, A. Context-aware saliency detection. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 32, 1915–1925.

9. Jiang, H.Z.; Wang, J.D.; Yuan, Z.J.; Liu, T.; Zheng, N.N.; Li, S.P. Automatic salient object segmentation based on context and shape prior. BMVC 2011, 6, 9–20.

10. Achanta, R.; Hemami, S.; Estrada, F.; Susstrunk, S. Frequency-tuned salient region detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 1597–1604.

11. Cheng, M.M.; Zhang, G.X.; Mitra, N.J.; Huang, X.L.; Hu, S.M. Global contrast based salient region detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Colorado Springs, CO, USA, 20–25 June 2011; pp. 569–582.

12. Perazzi, F.; Krahenbuhl, P.; Pritch, Y.; Hornung, A. Saliency filters: Contrast based filtering for salient region detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 733–740.

13. Lu, Y.; Zhang, W.; Lu, H.; Xue, X.Y. Salient object detection using concavity context. In Proceedings of the IEEE International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 233–240.

14. Yang, C.; Zhang, L.H.; Lu, H.C. Graph-regularized saliency detection with convex-hull-based center prior. IEEE Signal Process. Lett. 2013, 20, 637–640.

15. Borji, A. Boosting bottom-up and top-down visual features for saliency estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 438–445.

16. Yang, J.M.; Yang, M.H. Top-down visual saliency via joint CRF and dictionary learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 2296–2303.

17. Liu, T.; Yuan, Z.J.; Sun, J.; Wang, J.D.; Zheng, N.N.; Tang, X.O.; Shum, H.Y. Learning to detect a salient object. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 353–367.

18. Borji, A.; Cheng, M.M.; Jiang, H.Z.; Li, J. Salient Object Detection: A Benchmark. IEEE Trans. Image Proc. 2015, 24, 5706–5722.

19. Ohm, K.; Lee, M.; Lee, Y.; Kim, S. Salient object detection using recursive regional feature clustering. Inf. Sci. 2017, 387, 1–18.

20. Hu, P.; Shuai, B.; Liu, J.; Wang, G. Deep level sets for salient object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2300–2309.

21. Gong, X.; Xie, Z.; Liu, Y.Y.; Shi, X.G.; Zheng, Z. Deep salient feature based anti-noise transfer network for scene classification of remote sensing imagery. Remote Sens. 2018, 10, 410.

22. Hou, Q.B.; Cheng, M.M.; Hu, X.W.; Borji, A.; Tu, Z.W.; Torr, P.H.S. Deeply supervised salient object detection with short connections. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3203–3212.

23. Alpert, S.; Galun, M.; Basri, R.; Brant, A. Image segmentation by probabilistic bottom-up aggregation and cue integration. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8.

24. Jiang, H.Z.; Wang, J.D.; Yuan, Z.J.; Wu, Y.; Zheng, N.N.; Li, S.P. Salient object detection: A discriminative regional feature integration approach. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 2083–2090.

25. Li, Z.C.; Qin, S.Y.; Itti, L. Visual attention guided bit allocation in video compression. Image Vis. Comput. 2011, 29, 1–14.

26. Oh, K.H.; Lee, M.; Kim, G.; Kim, S. Detection of multiple salient objects through the integration of estimated foreground clues. Image Vis. Comput. 2016, 54, 31–44.

27. Shi, J.P.; Yan, Q.; Xu, L.; Jia, J.Y. Hierarchical image saliency detection on extended CSSD. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 717–729.

28. Wei, Y.C.; Wen, F.; Zhu, W.J.; Sun, J. Geodesic saliency using background priors. In Proceedings of the European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; pp. 29–42.

29. Zhu, W.J.; Liang, S.; Wei, Y.C.; Sun, J. Saliency optimization from robust background detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 2814–2821.

30. Li, H.; Wu, E.H.; Wu, W. Salient region detection via locally smoothed label propagation with application to attention driven image abstraction. Neurocomputing 2017, 230, 359–373.

31. Li, X.H.; Lu, H.C.; Zhang, L.H.; Ruan, X.; Yang, M.S. Saliency detection via dense and sparse reconstruction. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, NSW, Australia, 1–8 December 2013; pp. 2976–2983.

32. Achanta, R.; Estrada, F.; Wils, P.; Süsstrunk, S. Salient region detection and segmentation. In Proceedings of the International Conference on Computer Vision Systems, Santorini, Greece, 12–15 May 2008; pp. 66–75.

33. Cheng, M.M.; Mitra, N.J.; Huang, X.L.; Torr, P.H.S.; Hu, S.M. Global contrast based salient region detection. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 569–582.

34. Koch, C.; Ullman, S. Shifts in selective visual attention: Towards the underlying neural circuitry. Hum. Neurobiol. 1985, 4, 219–227.

35. Harel, J.; Koch, C.; Perona, P. Graph-based visual saliency. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 4–5 December 2006; pp. 545–552.

36. Jiang, B.W.; Zhang, L.H.; Lu, H.C.; Yang, C.; Yang, M.S. Saliency detection via absorbing Markov chain. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, NSW, Australia, 1–8 December 2013; pp. 1665–1672.

37. Chen, J.Z.; Ma, B.P.; Cao, H.; Chen, J.; Fan, Y.B.; Li, R.; Wu, W.M. Updating initial labels from spectral graph by manifold regularization for saliency detection. Neurocomputing 2017, 266, 79–90.

38. Zhang, J.X.; Ehinger, K.A.; Wei, H.K.; Zhang, K.J.; Yang, J.Y. A novel graph-based optimization framework for salient object detection. Pattern Recognit. 2017, 64, 39–50.

39. He, Z.Q.; Jiang, B.; Xiao, Y.; Ding, C.; Luo, B. Saliency detection via a graph based diffusion model. In Proceedings of the International Workshop on Graph-Based Representations in Pattern Recognition, Anacapri, Italy, 16–18 May 2017; pp. 3–12.

40. Yan, Q.; Xu, L.; Shi, J.P.; Jia, J.Y. Hierarchical saliency detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 1155–1162.

41. Judd, T.; Durand, F.; Torralba, A. A Benchmark of Computational Models of Saliency to Predict Human Fixations; Technical Report; Creative Commons: Los Angeles, CA, USA, 2012.

42. Borji, A.; Sihite, D.N.; Itti, L. Salient object detection: A benchmark. In Proceedings of the European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; pp. 414–429.

43. Peng, H.W.; Li, B.; Ling, H.B.; Hu, W.M.; Xiong, W.H.; Maybank, S.J. Salient object detection via structured matrix decomposition. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 818–832.

44. Zhang, L.B.; Lv, X.R.; Liang, X. Saliency analysis via hyperparameter sparse representation and energy distribution optimization for remote sensing images. Remote Sens. 2017, 9, 636.

45. Hu, Z.P.; Zhang, Z.B.; Sun, Z.; Zhao, S.H. Salient object detection via sparse representation and multi-layer contour zooming. IET Comput. Vis. 2017, 11, 309–318.

46. Tan, Y.H.; Li, Y.S.; Chen, C.; Yu, J.G.; Tian, J.W. Cauchy graph embedding based diffusion model for salient object detection. JOSA A 2016, 33, 887–898.

47. Li, Y.S.; Tan, Y.H.; Deng, J.J.; Wen, Q.; Tian, J.W. Cauchy graph embedding optimization for built-up areas detection from high-resolution remote sensing images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 2078–2096.

48. Brunner, D.; Lemoine, G.; Bruzzone, L. Earthquake damage assessment of buildings using VHR optical and SAR imagery. IEEE Trans. Geosci. Remote Sens. 2010, 48, 2403–2420.

49. Tan, K.; Zhang, Y.J.; Tong, X. Cloud extraction from Chinese high resolution satellite imagery by probabilistic latent semantic analysis and object-based machine learning. Remote Sens. 2016, 8, 963.

50. Patra, S.; Bruzzone, L. A novel SOM-SVM-based active learning technique for remote sensing image classification. IEEE Trans. Geosci. Remote Sens. 2014, 52, 6899–6910.

51. Duan, L.J.; Wu, C.P.; Miao, J.; Qing, L.Y.; Fu, Y. Visual saliency detection by spatially weighted dissimilarity. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Colorado Springs, CO, USA, 20–25 June 2011; pp. 473–480.

52. Achanta, R.; Shaji, A.; Smith, K.; Lucchi, A.; Fua, P.; Susstrunk, S. SLIC superpixels compared to state-of-the-art superpixel methods. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2274–2282.

53. Oh, K.H.; Kim, S.H.; Kim, Y.C.; Lee, Y.R. Detection of multiple salient objects by categorizing regional features. KSII Trans. Internet Inf. Syst. 2016, 10, 272–287.

| Image Parameters | GF-1 | UAV |
|---|---|---|
| Product level | 1A | Original image |
| Number of bands | 4 | 3 |
| Spatial resolution (m) | 8 | 0.6 |
| Original image size | 4548 × 500 | 6000 × 4000 |
| Experimental image cutting size | 1000 × 800 | 1000 × 666 |
| Land-cover type | Buildings + mountains + water | Buildings |

| Parameter | Value |
|---|---|
| Superpixel number of the SLIC segmentation | 100, 200, 300, 400 |
| Coefficient of proportionality in dictionary extraction | 0.2 |
| Regularization parameter in sparse representation | 0.01 |
| Regulatory factor in saliency map computation | 0.1 |
| Weight value in PR computation | 0.3 |
| Similarity coefficient threshold in recursive processing | 0.9999 |
| Iteration times, upper threshold in recursive processing | 10 |
| Iteration times, lower threshold in recursive processing | 3 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhang, Y.; Wang, X.; Xie, X.; Li, Y. Salient Object Detection via Recursive Sparse Representation. Remote Sens. 2018, 10, 652. https://doi.org/10.3390/rs10040652
