Learning Control Policies of Driverless Vehicles from UAV Video Streams in Complex Urban Environments

Abstract

1. Introduction

- A new framework for infrastructure-led policy learning is proposed to augment intelligent control algorithms of connected autonomous vehicles with situational knowledge specific to a selected geo-location;

- A novel deep imitation learning framework based on long short-term memory (LSTM) networks [15] is proposed as a data-driven driving policy learning algorithm, trained on a new data set captured by uncrewed aerial vehicles (UAVs).

2. Background and Related Work

2.1. Connected Intelligent Infrastructure as Enabler of Intelligent Mobility

2.2. Deep Learning Architectures for Autonomous Driving

2.3. Situational Intelligence for Driverless Vehicles in Intersections/Junctions

2.4. Neural Networks as Non-linear Function Approximators

2.5. Neural Network Models for Time Series Modelling

2.6. Summary

3. Proposed Framework for Infrastructure-led Driving Policy Learning

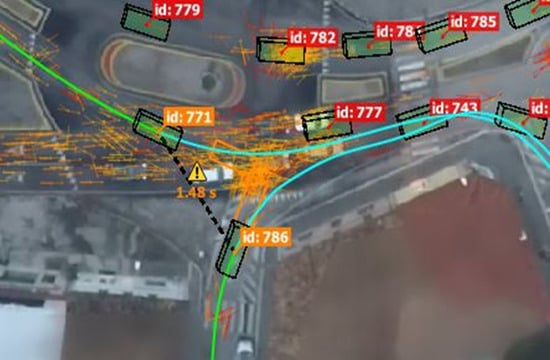

3.1. Data Capturing and Processing

3.2. Scene Perception and Extraction of Expert Data Trajectories

Algorithm 1. Extraction of Expert Data Trajectories

Input: Object tracking data: positions of vehicles at each timestep, each with a tracking ID
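
The input described for Algorithm 1 (per-timestep vehicle positions, each tagged with a tracking ID) suggests a grouping step along the following lines. This is only a minimal sketch, not the authors' algorithm: the record layout `(timestep, tracking_id, x, y)`, the function name `extract_trajectories`, and the `min_length` filter are all assumptions introduced for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Hypothetical record layout (an assumption): (timestep, tracking_id, x, y).
Detection = Tuple[int, int, float, float]
Trajectory = List[Tuple[int, float, float]]


def extract_trajectories(
    detections: Iterable[Detection], min_length: int = 2
) -> Dict[int, Trajectory]:
    """Group per-timestep positions into one time-ordered trajectory per tracking id."""
    grouped: Dict[int, Trajectory] = defaultdict(list)
    for timestep, track_id, x, y in detections:
        grouped[track_id].append((timestep, x, y))

    trajectories: Dict[int, Trajectory] = {}
    for track_id, points in grouped.items():
        points.sort(key=lambda point: point[0])  # order each track by timestep
        if len(points) >= min_length:  # assumed filter: drop very short tracks
            trajectories[track_id] = points
    return trajectories


if __name__ == "__main__":
    sample = [
        (1, 7, 10.0, 5.0),
        (0, 7, 9.0, 5.0),
        (0, 3, 2.0, 1.0),
        (2, 7, 11.0, 5.5),
    ]
    for track_id, path in sorted(extract_trajectories(sample).items()):
        print(track_id, path)
```

With the sample above, vehicle 3 is dropped because it has a single observation, and vehicle 7 yields one trajectory ordered by timestep.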

3.3. Data-Driven Policy Learning

3.4. Application Layer

4. Experimental Details

4.1. The Dataset

4.2. Data Pre-processing

4.3. The Neural Network Model Building

- Changing the architecture by adding/removing/shuffling layers;

- Changing learning rate, decay rate, and different loss functions;

- Increasing or decreasing number of epochs and/or batch size;

- Changing data pre-processing, timesteps, sequence size, decimal places.
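
The pre-processing knobs listed above (timesteps, sequence size, decimal places) can be illustrated with a small windowing helper. This is a sketch under stated assumptions rather than the pipeline used in the paper: the function name `make_sequences`, the `stride` parameter, and the default of four decimal places are hypothetical; the window length of 100 and the 12 features per timestep follow the input shape (100, 12) reported in the model tables.

```python
from typing import List, Sequence


def make_sequences(
    trajectory: Sequence[Sequence[float]],
    sequence_length: int = 100,
    stride: int = 100,
    decimals: int = 4,
) -> List[List[List[float]]]:
    """Cut one trajectory (one feature row per timestep) into fixed-length windows.

    Values are rounded to `decimals` places; trailing timesteps that do not
    fill a complete window are discarded.
    """
    windows = []
    for start in range(0, len(trajectory) - sequence_length + 1, stride):
        window = [
            [round(value, decimals) for value in row]
            for row in trajectory[start:start + sequence_length]
        ]
        windows.append(window)
    return windows


if __name__ == "__main__":
    # Synthetic trajectory: 250 timesteps, 12 features each (illustrative values only).
    trajectory = [[t + 0.123456] * 12 for t in range(250)]
    windows = make_sequences(trajectory)
    print(len(windows), len(windows[0]), len(windows[0][0]))
```

Changing `sequence_length`, `stride`, or `decimals` here corresponds to the last kind of experiment in the list; a stride smaller than the window length produces overlapping sequences and therefore more training samples from the same trajectory.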

5. Results and Discussion

5.1. Driving Policy Learning

5.2. Comparison of the Models

5.3. Discussion of Results

5.4. Limitations and Future Work

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Yigitcanlar, T.; Wilson, M.; Kamruzzaman, M. Disruptive Impacts of Automated Driving Systems on the Built Environment and Land Use: An Urban Planner’s Perspective. J. Open Innov. Technol. Mark. Complex. 2019, 5, 24.

2. De Silva, V.; Roche, J.; Kondoz, A. Robust Fusion of LiDAR and Wide-Angle Camera Data for Autonomous Mobile Robots. Sensors 2018, 18, 2730.

3. Self-Driving Cars. Available online: https://www.autotrader.co.uk/content/features/self-driving-cars (accessed on 1 October 2019).

4. The Society of Motor Manufacturers and Traders. Connected and Autonomous Vehicles: Winning the Global Race to Market. 2019. Available online: https://www.smmt.co.uk/reports/connected-and-autonomous-vehicles-the-global-race-to-market/ (accessed on 1 October 2019).

5. North Avenue Smart Corridor: Intelligent Mobility Innovations in Atlanta Improving Safety and Efficiency. Available online: https://www.atkinsglobal.com/en-GB/angles/all-angles/north-ave-smart-corridor (accessed on 1 October 2019).

6. Geng, X.; Liang, H.; Yu, B.; Zhao, P.; He, L.; Huang, R. A Scenario-Adaptive Driving Behavior Prediction Approach to Urban Autonomous Driving. Appl. Sci. 2017, 7, 426.

7. Gopalswamy, S.; Rathinam, S. Infrastructure Enabled Autonomy: A Distributed Intelligence Architecture for Autonomous Vehicles. arXiv 2018, arXiv:1802.04112.

8. Driving Autonomous Vehicles Forward with Intelligent Infrastructure. Available online: https://www.smartcitiesworld.net/opinions/opinions/driving-autonomous-vehicles-forward-with-intelligent-infrastructure (accessed on 1 October 2019).

9. Coppola, R.; Morisio, M. Connected Car: Technologies, Issues, Future Trends. ACM Comput. Surv. 2016, 49, 46.

10. Ohio’s 33 Smart Mobility Corridor. Available online: https://www.33smartcorridor.com (accessed on 1 October 2019).

11. Honda’s Smart Intersection Previews the Future of Driving. Available online: https://www.motor1.com/news/269253/honda-smart-intersection-autonomous-driving/ (accessed on 1 October 2019).

12. Tesla Autonomy Investor Day. Available online: https://ir.tesla.com/events/event-details/tesla-autonomy-investor-day (accessed on 1 October 2019).

13. Sutton, R.S.; Barto, A.G. Reinforcement Learning; MIT Press: Cambridge, MA, USA, 1998; ISBN 978-0-262-19398-6.

14. Attia, A.; Dayan, S. Global overview of Imitation Learning. arXiv 2018, arXiv:1801.06503.

15. Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

16. Wuthishuwong, C.; Traechtler, A. Vehicle to infrastructure based safe trajectory planning for Autonomous Intersection Management. In Proceedings of the 2013 13th International Conference on ITS Telecommunications (ITST), Tampere, Finland, 5–7 November 2013; pp. 175–180.

17. Pourmehrab, M.; Elefteriadou, L.; Ranka, S. Smart intersection control algorithms for automated vehicles. In Proceedings of the 2017 Tenth International Conference on Contemporary Computing (IC3), Noida, India, 10–12 August 2017; pp. 1–6.

18. De Silva, V.; Wang, X.; Aladagli, D.; Kondoz, A.; Ekmekcioglu, E. An Agent-based Modelling Framework for Driving Policy Learning in Connected and Autonomous Vehicles. arXiv 2017, arXiv:1709.04622.

19. Bojarski, M.; Del Testa, D.; Dworakowski, D.; Firner, B.; Flepp, B.; Goyal, P.; Jackel, L.D.; Monfort, M.; Muller, U.; Zhang, J.; et al. End to End Learning for Self-Driving Cars. arXiv 2016, arXiv:1604.07316.

20. Li, L.; Ota, K.; Dong, M. Humanlike Driving: Empirical Decision-Making System for Autonomous Vehicles. IEEE Trans. Veh. Technol. 2018, 67, 6814–6823.

21. Fayjie, A.R.; Hossain, S.; Oualid, D.; Lee, D. Driverless Car: Autonomous Driving Using Deep Reinforcement Learning in Urban Environment. In Proceedings of the 2018 15th International Conference on Ubiquitous Robots (UR), Honolulu, HI, USA, 26–30 June 2018; pp. 896–901.

22. Wang, S.; Jia, D.; Weng, X. Deep Reinforcement Learning for Autonomous Driving. arXiv 2018, arXiv:1811.11329.

23. Isele, D.; Rahimi, R.; Cosgun, A.; Subramanian, K.; Fujimura, K. Navigating Occluded Intersections with Autonomous Vehicles using Deep Reinforcement Learning. arXiv 2017, arXiv:1705.01196.

24. Unity Technologies. Unity. Available online: https://unity.com/frontpage (accessed on 1 October 2019).

25. TORCS: The Open Racing Car Simulator. Available online: https://sourceforge.net/projects/torcs/ (accessed on 1 October 2019).

26. Sun, L.; Peng, C.; Zhan, W.; Tomizuka, M. A Fast Integrated Planning and Control Framework for Autonomous Driving via Imitation Learning. arXiv 2017, arXiv:1707.02515.

27. USA Highway 101 Dataset, FHWA-HRT-07-030. Available online: https://www.fhwa.dot.gov/publications/research/operations/07030/ (accessed on 1 October 2019).

28. An LSTM Network for Highway Trajectory Prediction. IEEE Conference Publication. Available online: https://ieeexplore.ieee.org/document/8317913 (accessed on 1 October 2019).

29. Dickson, B. The Predictions Were Wrong: Self-Driving Cars Have a Long Way to Go. 11 February 2019. Available online: https://www.pcmag.com/commentary/366394/the-predictions-were-wrong-self-driving-cars-have-a-long-wa (accessed on 1 October 2019).

30. Xu, H.; Gao, Y.; Yu, F.; Darrell, T. End-to-end Learning of Driving Models from Large-scale Video Datasets. arXiv 2016, arXiv:1612.01079.

31. Sama, K.; Morales, Y.; Akai, N.; Takeuchi, E.; Takeda, K. Retrieving a driving model based on clustered intersection data. In Proceedings of the 2018 3rd International Conference on Control and Robotics Engineering (ICCRE), Nagoya, Japan, 20–23 April 2018; pp. 222–226.

32. Phillips, D.J.; Wheeler, T.A.; Kochenderfer, M.J. Generalizable intention prediction of human drivers at intersections. In Proceedings of the 2017 IEEE Intelligent Vehicles Symposium (IV), Los Angeles, CA, USA, 11–14 June 2017; pp. 1665–1670.

33. Sze, V.; Chen, Y.-H.; Yang, T.-J.; Emer, J. Efficient Processing of Deep Neural Networks: A Tutorial and Survey. arXiv 2017, arXiv:1703.09039.

34. Ruder, S. An overview of gradient descent optimization algorithms. arXiv 2017, arXiv:1609.04747.

35. Written Memories: Understanding, Deriving and Extending the LSTM. R2RT. Available online: https://r2rt.com/written-memories-understanding-deriving-and-extending-the-lstm.html#backpropagation-through-time-and-vanishing-sensitivity (accessed on 1 October 2019).

36. Ahmed, Z. How to Visualize Your Recurrent Neural Network with Attention in Keras. Available online: https://medium.com/datalogue/attention-in-keras-1892773a4f22 (accessed on 1 October 2019).

37. Keras LSTM tutorial: How to easily build a powerful deep learning language model. Adventures Mach. Learn. 2018.

38. DataFromSky: Traffic Monitoring by a UAV. Available online: https://datafromsky.com/ (accessed on 1 October 2019).

39. Google Maps. Available online: https://www.google.com/maps/@52.6012995,-1.1224142,14z (accessed on 1 October 2019).

40. TensorFlow. Available online: https://www.tensorflow.org/ (accessed on 1 October 2019).

41. Keras Documentation. Available online: https://keras.io/ (accessed on 1 October 2019).

42. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2017, arXiv:1412.6980.

43. Welcome to Python.org. Available online: https://www.python.org/ (accessed on 1 October 2019).

44. Project Jupyter. Available online: https://www.jupyter.org (accessed on 1 October 2019).

| Feature Name | Layer | Value |
|---|---|---|
| Architecture | Layer 1 | LSTM(10, activation = 'relu', input_shape = (100, 12), return_sequences = True) |
| | Layer 2 | Dropout(0.2) |
| | Layer 3 | Batch Normalization |
| | Layer 4 | Dense(100, activation = 'softmax') |
| | Layer 5 | Dropout(0.2) |
| | Layer 6 | Time Distributed(Dense(2)) |
| Optimizer | | Adam(learning rate = 0.01, beta_1 = 0.9, beta_2 = 0.999, epsilon = 1 × 10⁻⁸, decay = 0.00) |
| Loss Function | | Mean Squared Error |
| Batch Size | | 2 |
| Epochs | | 50 |
| Sequence Length | | 100 |
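
To make the size of the configuration above concrete, the following sketch works out per-layer output shapes and parameter counts by hand. It is a back-of-the-envelope calculation, not output from the authors' code: it assumes the standard LSTM formulation with biases enabled, a batch normalization layer that stores four values per feature, and dense layers applied to the last axis at every timestep. The helper names (`lstm_params`, `dense_params`, `batch_norm_params`, `summarize_model`) are hypothetical.

```python
from typing import List, Tuple


def lstm_params(input_dim: int, units: int) -> int:
    # Four gates, each with input weights, recurrent weights and a bias vector.
    return 4 * (units * (input_dim + units) + units)


def dense_params(input_dim: int, units: int) -> int:
    # Weight matrix plus bias vector.
    return input_dim * units + units


def batch_norm_params(features: int) -> int:
    # Scale and shift (trainable) plus moving mean and variance (non-trainable).
    return 4 * features


def summarize_model(timesteps: int = 100, features: int = 12) -> List[Tuple[str, Tuple[int, int], int]]:
    """Return (layer name, output shape per sample, parameter count) for each layer."""
    return [
        ("LSTM(10)", (timesteps, 10), lstm_params(features, 10)),
        ("Dropout(0.2)", (timesteps, 10), 0),
        ("BatchNormalization", (timesteps, 10), batch_norm_params(10)),
        ("Dense(100, softmax)", (timesteps, 100), dense_params(10, 100)),
        ("Dropout(0.2)", (timesteps, 100), 0),
        ("TimeDistributed(Dense(2))", (timesteps, 2), dense_params(100, 2)),
    ]


if __name__ == "__main__":
    summary = summarize_model()
    for name, shape, count in summary:
        print(f"{name:28s} {str(shape):12s} {count:5d}")
    print("total parameters:", sum(count for _, _, count in summary))
```

Under these assumptions the network has 2262 parameters in total and emits two values per timestep, which matches the final Time Distributed(Dense(2)) layer in the table.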

| Feature Name | Layer | Value |
|---|---|---|
| Architecture | Layer 1 | LSTM(10, activation = 'tanh', input_shape = (100, 12), return_sequences = True) |
| | Layer 2 | Dropout(0.4) |
| | Layer 3 | LSTM(10, activation = 'relu', input_shape = (100, 12), return_sequences = True) |
| | Layer 4 | Time Distributed(Dense(2)) |

| Feature Name | Layer | Value |
|---|---|---|
| Architecture | Layer 1 | LSTM(10, activation = 'relu', input_shape = (100, 12), return_sequences = True) |
| | Layer 2 | Dropout(0.2) |
| | Layer 3 | Batch Normalization |
| | Layer 4 | Time Distributed(Dense(2)) |

| Intersection | Training Accuracy | Training Loss | Validation Accuracy | Validation Loss |
|---|---|---|---|---|
| Italy | 0.8148 | 0.0133 | 0.7517 | 0.0116 |
| Denmark A | 0.9925 | 0.0040 | 0.9445 | 0.0059 |
| Denmark B | 0.9591 | 0.1059 | 0.9345 | 0.0660 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Inder, K.; De Silva, V.; Shi, X. Learning Control Policies of Driverless Vehicles from UAV Video Streams in Complex Urban Environments. Remote Sens. 2019, 11, 2723. https://doi.org/10.3390/rs11232723
