Automatic Removal of Imperfections and Change Detection for Accurate 3D Urban Cartography by Classification and Incremental Updating

Abstract

1. Introduction

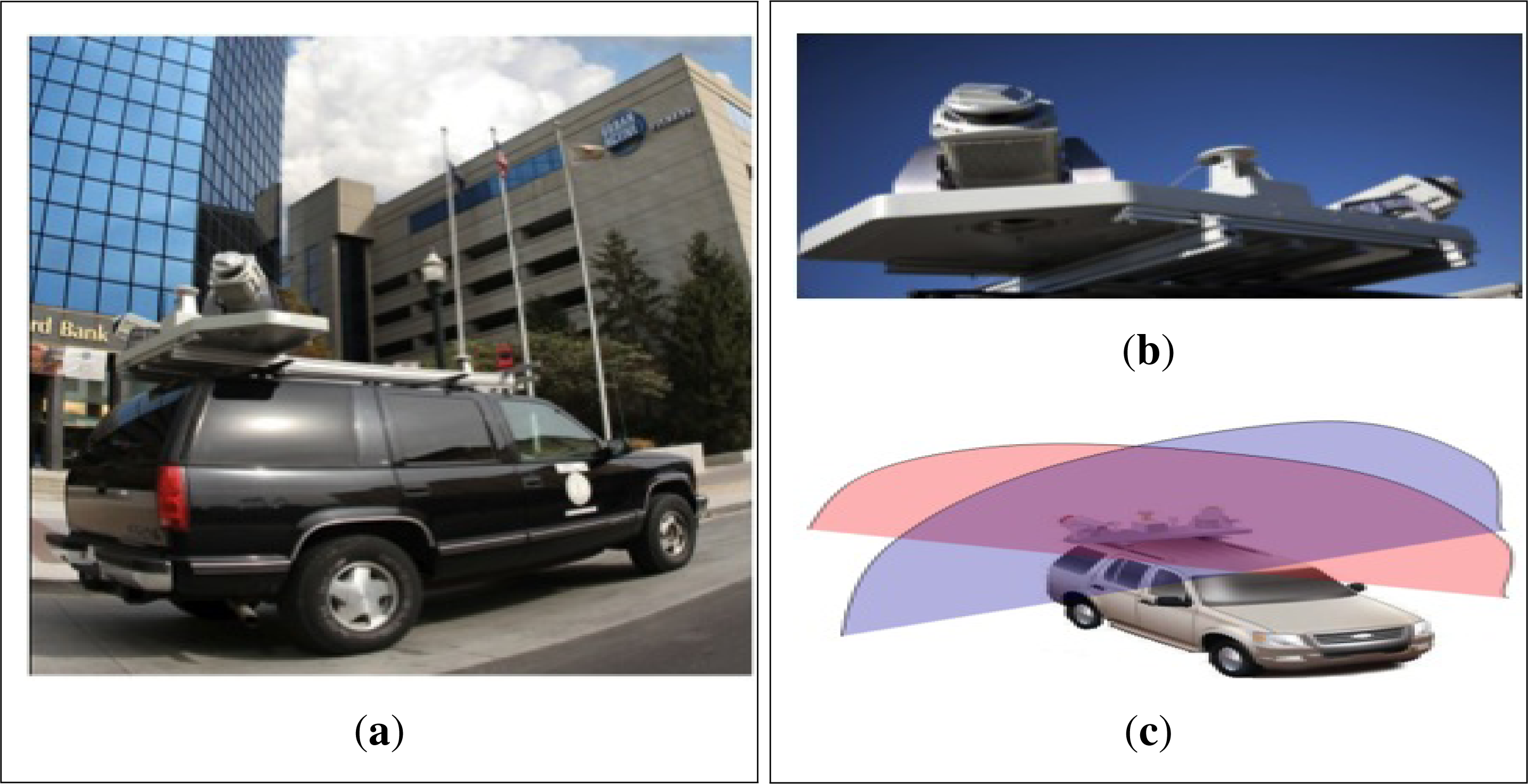

2. Related Work

Require: 3D urban point clouds for passage number $n_p$

- Sequential update function
- Automatic reset function
- Object update function

3. 3D Scan Registration

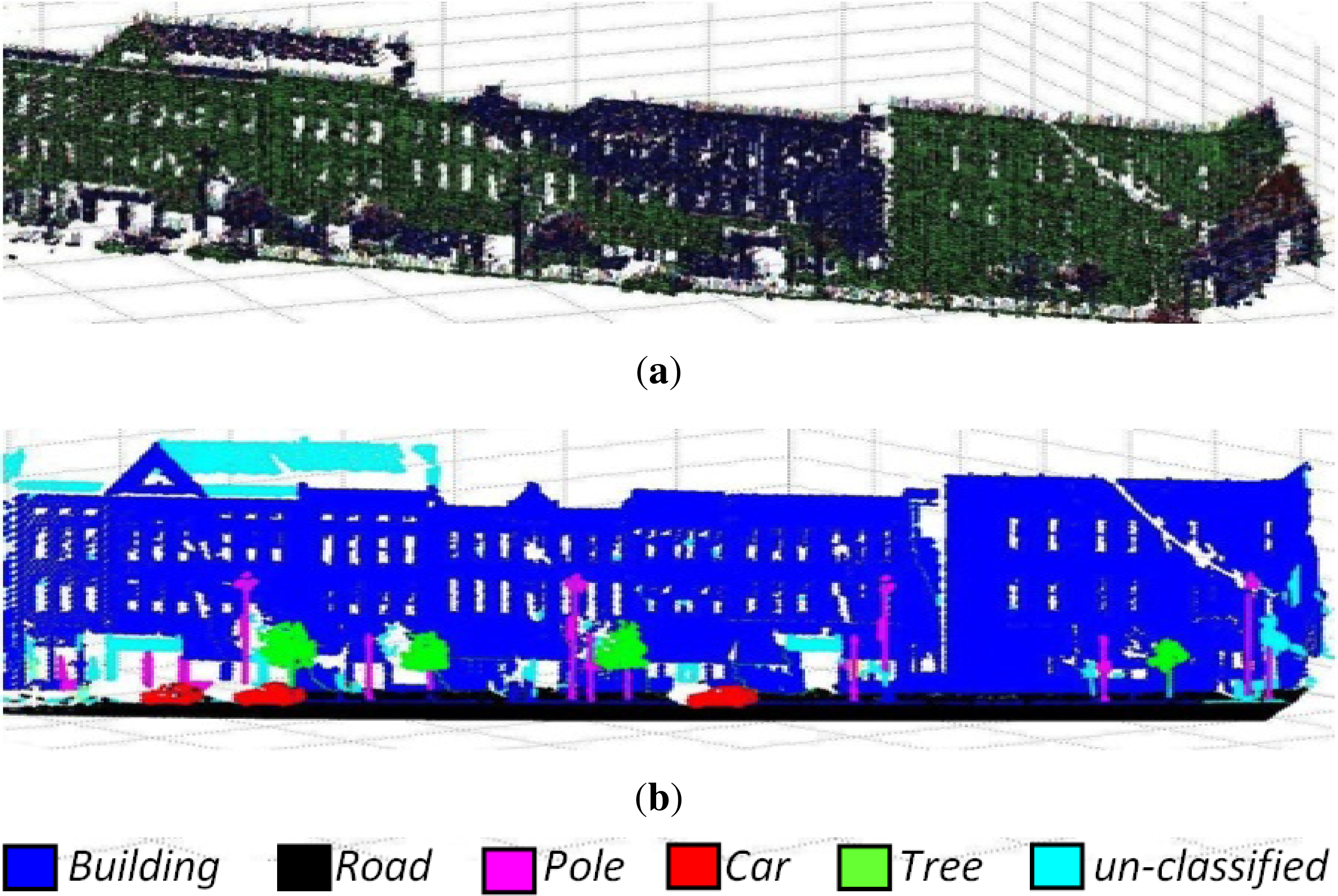

4. Classification of 3D Urban Environment

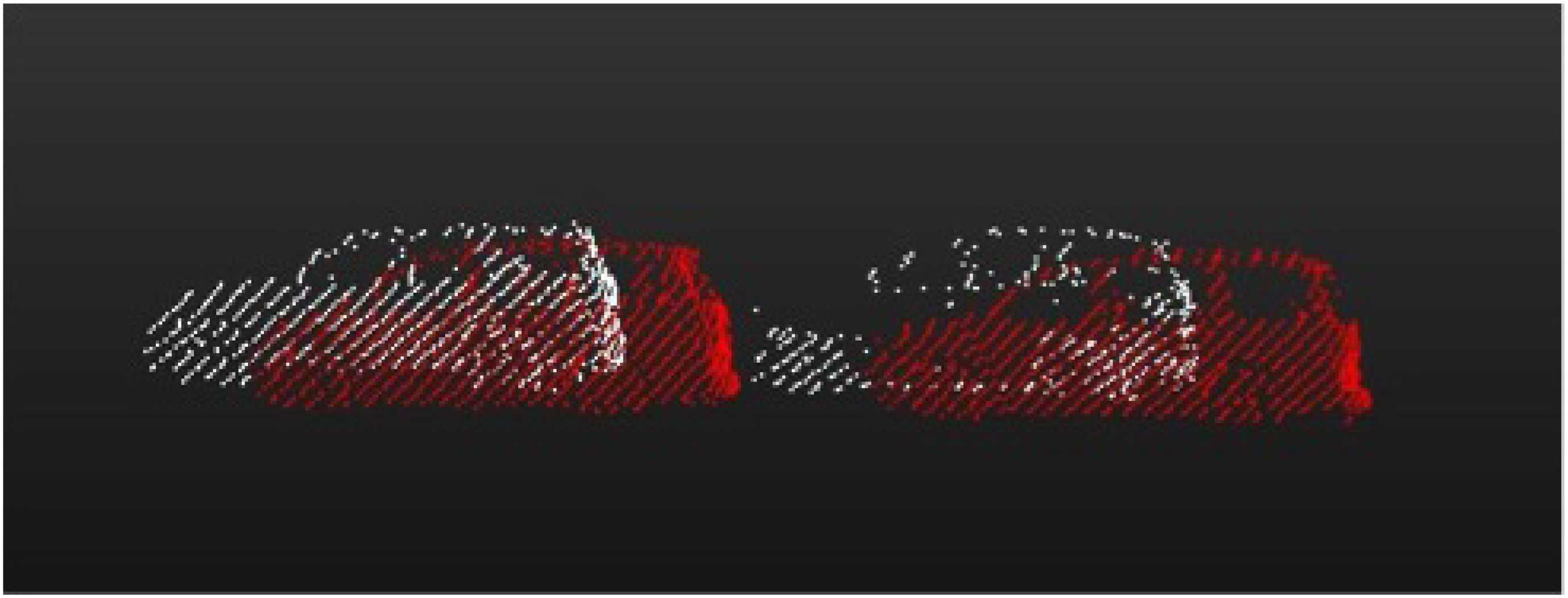

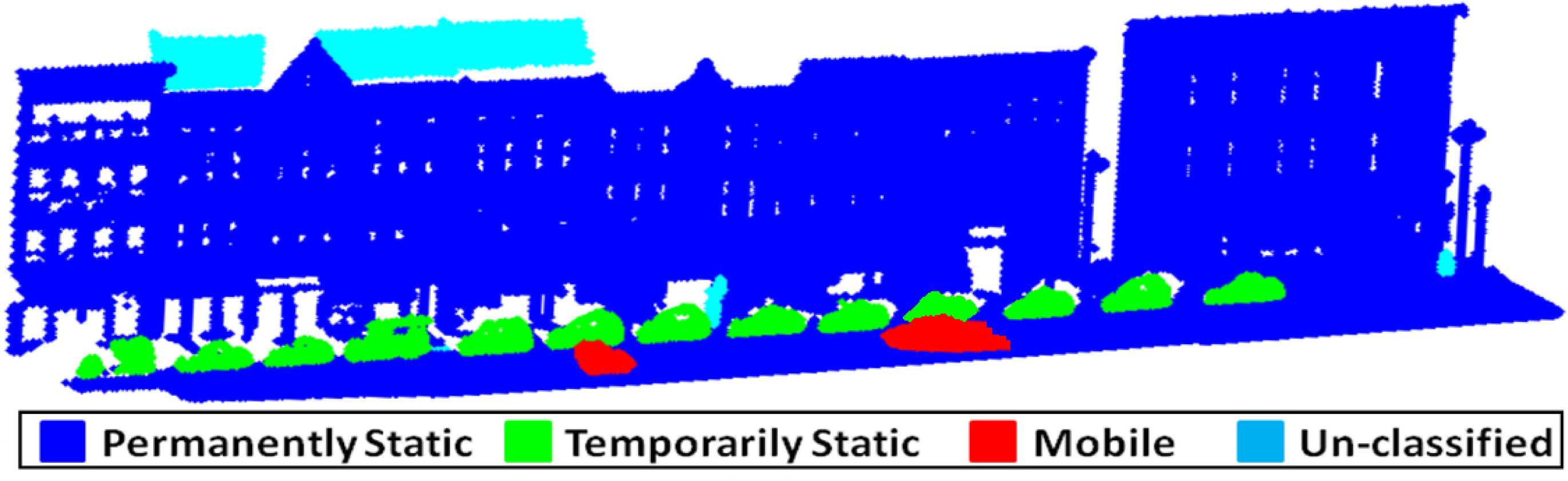

5. 3D Cartography and Removal of Imperfections Exploiting Multiple Passages

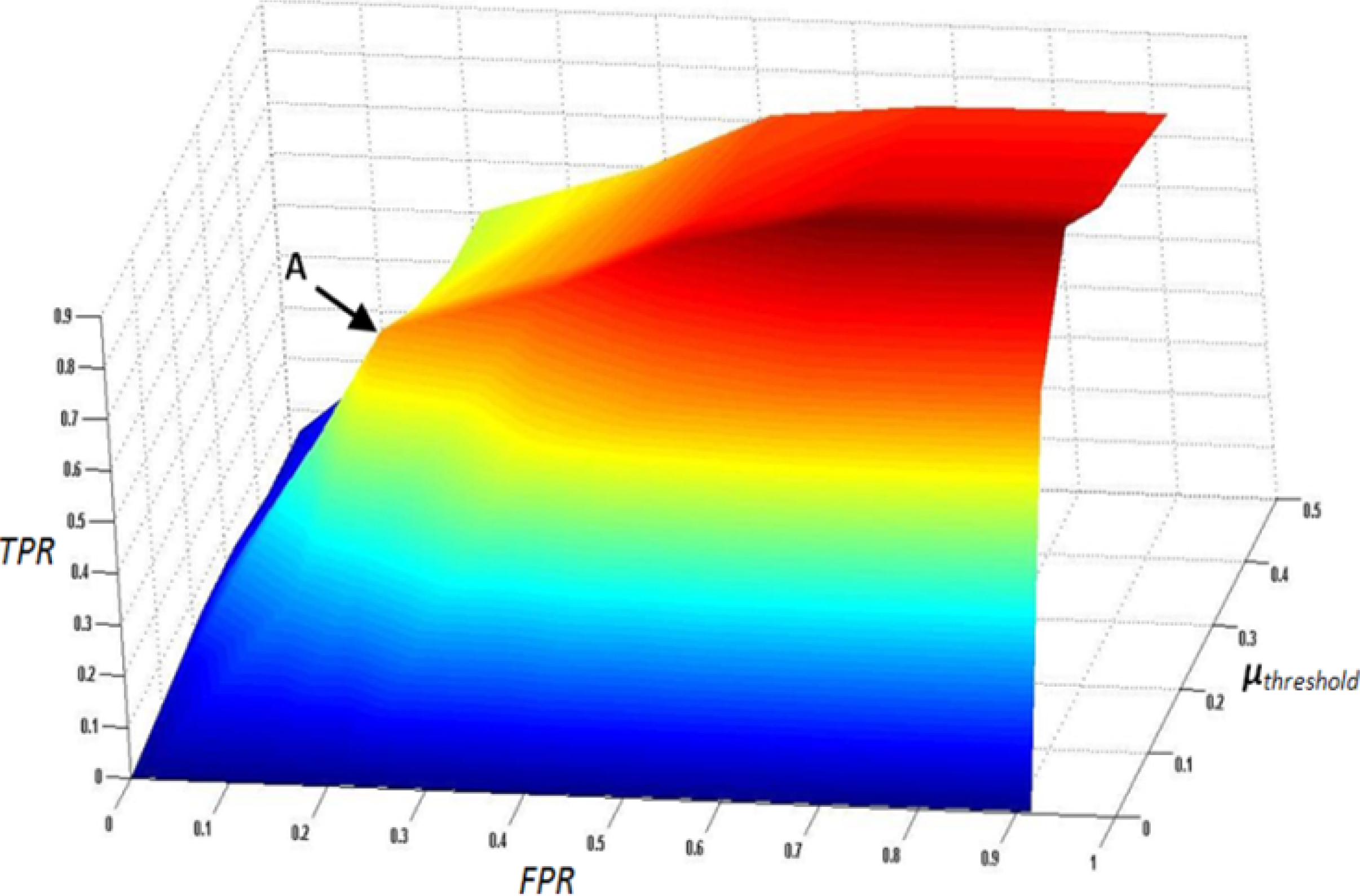

5.1. 3D Evidence Grid Formulation

5.2. Similarity Map Construction

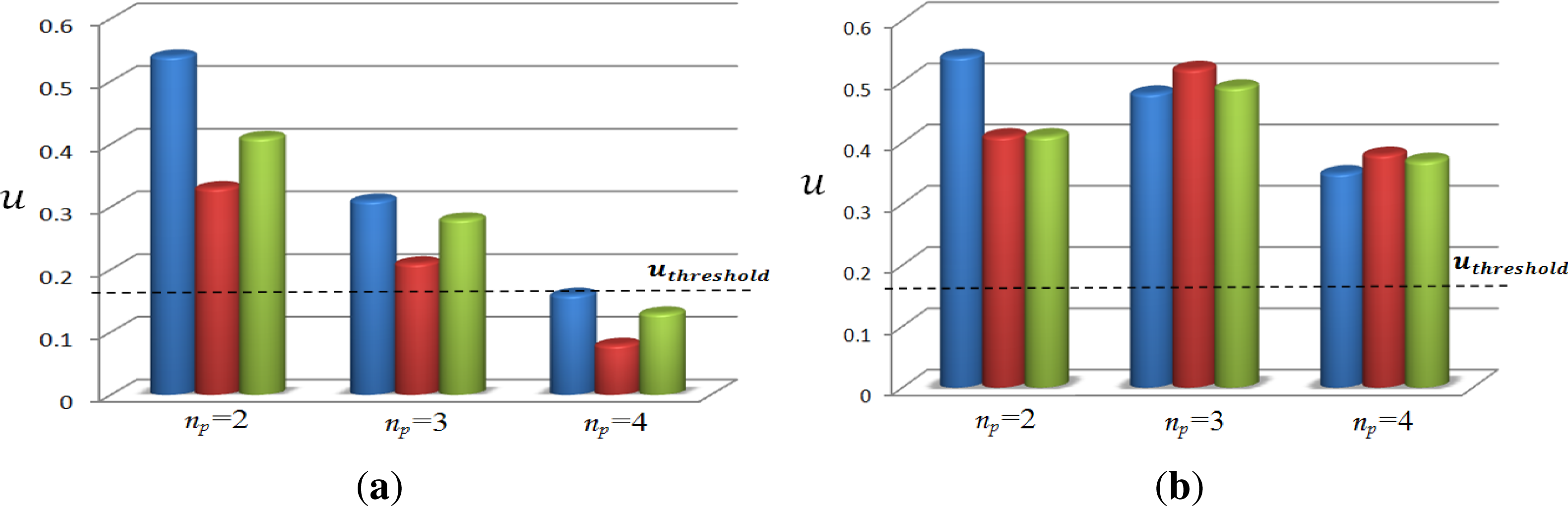

5.3. Associated Uncertainty

5.4. Specialized Functions

5.4.1. Sequential Update Function

| Require: 3D points of building objects |
|---|
| 1: for minimum x and y values of 3D points to maximum x and y values of 3D points do |
| 2: Scan building points in the x-y plane |
| 3: Find the maximum and minimum value in the z-axis |
| 4: end for |
| 5: for minimum z values of 3D points to maximum z values of 3D points do |
| 6: Scan building points in the z-axis |
| 7: Find the maximum and minimum value in the x-y plane |
| 8: end for |
| 9: return envelope/profile of building objects |
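The envelope extraction listed above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function name `building_envelope`, the grid step `cell`, and the choice to discretise both scans on the same step are assumptions made for illustration. The two loops of the listing are folded into a single pass that records, for each x-y cell, the z extent (steps 1 to 4) and, for each z slice, the x-y extent (steps 5 to 8).

```python
from math import floor


def building_envelope(points, cell=1.0):
    """Return (z_extent, xy_extent) describing a building's envelope.

    points: iterable of (x, y, z) tuples belonging to building objects.
    cell:   hypothetical grid step used to discretise both scans
            (an assumption; the listing does not specify a step size).
    """
    z_extent = {}   # (ix, iy) -> (z_min, z_max)
    xy_extent = {}  # iz -> (x_min, x_max, y_min, y_max)

    for x, y, z in points:
        # Steps 1-4: scan in the x-y plane, track min/max along z.
        key_xy = (floor(x / cell), floor(y / cell))
        z_lo, z_hi = z_extent.get(key_xy, (z, z))
        z_extent[key_xy] = (min(z_lo, z), max(z_hi, z))

        # Steps 5-8: scan along z, track min/max in the x-y plane.
        key_z = floor(z / cell)
        x_lo, x_hi, y_lo, y_hi = xy_extent.get(key_z, (x, x, y, y))
        xy_extent[key_z] = (min(x_lo, x), max(x_hi, x),
                            min(y_lo, y), max(y_hi, y))

    # Step 9: the two dictionaries together form the envelope/profile.
    return z_extent, xy_extent
```

Calling `building_envelope(pts, cell=1.0)` on a list of `(x, y, z)` tuples returns the two dictionaries. A smaller `cell` gives a finer profile at the cost of more entries.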

5.4.2. Automatic Reset Function

5.4.3. Object Update Function

5.5. Automatic Checks and Balances

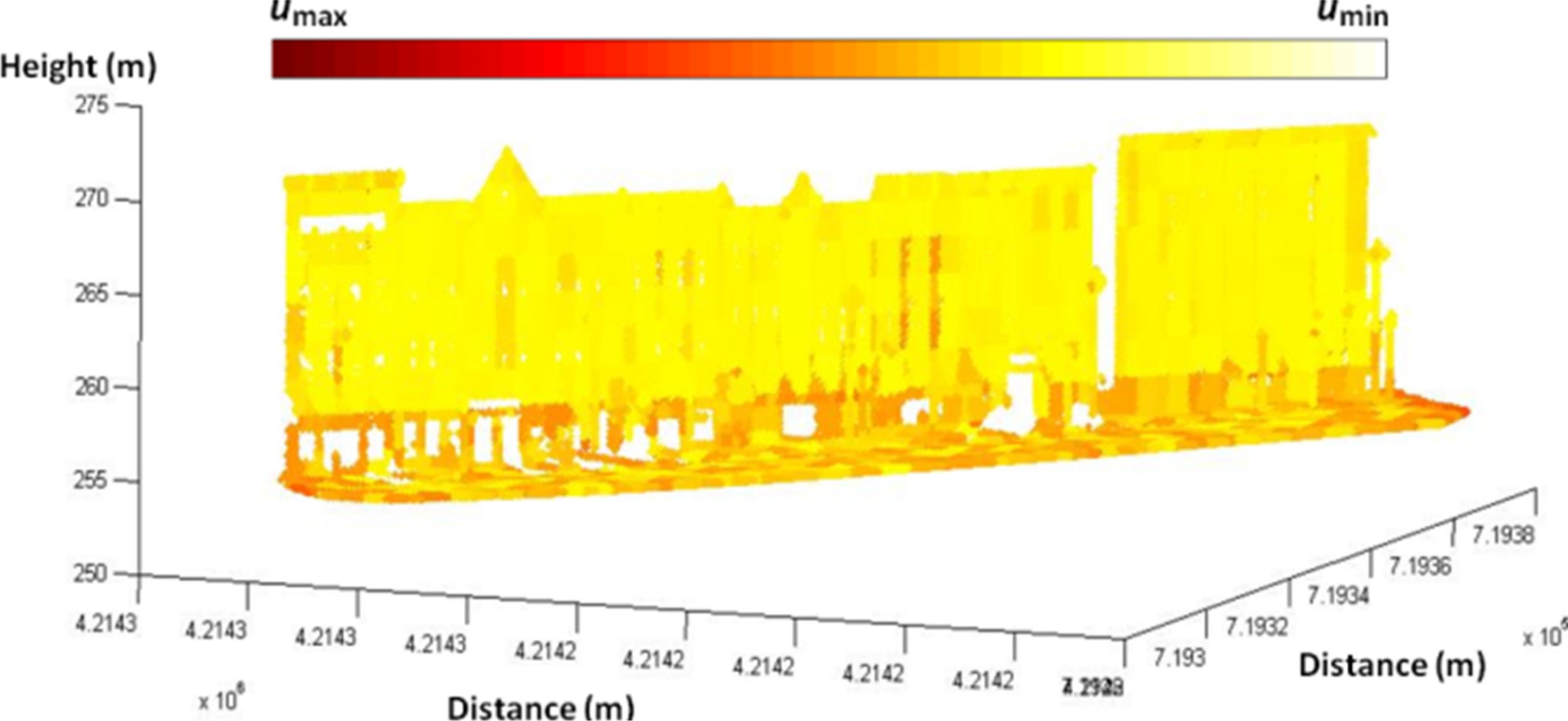

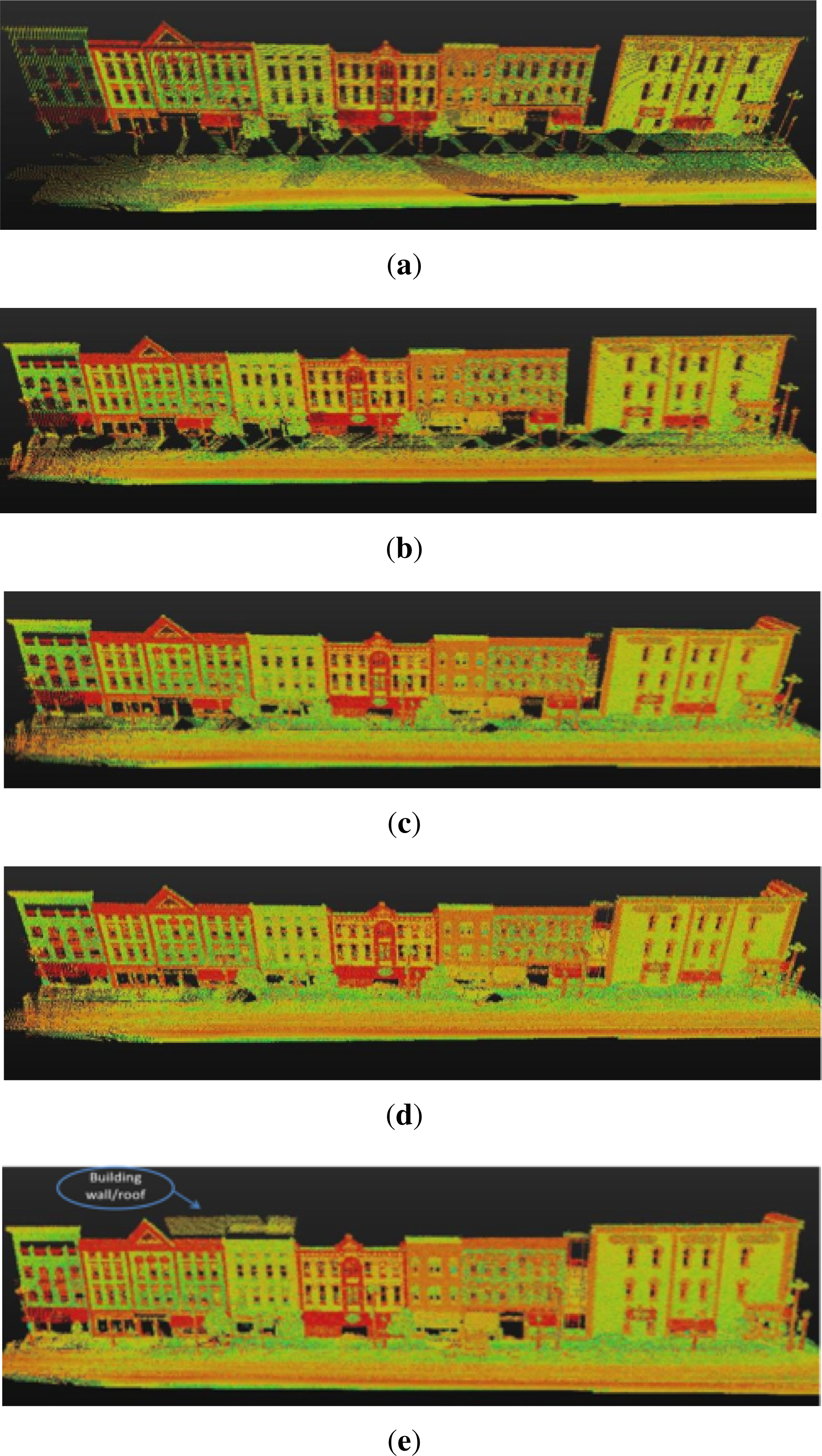

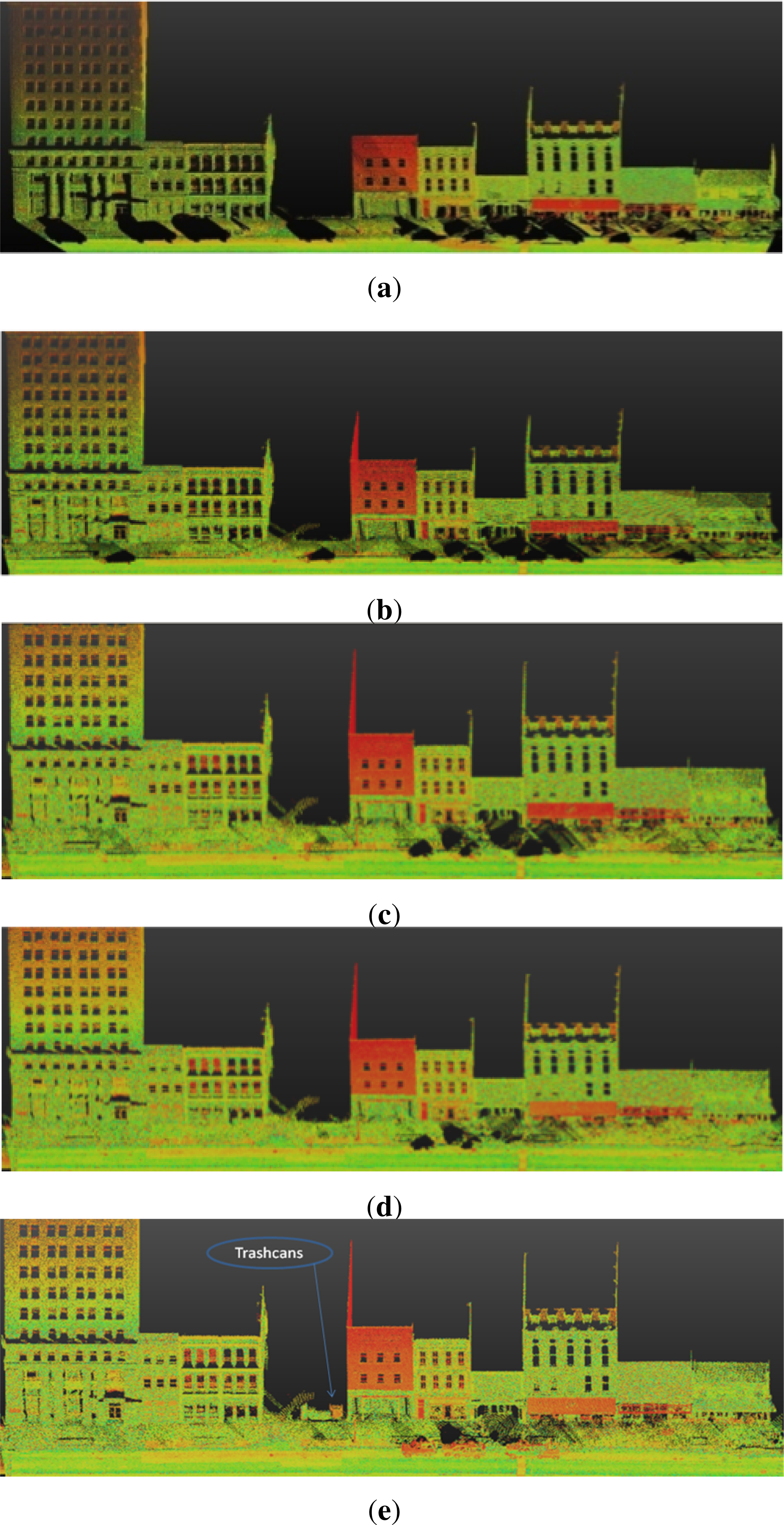

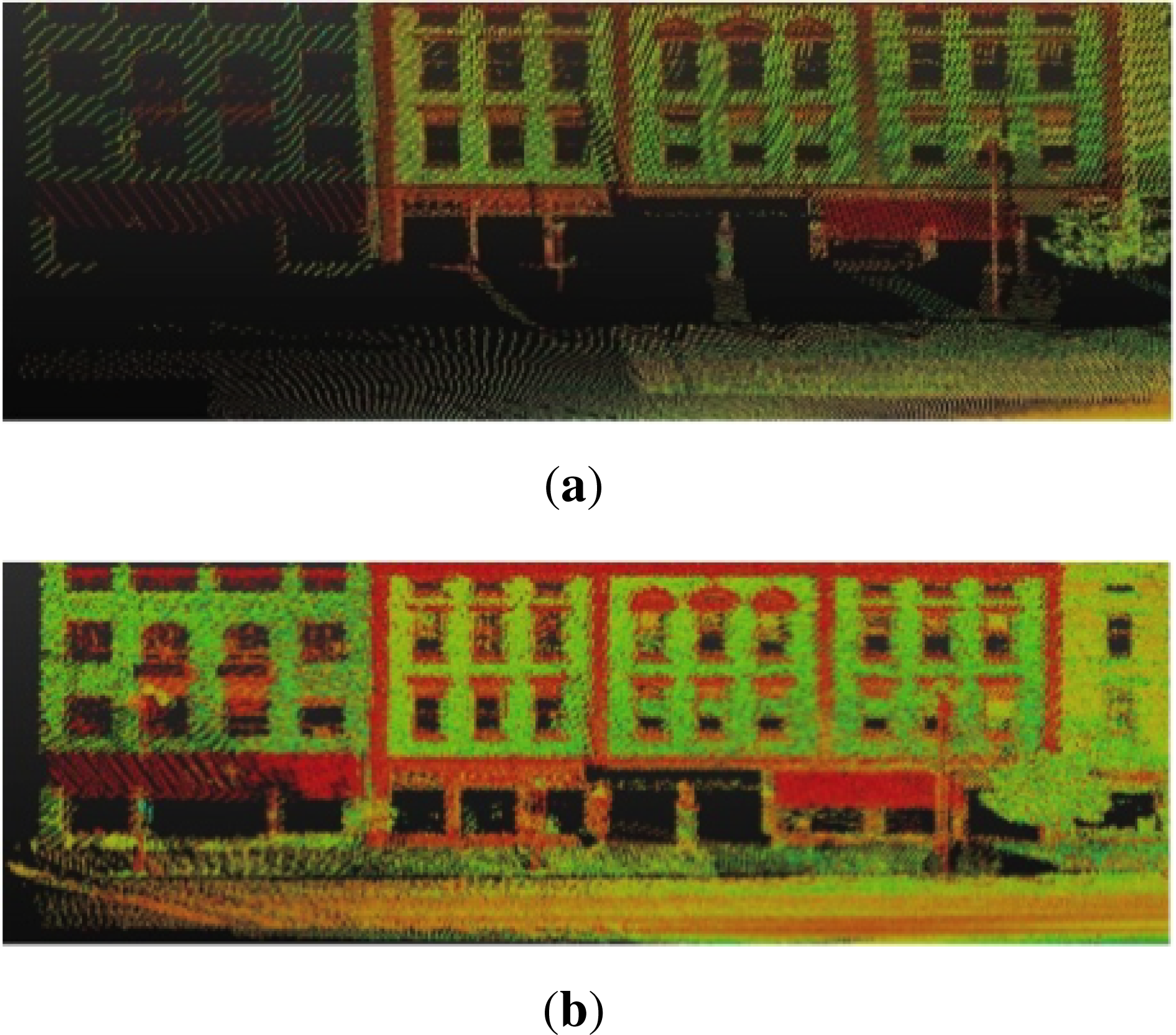

6. Results, Evaluation and Discussion

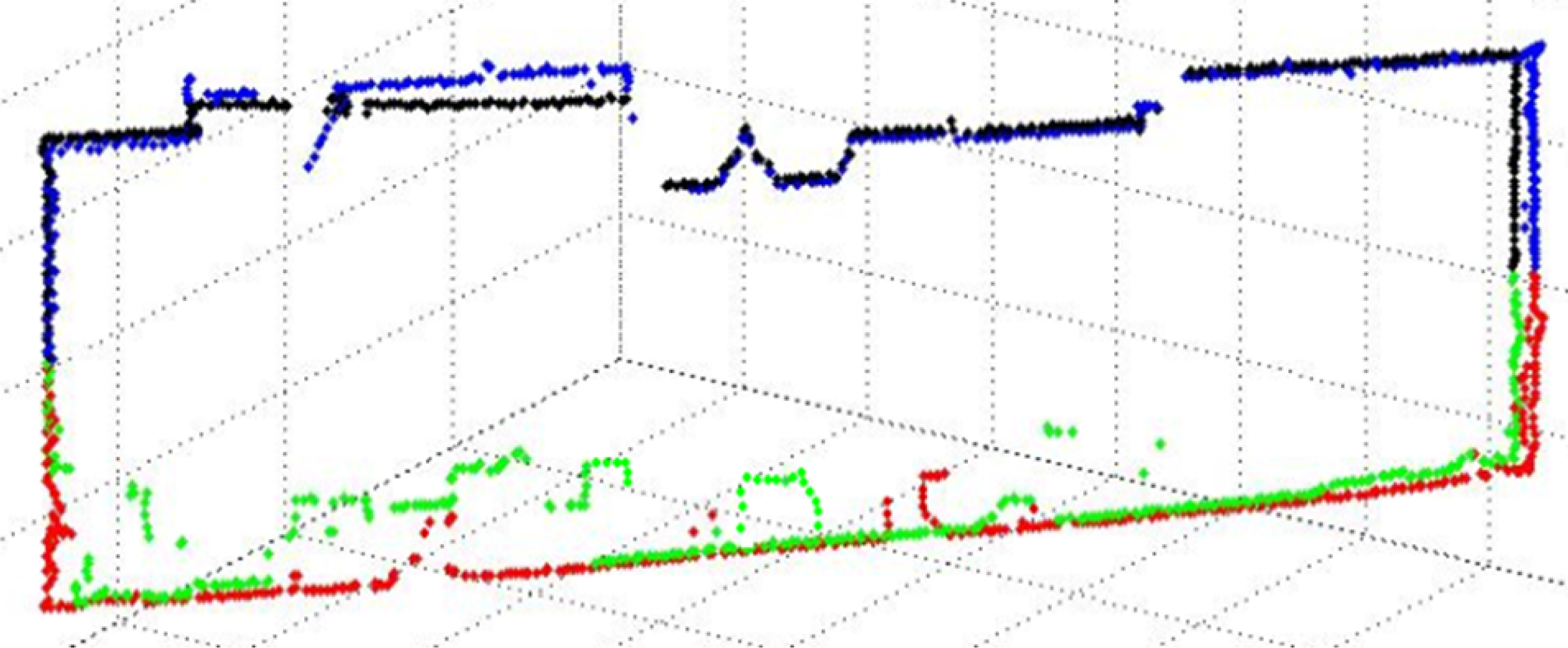

6.1. Change Detection and Reset Function

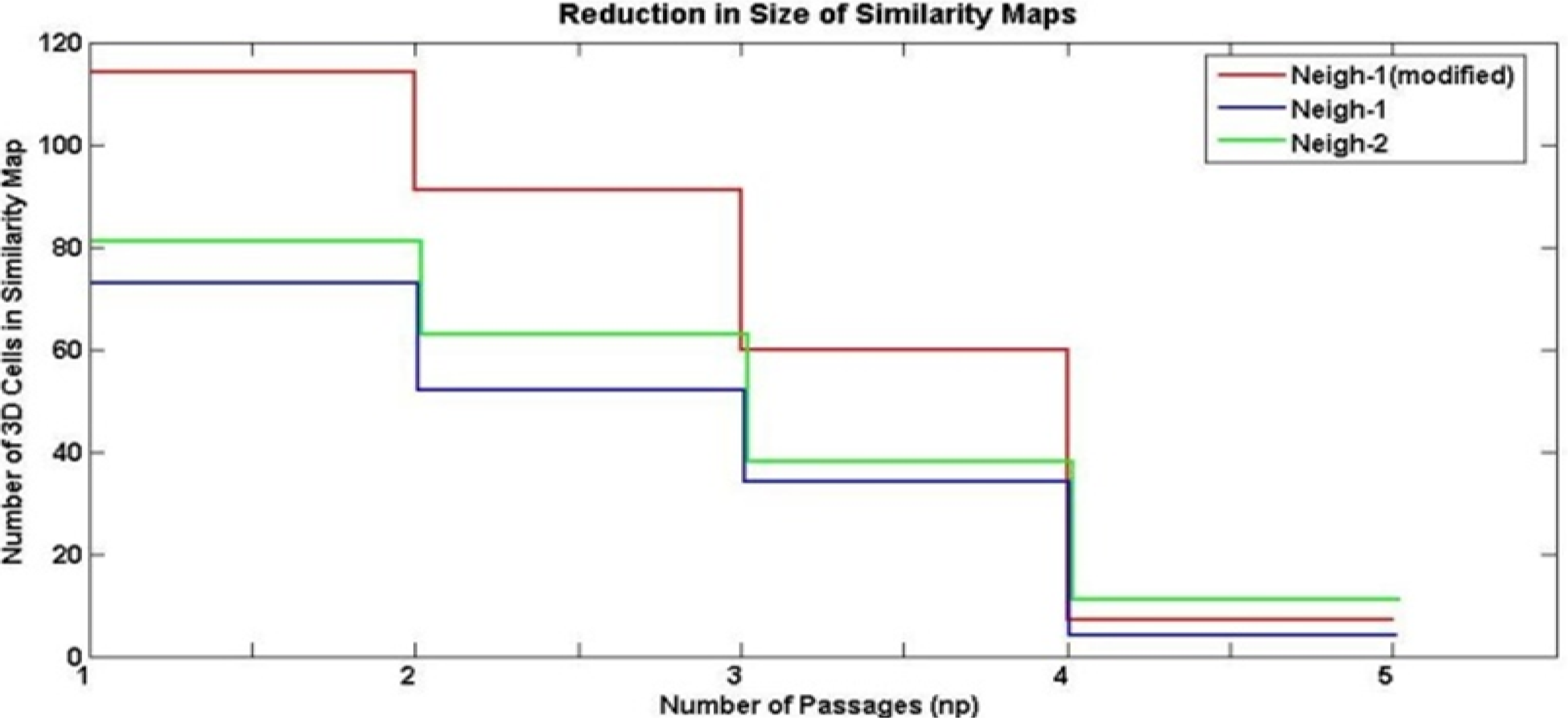

6.2. Evolution of Similarity Map Size

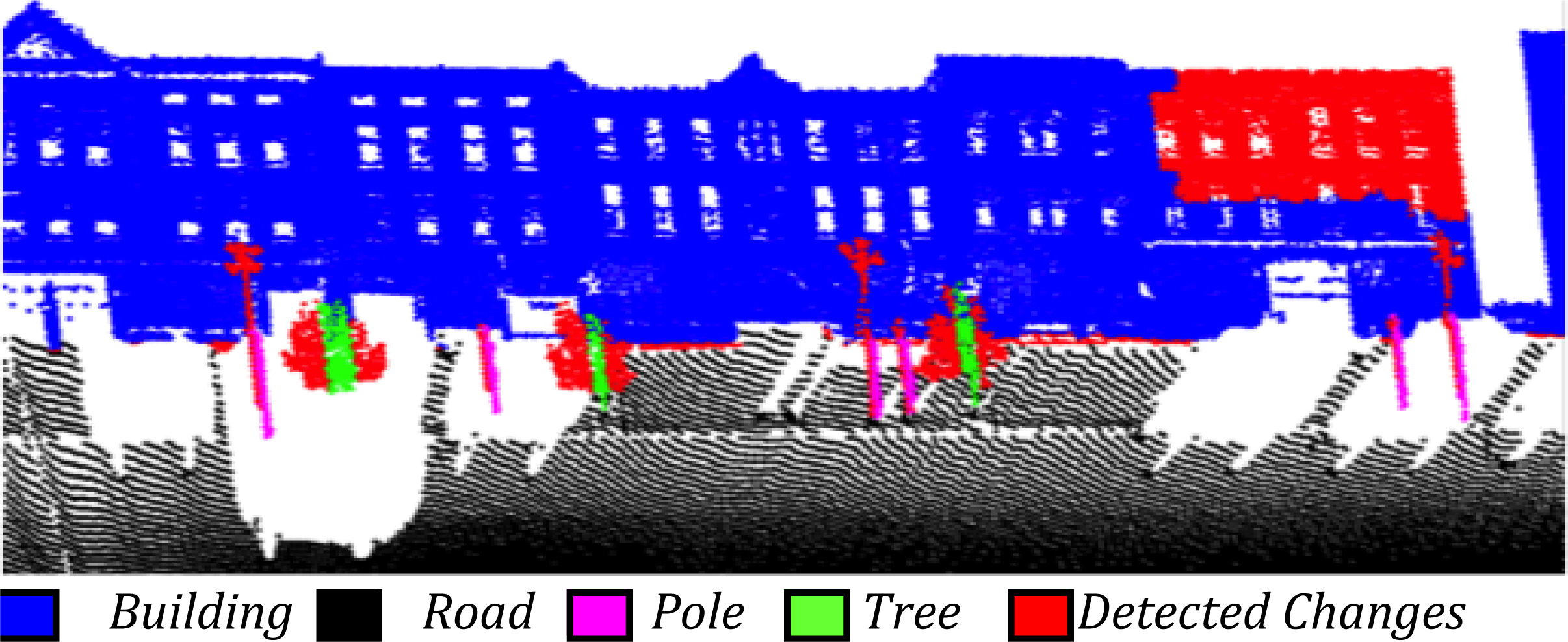

6.3. Handling Misclassifications and Improvement in Classification Results

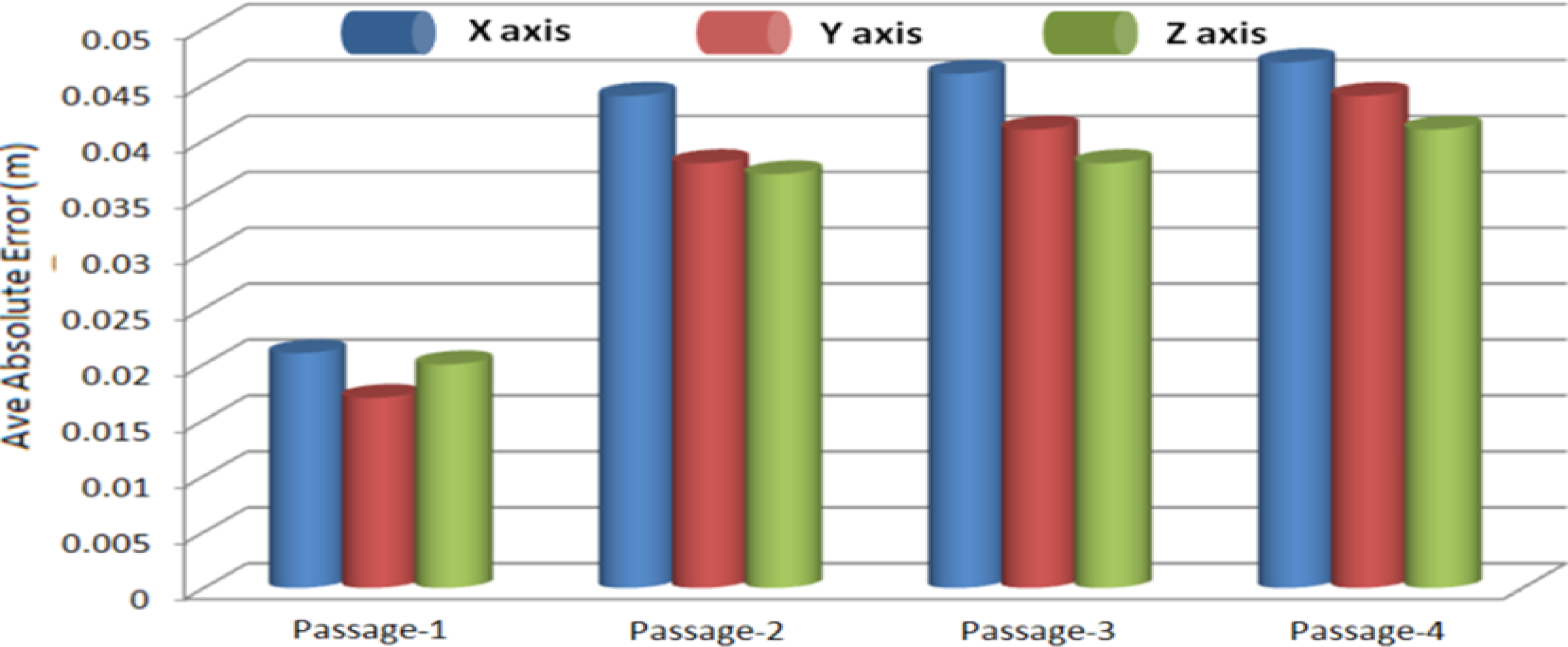

6.4. Accuracy Evaluation

7. Conclusions

Acknowledgments

Conflict of Interest

| Object Type | Permanently Static | Temporarily Static | Mobile |
|---|---|---|---|
| Road | x | | |
| Building | x | | |
| Tree | x | | |
| Pole | x | | |
| Car | | x | x |
| Pedestrian | | x | x |
| Unclassified | | x | x |

| # | Condition | Possible Assumption |
|---|---|---|
| 1 | $\mathrm{ASym}_{x,y} < \mathrm{ASym}_{y,x}$ | Addition of structure (could be new construction) |
| 2 | $\mathrm{ASym}_{x,y} > \mathrm{ASym}_{y,x}$ | Removal of structure (could be demolition) |
| 3 | $\mathrm{ASym}_{x,y} = \mathrm{ASym}_{y,x}$ | Modification of structure (depending on the value of Sym) |
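The three conditions in this table amount to a simple decision rule, sketched below in Python. How the two asymmetry values are computed is not shown here, so the function takes them as inputs. The name `change_assumption` and the tolerance used to decide equality of floating-point values are assumptions for illustration, not part of the paper.

```python
import math


def change_assumption(asym_xy, asym_yx, rel_tol=1e-9):
    """Map a pair of asymmetry values to the table's possible assumption.

    asym_xy, asym_yx: ASym_{x,y} and ASym_{y,x}, computed elsewhere.
    rel_tol: hypothetical tolerance for treating the two values as equal.
    """
    if math.isclose(asym_xy, asym_yx, rel_tol=rel_tol, abs_tol=1e-12):
        # Condition 3: the interpretation further depends on Sym.
        return "modification"
    if asym_xy < asym_yx:
        # Condition 1: could be new construction.
        return "addition"
    # Condition 2: could be demolition.
    return "removal"
```

In practice the equality case would rarely hold exactly for measured data, so a looser tolerance than the default may be appropriate.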

| Metric | Name | Neighborhood 1 (Modified) | Neighborhood 1 | Neighborhood 2 |
|---|---|---|---|---|
| ACC | Accuracy | 0.864 | 0.891 | 0.903 |
| PPV | Positive Predictive Value | 0.842 | 0.850 | 0.900 |
| NPV | Negative Predictive Value | 0.864 | 0.902 | 0.901 |
| FDR | False Discovery Rate | 0.158 | 0.150 | 0.100 |
| F1 | F1 measure | 0.695 | 0.650 | 0.782 |
| MCC | Matthews Correlation Coefficient | +0.624 | +0.692 | +0.729 |
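For reference, the six measures in this table have standard definitions in terms of confusion-matrix counts. The Python sketch below computes them from true/false positives and negatives. The function name `binary_metrics` is hypothetical, and the underlying counts for the reported values are not given here, so any inputs are illustrative only.

```python
from math import sqrt


def binary_metrics(tp, fp, tn, fn):
    """Compute ACC, PPV, NPV, FDR, F1 and MCC from confusion counts.

    Assumes every denominator is non-zero; callers should guard
    degenerate cases (e.g. no positive predictions) themselves.
    """
    total = tp + fp + tn + fn
    mcc_den = sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "ACC": (tp + tn) / total,            # accuracy
        "PPV": tp / (tp + fp),               # positive predictive value
        "NPV": tn / (tn + fn),               # negative predictive value
        "FDR": fp / (tp + fp),               # false discovery rate = 1 - PPV
        "F1": 2 * tp / (2 * tp + fp + fn),   # harmonic mean of PPV and recall
        "MCC": (tp * tn - fp * fn) / mcc_den,
    }
```

Because FDR is defined as 1 - PPV, the PPV and FDR rows of the table sum to one in every column, which gives a quick consistency check on the reported values.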

| | Passage 1 | Passage 2 | Passage 3 | Passage 4 | Proposed Method |
|---|---|---|---|---|---|
| Neighbor-1 | 0.943 | 0.977 | 0.958 | 0.959 | 0.981 |
| Neighbor-2 | 0.979 | 0.975 | 0.981 | 0.984 | 0.992 |

© 2013 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).

Aijazi, A.K.; Checchin, P.; Trassoudaine, L. Automatic Removal of Imperfections and Change Detection for Accurate 3D Urban Cartography by Classification and Incremental Updating. Remote Sens. 2013, 5, 3701-3728. https://doi.org/10.3390/rs5083701
