Automated Reconstruction of Building LoDs from Airborne LiDAR Point Clouds Using an Improved Morphological Scale Space

Abstract

1. Introduction

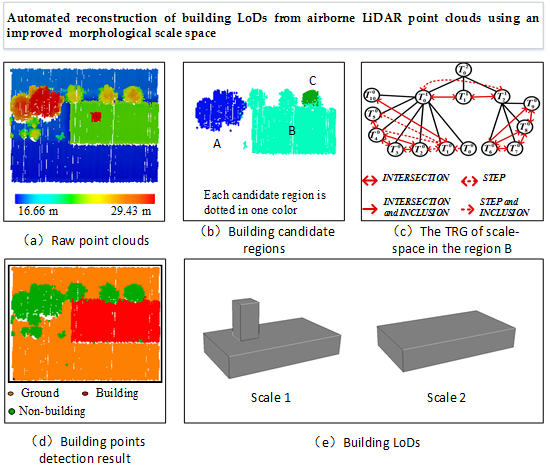

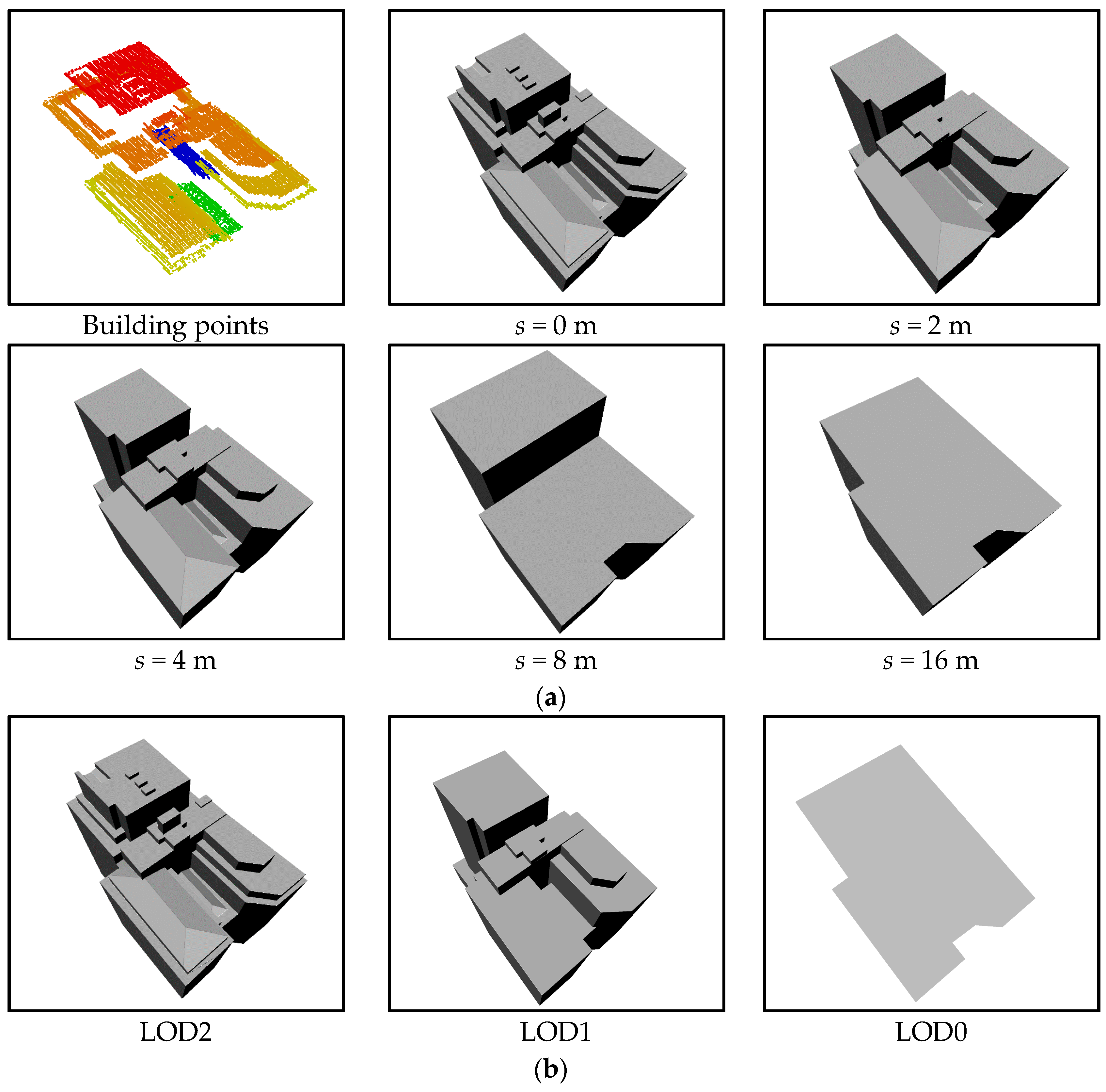

- Construct the scale space directly from airborne laser scanning point clouds by applying morphological reconstruction with planar segment constraints to preserve features, and create a TRG (topological relationship graph) that represents the spatial relations between segments across levels;

- Generate 3D building LoDs from the extracted building points based on the TRG, so that the building LoD at a specified level can be reconstructed automatically from the finest building points.
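
Both contributions rest on morphological reconstruction [32]. The paper applies it directly to point clouds with planar segment constraints; the minimal sketch below instead assumes a rasterized DSM (a 2D grid of elevations, 1 cell = 1 m) and shows only the generic grayscale opening by reconstruction that yields one surface per scale. The function names, the square window, and the radius-in-cells scale parameter are illustrative assumptions, not the authors' implementation, and no planar segment constraint is applied.

```python
from typing import Callable, Iterable, List

Grid = List[List[float]]


def _window_filter(grid: Grid, radius: int, reduce: Callable[[Iterable[float]], float]) -> Grid:
    """Apply `reduce` (min or max) over a (2r+1) x (2r+1) square window, clipped at the borders."""
    rows, cols = len(grid), len(grid[0])
    return [
        [
            reduce(
                grid[y][x]
                for y in range(max(0, i - radius), min(rows, i + radius + 1))
                for x in range(max(0, j - radius), min(cols, j + radius + 1))
            )
            for j in range(cols)
        ]
        for i in range(rows)
    ]


def erode(grid: Grid, radius: int) -> Grid:
    return _window_filter(grid, radius, min)


def dilate(grid: Grid, radius: int) -> Grid:
    return _window_filter(grid, radius, max)


def reconstruct_by_dilation(marker: Grid, mask: Grid) -> Grid:
    """Grayscale reconstruction of `mask` from `marker` (marker <= mask everywhere):
    repeat an elementary 3x3 dilation clipped by the mask until nothing changes."""
    current = [row[:] for row in marker]
    while True:
        grown = dilate(current, 1)
        nxt = [[min(g, m) for g, m in zip(g_row, m_row)] for g_row, m_row in zip(grown, mask)]
        if nxt == current:
            return current
        current = nxt


def opening_by_reconstruction(dsm: Grid, radius: int) -> Grid:
    """Erode to remove raised structures that cannot contain the window, then
    regrow the survivors from their eroded cores inside the original surface."""
    return reconstruct_by_dilation(erode(dsm, radius), dsm)


def scale_space(dsm: Grid, radii: List[int]) -> List[Grid]:
    """One reconstructed surface per scale; radius 0 returns the input unchanged."""
    return [opening_by_reconstruction(dsm, r) for r in radii]


# Toy DSM (1 cell = 1 m): a 5 x 5 roof at 10 m and a one-cell object at 8 m.
dsm = [[0.0] * 9 for _ in range(9)]
for i in range(1, 6):
    for j in range(1, 6):
        dsm[i][j] = 10.0
dsm[7][7] = 8.0

levels = scale_space(dsm, [0, 1])
```

At radius 1 the one-cell object disappears while the 5 x 5 roof keeps its full footprint. Because reconstruction regrows survivors from their eroded cores rather than re-dilating them with the window, it does not clip or round off their outlines the way a plain opening can.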

2. An Improved Morphological Scale Space for Point Clouds

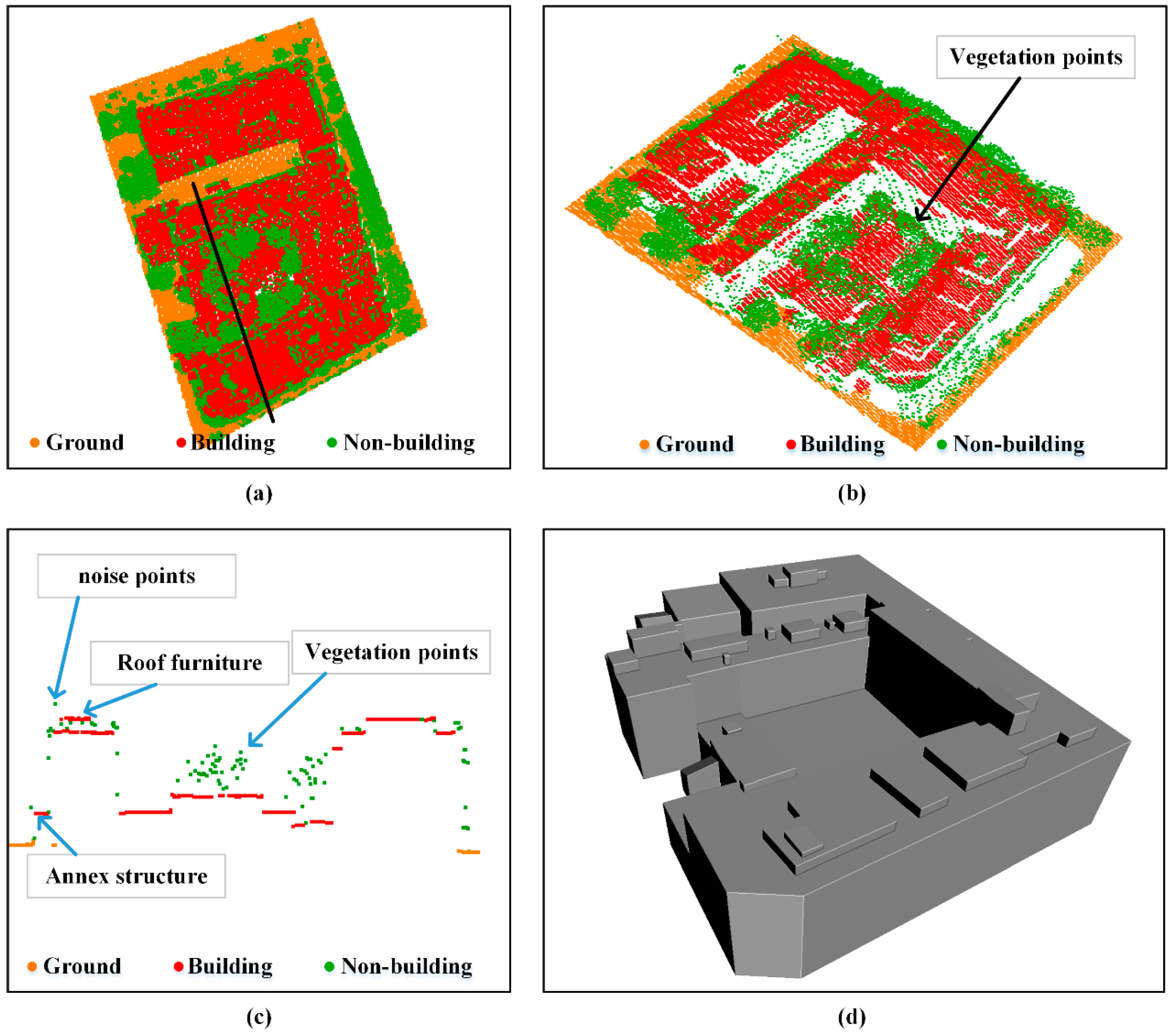

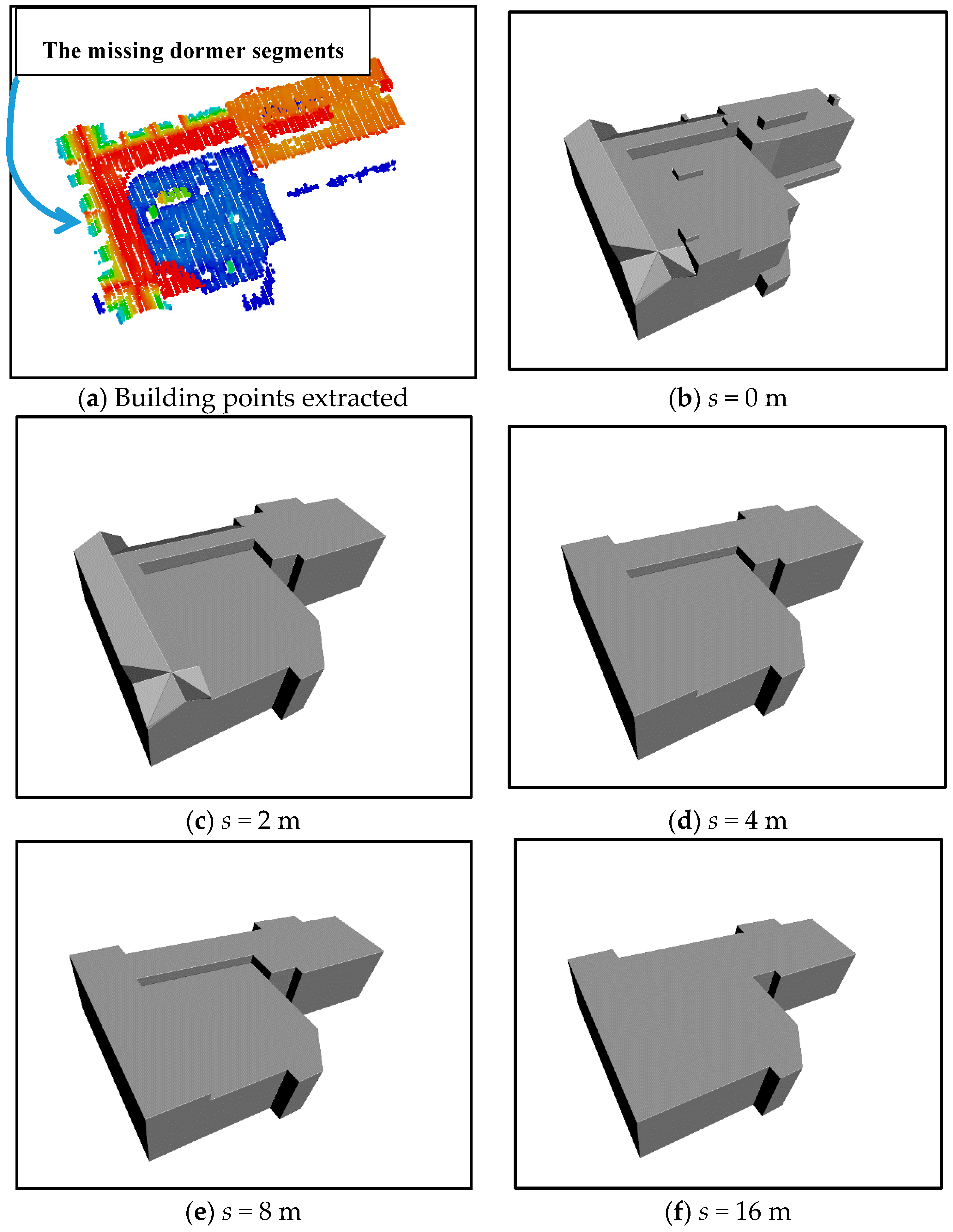

2.1. A Morphological Reconstruction for Each Level with Planar Segment Constraints

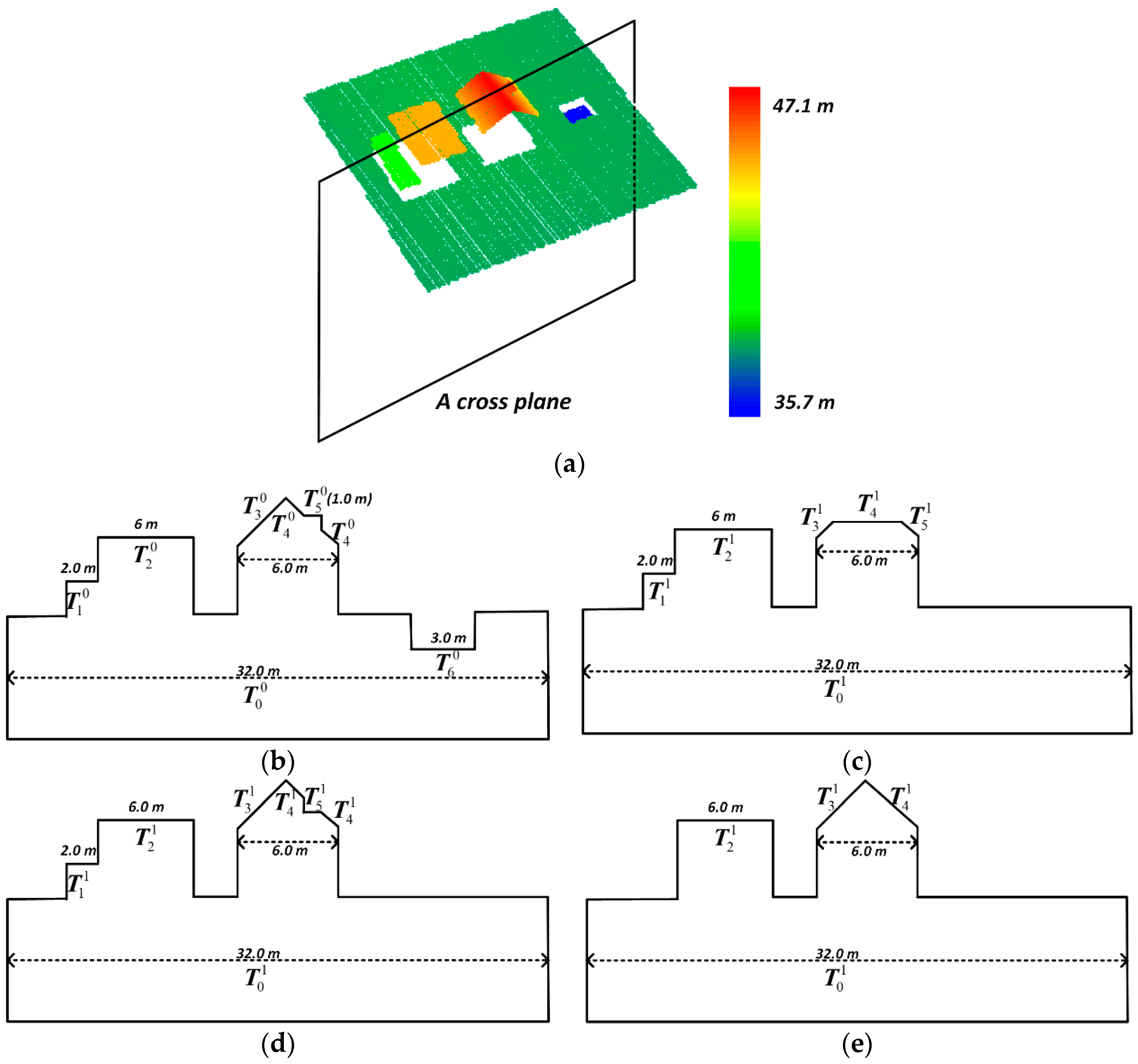

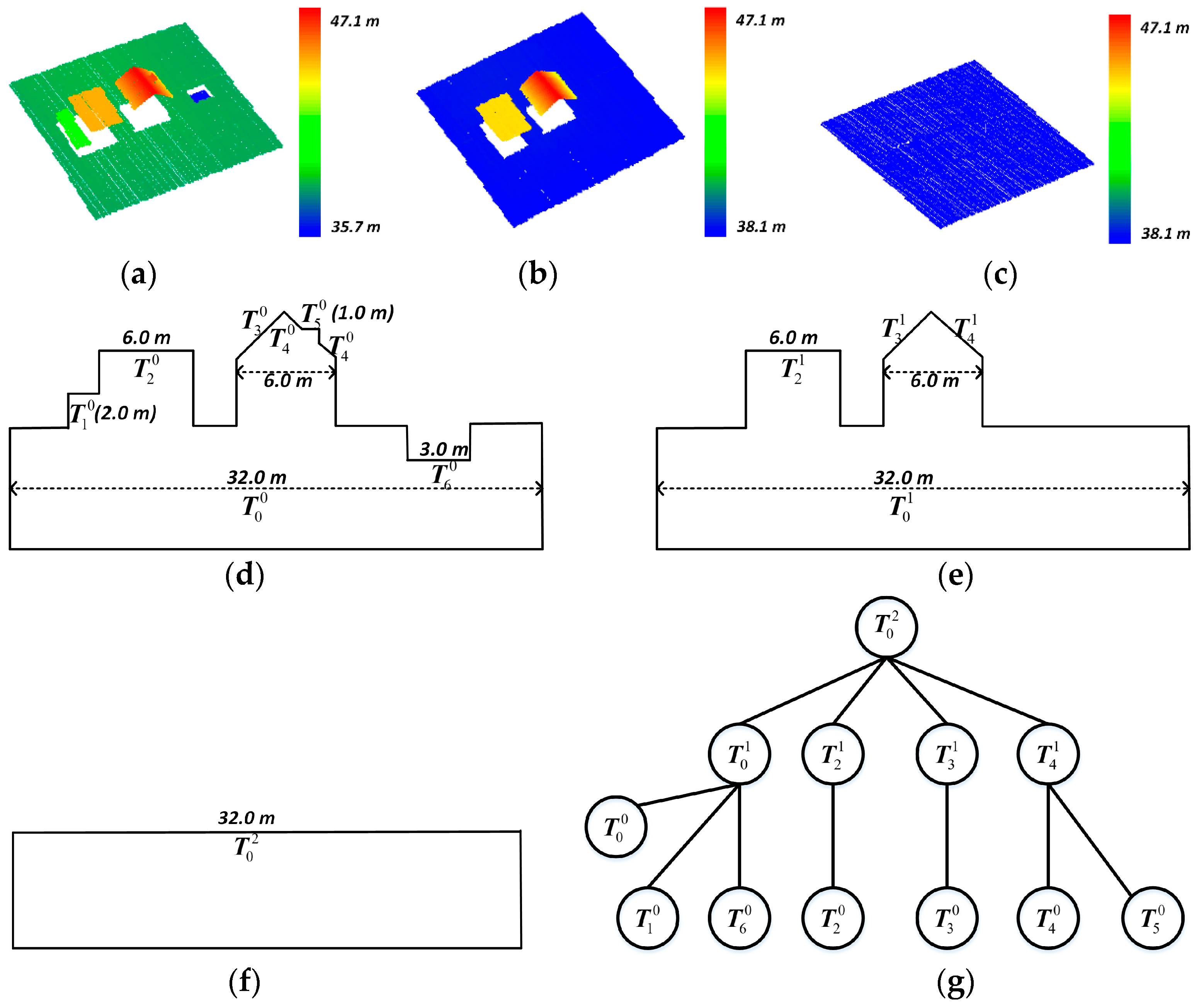

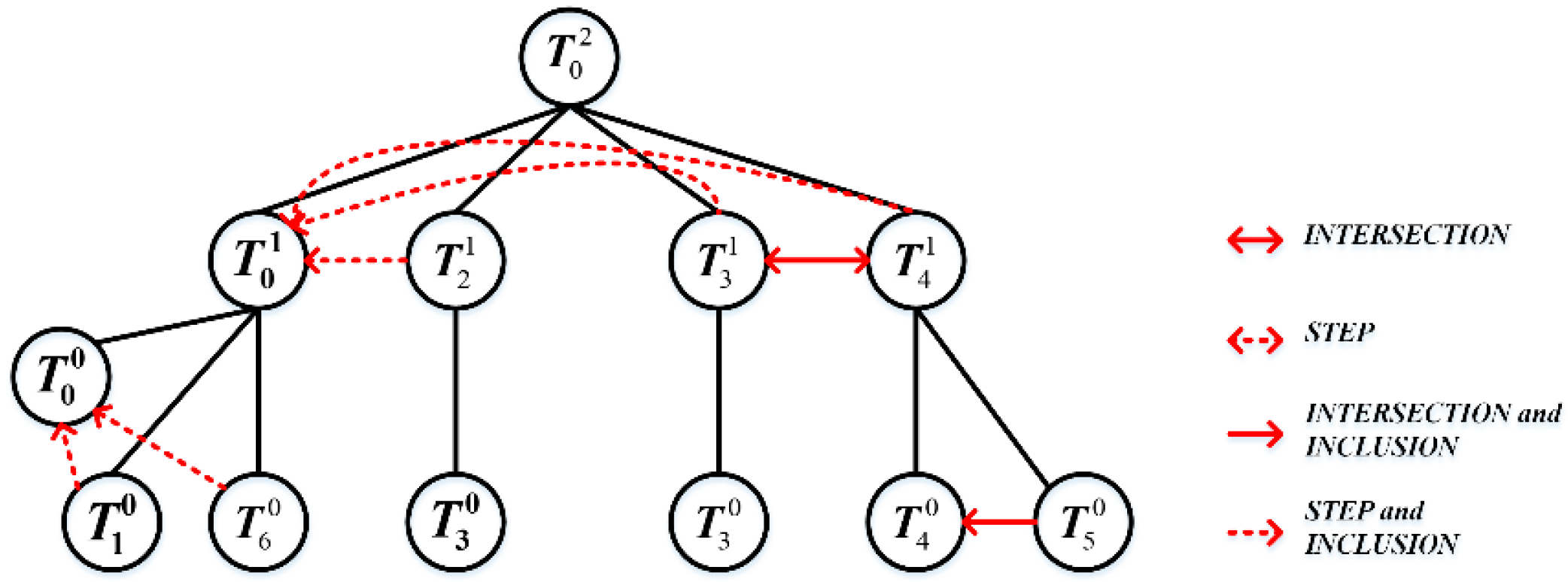

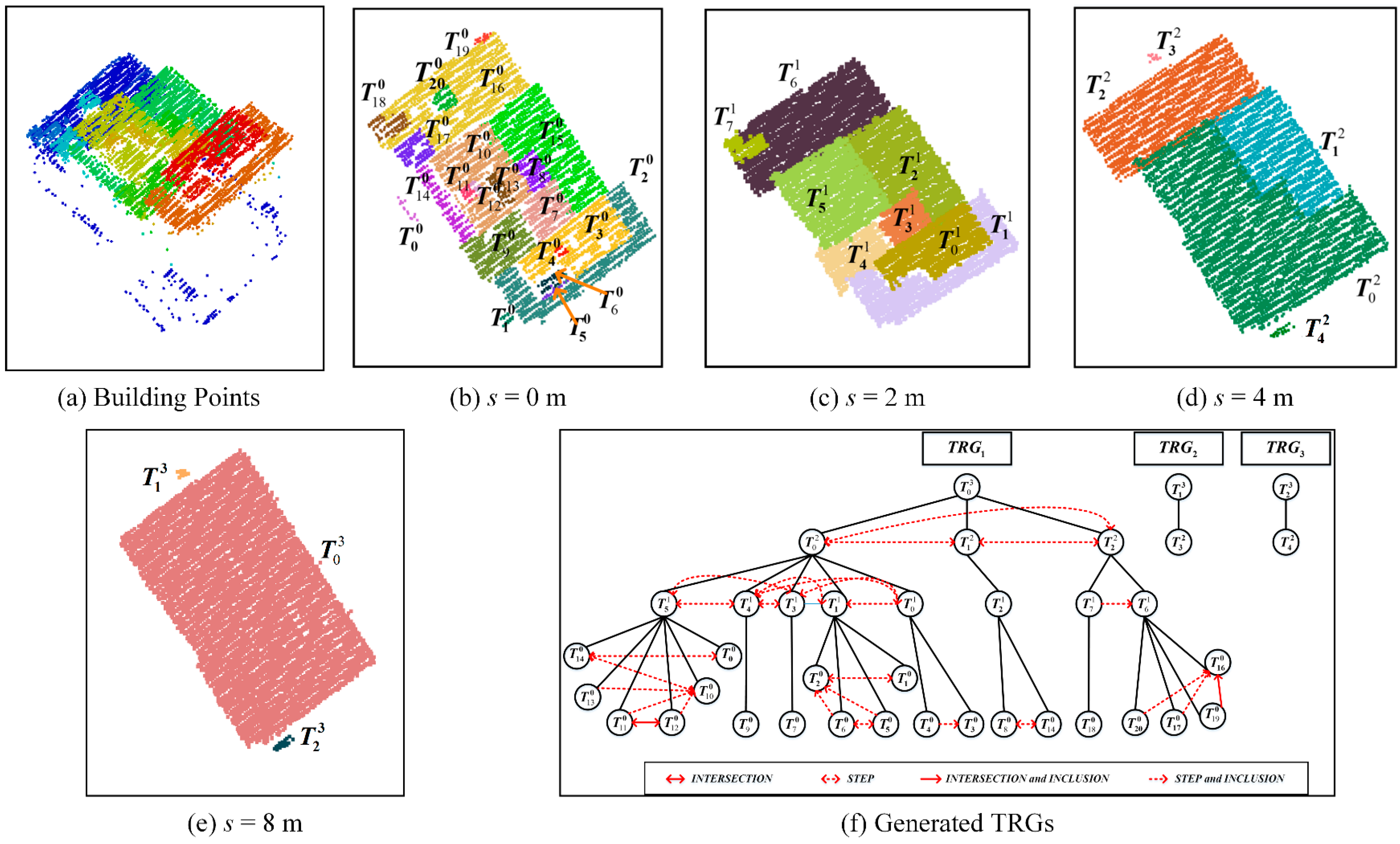

2.2. Generating the Morphological Scale Space and Constructing the Topological Relationship Graph (TRG)

3. Generating Building Levels of Detail (LoDs) Based on the Improved Morphological Scale Space

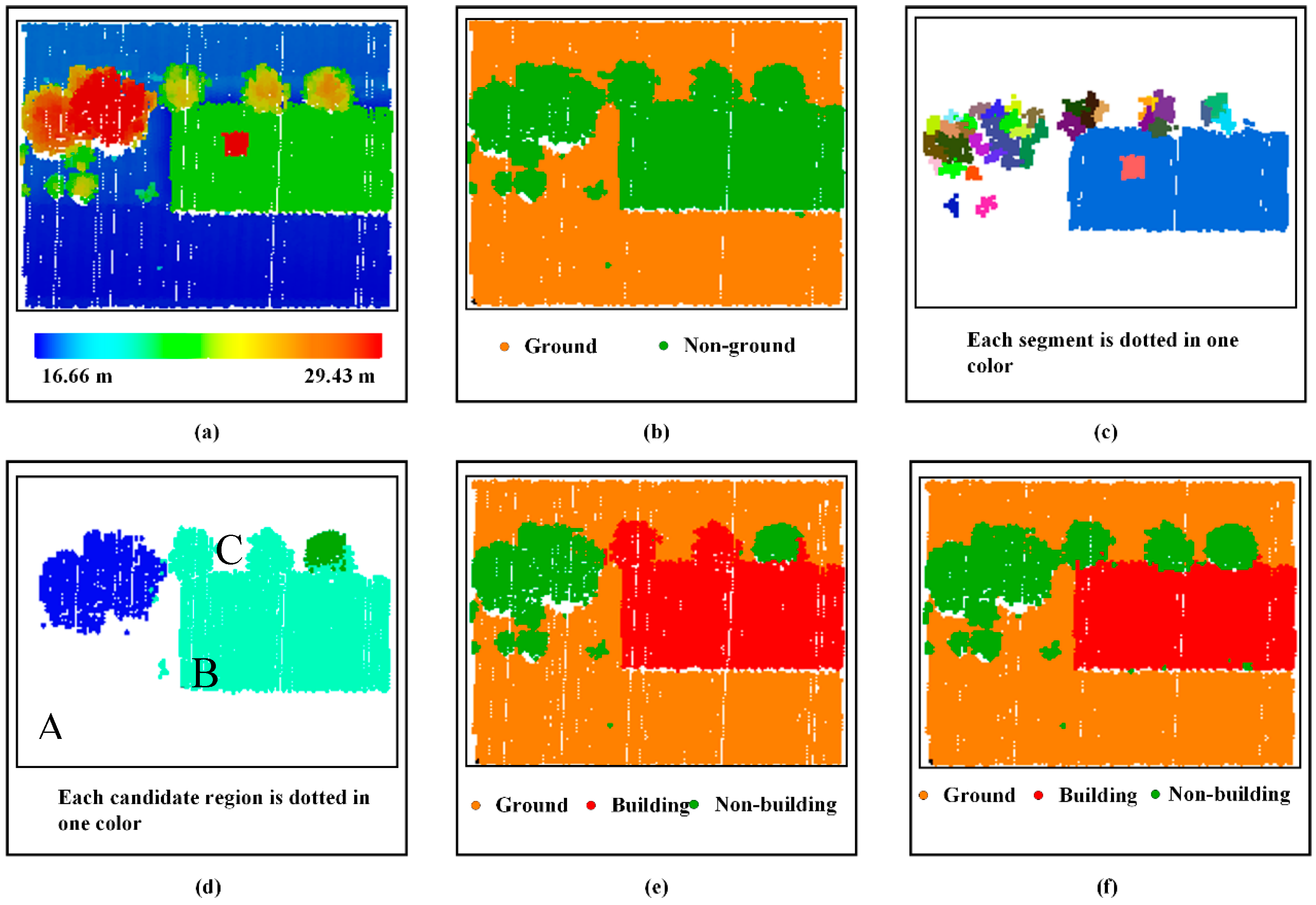

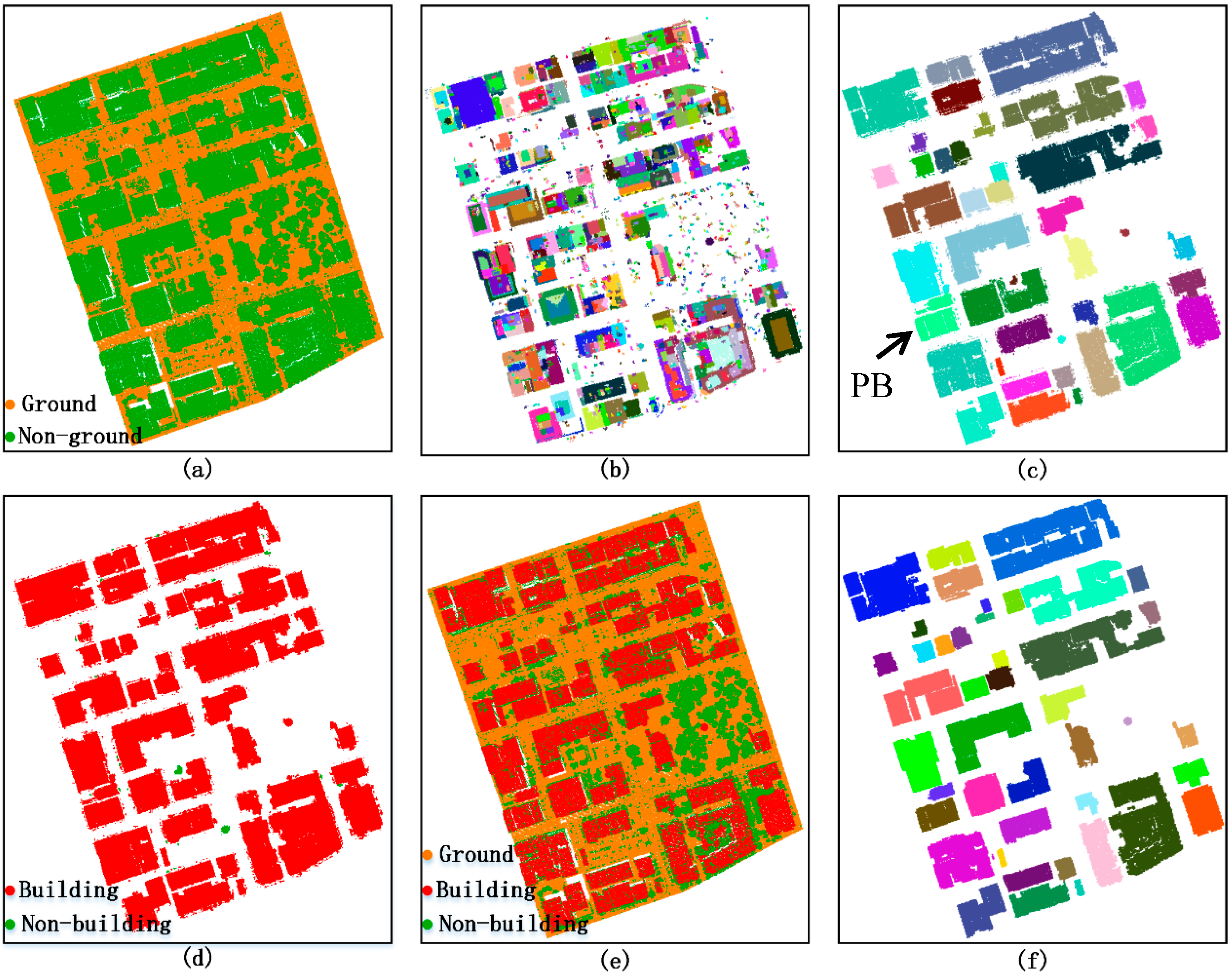

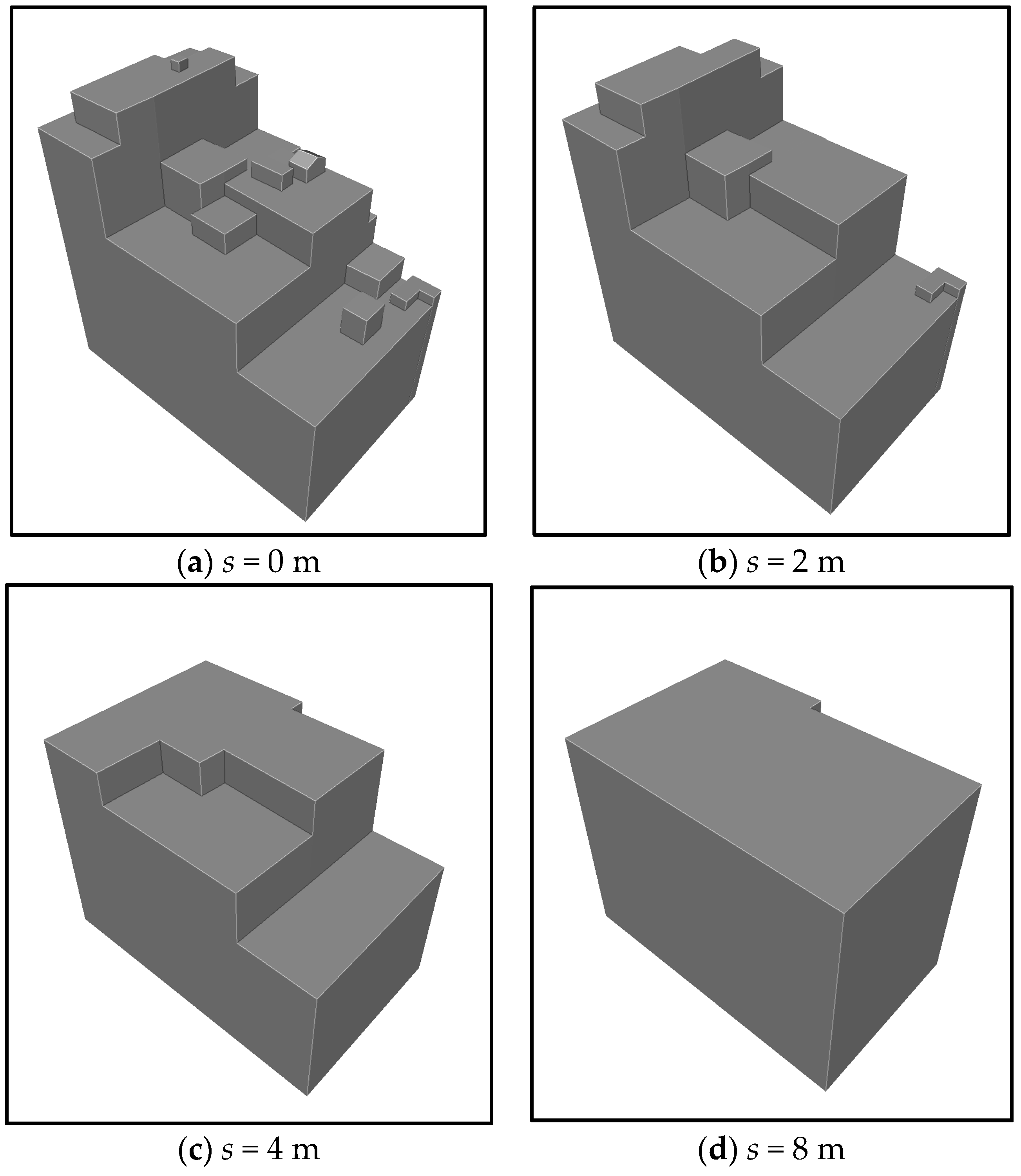

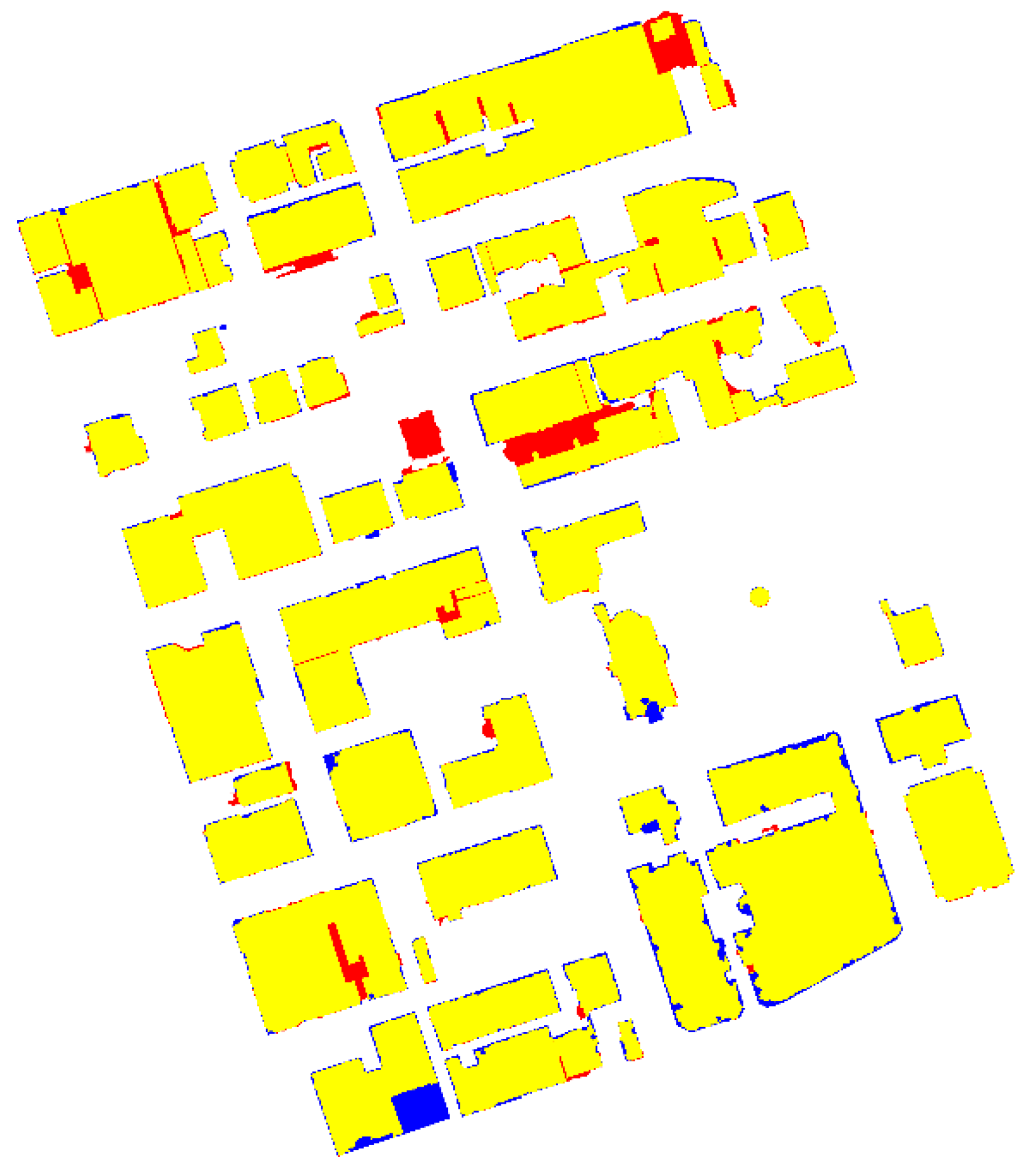

3.1. Building Candidate Region Extraction and Generation of the Morphological Scale Space

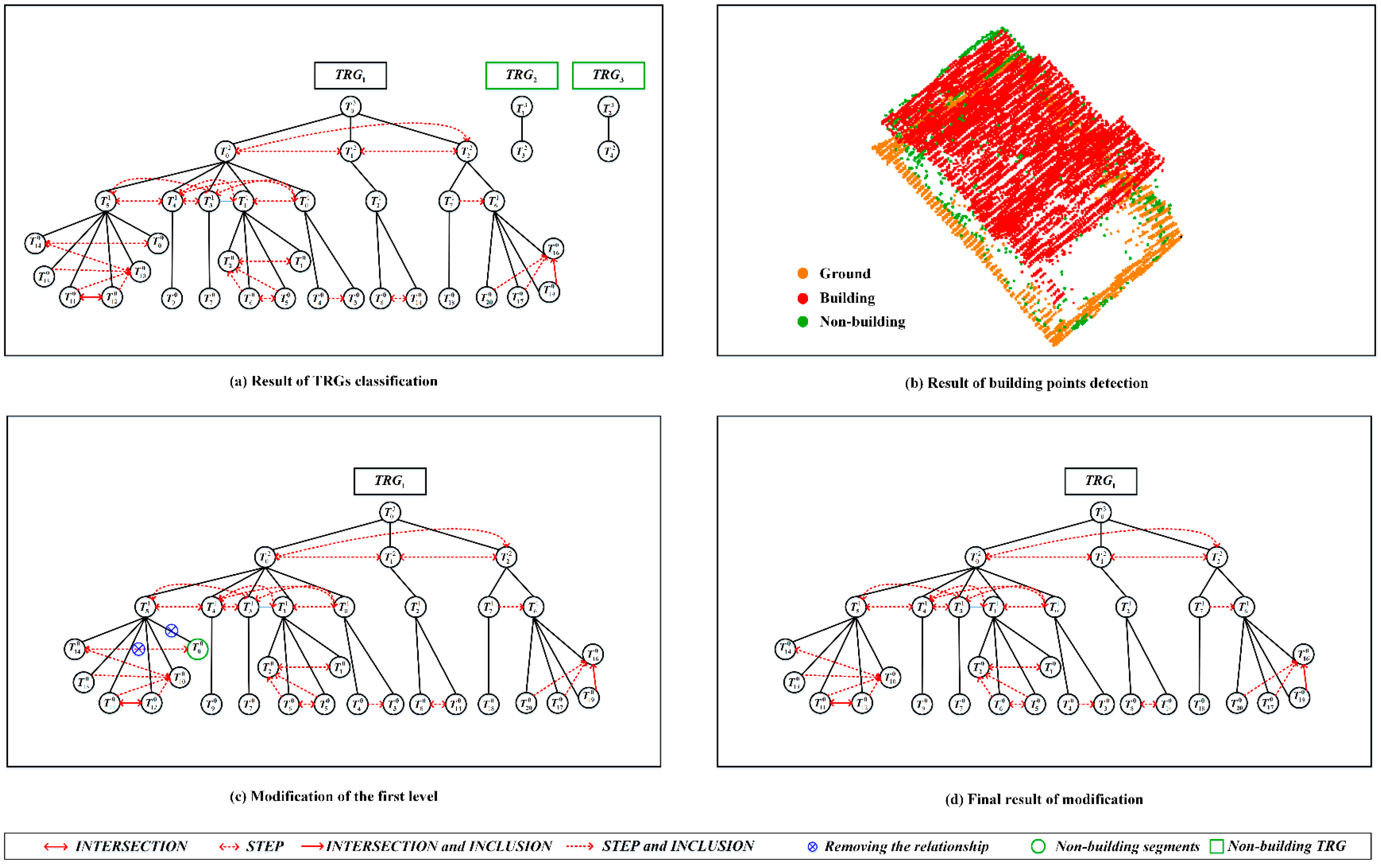

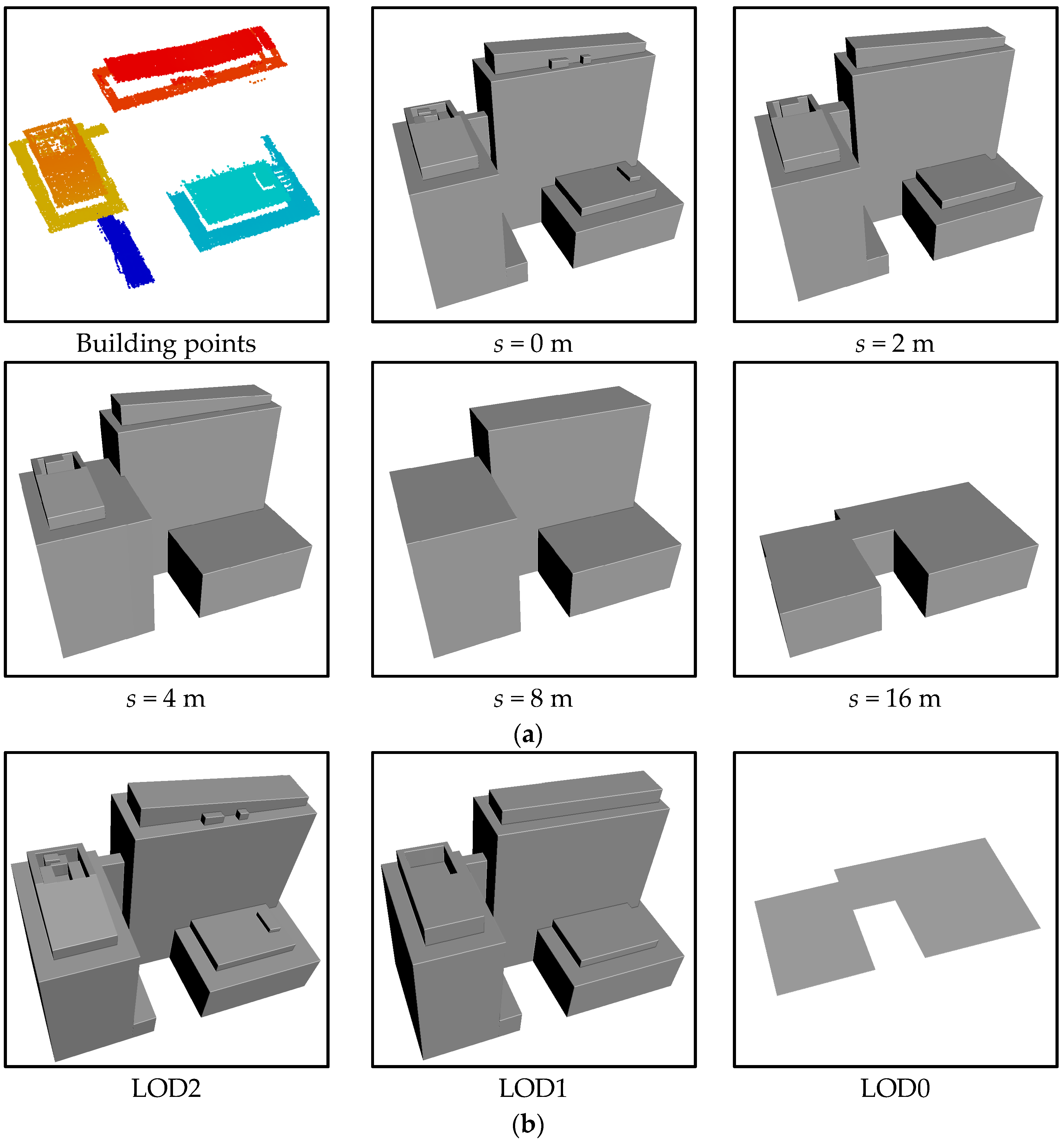

3.2. Building Point Detection

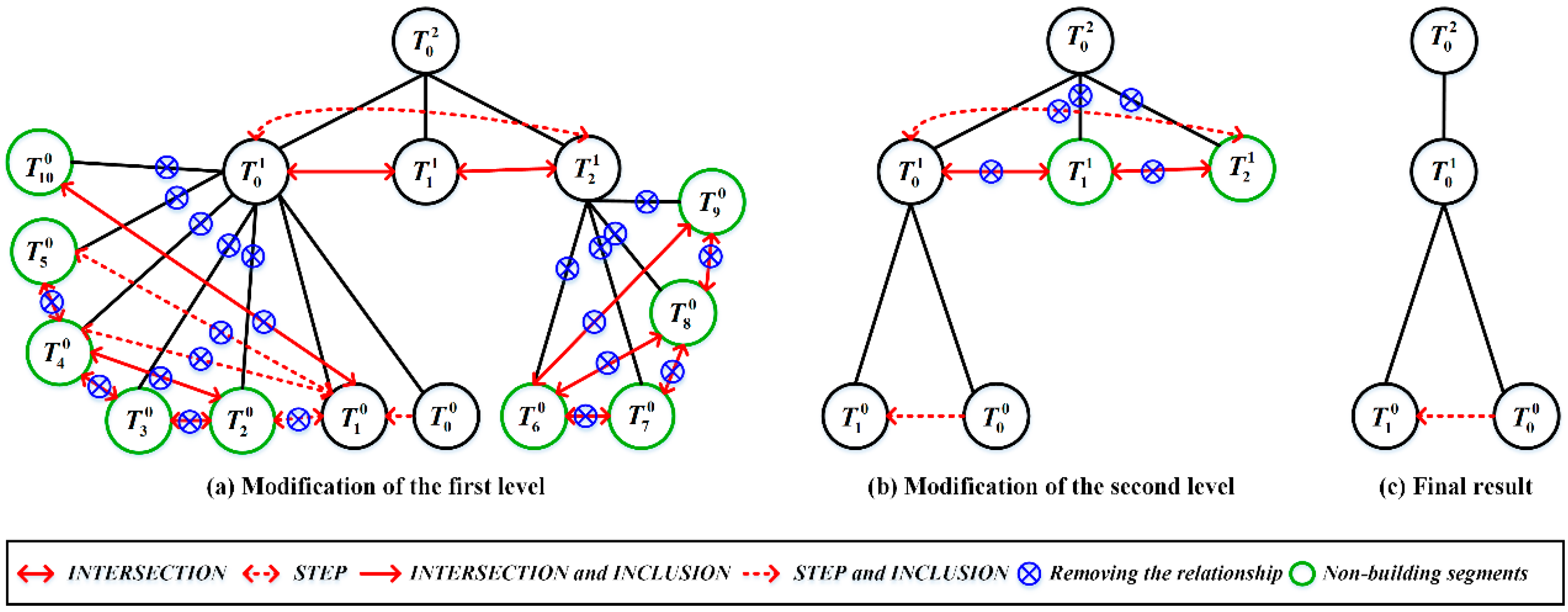

3.2.1. Classification of TRGs

3.2.2. Extraction of the Final Building Points from Each Building TRG

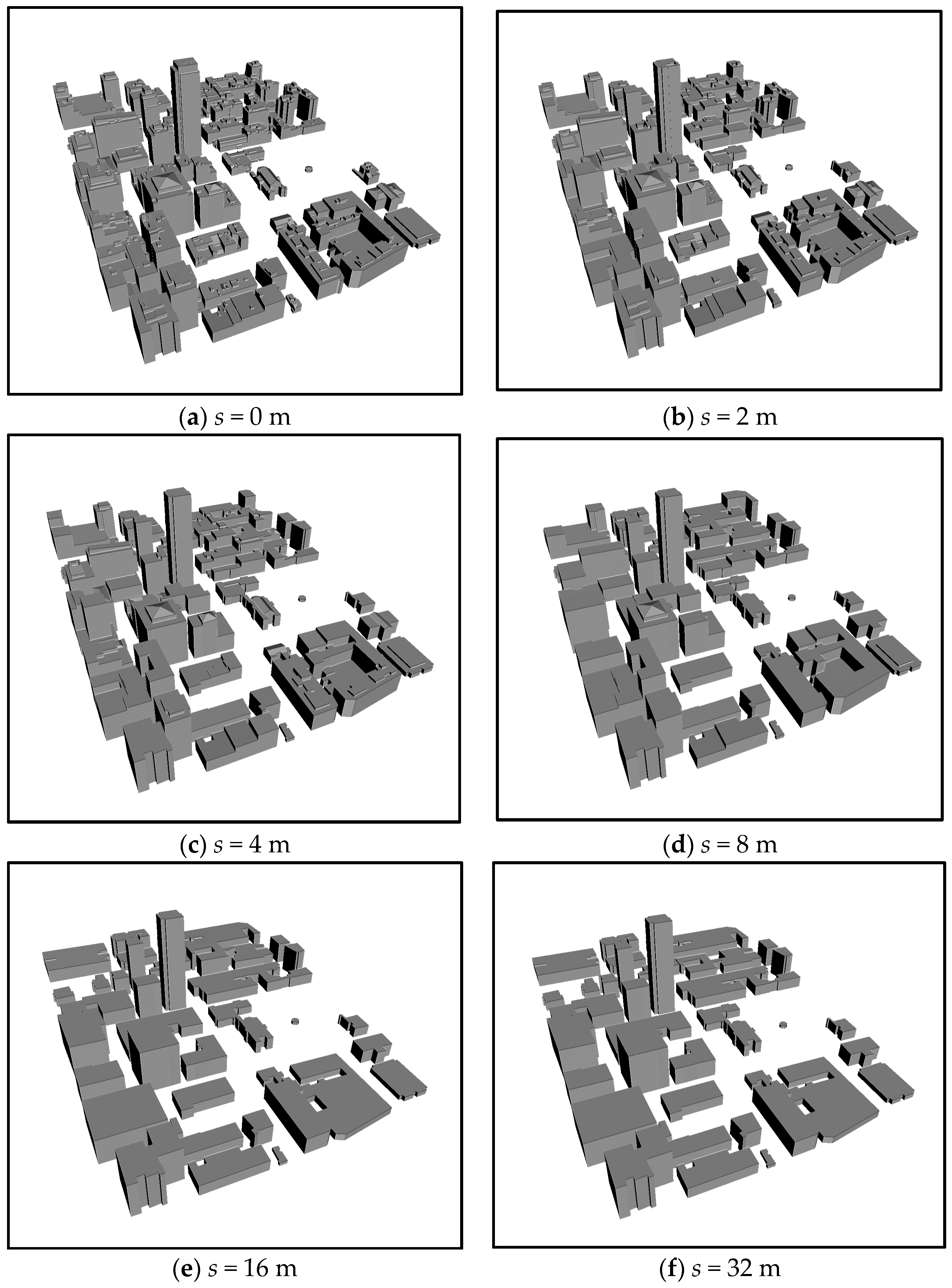

3.3. Building LoD Generation

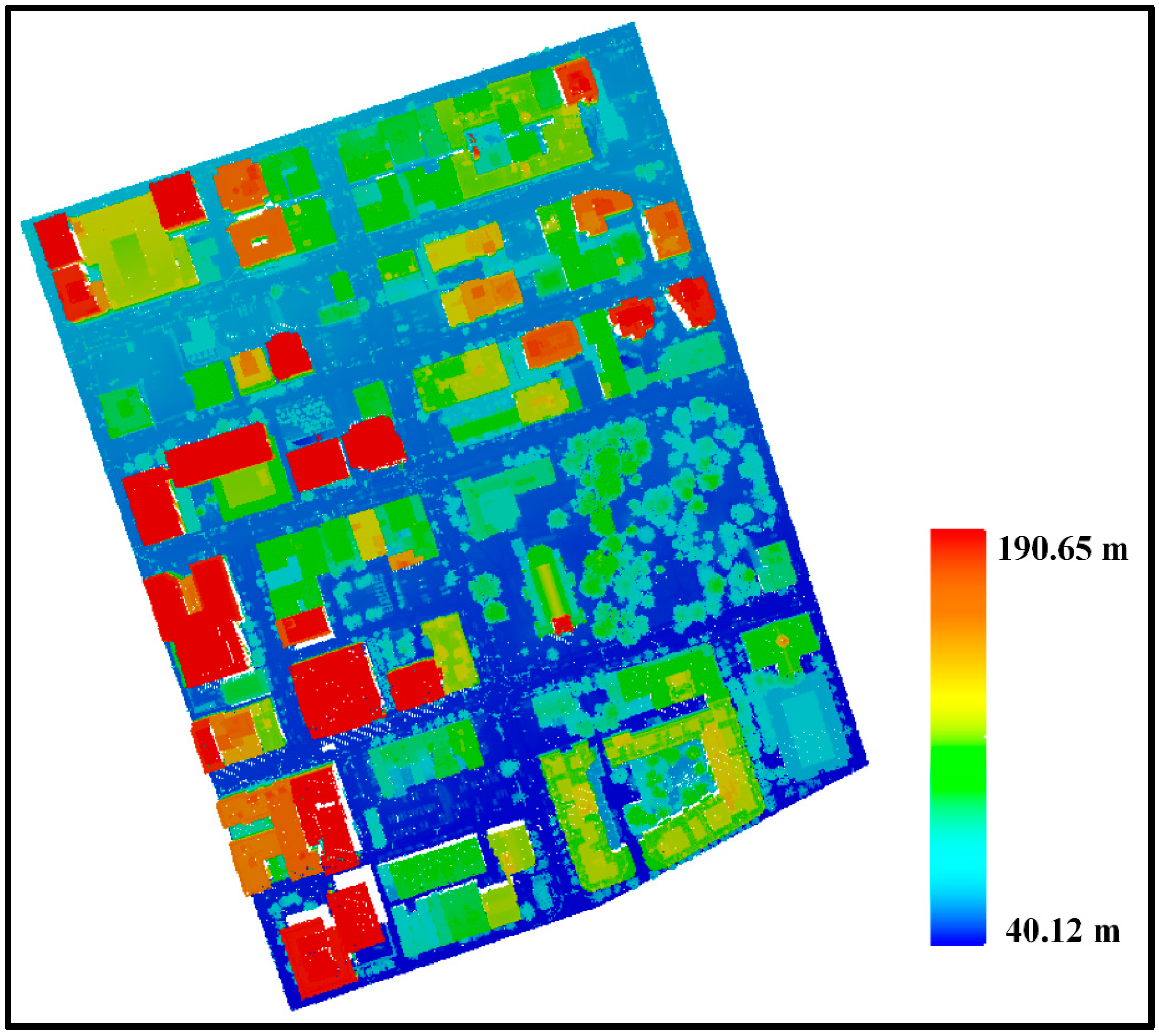

4. Experimental Results and Analysis

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Gröger, G.; Kolbe, T.H.; Nagel, C.; Häfele, K.H. OGC City Geography Markup Language (CityGML) Encoding Standard; Open Geospatial Consortium: Wayland, MA, USA, 2012.

2. Jochem, A.; Höfle, B.; Rutzinger, M.; Pfeifer, N. Automatic roof plane detection and analysis in airborne lidar point clouds for solar potential assessment. Sensors 2009, 9, 5241–5262.

3. Biljecki, F.; Ledoux, H.; Stoter, J.; Zhao, J. Formalisation of the level of detail in 3D city modelling. Comput. Environ. Urban Syst. 2014, 48, 1–15.

4. Fan, H.; Meng, L. A three-step approach of simplifying 3D buildings modeled by CityGML. Int. J. Geogr. Inf. Sci. 2012, 26, 1091–1107.

5. Forberg, A. Generalization of 3D building data based on a scale-space approach. ISPRS J. Photogramm. Remote Sens. 2007, 62, 104–111.

6. Mao, B.; Ban, Y.; Harrie, L. A multiple representation data structure for dynamic visualisation of generalised 3D city models. ISPRS J. Photogramm. Remote Sens. 2011, 66, 198–208.

7. Thiemann, F.; Sester, M. Segmentation of buildings for 3D-generalisation. In Proceedings of the ICA Workshop on Generalisation and Multiple Representation, Leicester, UK, 20–21 August 2004.

8. Kada, M. 3D building generalization based on half-space modeling. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2006, 36, 58–64.

9. Sester, M. Generalization based on least squares adjustment. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2000, 33, 931–938.

10. Biljecki, F.; Ledoux, H.; Stoter, J. An improved LOD specification for 3D building models. Comput. Environ. Urban Syst. 2016, 59, 25–37.

11. Verdie, Y.; Lafarge, F.; Alliez, P. LOD Generation for urban scenes. ACM Trans. Graph. 2015, 34, 1–14.

12. Shan, J.; Toth, C.K. Topographic Laser Ranging and Scanning: Principles and Processing; CRC Press: London, UK, 2008; Volume 15, pp. 423–446.

13. Rottensteiner, F.; Sohn, G.; Gerke, M.; Wegner, J.D.; Breitkopf, U.; Jung, J. Results of the ISPRS benchmark on urban object detection and 3D building reconstruction. ISPRS J. Photogramm. Remote Sens. 2014, 93, 256–271.

14. Tomljenovic, I.; Höfle, B.; Tiede, D.; Blaschke, T. Building extraction from airborne laser scanning data: An analysis of the state of the art. Remote Sens. 2015, 7, 3826–3862.

15. Mongus, D.; Lukač, N.; Žalik, B. Ground and building extraction from LiDAR data based on differential morphological profiles and locally fitted surfaces. ISPRS J. Photogramm. Remote Sens. 2014, 93, 145–156.

16. Jochem, A.; Höfle, B.; Wichmann, V.; Rutzinger, M.; Zipf, A. Area-wide roof plane segmentation in airborne LiDAR point clouds. Comput. Environ. Urban Syst. 2012, 36, 54–64.

17. Zhao, Z.; Duan, Y.; Zhang, Y.; Cao, R. Extracting buildings from and regularizing boundaries in airborne lidar data using connected operators. Int. J. Remote Sens. 2016, 37, 889–912.

18. Xu, S.; Vosselman, G.; Oude Elberink, S. Multiple-entity based classification of airborne laser scanning data in urban areas. ISPRS J. Photogramm. Remote Sens. 2014, 88, 1–15.

19. Zhang, J.; Lin, X.; Ning, X. SVM-based classification of segmented airborne LiDAR point clouds in urban areas. Remote Sens. 2013, 5, 3749–3775.

20. Chehata, N.; Guo, L.; Mallet, C. Airborne lidar feature selection for urban classification using random forests. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2009, 39, 207–212.

21. Niemeyer, J.; Rottensteiner, F.; Soergel, U. Contextual classification of lidar data and building object detection in urban areas. ISPRS J. Photogramm. Remote Sens. 2014, 87, 152–165.

22. Guo, B.; Huang, X.; Zhang, F.; Sohn, G. Classification of airborne laser scanning data using JointBoost. ISPRS J. Photogramm. Remote Sens. 2015, 100, 71–83.

23. Gu, Y.; Wang, Q.; Xie, B. Multiple kernel sparse representation for airborne LiDAR data classification. IEEE Trans. Geosci. Remote Sens. 2016, 1–21.

24. Awrangjeb, M.; Fraser, C. Automatic segmentation of raw LIDAR data for extraction of building roofs. Remote Sens. 2014, 6, 3716–3751.

25. Richter, R.; Behrens, M.; Döllner, J. Object class segmentation of massive 3D point clouds of urban areas using point cloud topology. Int. J. Remote Sens. 2013, 34, 8408–8424.

26. Sánchez-Lopera, J.; Lerma, J.L. Classification of lidar bare-earth points, buildings, vegetation, and small objects based on region growing and angular classifier. Int. J. Remote Sens. 2014, 35, 6955–6972.

27. Yan, J.; Zhang, K.; Zhang, C.; Chen, S.-C.; Narasimhan, G. Automatic construction of 3-D building model from Airborne LIDAR data through 2-D snake algorithm. IEEE Trans. Geosci. Remote Sens. 2015, 53, 3–14.

28. Ullman, S.; Vidal-Naquet, M.; Sali, E. Visual features of intermediate complexity and their use in classification. Nat. Neurosci. 2002, 5, 682–687.

29. Goutsias, J.; Vincent, L.; Bloomberg, D.S. Mathematical Morphology and Its Applications to Image and Signal Processing; Computational Imaging and Vision; Kluwer: Dordrecht, The Netherlands, 2000.

30. Jung, C.R.; Scharcanski, J. Adaptive image denoising and edge enhancement in scale-space using the wavelet transform. Pattern Recognit. Lett. 2003, 24, 965–971.

31. Lopez-Molina, C.; De Baets, B.; Bustince, H.; Sanz, J.; Barrenechea, E. Multiscale edge detection based on Gaussian smoothing and edge tracking. Knowl. Based Syst. 2013, 44, 101–111.

32. Vincent, L. Morphological grayscale reconstruction in image analysis: Applications and efficient algorithms. IEEE Trans. Image Process. 1993, 2, 176–201.

33. Vu, T.T.; Yamazaki, F.; Matsuoka, M. Multi-scale solution for building extraction from LiDAR and image data. Int. J. Appl. Earth Obs. Geoinf. 2009, 11, 281–289.

34. Yang, B.; Xu, W.; Dong, Z. Automated extraction of building outlines from airborne laser scanning point clouds. IEEE Geosci. Remote Sens. Lett. 2013, 10, 1399–1403.

35. Cui, Z.; Zhang, K.; Zhang, C.; Chen, S.C. A multi-pass generation of DEM for urban planning. In Proceedings of the International Conference on Cloud Computing and Big Data (CloudCom-Asia), Fuzhou, China, 16–19 December 2013.

36. Yang, B.; Huang, R.; Dong, Z.; Zang, Y.; Li, J. Two-step adaptive extraction method for ground points and breaklines from lidar point clouds. ISPRS J. Photogramm. Remote Sens. 2016, 119, 373–389.

37. Perera, G.S.; Maas, H.G. Cycle graph analysis for 3D roof structure modelling: Concepts and performance. ISPRS J. Photogramm. Remote Sens. 2014, 93, 213–226.

38. Rutzinger, M.; Rottensteiner, F.; Pfeifer, N. A comparison of evaluation techniques for building extraction from airborne laser scanning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2009, 2, 11–20.

39. ISPRS Benchmark Test Results: WHU_YD. Available online: http://www2.isprs.org/commissions/comm3/wg4/results.html (accessed on 10 October 2016).

40. Bulatov, D.; Rottensteiner, F.; Schulz, K. Context-based urban terrain reconstruction from images and videos. In Proceedings of the XXII ISPRS Congress of the International Society for Photogrammetry and Remote Sensing ISPRS Annals, Melbourne, Australia, 25 August–1 September 2012.

41. Wei, Y.; Yao, W.; Wu, J.; Schmitt, M.; Stilla, U. Adaboost-based feature relevance assessment in fusing lidar and image data for classification of trees and vehicles in urban scenes. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, 1, 323–328.

42. Gerke, M.; Xiao, J. Fusion of airborne laserscanning point clouds and images for supervised and unsupervised scene classification. ISPRS J. Photogramm. Remote Sens. 2014, 87, 78–92.

43. Awrangjeb, M.; Lu, G.; Fraser, C. Automatic building extraction from LiDAR data covering complex urban scenes. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, 40, 25–32.

44. Tomljenovic, I.; Blaschke, T.; Höfle, B.; Tiede, D. Potential and idiosyncrasy of object-based image analysis for airborne Lidar-based building detection. South-East. Eur. J. Earth Obs. Geomat. 2014, 3, 517–520.

| Features | Descriptions | Characteristics |
|---|---|---|
| Area of the TRG | The area of the TRG | Buildings and large trees have large areas; small objects (e.g., vehicles, low vegetation, and street furniture) have small areas |
| Width of the TRG | The width of the TRG | Buildings and large trees are wide; small objects (e.g., vehicles, low vegetation, and street furniture) are narrow |
| Area ratio of the segments | The ratio between the minimum and the maximum area of segments across scales; it reflects how the object is segmented at different scales | Large for buildings; may be small for trees |
| Area ratio of ground points | The ratio between the area of ground points within the object's region and the area of the entire object; it reflects how far laser pulses penetrate different objects | Generally close to zero for buildings; may be higher for vegetation |
| Ratio of segmented points | The ratio between the number of segmented points at the minimum scale and at the maximum scale; it reflects how surface characteristics change across scales and how far laser pulses penetrate different objects | Large for buildings; small for vegetation |
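
The features above suggest how a TRG can be turned into a building/non-building decision. The sketch below is a minimal illustration under several assumptions: the TRG is reduced to per-level lists of planar segments plus cross-level edges; the "area ratio of the segments" is read as the smallest per-level segmented area divided by the largest; and the three ratio features are combined with a simple AND rule using the 0.5 thresholds from the parameter table. The class and function names and the toy numbers are hypothetical, and the paper's actual decision procedure may differ. Area and width are left out because the parameter table assigns their thresholds to candidate region extraction.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Segment:
    area: float      # planar area of the segment (m^2)
    num_points: int  # number of points assigned to the segment


@dataclass
class TRG:
    # levels[k] holds the planar segments found at scale level k (0 = finest).
    levels: List[List[Segment]]
    # edges (k, i, j): segment i at level k is related to segment j at level k + 1.
    # Kept to show the graph structure; the features below do not use it.
    edges: List[Tuple[int, int, int]]
    footprint_area: float  # area of the whole object (m^2)
    ground_area: float     # area covered by ground points inside the footprint (m^2)


def segment_area_ratio(trg: TRG) -> float:
    """Minimum over maximum of the per-level segmented area (assumed reading)."""
    per_level = [sum(s.area for s in level) for level in trg.levels]
    largest = max(per_level)
    return min(per_level) / largest if largest > 0 else 0.0


def ground_area_ratio(trg: TRG) -> float:
    """Share of the footprint covered by ground points (pulses reaching the ground)."""
    return trg.ground_area / trg.footprint_area if trg.footprint_area > 0 else 1.0


def segmented_point_ratio(trg: TRG) -> float:
    """Segmented points at the finest level over those at the coarsest level."""
    finest = sum(s.num_points for s in trg.levels[0])
    coarsest = sum(s.num_points for s in trg.levels[-1])
    return finest / coarsest if coarsest > 0 else 0.0


def is_building(trg: TRG, t_seg: float = 0.5, t_ground: float = 0.5, t_pts: float = 0.5) -> bool:
    """Hypothetical rule: all three ratios must point towards 'building'."""
    return (
        segment_area_ratio(trg) > t_seg
        and ground_area_ratio(trg) < t_ground
        and segmented_point_ratio(trg) > t_pts
    )


# Invented toy values: a flat roof seen at three scales, and a tree crown.
roof = TRG(
    levels=[[Segment(120.0, 900)], [Segment(125.0, 940)], [Segment(128.0, 960)]],
    edges=[(0, 0, 0), (1, 0, 0)],
    footprint_area=130.0,
    ground_area=2.0,
)
crown = TRG(
    levels=[[Segment(5.0, 30)], [Segment(20.0, 150)], [Segment(60.0, 400)]],
    edges=[(0, 0, 0), (1, 0, 0)],
    footprint_area=80.0,
    ground_area=50.0,
)
print(is_building(roof), is_building(crown))  # True False
```

The roof keeps nearly the same segmented area and point count at every scale and blocks the pulses, so all three ratios favour "building"; the crown's planar segments grow with scale and much of its footprint returns ground points, so it fails the rule.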

| Scale Values | Multi-Scale Roof Data | Plane Segmentation Results | Building LoDs |
|---|---|---|---|
| 0 m | | | |
| 2 m | | | |

| Scale Values | Multi-Scale Roof Data | Plane Segmentation Results | The Final TRG | Building LoDs |
|---|---|---|---|---|
| 0 m | | | | |
| 2 m | | | | |
| 4 m | | | | |

| Unit | Value | Description | Step |
|---|---|---|---|
| m² | 50 | Area threshold | Building candidate region extraction |
| m | 5 | Width threshold | |
| m | 1.5 | Threshold on the elevation difference between the boundary points of a building and the DEM | |
| | 10 | Used to remove very small segments during plane segmentation | Generation of the scale space |
| ° | 10 | Slope threshold | |
| m | 0.2 | Elevation-difference threshold for deciding whether a segment is inclined or horizontal after morphological reconstruction | |
| | 0.5 | Threshold on the area ratio of the segments across levels of a TRG | Building point detection |
| | 0.5 | Threshold on the area ratio of ground points within a TRG | |
| | 0.5 | Threshold on the ratio of segmented points across levels of a TRG | |
| m² | 5 | Area threshold for detecting small segments near buildings | |
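
The first three thresholds above (50 m², 5 m, 1.5 m) belong to building candidate region extraction. One plausible way to apply them is sketched below, assuming each region is given as a set of raster cells with DSM and DEM heights per cell. The width measure (shorter side of the axis-aligned bounding box), the 4-connected boundary, the median boundary rise, and all function names are illustrative assumptions rather than the paper's definitions.

```python
from statistics import median
from typing import Dict, Set, Tuple

Cell = Tuple[int, int]  # (row, col) of a raster cell


def region_area(cells: Set[Cell], cell_size: float) -> float:
    return len(cells) * cell_size ** 2


def region_width(cells: Set[Cell], cell_size: float) -> float:
    """Crude width (assumption): the shorter side of the axis-aligned bounding box."""
    rows = [r for r, _ in cells]
    cols = [c for _, c in cells]
    extent_r = (max(rows) - min(rows) + 1) * cell_size
    extent_c = (max(cols) - min(cols) + 1) * cell_size
    return min(extent_r, extent_c)


def boundary_cells(cells: Set[Cell]) -> Set[Cell]:
    """Cells with at least one 4-neighbour outside the region."""
    return {
        (r, c)
        for r, c in cells
        if any(n not in cells for n in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)))
    }


def is_candidate(
    cells: Set[Cell],
    dsm: Dict[Cell, float],
    dem: Dict[Cell, float],
    cell_size: float = 1.0,
    min_area: float = 50.0,  # m^2
    min_width: float = 5.0,  # m
    min_rise: float = 1.5,   # m above the DEM along the boundary
) -> bool:
    if region_area(cells, cell_size) < min_area:
        return False
    if region_width(cells, cell_size) < min_width:
        return False
    rise = [dsm[cell] - dem[cell] for cell in boundary_cells(cells)]
    return median(rise) >= min_rise


block = {(r, c) for r in range(10) for c in range(10)}   # 10 m x 10 m
strip = {(r, c) for r in range(2) for c in range(40)}    # 2 m x 40 m
flat = {cell: 0.0 for cell in block | strip}
high = {cell: 6.0 for cell in block | strip}
low = {cell: 1.0 for cell in block}
print(is_candidate(block, high, flat), is_candidate(strip, high, flat), is_candidate(block, low, flat))
# True False False
```

The strip passes the area test (80 m²) but fails the width test, and the low block fails the elevation test, which mirrors how the area, width, and height thresholds act as successive filters.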

| Method | Per-area CP (%) | Per-area CR (%) | Per-area Q (%) | Per-object CP (%) | Per-object CR (%) | Per-object Q (%) | RMS (m) |
|---|---|---|---|---|---|---|---|
| The proposed method | 94.7 | 95.5 | 90.6 | 98.3 | 96.6 | 95.0 | 0.8 |
| WHUY2 [34] | 95.1 | 89.3 | 85.4 | 96.6 | 94.6 | 91.6 | 1.2 |
| TUM [41] | 85.1 | 80.0 | 70.1 | 86.2 | 92.3 | 80.4 | 1.6 |
| FIE [40] | 96.6 | 90.6 | 87.8 | 98.3 | 98.2 | 96.6 | 1.2 |
| ITCM [42] | 80.5 | 82.1 | 68.5 | 96.6 | 22.9 | 22.7 | 1.5 |
| MAR2 [15] | 93.7 | 94.9 | 89.2 | 98.3 | 94.9 | 93.4 | 2.8 |
| MON2 [43] | 95.1 | 91.1 | 87.0 | 100 | 83.6 | 83.6 | 1.1 |
| Z_GIS [44] | 93.0 | 94.5 | 88.2 | 96.6 | 96.5 | 93.3 | 1.0 |
| MIN | 80.5 | 80.0 | 68.5 | 86.2 | 22.9 | 22.7 | 0.8 |
| MAX | 96.6 | 95.5 | 90.6 | 100 | 98.2 | 96.6 | 2.8 |
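
The CP, CR, and Q columns follow the usual ISPRS benchmark evaluation (see [38]); reading CP as completeness and CR as correctness, quality combines the two. A minimal sketch of the standard definitions in terms of true positives (TP), false negatives (FN), and false positives (FP), plus the equivalent identity that recovers Q from CP and CR alone, is given below; the function names are hypothetical.

```python
def completeness(tp: float, fn: float) -> float:
    """Share of the reference that was detected: TP / (TP + FN)."""
    return tp / (tp + fn)


def correctness(tp: float, fp: float) -> float:
    """Share of the detections that are correct: TP / (TP + FP)."""
    return tp / (tp + fp)


def quality(tp: float, fn: float, fp: float) -> float:
    """Penalises both misses and false alarms: TP / (TP + FN + FP)."""
    return tp / (tp + fn + fp)


def quality_from_rates(cp: float, cr: float) -> float:
    """Same quality expressed through completeness and correctness:
    1/Q = 1/CP + 1/CR - 1."""
    return 1.0 / (1.0 / cp + 1.0 / cr - 1.0)


# Per-area CP and CR of the proposed method, taken from the table above.
q = quality_from_rates(0.947, 0.955)
print(f"{100 * q:.2f}")
```

This gives about 90.65, within 0.1 of the tabulated 90.6; the small gap comes from CP and CR being rounded to one decimal. The identity also explains the ITCM row: a per-object correctness of 22.9% caps the quality near that value regardless of the 96.6% completeness.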

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, B.; Huang, R.; Li, J.; Tian, M.; Dai, W.; Zhong, R. Automated Reconstruction of Building LoDs from Airborne LiDAR Point Clouds Using an Improved Morphological Scale Space. Remote Sens. 2017, 9, 14. https://doi.org/10.3390/rs9010014

Yang B, Huang R, Li J, Tian M, Dai W, Zhong R. Automated Reconstruction of Building LoDs from Airborne LiDAR Point Clouds Using an Improved Morphological Scale Space. Remote Sensing. 2017; 9(1):14. https://doi.org/10.3390/rs9010014

Chicago/Turabian Style: Yang, Bisheng, Ronggang Huang, Jianping Li, Mao Tian, Wenxia Dai, and Ruofei Zhong. 2017. "Automated Reconstruction of Building LoDs from Airborne LiDAR Point Clouds Using an Improved Morphological Scale Space." Remote Sensing 9, no. 1: 14. https://doi.org/10.3390/rs9010014