Image Fusion for Spatial Enhancement of Hyperspectral Image via Pixel Group Based Non-Local Sparse Representation

Abstract

1. Introduction

2. Related Works

2.1. Sparse Representation

2.2. Spectral Dictionary Learning

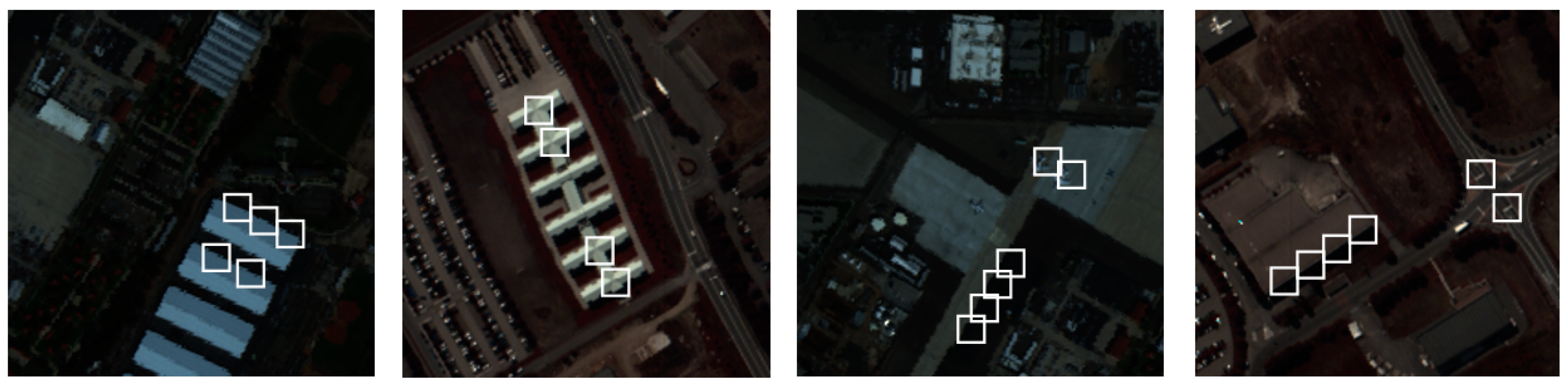

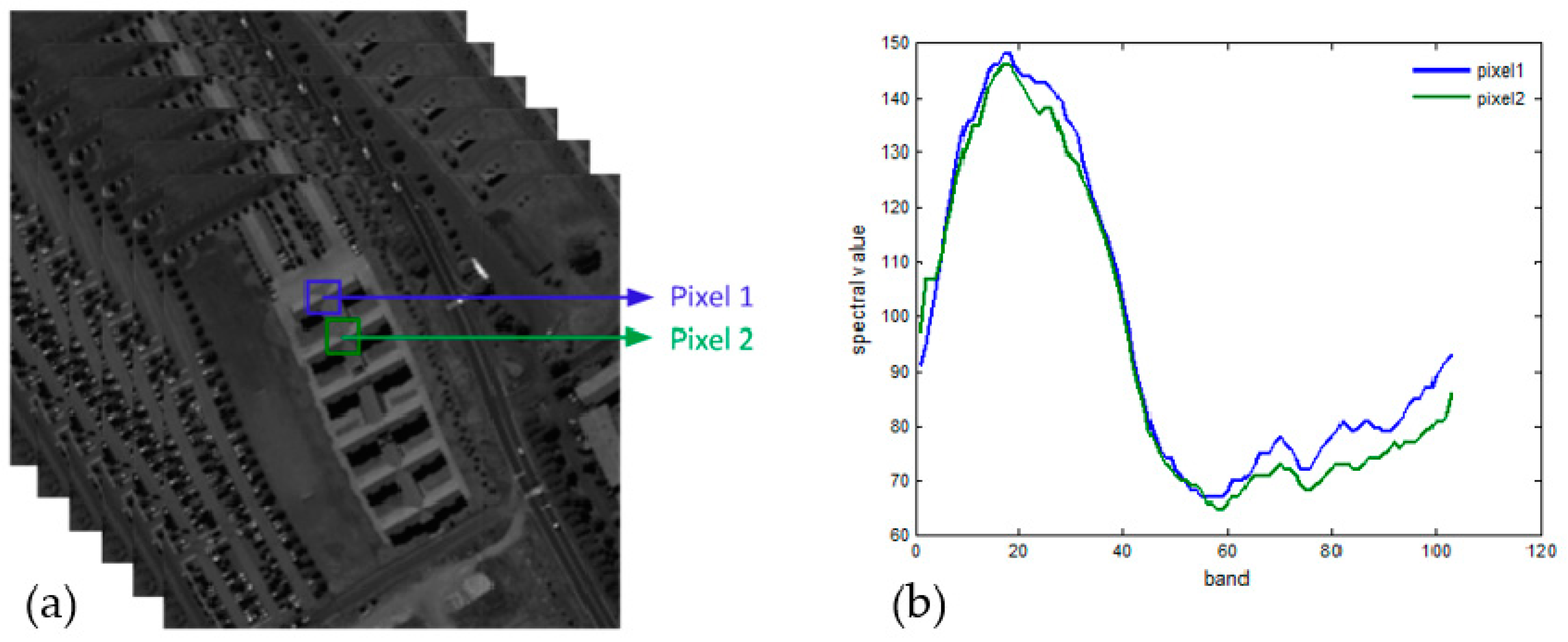

2.3. Non-Local Self-Similarity of Hyperspectral Images

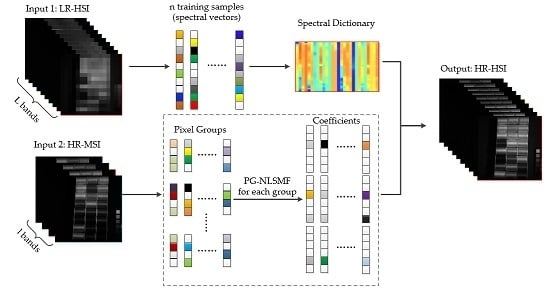

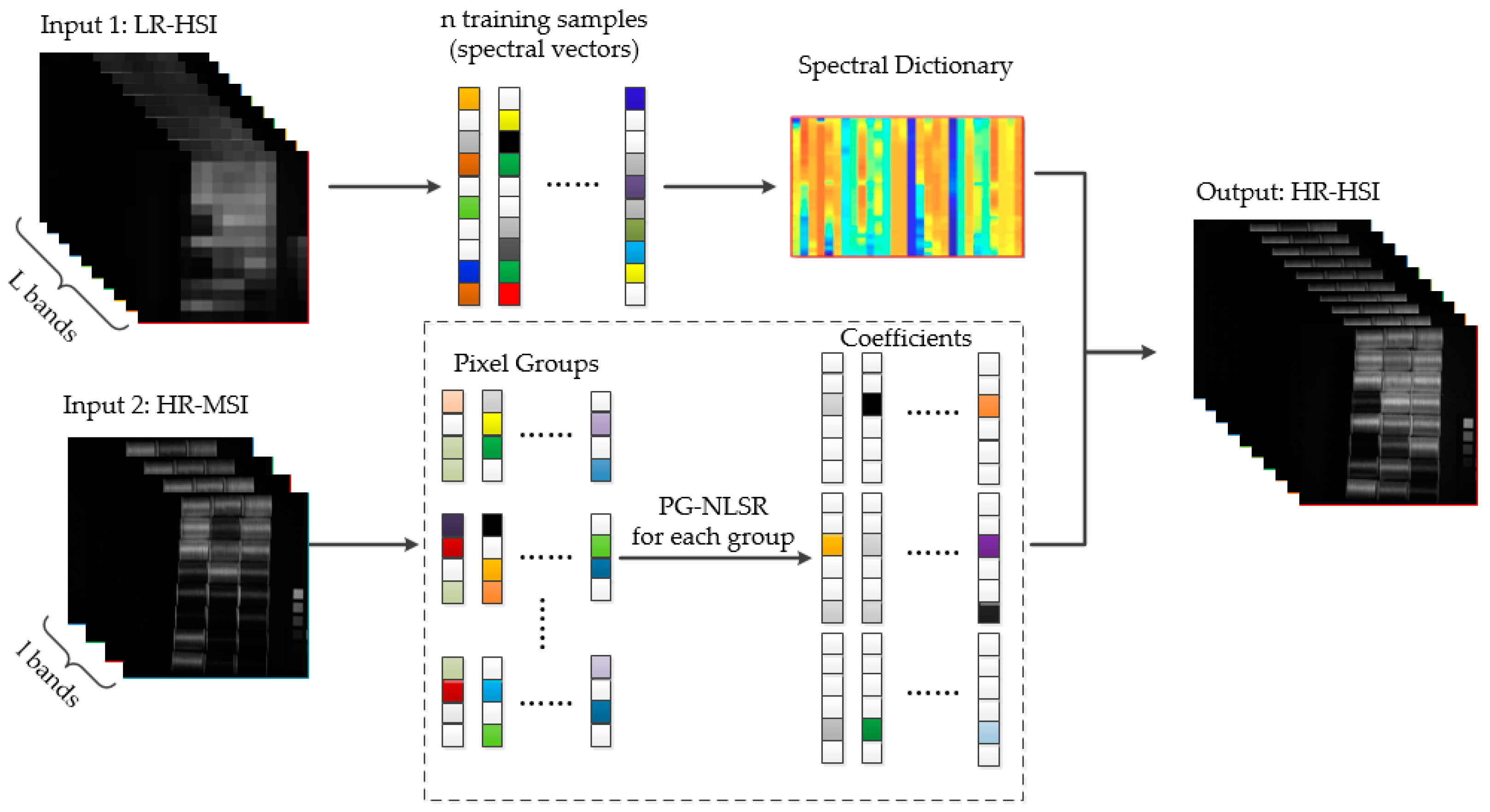

3. Proposed HSI Fusion Algorithm

3.1. Problem Formulation

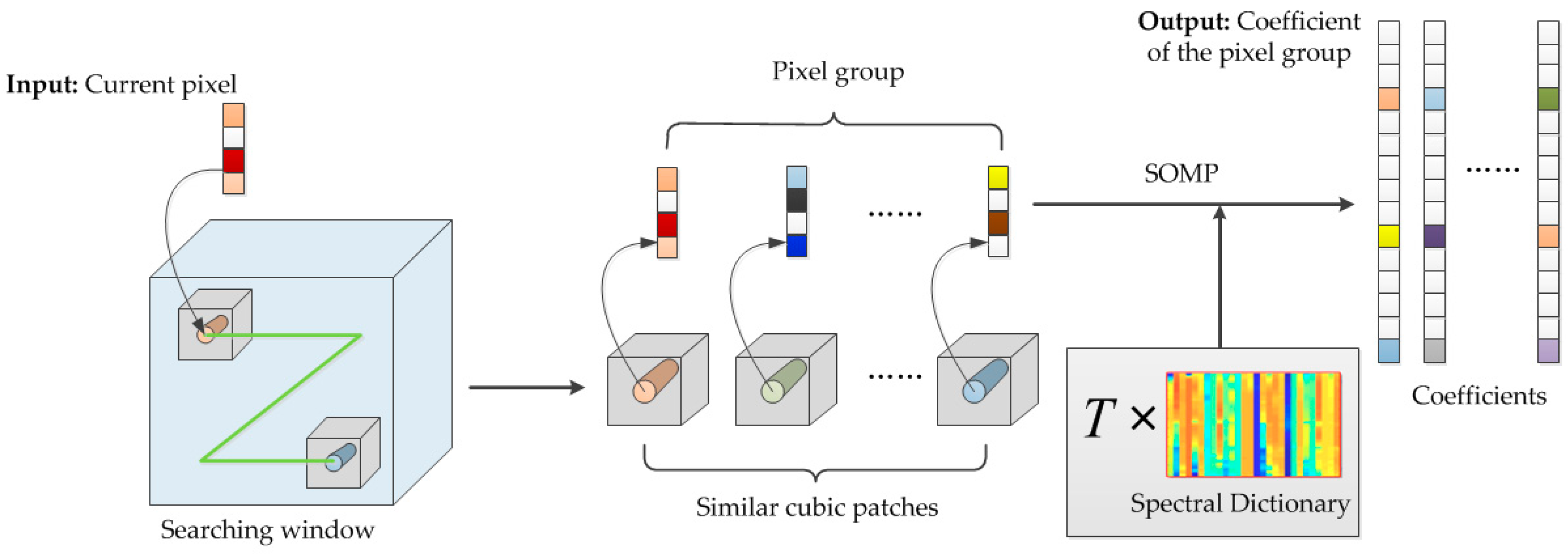

3.2. Pixel Group Based Non-Local Sparse Representation

3.3. Algorithm

4. Experimental Results and Discussion

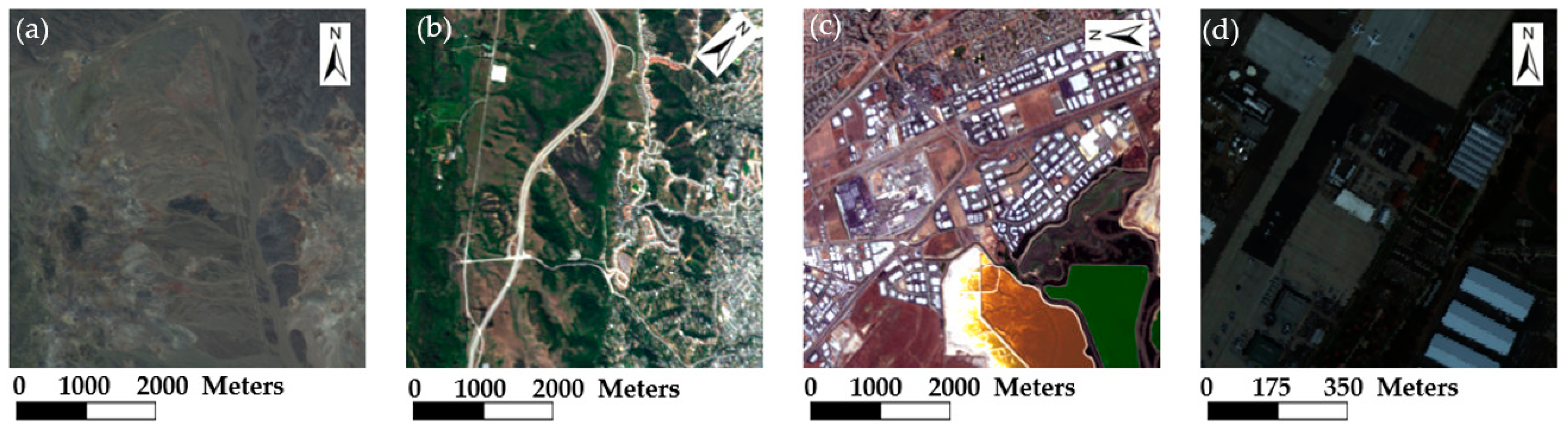

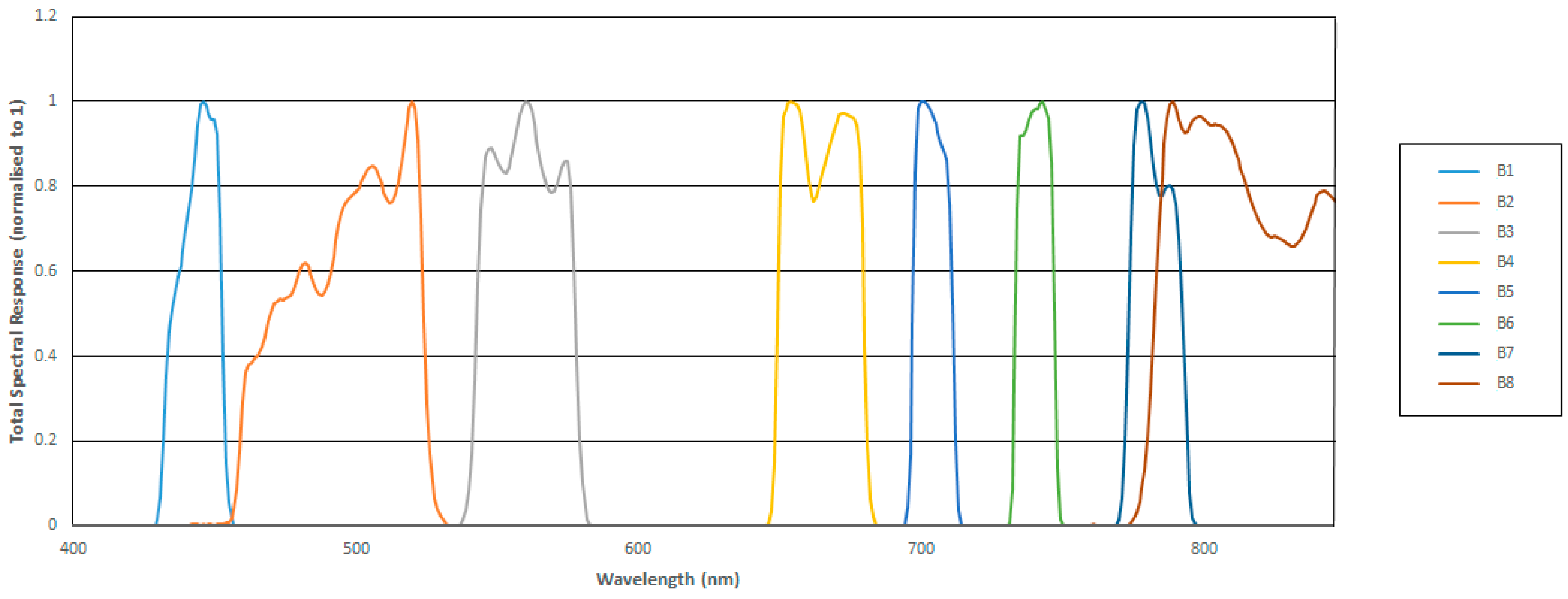

4.1. Experimental Setup

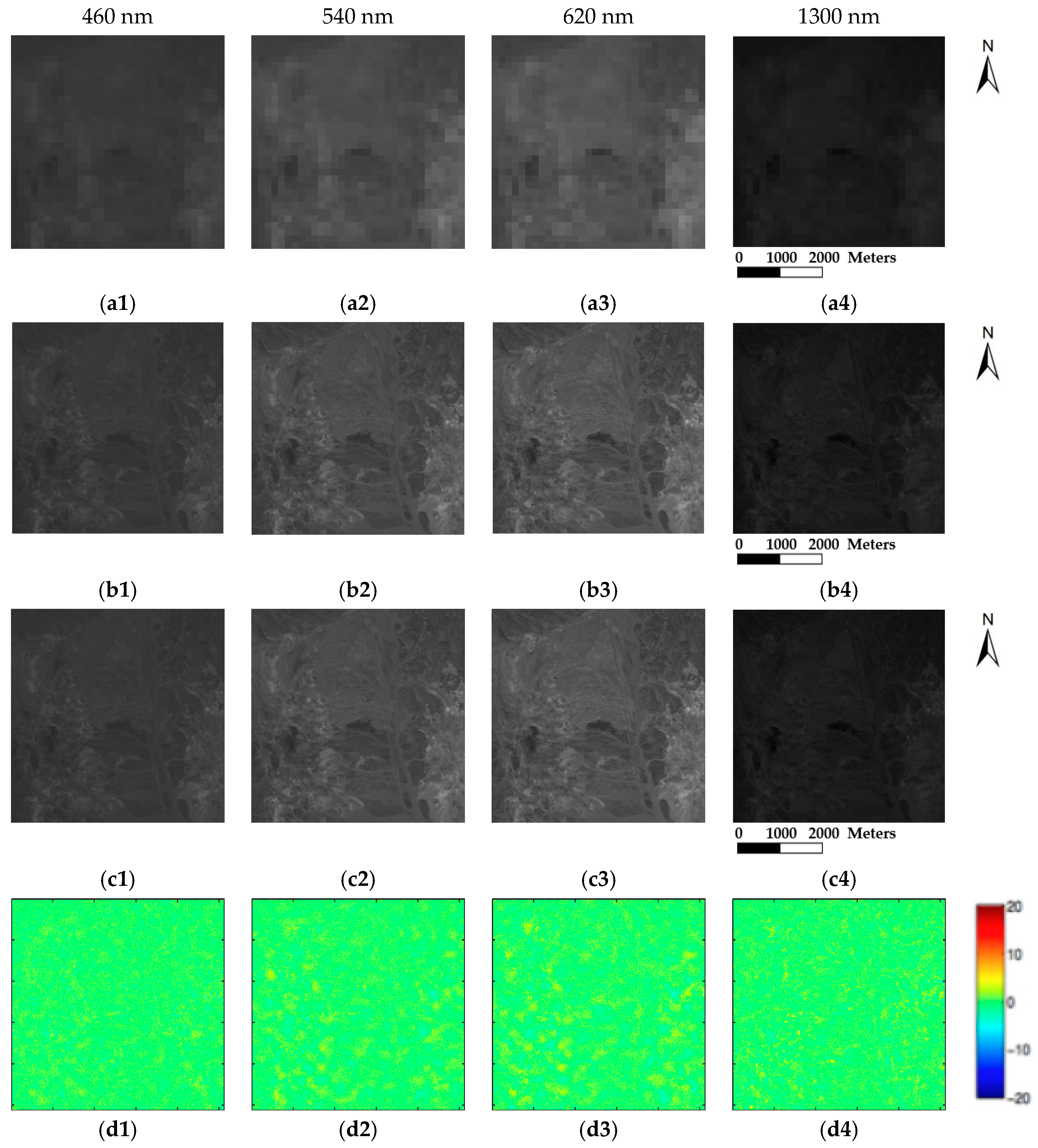

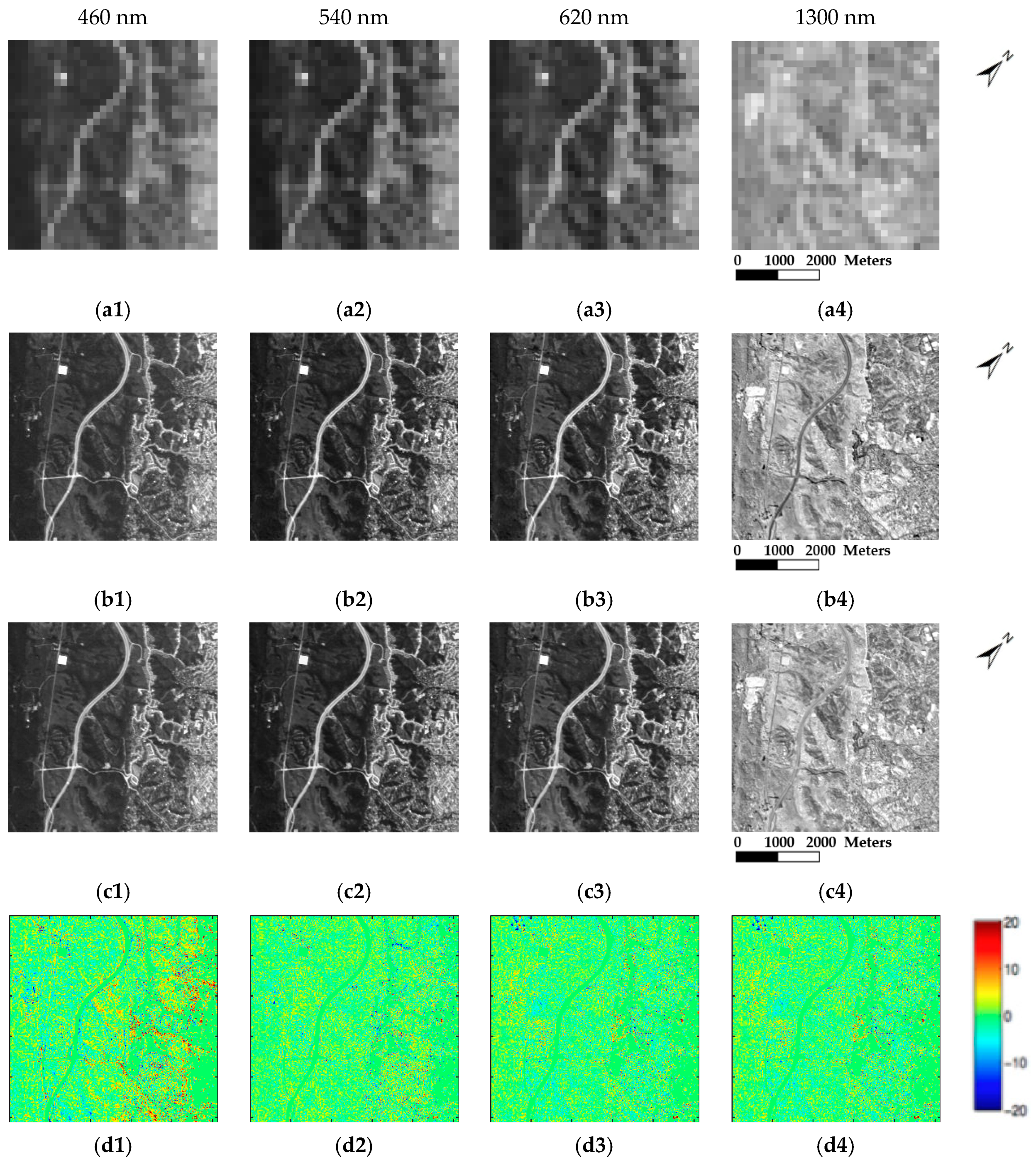

4.2. Performance Evaluation

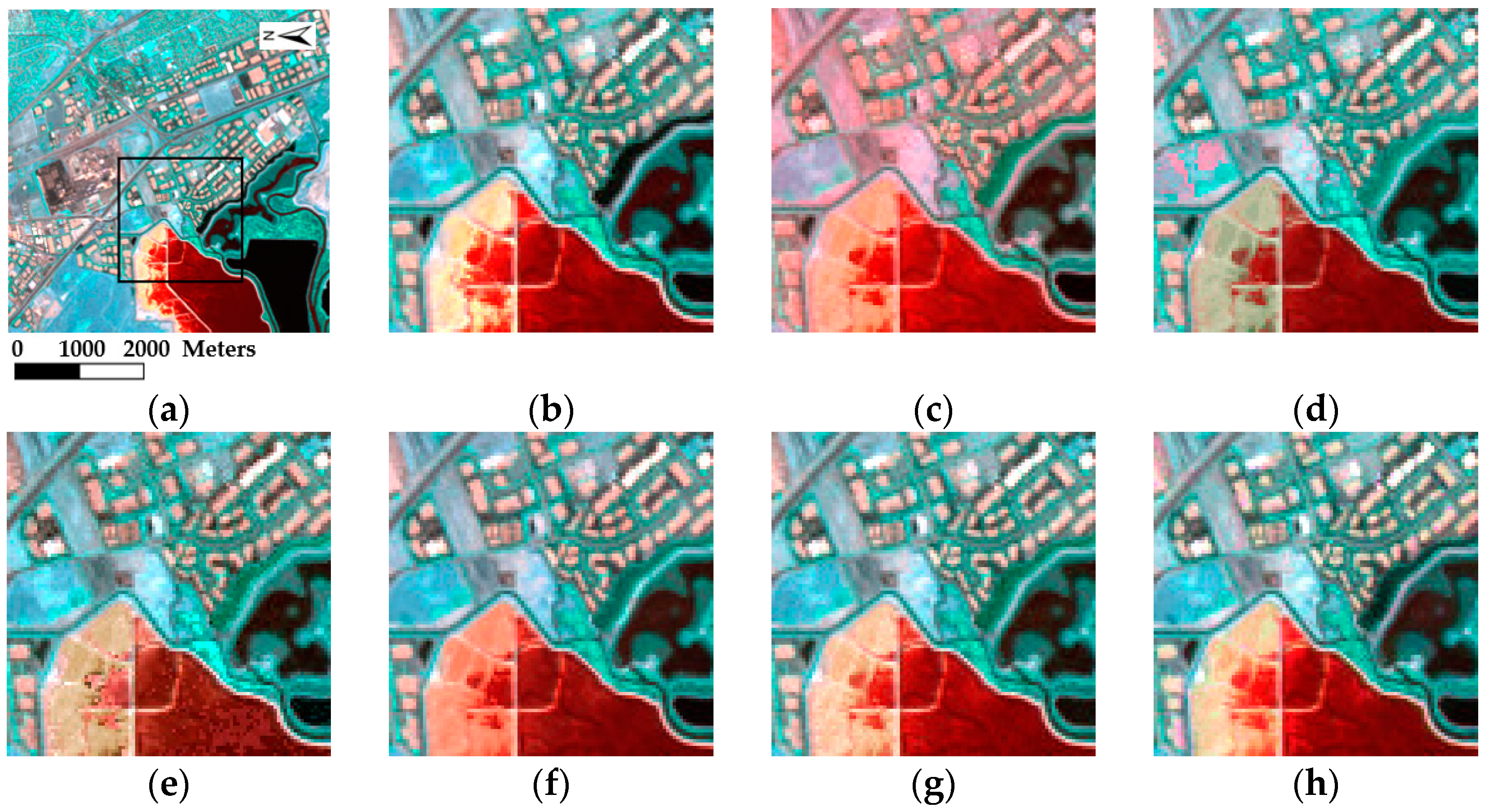

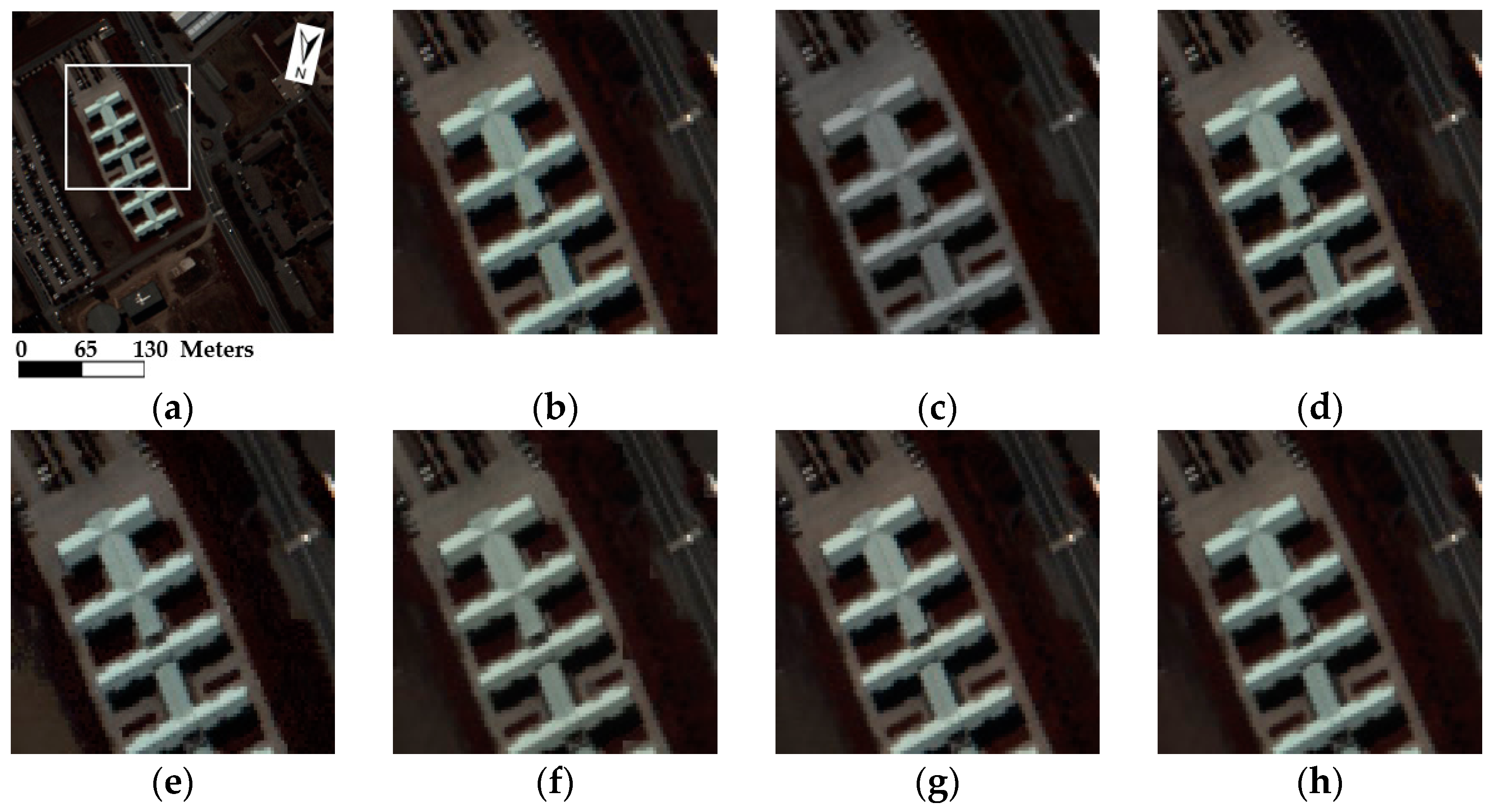

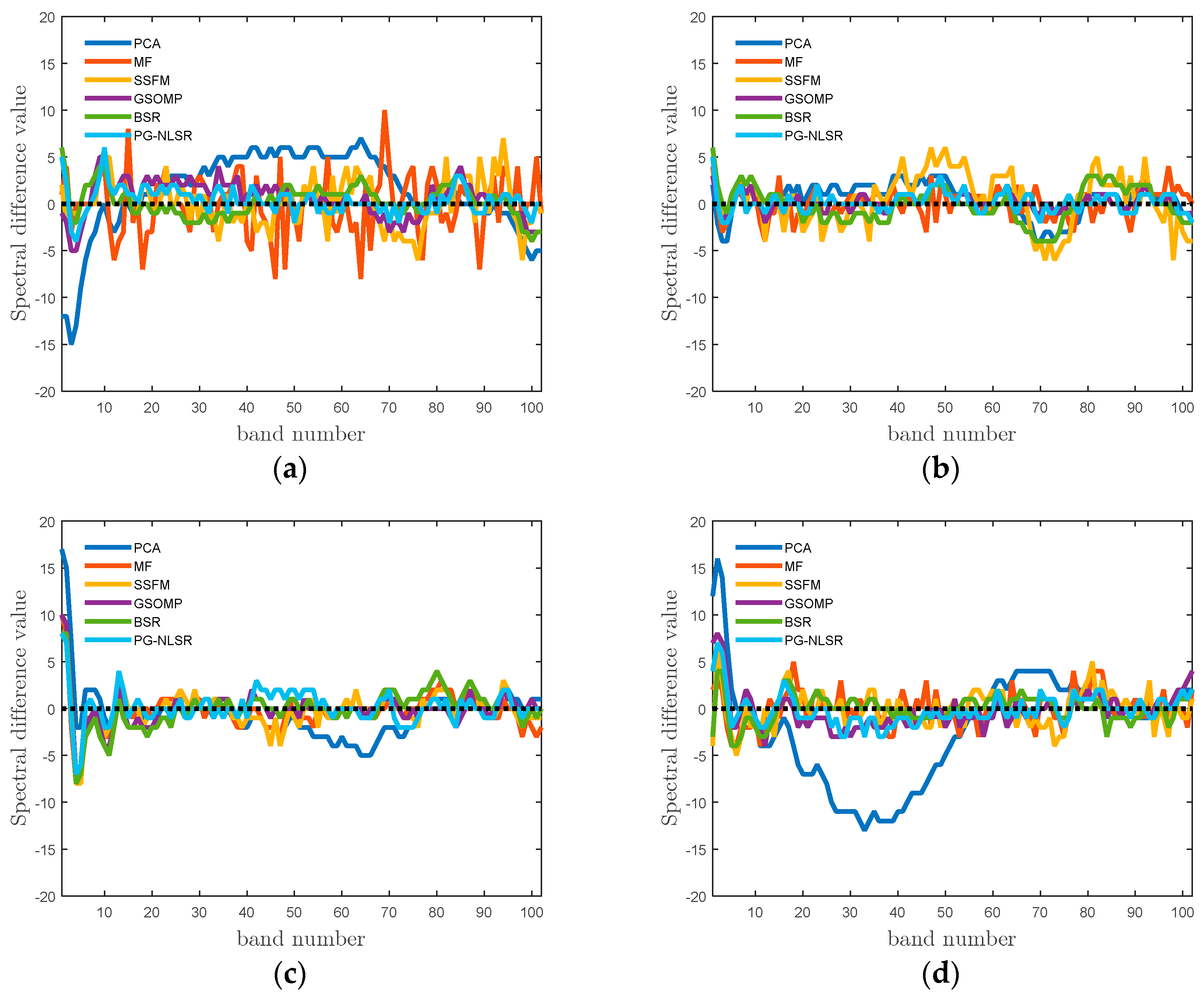

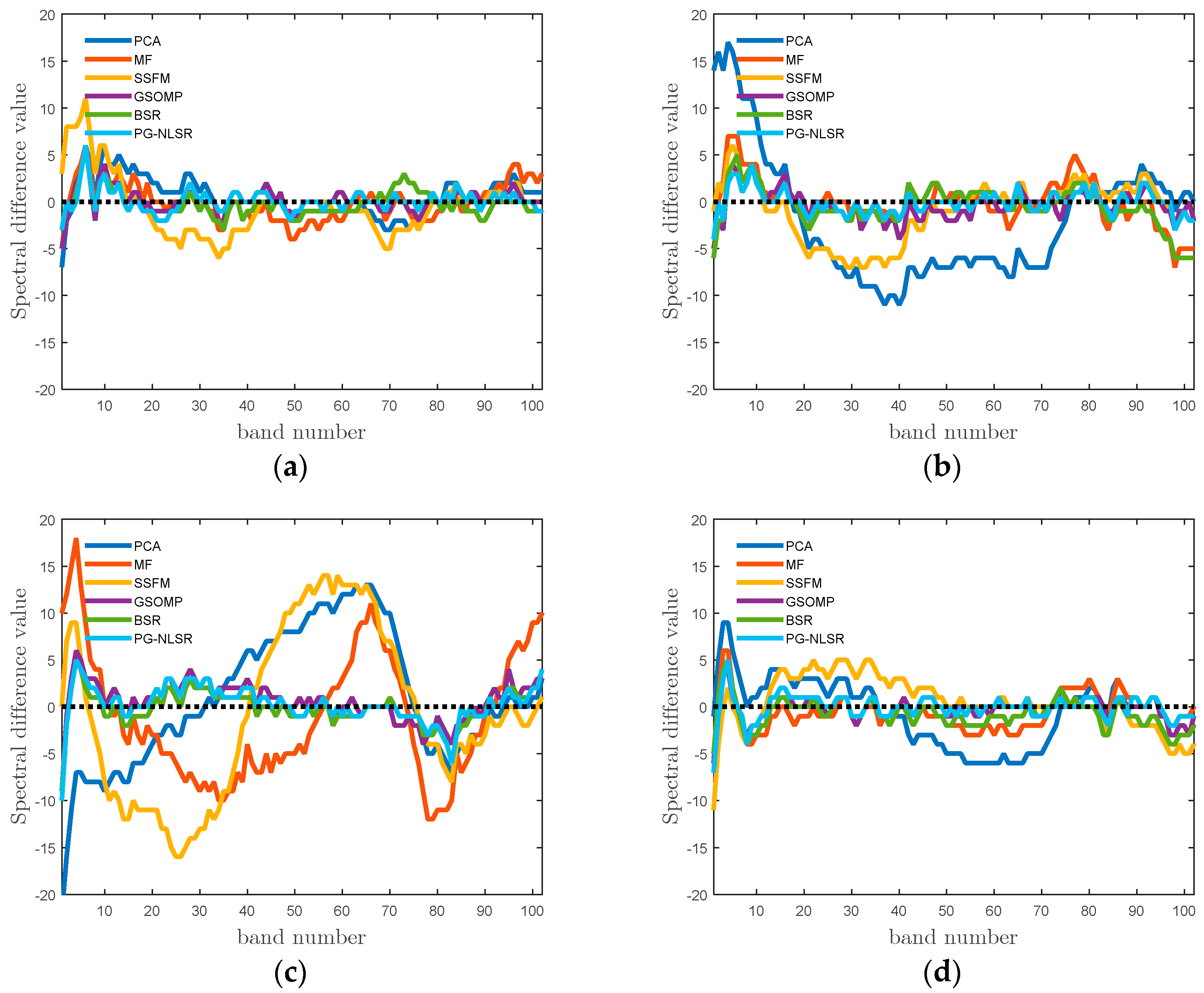

4.3. Experiments on AVIRIS Dataset

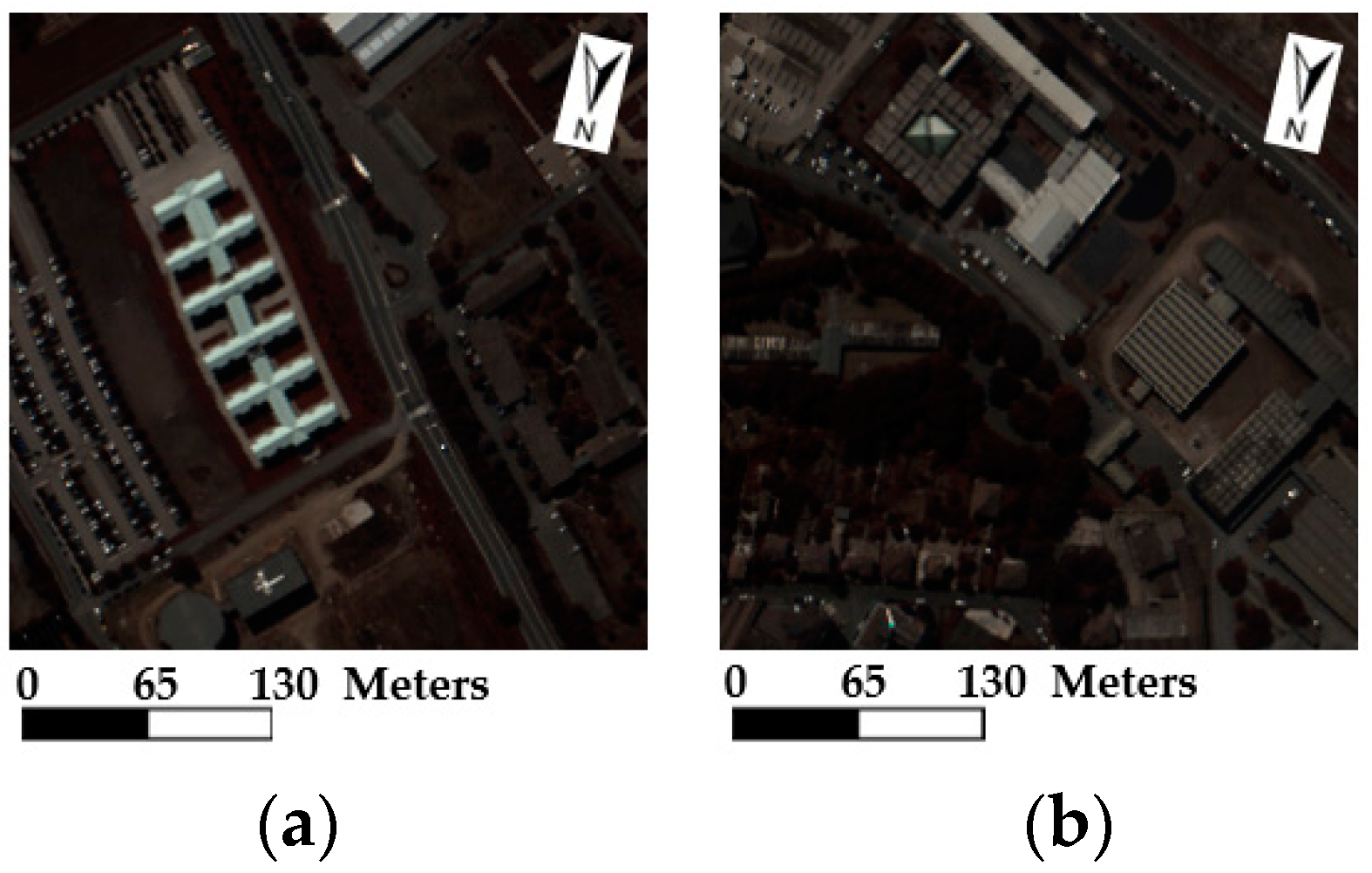

4.4. Experiments on ROSIS Dataset

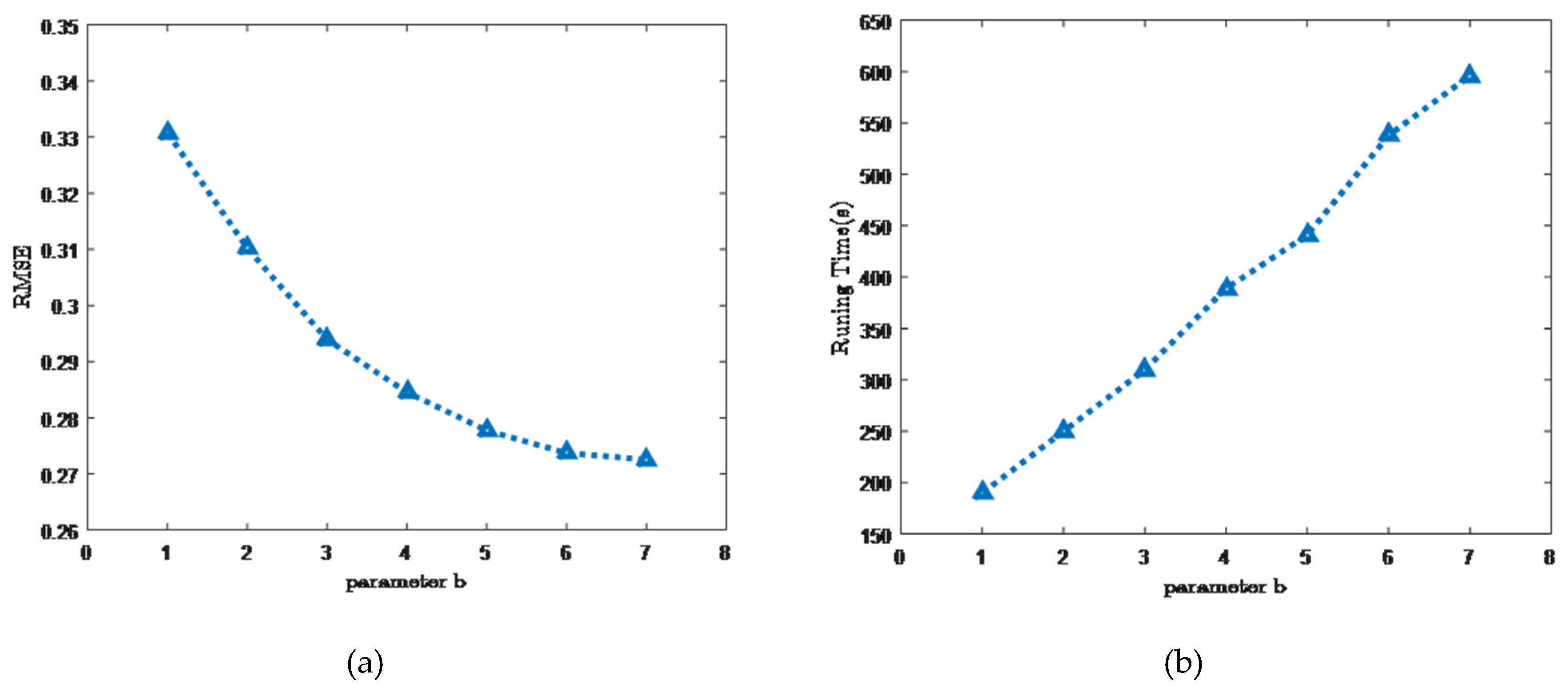

4.5. Discussion

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Bioucas-Dias, J.M.; Plaza, A.; Camps-Valls, G.; Scheunders, P.; Nasrabadi, N.; Chanussot, J. Hyperspectral remote sensing data analysis and future challenges. IEEE Geosci. Remote Sens. Mag. 2013, 1, 6–36.

2. Patel, R.C.; Joshi, M.V. Super-resolution of hyperspectral images: Use of optimum wavelet filter coefficients and sparsity regularization. IEEE Trans. Geosci. Remote Sens. 2015, 53, 1728–1736.

3. Feng, R.; Zhong, Y.; Wu, Y.; He, D.; Xu, X.; Zhang, L. Nonlocal total variation subpixel mapping for hyperspectral remote sensing imagery. Remote Sens. 2016, 8.

4. Yokoya, N.; Yairi, T.; Iwasaki, A. Coupled nonnegative matrix factorization unmixing for hyperspectral and multispectral data fusion. IEEE Trans. Geosci. Remote Sens. 2012, 50, 528–537.

5. Loncan, L.; de Almeida, L.B.; Bioucas-Dias, J.M.; Briottet, X.; Chanussot, J.; Dobigeon, N.; Fabre, S.; Liao, W.; Licciardi, G.A.; Simoes, M. Hyperspectral pansharpening: A review. IEEE Geosci. Remote Sens. Mag. 2015, 3, 27–46.

6. Shettigara, V. A generalized component substitution technique for spatial enhancement of multispectral images using a higher resolution data set. Photogramm. Eng. Remote Sens. 1992, 58, 561–567.

7. Choi, M. A new intensity-hue-saturation fusion approach to image fusion with a tradeoff parameter. IEEE Trans. Geosci. Remote Sens. 2006, 44, 1672–1682.

8. Pradhan, P.S.; King, R.L.; Younan, N.H.; Holcomb, D.W. Estimation of the number of decomposition levels for a wavelet-based multiresolution multisensor image fusion. IEEE Trans. Geosci. Remote Sens. 2006, 44, 3674–3686.

9. Robinson, G.D.; Gross, H.N.; Schott, J.R. Evaluation of two applications of spectral mixing models to image fusion. Remote Sens. Environ. 2000, 71, 272–281.

10. Zurita-Milla, R.; Clevers, J.G.; Schaepman, M.E. Unmixing-based Landsat TM and MERIS FR data fusion. IEEE Geosci. Remote Sens. Lett. 2008, 5, 453–457.

11. Lanaras, C.; Baltsavias, E.; Schindler, K. Hyperspectral super-resolution by coupled spectral unmixing. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 3586–3594.

12. Nezhad, Z.H.; Karami, A.; Heylen, R.; Scheunders, P. Fusion of hyperspectral and multispectral images using spectral unmixing and sparse coding. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 2377–2389.

13. Bieniarz, J.; Müller, R.; Zhu, X.X.; Reinartz, P. Hyperspectral image resolution enhancement based on joint sparsity spectral unmixing. In Proceedings of the IEEE Geoscience and Remote Sensing Symposium, Quebec City, QC, Canada, 13–18 July 2014; pp. 2645–2648.

14. Akhtar, N.; Shafait, F.; Mian, A. Sparse spatio-spectral representation for hyperspectral image super-resolution. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 63–78.

15. Wycoff, E.; Chan, T.H.; Jia, K.; Ma, W.K.; Ma, Y. A non-negative sparse promoting algorithm for high resolution hyperspectral imaging. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; pp. 1409–1413.

16. Iordache, M.D.; Bioucas-Dias, J.M.; Plaza, A. Sparse unmixing of hyperspectral data. IEEE Trans. Geosci. Remote Sens. 2011, 49, 2014–2039.

17. Li, S.; Yin, H.; Fang, L. Remote sensing image fusion via sparse representations over learned dictionaries. IEEE Trans. Geosci. Remote Sens. 2013, 51, 4779–4789.

18. Guo, M.; Zhang, H.; Li, J.; Zhang, L.; Shen, H. An online coupled dictionary learning approach for remote sensing image fusion. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 1284–1294.

19. Dong, W.; Fu, F.; Shi, G.; Cao, X.; Wu, J.; Li, G.; Li, X. Hyperspectral image super-resolution via non-negative structured sparse representation. IEEE Trans. Image Process. 2016, 25, 2337–2352.

20. Wei, Q.; Bioucas-Dias, J.; Dobigeon, N.; Tourneret, J.Y. Hyperspectral and multispectral image fusion based on a sparse representation. IEEE Trans. Geosci. Remote Sens. 2015, 53, 3658–3668.

21. Song, H.; Huang, B.; Liu, Q.; Zhang, K. Improving the spatial resolution of Landsat TM/ETM+ through fusion with SPOT5 images via learning-based super-resolution. IEEE Trans. Geosci. Remote Sens. 2015, 53, 1195–1204.

22. Kawakami, R.; Matsushita, Y.; Wright, J.; Ben-Ezra, M.; Tai, Y.W.; Ikeuchi, K. High-resolution hyperspectral imaging via matrix factorization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Colorado Springs, CO, USA, 20–25 June 2011; pp. 2329–2336.

23. Huang, B.; Song, H.; Cui, H.; Peng, J.; Xu, Z. Spatial and spectral image fusion using sparse matrix factorization. IEEE Trans. Geosci. Remote Sens. 2014, 52, 1693–1704.

24. Akhtar, N.; Shafait, F.; Mian, A. Bayesian sparse representation for hyperspectral image super resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3631–3640.

25. Wright, J.; Ma, Y.; Mairal, J.; Sapiro, G.; Huang, T.S.; Yan, S. Sparse representation for computer vision and pattern recognition. Proc. IEEE 2010, 98, 1031–1044.

26. Pati, Y.C.; Rezaiifar, R.; Krishnaprasad, P. Orthogonal matching pursuit: Recursive function approximation with applications to wavelet decomposition. In Proceedings of the 27th Asilomar Conference on Signals, Systems and Computers, Pacific Grove, CA, USA, 1–3 November 1993; pp. 40–44.

27. Donoho, D.L.; Tsaig, Y.; Drori, I.; Starck, J.L. Sparse solution of underdetermined systems of linear equations by stagewise orthogonal matching pursuit. IEEE Trans. Inf. Theory 2012, 58, 1094–1121.

28. Chen, S.S.; Donoho, D.L.; Saunders, M.A. Atomic decomposition by basis pursuit. SIAM Rev. 2001, 43, 129–159.

29. Tibshirani, R. Regression shrinkage and selection via the lasso: A retrospective. J. R. Stat. Soc. Ser. B Stat. Methodol. 2011, 73, 273–282.

30. Daubechies, I.; Defrise, M.; De Mol, C. An iterative thresholding algorithm for linear inverse problems with a sparsity constraint. Commun. Pure Appl. Math. 2004, 57, 1413–1457.

31. Şimşek, M.; Polat, E. The effect of dictionary learning algorithms on super-resolution hyperspectral reconstruction. In Proceedings of the XXV International Conference on Information, Communication and Automation Technologies, Sarajevo, Bosnia and Herzegovina, 29–31 October 2015; pp. 1–5.

32. Aharon, M.; Elad, M.; Bruckstein, A. K-SVD: An algorithm for designing overcomplete dictionaries for sparse representation. IEEE Trans. Signal Proc. 2006, 54, 4311–4322.

33. Mairal, J.; Bach, F.; Ponce, J.; Sapiro, G. Online dictionary learning for sparse coding. In Proceedings of the 26th Annual International Conference on Machine Learning, Montreal, QC, Canada, 14–18 June 2009.

34. Buades, A.; Coll, B.; Morel, J.M. A non-local algorithm for image denoising. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–25 June 2005; pp. 60–65.

35. Li, Y.; Li, F.; Bai, B.; Shen, Q. Image fusion via nonlocal sparse K-SVD dictionary learning. Appl. Opt. 2016, 55, 1814–1823.

36. Zhao, Y.; Yang, J.; Chan, J.C.W. Hyperspectral imagery super-resolution by spatial–spectral joint nonlocal similarity. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 2671–2679.

37. Huang, W.; Xiao, L.; Liu, H.; Wei, Z. Hyperspectral imagery super-resolution by compressive sensing inspired dictionary learning and spatial-spectral regularization. Sensors 2015, 15, 2041–2058.

38. Tropp, J.A.; Gilbert, A.C.; Strauss, M.J. Algorithms for simultaneous sparse approximation. Part I: Greedy pursuit. Signal Proc. 2006, 86, 572–588.

39. Yuhas, R.H.; Goetz, A.F.; Boardman, J.W. Discrimination among semi-arid landscape endmembers using the spectral angle mapper (SAM) algorithm. In JPL, Summaries of the Third Annual JPL Airborne Geoscience Workshop; NASA: Washington, DC, USA, 1992.

40. AVIRIS Data. Available online: http://aviris.jpl.nasa.gov/data/index.html (accessed on 6 October 2015).

41. Hyperspectral Remote Sensing Image Scenes (Pavia Centre and University). Available online: http://www.ehu.eus/ccwintco/index.php?title=Hyperspectral_Remote_Sensing_Scenes (accessed on 16 June 2016).

42. Jolliffe, I. Principal Component Analysis; John Wiley & Sons: Hoboken, NJ, USA, 2002.

43. Alparone, L.; Wald, L.; Chanussot, J.; Thomas, C.; Gamba, P.; Bruce, L.M. Comparison of pansharpening algorithms: Outcome of the 2006 GRS-S data-fusion contest. IEEE Trans. Geosci. Remote Sens. 2007, 45, 3012–3021.

44. Vane, G.; Green, R.O.; Chrien, T.G.; Enmark, H.T.; Hansen, E.G.; Porter, W.M. The airborne visible/infrared imaging spectrometer (AVIRIS). Remote Sens. Environ. 1993, 44, 127–143.

45. Eismann, M.T.; Hardie, R.C. Hyperspectral resolution enhancement using high-resolution multispectral imagery with arbitrary response functions. IEEE Trans. Geosci. Remote Sens. 2005, 43, 455–465.

| Images | Index | PCA [42] | MF [22] | SSFM [23] | GSOMP [14] | BSR [24] | PG-NLSR |
|---|---|---|---|---|---|---|---|
| Cuprite | RMSE | 1.6760 | 0.5498 | 0.7113 | 0.4665 | 0.3033 | 0.2845 |
| | PSNR | 43.6453 | 53.3265 | 51.0896 | 54.7540 | 58.4946 | 59.0488 |
| | A-SSIM | 0.9845 | 0.9907 | 0.9785 | 0.9935 | 0.9931 | 0.9946 |
| | SAM | 2.2749 | 1.2558 | 2.2691 | 1.0409 | 1.1676 | 0.8559 |
| | ERGAS | 6.2879 | 2.1209 | 2.6783 | 1.0535 | 0.8973 | 1.0636 |
| Jasper-Ridge | RMSE | 11.4688 | 7.6531 | 7.2652 | 4.5126 | 5.7721 | 3.7483 |
| | PSNR | 26.9405 | 30.4541 | 30.9058 | 35.0422 | 32.9042 | 36.6542 |
| | A-SSIM | 0.8733 | 0.8704 | 0.9240 | 0.9178 | 0.9160 | 0.9264 |
| | SAM | 5.8845 | 5.4792 | 4.3946 | 3.8501 | 4.0804 | 3.6892 |
| | ERGAS | 1.6518 | 1.4547 | 1.1428 | 1.1183 | 1.0054 | 1.0036 |
| Moffett-Field | RMSE | 15.9288 | 8.2029 | 7.7466 | 5.5780 | 4.1883 | 4.1833 |
| | PSNR | 24.0871 | 29.8514 | 30.3486 | 33.2012 | 35.6901 | 35.7005 |
| | A-SSIM | 0.9030 | 0.8736 | 0.8668 | 0.9296 | 0.9234 | 0.9371 |
| | SAM | 8.8194 | 7.6709 | 8.4644 | 4.3319 | 5.0234 | 3.7269 |
| | ERGAS | 2.0839 | 1.4930 | 1.5145 | 1.0245 | 0.9084 | 0.8275 |
| San Diego | RMSE | 7.9201 | 4.2662 | 3.1145 | 1.6225 | 1.2230 | 0.8681 |
| | PSNR | 30.1562 | 35.5300 | 38.2631 | 43.9273 | 46.3823 | 49.3598 |
| | A-SSIM | 0.7670 | 0.9365 | 0.9746 | 0.9663 | 0.9796 | 0.9803 |
| | SAM | 5.4728 | 2.2006 | 1.7335 | 0.8499 | 0.6451 | 0.7305 |
| | ERGAS | 4.1063 | 1.9276 | 1.0549 | 0.9950 | 0.4635 | 0.5877 |
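
The RMSE and PSNR rows in the table above appear to be linked by the usual relation PSNR = 20·log10(peak / RMSE) with a peak value of 255: an RMSE of 1.6760 gives roughly 43.65 dB, which matches the tabulated 43.6453. The sketch below illustrates that relation together with a per-pixel spectral angle (SAM). The peak of 255, the function names, and the choice of degrees for SAM are assumptions made for illustration; they are not taken from the article's text.

```python
import math


def rmse(reference, estimate):
    """Root-mean-square error between two equally sized flat sequences."""
    if len(reference) != len(estimate):
        raise ValueError("inputs must have the same length")
    squared = [(r - e) ** 2 for r, e in zip(reference, estimate)]
    return math.sqrt(sum(squared) / len(squared))


def psnr_from_rmse(rmse_value, peak=255.0):
    """PSNR in dB for a given RMSE.

    peak=255 is an assumption (8-bit dynamic range) that happens to
    reproduce the RMSE/PSNR pairs reported in the table.
    """
    return 20.0 * math.log10(peak / rmse_value)


def sam_degrees(spectrum_a, spectrum_b):
    """Spectral angle between two spectra of one pixel, in degrees (assumed unit)."""
    dot = sum(a * b for a, b in zip(spectrum_a, spectrum_b))
    norm_a = math.sqrt(sum(a * a for a in spectrum_a))
    norm_b = math.sqrt(sum(b * b for b in spectrum_b))
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    cosine = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    return math.degrees(math.acos(cosine))


if __name__ == "__main__":
    # RMSE values for Cuprite (PCA and PG-NLSR columns) from the table.
    for value in (1.6760, 0.2845):
        print(f"RMSE {value:.4f} -> PSNR {psnr_from_rmse(value):.4f} dB")
```

A scene-level SAM would typically average `sam_degrees` over all pixels; whether the article averages in exactly this way is not stated in the material shown here.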

| Method | PCA [42] | MF [22] | SSFM [23] | GSOMP [14] | BSR [24] | PG-NLSR |
|---|---|---|---|---|---|---|
| S = 4 | | | | | | |
| RMSE | 2.7352 | 1.6695 | 2.2580 | 1.0116 | 0.7201 | 0.7074 |
| PSNR | 39.3909 | 43.6790 | 41.0563 | 48.0309 | 50.9825 | 51.1380 |
| A-SSIM | 0.9239 | 0.9604 | 0.9509 | 0.9783 | 0.9834 | 0.9856 |
| SAM | 6.4478 | 3.7423 | 4.2193 | 3.1318 | 2.4157 | 2.3337 |
| ERGAS | 4.0682 | 2.1829 | 2.4277 | 0.9209 | 1.2902 | 1.3564 |
| S = 8 | | | | | | |
| RMSE | 2.7766 | 1.8995 | 2.5754 | 1.0385 | 0.9034 | 0.7491 |
| PSNR | 39.2604 | 42.5581 | 39.9138 | 47.8028 | 49.0129 | 50.6400 |
| A-SSIM | 0.9226 | 0.9481 | 0.9353 | 0.9770 | 0.9774 | 0.9835 |
| SAM | 6.5122 | 4.2119 | 4.4572 | 3.1750 | 2.9190 | 2.5365 |
| ERGAS | 2.0528 | 1.2366 | 1.4232 | 0.9522 | 0.7687 | 0.7266 |
| S = 16 | | | | | | |
| RMSE | 2.8523 | 2.1191 | 2.6062 | 1.1919 | 0.9364 | 0.9272 |
| PSNR | 39.0270 | 41.6079 | 39.8108 | 46.6057 | 48.7015 | 48.7869 |
| A-SSIM | 0.9203 | 0.9330 | 0.9192 | 0.9726 | 0.9793 | 0.9792 |
| SAM | 6.6424 | 5.8780 | 6.3314 | 3.4892 | 2.9286 | 3.0127 |
| ERGAS | 1.0456 | 0.8301 | 1.0255 | 0.5459 | 0.3917 | 0.4312 |
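
In the table above, the ERGAS values for a given method roughly halve each time S doubles (for PCA: 4.0682, 2.0528, 1.0456) while RMSE changes very little. That pattern is what the commonly used ERGAS definition would produce, since it scales the band-averaged relative error by 1/S. The sketch below uses that common definition; the function name and arguments are hypothetical, and the exact formula used in the article is not shown in this excerpt, so treat it as an assumption.

```python
import math


def ergas(band_rmse, band_mean, scale_ratio):
    """ERGAS under its commonly used definition (an assumption here).

    band_rmse   -- per-band RMSE between reference and fused image
    band_mean   -- per-band mean of the reference image
    scale_ratio -- spatial scaling factor S between the two resolutions
    """
    if len(band_rmse) != len(band_mean):
        raise ValueError("band_rmse and band_mean must have the same length")
    # Mean of squared relative errors across bands.
    relative = [(r / m) ** 2 for r, m in zip(band_rmse, band_mean)]
    return 100.0 / scale_ratio * math.sqrt(sum(relative) / len(relative))


if __name__ == "__main__":
    # Toy per-band values, not data from the article.
    errors = [1.0, 2.0]
    means = [10.0, 20.0]
    for s in (4, 8, 16):
        print(f"S = {s:2d}: ERGAS = {ergas(errors, means, s):.4f}")
```

Because of the 1/S factor, ERGAS values are best compared across methods at the same S rather than across different scaling factors.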

| Method | PCA [42] | MF [22] | SSFM [23] | GSOMP [14] | BSR [24] | PG-NLSR |
|---|---|---|---|---|---|---|
| S = 4 | | | | | | |
| RMSE | 3.5179 | 1.6974 | 1.9599 | 1.1712 | 0.7582 | 0.7179 |
| PSNR | 37.2052 | 43.5353 | 42.2863 | 46.7582 | 50.5351 | 51.0097 |
| A-SSIM | 0.9270 | 0.9734 | 0.9423 | 0.9765 | 0.9842 | 0.9859 |
| SAM | 6.4194 | 3.0905 | 4.7297 | 2.8398 | 2.2157 | 2.1693 |
| ERGAS | 4.9933 | 1.8510 | 2.9534 | 1.8718 | 1.2416 | 1.2823 |
| S = 8 | | | | | | |
| RMSE | 3.5091 | 1.9775 | 2.4717 | 1.6876 | 0.8690 | 0.8518 |
| PSNR | 37.2269 | 42.2087 | 40.2707 | 43.5855 | 49.3501 | 49.5245 |
| A-SSIM | 0.9257 | 0.9536 | 0.9121 | 0.9684 | 0.9829 | 0.9837 |
| SAM | 6.4898 | 3.7624 | 5.7924 | 3.2346 | 2.3855 | 2.3787 |
| ERGAS | 2.5084 | 1.2374 | 1.8591 | 1.1668 | 0.6650 | 0.7184 |
| S = 16 | | | | | | |
| RMSE | 3.5297 | 2.2620 | 2.7045 | 2.1770 | 1.1513 | 1.1959 |
| PSNR | 37.1760 | 41.0409 | 39.4890 | 41.3736 | 46.9070 | 46.5769 |
| A-SSIM | 0.9240 | 0.9589 | 0.9370 | 0.9596 | 0.9665 | 0.9786 |
| SAM | 6.5875 | 3.9974 | 5.6065 | 3.9253 | 3.1329 | 2.9365 |
| ERGAS | 1.2658 | 0.6532 | 0.9143 | 0.7283 | 0.4312 | 0.4530 |

© 2017 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Yang, J.; Li, Y.; Chan, J.C.-W.; Shen, Q. Image Fusion for Spatial Enhancement of Hyperspectral Image via Pixel Group Based Non-Local Sparse Representation. Remote Sens. 2017, 9, 53. https://doi.org/10.3390/rs9010053
