Global Registration of 3D LiDAR Point Clouds Based on Scene Features: Application to Structured Environments

Abstract

1. Introduction

2. Related Work

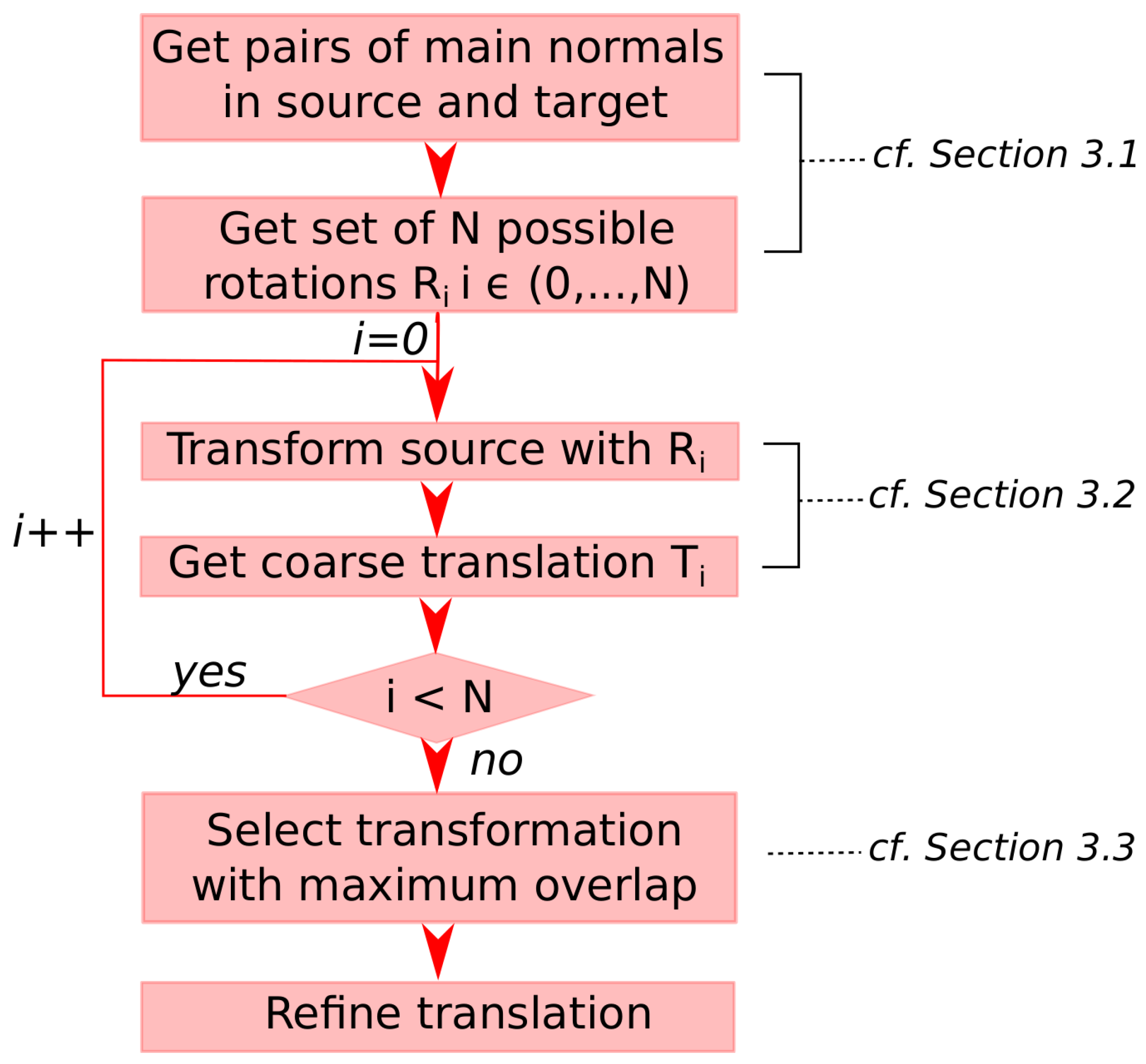

3. Structured Scene Feature-Based Registration

3.1. Rotation Search

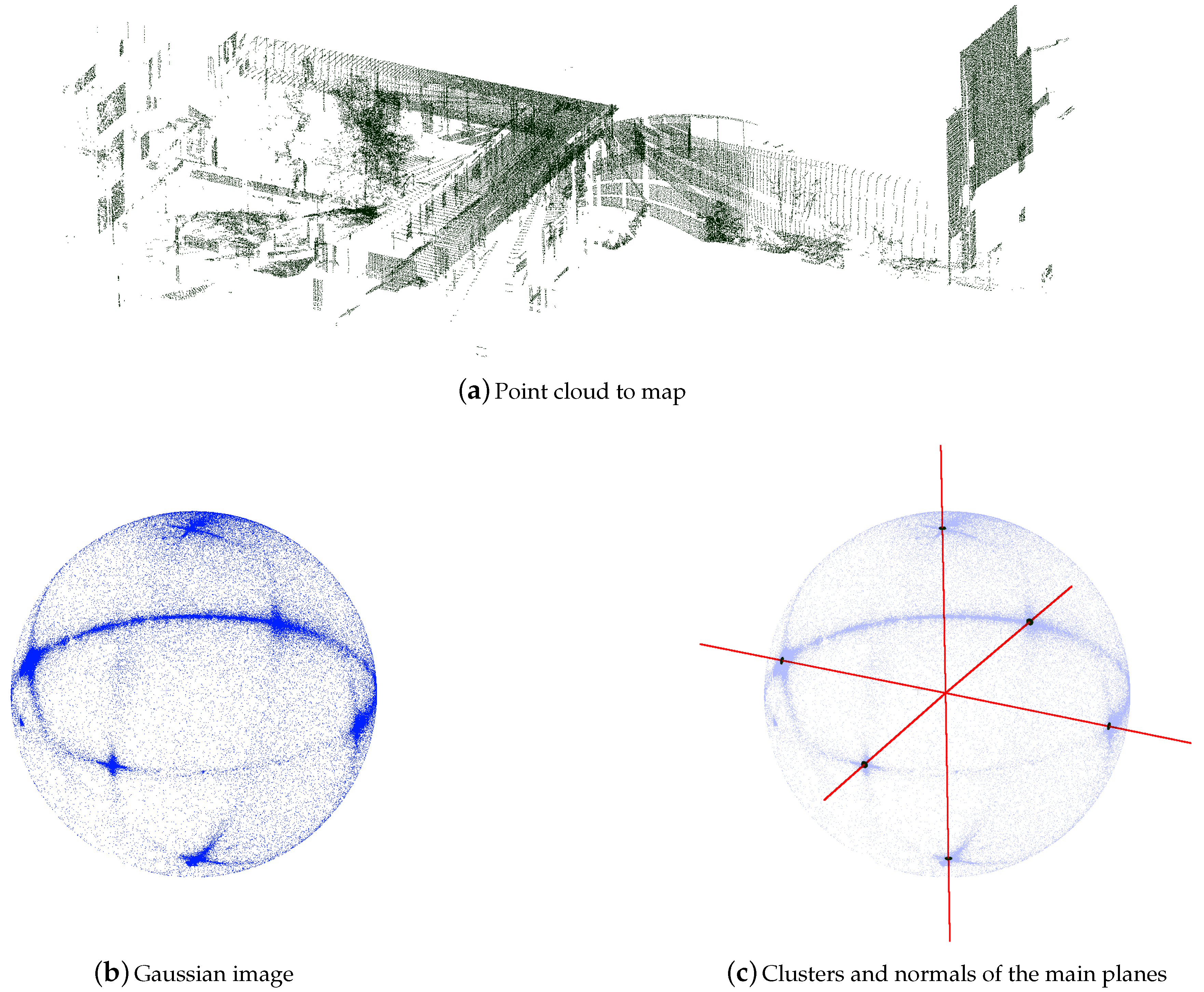

3.1.1. Normal Computation

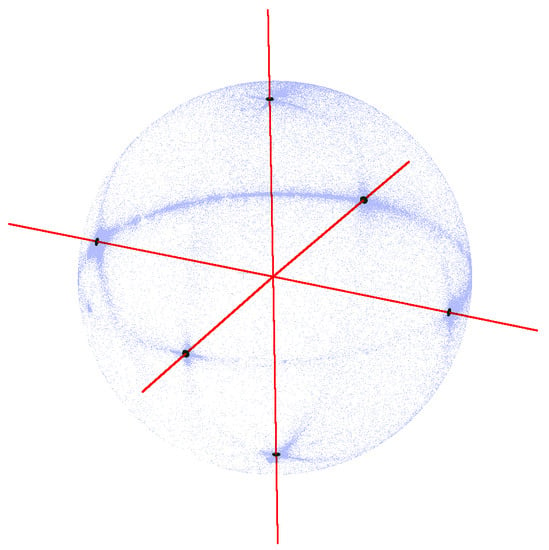

3.1.2. Gaussian Sphere Mapping

3.1.3. Density Filter

3.1.4. Mean Shift Process and Main Normals’ Selection

3.1.5. Creating Pairs of Normals and Matching

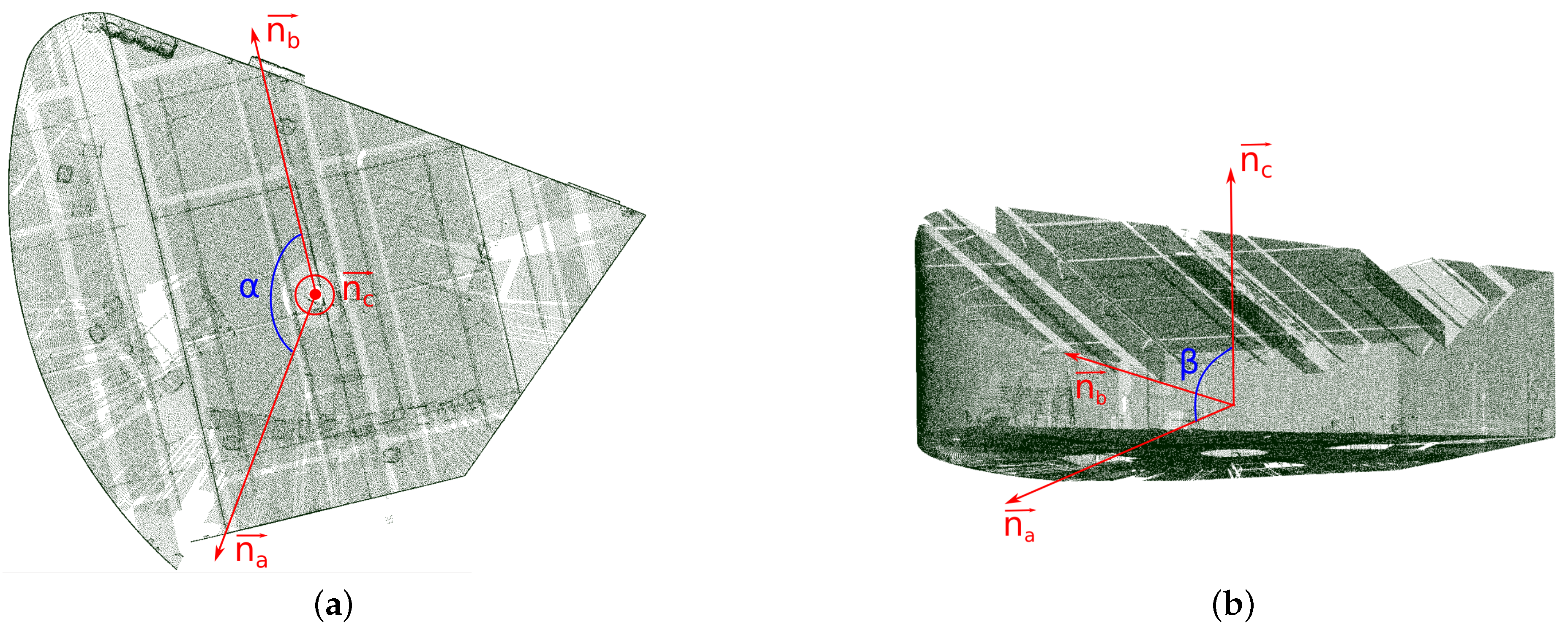

3.1.6. Rotation Computation

3.2. Translation Search

3.2.1. Axes’ Selection

3.2.2. Histograms’ Correlation

3.2.3. Translation Computation

3.3. Selection of Transformation

| Algorithm 1: SSFR: Complete algorithm. |

| input target, source, r, rd, binWidth // r is the radius used to define the neighborhood to compute normals; rd is the radius used to define the neighborhood to compute density |

| 1: Compute normals with radius r and sample input clouds |

| 2: Map the normals of both clouds onto the Gaussian sphere to get their Gaussian images |

| 3: Compute density at each point of both Gaussian images with radius rd |

| 4: Extract the six densest regions from each Gaussian image |

| 5: Apply mean shift to find the modes and (with ) from the densest regions |

| 6: Make sets of pairs and with and elements // cf. Section 3.1 |

| 7: for all pair do |

| 8: for all pair do |

| 9: if then |

| 10: Get rotation to align on |

| 11: Select axes a, b, c from // cf. Section 3.2 |

| 12: Tjk ← GetTranslation(Rjk · source, target, a, b, c, binWidth) // cf. Algorithm 2 |

| 13: Build global transformation matrix |

| 14: Compute between () and |

| 15: Append to vector L and to vector A |

| 16: end if |

| 17: end for |

| 18: end for |

| 19: |

| 20: Tjk ← GetTranslation(Aindex · source, target, a, b, c, binWidth/100) // cf. Algorithm 2 |

| 21: return Transformation matrix |
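Step 10 of Algorithm 1 aligns a pair of source normals on a pair of target normals. The sketch below illustrates one way to do this, by building an orthonormal frame from each pair (a TRIAD-style construction) and composing the two frames. This is a swapped-in technique chosen for brevity, not necessarily the computation used in SSFR, and every function name here (`frame`, `rotation_from_normal_pairs`, `apply_rotation`) is hypothetical.

```python
import math


def normalize(v):
    """Return v scaled to unit length (v must be non-zero)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cross(a, b):
    """Cross product of two 3D vectors."""
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def frame(n1, n2):
    """Orthonormal basis (three row vectors) built from two normals.

    Assumption: n1 and n2 are not parallel, otherwise the cross
    product is zero and normalize() divides by zero.
    """
    e1 = normalize(n1)
    e3 = normalize(cross(n1, n2))
    e2 = cross(e3, e1)
    return [e1, e2, e3]


def rotation_from_normal_pairs(src_pair, tgt_pair):
    """3x3 rotation R (nested lists) mapping the source frame onto the target frame.

    The first normal of each pair is aligned exactly; the second one is
    aligned exactly only if both pairs span the same angle.
    """
    fs = frame(*src_pair)
    ft = frame(*tgt_pair)
    # R = T * S^T, where the columns of S and T are the frame vectors.
    return [[sum(ft[k][i] * fs[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def apply_rotation(R, v):
    """Rotate the 3D vector v by R."""
    return [sum(R[i][j] * v[j] for j in range(3)) for i in range(3)]
```

For example, with the source pair (x, y) and the target pair (y, -x), `rotation_from_normal_pairs` returns the rotation of 90 degrees about the z axis. In the full algorithm, such a rotation would be computed for each candidate combination of source and target pairs.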

| Algorithm 2: GetTranslation: Computation of the translation between the rotated source and the target. |

| input rotated source, target, a, b, c, binWidth // a, b, c are the translation axes |

| 1: for all axis in {a, b, c} do |

| 2: Filter rotated source and target and project points onto axis |

| 3: Compute histograms , |

| 4: Filter histograms |

| 5: Compute correlation |

| 6: |

| 7: |

| 8: end for |

| 9: |

| 10: |

| 11: |

| 12: return Translation matrix T with components , , |
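The per-axis loop of Algorithm 2 (project, build histograms, correlate, pick the best shift) can be sketched as follows. This is a minimal illustration under several assumptions: the filtering steps are omitted, the correlation is a plain unnormalized cross-correlation over integer bin shifts, the axes are assumed orthonormal, and all function names are hypothetical rather than taken from the authors' implementation.

```python
import math


def project(points, axis):
    """Scalar coordinate of each 3D point along a unit axis."""
    return [sum(p[i] * axis[i] for i in range(3)) for p in points]


def histogram(values, origin, bin_width, n_bins):
    """Count values per bin, with bins starting at origin."""
    counts = [0] * n_bins
    for v in values:
        k = int(math.floor((v - origin) / bin_width))
        if 0 <= k < n_bins:
            counts[k] += 1
    return counts


def best_shift(h_src, h_tgt):
    """Integer shift s maximizing sum_k h_src[k] * h_tgt[k + s]."""
    n = len(h_src)
    best, best_score = 0, -1
    for s in range(-(n - 1), n):
        score = sum(h_src[k] * h_tgt[k + s]
                    for k in range(n) if 0 <= k + s < n)
        if score > best_score:
            best, best_score = s, score
    return best


def translation_along_axis(source, target, axis, bin_width):
    """Displacement to add to the source along axis to overlay the target."""
    ps, pt = project(source, axis), project(target, axis)
    lo = min(min(ps), min(pt))
    hi = max(max(ps), max(pt))
    n_bins = int((hi - lo) / bin_width) + 1
    # Both histograms share the same origin, so a shift of s bins
    # corresponds to a displacement of s * bin_width.
    h_src = histogram(ps, lo, bin_width, n_bins)
    h_tgt = histogram(pt, lo, bin_width, n_bins)
    return best_shift(h_src, h_tgt) * bin_width


def get_translation(source, target, axes, bin_width):
    """Combine per-axis displacements (assumes orthonormal axes)."""
    t = [0.0, 0.0, 0.0]
    for axis in axes:
        d = translation_along_axis(source, target, axis, bin_width)
        t = [t[i] + d * axis[i] for i in range(3)]
    return t
```

The resolution of the result is limited by `bin_width`, which is consistent with Algorithm 1 calling GetTranslation a second time with binWidth/100 to refine the selected transformation.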

4. Materials and Methods

4.1. Dataset 1: Hokuyo

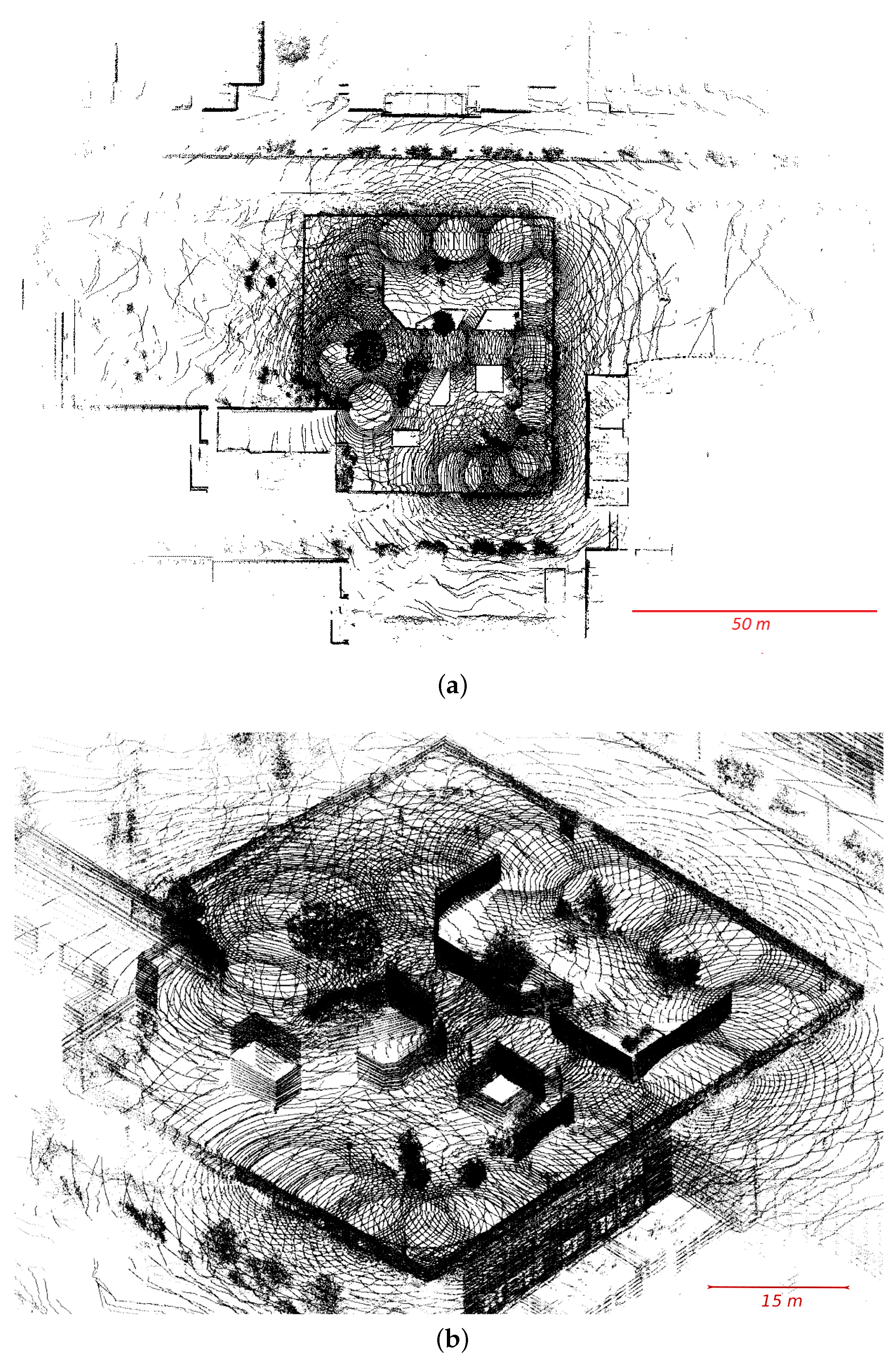

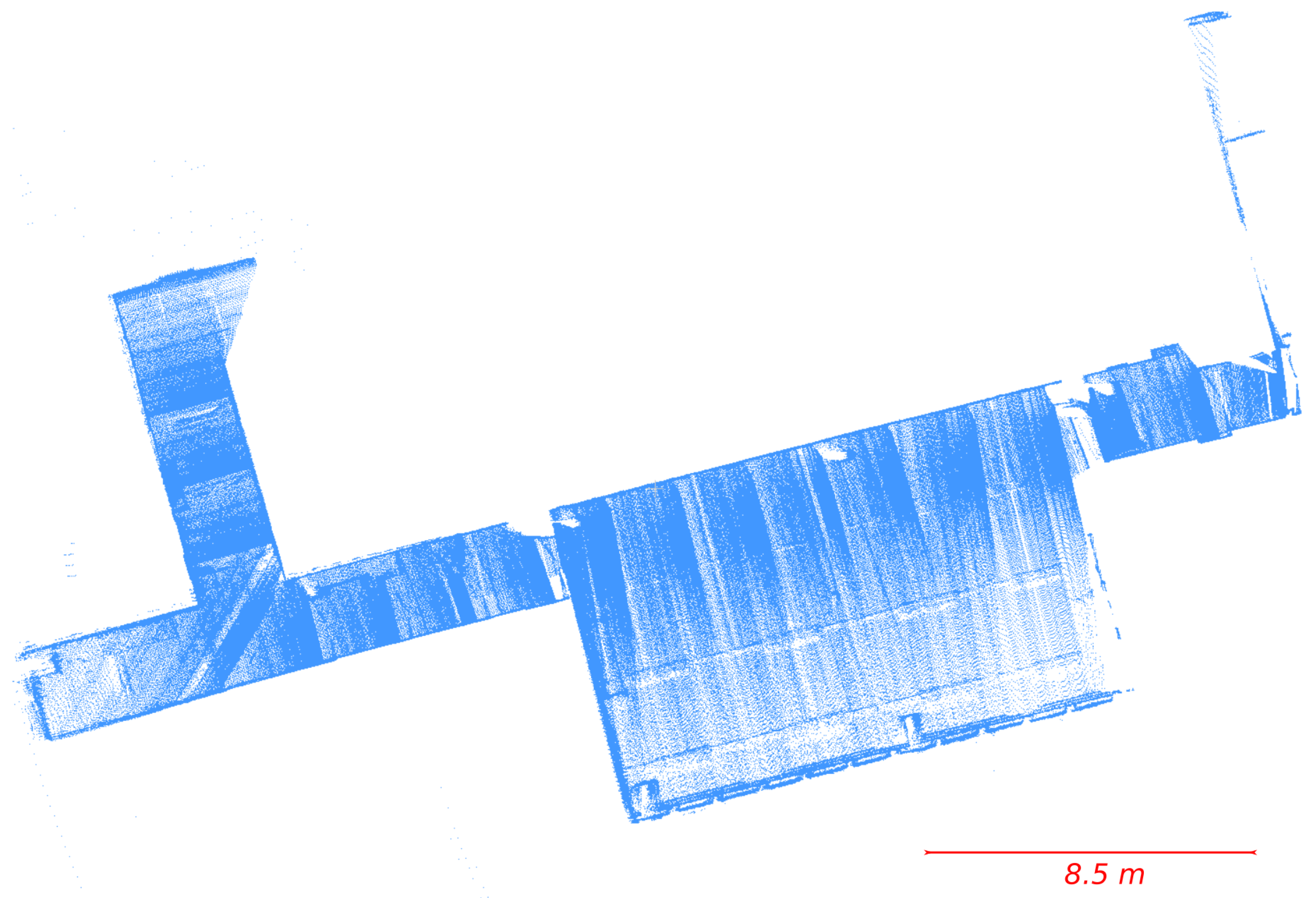

4.2. Dataset 2: Leica

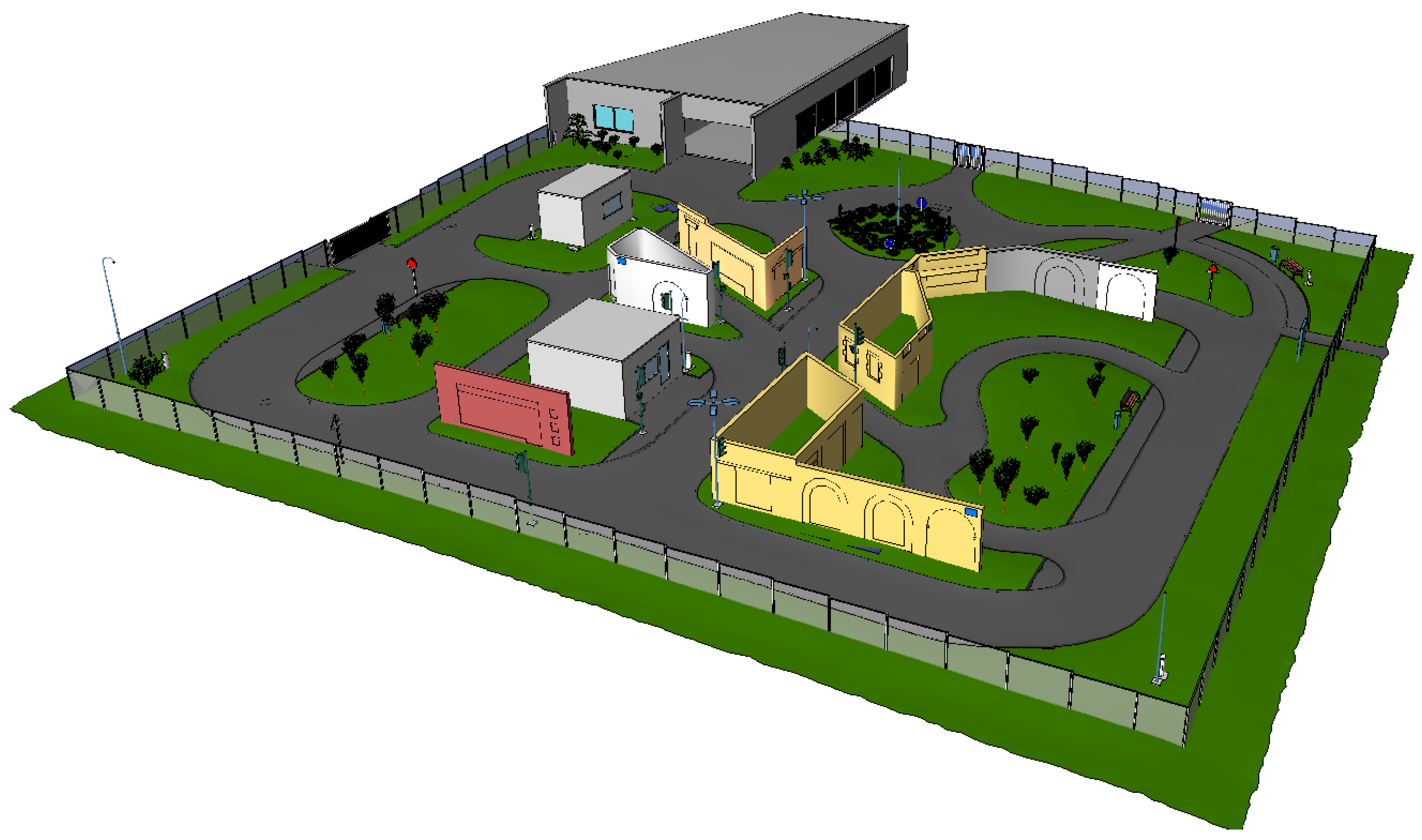

4.3. Dataset 3: Velodyne

4.4. Dataset 4: Sick

4.5. Evaluation Tools

5. Results

5.1. Basic Model

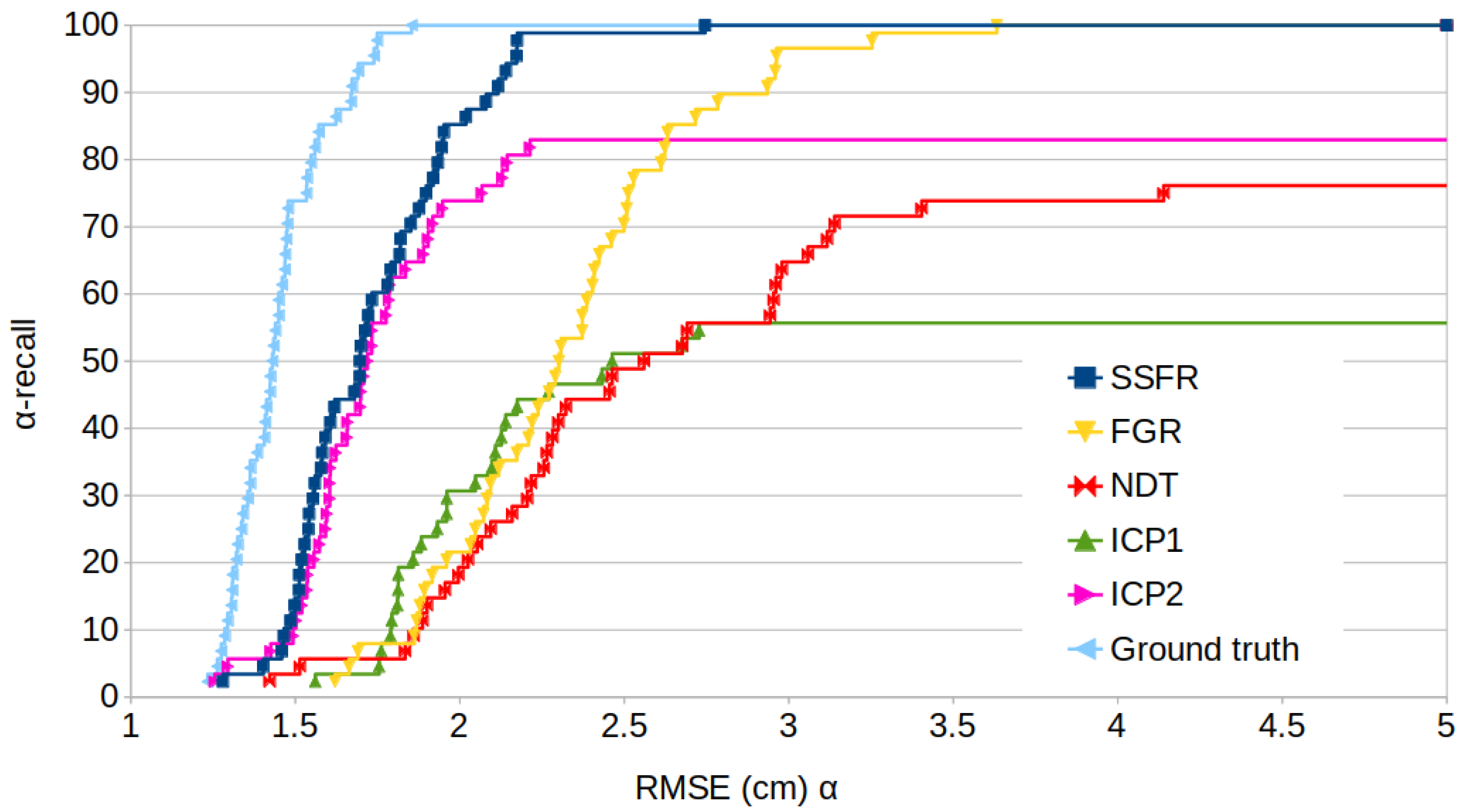

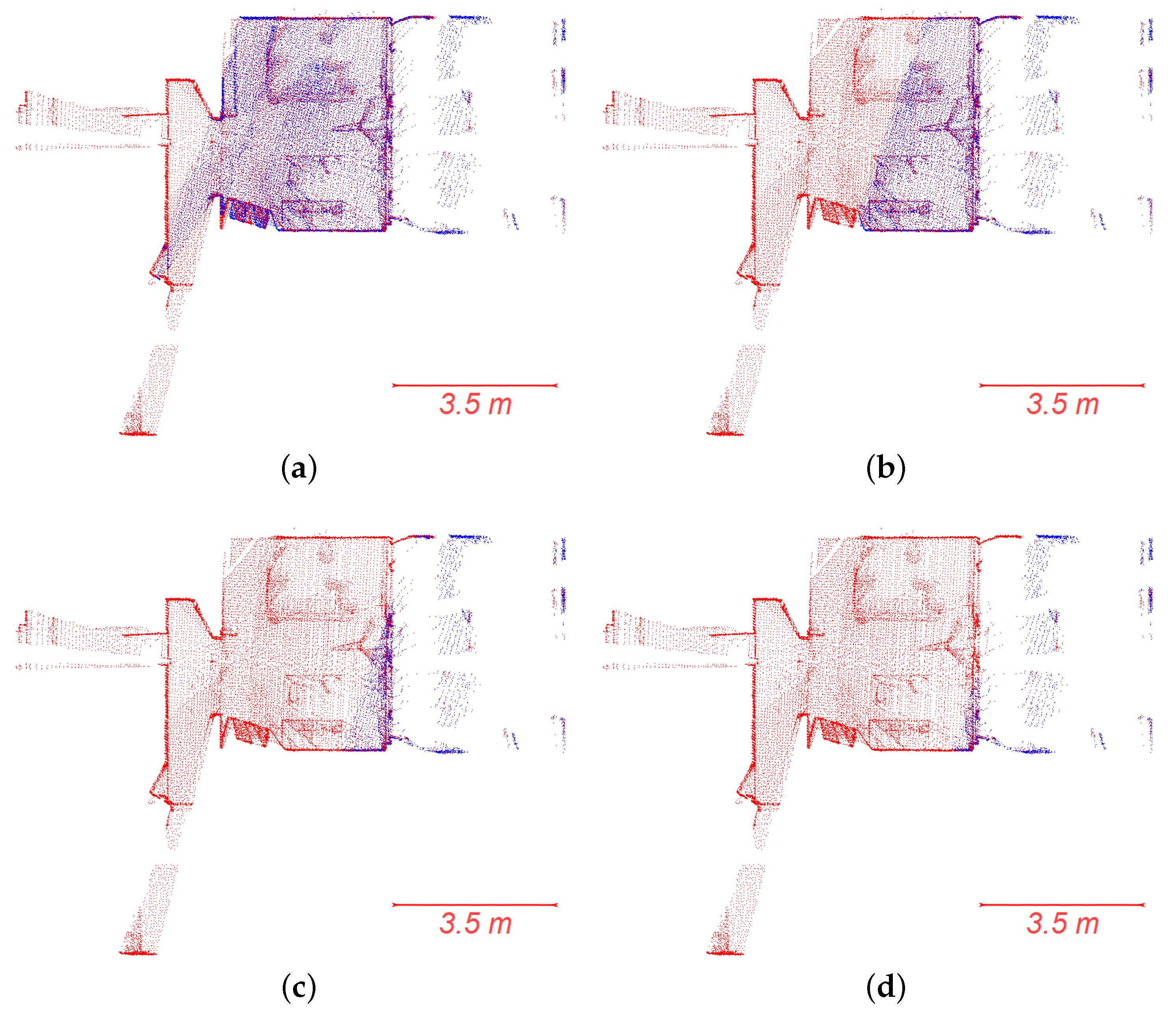

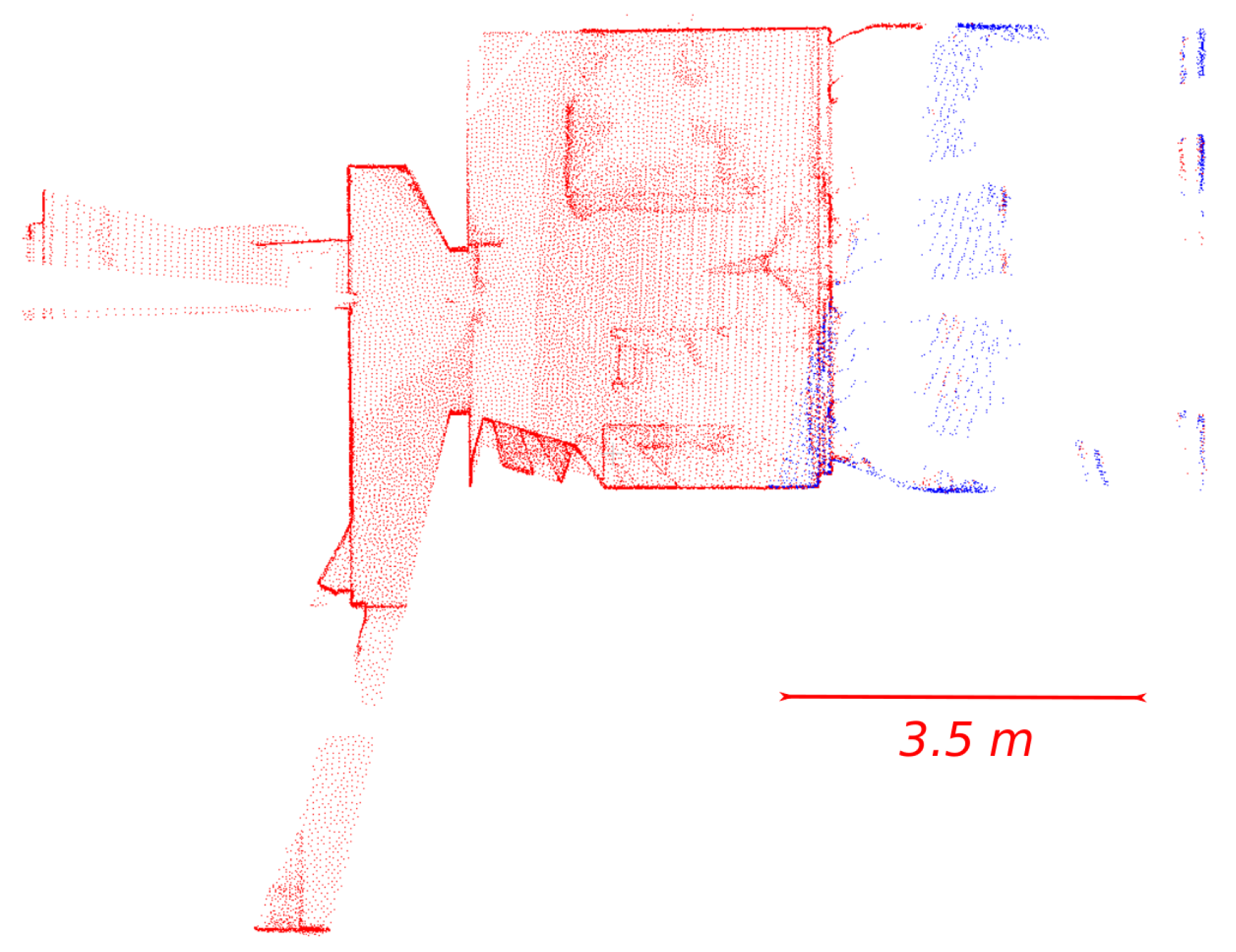

5.2. Indoor Environment

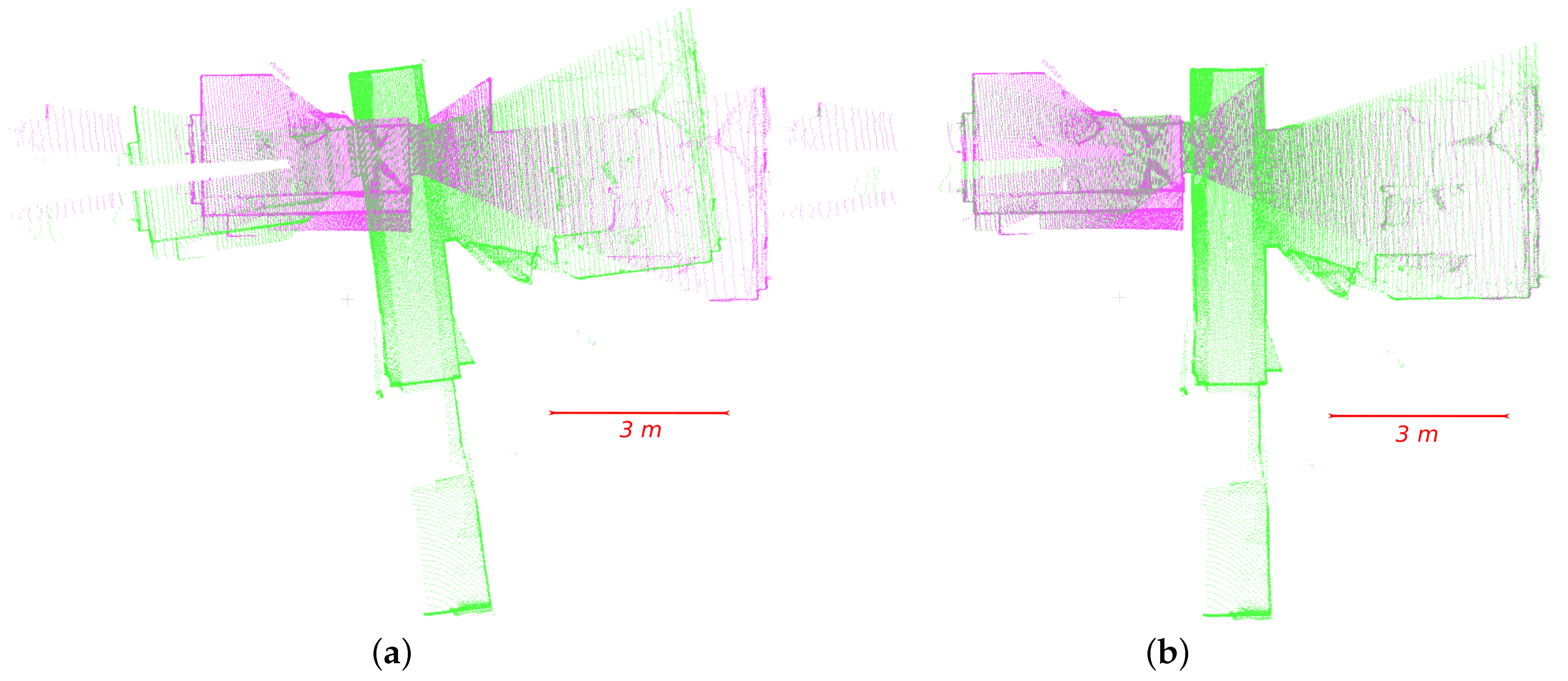

5.3. Complex Indoor Environment

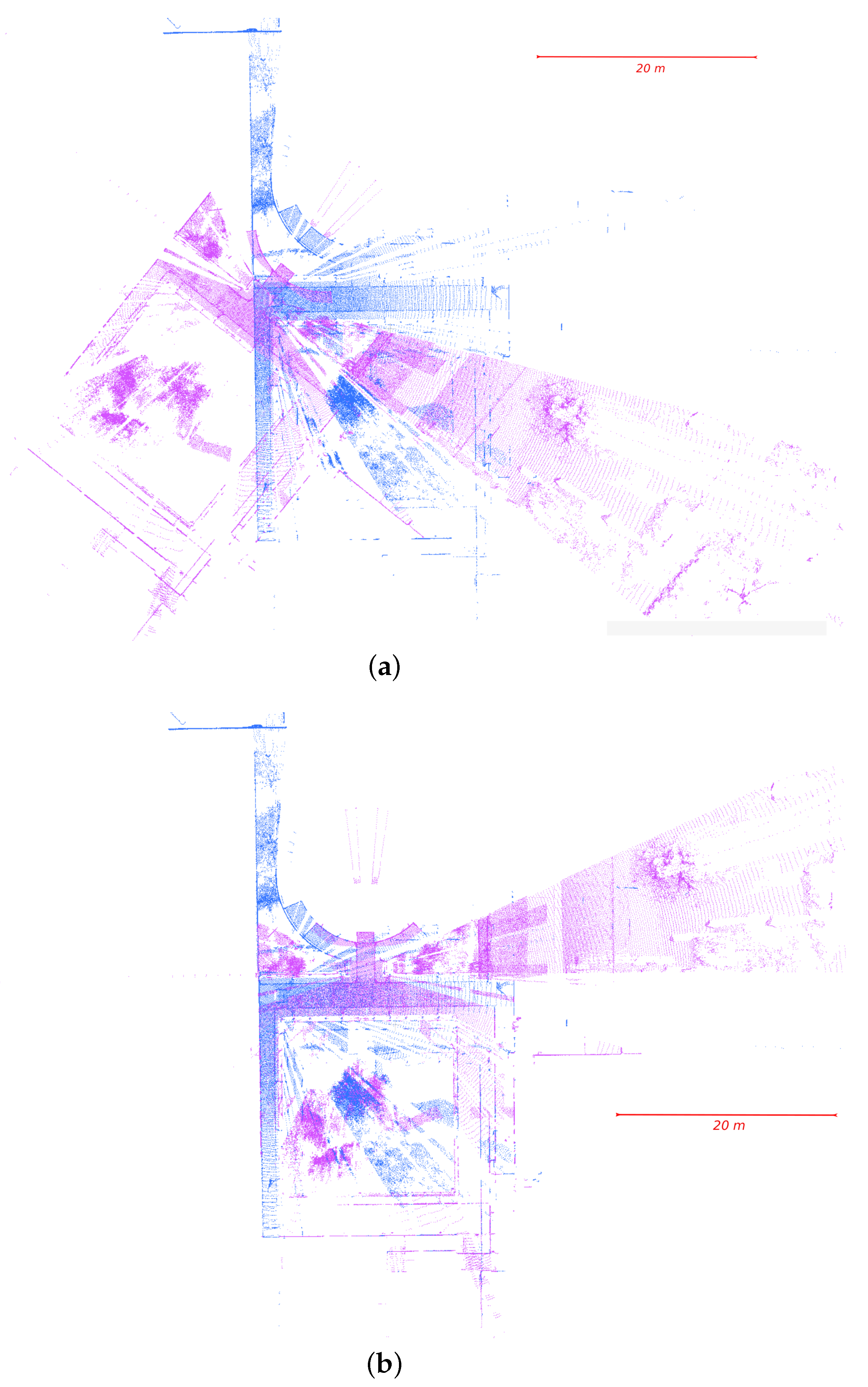

5.4. Navigation Context

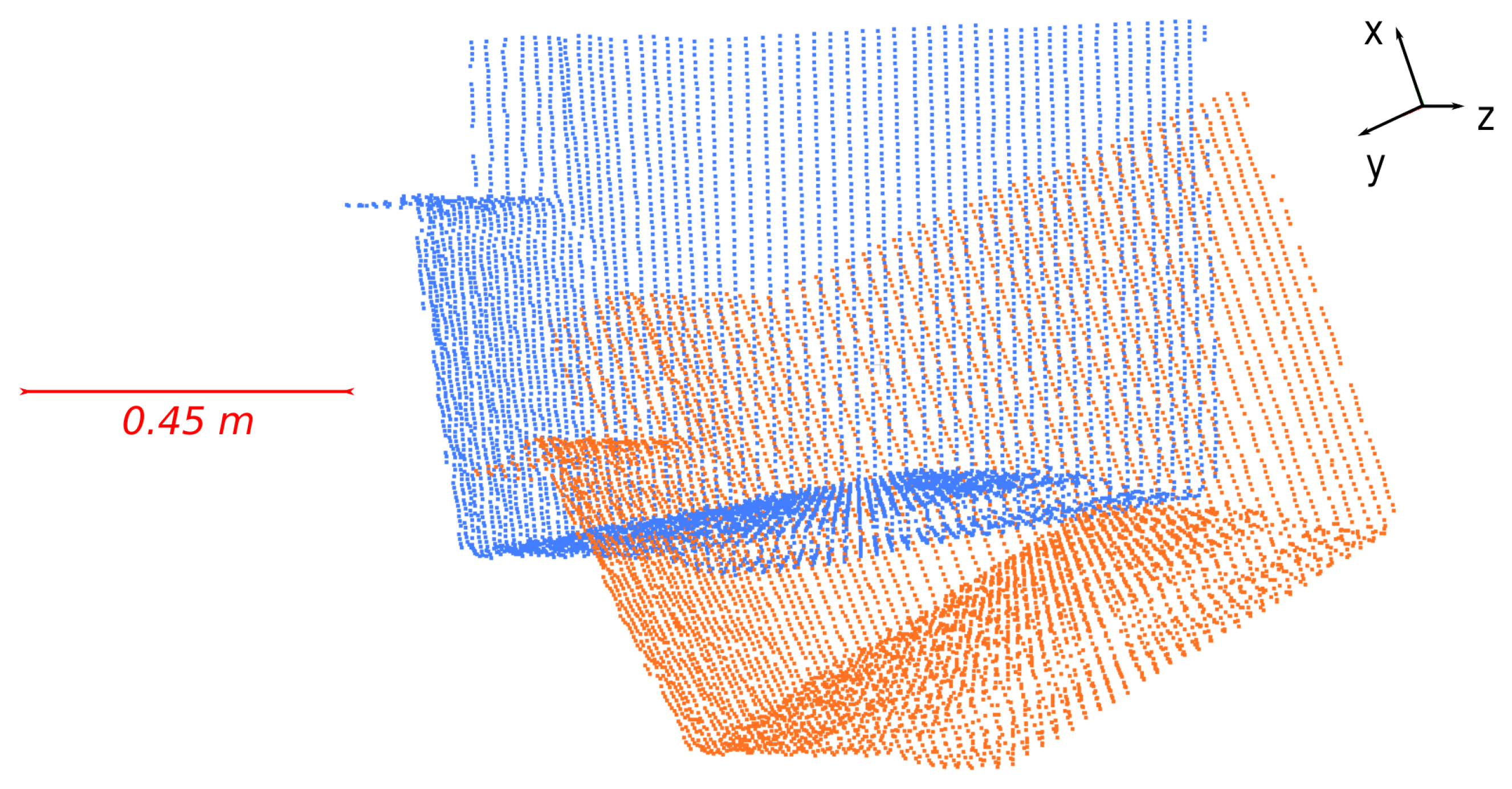

5.5. Failure Cases

6. Discussion

7. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| SSFR | Structured Scene Features-based Registration (our method) |

| DS | Dataset |

| EGI | Extended Gaussian Image |

| CEGI | Complex Extended Gaussian Image |

| ICP | Iterative Closest Point algorithm |

| Super4PCS | Super Four-Point Congruent Sets |

| FGR | Fast Global Registration |

| NDT | Normal Distribution Transform |

| RMSE | Root Mean Square Error |

| GT | Ground Truth |

| TE | Translation Error |

| RE | Rotation Error |

| SR | Success Rate |

| Dataset | Sensor | Scans Number | Points Number | Max Size (m) | Resolution (cm) | Motions | Transformations |
|---|---|---|---|---|---|---|---|
| -H | Hokuyo UTM-30LX | 45 | 365,000 | 11 | 0.61 | small | Yes |
| -L | Leica P20 | 6 | 9 × 10⁶ | 65 | 0.35 | large | Yes |
| -V | Velodyne HDL-32E | 41 | 56,000 | 120 | 7.70 | small | No |
| -S | SICK LMS-200 | 63 | 81,600 | 25 | 8.20 | small | No |

| Method | Parameters Number | RMSE (cm) | T (s) | RMSE (cm) | T (s) | RMSE (cm) | T (s) |
|---|---|---|---|---|---|---|---|
| ICP1 | 1 | 4.23 × 10⁻⁴ | 0.2 | x | x | x | x |
| ICP2 | 2 | 3.77 × 10 | 0.2 | 3.70 × 10 | 0.2 | x | x |
| NDT | 1 | 1.29 × 10⁻¹ | 2.2 | x | x | x | x |
| FGR | 3 | 1.54 × 10⁻³ | 0.5 | 1.74 × 10⁻³ | 0.6 | 2.78 × 10⁻⁵ | 0.6 |
| Super4PCS | 2 | 5.83 | 1.7 | 9.65 | 11.1 | 3.30 | 3.1 |
| Go-ICP | 4 | 5.77 × 10⁻⁴ | 41.5 | 3.31 × 10⁻⁴ | 42.0 | 1.83 × 10 | 82.4 |
| SSFR | 3 | 2.70 × 10⁻³ | 0.3 | 3.98 × 10⁻³ | 0.3 | 3.36 × 10⁻⁴ | 0.3 |

| Method | RMSE (cm) | Success Rate (%) | Time (s) |
|---|---|---|---|
| ICP1 | 2.0 | 54 | 7.4 |
| ICP2 | 1.7 | 82 | 3.2 |
| NDT | 2.4 | 61 | 5.2 |
| FGR | 2.3 | 100 | 3.9 |
| SSFR | 1.8 | 100 | 2.4 |
| GT | 1.4 | 100 | nd |

| Resolution (cm) | ICP Point-to-Plane SR (%) | ICP Point-to-Plane RMSE (cm) | ICP Point-to-Plane Time (s) | FGR SR (%) | FGR RMSE (cm) | FGR Time (s) | SSFR SR (%) | SSFR RMSE (cm) | SSFR Time (s) |
|---|---|---|---|---|---|---|---|---|---|
| 2.6 | 81.8 | 1.7 | 8.7 | 100 | 2.2 | 9.7 | 100 | 1.8 | 25.7 |
| 3.6 | 81.8 | 1.7 | 3.1 | 100 | 2.3 | 4.2 | 100 | 1.8 | 13.1 |
| 4.6 | 84.1 | 1.7 | 1.5 | 100 | 2.6 | 3.2 | 100 | 1.9 | 7.2 |
| 5.9 | 79.5 | 1.8 | 0.9 | 100 | 3.0 | 1.8 | 100 | 1.9 | 4.4 |
| 10.6 | 75.0 | 1.8 | 0.2 | 93.2 | 5.1 | 1.2 | 100 | 2.1 | 0.6 |
| 16.0 | 59.0 | 1.9 | 0.06 | 65.9 | 6.2 | 2.0 | 100 | 2.5 | 0.3 |

| Radius (cm) | RMSE (cm) | Time (s) |
|---|---|---|
| 0.1 | 2.1 | 0.7 |
| 0.5 | 1.8 | 1.0 |
| 1 | 1.8 | 2.4 |
| 2 | 1.8 | 6.5 |
| 3 | 1.8 | 6.1 |
| 4 | 1.8 | 11.0 |
| 6 | 1.8 | 15.3 |
| 10 | 1.8 | 24.2 |

| Scans | RMSE GT (cm) | RMSE (cm) | Translation Error (cm) | Rotation Errors () | Time (s) |
|---|---|---|---|---|---|
| 1_0 | 2.66 | 2.73 | 0.006 | 0.023, 0.025, 0.024 | 15.8 |
| 2_1 | 2.68 | 2.99 | 1.0 | 0.035, 0.045, 0.021 | 23.5 |
| 3_2 | 2.83 | 3.37 | 1.7 | 0.065, 0.008, 0.037 | 27.1 |
| 4_3 | 2.58 | 2.59 | 0.001 | 0.0006, 0.04, 0.006 | 19.8 |
| 5_4 | 2.55 | 2.61 | 0.0007 | 0.008, 0.015, 0.039 | 33.9 |
| Average | 2.66 | 2.91 | 0.54 | 0.026, 0.027, 0.025 | 24.0 |

| Method | Success Rate (%) |
|---|---|
| ICP1 | 12 |
| ICP2 | 20 |
| FGR | 85 |
| SSFR | 100 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Sanchez, J.; Denis, F.; Checchin, P.; Dupont, F.; Trassoudaine, L. Global Registration of 3D LiDAR Point Clouds Based on Scene Features: Application to Structured Environments. Remote Sens. 2017, 9, 1014. https://doi.org/10.3390/rs9101014
