Assessing and Improving the Reliability of Volunteered Land Cover Reference Data

Abstract

1. Introduction

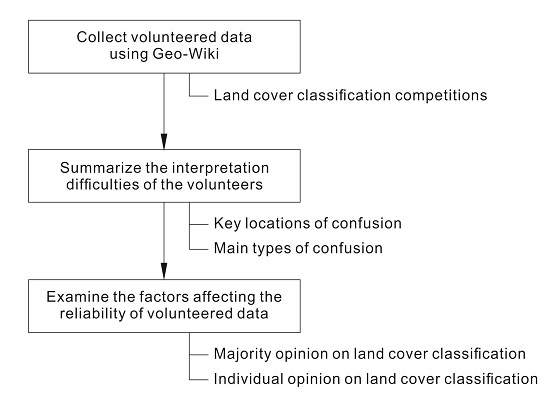

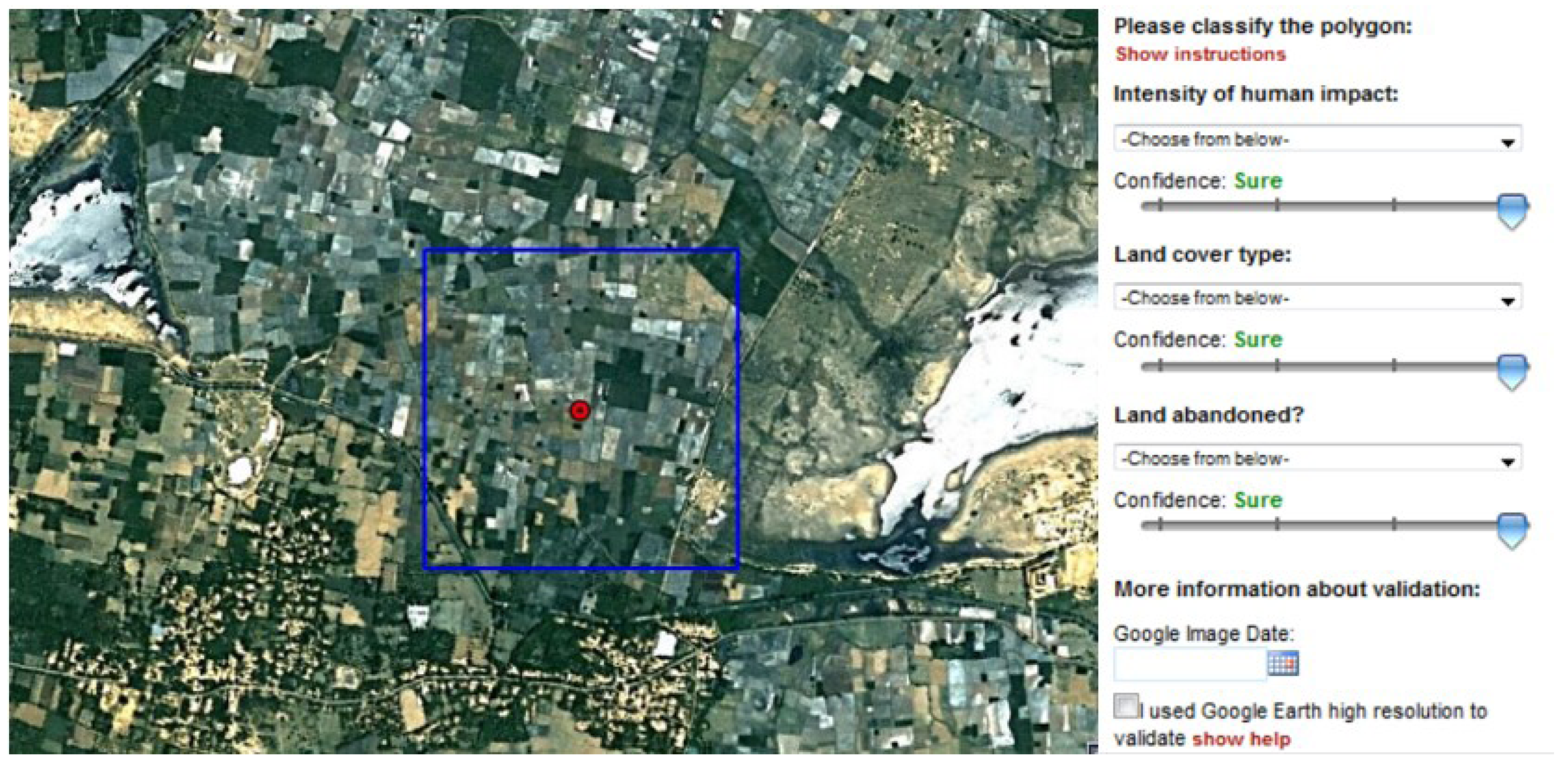

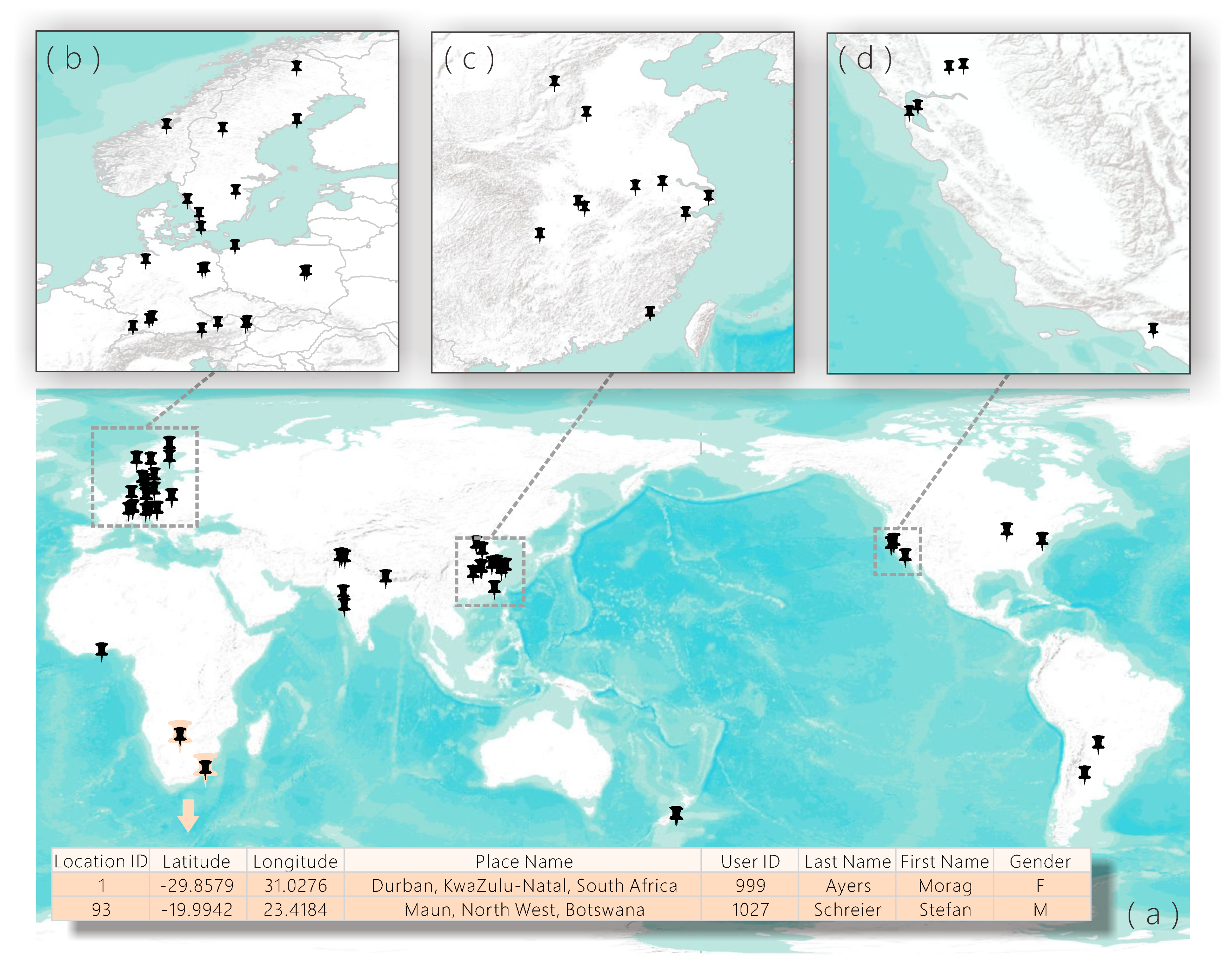

2. Data

3. Methods

3.1. Determining Key Locations of Confusion in the Volunteered Reference Data

3.2. Understanding the Main Types of Land Cover Confusion in the Volunteered Reference Data

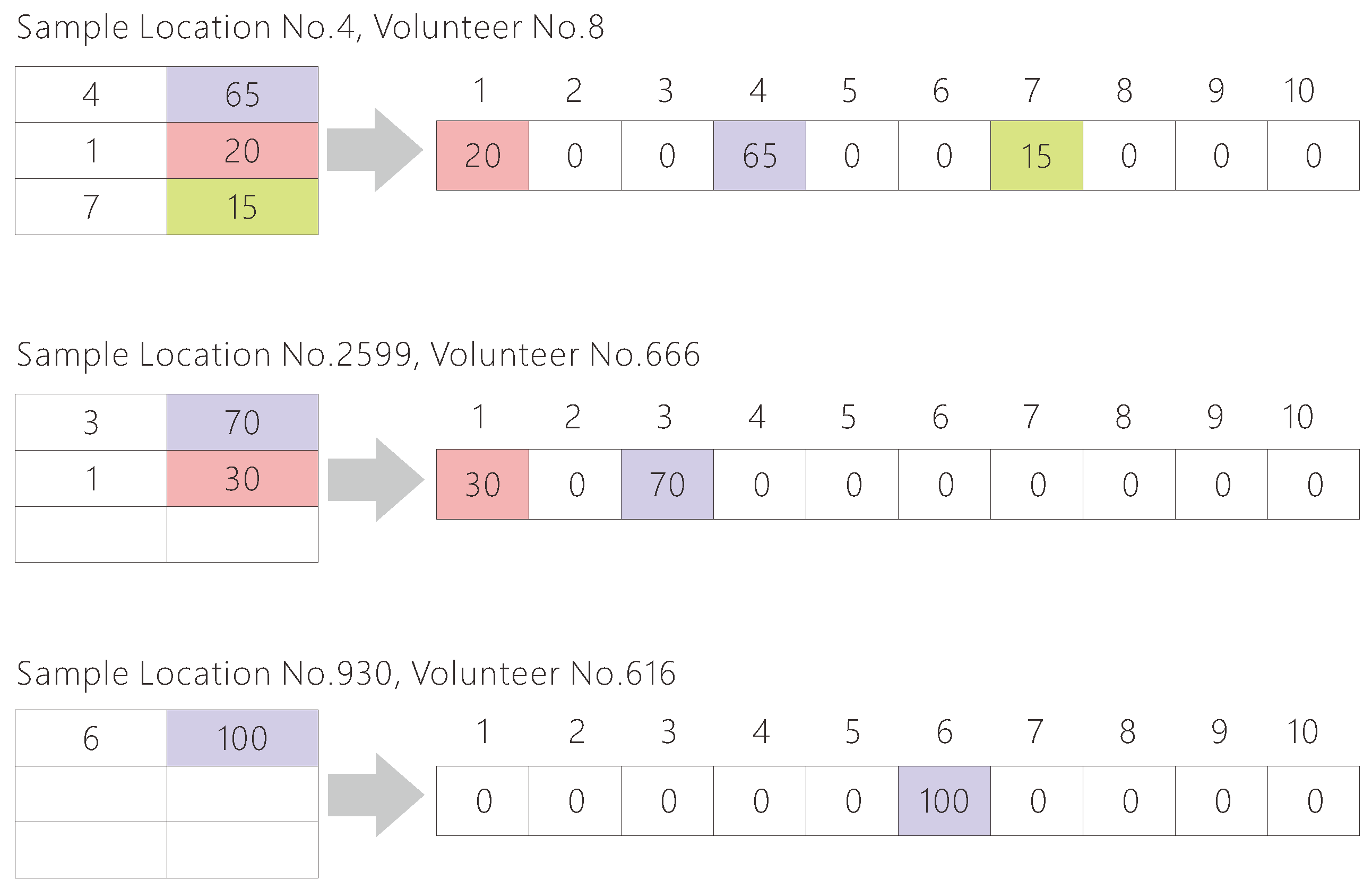

3.3. Reliability of Majority Opinion on Land Cover Classification

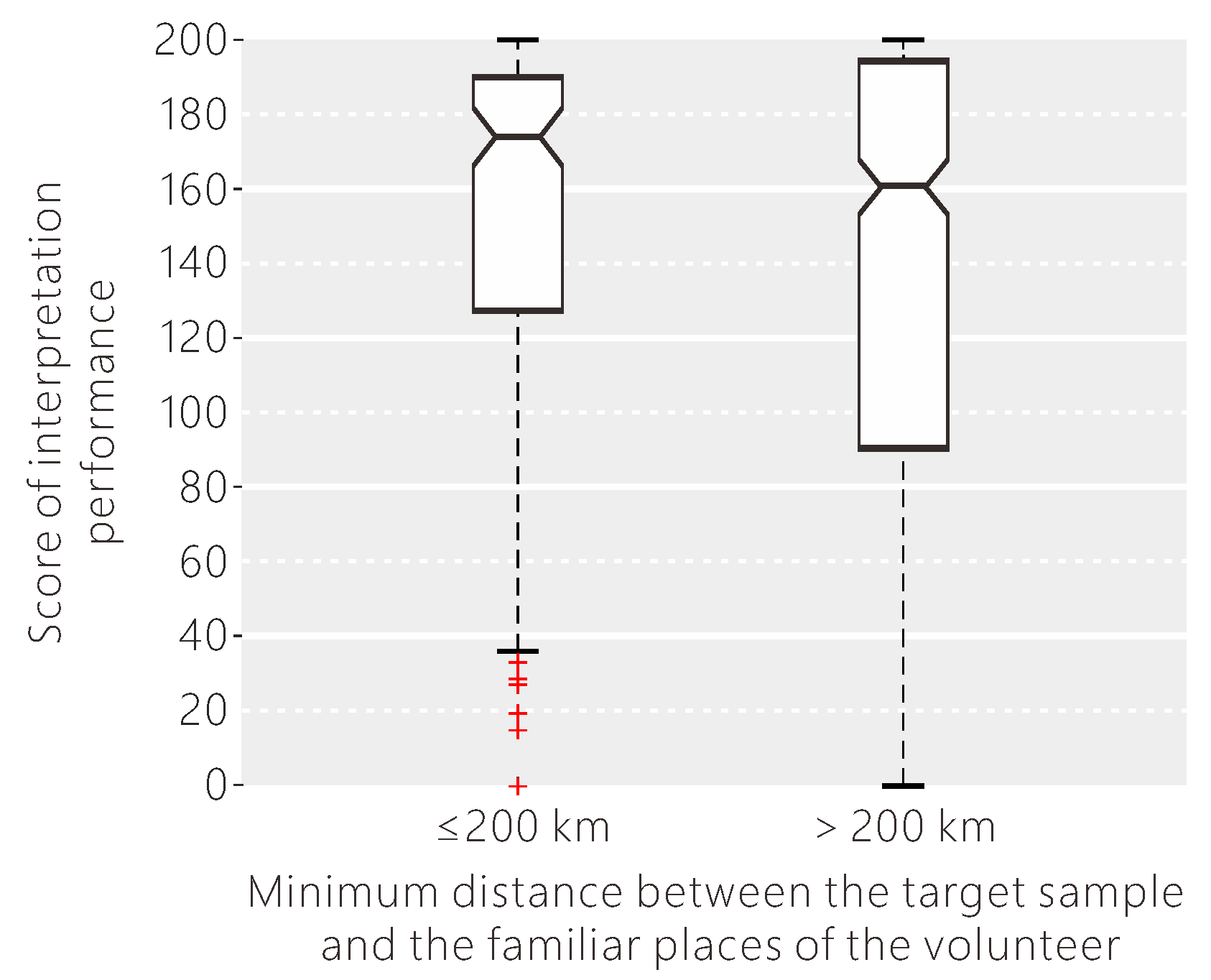

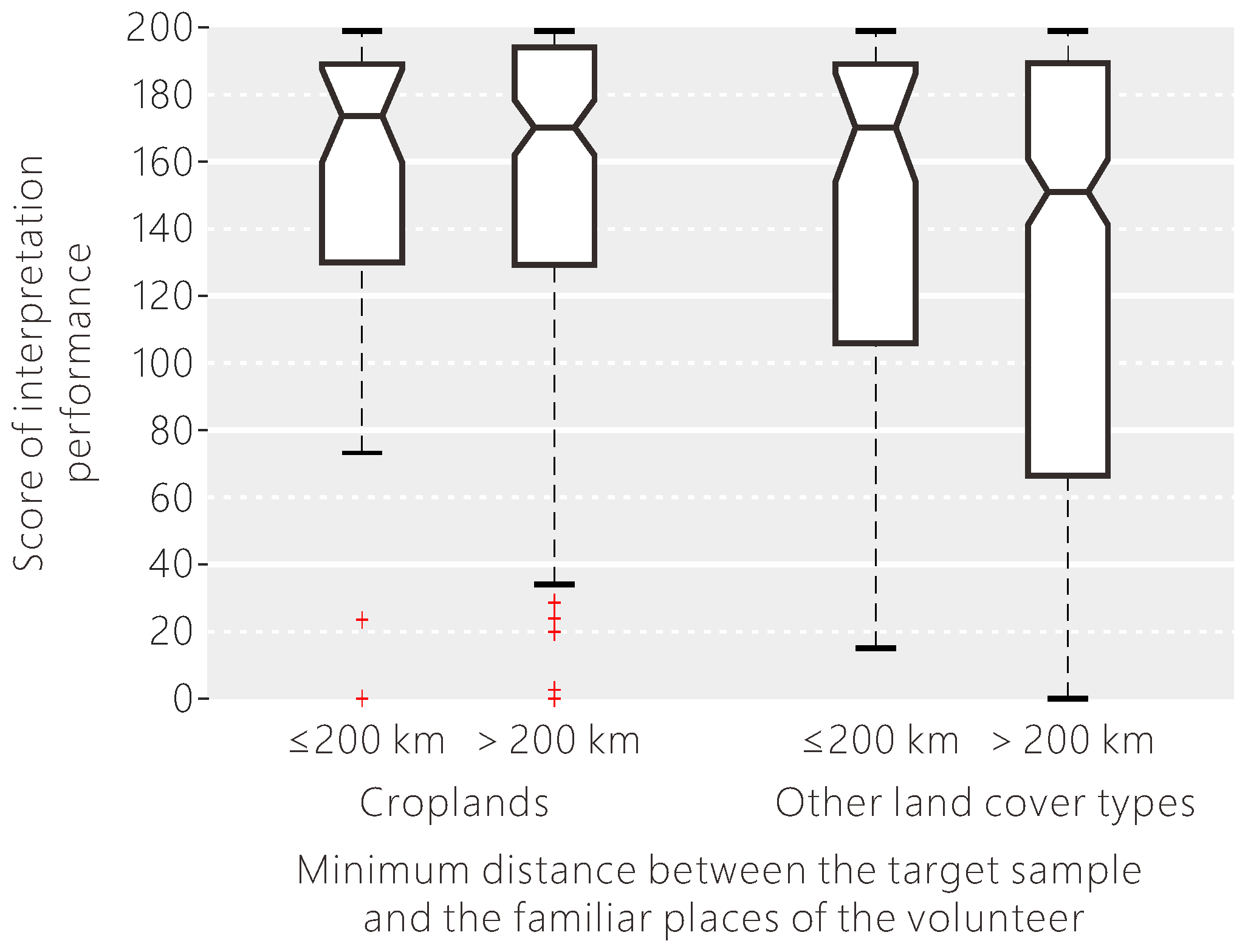

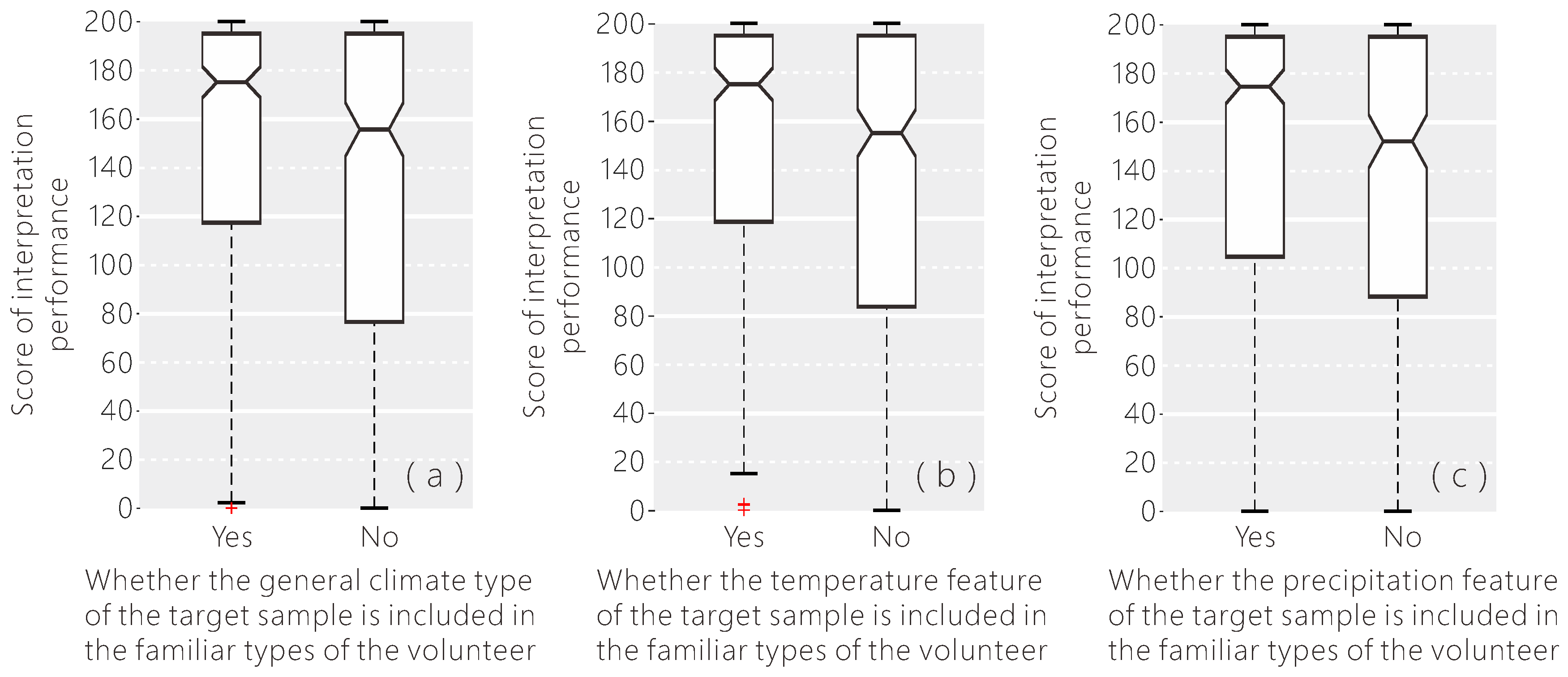

3.4. Reliability of Individual Classifications of Land Cover

4. Results and Discussion

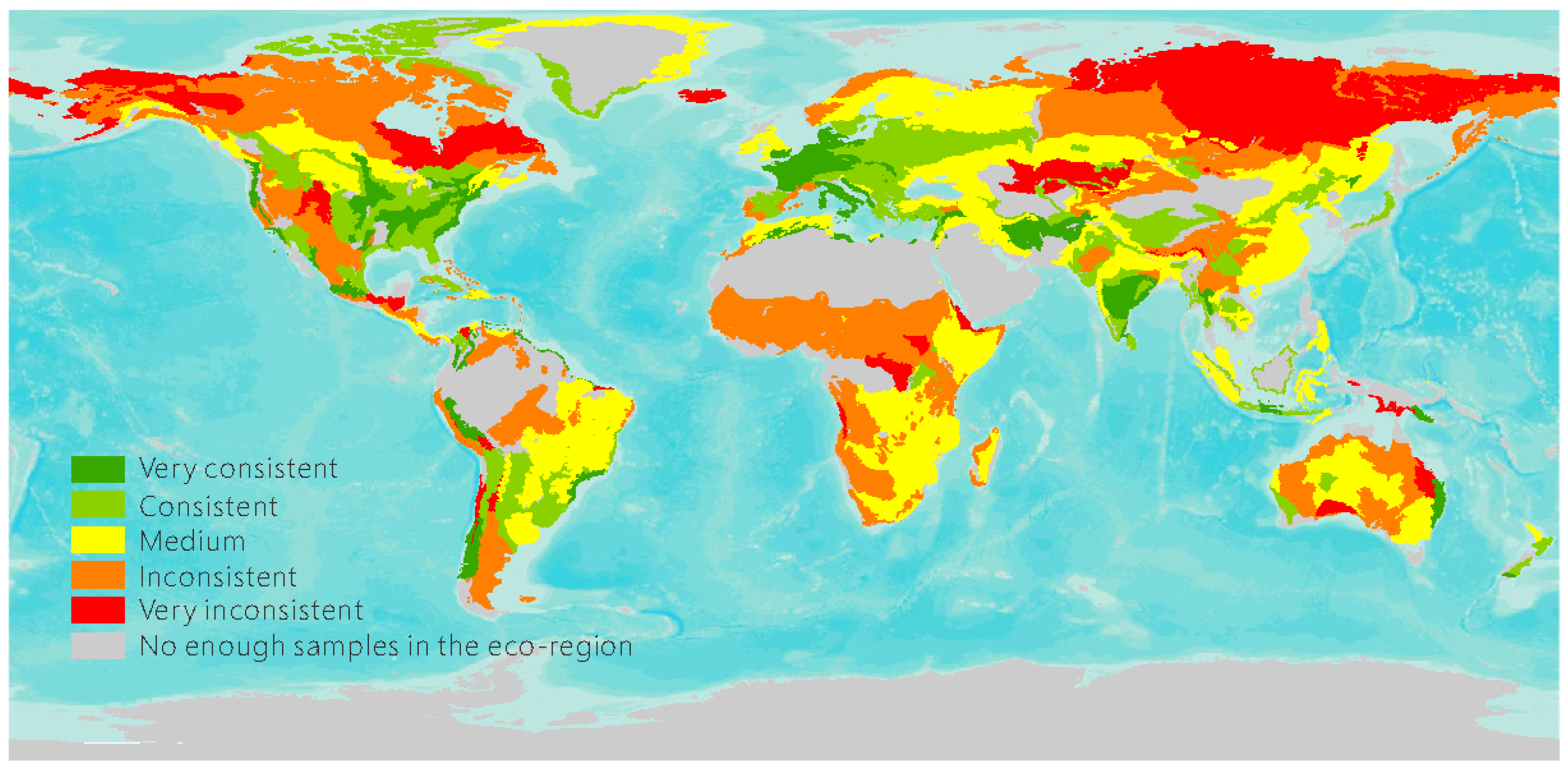

4.1. Determining Key Locations of Confusion in the Volunteered Reference Data

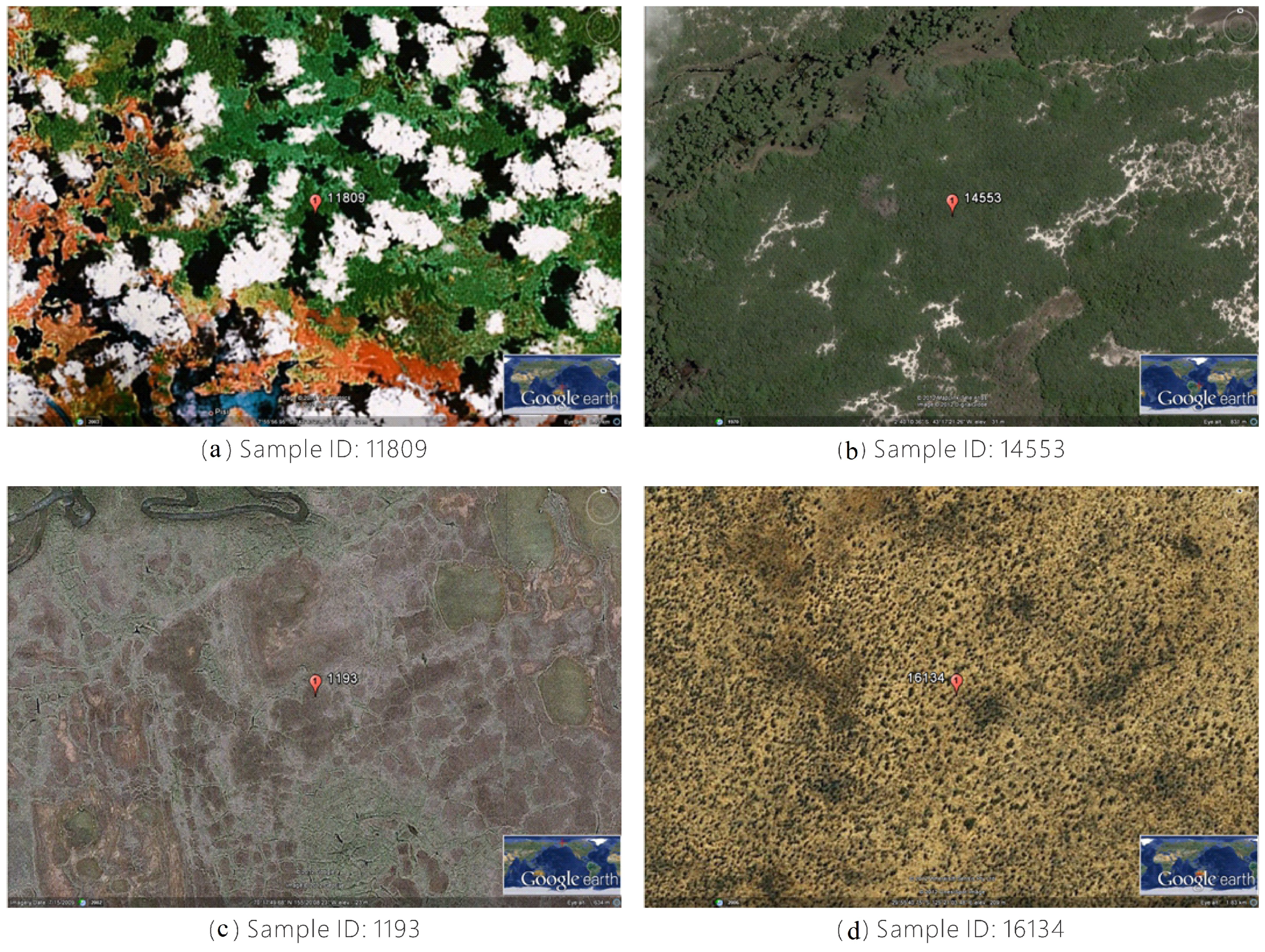

4.2. Understanding the Main Types of Land Cover Confusion in the Volunteered Reference Data

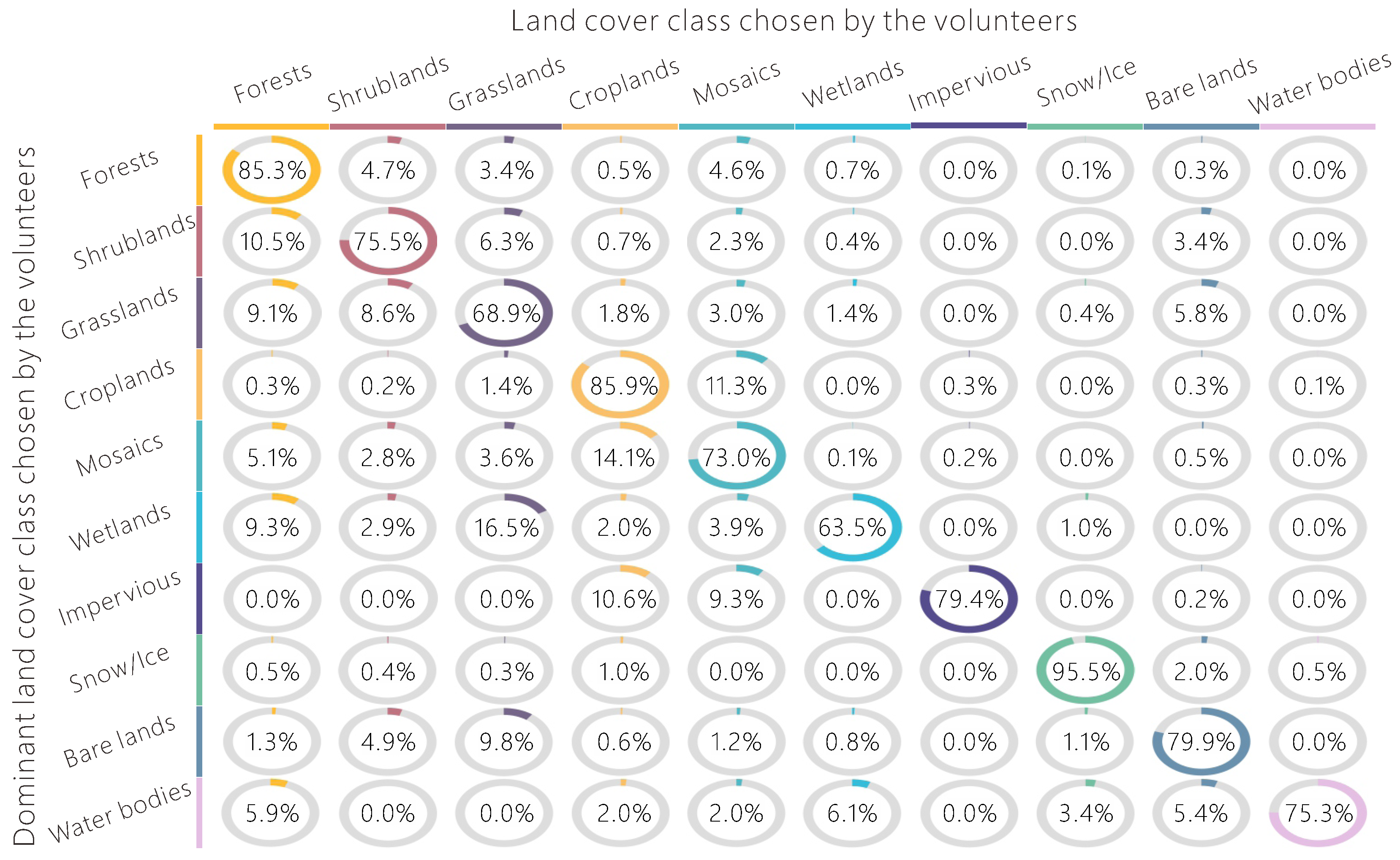

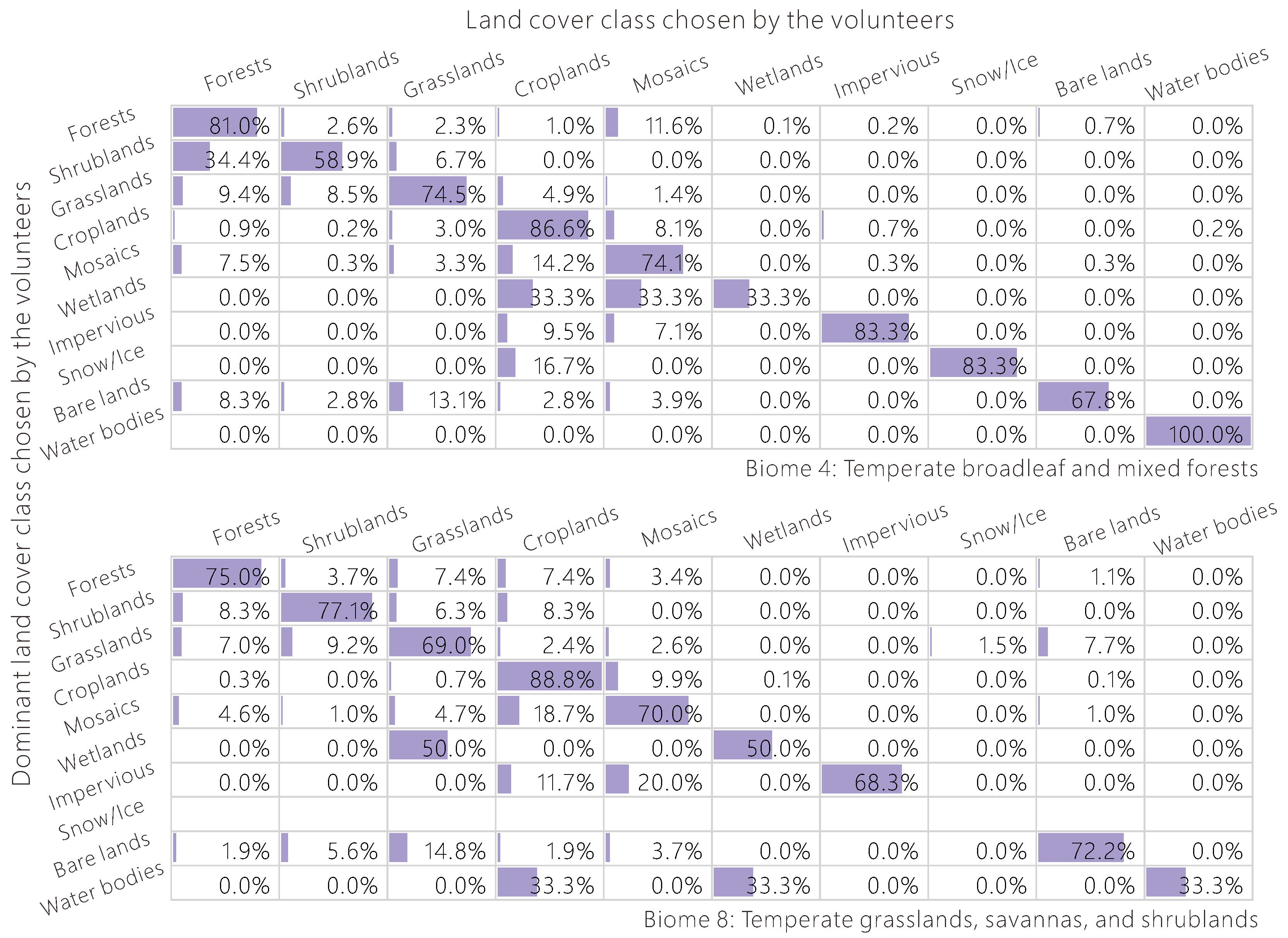

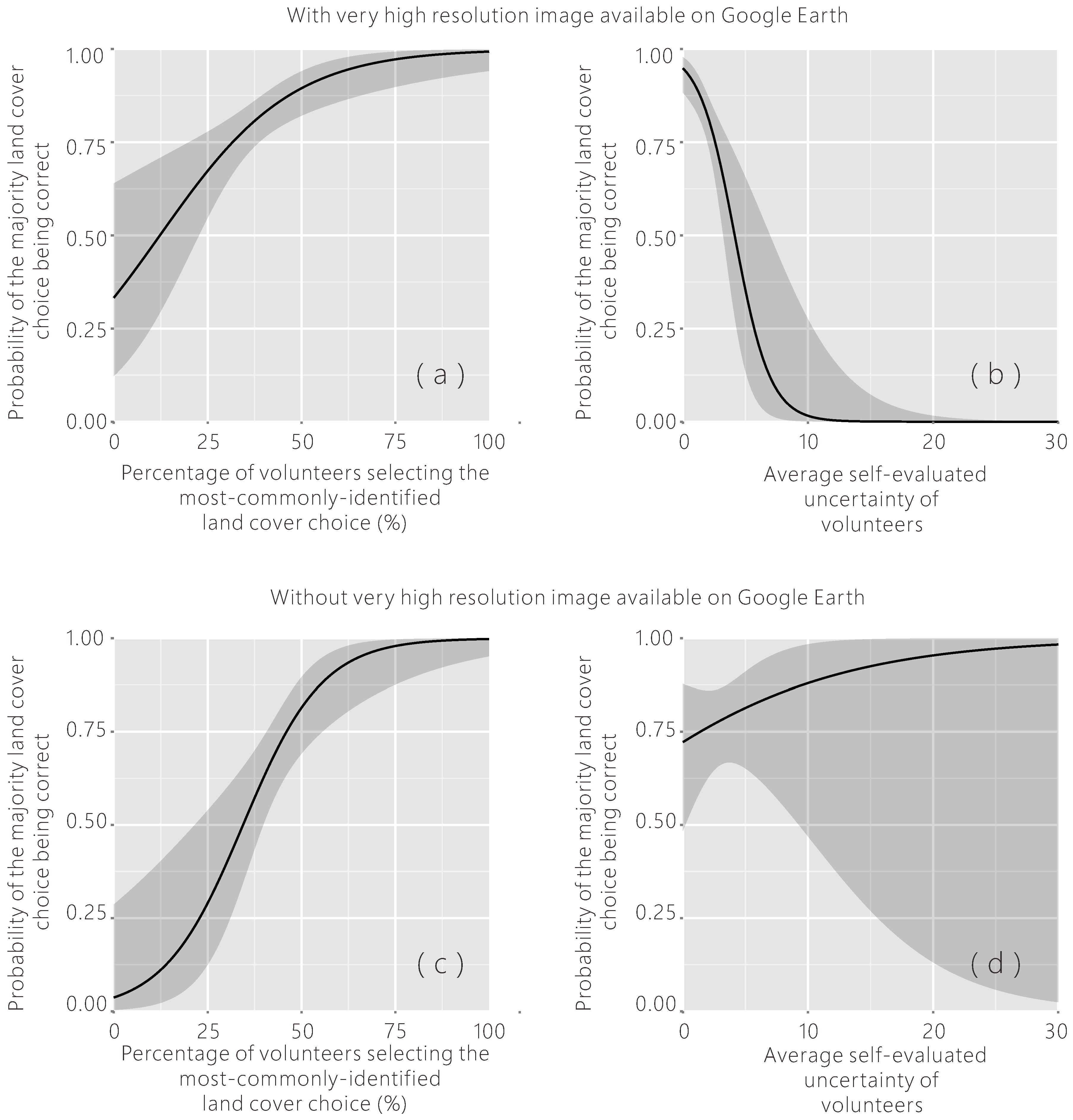

4.3. Reliability of Majority Opinion on Land Cover Classification

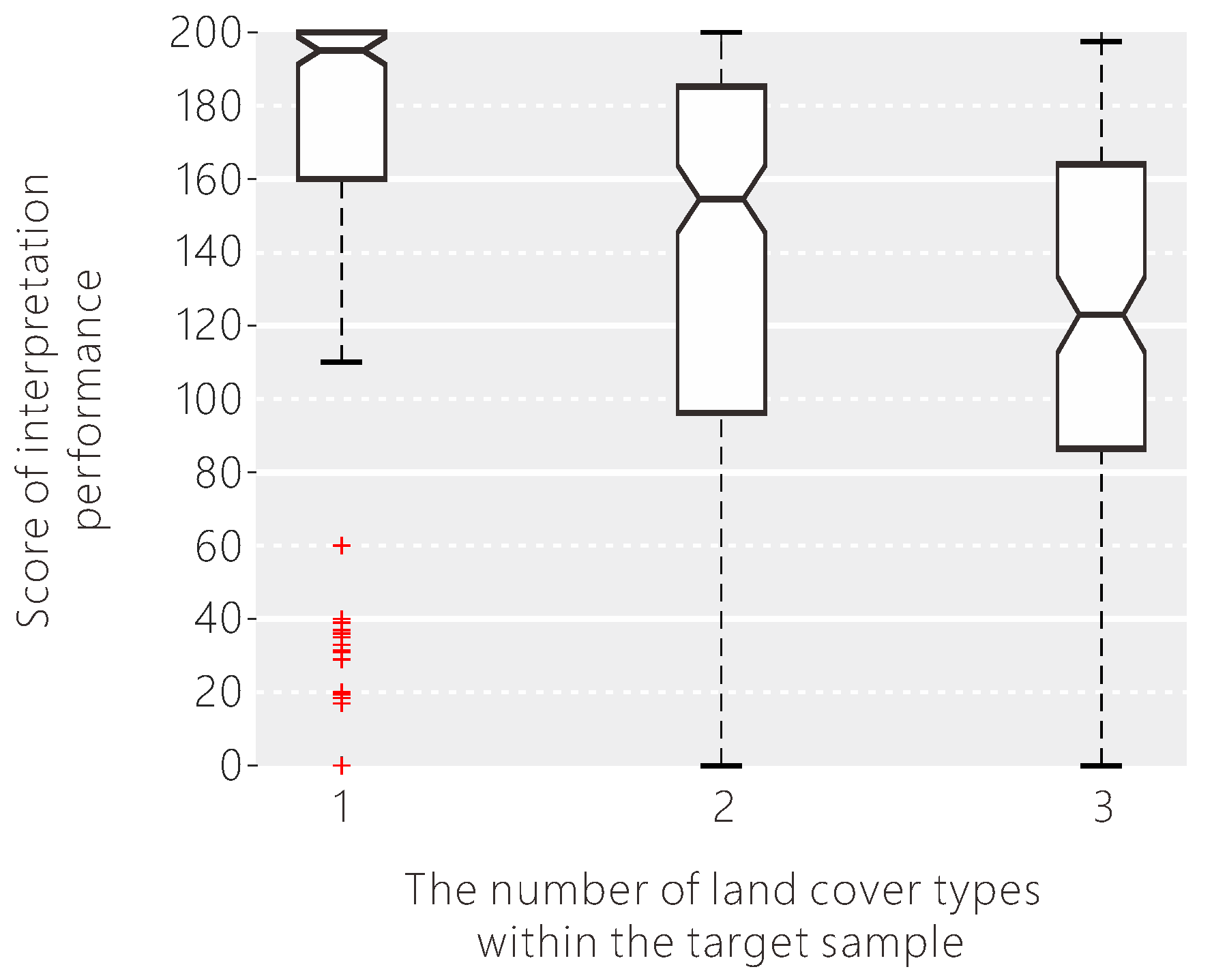

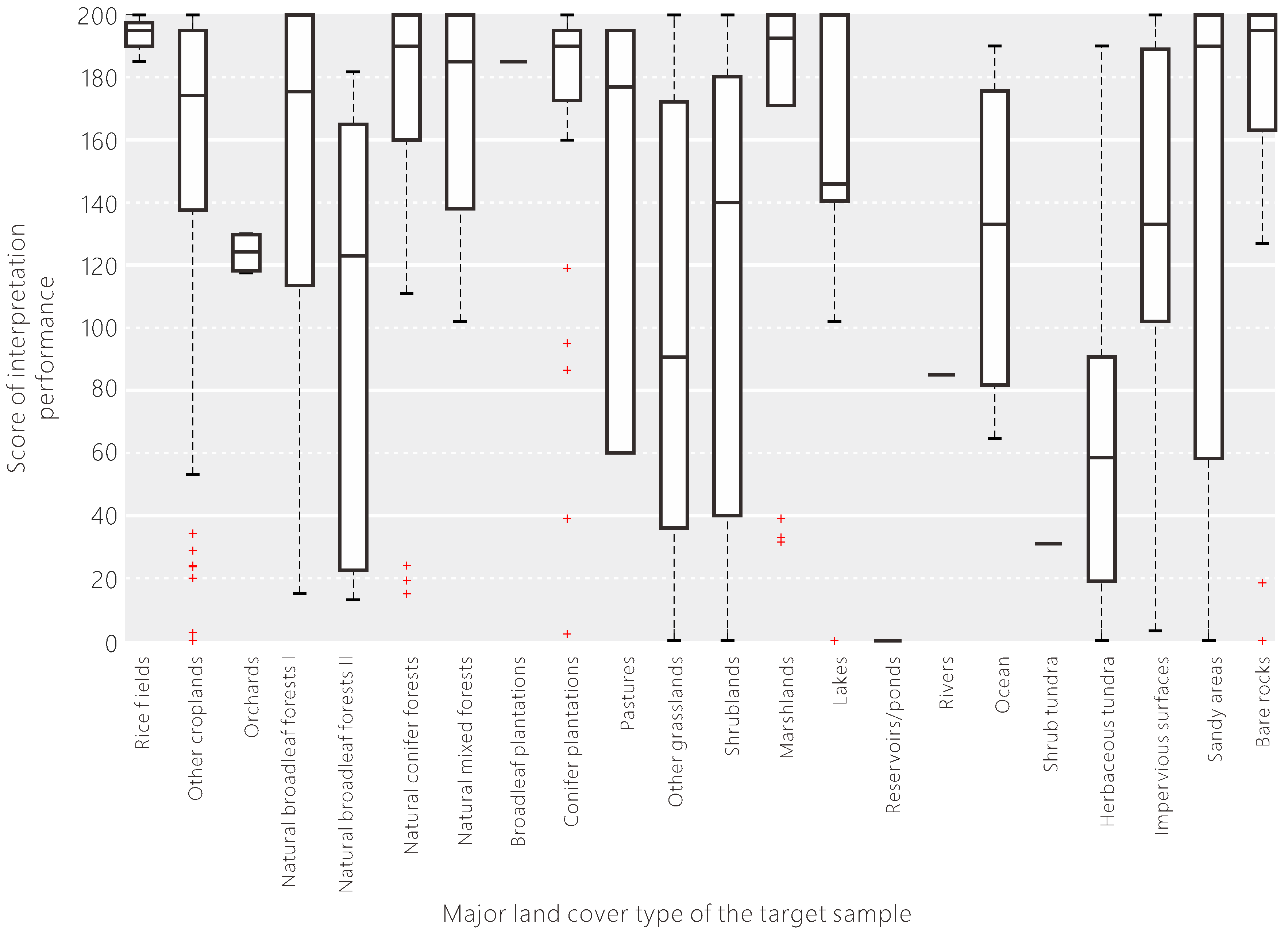

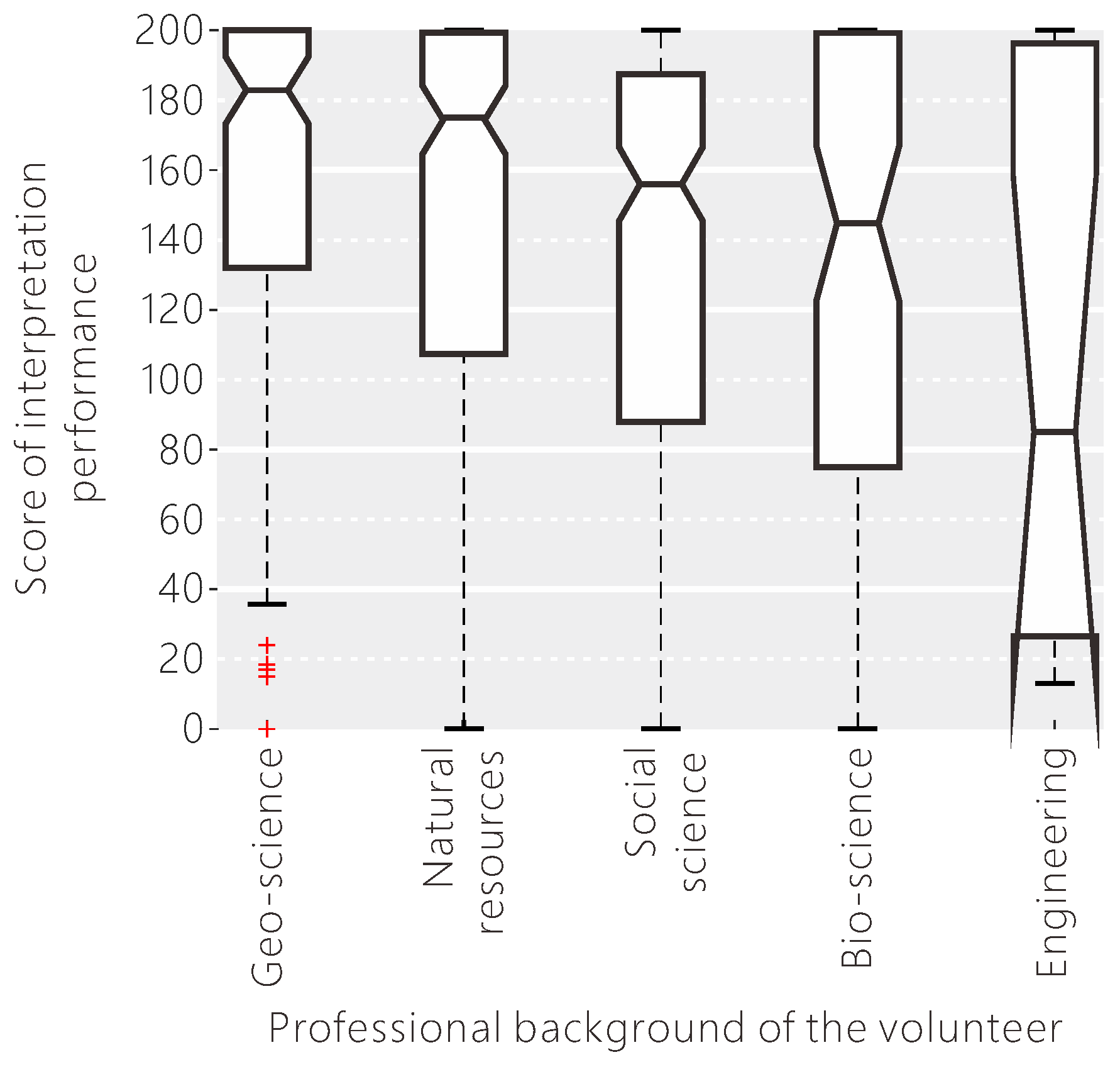

4.4. Reliability of Individual Classifications of Land Cover

5. Conclusions and Recommendations

Acknowledgments

Author Contributions

Conflicts of Interest


| | Estimate | Std. Error | z Value | Pr(>\|z\|) |
|---|---|---|---|---|
| Very high resolution imagery available on Google Earth | | | | |
| (Intercept) | 0.511 | 0.761 | 0.671 | 0.50211 |
| Dominant percentage | 0.057 | 0.017 | 3.294 | 0.00099 *** |
| Average uncertainty | −0.704 | 0.199 | −3.532 | 0.00041 *** |
| Very high resolution imagery not available on Google Earth | | | | |
| (Intercept) | −3.592 | 1.376 | −2.610 | 0.00905 ** |
| Dominant percentage | 0.095 | 0.028 | 3.379 | 0.00073 *** |
| Average uncertainty | 0.104 | 0.146 | 0.713 | 0.47599 |
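The table lists, for each term, an estimate, its standard error, a z value and `Pr(>|z|)`. Assuming these are Wald tests from a binomial generalized linear model (the column naming matches R's `summary.glm` output, although the source does not state which software was used), each z value should equal the estimate divided by its standard error, and each p-value should be the two-sided standard normal tail probability of z. The minimal sketch below re-derives both from the tabulated numbers as a consistency check; the `VHR` row labels, the helper names and the tolerances are illustrative choices, not part of the paper.

```python
import math

# Rows transcribed from the table: (group, term, estimate, std_error, z_reported, p_reported).
# "VHR" abbreviates "very high resolution imagery on Google Earth" (label chosen here for brevity).
ROWS = [
    ("VHR available", "(Intercept)", 0.511, 0.761, 0.671, 0.50211),
    ("VHR available", "Dominant percentage", 0.057, 0.017, 3.294, 0.00099),
    ("VHR available", "Average uncertainty", -0.704, 0.199, -3.532, 0.00041),
    ("VHR not available", "(Intercept)", -3.592, 1.376, -2.610, 0.00905),
    ("VHR not available", "Dominant percentage", 0.095, 0.028, 3.379, 0.00073),
    ("VHR not available", "Average uncertainty", 0.104, 0.146, 0.713, 0.47599),
]


def wald_z(estimate: float, std_error: float) -> float:
    """Wald statistic: the coefficient divided by its standard error."""
    return estimate / std_error


def two_sided_p(z: float) -> float:
    """Two-sided p-value under N(0, 1): P(|Z| > |z|) = erfc(|z| / sqrt(2))."""
    return math.erfc(abs(z) / math.sqrt(2.0))


def check_rows(rows, z_tol=0.1, p_rel_tol=0.02):
    """Return (label, recomputed z, recomputed p, consistent?) for each row.

    Tolerances are hypothetical; they only absorb rounding in the printed table.
    """
    results = []
    for group, term, est, se, z_rep, p_rep in rows:
        z = wald_z(est, se)
        # Use the reported z for the p-value: est and se are rounded to 3 decimals,
        # which shifts the recomputed z slightly.
        p = two_sided_p(z_rep)
        ok = abs(z - z_rep) <= z_tol and math.isclose(p, p_rep, rel_tol=p_rel_tol)
        results.append((f"{group}: {term}", z, p, ok))
    return results


if __name__ == "__main__":
    for label, z, p, ok in check_rows(ROWS):
        print(f"{label:40s} z~{z:7.3f}  p={p:.5f}  consistent={ok}")
```

Because the estimates and standard errors are printed to three decimals, a recomputed z can differ from the reported one in the second decimal (0.057 / 0.017 ≈ 3.35 against the reported 3.294), which is why the p-values are derived from the reported z rather than from the ratio.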

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhao, Y.; Feng, D.; Yu, L.; See, L.; Fritz, S.; Perger, C.; Gong, P. Assessing and Improving the Reliability of Volunteered Land Cover Reference Data. Remote Sens. 2017, 9, 1034. https://doi.org/10.3390/rs9101034


Zhao, Yuanyuan, Duole Feng, Le Yu, Linda See, Steffen Fritz, Christoph Perger, and Peng Gong. 2017. "Assessing and Improving the Reliability of Volunteered Land Cover Reference Data." Remote Sensing 9, no. 10: 1034. https://doi.org/10.3390/rs9101034