A Hybrid Pansharpening Algorithm of VHR Satellite Images that Employs Injection Gains Based on NDVI to Reduce Computational Costs

Abstract

1. Introduction

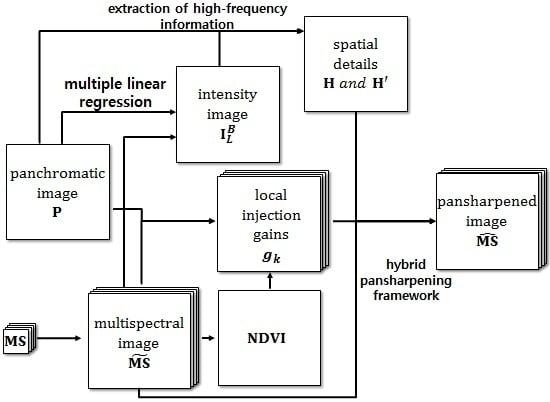

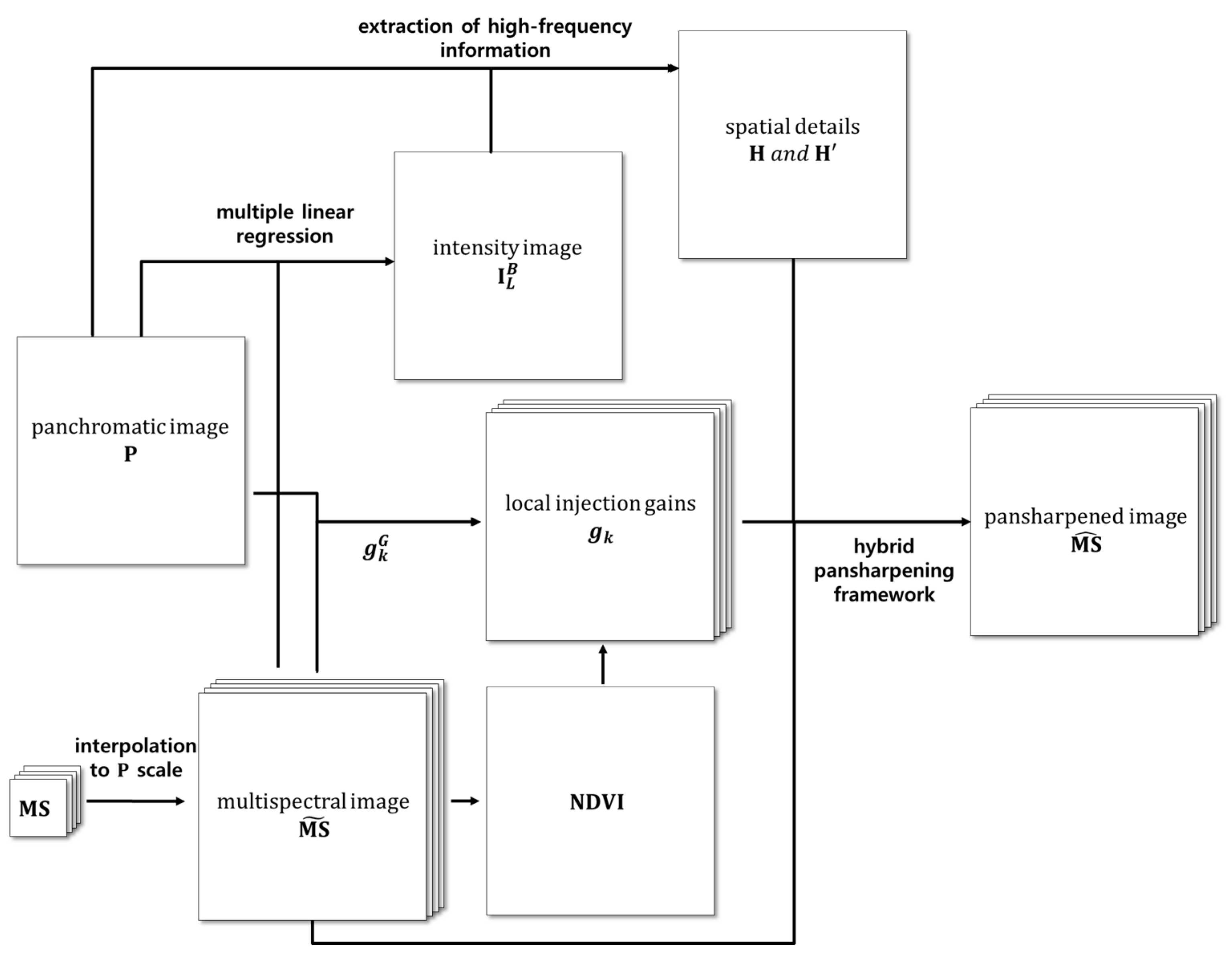

2. Overview and Characteristics of CS-Based Pansharpening Methods

3. The Proposed Methodology

3.1. Similarities between the NDVI and Injection Gain Parameter Models

3.2. Optimization of Pansharpening Parameter Using the NDVI

3.3. Construction of a Modified Hybrid Pansharpening Model

4. Experimental Results

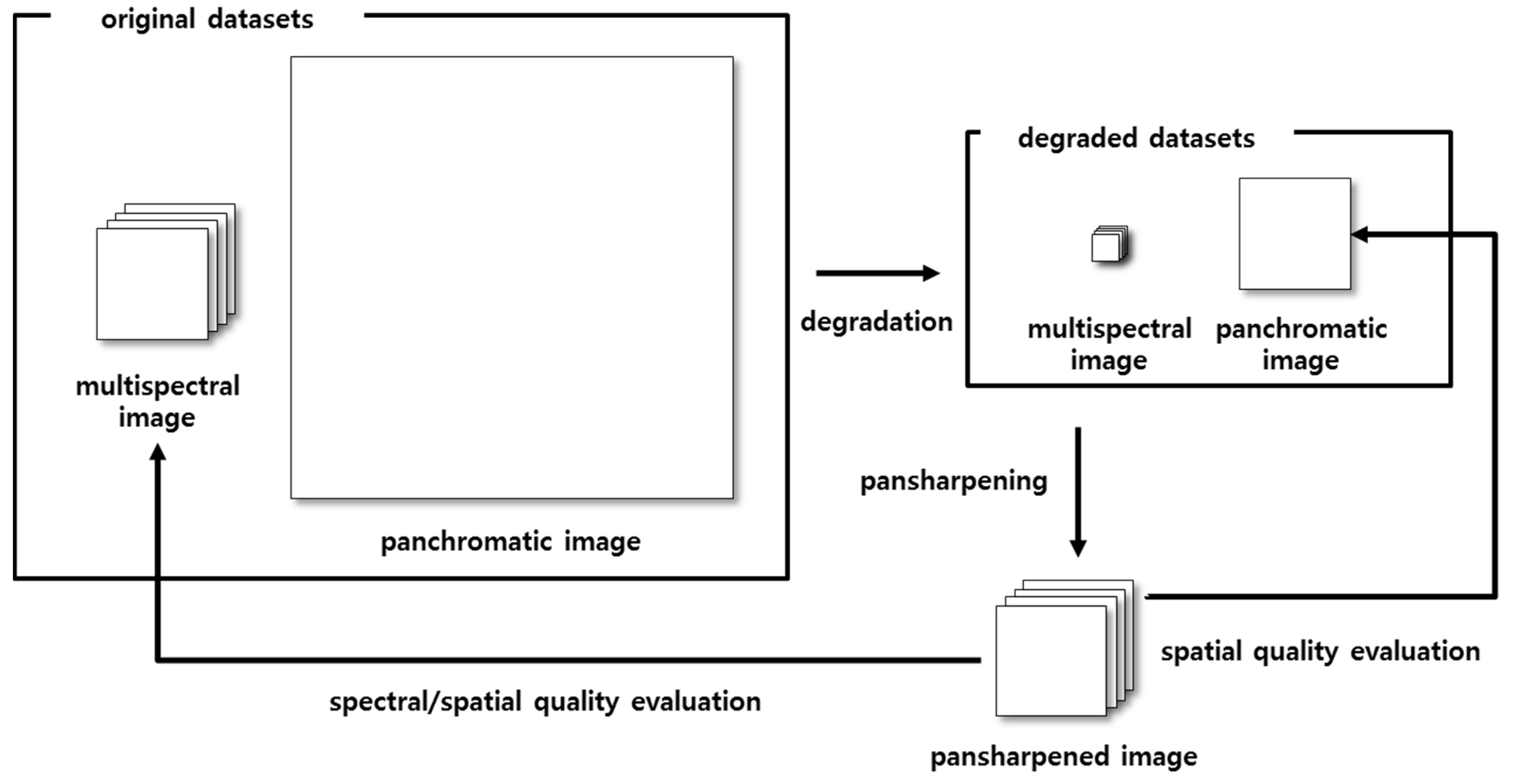

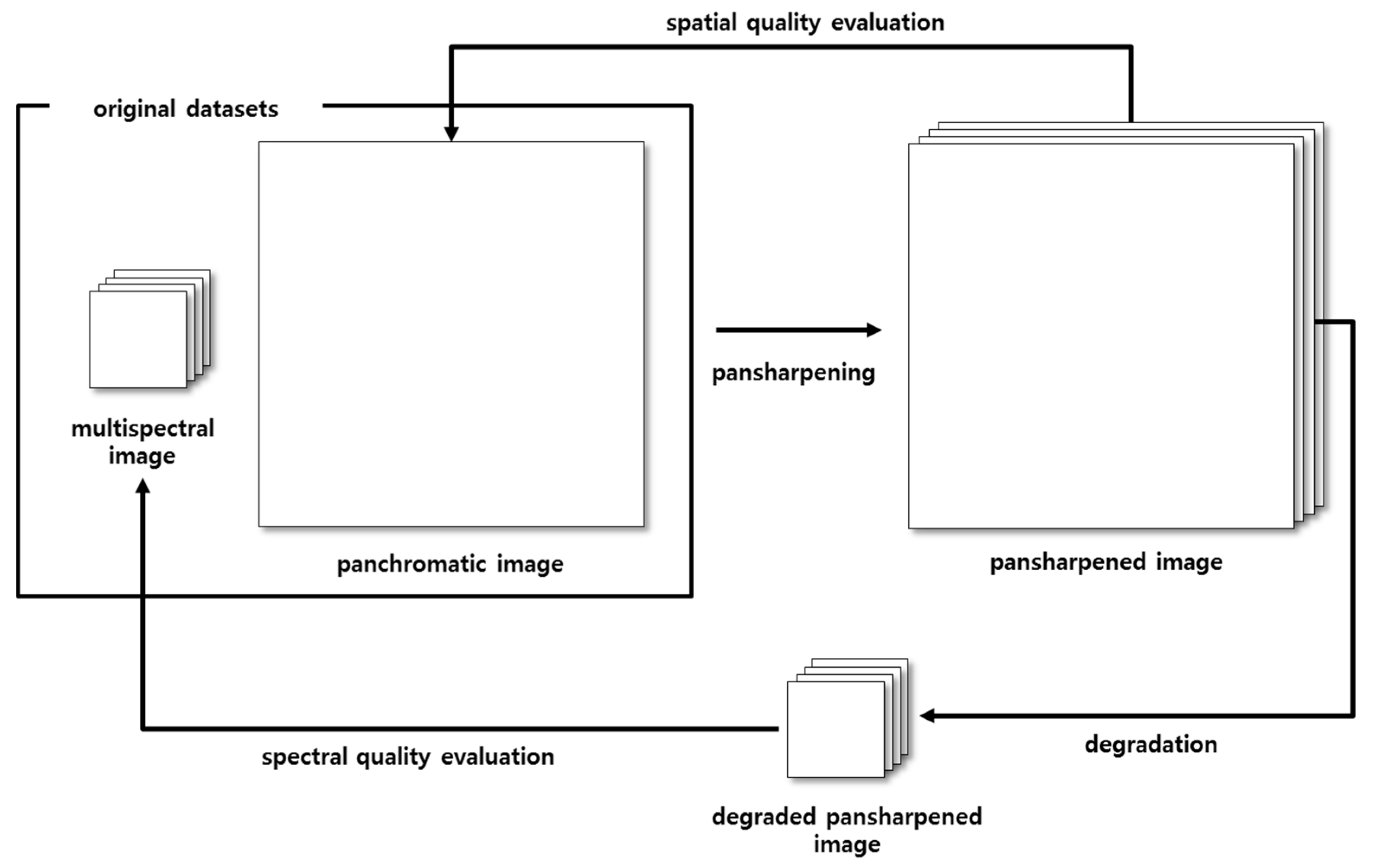

4.1. Quality Assessment of Pansharpened Images

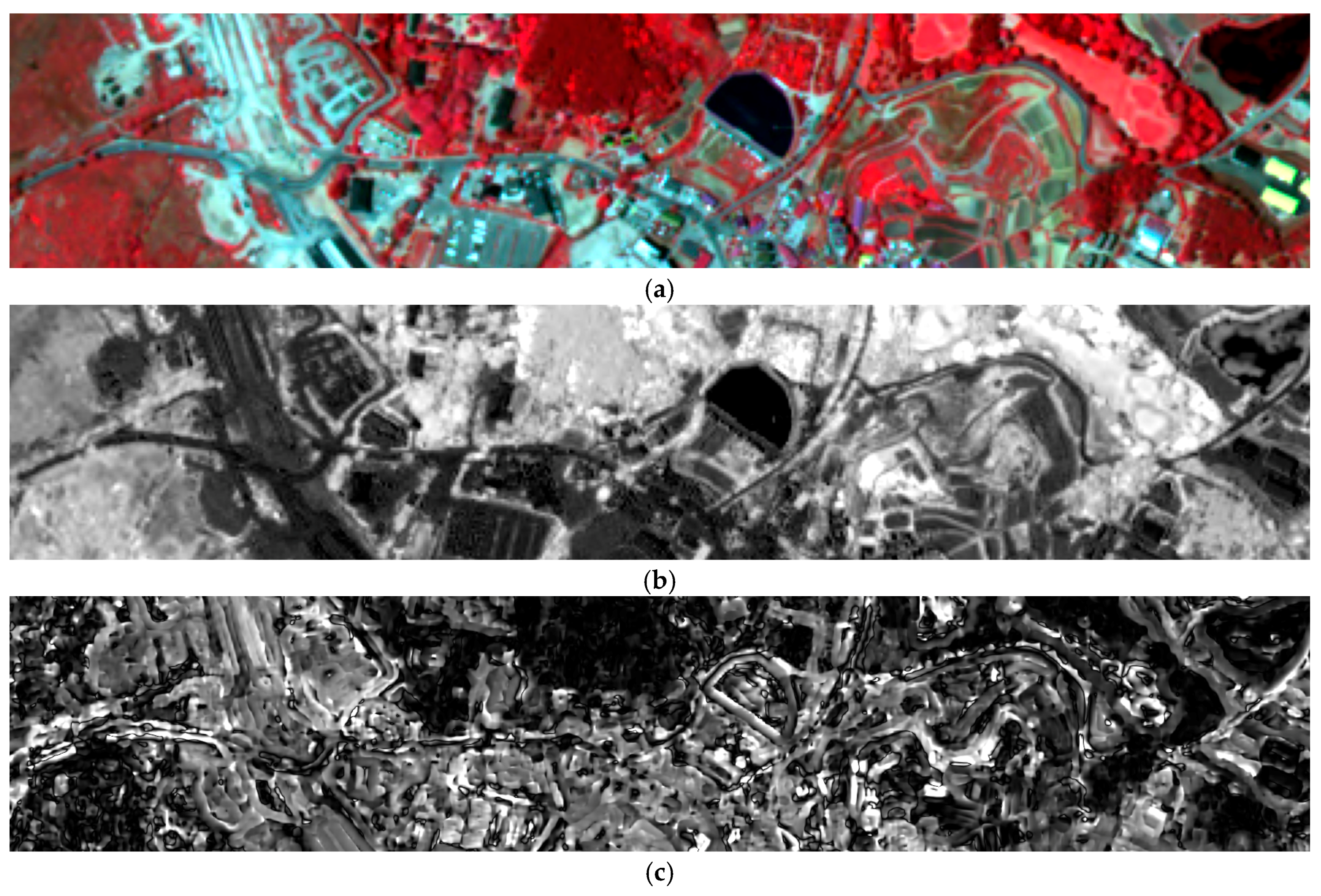

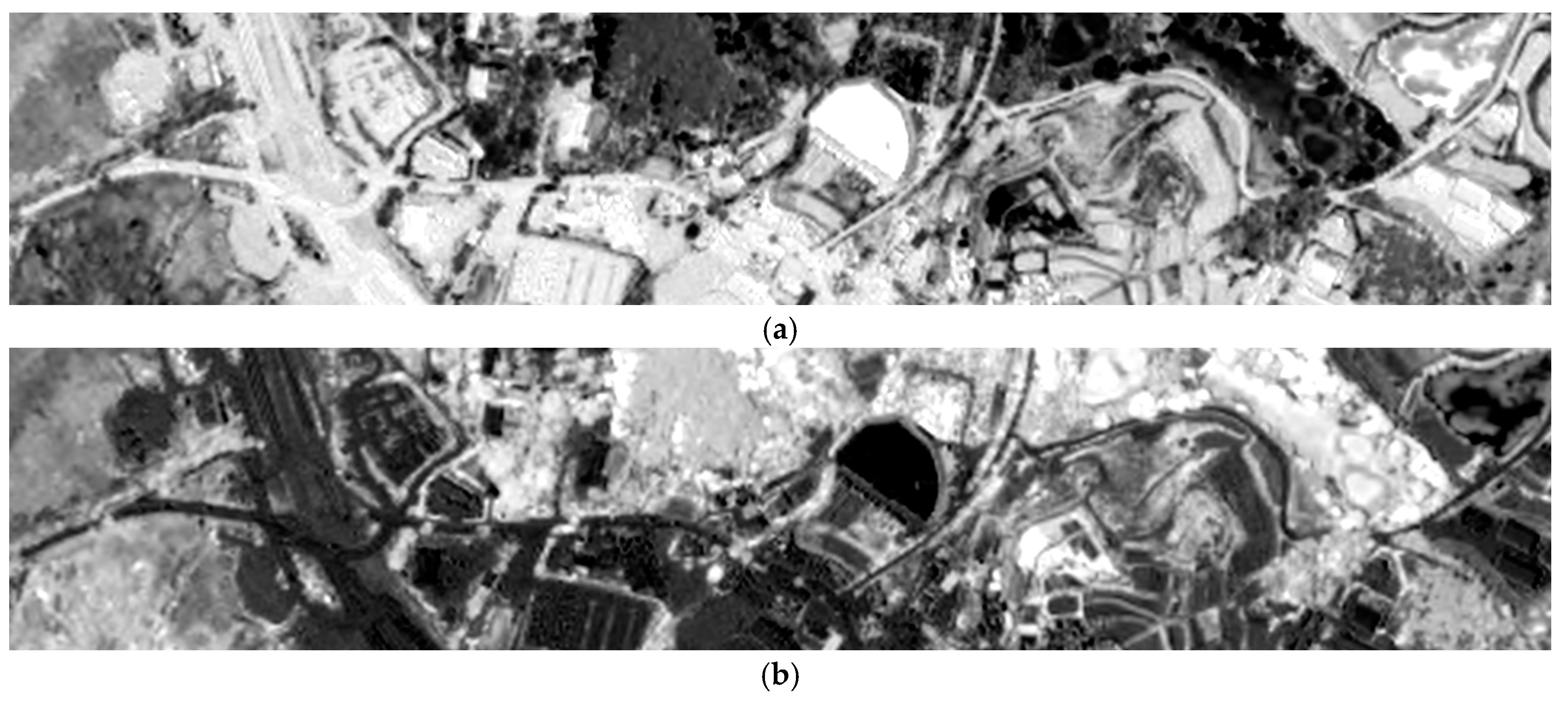

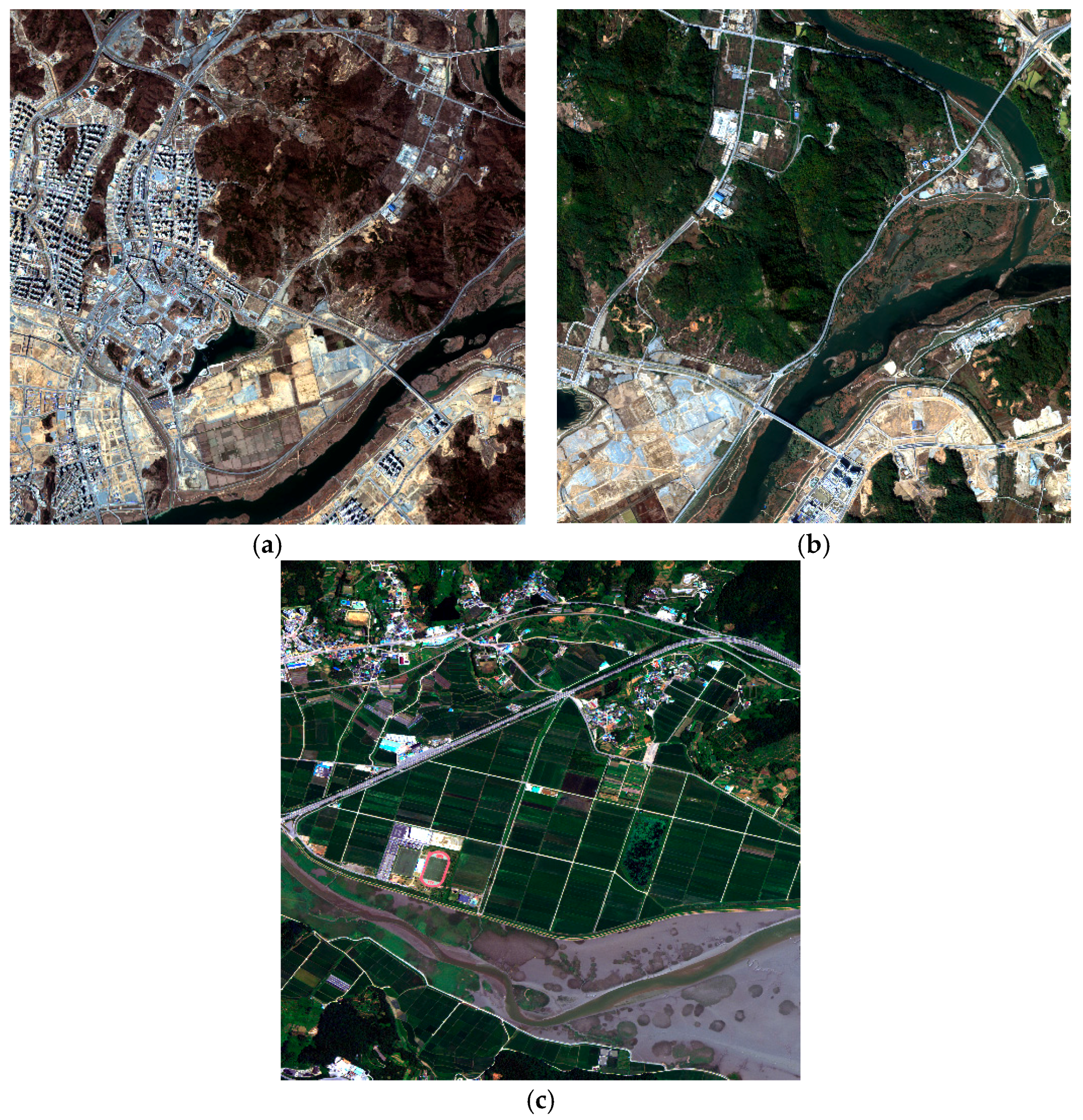

4.2. Test Data

4.3. Experimental Results and Analysis

- EXP: expanded multispectral image. The multispectral image is interpolated to the size of the pansharpened image using a polynomial kernel with 23 coefficients [16].

- GIHS: generalized intensity-hue-saturation image fusion [21].

- GSA: Gram–Schmidt adaptive [29]. GSA is subdivided into GSAG and GSAL, depending on whether local injection gains are used. GSAG uses global injection gains, whereas GSAL utilizes local injection gains determined using overlapping image blocks.

- NNDiffuse: nearest-neighbor diffusion-based pansharpening algorithm [34]. This approach has been implemented in the ENVI software package.
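The difference between global and local injection gains noted for GSAG and GSAL can be illustrated with a minimal sketch. It assumes the commonly cited Gram–Schmidt-style gain, cov(MS band, intensity) / var(intensity), and takes the intensity image as an input (GSA estimates it by regression, which is not shown). The function names, the flat pixel lists, and the non-overlapping blocks are simplifications introduced here for illustration; the list above states that GSAL uses overlapping image blocks.

```python
from statistics import fmean

def injection_gain(band, intensity):
    """Gain g = cov(band, I) / var(I) over the given pixels (assumed GS-style form)."""
    mb, mi = fmean(band), fmean(intensity)
    cov = sum((b - mb) * (i - mi) for b, i in zip(band, intensity))
    var = sum((i - mi) ** 2 for i in intensity)
    return cov / var if var else 0.0

def inject(band, intensity, pan, gain):
    """Fused pixel = upsampled MS pixel + gain * (Pan - intensity)."""
    return [b + gain * (p - i) for b, i, p in zip(band, intensity, pan)]

def fuse_global(band, intensity, pan):
    """One gain for the whole band (the GSAG-like case)."""
    return inject(band, intensity, pan, injection_gain(band, intensity))

def fuse_local(band, intensity, pan, block=4):
    """One gain per block of pixels (GSAL-like; blocks do not overlap here)."""
    fused = []
    for start in range(0, len(band), block):
        sl = slice(start, start + block)
        gain = injection_gain(band[sl], intensity[sl])
        fused.extend(inject(band[sl], intensity[sl], pan[sl], gain))
    return fused

if __name__ == "__main__":
    ms_band = [1.0, 2.0, 3.0, 4.0]      # toy upsampled MS band
    intensity = [2.0, 4.0, 6.0, 8.0]    # toy intensity component
    pan = [3.0, 5.0, 7.0, 9.0]          # toy panchromatic pixels
    print(fuse_global(ms_band, intensity, pan))
    print(fuse_local(ms_band, intensity, pan, block=2))
```

The local variant recomputes the statistics for every block, which is one plausible reason for its much higher run time relative to the global variant.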

4.3.1. Quantitative Analysis

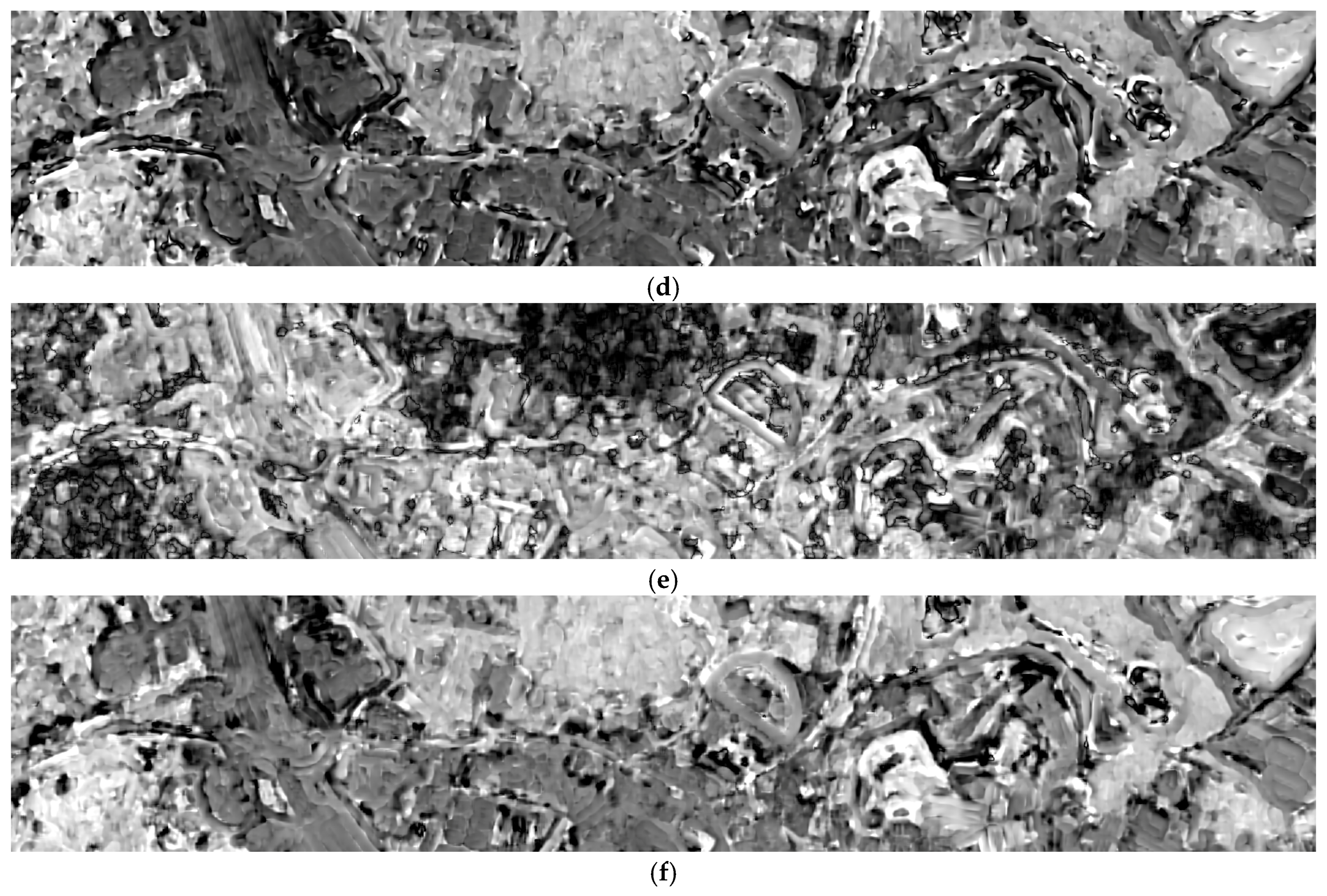

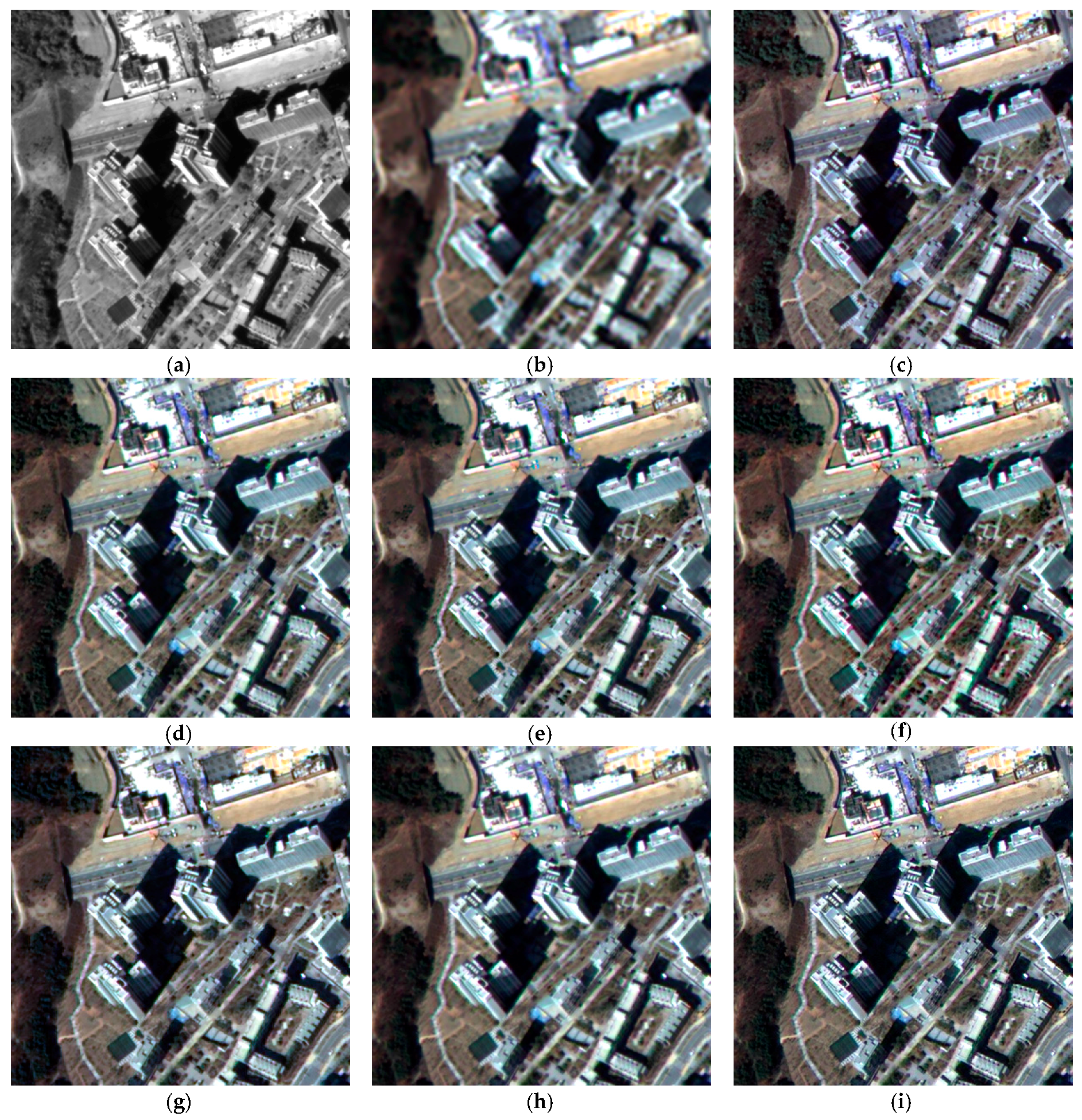

4.3.2. Qualitative Analysis

5. Discussion

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Zhang, Y. Understanding image fusion. Photogramm. Eng. Remote Sens. 2004, 70, 653–660.

- Byun, Y.; Choi, J.; Han, Y. An area-based image fusion scheme for the integration of SAR and optical satellite imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 6, 2212–2220.

- Ghassemian, H. A review of remote sensing image fusion methods. Inf. Fusion 2016, 32, 75–89.

- Nencini, F.; Garzelli, A.; Baronti, S.; Alparone, L. Remote sensing image fusion using the curvelet transform. Inf. Fusion 2007, 8, 143–156.

- Alparone, L.; Wald, L.; Chanussot, J.; Thomas, C.; Gamba, P.; Bruce, L.M. Comparison of pansharpening algorithms: Outcome of the 2006 GRS-S data-fusion contest. IEEE Trans. Geosci. Remote Sens. 2007, 45, 3012–3021.

- Bovolo, F.; Bruzzone, L.; Capobianco, L.; Garzelli, A.; Marchesi, S.; Nencini, F. Analysis of the effects of pansharpening in change detection on VHR images. IEEE Geosci. Remote Sens. Lett. 2010, 7, 53–57.

- Johnson, B. Effects of pansharpening on vegetation indices. ISPRS Int. J. Geo-Inf. 2014, 3, 507–522.

- Laporterie-Déjean, F.; de Boissezon, H.; Flouzat, G.; Lefèvre-Fonollosa, M.-J. Thematic and statistical evaluations of five panchromatic/multispectral fusion methods on simulated PLEIADES-HR images. Inf. Fusion 2005, 6, 193–212.

- Vivone, G.; Alparone, L.; Chanussot, J.; Mura, M.D.; Garzelli, A.; Licciardi, G.A.; Restaino, R.; Wald, L. A critical comparison among pansharpening algorithms. IEEE Trans. Geosci. Remote Sens. 2015, 53, 2565–2586.

- Chavez, P.S., Jr.; Sides, S.C.; Anderson, J.A. Comparison of three different methods to merge multiresolution and multispectral data: Landsat TM and SPOT panchromatic. Photogramm. Eng. Remote Sens. 1991, 57, 295–303.

- González-Audícana, M.; Otazu, X.; Fors, O.; Seco, A. Comparison between Mallat’s and the ‘à trous’ discrete wavelet transform based algorithms for the fusion of multispectral and panchromatic images. Int. J. Remote Sens. 2005, 26, 595–614.

- Otazu, X.; González-Audícana, M.; Fors, O.; Núñez, J. Introduction of sensor spectral response into image fusion methods. Application to wavelet-based methods. IEEE Trans. Geosci. Remote Sens. 2005, 43, 2376–2385.

- Kim, Y.; Lee, C.; Han, D.; Kim, Y.; Kim, Y. Improved additive-wavelet image fusion. IEEE Geosci. Remote Sens. Lett. 2011, 8, 263–267.

- Amro, I.; Mateos, J. General shearlet pansharpening method using Bayesian inference. In Proceedings of the Signal Processing: Algorithms, Architectures, Arrangements, and Applications (SPA), Poznan, Poland, 26–28 September 2013; pp. 231–235.

- Aiazzi, B.; Baronti, S.; Lotti, F.; Selva, M. A comparison between global and context-adaptive pansharpening of multispectral images. IEEE Geosci. Remote Sens. Lett. 2009, 6, 302–306.

- Aiazzi, B.; Alparone, L.; Baronti, S.; Garzelli, A. Context-driven fusion of high spatial and spectral resolution images based on oversampled multiresolution analysis. IEEE Trans. Geosci. Remote Sens. 2002, 40, 2300–2312.

- Aiazzi, B.; Alparone, L.; Baronti, S.; Garzelli, A.; Selva, M. An MTF-based spectral distortion minimizing model for pan-sharpening of very high resolution multispectral images of urban areas. In Proceedings of the 2nd GRSS/ISPRS Joint Workshop Remote Sensing and Data Fusion URBAN Areas, Berlin, Germany, 22–23 May 2003; pp. 90–94.

- Yang, Y.; Wan, W.; Huang, S.; Lin, P.; Que, Y. A novel pan-sharpening framework based on matting model and multiscale transform. Remote Sens. 2017, 9, 391.

- Restaino, R.; Vivone, G.; Mura, M.D.; Chanussot, J. Fusion of multispectral and panchromatic images based on morphological operators. IEEE Trans. Image Process. 2016, 25, 2882–2895.

- Aiazzi, B.; Alparone, L.; Baronti, S.; Garzelli, A.; Selva, M. MTF-tailored multiscale fusion of high-resolution MS and Pan imagery. Photogramm. Eng. Remote Sens. 2006, 72, 591–596.

- Choi, J.; Yu, K.; Kim, Y. A new adaptive component-substitution based satellite image fusion by using partial replacement. IEEE Trans. Geosci. Remote Sens. 2011, 49, 295–309.

- Tu, T.M.; Huang, P.S.; Hung, C.L.; Chang, C.P. A fast intensity-hue-saturation fusion technique with spectral adjustment for IKONOS imagery. IEEE Geosci. Remote Sens. Lett. 2004, 1, 309–312.

- Kim, G.; Park, N.; Choi, S.; Choi, J. Performance evaluation of pansharpening algorithms for Worldview-3 satellite imagery. J. Korean Soc. Surv. Geodesy Photogramm. Cartogr. 2016, 24, 413–423.

- Dou, W.; Chen, Y.; Li, X.; Sui, D.Z. A general framework for component substitution image fusion: An implementation using the fast image fusion method. Comput. Geosci. 2007, 33, 219–228.

- Kim, Y.; Eo, Y.; Kim, Y.; Kim, Y. Generalized IHS-based satellite imagery fusion using spectral response functions. ETRI J. 2011, 33, 497–505.

- Rahmani, S.; Strait, M.; Merkurjev, D.; Moeller, M.; Wittman, T. An adaptive IHS pan-sharpening method. IEEE Geosci. Remote Sens. Lett. 2010, 7, 746–750.

- Chien, C.L.; Tsai, W.H. Image fusion with no gamut problem by improved nonlinear IHS transforms for remote sensing. IEEE Trans. Geosci. Remote Sens. 2014, 52, 651–663.

- Laben, C.A.; Brower, B.V. Process for Enhancing the Spatial Resolution of Multispectral Imagery Using Pan-Sharpening. U.S. Patent 6,011,875, 4 January 2000.

- Aiazzi, B.; Alparone, L.; Baronti, S.; Selva, M. Improving component substitution pansharpening through multivariate regression of MS + Pan data. IEEE Trans. Geosci. Remote Sens. 2007, 45, 3230–3239.

- Xu, Q.; Li, B.; Zhang, Y.; Ding, L. High-fidelity component substitution pansharpening by the fitting of substitution data. IEEE Trans. Geosci. Remote Sens. 2014, 52, 7380–7392.

- Garzelli, A.; Nencini, F.; Capobianco, L. Optimal MMSE pan sharpening of very high resolution multispectral images. IEEE Trans. Geosci. Remote Sens. 2008, 46, 228–236.

- Zhong, S.; Zhang, Y.; Chen, Y.; Wu, D. Combining component substitution and multiresolution analysis: A novel generalized BDSD pansharpening algorithm. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 2867–2875.

- Choi, J.; Yeom, J.; Chang, A.; Byun, Y.; Kim, Y. Hybrid pansharpening algorithm for high spatial resolution satellite imagery to improve spatial quality. IEEE Geosci. Remote Sens. Lett. 2013, 10, 490–494.

- Sun, W.; Chen, B.; Messinger, D.W. Nearest-neighbor diffusion-based pan-sharpening algorithm for spectral images. Opt. Eng. 2014, 53.

- Shahdoosti, H.R.; Javaheri, N. Pansharpening of clustered MS and Pan images considering mixed pixels. IEEE Geosci. Remote Sens. Lett. 2017, 14, 826–830.

- Dhamecha, H.M.; Zaveri, T.H.; Potdar, M.B. NDVI controlled based high frequency injection multispectral image fusion method. In Proceedings of the 2012 IEEE International Geoscience and Remote Sensing Symposium, Munich, Germany, 22–27 July 2012; pp. 3513–3516.

- Xu, Q.; Zhang, Y.; Li, B.; Ding, L. Pansharpening using regression of classified MS and Pan images to reduce color distortion. IEEE Geosci. Remote Sens. Lett. 2015, 12, 28–32.

- Wang, H.; Jiang, W.; Lei, C.; Qin, S.; Wang, J. A robust image fusion method based on local spectral and spatial correlation. IEEE Geosci. Remote Sens. Lett. 2014, 11, 454–458.

- Mura, M.D.; Vivone, G.; Restaino, R.; Chanussot, J. Context-adaptive pansharpening based on binary partition tree segmentation. In Proceedings of the 2014 IEEE International Conference on Image Processing (ICIP), Paris, France, 27–30 October 2014; pp. 3924–3928.

- Kim, G.; Choi, J. Pansharpening optimization of KOMPSAT-3 satellite imagery using NDVI. In Proceedings of the KAGIS Fall Conference 2015 & International Symposium on GIS, Busan, Korea, 5–7 November 2015; pp. 126–127.

- Nouri, H.; Beecham, S.; Anderson, S.; Nagler, P. High spatial resolution WorldView-2 imagery for mapping NDVI and its relationship to temporal urban landscape evapotranspiration factors. Remote Sens. 2014, 6, 508–602.

- Starck, J.L.; Fadili, J.; Murtagh, F. The undecimated wavelet decomposition and its reconstruction. IEEE Trans. Image Process. 2007, 16, 297–309.

- Palsson, F.; Sveinsson, J.R.; Ulfarsson, M.O.; Benediktsson, J.A. Quantitative quality evaluation of pansharpened imagery: Consistency versus synthesis. IEEE Trans. Geosci. Remote Sens. 2016, 54, 1247–1259.

- Alparone, L.; Aiazzi, B.; Baronti, S.; Garzelli, A.; Nencini, F.; Selva, M. Multispectral and panchromatic data fusion assessment without reference. Photogramm. Eng. Remote Sens. 2004, 74, 193–200.

- Wang, Z.; Bovik, A.C. A universal image quality index. IEEE Signal Process. Lett. 2002, 9, 81–84.

- Garzelli, A.; Nencini, F. Hypercomplex quality assessment of multi-/hyper-spectral images. IEEE Geosci. Remote Sens. Lett. 2009, 6, 662–665.

- Li, Z.; Jing, Z.; Yang, X.; Sun, S. Color transfer based remote sensing image fusion using non-separable wavelet frame transform. Pattern Recognit. Lett. 2005, 26, 2006–2014.

- Yang, S.; Wang, M.; Jiao, L. Fusion of multispectral and panchromatic images based on support value transform and adaptive principal component analysis. Inf. Fusion 2012, 13, 177–184.

| Correlation Coefficient | Local Injection Gain by GSA | Local Injection Gain by Hybrid Pansharpening |
|---|---|---|
| Band 1 (blue) | −0.3120 | −0.4460 |
| Band 2 (green) | −0.4114 | −0.5104 |
| Band 3 (red) | −0.3363 | −0.4772 |
| Band 4 (NIR) | 0.4694 | 0.5095 |
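Correlation coefficients such as those tabulated above could be reproduced along the following lines. This is a sketch under assumptions: it supposes, as the title of Section 3.1 suggests, that each value is the Pearson correlation between the NDVI and a band's local injection gain map, and it uses the standard definition NDVI = (NIR − Red) / (NIR + Red). The function names and flat pixel lists are hypothetical.

```python
import math

def ndvi(nir, red):
    """Per-pixel NDVI = (NIR - Red) / (NIR + Red); 0.0 where the sum is zero."""
    return [(n - r) / (n + r) if (n + r) else 0.0 for n, r in zip(nir, red)]

def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

if __name__ == "__main__":
    nir = [0.60, 0.55, 0.20, 0.15]    # toy reflectances
    red = [0.10, 0.12, 0.18, 0.14]
    gain = [0.90, 0.85, 0.40, 0.35]   # toy local gains for one band
    print(pearson(ndvi(nir, red), gain))
```

A negative coefficient for the visible bands and a positive one for the NIR band, as in the table, would indicate that the gains fall with vegetation density in the visible bands and rise with it in the NIR band.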

| Sensor | KOMPSAT-3 | KOMPSAT-3A | WorldView-3 |
|---|---|---|---|
| Location | Sejong, Korea | Sejong, Korea | Beolgyo, Korea |
| Date | 25 March 2015 | 28 October 2015 | 26 July 2015 |
| Multispectral resolution | 2.8 m | 2.2 m | 1.2 m |
| Multispectral size (pixels) | 2048 × 2048 | 2048 × 2048 | 2048 × 2048 |
| Panchromatic resolution | 0.7 m | 0.55 m | 0.3 m |
| Panchromatic size (pixels) | 8192 × 8192 | 8192 × 8192 | 8192 × 8192 |
| Wavelength: panchromatic | 450–900 nm | 450–900 nm | 448–808 nm |
| Wavelength: coastal blue | - | - | 397–454 nm |
| Wavelength: blue | 450–520 nm | 450–520 nm | 445–517 nm |
| Wavelength: green | 520–600 nm | 520–600 nm | 507–586 nm |
| Wavelength: yellow | - | - | 580–629 nm |
| Wavelength: red | 630–690 nm | 630–690 nm | 626–696 nm |
| Wavelength: red edge | - | - | 698–749 nm |
| Wavelength: NIR1 | 760–900 nm | 760–900 nm | 765–899 nm |
| Wavelength: NIR2 | - | - | 857–1039 nm |

| Dataset | Algorithm | ERGAS | SAM | Q4/Q8 | sCC | AG |
|---|---|---|---|---|---|---|
| KOMPSAT-3 | EXP | 0.8118 | 0.6409 | 0.9870 | 0.3036 | 63.82 |
| KOMPSAT-3 | GIHS | 1.6505 | 1.0255 | 0.9088 | 0.9886 | 110.48 |
| KOMPSAT-3 | GSAG | 1.0023 | 0.7336 | 0.9671 | 0.9858 | 113.68 |
| KOMPSAT-3 | GSAL | 1.1029 | 0.9480 | 0.9608 | 0.9176 | 103.75 |
| KOMPSAT-3 | BDSD | 0.9532 | 0.8223 | 0.9791 | 0.9439 | 117.73 |
| KOMPSAT-3 | NNDiffuse | 1.1865 | 0.8244 | 0.9398 | 0.9366 | 119.04 |
| KOMPSAT-3 | HP-NDVIspectral | 0.8766 | 0.6627 | 0.9828 | 0.9737 | 101.67 |
| KOMPSAT-3 | HP-NDVIspatial | 0.8926 | 0.6694 | 0.9816 | 0.9471 | 146.95 |
| KOMPSAT-3A | EXP | 0.5288 | 0.5147 | 0.9885 | 0.1027 | 49.07 |
| KOMPSAT-3A | GIHS | 1.8083 | 1.0259 | 0.7142 | 0.9952 | 167.29 |
| KOMPSAT-3A | GSAG | 0.9206 | 0.7343 | 0.9121 | 0.9968 | 175.17 |
| KOMPSAT-3A | GSAL | 1.0500 | 1.1713 | 0.9301 | 0.7616 | 149.74 |
| KOMPSAT-3A | BDSD | 0.8479 | 0.9122 | 0.9695 | 0.8688 | 159.34 |
| KOMPSAT-3A | NNDiffuse | 3.0593 | 0.7103 | 0.5551 | 0.9451 | 216.92 |
| KOMPSAT-3A | HP-NDVIspectral | 0.5355 | 0.5221 | 0.9769 | 0.9471 | 106.40 |
| KOMPSAT-3A | HP-NDVIspatial | 0.5574 | 0.5298 | 0.9744 | 0.9382 | 168.62 |
| WorldView-3 | EXP | 0.7030 | 0.6709 | 0.9845 | 0.0526 | 4.88 |
| WorldView-3 | GIHS | 3.2953 | 1.7482 | 0.6327 | 0.9784 | 9.00 |
| WorldView-3 | GSAG | 1.7884 | 0.9160 | 0.8865 | 0.9787 | 8.93 |
| WorldView-3 | GSAL | 2.8504 | 2.3264 | 0.8070 | 0.6309 | 9.24 |
| WorldView-3 | BDSD | 1.7385 | 1.5836 | 0.9248 | 0.7629 | 8.77 |
| WorldView-3 | NNDiffuse | 2.7860 | 0.9571 | 0.6412 | 0.8481 | 9.91 |
| WorldView-3 | HP-NDVIspectral | 1.5924 | 0.8933 | 0.9474 | 0.8257 | 7.27 |
| WorldView-3 | HP-NDVIspatial | 1.7045 | 0.9205 | 0.9403 | 0.8331 | 11.21 |
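Two of the indices reported above, ERGAS and SAM, are error measures for which lower values indicate better spectral fidelity. The sketch below follows their widely used definitions: ERGAS = 100 · (h/l) · sqrt(mean over bands of (RMSE_k / μ_k)²), with h/l the ratio of panchromatic to multispectral pixel size, and SAM as the mean angle between reference and fused spectral vectors. Whether the reported values were computed with exactly these conventions (for example, SAM in degrees) is an assumption, and the function names and data layout are hypothetical.

```python
import math

def ergas(reference, fused, ratio):
    """ERGAS for images given as lists of bands (each a flat list of pixels).

    ratio is h/l, e.g. 0.7 / 2.8 = 0.25 for the KOMPSAT-3 data set.
    """
    total = 0.0
    for ref, fus in zip(reference, fused):
        mse = sum((r - f) ** 2 for r, f in zip(ref, fus)) / len(ref)
        mean = sum(ref) / len(ref)
        total += mse / mean ** 2          # (RMSE_k / mu_k)^2
    return 100.0 * ratio * math.sqrt(total / len(reference))

def sam_degrees(reference, fused):
    """Mean spectral angle, in degrees, between per-pixel spectral vectors."""
    angles = []
    for ref_px, fus_px in zip(zip(*reference), zip(*fused)):
        dot = sum(r * f for r, f in zip(ref_px, fus_px))
        norm = (math.sqrt(sum(r * r for r in ref_px))
                * math.sqrt(sum(f * f for f in fus_px)))
        # Clamp to [-1, 1] to guard against rounding; zero vectors count as 0 degrees.
        cos = max(-1.0, min(1.0, dot / norm)) if norm else 1.0
        angles.append(math.degrees(math.acos(cos)))
    return sum(angles) / len(angles)

if __name__ == "__main__":
    ref = [[2.0, 2.0], [4.0, 4.0]]   # two bands, two pixels each (toy data)
    fus = [[1.0, 3.0], [4.0, 4.0]]
    print(ergas(ref, fus, ratio=0.25), sam_degrees(ref, fus))
```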

| Pansharpening Algorithm | Computational Cost (s) |
|---|---|
| GSAG | 240 |
| GSAL | 25,246 |
| HP-NDVIspatial | 245 |
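The relative costs implied by these run times can be checked with a few lines of arithmetic. The values are copied from the table above; the dictionary and helper name are introduced here only for illustration.

```python
# Run times in seconds, copied from the computational-cost table above.
cost_s = {"GSAG": 240, "GSAL": 25246, "HP-NDVIspatial": 245}

def cost_ratio(slower, faster):
    """How many times longer `slower` runs than `faster`."""
    return cost_s[slower] / cost_s[faster]

if __name__ == "__main__":
    print(f"GSAL / HP-NDVIspatial: {cost_ratio('GSAL', 'HP-NDVIspatial'):.1f}x")
    print(f"HP-NDVIspatial / GSAG: {cost_ratio('HP-NDVIspatial', 'GSAG'):.3f}x")
```

On these figures, GSAL takes roughly 103 times as long as HP-NDVIspatial, which in turn costs about 2% more than GSAG.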

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Choi, J.; Kim, G.; Park, N.; Park, H.; Choi, S. A Hybrid Pansharpening Algorithm of VHR Satellite Images that Employs Injection Gains Based on NDVI to Reduce Computational Costs. Remote Sens. 2017, 9, 976. https://doi.org/10.3390/rs9100976