1. Introduction

Ship detection is key to a wide array of applications, such as naval defense, situation assessment, traffic surveillance, maritime rescue, and fishery management. For decades, major achievements in this area have focused on synthetic aperture radar (SAR) images [1,2,3], which are less influenced by time and weather. However, the resolution of SAR images is low, and the targets lack texture and color features. Nonmetallic ships might not be visible, and the capacity for ship wake detection is limited. With the rapid development of earth observation technology, optical images from Unmanned Airborne Vehicles (UAVs) and satellites provide more detailed information and more obvious geometric structure. Compared with SAR images, they are more intuitive and easier to understand. Owing to these advantages, we take ships in optical remote sensing images as the research targets in this paper. However, plenty of difficulties remain. For instance, the imaging quality may degrade because of camera shake, uneven illumination, shadows, and clouds. In addition, the ship target is small and weak, while the sea surface is complex due to interference from small islands, coastlines, sea clutter, and ship wakes, which may lead to false alarms. How to detect ships quickly, accurately, and automatically in remote sensing images with complicated sea surfaces is an urgent problem.

A number of studies in this field focus on gray-level statistics, threshold segmentation, and edge detection. Proia [4] assumed a distribution for the sea background and identified small ships using Bayesian decision theory. Corbane [5] extracted ship targets by morphological filtering, wavelet analysis, and the Radon transform. Xu [6] used level sets for multi-scale contour extraction. Yang [7] selected ship candidates using a linear function based on sea surface analysis. These methods are suitable for simple and calm sea surfaces, but they are easily affected when the sea scene is complex. Besides, the black and white polarity of ships is not easily handled, and ships similar to their surroundings are hard to extract. Other methods are based on modeling. Sun [8] proposed an automatic target detection method based on a spatial sparse coding bag-of-words model. Cheng [9,10,11] presented and improved a rotation-invariant framework for multi-class geospatial object detection and classification based on deformable part model features. Yokoya [12] integrated sparse representations and Hough voting for ship detection. Wang [13] constructed a discriminative sparse representation framework for multi-class target detection. These methods can effectively describe the targets using a series of local structures. However, their computational complexity is high, and some small ships may be missed. In addition to the above methods, great attention has been paid to feature extraction and supervised classification. Zhu [14] applied a support vector machine classifier based on texture and shape features. Shi [15] validated real ships among the ship candidates using circle frequency-histograms of oriented gradients features and the AdaBoost algorithm. These methods turn detection into a classification problem between ship and non-ship targets, and they have a certain ability to resist interference from the sea background. However, the detection performance relies on the feature selection and the number of samples in the training database. The characteristics of the same target may vary across sea backgrounds, and accurate feature extraction is difficult. At present, deep learning has achieved remarkable results in target detection. Tang [16] presented a ship detection method using deep neural networks and an extreme learning machine. Zou [17] designed SVD networks based on convolutional neural networks and singular value decomposition. These network models are better suited to targets with larger size and higher contrast. However, some ships in remote sensing images may be relatively small, or the sea scene may be homogeneous, which can easily lead to missed detections. Moreover, these models have high computational complexity.

For small ship targets, the sea background contains much interference and redundant information. Visual saliency methods can quickly remove the redundant information and locate the targets of interest. Many studies [18] have attempted to simulate this mechanism to detect maritime targets. Visual saliency models can be mainly divided into two types: top-down models and bottom-up models. Top-down models [19,20] are tied to specific goals and tasks and use cognitive factors such as prior knowledge and context information to perform a visual search. These models are usually complex and lack generality. Most saliency detection models are bottom-up and can be divided into spatial domain and transform domain models. The spatial domain models mainly include the ITTI model (proposed by Itti) [21], the Attention based on Information Maximization model (AIM) [22], the Graph-Based Visual Saliency model (GBVS) [23], the Context Aware model (CA) [24], the Local Contrast model (LC) [25], the Maximum Symmetric Surround model (MSSS) [26], the globally rare features model (RARE) [27], and the saliency detection by combining simple priors model (SDSP) [28]. These models integrate multiple features for detecting targets. However, they are sensitive to interference from the sea background. The transform domain models are based on the Fourier transform and the Wavelet transform. Based on the former, Hou [29] proposed the Spectral Residual model (SR) using the log amplitude spectrum. Then, the Phase Quaternion Fourier Transform model (PQFT) [30] and the Phase Spectrum of Biquaternion Fourier Transform model (PBFT) [31] were proposed to process the multi-channel features of color images. These models have advantages in speed and background suppression. However, the integrity of the target is poor, especially when the target is large or the target regions are exceedingly bright or dark. Li [32] proposed the Hypercomplex Frequency domain Transform model (HFT), which can maintain the integrity of the target. However, the results may be unsatisfactory for targets that are very close to each other.

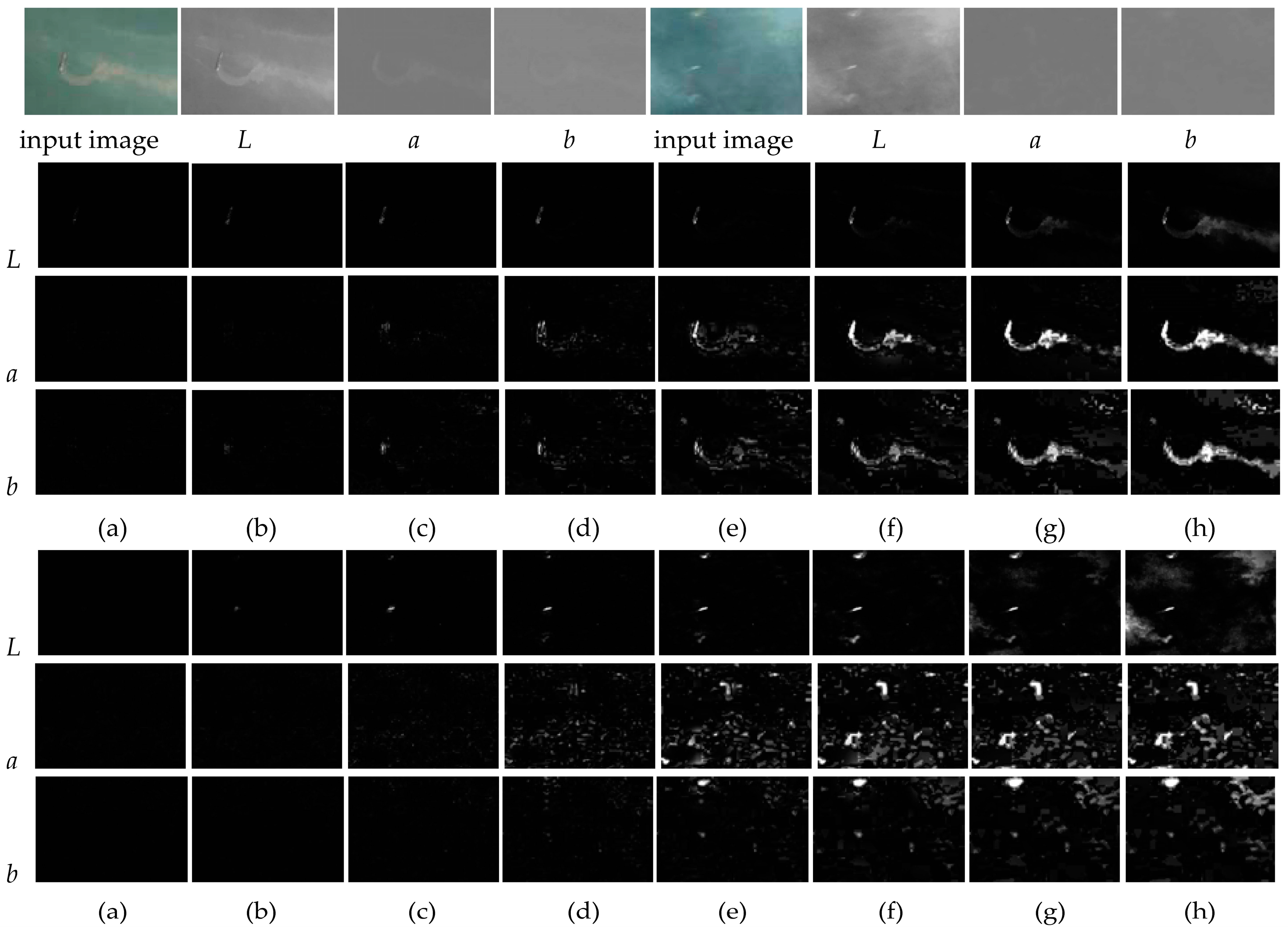

Recently, owing to the multi-scale and multi-direction characteristics of wavelet analysis, the Wavelet transform has gradually gained attention in saliency modeling. Ma [33] constructed a saliency map based on the wavelet domain and entropy weights, without performing the inverse Wavelet transform (IWT). Although this reduces the computational cost, its background suppression ability is not strong. Murray [34] obtained the saliency map by the IWT of weight maps, which are derived from the high-pass wavelet coefficients of each level. This computation depends on local contrast and does not account for global contrast. Using only local features to detect ship targets easily leads to partial information loss or more false alarms. Although İmamoğlu [35] considered the global feature distribution in addition to the local analysis, the final saliency map is still dominated by local contrast, so considerable interference may be introduced in sea target detection.

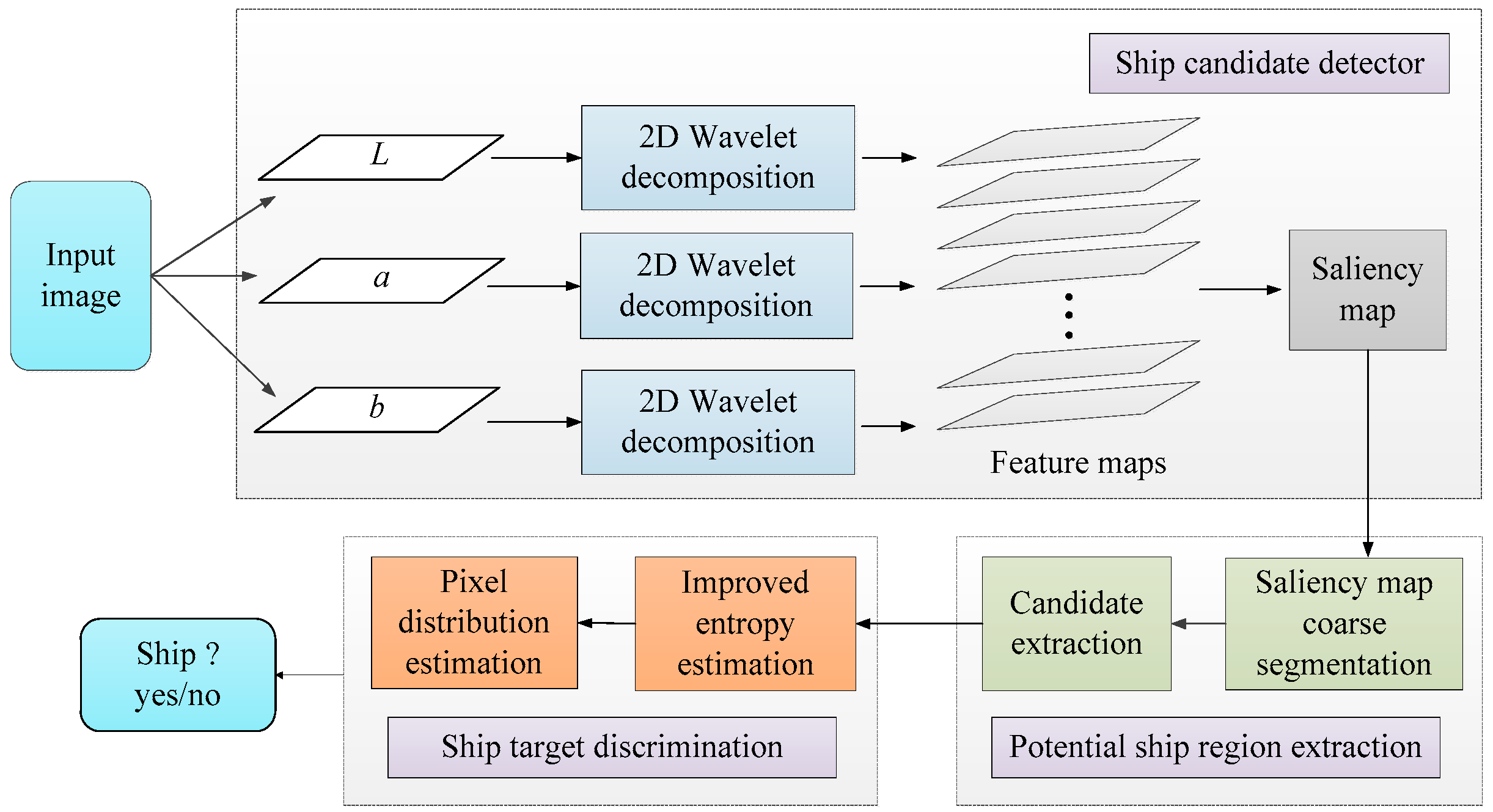

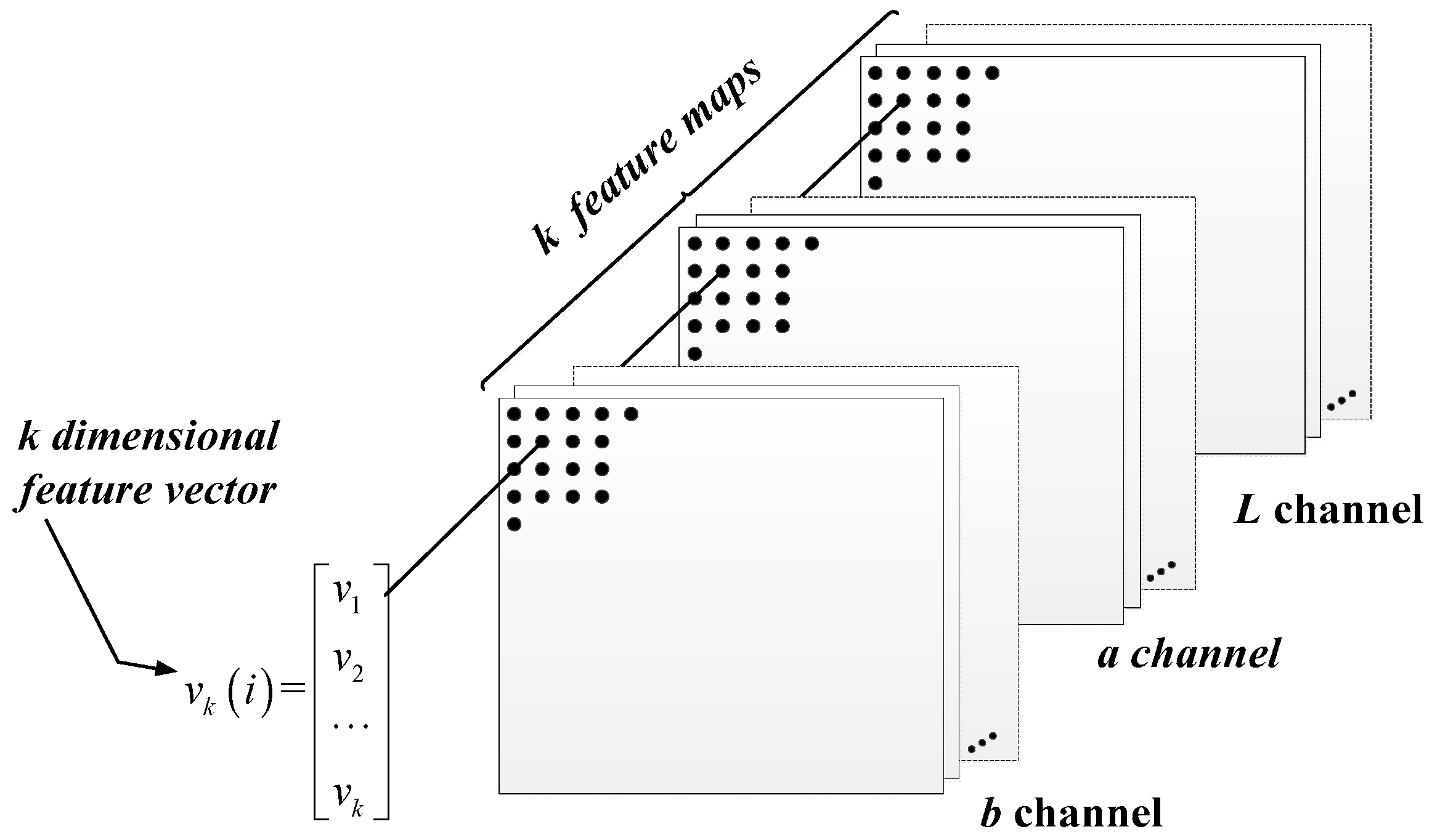

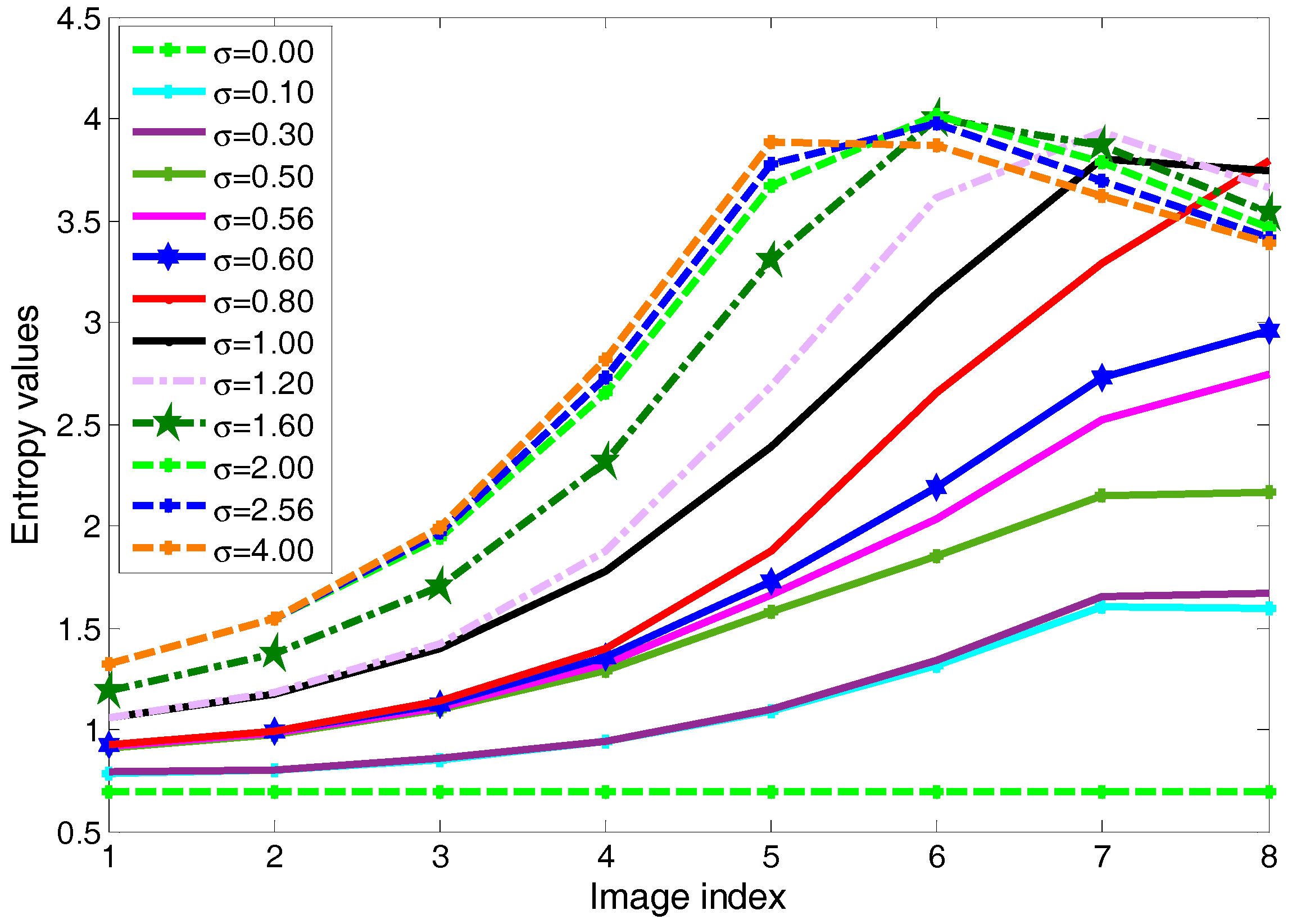

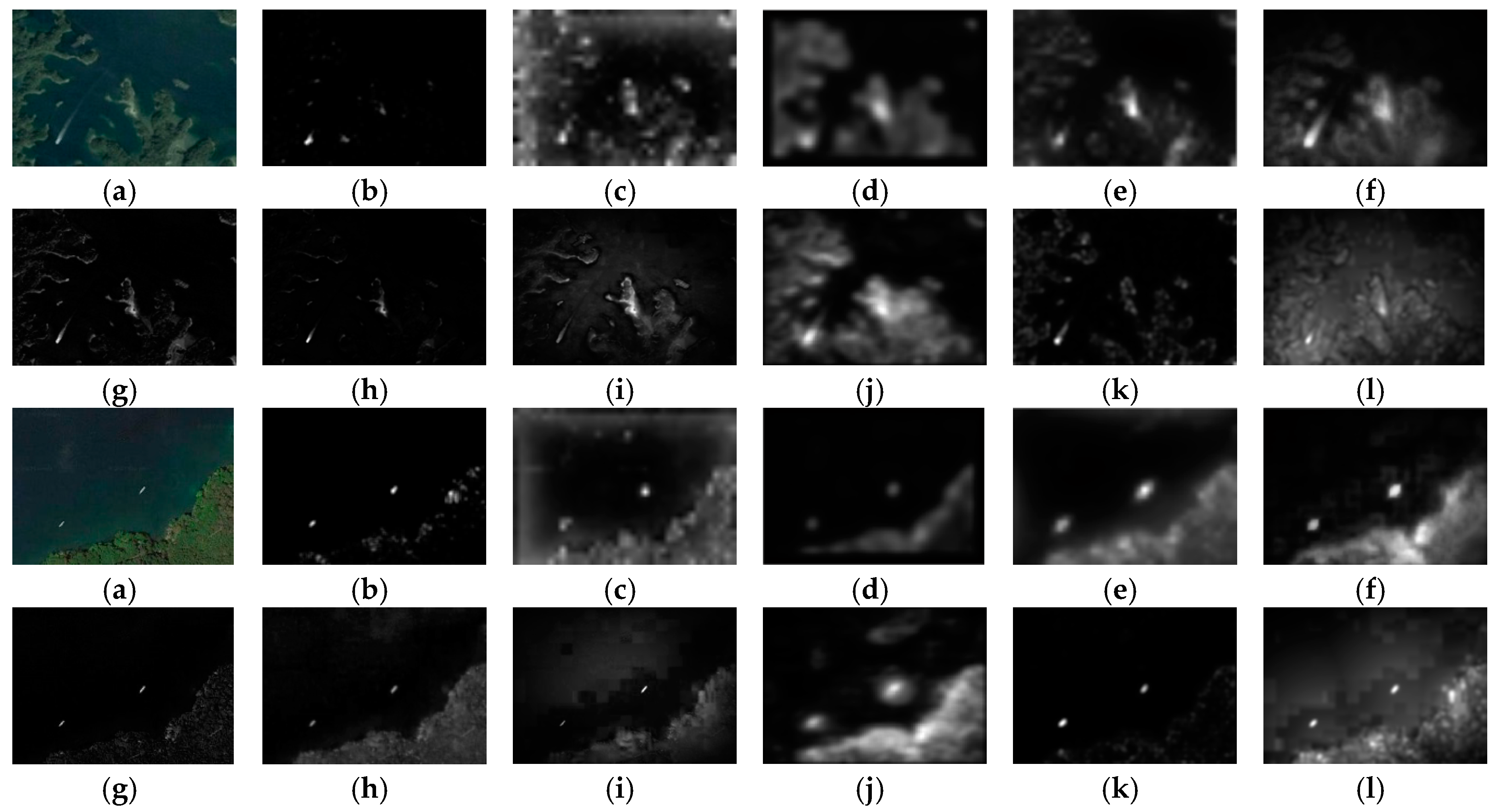

To solve these problems, a novel approach based on the Wavelet transform and multi-level false alarm identification is proposed. The framework is similar to the two-stage framework in [36], but the extraction and discrimination features are different. First, a global saliency model based on the high-pass coefficients of the wavelet decomposition is constructed, and the multi-color, multi-scale, and multi-direction features of the image are considered in the process of ship candidate extraction. The presented global saliency model yields a high detection rate regardless of the variety of sea scenes, ship colors, and sizes. For the false alarms caused by shadows, clouds, coastlines, and islands that may be extracted as ship candidates, we design a new multi-level identification approach based on improved entropy estimation and the pixel distribution. It provides a simple but efficient means of achieving a more discriminative ship description. The pseudo targets are adaptively removed, while real ships are retained. Owing to these techniques and improvements, the presented approach shows higher robustness and discriminative power.

The rest of this paper is organized as follows. In Section 2, the framework of saliency detection is devised. Section 3 describes region segmentation and preliminary identification. In Section 4, the improved entropy estimation and the pixel distribution are presented to reduce the false alarms. In Section 5, the execution of the proposed approach is illustrated, and a quantitative comparison and analysis is provided. Section 6 reports the conclusions and possible extensions.

5. Experimental Results and Discussion

To validate the performance of our method, multiple tests are carried out from both subjective and objective aspects. Remote sensing images from the publicly available Google Earth service are used for the experiments. They are randomly selected from the eastern coast of China. In total, 273 representative images with resolutions of 2–15 m cover a wide range of scenarios, and the size of each test image is 300 × 210 pixels. The ship targets, which may be of black or white polarity, appear against a variety of sea backgrounds, such as heavy clouds, shadows, ship wakes, coastlines, small islands, and reefs. In addition, the pixel sizes of the ship targets vary from seven to hundreds.

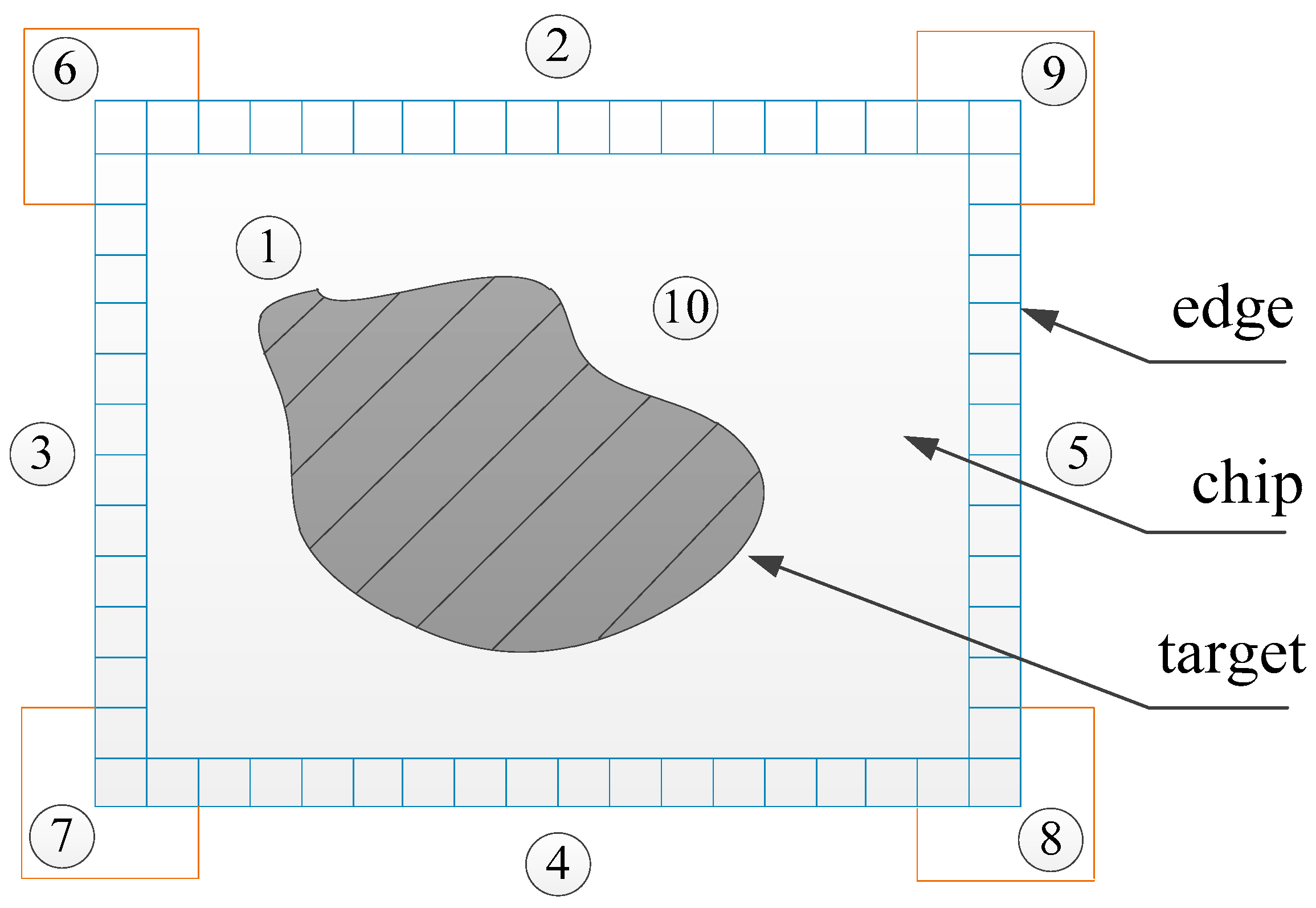

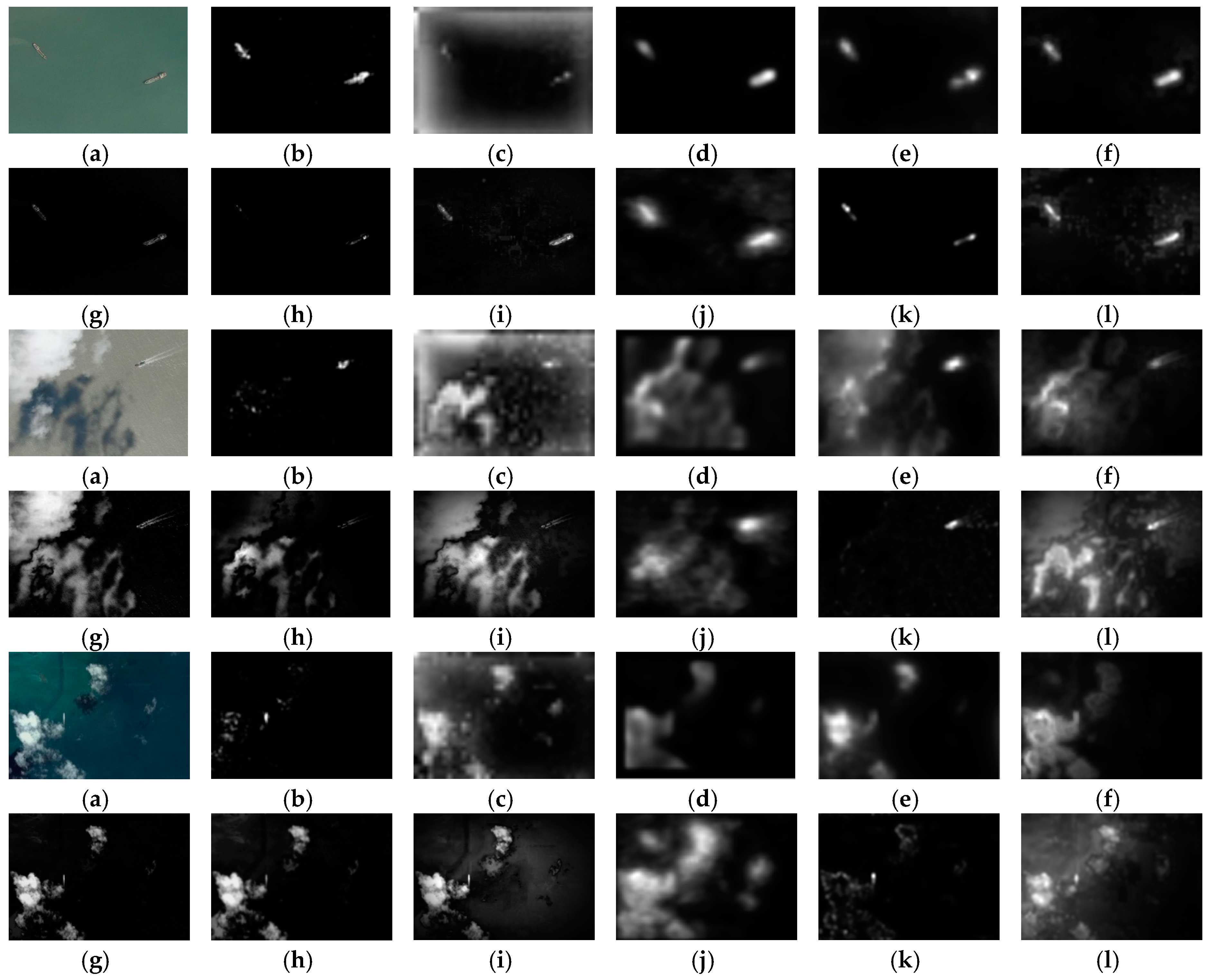

5.1. Subjective Visual Evaluation of Saliency Models

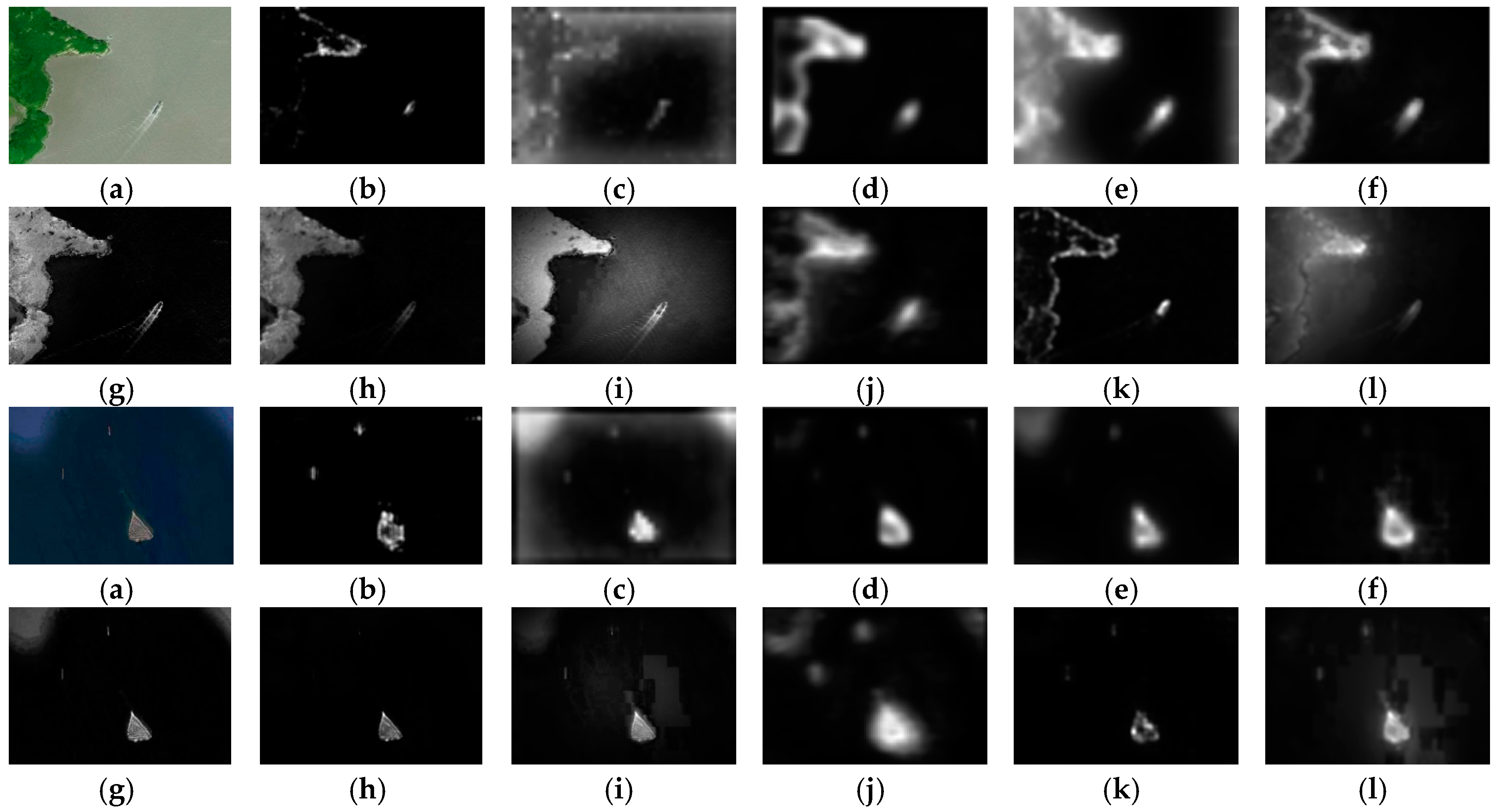

Figure 11 and Figure 12 compare our saliency model with other typical models from the spatial, Fourier transform, and Wavelet transform domains in terms of subjective visual representation. There are eight groups in total, and each group has 12 images: the input image, the result of our saliency model (WGS), and the results of ten other models. The wavelet-domain saliency model of [35] is called Nevrez for short.

Figure 11 compares the background suppression and target highlighting abilities of the different models. Some input images have simple and calm sea backgrounds, with ships of different sizes and colors. In contrast, other images show complex sea conditions including thin clouds, heavy clouds, shadows, and ship wakes.

In Figure 11, the WGS model performs better than the other models: it suppresses the undesired sea backgrounds and highlights all the real ship targets. Although the ITTI, AIM, GBVS, CA, and RARE models can find the ship target locations, their background suppression abilities are weak. A lot of interference is retained in their saliency maps, which may affect the speed and accuracy of subsequent processing. Although the detection results are finer for the LC, MSSS, and SDSP models, some ship targets are missed in cases with heavy clouds and dark ships, as shown in the first three groups. The SR model is effective for images with clouds and mist, and most of the sea background is suppressed. However, it can only deal with gray images. The integrity of the ship target is poor, and the whole ship region may be split into small pieces, as shown in the first and second groups. Although the Nevrez model is also based on the wavelet domain, its saliency detection results are relatively poor, since the maximum value among the different color channels is taken at each level and the final saliency map is dominated by the local saliency. Compared with the other models, WGS suppresses many sea surface interferences, including clouds and shadows. Even when ships are dark and have much lower intensity than the sea surface background, WGS highlights them, and the integrity of the ship regions is well maintained.

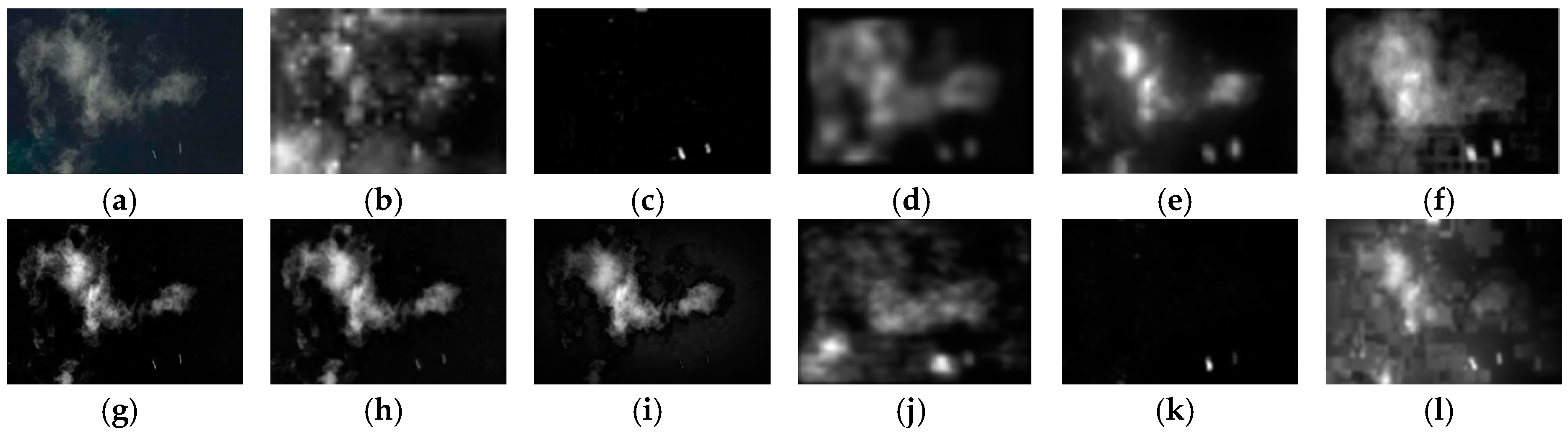

Figure 12 shows the saliency detection results of the eleven saliency models on the input images with the reefs, coastlines and islands.

Comparing the eleven saliency models, we find that the ship target is well highlighted by all the models when the coastlines and islands are dim. However, compared with the WGS model, the other ten models also highlight a large number of false alarms, as shown in the first three groups. In the fourth group, which contains a bright island, the ability of the ten models to detect ship targets is greatly weakened: the island is highlighted, while the detected ship region is dim and weak. Several ships are even missed by some models. Although the background suppression ability of the SR model is stronger than that of the other nine models, its detection result is still poor when the coastline has a bright edge or the island appears against a bright background, and the ship targets may be missed. Compared with the other ten models, the WGS model achieves better detection results. The ship targets under the various complex sea backgrounds are detected accurately and stably, and the shape and structure information of the ship targets is better maintained. Although some scattered interferences may still be introduced, the pseudo targets can be largely eliminated in the subsequent ship target identification. In addition, the low repetition and the smaller number of false alarms benefit the discrimination process and greatly improve the computational speed.

5.2. Objective Quantitative Analysis

To evaluate the performance of the different saliency models objectively and quantitatively, this section compares the eleven saliency models in terms of saliency detection precision and calculation speed. In addition, the capability of the saliency models on images of different pixel sizes is tested and analyzed.

To evaluate the integrity and precision of the ship target regions detected by the saliency models, the Receiver Operating Characteristic (ROC) curve is computed, which plots the True Positive Rate (TPR) against the False Positive Rate (FPR). First, for each remote sensing image in the image database, we manually mark its ground-truth map G as the criterion for evaluation and analysis. The ground-truth map G is a binary map that delineates the accurate hull of the ship region in the input image and is treated as prior information.

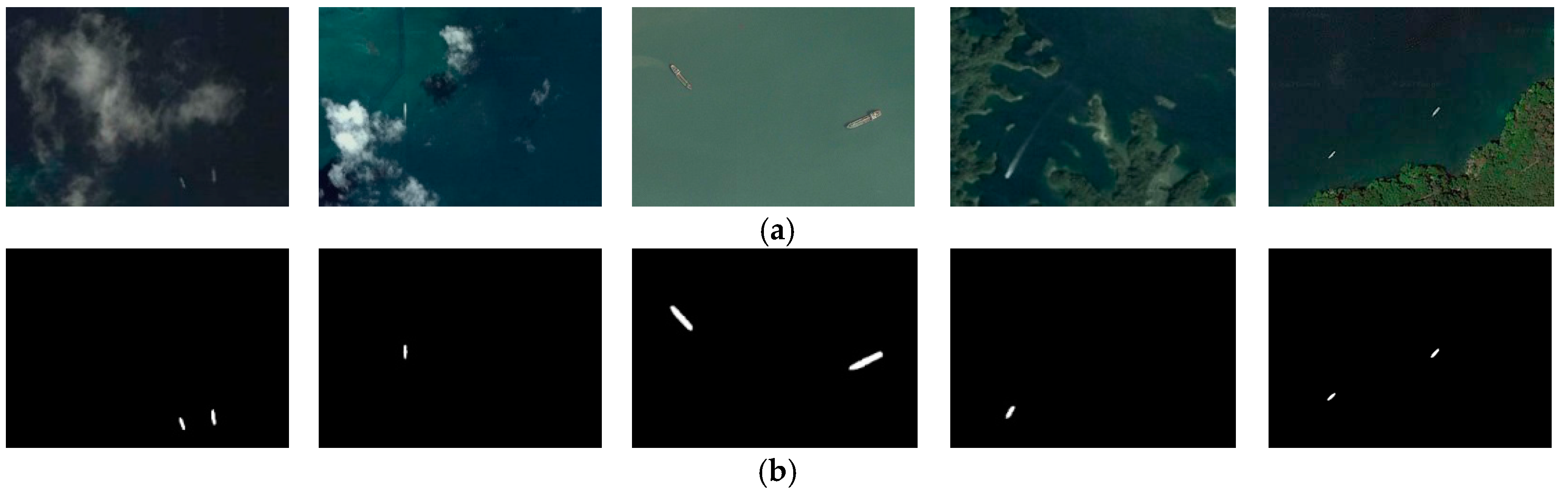

Figure 13 shows the ground-truth maps of some test images.

Then, each integer from 0 to 255 is used in turn as the threshold of the detected saliency map to obtain 256 binary images S. Each S is compared with the corresponding G, and four quantities are counted as follows. The pixels belonging to both G and S are called True Positives (TP). The pixels belonging to G but not to S are called False Negatives (FN). The pixels belonging to S but not to G are called False Positives (FP). The pixels belonging to neither G nor S are called True Negatives (TN). TPR and FPR are then defined as follows.

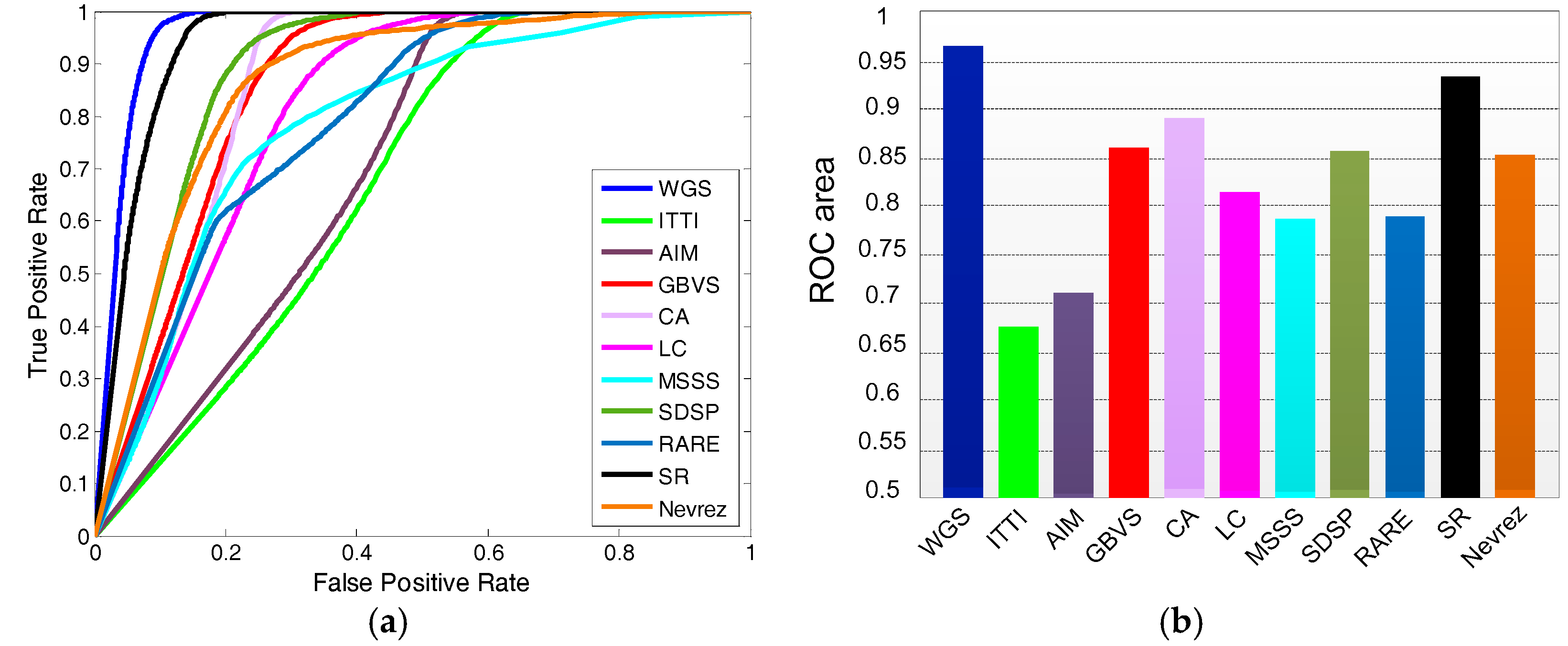
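For reference, and assuming the equation image carries the standard definitions, the two rates can be written in terms of the four pixel counts above as:

$$
\mathrm{TPR} = \frac{TP}{TP + FN}, \qquad \mathrm{FPR} = \frac{FP}{FP + TN}
$$

Here TP + FN is the number of pixels in G, and FP + TN is the number of pixels outside G, so both rates lie in [0, 1].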

For each saliency model, there are 256 average TPR values and 256 average FPR values in total, one pair per threshold. The ROC curves can be plotted, as shown in Figure 14a. The Area Under the Curve (AUC) of each model is also calculated, as shown in Figure 14b.

The closer the curve is to the upper left corner, the better the performance of the saliency model. We can see that the ROC curve of the WGS model is higher than those of the ten other models. For a more intuitive comparison, the closer the AUC value of a model is to 1, the better its saliency detection performance. As we can see in Figure 14b, the AUC value of the WGS model is the largest, and it consistently outperforms the other ten models.
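The thresholding and counting procedure described above can be sketched for a single image as follows. This is a minimal illustration rather than the authors' Matlab code: the function names `roc_points` and `auc` are hypothetical, a pixel is assumed to belong to S when its saliency is greater than or equal to the threshold, and the AUC is approximated with the trapezoidal rule. The paper averages TPR and FPR over all images before plotting, which this sketch omits.

```python
def roc_points(saliency, truth):
    """Return 256 (FPR, TPR) pairs, one per threshold 0..255.

    saliency: 2-D list of integers in 0..255 (the saliency map).
    truth:    2-D list of 0/1 values (the ground-truth map G).
    """
    flat_s = [v for row in saliency for v in row]
    flat_g = [g for row in truth for g in row]
    points = []
    for t in range(256):
        tp = fn = fp = tn = 0
        for s, g in zip(flat_s, flat_g):
            in_s = s >= t  # assumption: pixel is in S when saliency >= threshold
            if g and in_s:
                tp += 1
            elif g:
                fn += 1
            elif in_s:
                fp += 1
            else:
                tn += 1
        tpr = tp / (tp + fn) if tp + fn else 0.0
        fpr = fp / (fp + tn) if fp + tn else 0.0
        points.append((fpr, tpr))
    return points


def auc(points):
    """Trapezoidal area under the ROC curve, anchored at (0, 0) and (1, 1)."""
    pts = sorted(set(points) | {(0.0, 0.0), (1.0, 1.0)})
    area = 0.0
    for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0
    return area


if __name__ == "__main__":
    # Toy 2 x 2 example: the saliency map matches the ground truth exactly.
    pts = roc_points([[255, 0], [0, 255]], [[1, 0], [0, 1]])
    print(auc(pts))
```

A saliency map that ranks every ship pixel above every background pixel gives an AUC of 1, which is the sense in which values closer to 1 indicate better detection.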

The time cost of the WGS model is compared with that of the ten other state-of-the-art models. All experiments in this paper are conducted on a PC with an Intel Core 3.30 GHz processor and 4 GB of RAM. The programming platform is Matlab 2014a, called M for short. The time consumption of each model is shown in Table 1.

In Table 1, the average computing time of LC is the shortest because of its simple calculation. WGS is more time-efficient than AIM, GBVS, CA, and Nevrez, while it is slower than the other six models. The main factor limiting its running speed is the calculation of the global saliency map using the probability density function on the multiple feature maps, which takes 1.204 s, while the feature map generation using IWT takes 0.829 s.

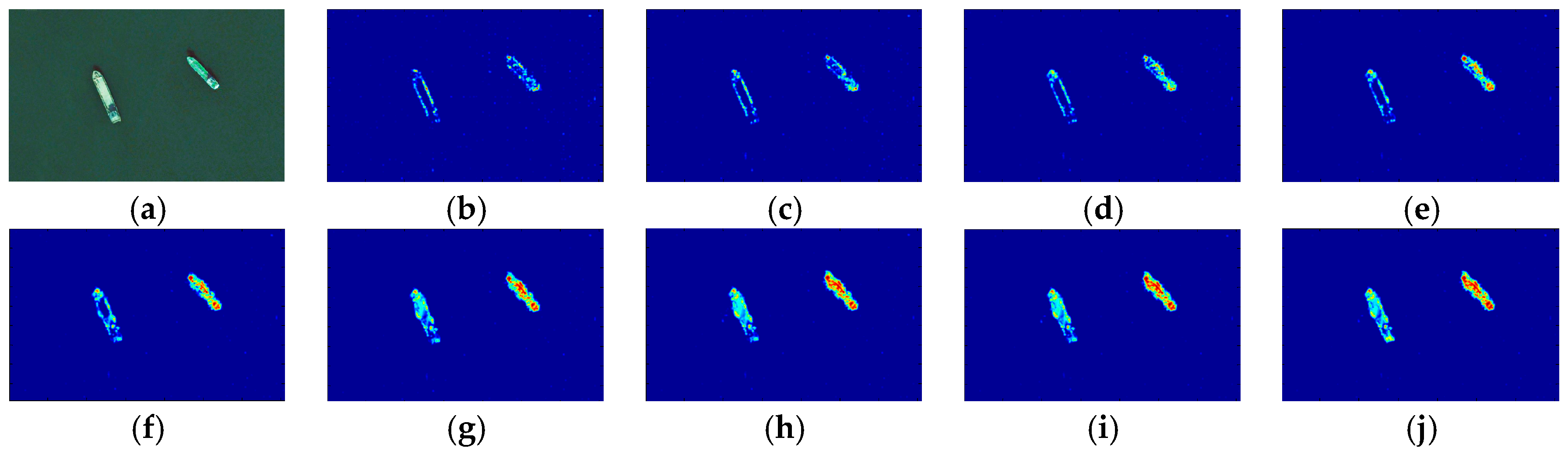

In addition, we test the capability of the saliency models on images of different pixel sizes. Most saliency models are constructed using low-level features of the input images, which are sensitive to the image size, so the saliency detection results on images of different sizes may vary greatly. Therefore, we run a test on multi-size images. Based on its detection performance in Figure 11 and Figure 12, SR is selected for comparison with WGS. The pixel sizes of the input images are 300 × 210, 406 × 283, 505 × 354, and 612 × 428. The detection results of the SR and WGS models are shown as follows.

As the two sets of results in Figure 15 show, as the input image size increases, the SR model retains less of the information of interest and the integrity of the target regions gradually decreases, so its saliency detection performance becomes worse. Compared with SR, WGS handles images of different sizes better owing to the multi-scale and multi-direction characteristics of wavelet analysis. In the saliency maps of WGS, the background interference is suppressed effectively, and the ships are extracted accurately with good integrity.

5.3. Overall Result Statistics

To evaluate the overall effectiveness of the ship extraction and each discrimination stage, the constructed image database includes plenty of images with islands, coastlines, reefs, heavy clouds and shadows, in addition to the simple and calm sea backgrounds. The detection accuracy ratio (

Cr), missing ratio (

Mr) and false alarm ratio (

Far) are computed, which are defined as follows.

where

Nt is the total number of the real ships in the database.

Ntt is the number of correctly detected ships.

Nfa is the number of the false alarms. The detailed extraction and discrimination results after each stage are presented in

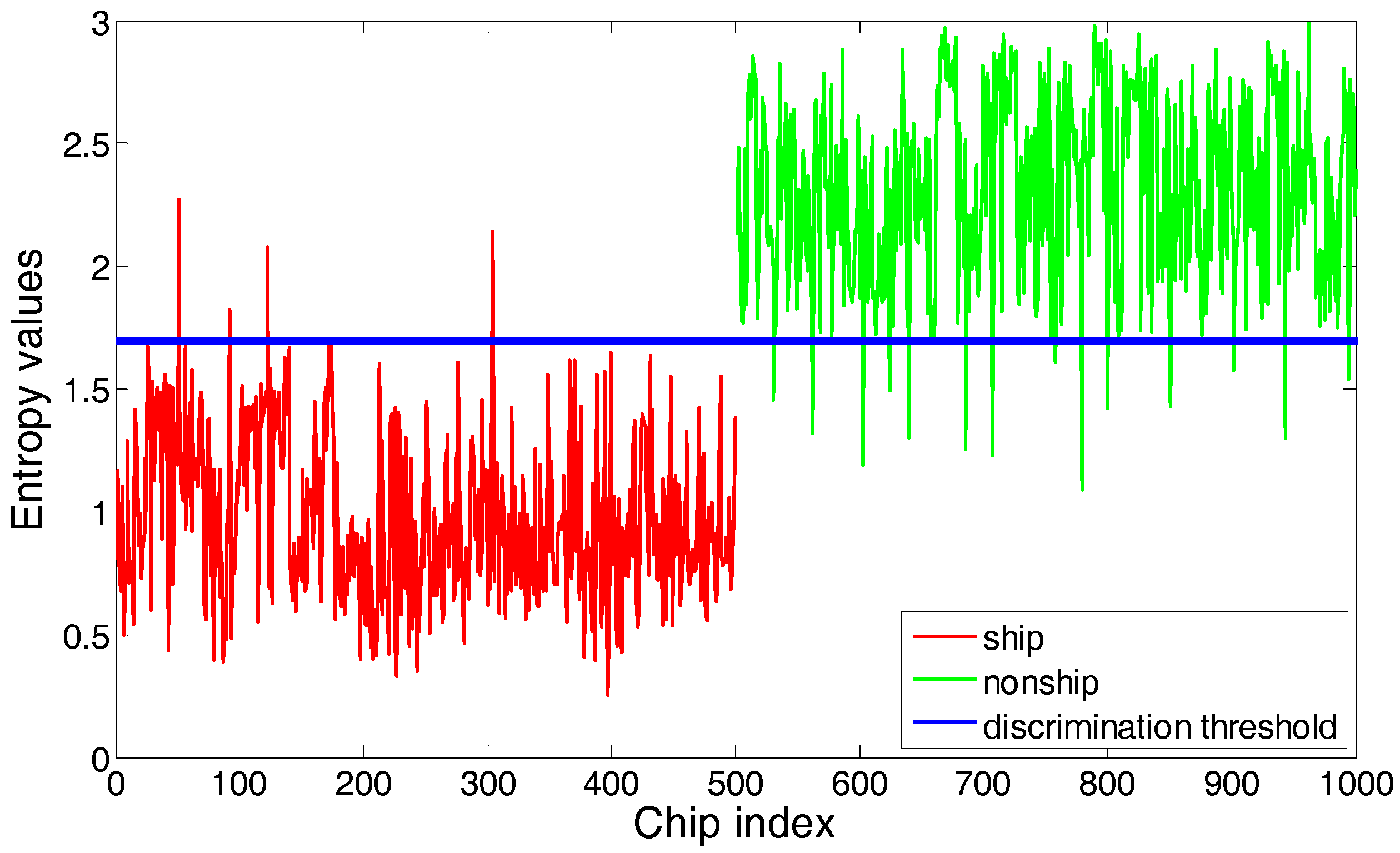

Table 2, which gives some reflections on the detection performance of the whole algorithm in each phase listed as follows. We only execute the global saliency detection based on the wavelet transform (abbreviated as WGS). The false alarms are preliminarily identified according to the sizes of the chips (abbreviated as SCD). The discrimination is based on the improved entropy (abbreviated as IED). The discrimination is executed based on the pixel distribution (abbreviated as PDD).

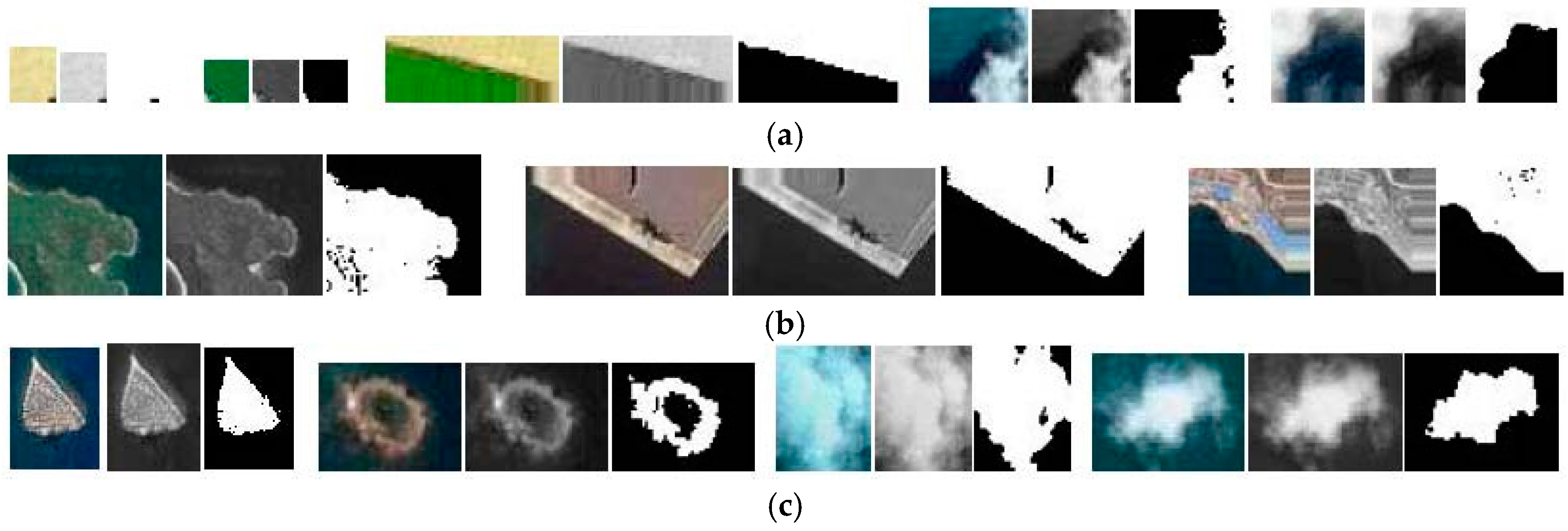

As the first row of the table shows, a few ships may be missed when only the WGS model is used. That is because the details of faint and small ships are not obvious when multiple ships with different sizes and colors exist, so their information may be ignored when the high-frequency wavelet coefficients are calculated for constructing the saliency map. In addition, the distance decay process further weakens the saliency of the small ship targets and leads to missed detections. We also find that the number of false alarms is large at this stage. That is because many scattered false alarms appear in the saliency maps when strong interference exists, such as islands and coastlines with complex distributions, heavy clouds, and shadows. After the adaptive threshold segmentation using the Otsu method, dozens of false alarms may be introduced in an image. Although some false alarms can be removed by increasing the segmentation threshold, the ship pixel area of the chip may also decrease, which may result in missed detections in the SCD stage. Therefore, the saliency detection results using the wavelet transform are acceptable on sea backgrounds without bright coastlines and islands, where no or only a few false alarms are introduced. Otherwise, plenty of false alarms may be introduced under complex backgrounds after binarization. In the second row of the table, although Cr goes down by 0.898% after the SCD stage, Far decreases by 5.041%. In the third row of the table, a few real ships are identified as false alarms under some complex backgrounds, and Cr drops by 4.668% after the IED stage. However, Far is reduced by 25.635%, which shows the effectiveness of the discrimination stage based on the improved entropy estimation.
Finally, for the false alarms whose entropy values are similar to those of the ships, we design the PDD stage to further remove them. After that, Far decreases to 5.741%. In addition, the chips containing the smaller targets can be effectively removed, and this discrimination stage achieves high detection adaptability and robustness. From the analysis above, we conclude that the multi-level false alarm discrimination method is effective in eliminating the pseudo targets and retaining the real ships. The detection performance of the whole method is improved greatly after these stages.
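The adaptive threshold segmentation mentioned above relies on the Otsu method, which picks the gray level that maximizes the between-class variance of the two pixel groups it separates. A minimal sketch of the standard algorithm follows, assuming an 8-bit saliency map; the names `otsu_threshold` and `binarize` are hypothetical, and the authors' Matlab implementation may differ in detail.

```python
def otsu_threshold(pixels):
    """Return the level t in 0..255 maximizing the between-class variance.

    pixels: iterable of integers in 0..255.
    Pixels with value > t are treated as foreground (candidate targets).
    """
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = sum(hist)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0    # number of pixels at or below the current level
    sum0 = 0  # sum of gray values at or below the current level
    for t in range(256):
        w0 += hist[t]
        sum0 += t * hist[t]
        if w0 == 0:
            continue  # no background pixels yet
        w1 = total - w0
        if w1 == 0:
            break     # no foreground pixels left
        mean0 = sum0 / w0
        mean1 = (sum_all - sum0) / w1
        var_between = w0 * w1 * (mean0 - mean1) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


def binarize(image, t):
    """Binarize a 2-D list of gray values: 1 where value > t, else 0."""
    return [[1 if v > t else 0 for v in row] for row in image]


if __name__ == "__main__":
    # Toy saliency map: dark background with two bright target pixels.
    image = [[10, 10, 200], [10, 200, 10]]
    t = otsu_threshold(v for row in image for v in row)
    print(t, binarize(image, t))
```

Raising the threshold above the Otsu level removes weaker false alarms but also shrinks the ship regions, which is the trade-off described in the paragraph above.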

The computation time of the whole discrimination stage depends on the number and sizes of the chips: the more candidates the input image contains and the larger the chips are, the higher the time consumption. For target chips of sizes 17 × 18, 51 × 30, and 64 × 64, the average running times of the auxiliary algorithms SCD, IED, and PDD are shown in Table 3. The computation of each stage is very fast, and a desirable time performance is achieved.

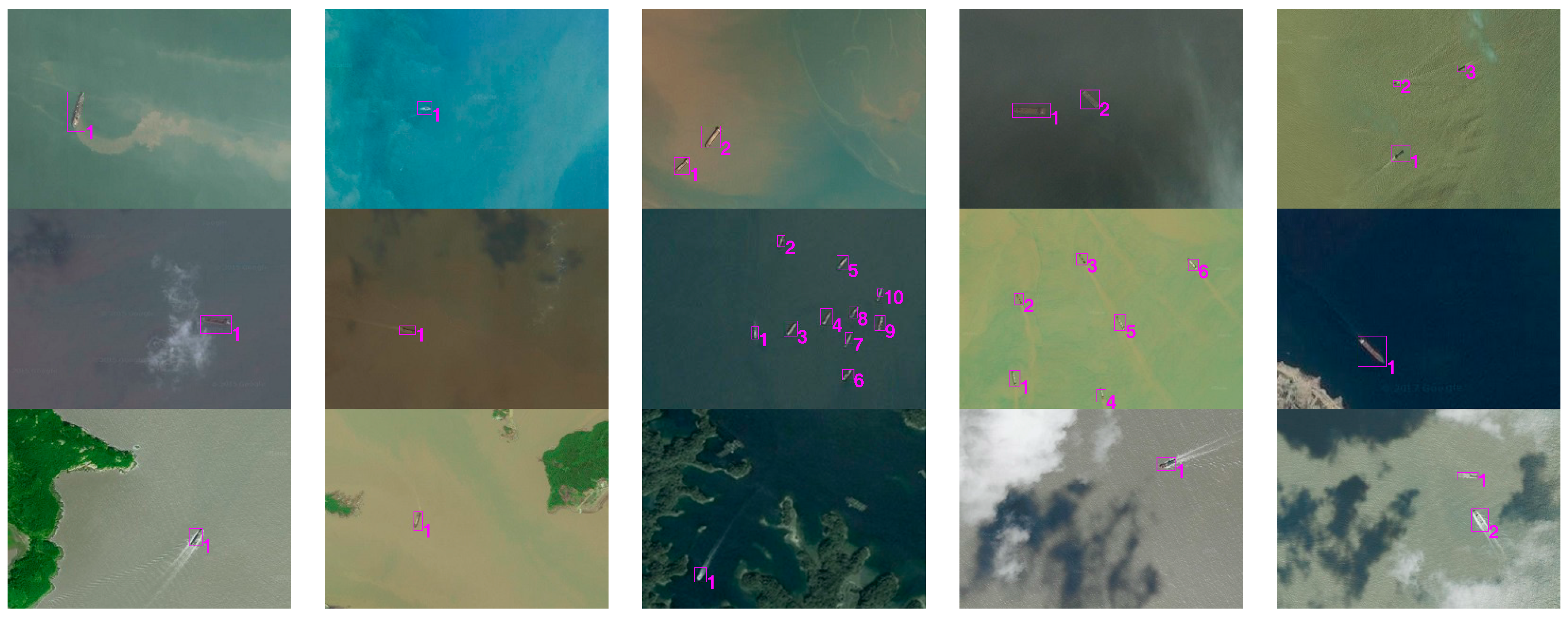

Some typical detection results for color images are displayed in Figure 16. Most false alarms are eliminated, whereas the real ship targets are retained after the multi-level discrimination. The regions containing real ships are detected and marked with pink boxes. The number and locations of the ships are determined accurately.

6. Conclusions

In this paper, a novel detection framework consisting of saliency detection and discrimination stages is presented for detecting and extracting ship targets from optical remote sensing images. To extract the ship candidates against complex sea backgrounds, a global saliency model is constructed based on the multi-scale and multi-direction high-frequency wavelet coefficients. Then, the distance decay formula is applied to weaken the non-salient information in the saliency map. The large-range low-frequency information from the sea background is suppressed, while most ship regions with clearer contours are extracted accurately. The extracted ship regions are uniform and complete. In addition, images of different sizes can be effectively processed by the proposed saliency model. Furthermore, to eliminate islands, coastlines, heavy clouds, shadows, and small clutter regions, a multi-level discrimination method is designed. According to the characteristics of the ship and non-ship chips, the improved entropy estimation is presented, which overcomes the deficiency of the traditional entropy relying on spatial geometric information. It can remove the false candidates and retain the real ship targets. For the false chips whose entropy values are confused with those of the ship targets, the pixel distribution identification is proposed to further decrease the false alarm rate. Experimental results on images under various sea backgrounds demonstrate that the proposed method achieves high detection robustness and a desirable speed performance.

Although our method has achieved promising results, several issues remain to be addressed. In the discrimination stage, a few candidates may contain slender reefs similar in shape to a ship, or coastlines with bright edges. After binarization, their entropy values are similar to those of the ship chips, and they are difficult to remove. More effective features should be explored to remove such false alarms.