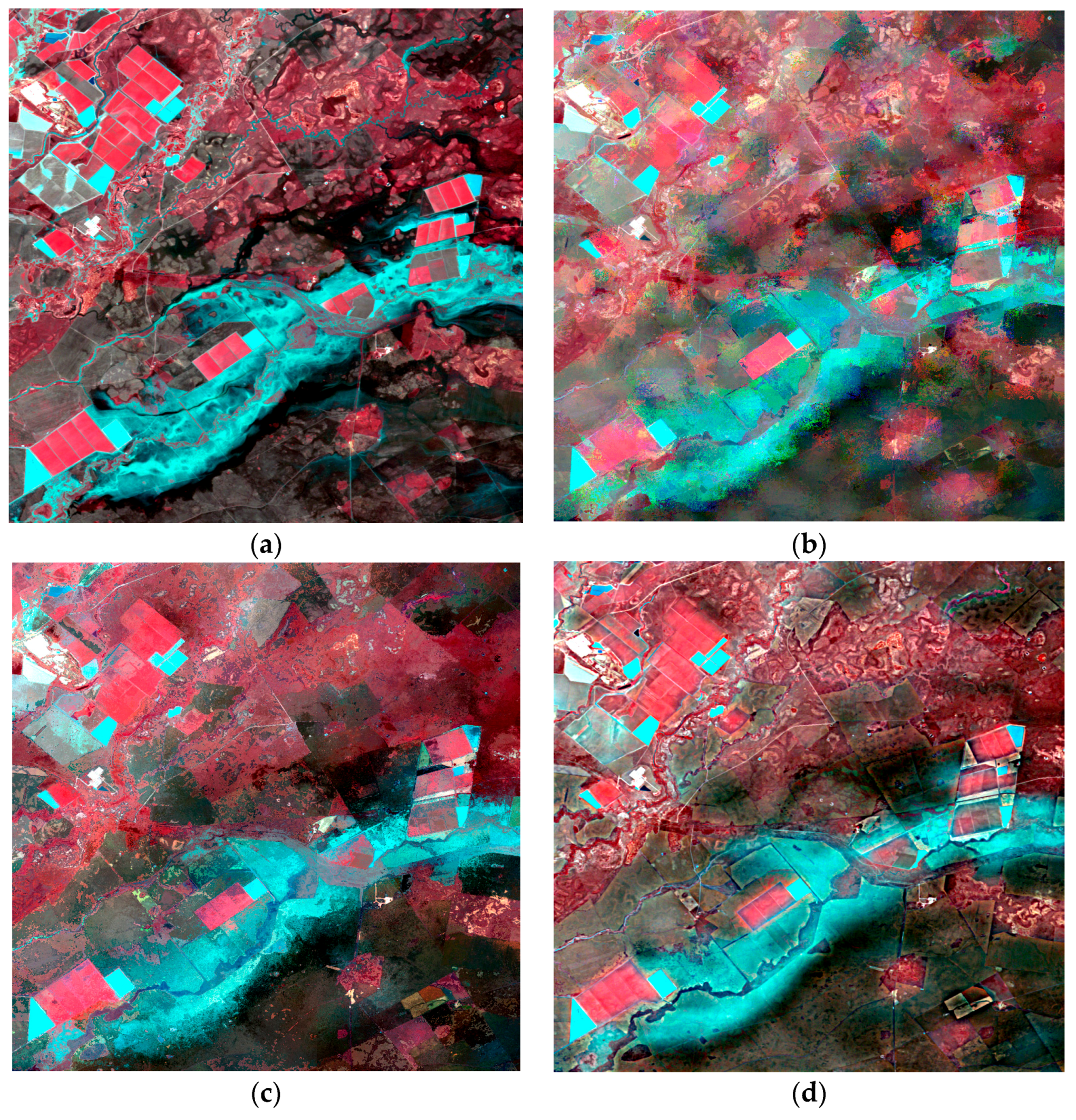

Because atmospheric conditions (e.g., clouds, fog and aerosols), solar angle and altitude differ among pixel locations, data pre-processing such as radiometric calibration, atmospheric correction and geometric rectification cannot completely remove this variability [17,41]. Furthermore, changes in surface reflectance during an observation period may differ from pixel to pixel. It is therefore advisable to apply a local method that acts on each pixel and its neighboring pixels within a moving window.

The subsections are organized as follows. First, we present the theoretical basis of the RWSTFM and show how the ordinary kriging method can be integrated into the spatiotemporal image fusion process. Second, we briefly introduce the ordinary kriging method. Third, we present the RWSTFM procedure for predicting the Landsat-like image and calculating the estimation variance. Finally, we provide several indices for evaluating the fusion results. Changed and unchanged land use areas are treated separately below because of their complex temporal dynamics in each corresponding band. We assume that the spectral bands of the coarse-resolution sensor are similar to those of the fine-resolution sensor.

2.1. Theoretical Basis

First, we assume that the land cover does not change from the base date $t_0$ to the prediction date $t_k$.

For a given pixel $(x, y)$, the difference in reflectance between a coarse-resolution pixel and a fine-resolution pixel is caused by systematic errors between the two sensors. The relationship between coarse- and fine-resolution reflectance can therefore be expressed as a linear model:

$$L(x, y, t_0) = a_0\,M(x, y, t_0) + b_0 \tag{1}$$

where $L$ and $M$ denote the surface reflectance of the Landsat and MODIS data, respectively, $(x, y)$ is a given pixel location in either the Landsat or the MODIS image, $t_0$ is the acquisition date of both the MODIS and the Landsat data, and $a_0$ and $b_0$ are the coefficients of the linear regression model. Similarly, for another coarse-resolution image acquired on the date $t_k$, we have:

$$L(x, y, t_k) = a_k\,M(x, y, t_k) + b_k \tag{2}$$

Suppose that the systematic errors and the land cover type within the pixel $(x, y)$ do not change from date $t_0$ to $t_k$. The model then has the same parameters on both observation dates, namely, $a_0 = a_k = a$ and $b_0 = b_k = b$. Consequently, Equations (1) and (2) give the predicted reflectance:

$$L(x, y, t_k) = L(x, y, t_0) + a\left[M(x, y, t_k) - M(x, y, t_0)\right] \tag{3}$$

The fine-resolution reflectance at $t_k$ therefore equals the fine-resolution reflectance at $t_0$ plus the scaled change in coarse-resolution reflectance from $t_0$ to $t_k$. The coefficient $a$ can be derived by linearly regressing the fine-resolution reflectance of spectrally similar neighboring pixels against the corresponding coarse-resolution reflectance on the base date $t_0$. The search for spectrally similar pixels is conducted within a moving window around the central pixel in the fine-resolution image at $t_0$.
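The unchanged-land-cover case can be sketched in a few lines of NumPy, assuming the spectrally similar pixels have already been selected and their base-date Landsat and MODIS reflectances are passed as 1-D arrays. The sketch fits fine = a·coarse + b by least squares and then applies L(t_k) = L(t_0) + a[M(t_k) − M(t_0)]. The function names `fit_linear` and `predict_unchanged` are hypothetical and not taken from the paper.

```python
import numpy as np


def fit_linear(fine_t0, coarse_t0):
    """Least-squares fit of fine = a * coarse + b over the similar pixels (Eq. 1)."""
    fine_t0 = np.asarray(fine_t0, dtype=float)
    coarse_t0 = np.asarray(coarse_t0, dtype=float)
    # Design matrix [coarse, 1], so lstsq returns the slope a and the intercept b
    design = np.column_stack([coarse_t0, np.ones_like(coarse_t0)])
    (a, b), *_ = np.linalg.lstsq(design, fine_t0, rcond=None)
    return float(a), float(b)


def predict_unchanged(fine_t0, coarse_t0, coarse_tk, a):
    """Eq. (3): L(tk) = L(t0) + a * (M(tk) - M(t0))."""
    return fine_t0 + a * (coarse_tk - coarse_t0)


# Hypothetical similar-pixel reflectances on the base date t0
coarse = np.array([0.10, 0.20, 0.30, 0.40])
fine = 0.9 * coarse + 0.01
a, b = fit_linear(fine, coarse)
print(round(a, 3), round(b, 3))  # 0.9 0.01
print(round(predict_unchanged(0.2, 0.15, 0.25, a), 3))  # 0.29
```

Only the slope $a$ enters the prediction. The intercept cancels when Equation (1) is subtracted from Equation (2).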

Next, we assume that the land cover changes from date $t_0$ to $t_k$.

The assumption that the linear parameters $a_0$ and $a_k$ are equal, which holds for an unchanged land cover type, is no longer reasonable; that is, $a_k \neq a_0$. We therefore introduce an adjustment factor $l$ such that $a_k = l\,a_0$. Keeping the intercept at $b_0$, the reflectance of a fine-resolution pixel at $t_k$ becomes:

$$L(x, y, t_k) = l\,a_0\,M(x, y, t_k) + b_0 \tag{4}$$

Equations (1) and (4) then give the predicted reflectance:

$$L(x, y, t_k) = L(x, y, t_0) + a_0\left[l\,M(x, y, t_k) - M(x, y, t_0)\right] \tag{5}$$

where $l = a_k / a_0$. We assume that spectrally similar pixels in the MODIS data at $t_k$ have similar spectral characteristics (i.e., the same land cover type) as the corresponding pixels in the MODIS data at $t_0$. We therefore search the entire MODIS image for the date $t_0$. The neighbors of every pixel in the MODIS image at $t_0$ (the candidate pixels) are compared with those of a given pixel (the target pixel) in the MODIS image for the date $t_k$.

The similarity between a candidate pixel and the target pixel is measured as the spectral difference of their neighboring pixel values in each band, computed as the sum of the Euclidean distances. The candidate pixel with the smallest spectral difference of neighbors is selected as the most similar pixel. We call it the spectrally-corresponding pixel, to distinguish it from the spatially-corresponding pixel (the pixel with the same row and column). Then, $a_k$ can be obtained by linearly regressing the fine-resolution reflectance against the coarse-resolution reflectance for the spectrally-corresponding pixels at $t_0$. Similarly, $a_0$ is obtained by linearly regressing the fine-resolution reflectance against the coarse-resolution reflectance for the spatially-corresponding pixels at $t_0$, as in Equation (3). Hence, the fine-resolution reflectance at $t_k$ equals the fine-resolution reflectance at $t_0$ plus the scaled difference between the modified coarse-resolution reflectance at $t_k$ and the original coarse-resolution reflectance at $t_0$. When the land cover type does not change, $l = 1$ and Equation (5) reduces to Equation (3). Equation (3) is therefore a special case of Equation (5), and the two equations have the same form.
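The spectrally-corresponding pixel search can be sketched as a brute-force scan. The following is a minimal sketch under assumed conventions: MODIS images are NumPy arrays of shape (bands, rows, cols), the neighborhood is a square of half-width `half` (3×3 by default, an assumption), and border pixels are skipped. The edge constraint described in Section 2.3 is omitted. All function names are hypothetical.

```python
import numpy as np


def neighborhood(img, r, c, half):
    """Return the (bands, 2*half+1, 2*half+1) patch centred on (r, c)."""
    return img[:, r - half:r + half + 1, c - half:c + half + 1]


def spectrally_corresponding_pixel(modis_t0, modis_tk, r, c, half=1):
    """Find the pixel in modis_t0 whose neighborhood best matches the
    neighborhood of the target pixel (r, c) in modis_tk.

    Distance = sum over neighbors of the Euclidean distance across bands.
    Candidates closer than `half` to the image border are skipped.
    """
    target = neighborhood(modis_tk, r, c, half)
    _, h, w = modis_t0.shape
    best, best_dist = None, np.inf
    for i in range(half, h - half):
        for j in range(half, w - half):
            cand = neighborhood(modis_t0, i, j, half)
            # Euclidean distance across bands for each neighbor, then summed
            dist = np.sqrt(((cand - target) ** 2).sum(axis=0)).sum()
            if dist < best_dist:
                best, best_dist = (i, j), dist
    return best


def adjustment_factor(a_k, a_0):
    """Eq. (5): l = a_k / a_0; equals 1 when the land cover is unchanged."""
    return a_k / a_0
```

In this sketch, $a_k$ would then come from regressing base-date Landsat against MODIS reflectance at the returned location, and $a_0$ from the same regression at the target's own location. The exhaustive scan costs O(H·W) patch comparisons per target pixel, which is acceptable at MODIS resolution.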

The moving window is used to search for similar pixels within a local neighborhood, which introduces additional information from neighboring pixels [15]. This information is then integrated into the calculation of the fine-resolution reflectance. Combining the above, the fine-resolution surface reflectance of the central pixel on the date $t_k$ can be computed with a weighting function as a single expression, regardless of how changed and unchanged areas are distributed:

$$L(x_{w/2}, y_{w/2}, t_k) = \sum_{i=1}^{n} W_i\,L(x_i, y_i, t_0) + a_0\left[l\,M(x_{w/2}, y_{w/2}, t_k) - M(x_{w/2}, y_{w/2}, t_0)\right] \tag{6}$$

where $w$ is the search window size, $(x_{w/2}, y_{w/2})$ is the central pixel of the moving window, $n$ is the number of similar pixels, $(x_i, y_i)$ is the location of the $i$-th pixel similar to the central pixel, $W_i$ is the weight of that similar pixel, $a_0$ is the regression coefficient in Equation (5) and $l$ is the adjustment factor. Only the spectrally similar pixels selected from the Landsat surface reflectance within the moving window receive weights in the prediction. These weights can be derived with a geostatistical method such as ordinary kriging (Section 2.2).

The search window size $w$ is chosen by visually assessing the homogeneity of the surface, taking into account the spatial resolution ratio of the coarse- to fine-resolution images. For example, because the MODIS-to-Landsat resolution ratio is approximately 16.7 (500 m/30 m), the search window size is set to about 15–17 pixels. A more homogeneous regional landscape allows a smaller $w$; a more heterogeneous one requires a larger $w$. The choice of this parameter is validated experimentally. Equation (6) states that the fine-resolution reflectance on the prediction date equals the weighted average of the fine-resolution reflectance values observed on the base date plus the change in the modified coarse-resolution reflectance.
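The unified prediction for a single window can be illustrated with a short function. This sketch assumes that Equation (6) adds the weighted average of the base-date fine reflectance of the $n$ similar pixels to the adjusted coarse change $a_0[l\,M(t_k) - M(t_0)]$ at the central pixel. It also assumes that the weights come from ordinary kriging and therefore sum to one. The function name and argument layout are hypothetical.

```python
import numpy as np


def predict_center(fine_t0_similar, weights, a_0, l, m_tk, m_t0):
    """Weighted base-date fine reflectance of the similar pixels plus the
    adjusted coarse-resolution change at the central pixel (Eq. 6 form assumed)."""
    weights = np.asarray(weights, dtype=float)
    fine_t0_similar = np.asarray(fine_t0_similar, dtype=float)
    # Ordinary kriging weights satisfy sum(W_i) = 1 (unbiasedness constraint)
    if not np.isclose(weights.sum(), 1.0):
        raise ValueError("weights must sum to 1")
    return float(weights @ fine_t0_similar + a_0 * (l * m_tk - m_t0))
```

For an unchanged pixel, set `l=1.0`, which reproduces the weighted form of Equation (3).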

2.2. Weighting via Ordinary Kriging

Kriging [42] is a geostatistical technique that yields the best linear unbiased predictor (BLUP) by assigning an optimal set of weights to all available and relevant observations.

Suppose that the dataset $Z = \{z(s_1), z(s_2), \ldots, z(s_n)\}$ consists of observations in a geographic space, where $s_i = (x_i, y_i)$ is a location, $x_i$ and $y_i$ are its coordinates and $n$ is the number of observations. The unknown value at the location $s_0$ is denoted $z(s_0)$. We assume that the dataset $Z$ is spatially homogeneous, either within pure coarse-resolution pixels or among end-members of the same land cover type within mixed coarse-resolution pixels. Stationarity and isotropy of the radiometric behavior are reasonable assumptions when geostatistics is applied to remote sensing surface reflectance within a local area [31,43]. Hence, the observations in $Z$ have the same expected value and variance:

$$E[z(s_i)] = \mu, \qquad \operatorname{Var}[z(s_i)] = \sigma^2, \qquad i = 0, 1, \ldots, n \tag{7}$$

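The kriging predictor is a weighted average of neighboring observations:

$$\hat{z}(s_0) = \sum_{i=1}^{n} \lambda_i\,z(s_i), \qquad z(s_0) = \hat{z}(s_0) + \varepsilon(s_0) \tag{8}$$

where $\hat{z}(s_0)$ is the predictor of $z(s_0)$, $\lambda_i$ are the weights associated with the sampling points and $\varepsilon(s_0)$ is the zero-mean regression residual. The BLUP is obtained by minimizing the kriging variance:

$$\sigma_E^2 = \operatorname{Var}\left[\hat{z}(s_0) - z(s_0)\right] = E\left[\left(\hat{z}(s_0) - z(s_0)\right)^2\right] \tag{9}$$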

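Under the assumption in Equation (7), we obtain:

$$E\left[\hat{z}(s_0) - z(s_0)\right] = \mu \sum_{i=1}^{n} \lambda_i - \mu \tag{10}$$

For the predictor to be unbiased, the minimization of Equation (9) is therefore subject to:

$$\sum_{i=1}^{n} \lambda_i = 1 \tag{11}$$

Given the objective function in Equation (9) and the linear constraint in Equation (11), Lagrange's method of multipliers can be used to solve this constrained extremum problem. Let $F$ be the Lagrange function:

$$F = \sigma_E^2 - 2\varphi\left(\sum_{i=1}^{n} \lambda_i - 1\right) \tag{12}$$

where $\varphi$ is a Lagrange multiplier. The extremum of $F$ is found by setting $\partial F / \partial \lambda_i = 0$ ($i = 1, \ldots, n$) and $\partial F / \partial \varphi = 0$. The optimal weights $\lambda_i$ then follow from the matrix equation:

$$\Gamma \boldsymbol{\lambda} = \boldsymbol{\gamma} \tag{13}$$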

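where:

$$\Gamma = \begin{bmatrix} \gamma(s_1, s_1) & \cdots & \gamma(s_1, s_n) & 1 \\ \vdots & \ddots & \vdots & \vdots \\ \gamma(s_n, s_1) & \cdots & \gamma(s_n, s_n) & 1 \\ 1 & \cdots & 1 & 0 \end{bmatrix}, \quad \boldsymbol{\lambda} = \begin{bmatrix} \lambda_1 \\ \vdots \\ \lambda_n \\ \varphi \end{bmatrix}, \quad \boldsymbol{\gamma} = \begin{bmatrix} \gamma(s_1, s_0) \\ \vdots \\ \gamma(s_n, s_0) \\ 1 \end{bmatrix} \tag{14}$$

Here, the semivariance is defined as:

$$\gamma(s_i, s_j) = \frac{1}{2} E\left[\left(z(s_i) - z(s_j)\right)^2\right] \tag{15}$$

Empirical semivariances and their corresponding distances can be computed from the known sample points. They can then be fitted with a variogram function plus an additional nugget model that captures the nugget effect. $\Gamma$ is the matrix of semivariances between all pairs of data points and is constructed from the fitted variogram function. $\boldsymbol{\gamma}$ is the vector of estimated semivariances between the data points and the point at which the unknown value $z(s_0)$ is to be predicted. $\boldsymbol{\lambda}$ contains the resulting weights $\lambda_i$ and the multiplier $\varphi$.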

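Thus, the optimal linear predictor $\hat{z}(s_0)$ is:

$$\hat{z}(s_0) = \sum_{i=1}^{n} \lambda_i\,z(s_i) \tag{16}$$

Furthermore, the kriging variance is:

$$\sigma_K^2 = \sum_{i=1}^{n} \lambda_i\,\gamma(s_i, s_0) + \varphi \tag{17}$$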
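The ordinary kriging system can be solved numerically with a generic sketch. It builds the augmented semivariance matrix, whose last row and column enforce $\sum \lambda_i = 1$, solves it with NumPy, and returns the prediction $\sum \lambda_i z(s_i)$ together with the kriging variance $\sum \lambda_i \gamma(s_i, s_0) + \varphi$. The exponential variogram with nugget is an assumed model choice for illustration only; the function names are hypothetical.

```python
import numpy as np


def exp_variogram(h, nugget, sill, range_):
    """Exponential variogram with nugget (assumed model, for illustration)."""
    h = np.asarray(h, dtype=float)
    g = nugget + sill * (1.0 - np.exp(-h / range_))
    return np.where(h == 0, 0.0, g)  # gamma(0) = 0 by definition


def ordinary_kriging(coords, values, target, variogram):
    """Solve Gamma @ [lambda, phi] = gamma and return
    (prediction, weights, kriging variance)."""
    coords = np.asarray(coords, dtype=float)
    n = len(coords)
    # Distances between observations, and from observations to the target
    d = np.linalg.norm(coords[:, None, :] - coords[None, :, :], axis=-1)
    d0 = np.linalg.norm(coords - np.asarray(target, dtype=float), axis=-1)
    # Augmented matrix: the extra row/column of ones encodes sum(lambda) = 1
    G = np.ones((n + 1, n + 1))
    G[:n, :n] = variogram(d)
    G[n, n] = 0.0
    g = np.append(variogram(d0), 1.0)
    sol = np.linalg.solve(G, g)
    weights, phi = sol[:n], sol[n]
    prediction = float(weights @ np.asarray(values, dtype=float))
    variance = float(weights @ g[:n] + phi)
    return prediction, weights, variance
```

For example, with four observations placed symmetrically around the target, every weight comes out as 0.25. When the target coincides with an observation, the predictor returns that observation with zero kriging variance, reflecting the exact-interpolation property of kriging.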

In practice, we select spectrally similar pixels from a local neighborhood around the prediction location, i.e., the kriging search neighborhood [44]. The search neighborhood of a given pixel contains enough pixels to infer the statistics (e.g., semivariograms) of each local area. Using the kriging method, we then calculate the weights ($\lambda_i$) of the similar pixels within the moving window in the Landsat image acquired on the base date ($t_0$). These weights ($\lambda_i$) are identical to the weights ($W_i$) and are used to predict the fine-resolution image for the prediction date ($t_k$) as described in Equation (6).

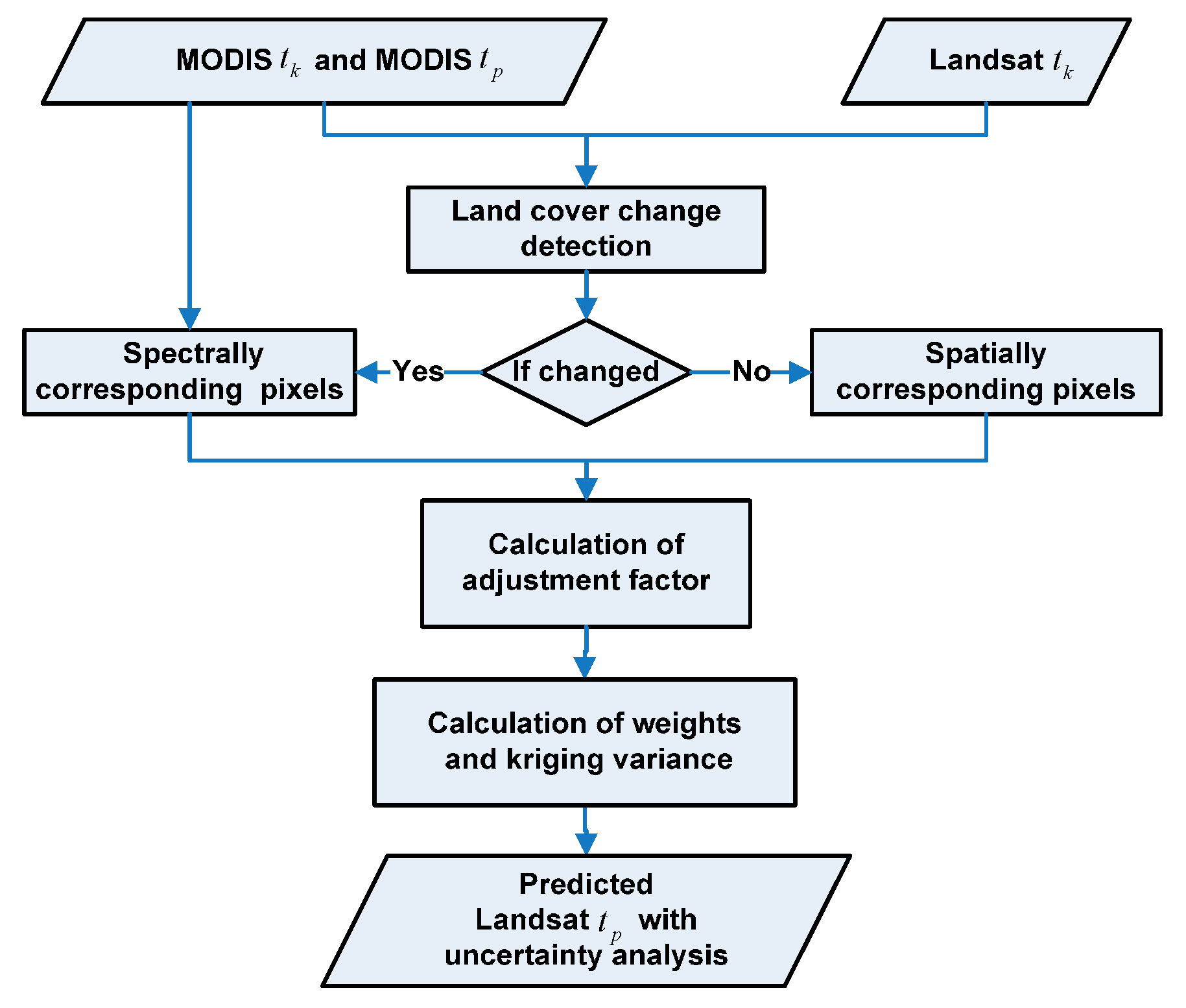

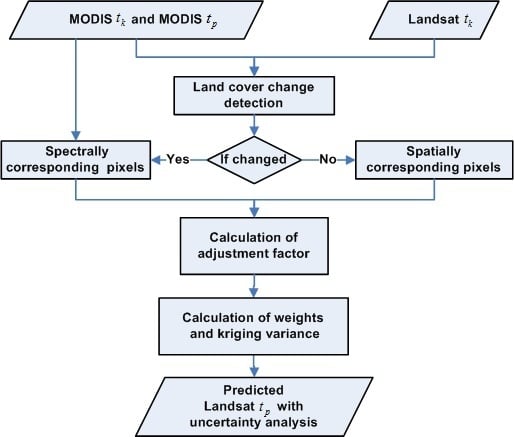

2.3. Process of the RWSTFM Implementation

Figure 1 presents the flowchart of the RWSTFM. The RWSTFM is implemented in four steps: (1) detecting land cover changes; (2) calculating the adjustment factors and searching for similar pixels; (3) calculating the weights of the similar pixels and the kriging variance; and (4) predicting the fine-resolution image with uncertainty analysis. Each step is described in detail below.

First, we adopt a thresholding method to detect changes between the surface reflectance images acquired on different dates. Here, $\bar{L}$ and $\bar{M}$ are the averages of three bands (e.g., the near-infrared (NIR), red and green bands of the Landsat and MODIS images) at the location $(x, y)$ at $t_0$ and $t_k$ for the Landsat and MODIS data, respectively, and $D_L$ and $D_M$ are the resulting absolute difference maps. The corresponding thresholds $T_L$ and $T_M$ are set to the mean values of the respective absolute difference maps. A pixel is marked as changed if both of its absolute differences exceed the designated thresholds ($D_L > T_L$ and $D_M > T_M$). In all other cases, the land cover is nearly the same as on the base date $t_0$ and is considered essentially unchanged.
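The thresholding rule can be written as a small NumPy function. This sketch assumes the two absolute difference maps have already been computed from the three-band averages; it shows only the band averaging and the decision rule in which both maps must exceed their own mean. The function names and the band indices `(0, 1, 2)` are hypothetical.

```python
import numpy as np


def band_average(img, bands=(0, 1, 2)):
    """Mean of three bands (e.g., NIR, red, green) of a (bands, H, W) array."""
    return img[list(bands)].mean(axis=0)


def change_mask(d_l, d_m):
    """A pixel is 'changed' only if both absolute difference maps exceed
    their thresholds, each threshold being that map's mean value."""
    t_l, t_m = d_l.mean(), d_m.mean()
    return (d_l > t_l) & (d_m > t_m)
```

Requiring both maps to exceed their thresholds makes the detector conservative. Pixels flagged by only one map are treated as unchanged.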

Second, we calculate the adjustment factor for both changed and unchanged land cover areas. For changed land cover areas, $l = a_k / a_0$. To obtain $a_k$, we regress the Landsat image against the corresponding MODIS image at $t_0$ over the spectrally similar pixels. We search the entire MODIS image at $t_0$ to find the spectrally-corresponding pixel used in this regression. In addition, we account for the structural similarity between spectrally-corresponding pixels in the MODIS image at $t_0$ and the target pixel in the MODIS image at $t_k$ by extracting edge information with a Gaussian high-pass filter. If the MODIS pixel at $t_k$ is detected as an edge pixel, we search for similar pixels along the same edge areas; otherwise, we search for similar pixels within the non-edge areas. To obtain $a_0$, we regress the Landsat image against the spatially corresponding MODIS image over the similar pixels within a local window at $t_0$. The adjustment factor $l$ is then the ratio of $a_k$ to $a_0$. Because both regressions use neighboring similar pixels, the fine-resolution image acquired on the base date is used to search for pixels similar to the central pixel within a local window. For unchanged land cover areas, $l = 1$; unchanged pixels are thus a special case of changed pixels in the calculation of $l$. This step yields $l$ for both changed and unchanged areas.
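The edge-extraction part of this step can be sketched with NumPy alone, as an image minus its Gaussian low-pass version. The filter width `sigma` and the rule that flags pixels whose absolute high-pass response exceeds `k` standard deviations are assumptions made for illustration, because the text does not specify them. All function names are hypothetical.

```python
import numpy as np


def gaussian_kernel1d(sigma, radius=None):
    """Normalized 1-D Gaussian kernel truncated at about 3 sigma."""
    radius = int(3 * sigma) if radius is None else radius
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()


def gaussian_blur(img, sigma):
    """Separable Gaussian low-pass filter with reflective borders."""
    k = gaussian_kernel1d(sigma)
    r = len(k) // 2
    padded = np.pad(img.astype(float), r, mode="reflect")
    # Filter along rows, then columns; 'valid' trims the padding back off
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)


def edge_mask(img, sigma=1.0, k=1.0):
    """High-pass = image minus its Gaussian low-pass; pixels whose absolute
    response exceeds k standard deviations are flagged as edges (assumed rule)."""
    hp = img - gaussian_blur(img, sigma)
    return np.abs(hp) > k * hp.std()
```

On a single-band MODIS image, the resulting boolean mask can be used to restrict the similar-pixel search to edge or non-edge pixels, depending on whether the target pixel is itself an edge pixel.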

Third, we calculate the weights $W_i$ and the kriging variance $\sigma_K^2$ with ordinary kriging. A variogram function is fitted to the relationship between distance and semivariance among the spectrally similar neighboring pixels of a given pixel in the Landsat image at $t_0$. The ordinary kriging algorithm (Section 2.2) then gives the weights of these spectrally similar neighboring pixels and the kriging variance.
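The variogram-fitting part of this third step can be sketched with NumPy alone. The sketch bins half the squared differences of all point pairs by distance to form an empirical semivariogram. It then fits an assumed exponential model with nugget, $\gamma(h) = c_0 + c_1(1 - e^{-h/r})$, by grid search over the range $r$; for each candidate $r$ the model is linear in $c_0$ and $c_1$. The bin count, the candidate ranges and the exponential form are illustrative assumptions, and non-negativity of the fitted coefficients is not enforced.

```python
import numpy as np


def empirical_semivariogram(coords, values, n_bins=5):
    """Bin 0.5 * (z_i - z_j)^2 over all point pairs by separation distance."""
    coords = np.asarray(coords, dtype=float)
    values = np.asarray(values, dtype=float)
    i, j = np.triu_indices(len(values), k=1)
    h = np.linalg.norm(coords[i] - coords[j], axis=1)
    sv = 0.5 * (values[i] - values[j]) ** 2
    edges = np.linspace(0.0, h.max(), n_bins + 1)
    # right=True assigns distances to half-open bins (edge_b, edge_b+1]
    idx = np.digitize(h, edges[1:-1], right=True)
    lags, gammas = [], []
    for b in range(n_bins):
        sel = idx == b
        if sel.any():
            lags.append(h[sel].mean())
            gammas.append(sv[sel].mean())
    return np.array(lags), np.array(gammas)


def fit_exponential(lags, gammas, ranges):
    """Grid search over the range r; for fixed r, (nugget, partial sill)
    follow from linear least squares. Returns (nugget, partial_sill, r)."""
    best = None
    for r in ranges:
        A = np.column_stack([np.ones_like(lags), 1.0 - np.exp(-lags / r)])
        coef, *_ = np.linalg.lstsq(A, gammas, rcond=None)
        sse = float(((A @ coef - gammas) ** 2).sum())
        if best is None or sse < best[0]:
            best = (sse, coef[0], coef[1], r)
    _, nugget, partial_sill, r = best
    return float(nugget), float(partial_sill), r
```

The fitted parameters define the variogram function used to fill the semivariance matrix $\Gamma$ and vector $\boldsymbol{\gamma}$ of the kriging system.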

Finally, we predict the fine-resolution reflectance and calculate the estimation variance on the prediction date. With the adjustment factor $l$ and the weights $W_i$ known, the fine-resolution reflectance at $t_k$ is predicted for both changed and unchanged land cover areas according to Equation (6). The estimation variance $\sigma^2(x, y, t_k)$ is calculated at the same time. The kriging variance $\sigma_K^2$ is the unconditional minimum of the estimation variance $\sigma_E^2$ and measures the accuracy of the local estimate from similar pixels. However, kriging operates on similar pixels within a local window of the fine-resolution image at $t_0$. The kriging variance $\sigma_K^2$ is therefore the prediction variance of the fine-resolution image at $t_0$, not at $t_k$. To correct for this, the squared deviation between the coarse-resolution images on the prediction date $t_k$ and the base date $t_0$ is added to the kriging variance:

$$\sigma^2(x, y, t_k) = \sigma_K^2(x, y) + \left[M(x, y, t_k) - M(x, y, t_0)\right]^2$$

where $\sigma^2(x, y, t_k)$ is the estimation variance of the fine-resolution image at $t_k$, $\sigma_K^2(x, y)$ is the kriging variance, and $M(x, y, t_k)$ and $M(x, y, t_0)$ are the observed MODIS reflectance values of a given pixel at $t_k$ and $t_0$, respectively.
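The variance correction is an element-wise operation, so it applies equally to a single pixel or to whole image arrays. In this minimal sketch, the function name is hypothetical and the MODIS reflectances are assumed to be resampled to the fine-resolution grid beforehand.

```python
import numpy as np


def prediction_variance(kriging_var, modis_tk, modis_t0):
    """sigma^2(tk) = sigma_K^2 + (M(tk) - M(t0))^2, evaluated element-wise."""
    kriging_var = np.asarray(kriging_var, dtype=float)
    diff = np.asarray(modis_tk, dtype=float) - np.asarray(modis_t0, dtype=float)
    return kriging_var + diff ** 2
```

The correction term grows with the coarse-resolution change between the two dates. As a result, pixels with larger temporal change are assigned larger uncertainty, even when the base-date kriging variance is small.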