Fusion Approaches for Land Cover Map Production Using High Resolution Image Time Series without Reference Data of the Corresponding Period

Abstract

1. Introduction

1.1. Short Review of Domain Adaptation Methods

- Feature extraction by selecting invariant features

- Adapting data distributions

- Adapting classifiers with semi-supervised approaches

- Adaptation of the classifier by active learning

2. Materials and Methods

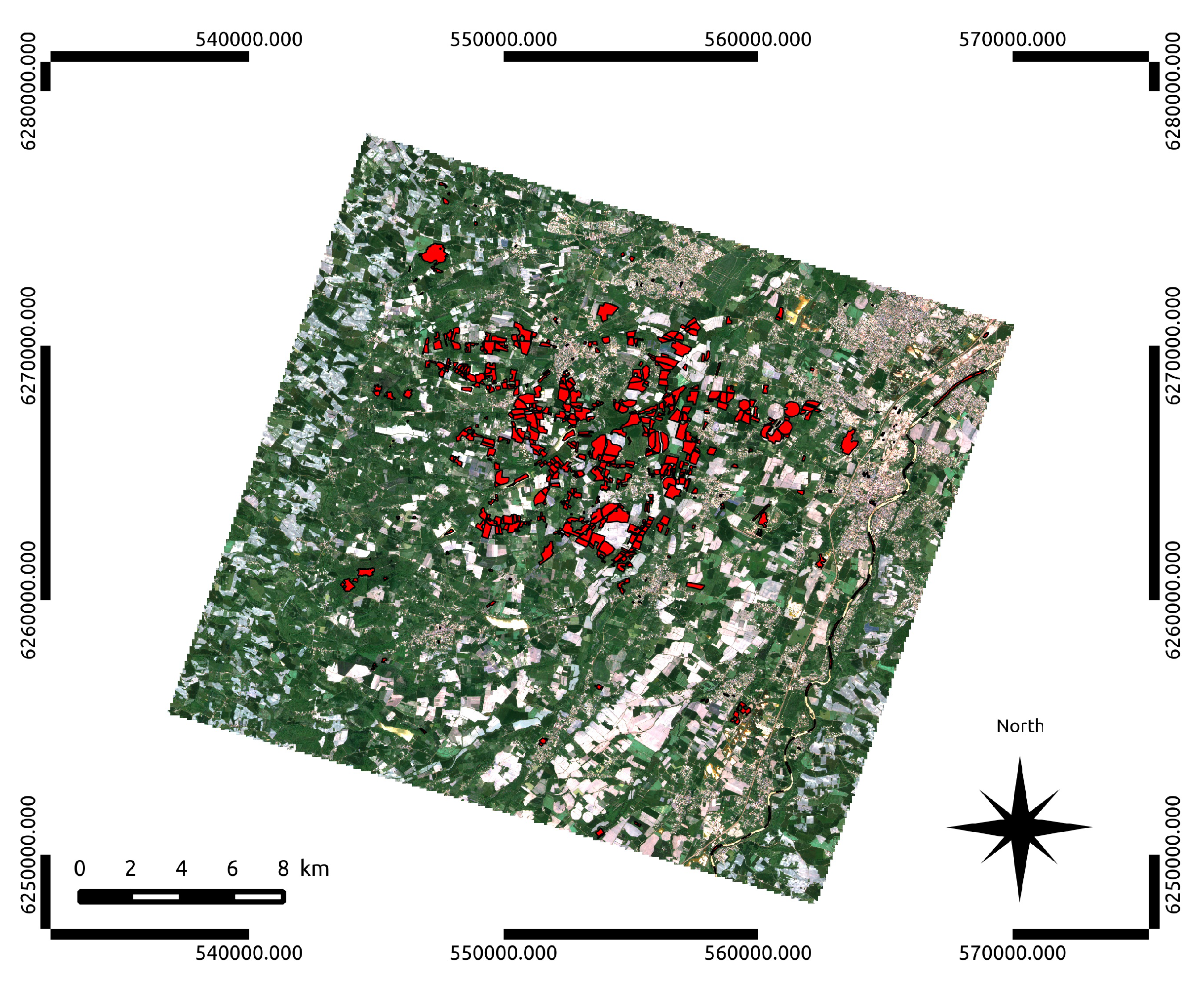

2.1. Optical Data

2.2. Reference Data

2.3. Methodology

- Data pre-processing

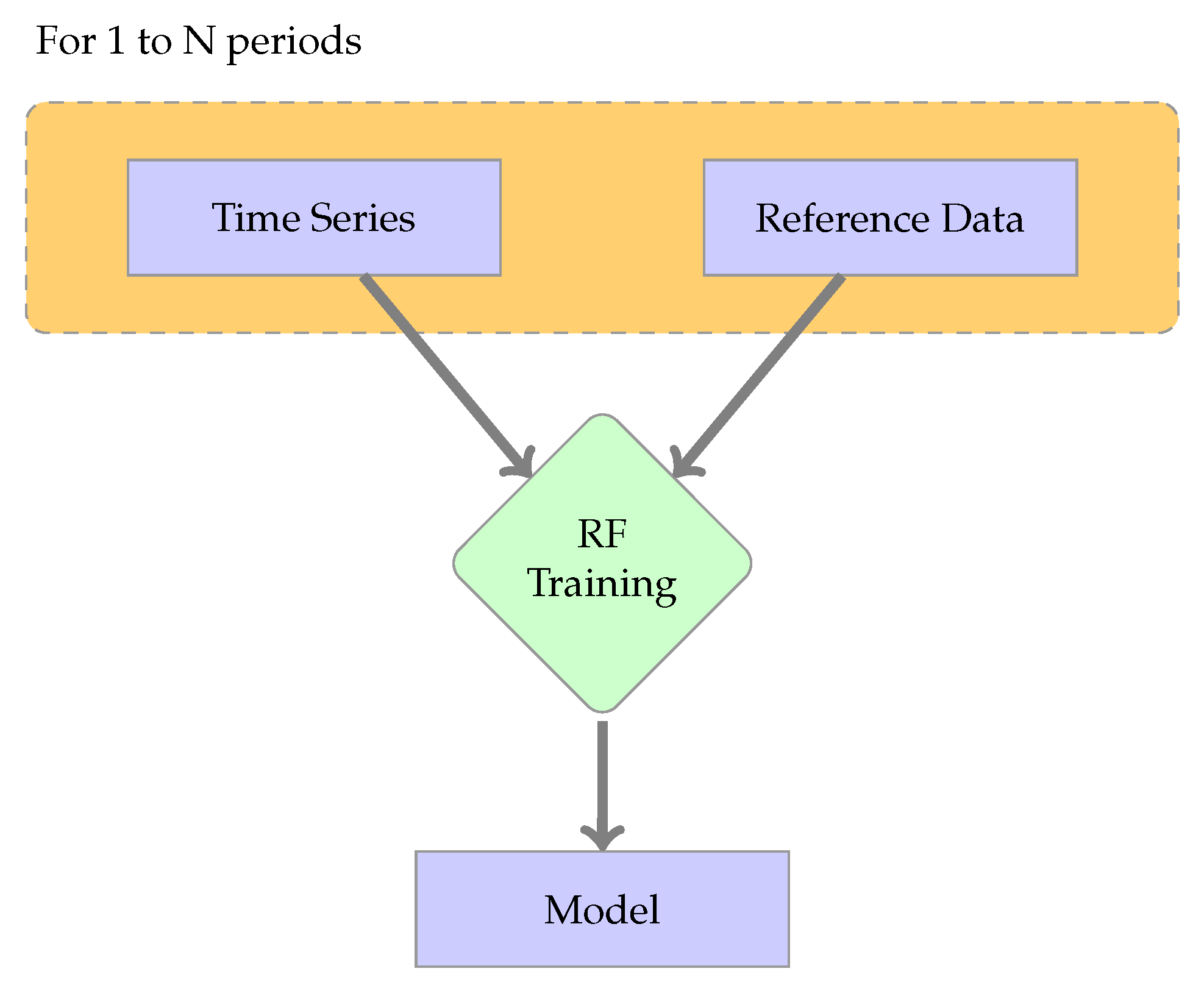

- Classifier training using labeled data to define the decision rules

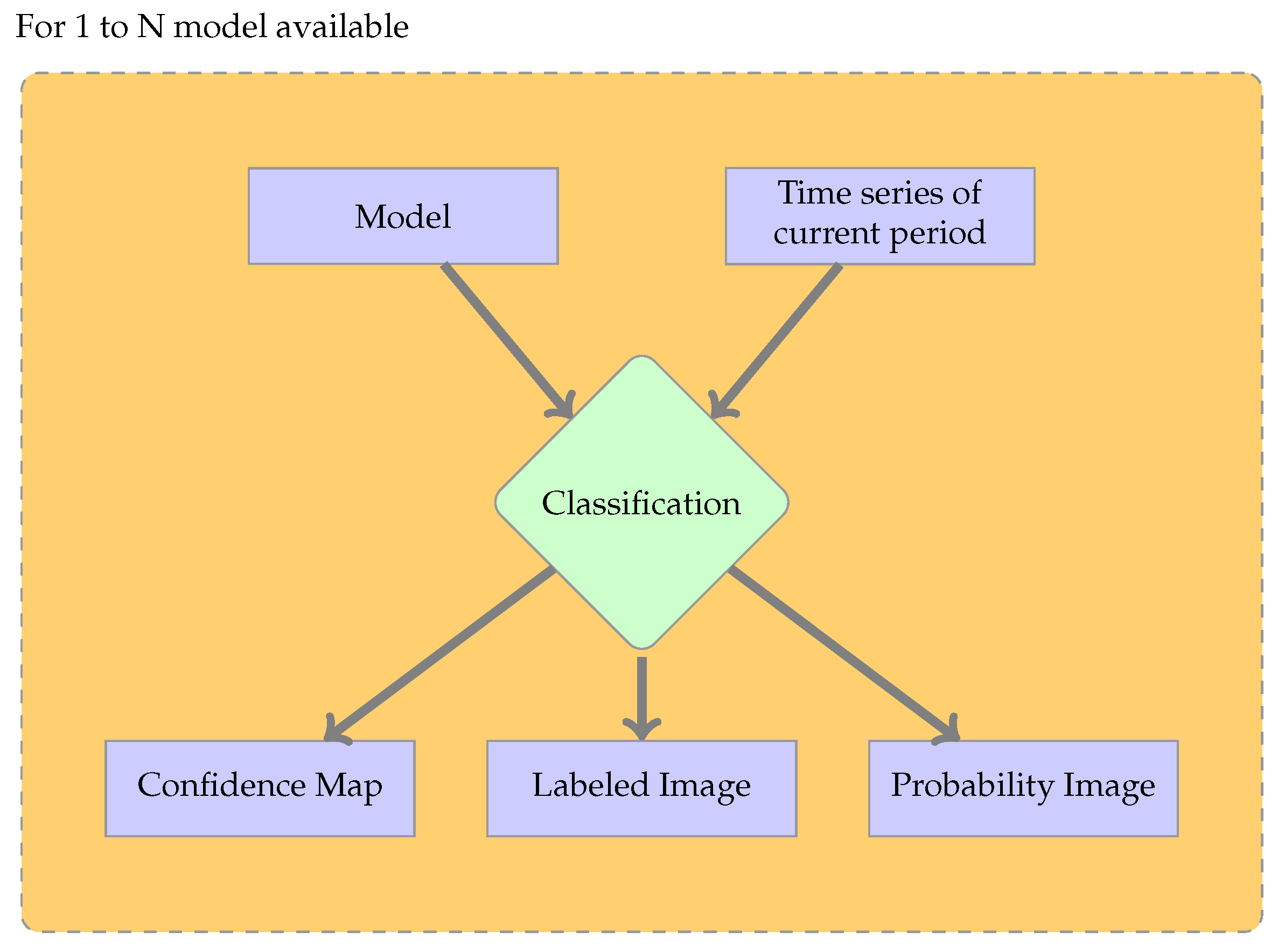

- Classification, using a trained classifier to predict the classes of unlabeled data

- Post-processing

2.3.1. Global Approach

2.3.2. Fusion Methods

- Majority Voting (MV): Each map votes for a label, and the majority label is chosen. In the case of a tie, a non-decision label is chosen.

- Confidence Voting (CV): Each voter selects a class, and the confidence is used as a weight to compute a score per label. The label with the highest score is chosen. This approach considers only the labels chosen by the classifier.

- Probability Voting (PV): Each voter uses the probability values to give a weight to every possible label. The label with the highest score is chosen.
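
The three voting rules above can be illustrated for a single pixel with a minimal sketch. It rests on several assumptions that the text does not state: each voter is represented by a dictionary of per-label probabilities, a voter's confidence is taken to be the probability of its chosen label, ties in CV and PV are handled like ties in MV, and the names `NO_DECISION`, `majority_voting`, `confidence_voting` and `probability_voting` are hypothetical.

```python
from collections import defaultdict

NO_DECISION = "no_decision"  # hypothetical code for the non-decision label


def _best_label(scores):
    """Return the label with the highest score, or NO_DECISION on a tie."""
    best = max(scores.values())
    winners = [label for label, score in scores.items() if score == best]
    return winners[0] if len(winners) == 1 else NO_DECISION


def majority_voting(labels):
    """MV: each map casts one vote for its label."""
    scores = defaultdict(float)
    for label in labels:
        scores[label] += 1.0
    return _best_label(scores)


def confidence_voting(labels, confidences):
    """CV: each map votes only for its chosen label, weighted by its confidence."""
    scores = defaultdict(float)
    for label, confidence in zip(labels, confidences):
        scores[label] += confidence
    return _best_label(scores)


def probability_voting(probabilities):
    """PV: each map adds its probability to the score of every possible label."""
    scores = defaultdict(float)
    for proba in probabilities:
        for label, p in proba.items():
            scores[label] += p
    return _best_label(scores)


# Illustrative values for one pixel seen by three voters (not taken from the paper).
probabilities = [
    {"wheat": 0.40, "barley": 0.35, "maize": 0.25},
    {"wheat": 0.45, "barley": 0.40, "maize": 0.15},
    {"wheat": 0.05, "barley": 0.90, "maize": 0.05},
]
# Assumption: chosen label = most probable label, confidence = its probability.
labels = [max(p, key=p.get) for p in probabilities]
confidences = [p[label] for p, label in zip(probabilities, labels)]

print(majority_voting(labels))                 # wheat  (2 votes against 1)
print(confidence_voting(labels, confidences))  # barley (0.90 against 0.85)
print(probability_voting(probabilities))       # barley (1.65 against 0.90)
```

In this example, two weakly confident voters outvote one strongly confident voter under MV, whereas CV and PV follow the confident voter. This is the practical difference between counting votes and weighting them.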

2.4. Validation Procedure

- The recall (also called producer’s accuracy) is computed for each class from the rows of the confusion matrix (one row per class): the number of correctly classified pixels is divided by the total number of reference data pixels of that class.

- The precision (also called user’s accuracy) is computed for each class from the columns of the confusion matrix: the number of correctly classified pixels is divided by the total number of pixels classified as that class.
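
As a minimal sketch of these two definitions, the function below derives the per-class recall and precision from a confusion matrix whose rows are reference classes and whose columns are predicted classes. It also computes an F-score as the harmonic mean of precision and recall, which is an assumption: that is the standard definition, but the text above does not define it. The name `per_class_metrics` and the example matrix are hypothetical.

```python
def per_class_metrics(confusion):
    """Compute recall, precision and F-score for each class.

    confusion[i][j] is the number of pixels of reference class i
    that were classified as class j (rows: reference, columns: prediction).
    """
    metrics = []
    for k in range(len(confusion)):
        correct = confusion[k][k]
        reference_total = sum(confusion[k])                 # row sum
        predicted_total = sum(row[k] for row in confusion)  # column sum
        # Assumption: a class with no pixels gets a score of 0 rather than an error.
        recall = correct / reference_total if reference_total else 0.0
        precision = correct / predicted_total if predicted_total else 0.0
        total = precision + recall
        fscore = 2 * precision * recall / total if total else 0.0
        metrics.append({"recall": recall, "precision": precision, "fscore": fscore})
    return metrics


# Illustrative two-class matrix (not taken from the paper).
confusion = [
    [8, 2],  # reference class 0: 8 correct, 2 classified as class 1
    [4, 6],  # reference class 1: 4 classified as class 0, 6 correct
]
for k, m in enumerate(per_class_metrics(confusion)):
    print(k, round(m["recall"], 3), round(m["precision"], 3), round(m["fscore"], 3))
```

For class 0 in this example, the recall is 8/10 and the precision is 8/12, since 12 pixels in total were classified as class 0.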

3. Results

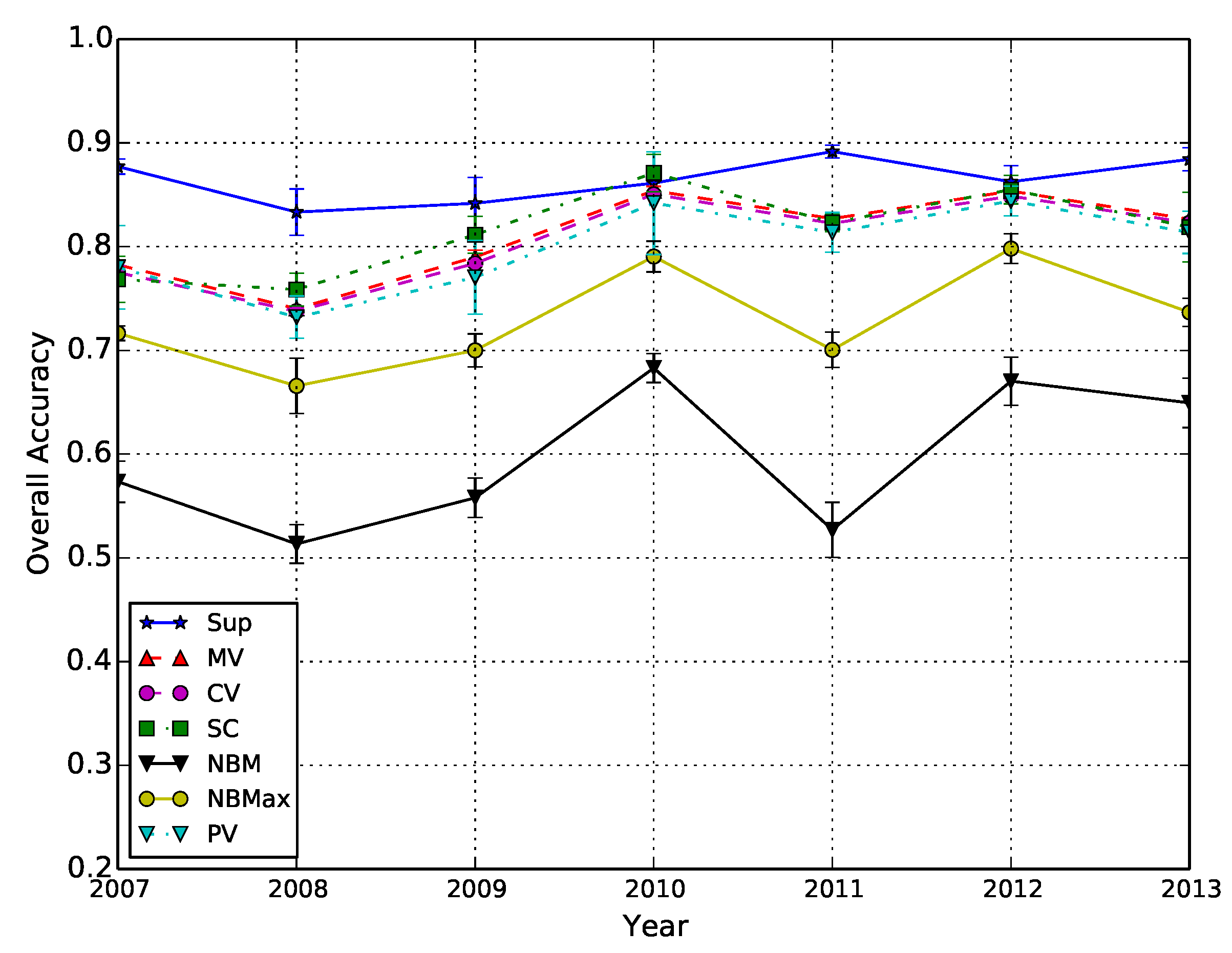

3.1. Baseline Configuration Analysis

3.2. Results of the Fusion Strategies

- Classes for which the Fscore is similar to the standard supervised case, with narrow confidence intervals. These classes are: broad-leaved tree, pine, wheat, maize and sunflower.

- Classes for which the Fscore is lower than for the standard supervised case and the confidence intervals are wide. These classes are: rapeseed, artificial surfaces, wasteland, river, lake, gravel pit and grass.

- Classes for which the Fscore is very low with narrow confidence intervals. These classes are: barley, sorghum, soybean, fallow lands and hemp.

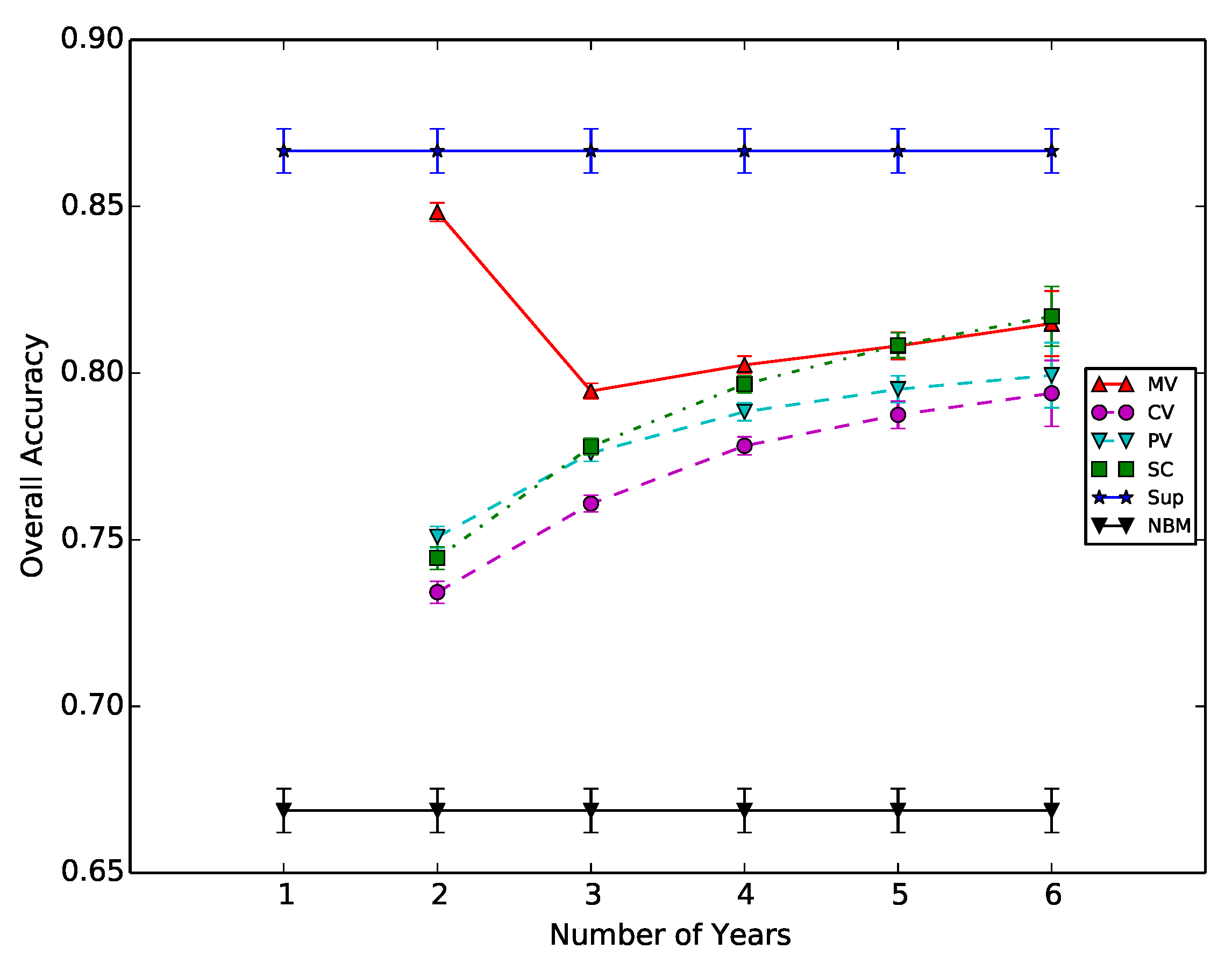

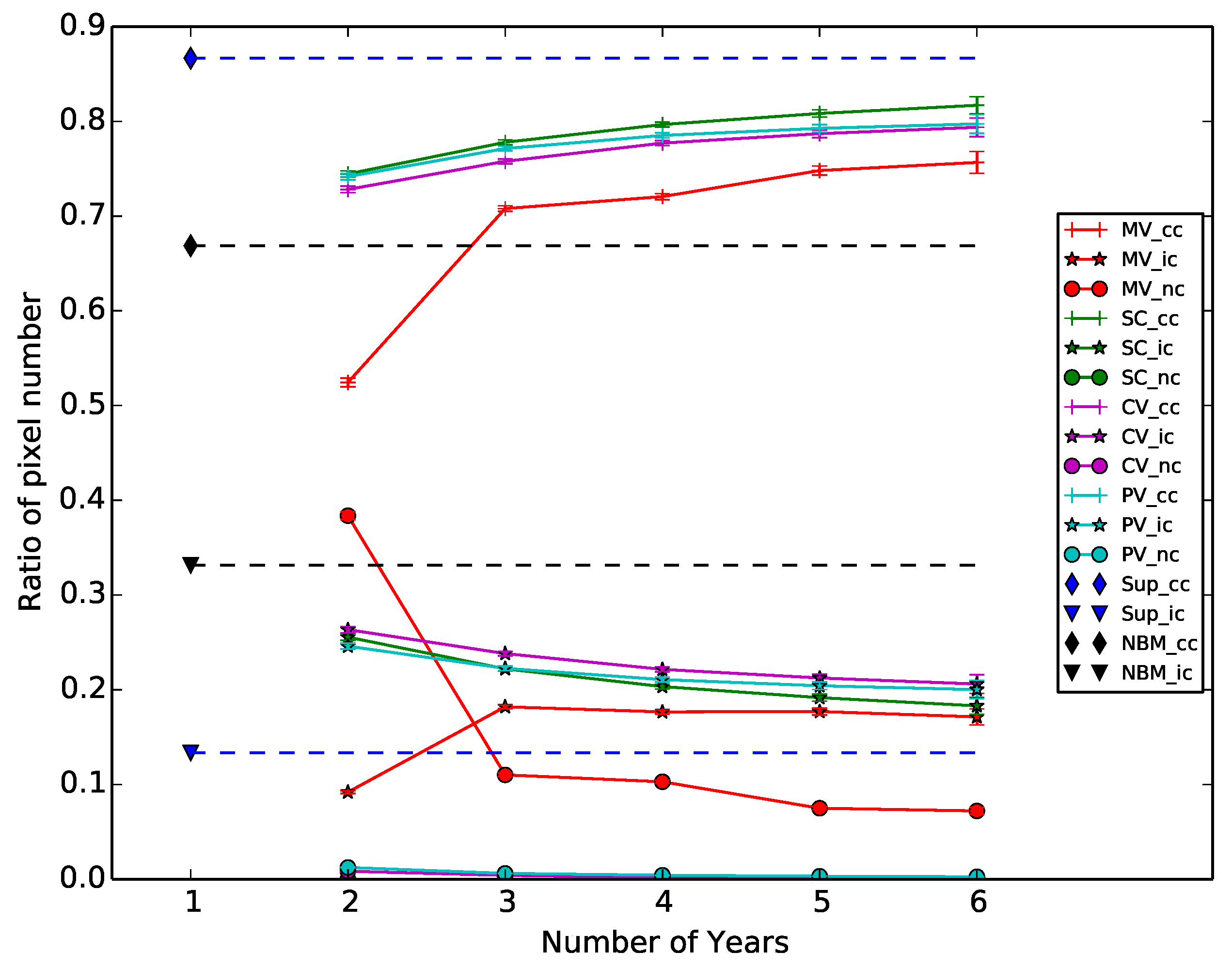

3.3. Impact of the History Size

4. Discussion

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


Number of samples of each period, per class and year.

| Classes | 2007 | 2008 | 2009 | 2010 | 2011 | 2012 | 2013 |
|---|---|---|---|---|---|---|---|
| Broad-leaved tree | 33,659 | 39,060 | 40,905 | 28,702 | 39,743 | 39,743 | 39,989 |
| Pine | 10,160 | 13,112 | 6486 | 3703 | 3611 | 3611 | 3611 |
| Wheat | 66,116 | 49,848 | 23,854 | 66,047 | 340,803 | 58,476 | 97,825 |
| Rapeseed | 27,651 | 12,933 | 25,937 | 13,869 | 67,104 | 9885 | 40,508 |
| Barley | 1937 | 5908 | 3564 | 1203 | 35,799 | 12,055 | 20,270 |
| Maize | 58,438 | 39,185 | 49,570 | 54,858 | 142,214 | 29,063 | 105,107 |
| Sunflower | 5851 | 19,952 | 19,489 | 24,215 | 237,662 | 23,107 | 29,544 |
| Sorghum | 2040 | 1746 | 10,696 | 9829 | 8806 | 0 | 362 |
| Soya | 754 | 7921 | 8816 | 6497 | 12,482 | 0 | 2308 |
| Artificial Surface | 1550 | 1047 | 1047 | 1339 | 2089 | 1426 | 1496 |
| Fallow land | 16,148 | 5145 | 3396 | 0 | 35,110 | 0 | 0 |
| Wasteland | 1089 | 1299 | 9954 | 4142 | 10,357 | 10,357 | 14,208 |
| River | 5806 | 9092 | 6825 | 6736 | 13,298 | 8850 | 10,071 |
| Lake | 14,294 | 9997 | 10,090 | 20,070 | 4615 | 4440 | 4508 |
| Gravel pit | 14,659 | 12,919 | 12,919 | 11,496 | 12,894 | 12,894 | 12,894 |
| Hemp | 0 | 0 | 960 | 1806 | 5881 | 670 | 279 |
| Grass | 42,656 | 11,900 | 13,571 | 18,379 | 120,299 | 21,182 | 25,858 |

| Time Series | 2007 | 2008 | 2009 | 2010 | 2011 | 2012 | 2013 |
|---|---|---|---|---|---|---|---|
| Model 2007 | | | | | | | |
| Model 2008 | | | | | | | |
| Model 2009 | | | | | | | |
| Model 2010 | | | | | | | |
| Model 2011 | | | | | | | |
| Model 2012 | | | | | | | |
| Model 2013 | | | | | | | |

| Class | Sup | MV | CV | SC | PV | NB |
|---|---|---|---|---|---|---|
| broad-leaved tree | | | | | | |
| pine | | | | | | |
| wheat | | | | | | |
| rapeseed | | | | | | |
| barley | | | | | | |
| maize | | | | | | |
| sunflower | | | | | | |
| sorghum | | | | | | |
| soybean | | | | | | |
| artificial surfaces | | | | | | |
| fallows | | | | | | |
| wastelands | | | | | | |
| river | | | | | | |
| lake | | | | | | |
| gravel pit | | | | | | |
| hemp | | | | | | |
| grass | | | | | | |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Tardy, B.; Inglada, J.; Michel, J. Fusion Approaches for Land Cover Map Production Using High Resolution Image Time Series without Reference Data of the Corresponding Period. Remote Sens. 2017, 9, 1151. https://doi.org/10.3390/rs9111151
