Sparse Unmixing of Hyperspectral Data with Noise Level Estimation

Abstract

1. Introduction

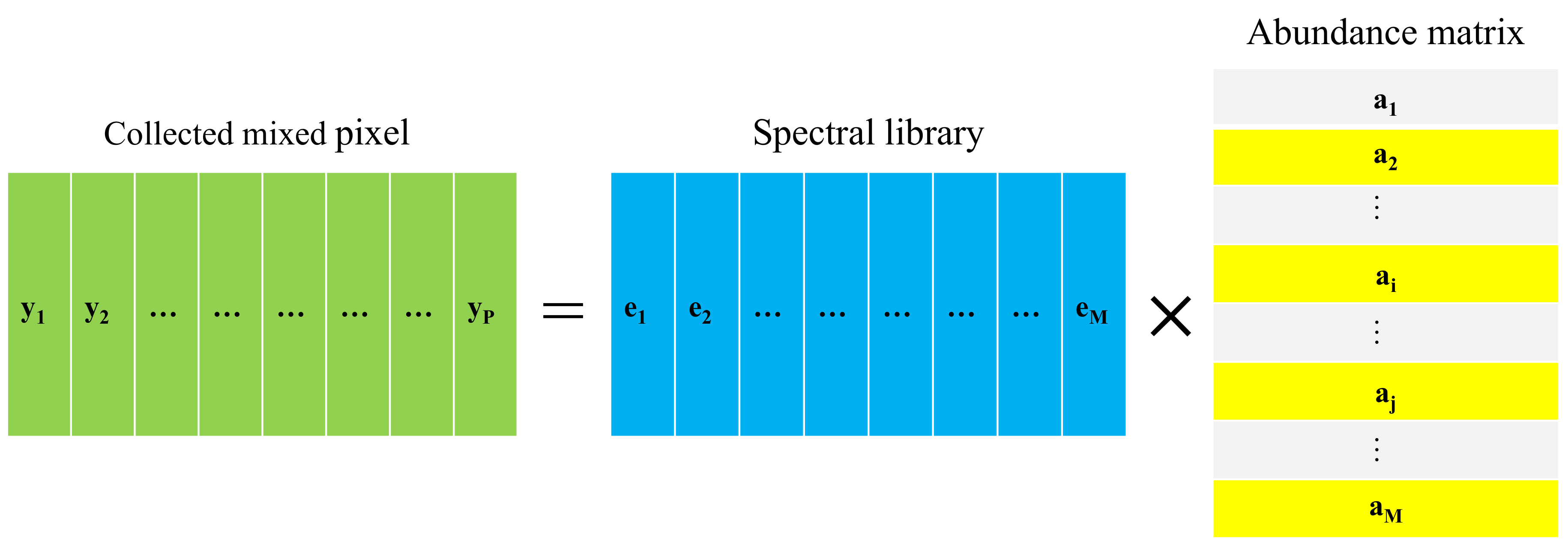

2. Sparse Unmixing of HSI with Noise Level Estimation

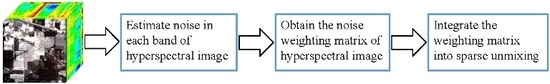

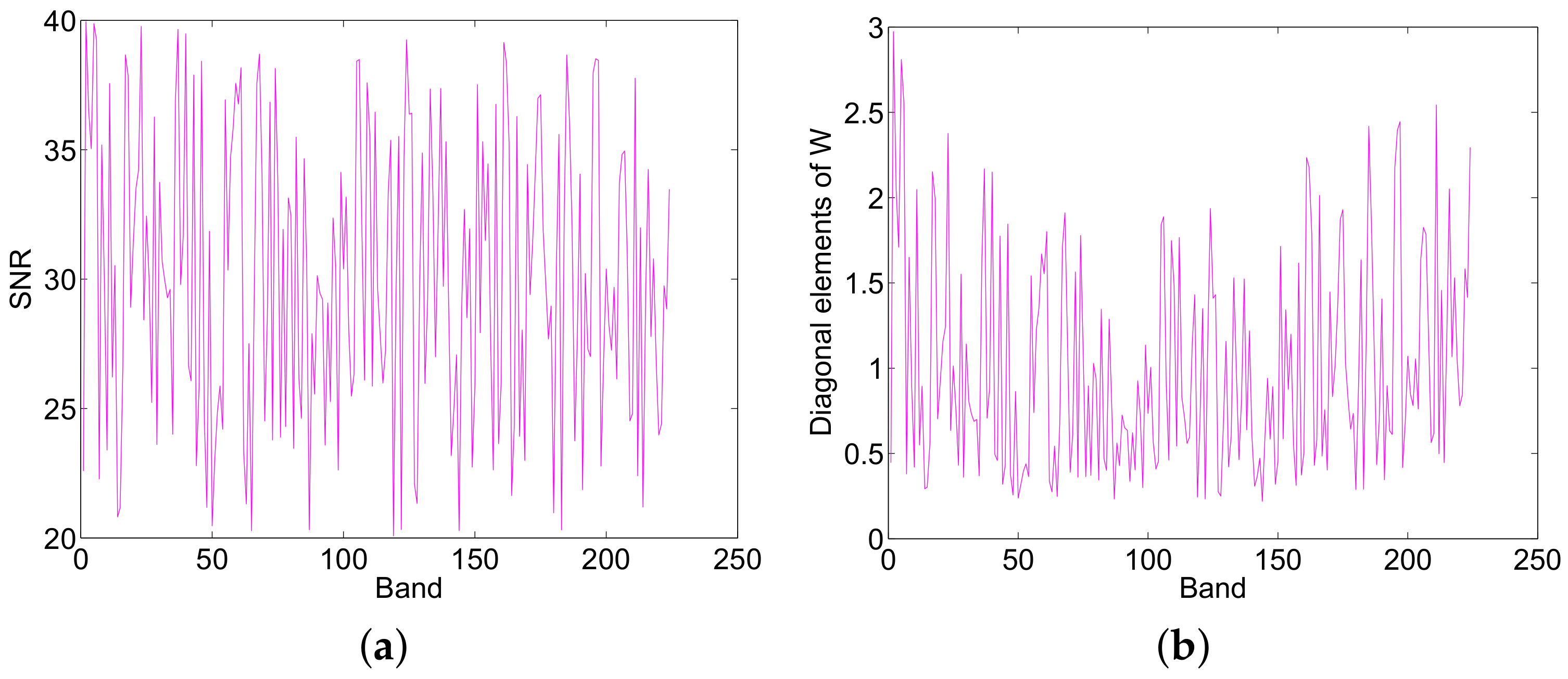

2.1. The Proposed SU-NLE

Algorithm 1: Obtain the weighting matrix

2.2. ADMM for Solving SU-NLE

Algorithm 2: Solving Equation (12) with ADMM

2.3. Relation to Traditional Sparse Regression Unmixing Methods

3. Experiments

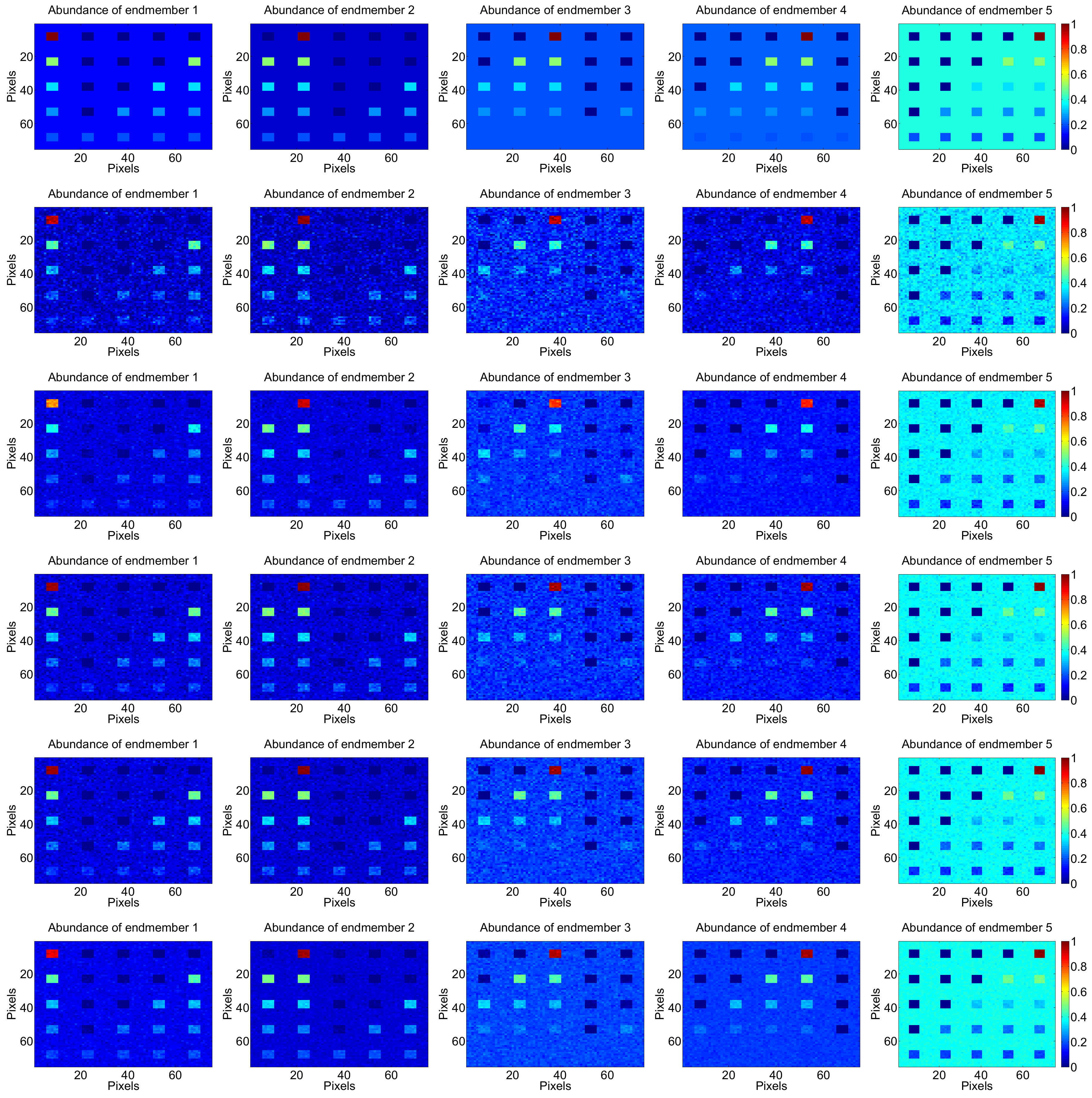

3.1. Experimental Results with Synthetic Data

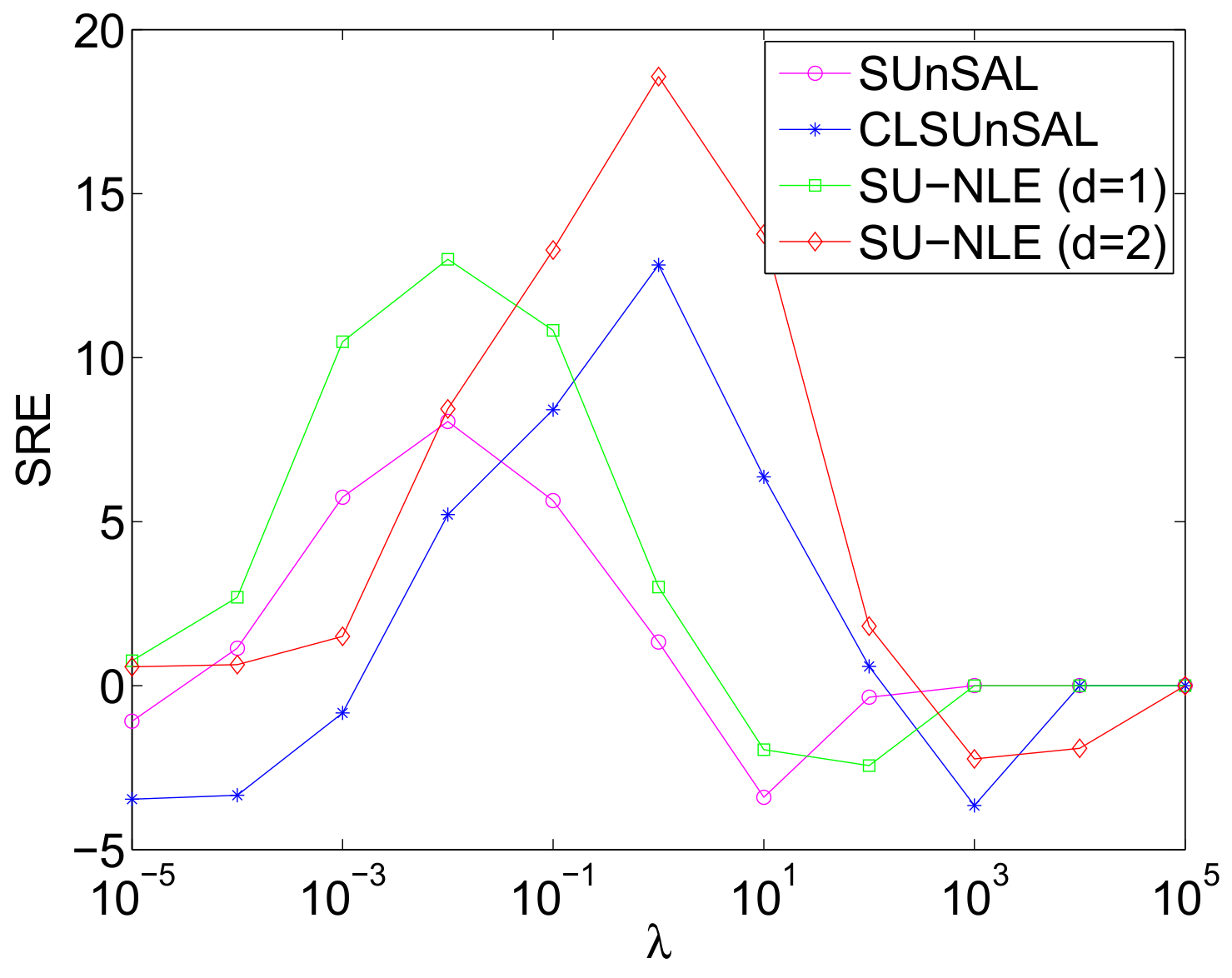

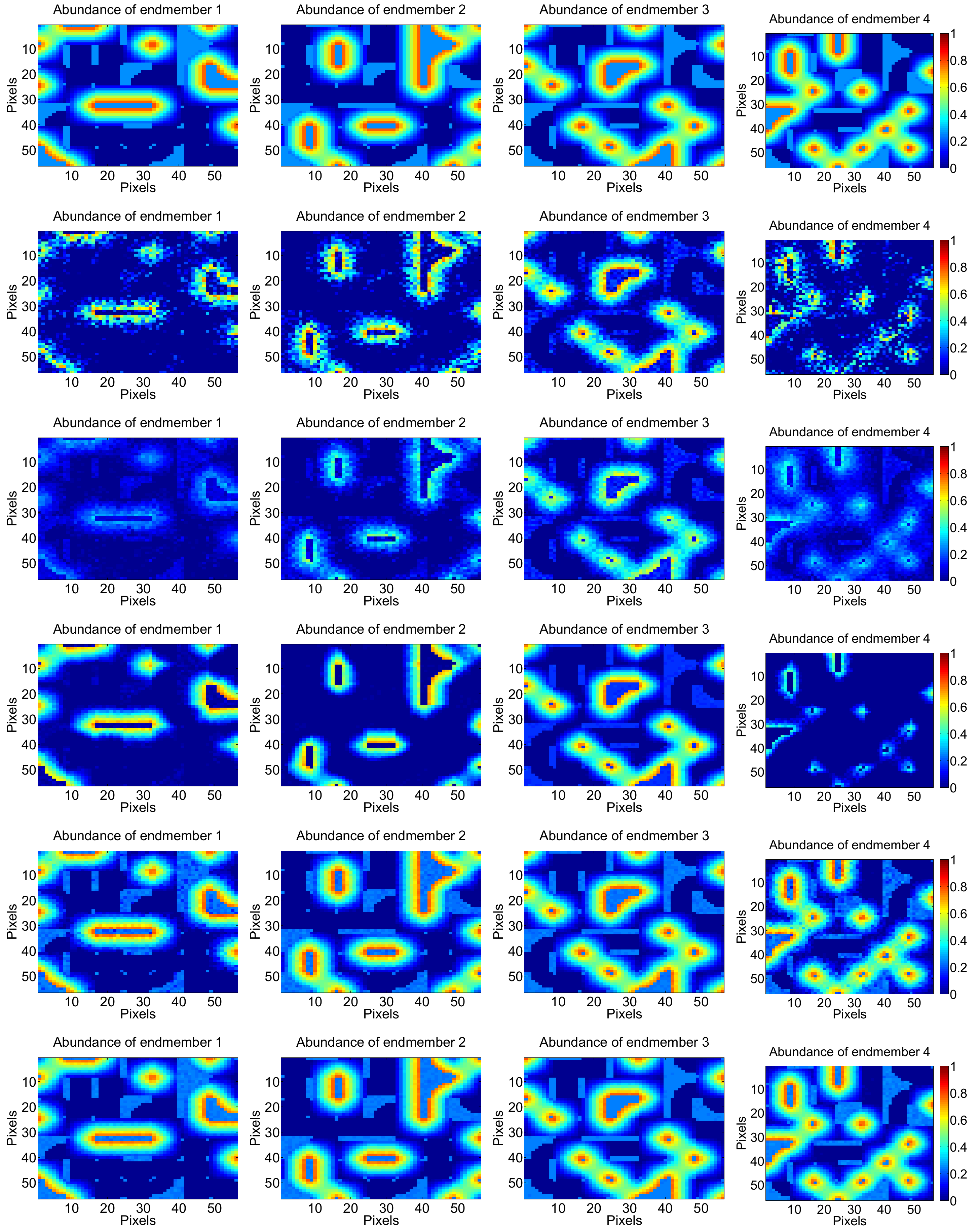

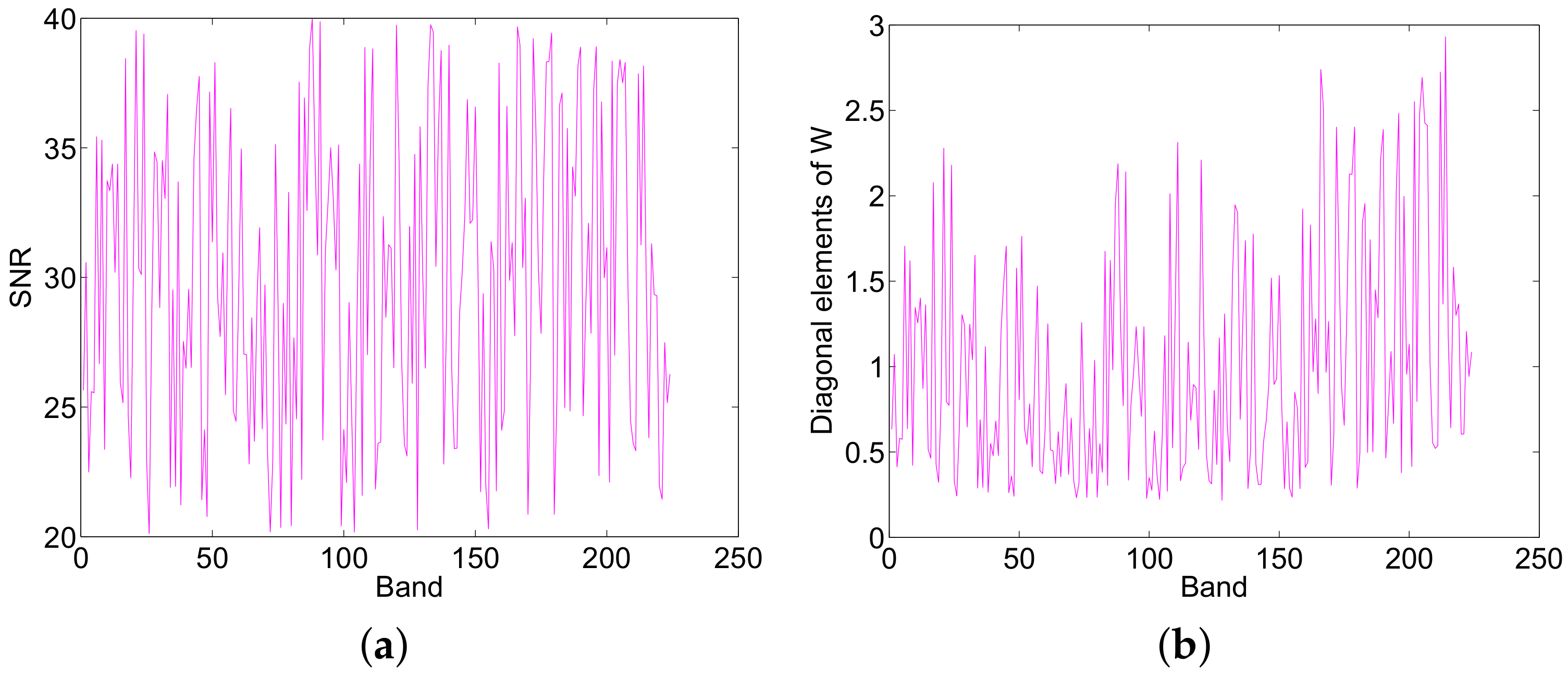

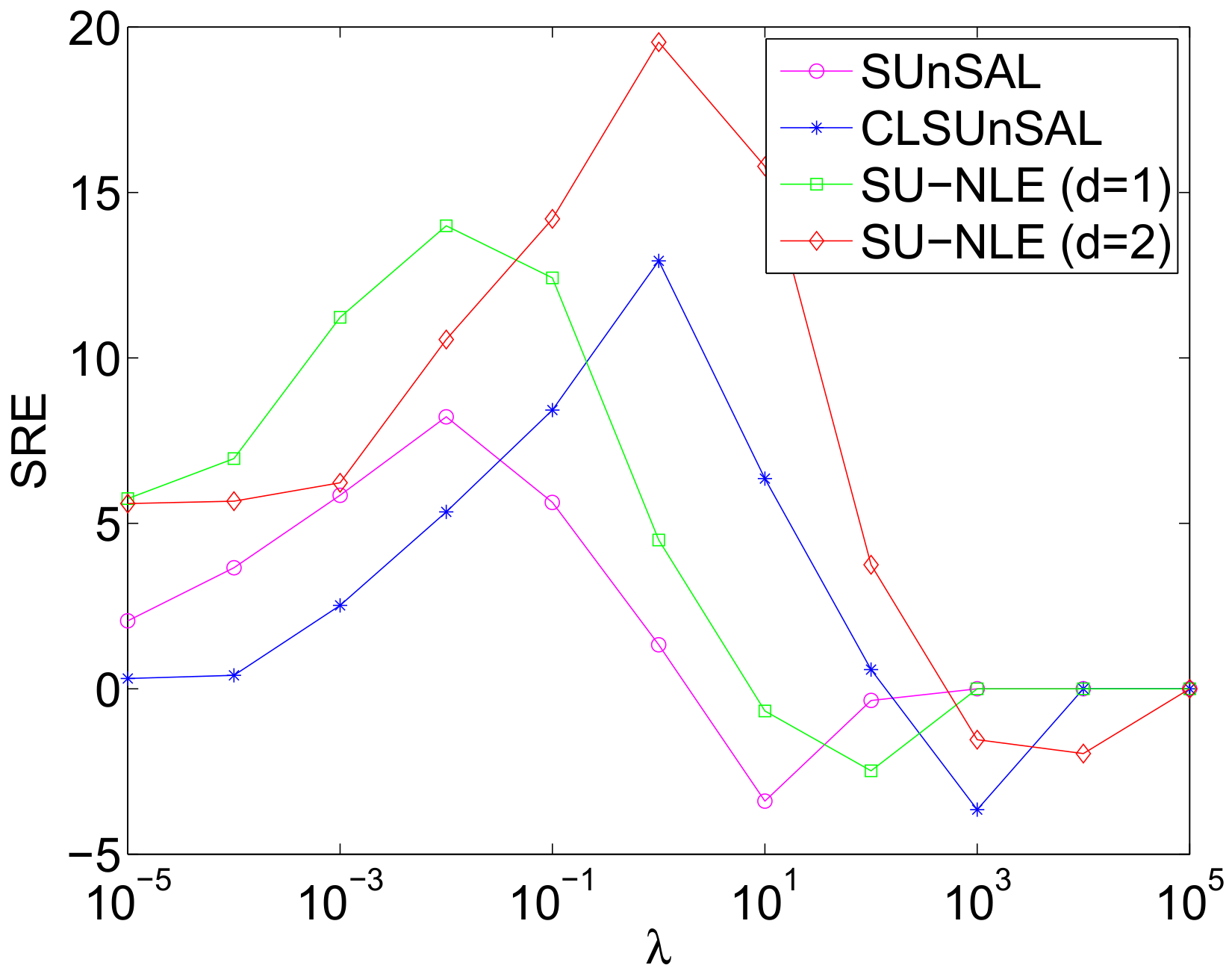

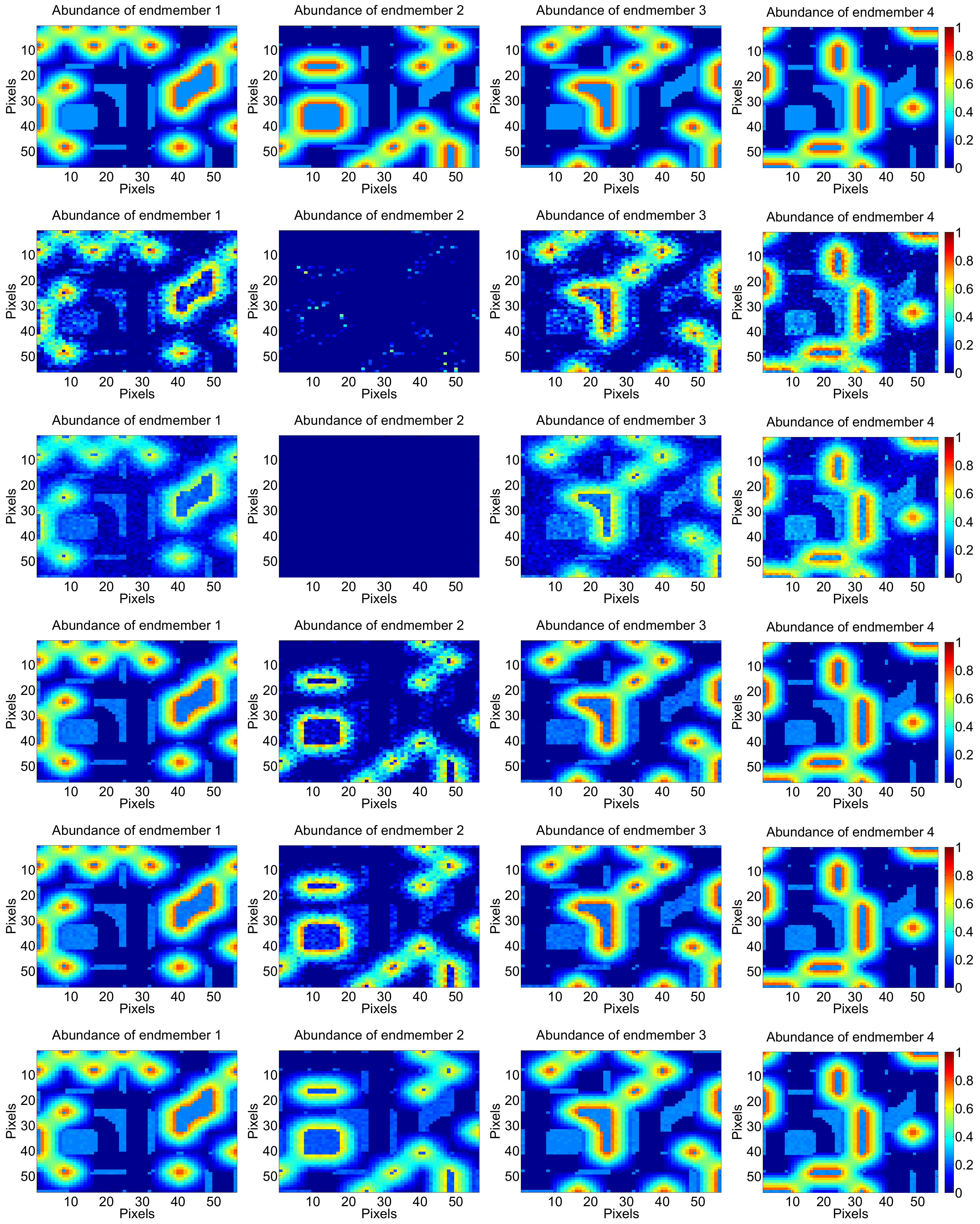

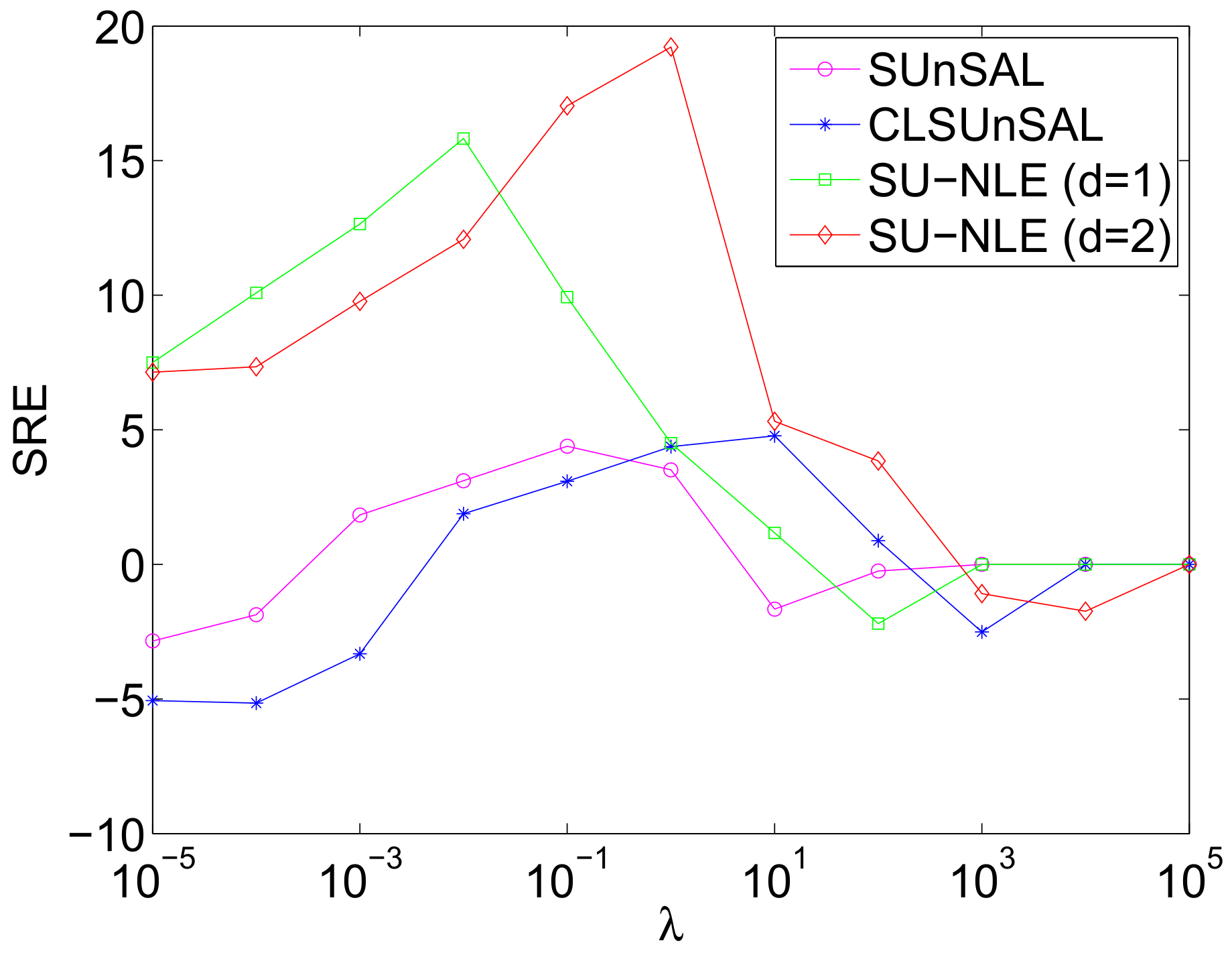

3.1.1. Simulated experiment I

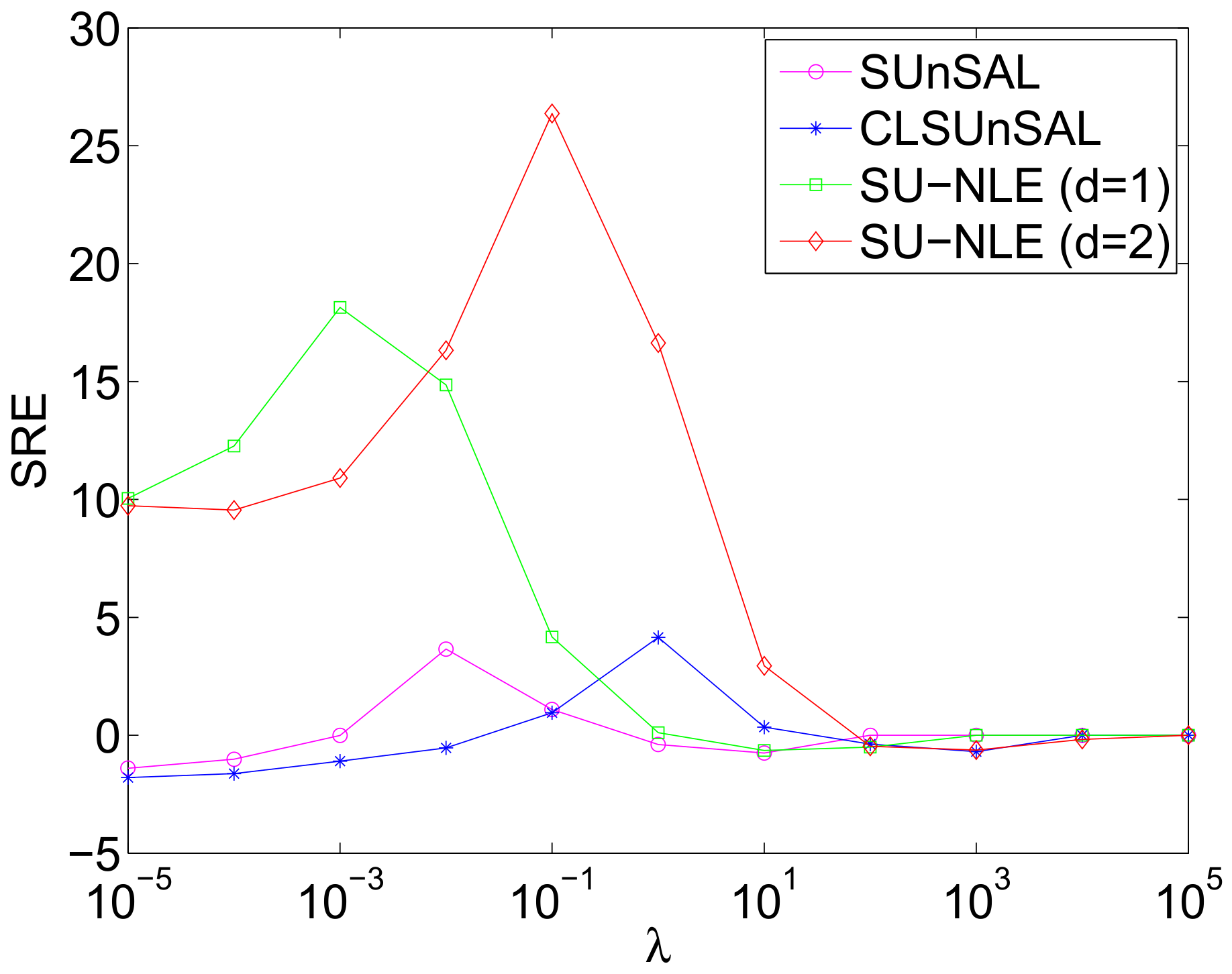

3.1.2. Simulated experiment II

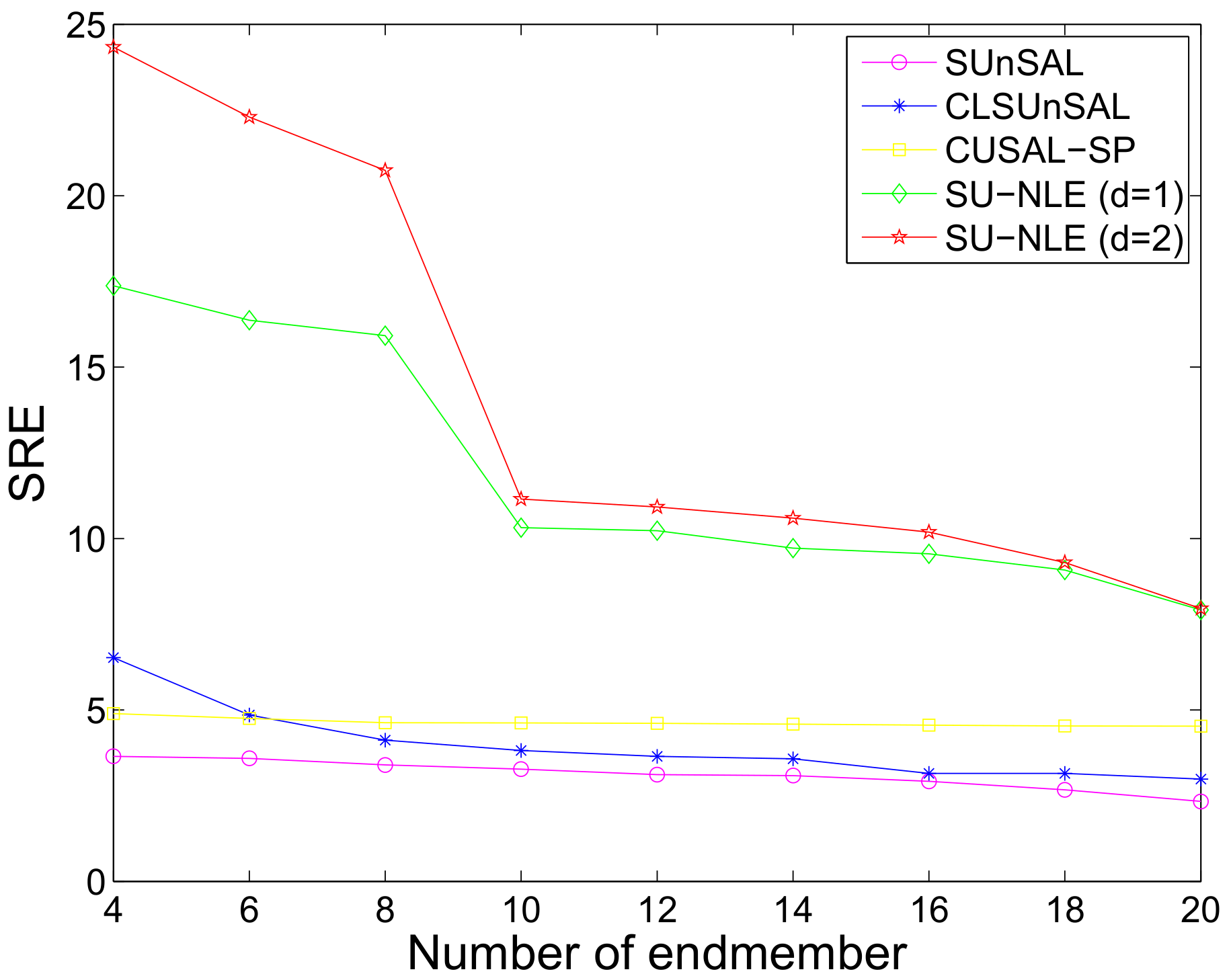

3.1.3. Simulated experiment III

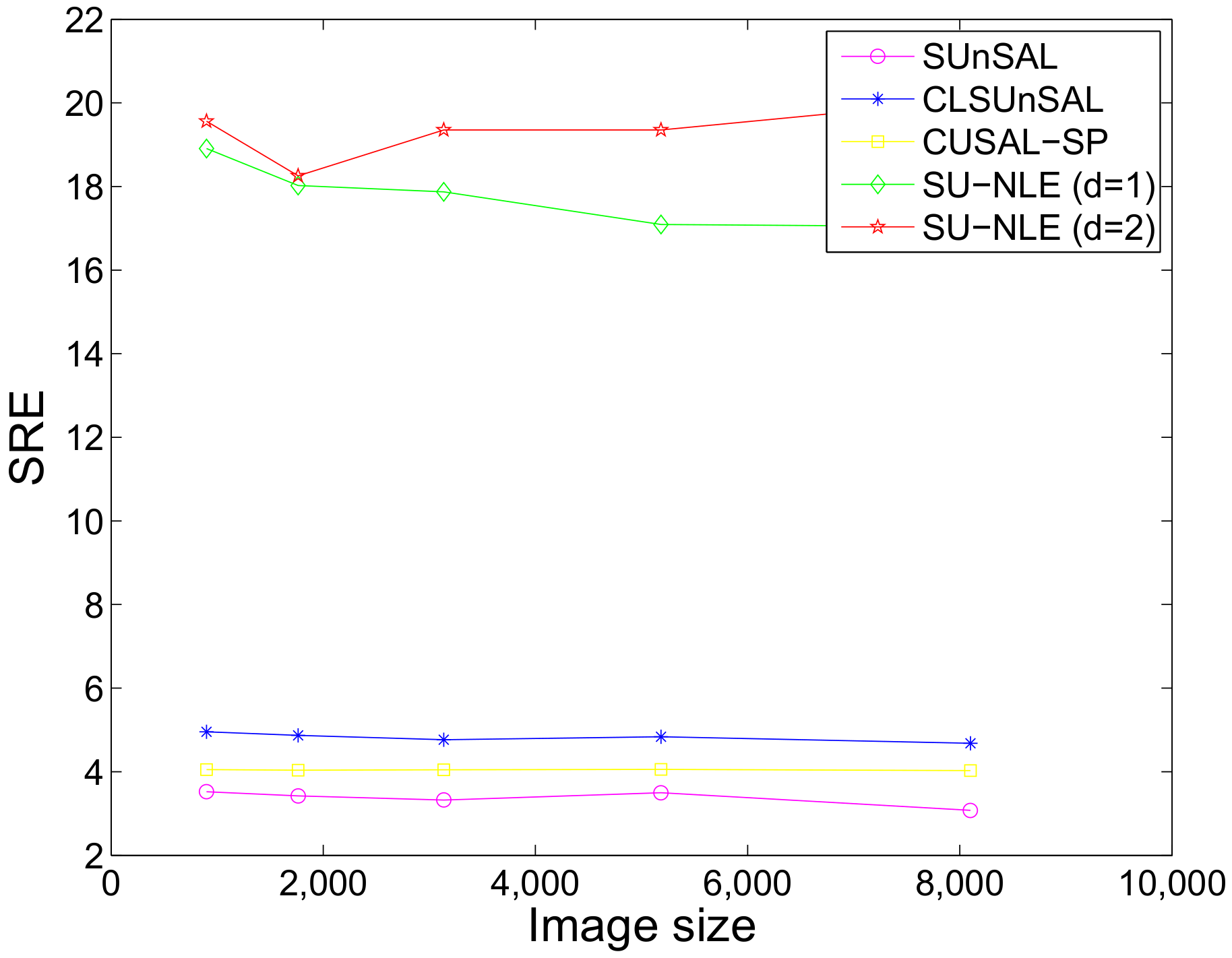

3.1.4. Simulated experiment IV

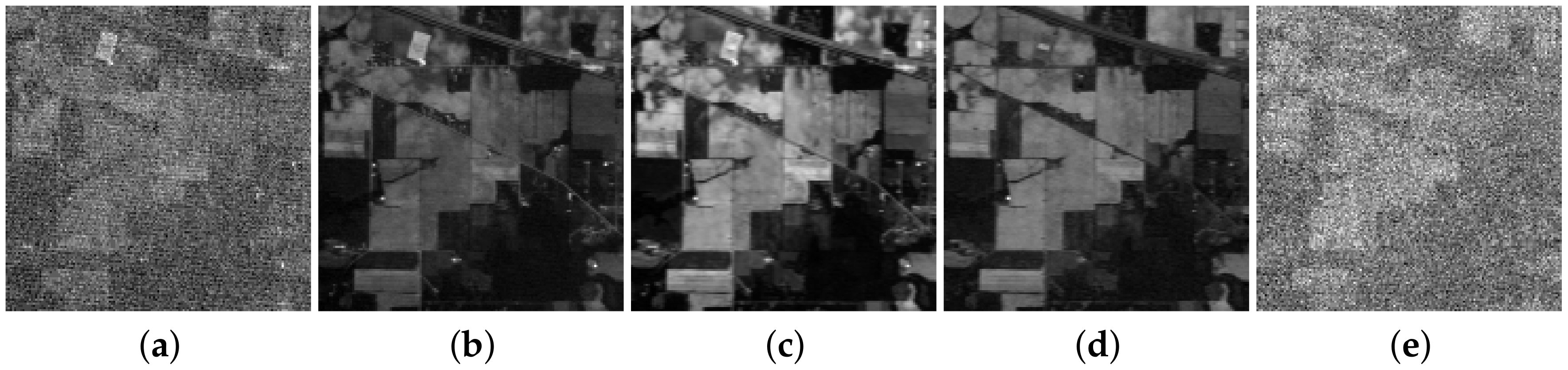

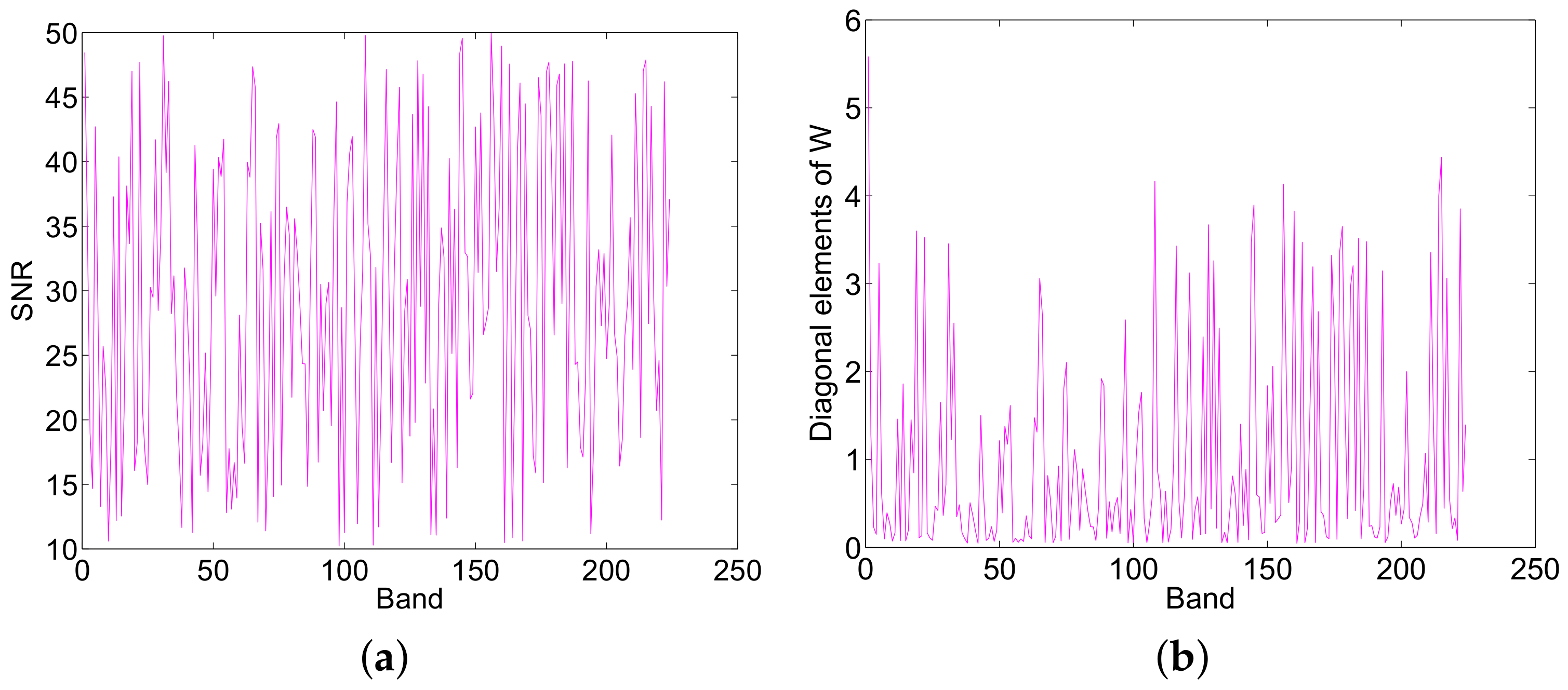

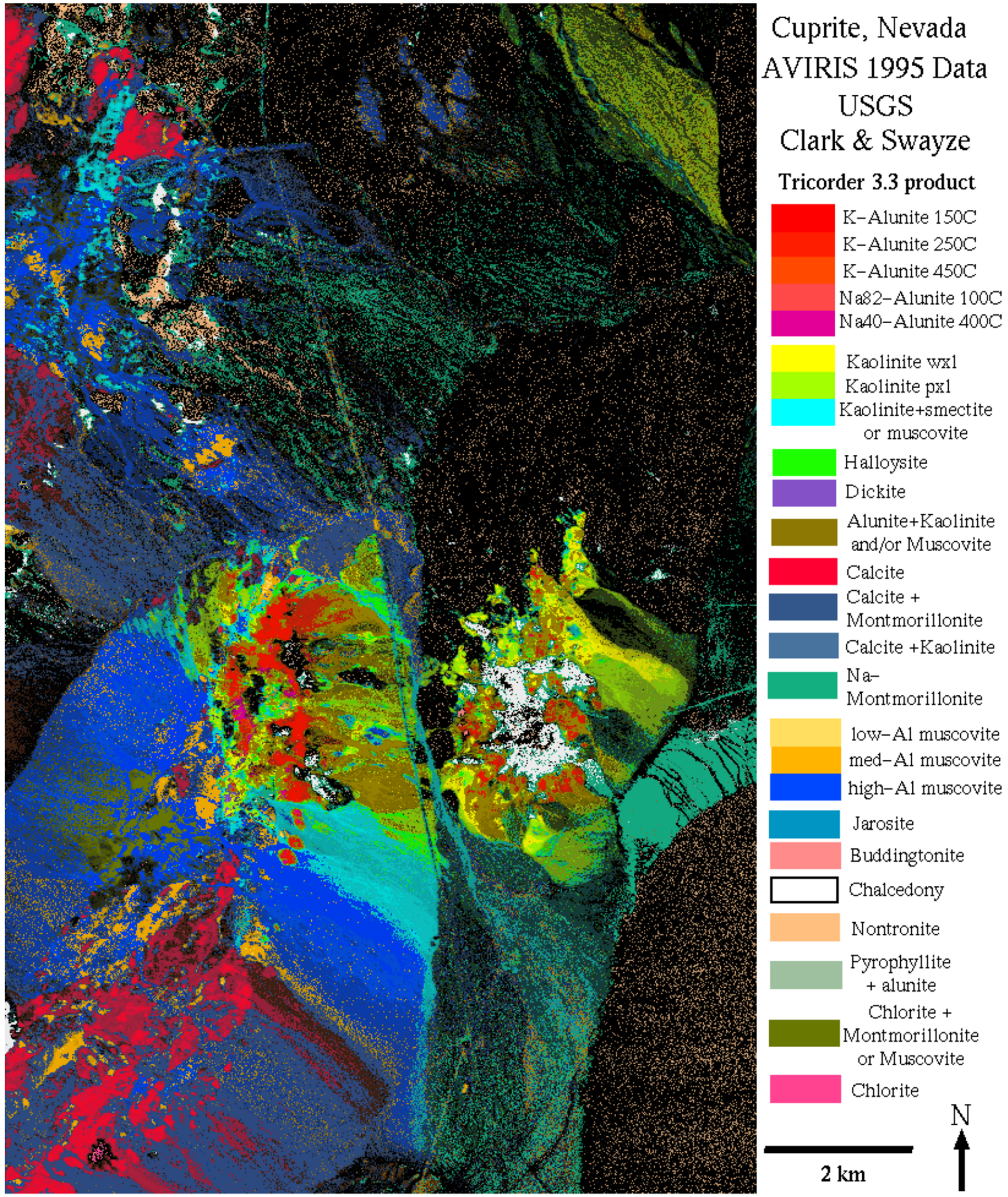

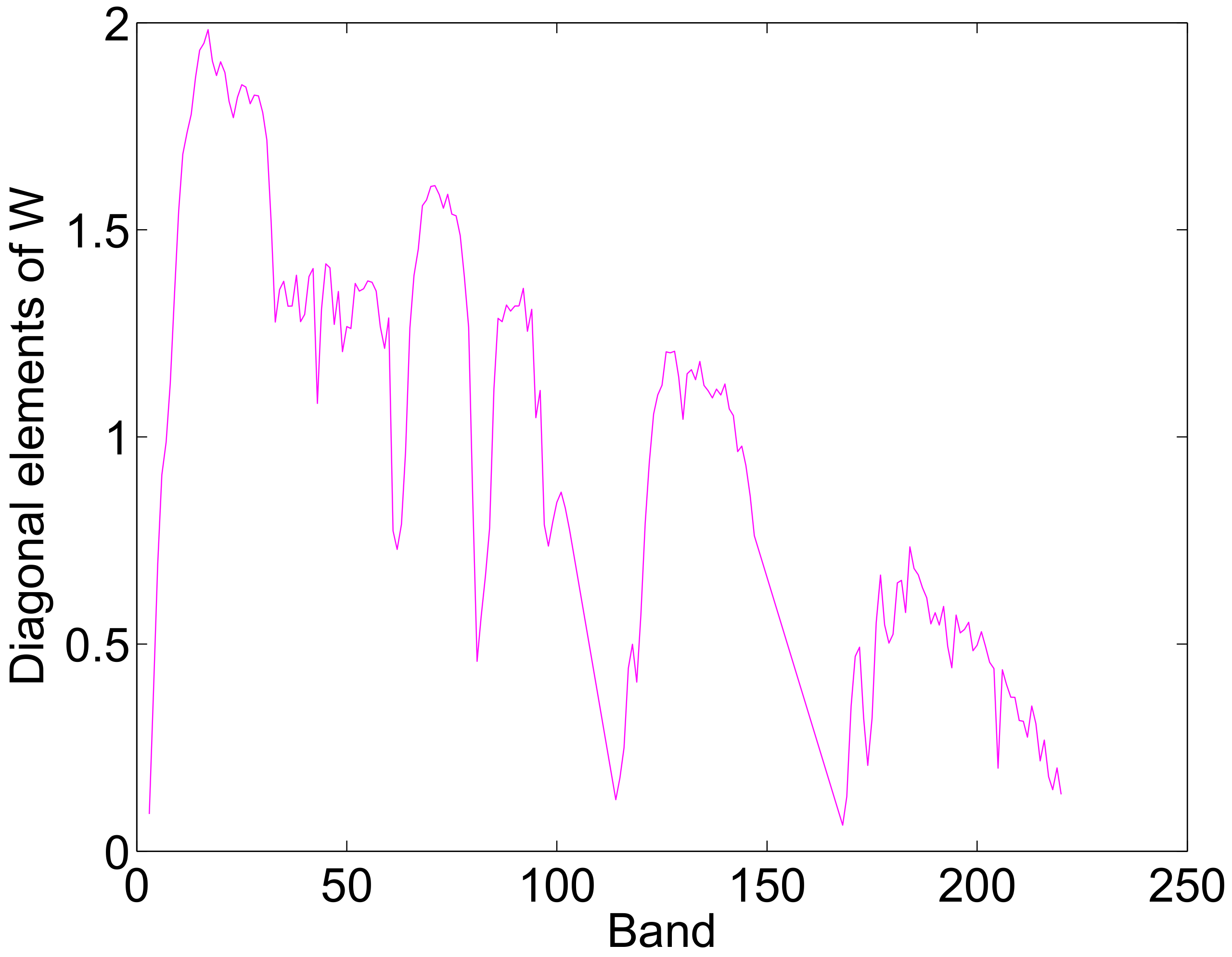

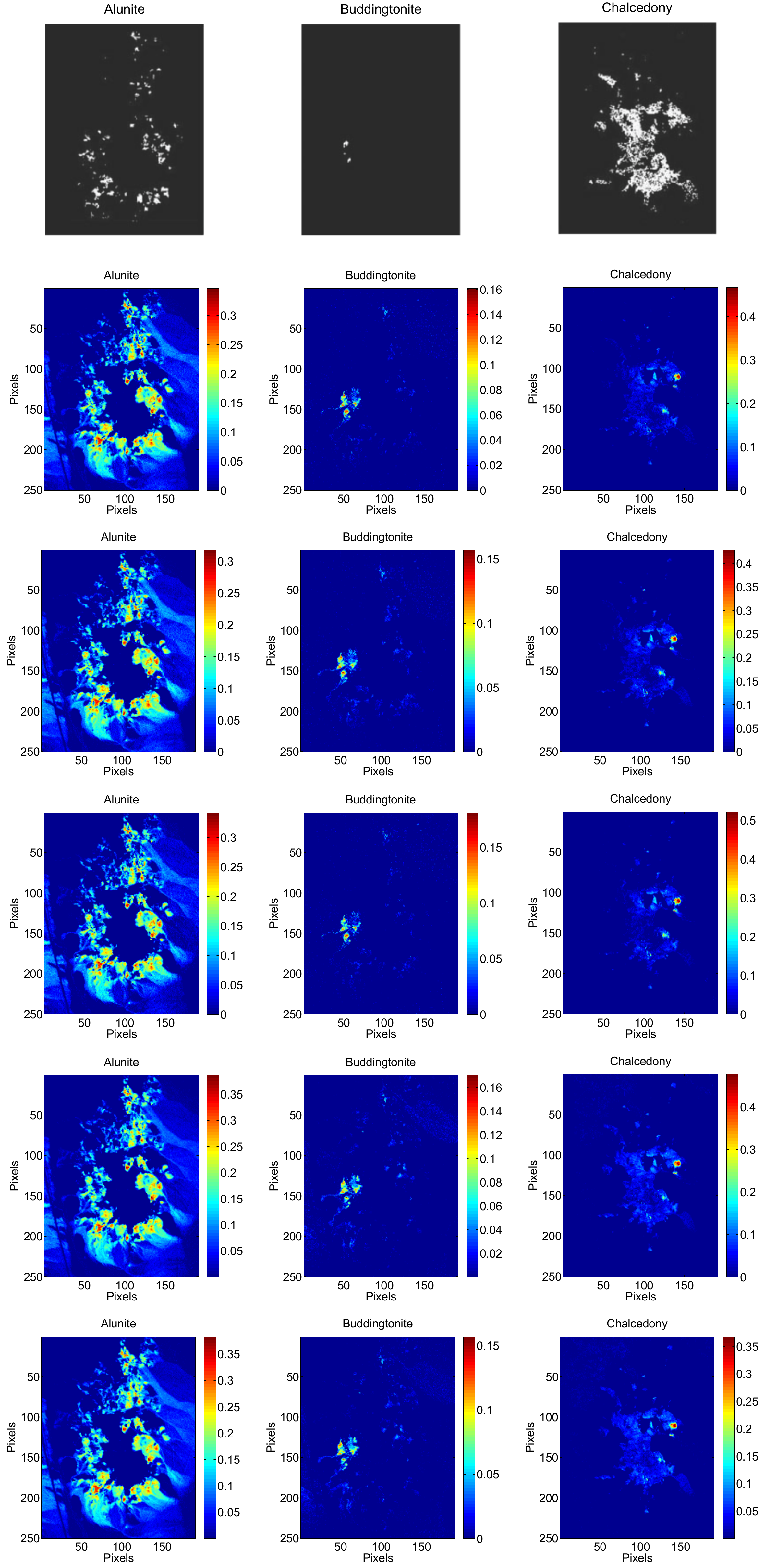

3.2. Experimental Results with Real Data

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| | Maxiter |
|---|---|
| {, , , , , 1, , , , , } | 1000 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Li, C.; Ma, Y.; Mei, X.; Fan, F.; Huang, J.; Ma, J. Sparse Unmixing of Hyperspectral Data with Noise Level Estimation. Remote Sens. 2017, 9, 1166. https://doi.org/10.3390/rs9111166
