Rolling Guidance Based Scale-Aware Spatial Sparse Unmixing for Hyperspectral Remote Sensing Imagery

Abstract

1. Introduction

2. Rolling Guidance Based Spatial Sparse Unmixing

2.1. Spatial Sparse Unmixing

2.2. Rolling Guidance Spatial Regularization Model

2.3. Rolling Guidance Based Spatial Sparse Unmixing

| Algorithm 1 |


3. Experiments and Analysis

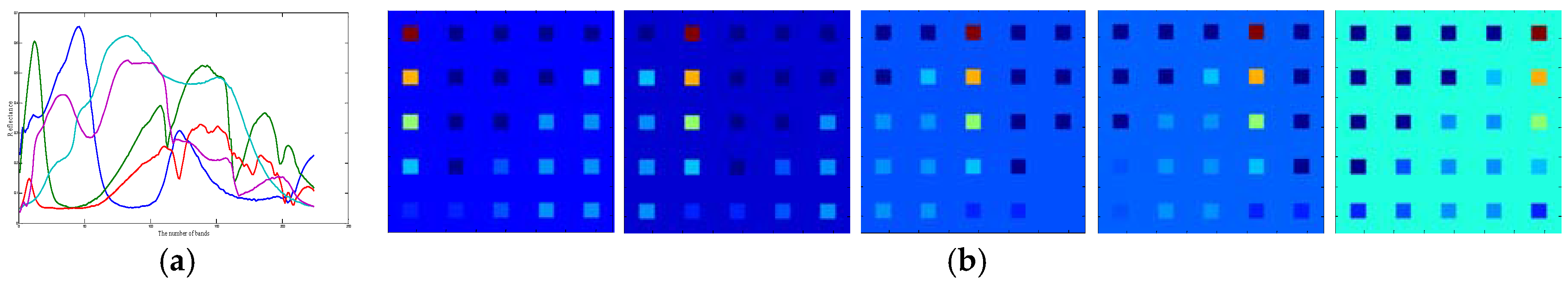

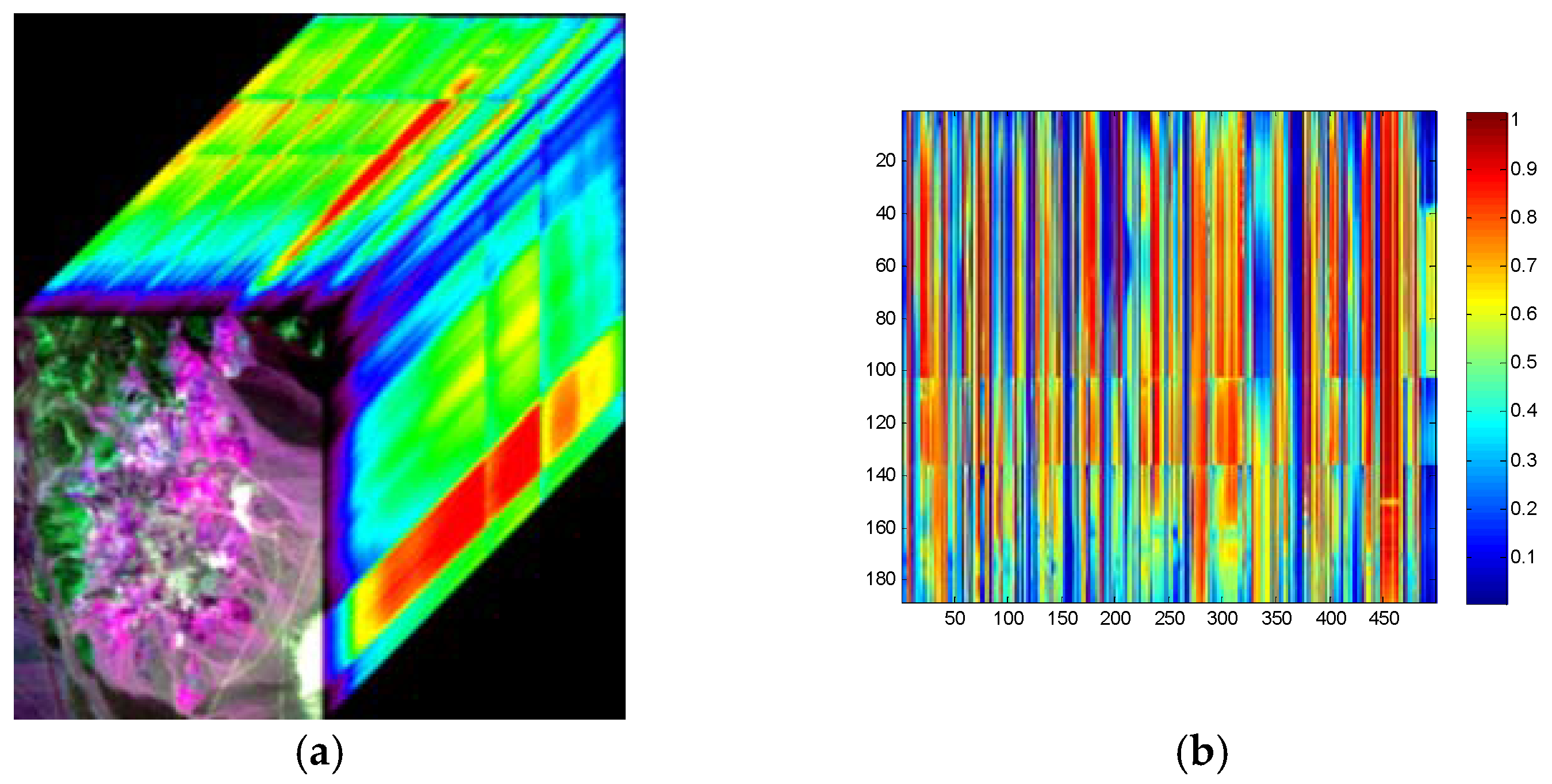

3.1. Experimental Datasets

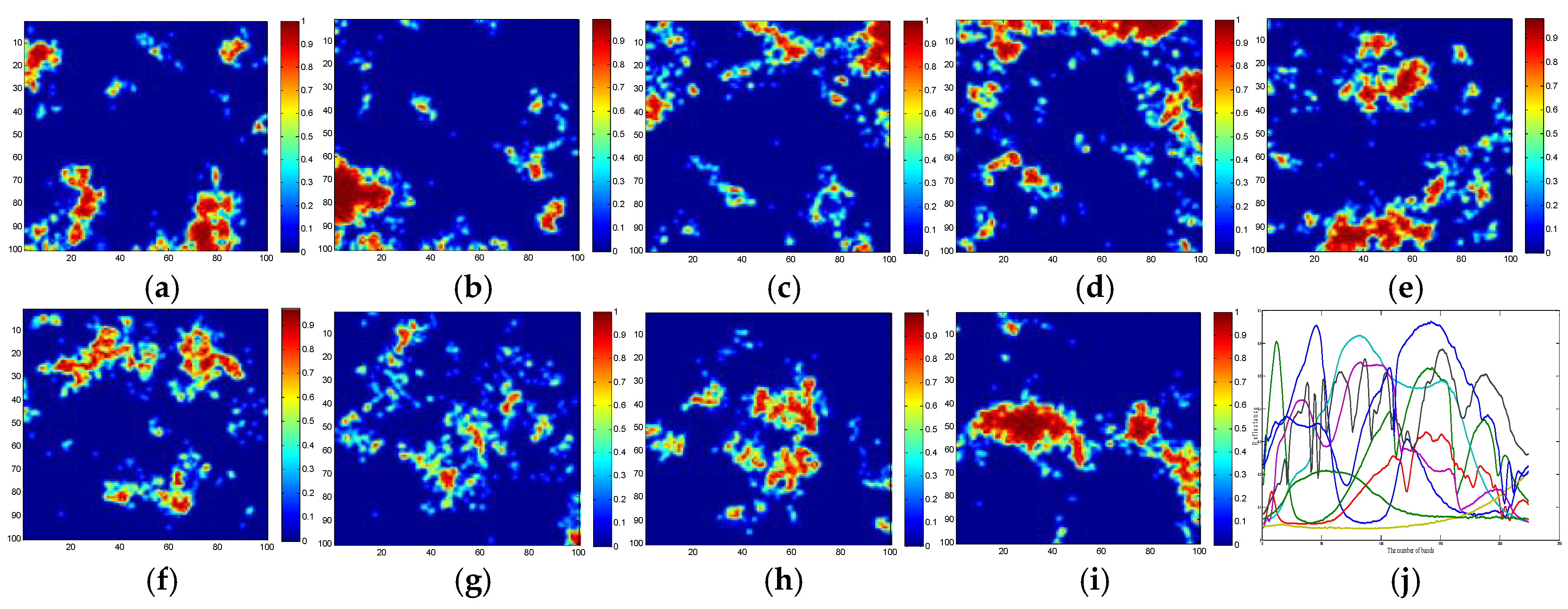

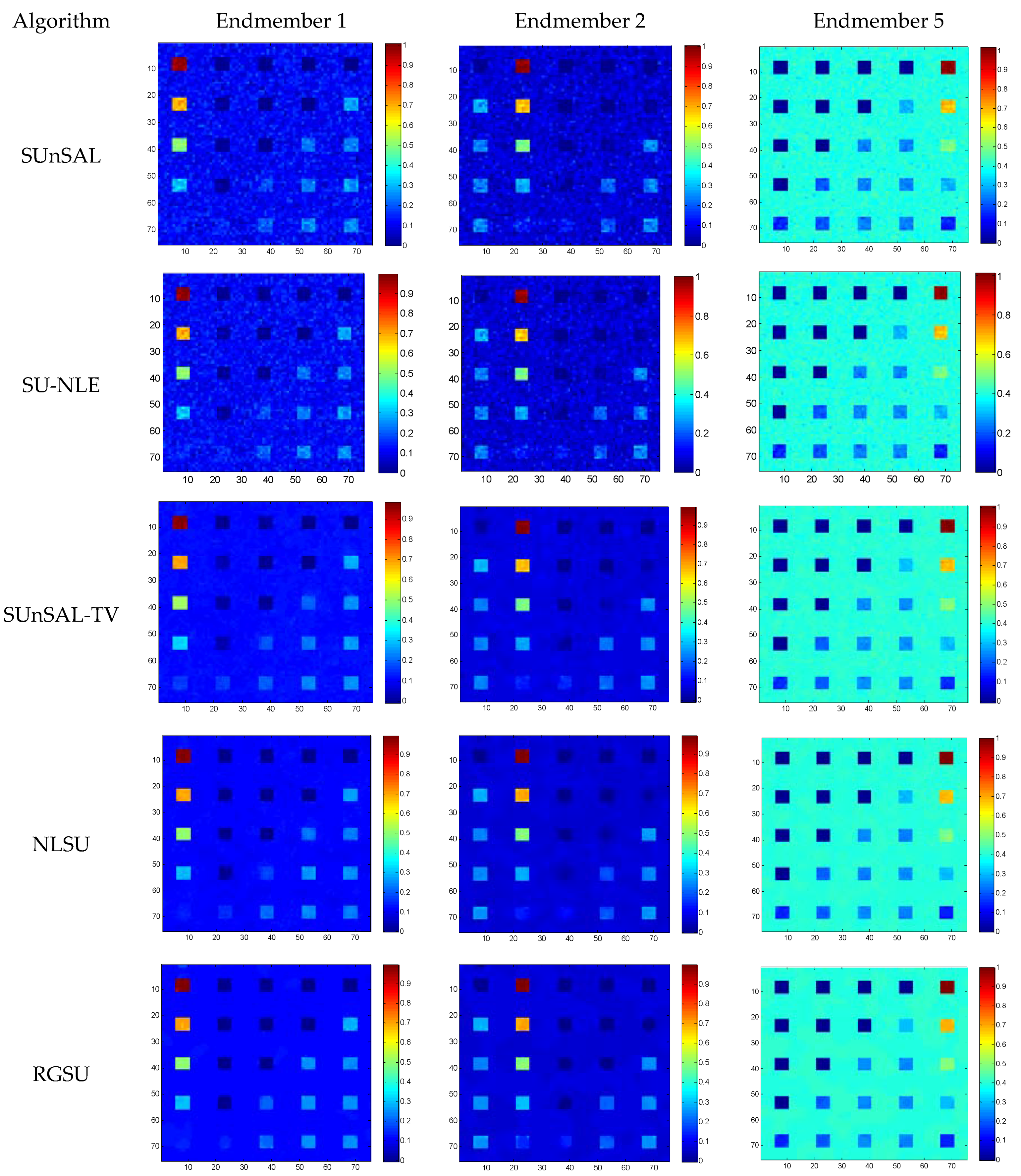

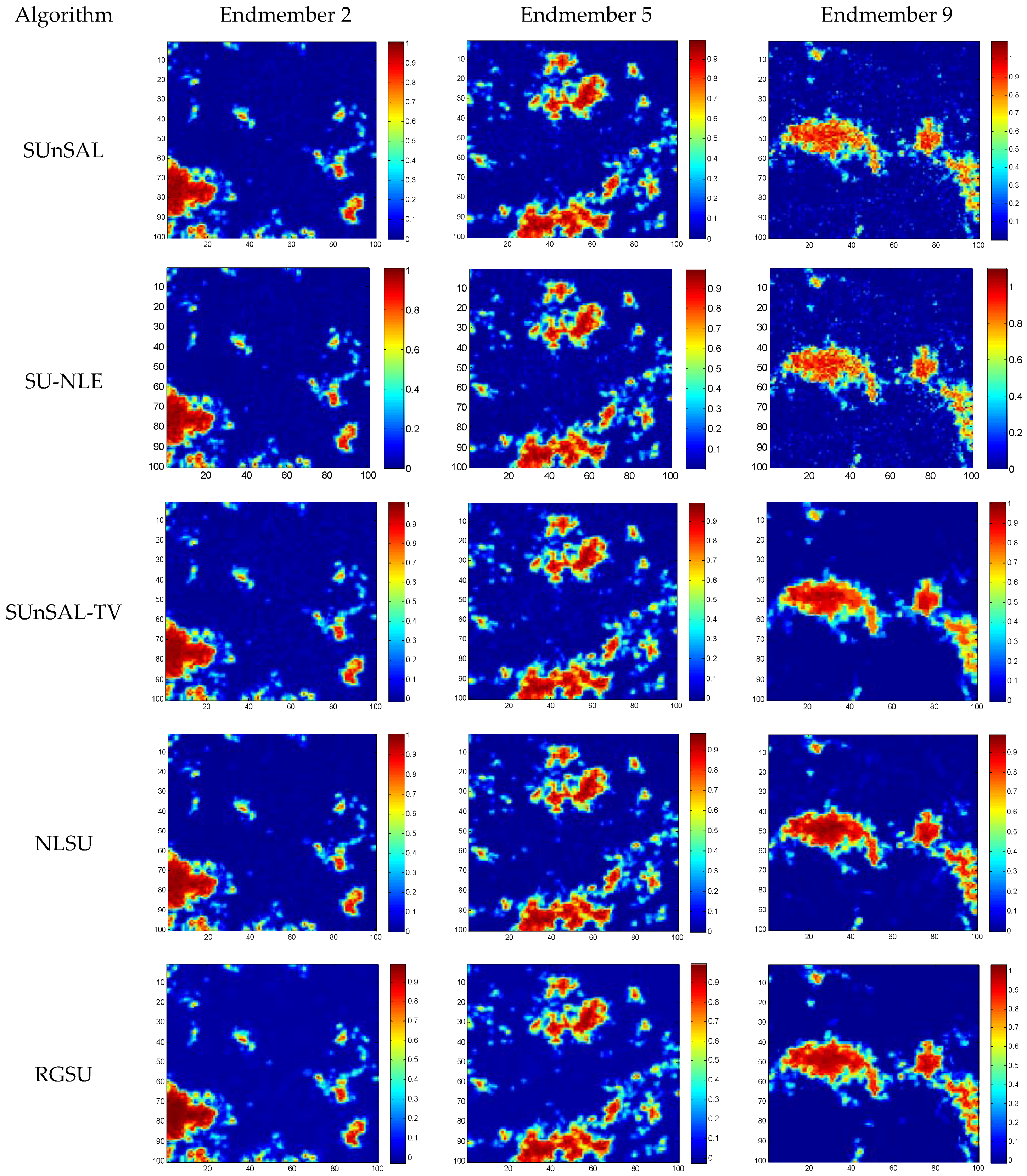

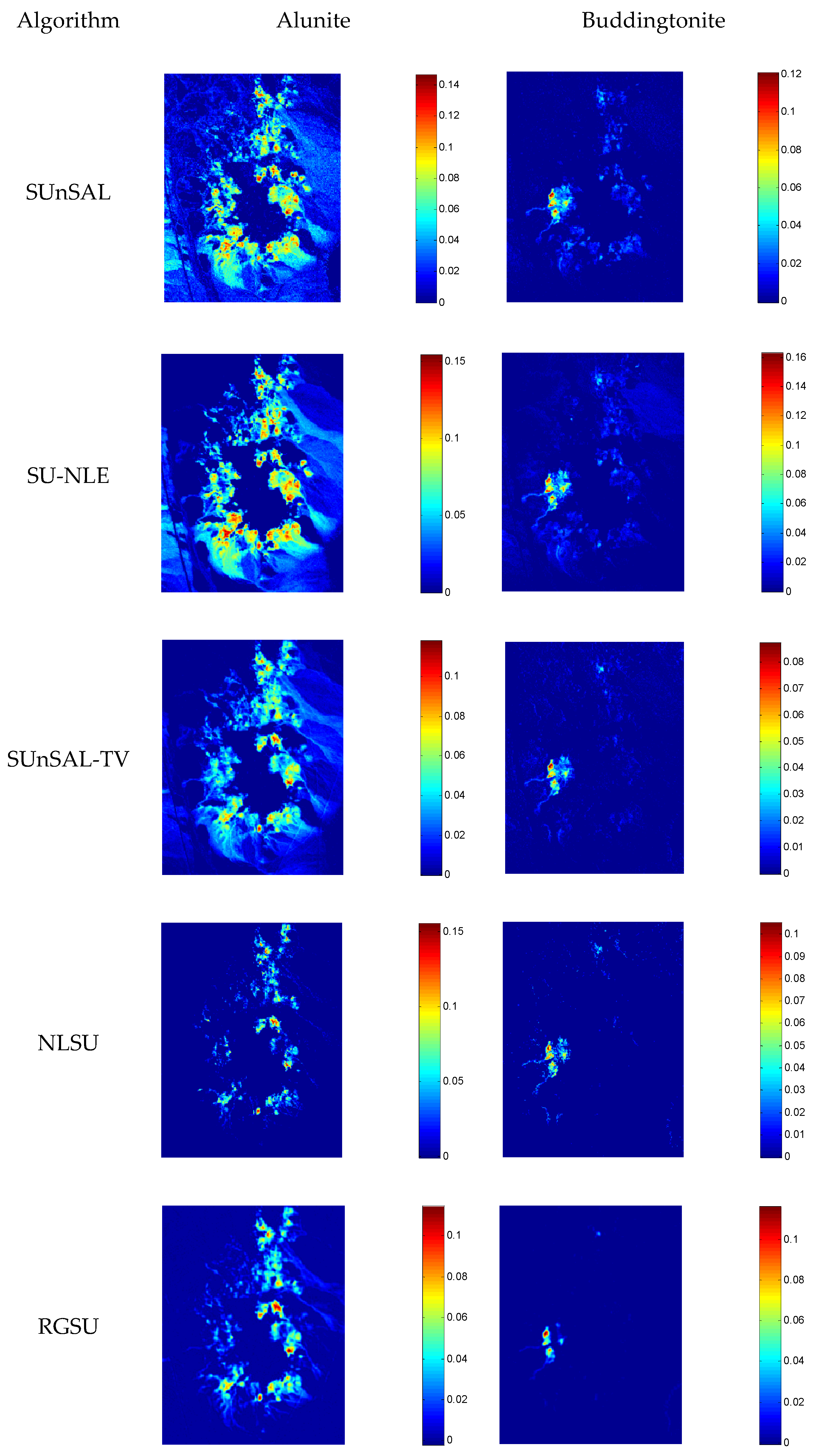

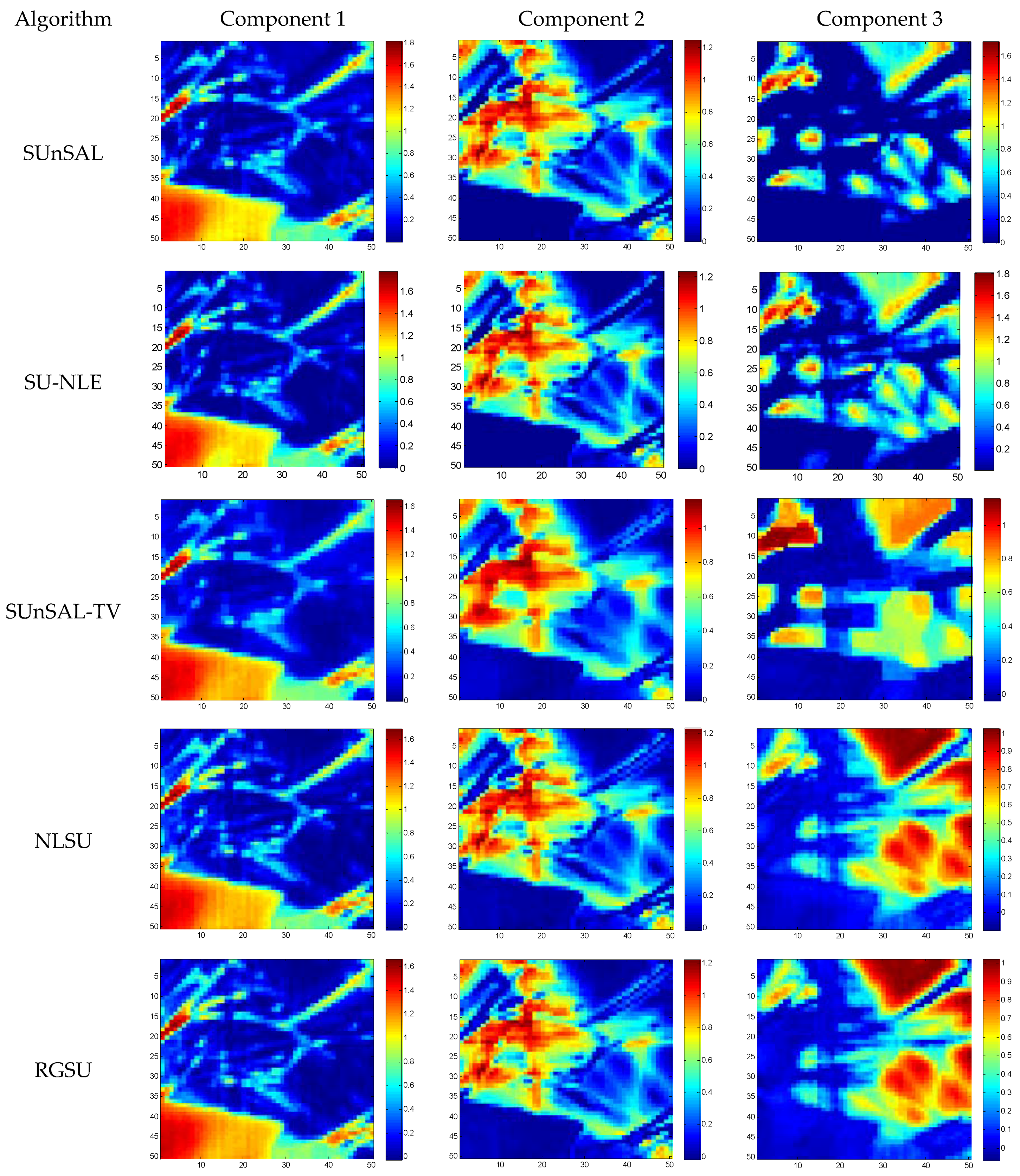

3.2. Results and Analysis

3.3. Sensitivity Analysis

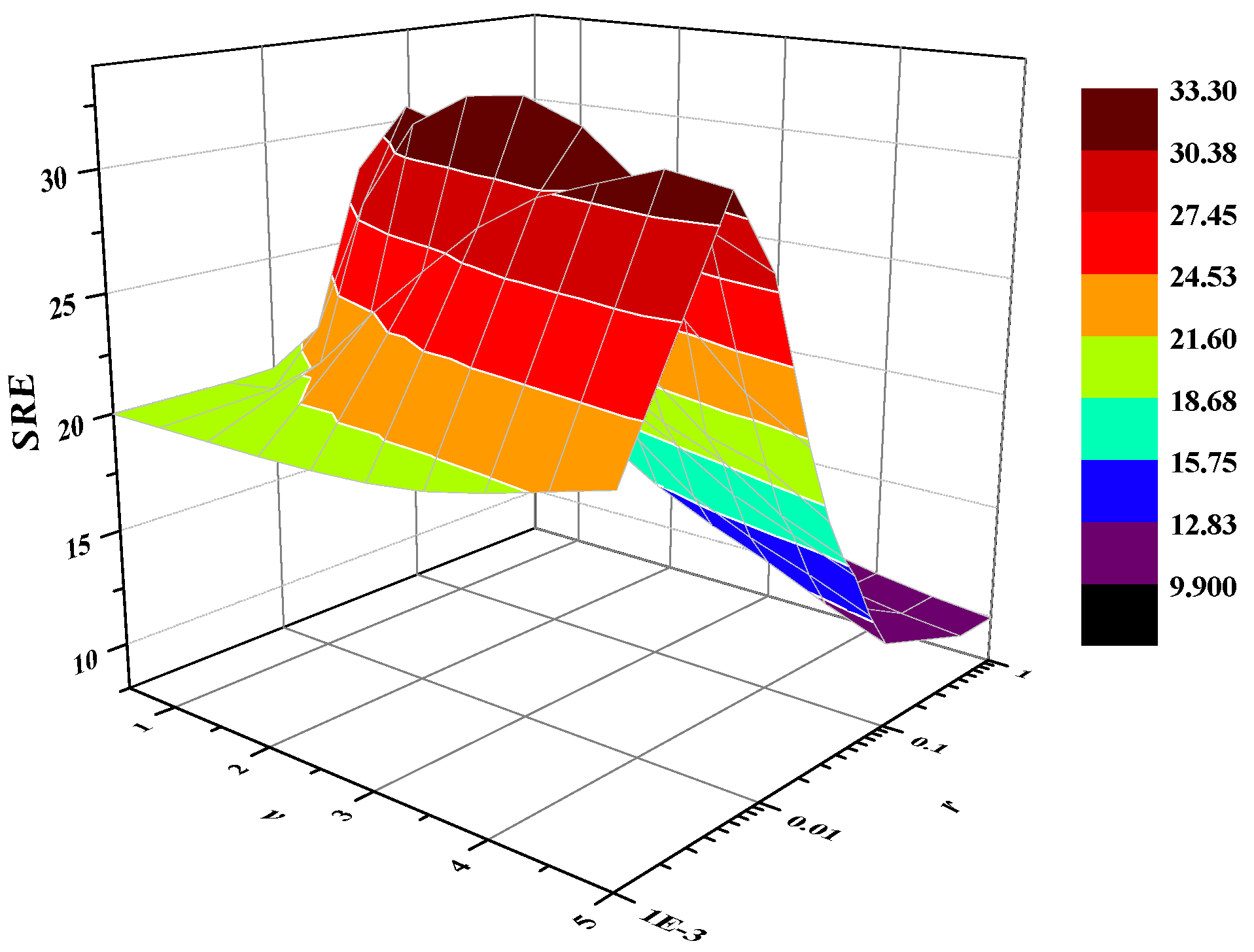

3.3.1. Discussion on Sensitivity Analysis for the Inner Parameters, v and r

3.3.2. Discussion on Sensitivity Analysis for the Regularization Parameters, λsps and λRG

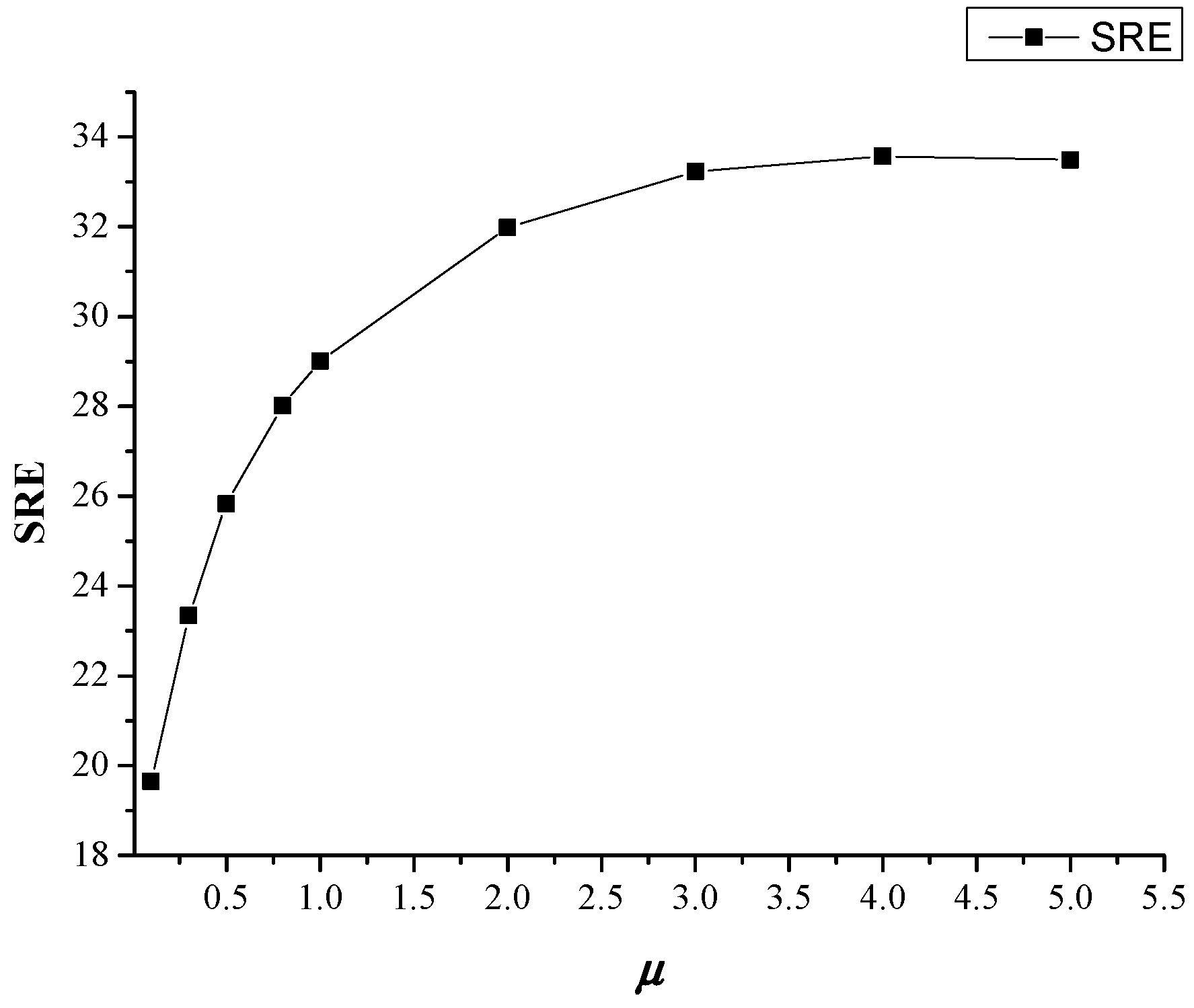

3.3.3. Discussion on Sensitivity Analysis for the Lagrangian Multiplier, μ

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Datasets | Simulated Dataset 1 | Simulated Dataset 2 | Cuprite Dataset | Nuance Data |
|---|---|---|---|---|
| Sensor | AVIRIS | AVIRIS | AVIRIS | Nuance NIR |
| Image size | 75 × 75 | 100 × 100 | 250 × 191 | 50 × 50 |
| Number of bands | 224 | 224 | 188 | 46 |
| SNR value (dB) | 30 | 30 | — | — |

| Data | Metric | SUnSAL | SU-NLE | SUnSAL-TV | NLSU | RGSU |
|---|---|---|---|---|---|---|
| Simulated dataset 1 | SRE (dB) | 15.1471 | 15.7062 | 25.8333 | 29.6743 | 33.3420 |
| Simulated dataset 1 | Time (s) | 0.4281 | 13.0313 | 30.4375 | 19.5000 | 47.5469 |
| Simulated dataset 2 | SRE (dB) | 15.8856 | 15.88 | 18.7186 | 21.3335 | 23.4529 |
| Simulated dataset 2 | Time (s) | 2.7813 | 50.9219 | 62.7969 | 39.3750 | 88.5000 |
| Nuance data | SRE (dB) | 4.9281 | 4.8570 | 5.3092 | 6.0017 | 6.0385 |
| Nuance data | Time (s) | 2.9219 | 3.6563 | 4.4531 | 12.2188 | 24.3146 |
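The SRE figures in the table above are given in decibels. As a minimal sketch, assuming the definition of signal-to-reconstruction error commonly used in the sparse unmixing literature, SRE = 10 log10(‖X‖² / ‖X − X̂‖²), where X holds the reference abundances and X̂ the estimated ones, the metric could be computed as follows. The function name `sre_db` and the nested-list input layout are illustrative assumptions, not taken from the article.

```python
import math


def sre_db(true_abund, est_abund):
    """Signal-to-reconstruction error (dB) between two abundance matrices.

    Both arguments are nested lists of equal shape
    (hypothetical layout: rows = endmembers, columns = pixels).
    """
    if len(true_abund) != len(est_abund):
        raise ValueError("abundance matrices must have the same shape")

    signal = 0.0  # accumulated energy of the reference abundances
    error = 0.0   # accumulated energy of the estimation error
    for row_true, row_est in zip(true_abund, est_abund):
        if len(row_true) != len(row_est):
            raise ValueError("abundance matrices must have the same shape")
        for x, x_hat in zip(row_true, row_est):
            signal += x * x
            error += (x - x_hat) ** 2

    if signal == 0.0:
        raise ValueError("reference abundances are all zero")
    if error == 0.0:
        return math.inf  # perfect reconstruction
    return 10.0 * math.log10(signal / error)


# Toy example with two endmembers and two pixels (made-up numbers).
reference = [[0.6, 0.2], [0.4, 0.8]]
estimate = [[0.5, 0.2], [0.5, 0.8]]
print(round(sre_db(reference, estimate), 2))
```

For the toy input the signal energy is 1.2 and the error energy is 0.02, so the result is 10 log10(60), roughly 17.78 dB. A higher SRE means the estimated abundances are closer to the reference, which is how the SRE rows of the table are read.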

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Feng, R.; Zhong, Y.; Wang, L.; Lin, W. Rolling Guidance Based Scale-Aware Spatial Sparse Unmixing for Hyperspectral Remote Sensing Imagery. Remote Sens. 2017, 9, 1218. https://doi.org/10.3390/rs9121218
