1. Introduction

Land use and land cover (LULC) are fundamental characteristics of the Earth’s system, intimately connected with many human activities and the physical environment [1]. Information on LULC is of key importance for mapping and restoring environmentally or ecologically protected ecosystems and native habitats (Council Directive, 92/43/EEC, 1992). Wetlands in particular are among the world’s most important and productive ecosystems, playing a critical role in climate change, biodiversity, hydrology, and human health [2,3]. Wetlands include permanent water bodies, lands that remain completely dry over several months, and areas where water lies below a dense vegetation cover, such as peat bogs or mangroves [4]. They also include important, complex natural habitat types such as freshwater marshes and riverine forests, scrublands, as well as agricultural landscapes [5]. Although freshwater wetlands cover only 1% of the Earth’s surface, they provide shelter to over 40% of the world’s flora and fauna species [6]. As such, wetlands are internationally recognized as an indispensable resource for humans [2], providing a wide range of water-dependent services, such as freshwater, agricultural production, fisheries and tourism [7].

Despite their importance, wetlands are among the most threatened ecosystems due to anthropogenic factors such as intensive agricultural production, irrigation, water extraction for domestic and industrial use, urbanization, infrastructure, industrial development and pollution [7,8,9]. Many wetlands are under pressure from natural and anthropogenic climate change (namely, changes in rainfall patterns and temperature and extreme events [9], as well as changes in land use brought by increasing populations and urban expansion). Environmental concerns about the degradation of wetlands came to the fore during the Ramsar Convention [8]. It is estimated that 50% of the world’s wetlands have disappeared over the last century, with the rate of loss 3.7 times faster during the 20th and 21st centuries [10]. Mapping and monitoring their dynamics over time is thus of crucial importance, both in its own right and in the broader context of quantifying the temporal and spatial patterns of LULC and its changes [11].

Earth Observation (EO) offers repeated and frequent coverage of the Earth’s surface over long time periods, which is ideal for monitoring wetlands [8]. This has made EO the preferred method for natural resource managers and researchers [12]. Identifying and characterizing key resource attributes allows resource managers to monitor landscape dynamics over large areas, including those where access is difficult or hazardous, and also facilitates the extrapolation of expensive ground measurements for monitoring and management [13]. LULC mapping using satellite or airborne images allows for short- or long-term change detection and monitoring in such vulnerable habitats [14,15,16].

Synthetic Aperture Radar (SAR) imagery has been highly effective in wetland mapping and, although it is less frequently used in land-cover classification studies than optical data, it can be an important alternative or complementary data source [17]. Synergistic use of optical data with SAR imagery may enhance wetland-related information, because SAR provides data associated with inundation level, biomass, and soil moisture, complementary to the information from optical sensors [18]. In this context, Sentinel-1 offers dual-polarimetric C-band data and Sentinel-2 offers a wide range of high-resolution spectral bands; testing their synergistic capability is therefore of crucial importance.

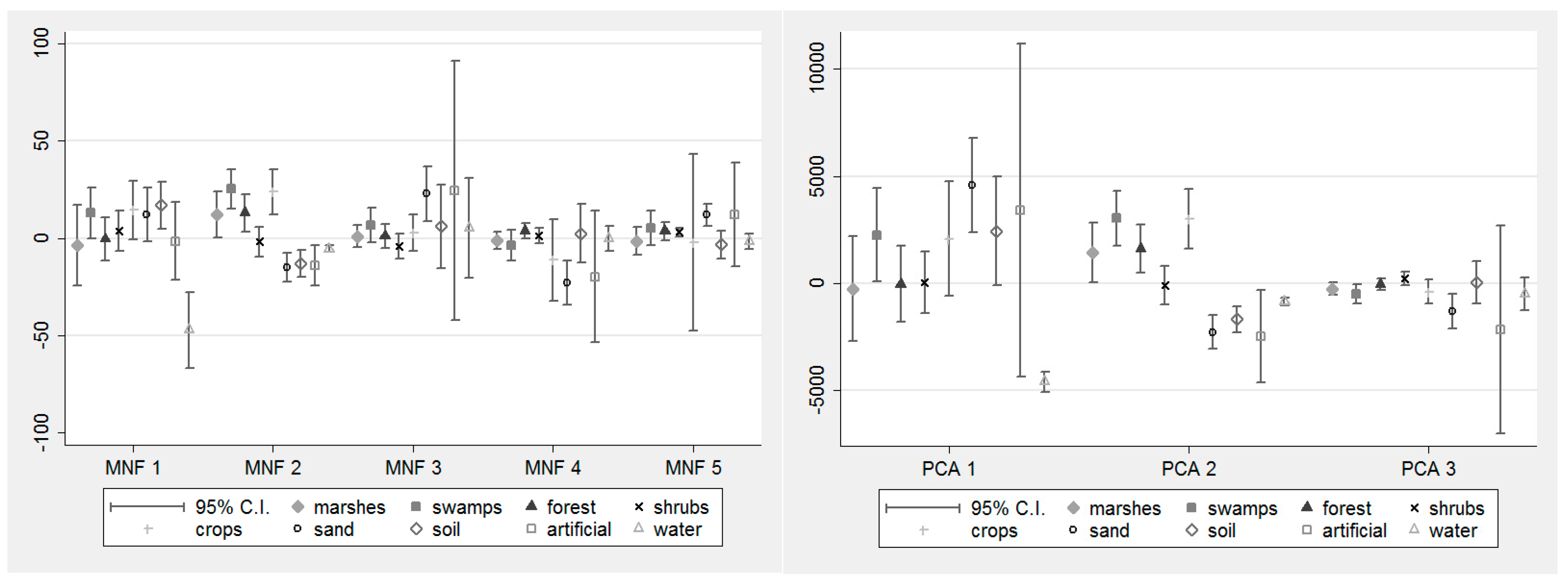

Many studies have focused on mapping wetlands utilizing different band manipulation methods (e.g., Tasseled Cap (TC), Normalized Difference Water Index (NDWI), and Normalized Difference Vegetation Index (NDVI)) and image analysis techniques (e.g., Principal Component Analysis (PCA) and Minimum Noise Fraction (MNF)) to assist image classification [19,20,21,22]. Thus far, most studies have been based on a single-date image, neglecting the seasonal phenology that a lake can have [8]. Recent studies have explored the use of advanced machine learning classification algorithms, such as Support Vector Machines (SVMs), random forests (RFs), decision trees (DTs) and artificial neural networks (ANNs), for LULC mapping [15,16,17,23,24,25,26,27,28,29,30].
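As an illustration of the band manipulation methods mentioned above, the following is a minimal sketch of how NDVI and NDWI can be computed from reflectance arrays. It assumes the common formulations NDVI = (NIR − Red)/(NIR + Red) and NDWI = (Green − NIR)/(Green + NIR); the text does not state which NDWI variant the cited studies used, so this choice is an assumption. The helper names and the toy reflectance values are hypothetical.

```python
import numpy as np


def normalized_difference(a, b):
    """Return (a - b) / (a + b), with 0 wherever the denominator is 0."""
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    denom = a + b
    out = np.zeros_like(denom)
    # Only divide where the denominator is non-zero (e.g., skip no-data pixels).
    np.divide(a - b, denom, out=out, where=denom != 0)
    return out


def ndvi(nir, red):
    """Normalized Difference Vegetation Index."""
    return normalized_difference(nir, red)


def ndwi(green, nir):
    """Normalized Difference Water Index (green/NIR formulation, assumed)."""
    return normalized_difference(green, nir)


# Toy 2x2 reflectance arrays (made-up values, not from the study).
green = np.array([[0.10, 0.08], [0.06, 0.0]])
red = np.array([[0.05, 0.04], [0.03, 0.0]])
nir = np.array([[0.45, 0.02], [0.03, 0.0]])

vegetation_index = ndvi(nir, red)
water_index = ndwi(green, nir)
```

For Sentinel-2, the green, red and near-infrared inputs would typically be bands B3, B4 and B8, all delivered at 10 m resolution. High NDVI values indicate dense green vegetation, while positive NDWI values tend to indicate open water.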

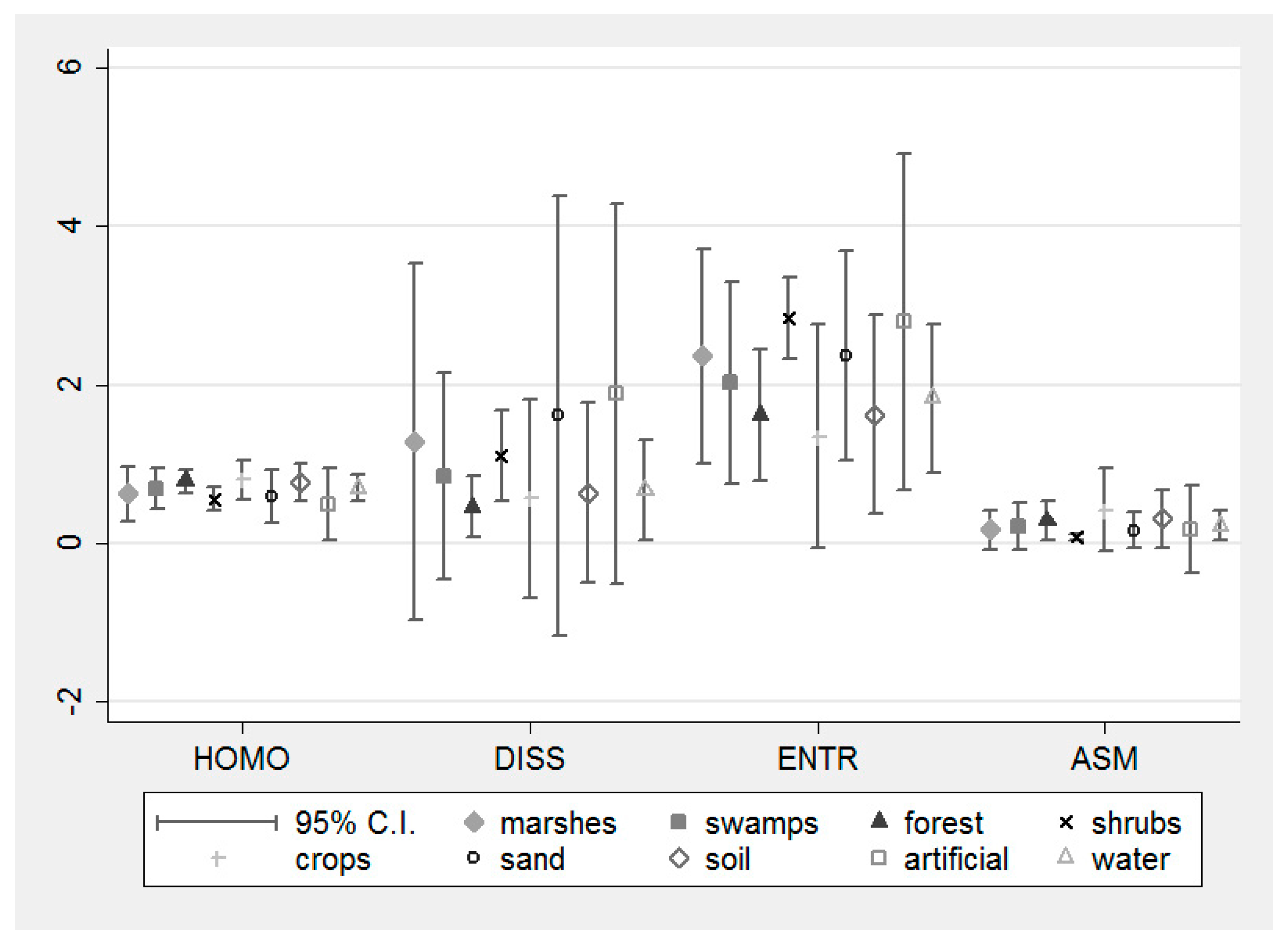

Although spectral information about ground objects is important for extracting information from remote sensing data, previous studies have indicated that texture features are also important for differentiating wetland classes [15,16,31,32,33,34,35]. Spectral features describe the tonal variations in different bands, whereas texture features describe the spatial distribution of those tonal values [36]. The most commonly used texture features are first-order measurements (minimum, maximum, mean, range, standard deviation, skewness, and kurtosis) and second-order measurements (mean, angular second moment, contrast, correlation, homogeneity, dissimilarity, variance, and entropy). First-order statistics quantify the distribution properties of the image’s spectral tone for a given neighborhood, while second-order statistics capture the frequency of co-occurring gray-level values and are calculated from the gray-level co-occurrence matrix (GLCM) [15].
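To make the second-order measurements concrete, the sketch below builds a normalized GLCM for a single pixel offset and derives a few of the listed features from it. It is an illustrative NumPy implementation under stated assumptions (integer gray levels already quantized to `levels` values, one offset, a symmetric matrix), not the processing chain used in this study. The function names are hypothetical.

```python
import numpy as np


def glcm(image, levels, dx=1, dy=0, symmetric=True):
    """Normalized gray-level co-occurrence matrix for one pixel offset (dy, dx)."""
    img = np.asarray(image)
    rows, cols = img.shape
    # Slice bounds so that every reference pixel has a neighbour inside the image.
    r0, r1 = max(0, -dy), min(rows, rows - dy)
    c0, c1 = max(0, -dx), min(cols, cols - dx)
    ref = img[r0:r1, c0:c1].ravel()
    nbr = img[r0 + dy:r1 + dy, c0 + dx:c1 + dx].ravel()
    counts = np.zeros((levels, levels), dtype=np.float64)
    # Accumulate one count per (reference, neighbour) gray-level pair.
    np.add.at(counts, (ref, nbr), 1.0)
    if symmetric:
        counts += counts.T
    return counts / counts.sum()


def texture_features(p):
    """A few second-order texture measures from a normalized GLCM p."""
    i, j = np.indices(p.shape)
    nonzero = p[p > 0]
    return {
        "asm": float(np.sum(p ** 2)),
        "contrast": float(np.sum(p * (i - j) ** 2)),
        "dissimilarity": float(np.sum(p * np.abs(i - j))),
        "homogeneity": float(np.sum(p / (1.0 + (i - j) ** 2))),
        "entropy": float(-np.sum(nonzero * np.log(nonzero))),
    }


# Small 4x4 test image with four gray levels.
image = np.array([
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 2, 2, 2],
    [2, 2, 3, 3],
])

p = glcm(image, levels=4, dx=1, dy=0)
features = texture_features(p)
```

In practice the matrix is computed in a moving window around each pixel, often for several offsets and directions, so that each feature becomes an additional image layer for the classifier.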

The recent growth of EO technology has resulted in the launch of sophisticated instruments such as those of the Sentinel series from the European Space Agency (ESA). This has opened up opportunities for developing new techniques that aim to improve our ability to map wetland ecosystems. The Sentinel missions are part of the Copernicus Programme for monitoring climate change, natural resources management, civil protection and disaster management. Copernicus open data are available at the Copernicus Open Access Hub (https://scihub.copernicus.eu/). Sentinel-1 (S1) is a Synthetic Aperture Radar (SAR) mission, providing data regardless of weather conditions and cloud coverage. Sentinel-2 (S2) is a land monitoring mission, also part of the Copernicus Programme, that provides high-resolution optical imagery for terrestrial observations in support of land services. The mission provides global coverage of the Earth’s land surface every five days at spatial resolutions of 10, 20 and 60 m, making the data of great use in studies related to LULC mapping and the quantification of its changes. The Sentinel satellites can play a vital role in future land surface monitoring programs. Exploring and evaluating the use of Sentinel data for wetland mapping is thus a thematic area of key interest and priority. It is therefore important to investigate the discrimination capabilities offered by S1 and S2 and their effectiveness for wetland mapping when combined with contemporary classification algorithms (e.g., SVMs).

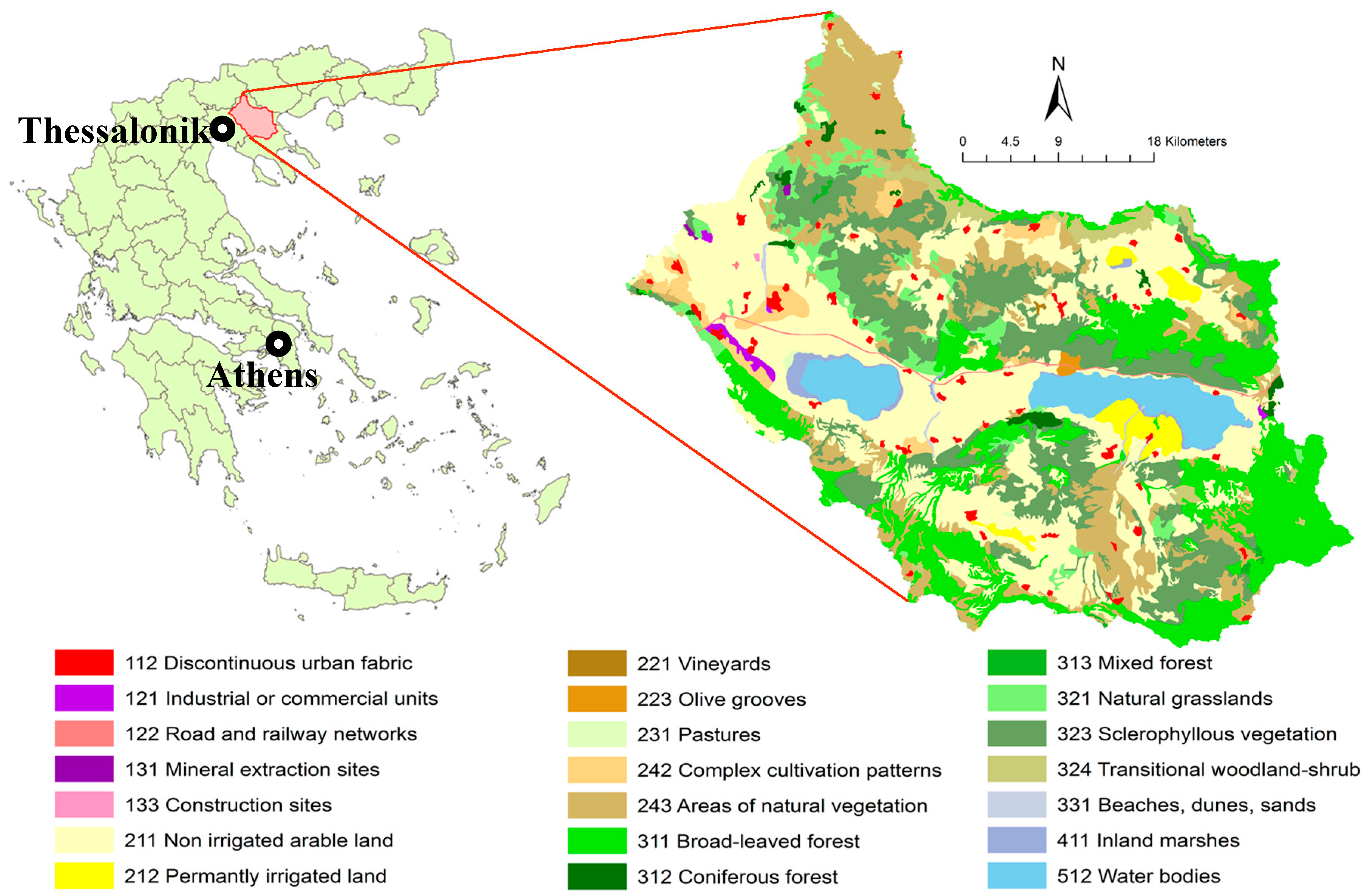

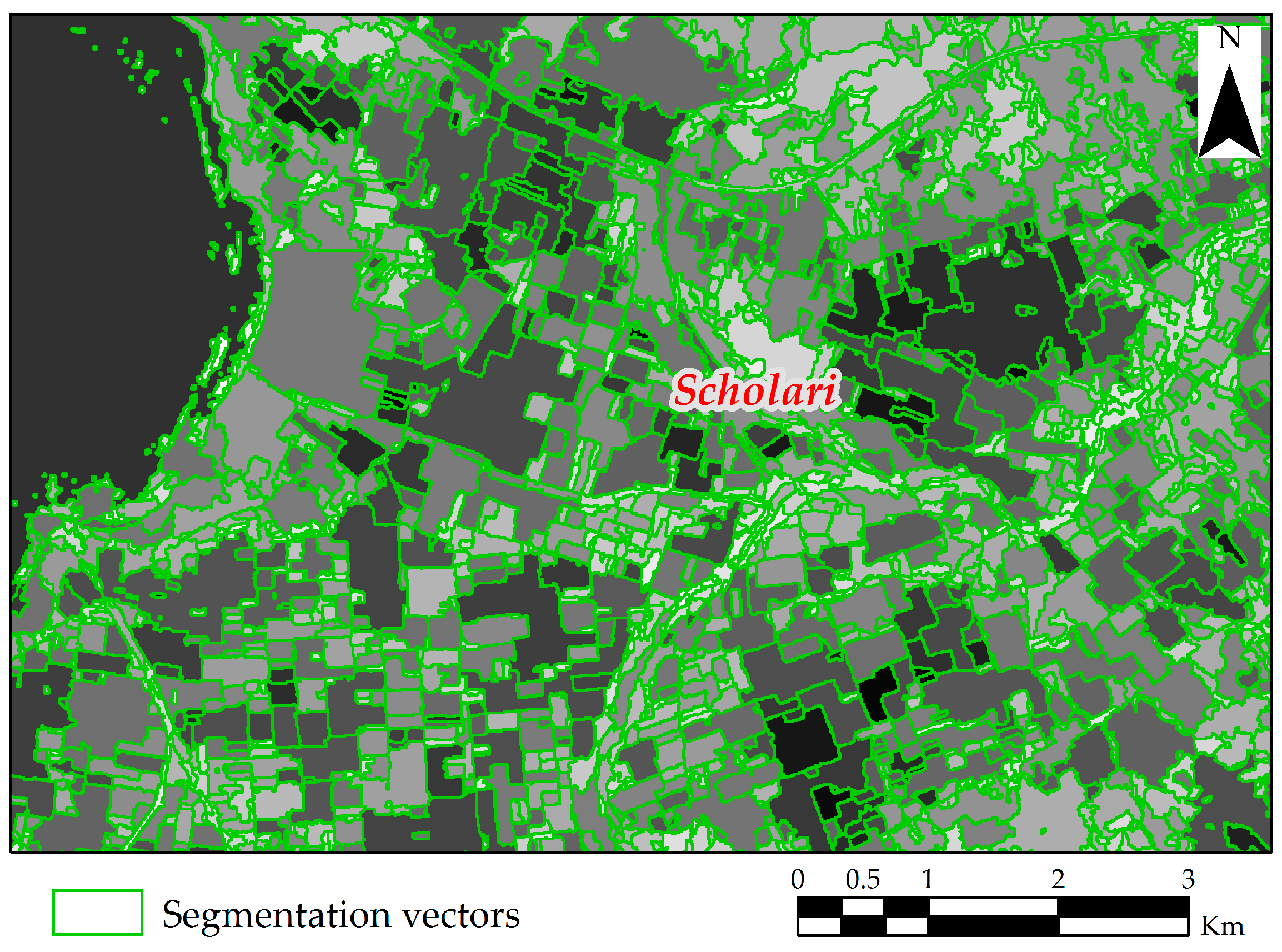

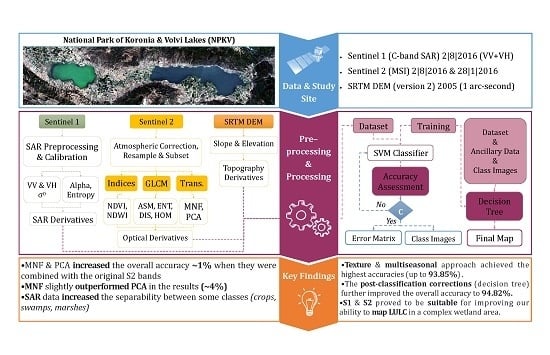

This study explores the synergistic use of a range of spectral information products derived from Sentinel-1 and Sentinel-2 with the SVMs classifier, evaluating their ability to map a complex area containing wetland and non-wetland LULC classes. In particular, the study objectives are: (1) to analyze a number of secondary derivatives produced from S2 data with the SVMs to evaluate their added value in mapping LULC, and specifically wetlands; and (2) to investigate the suitability of S2 data, and of their synergistic use with S1 data, with contemporary LULC mapping techniques (SVMs and knowledge rules) for LULC mapping with emphasis on wetlands.

6. Discussion

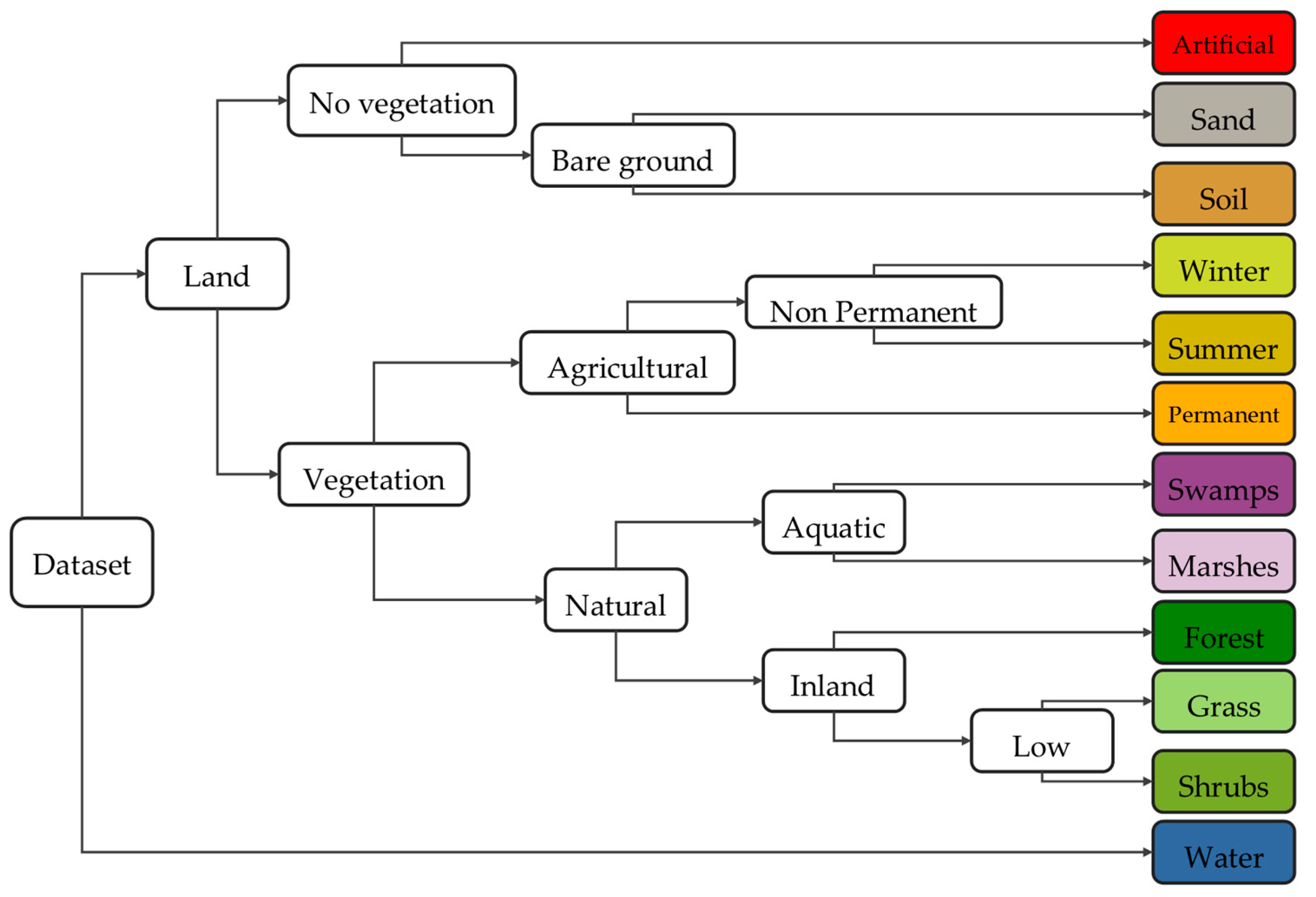

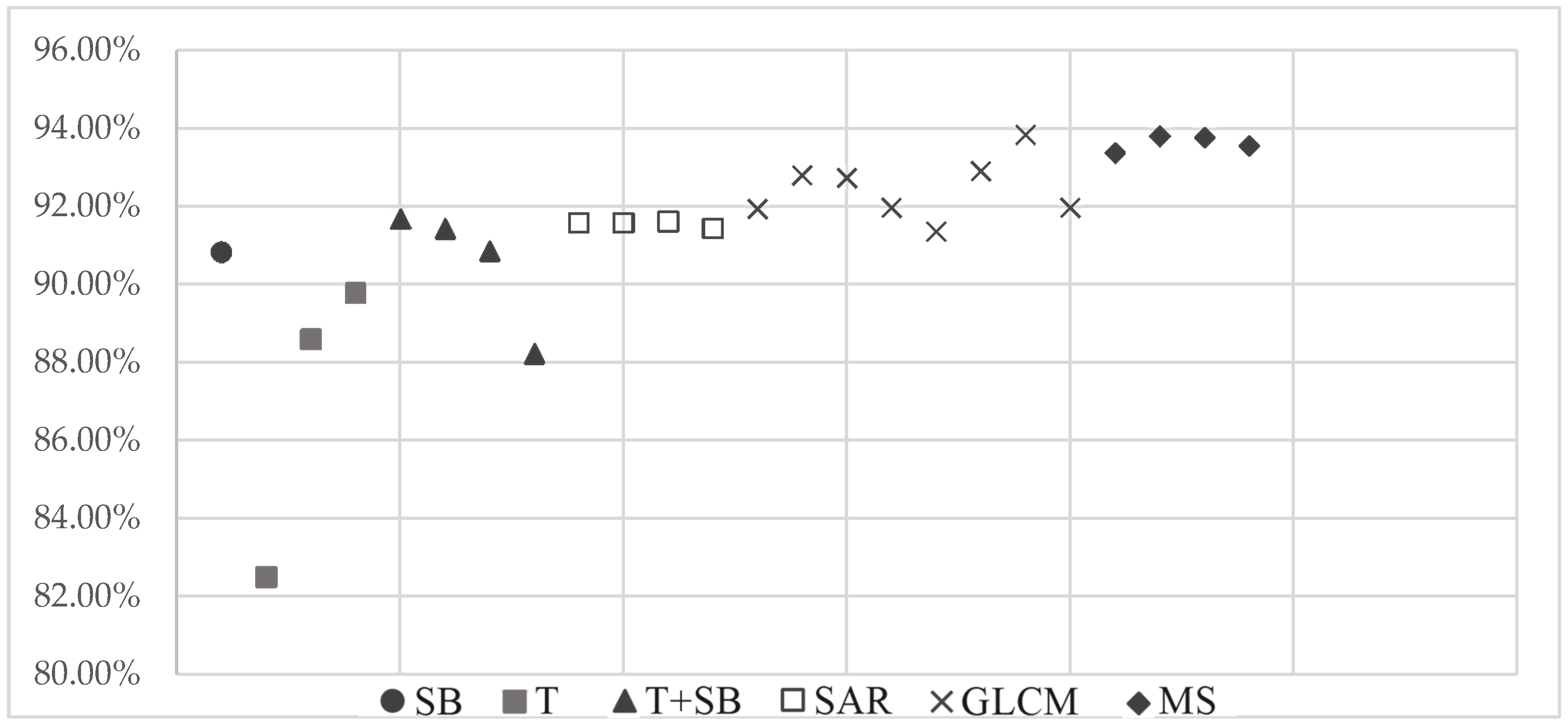

Accuracy assessment results suggest that the synergistic use of S1 and S2 data can achieve high classification accuracy, especially when combined with information on elevation, texture and shape. In a wetland area, the prevailing classes are water, aquatic vegetation and agriculture. In our case, water covers almost 15% of the area. This, combined with the seasonality of both the lakes and the different types of vegetation, constitutes a highly complex ecosystem in which the classification of LULC types may prove difficult. Moreover, weather conditions (e.g., cloud cover) affect the quality of optical data, and ground conditions (e.g., drought) affect the quality of SAR data, since dry conditions reduce the backscatter variations between classes that arise from their moisture differences. This is confirmed by the OA, UA and PA for specific classes. Wetland classes (especially marshes) were the most misclassified, and the results were more accurate when texture and seasonality were considered.
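For readers less familiar with the accuracy measures referred to here, the following sketch shows how overall accuracy (OA), user's accuracy (UA), producer's accuracy (PA) and the kappa coefficient are conventionally derived from a confusion matrix. The matrix values and the three class names are made up for illustration and are not results from this study. The row/column orientation (rows as mapped classes, columns as reference classes) is an assumption that should be matched to the software in use.

```python
import numpy as np


def accuracy_metrics(cm):
    """Return (OA, UA, PA, kappa) from a square confusion matrix.

    Assumed orientation: cm[i, j] counts samples mapped as class i (rows)
    whose reference class is j (columns).
    """
    cm = np.asarray(cm, dtype=np.float64)
    n = cm.sum()
    diag = np.diag(cm)
    mapped_totals = cm.sum(axis=1)      # row totals
    reference_totals = cm.sum(axis=0)   # column totals
    oa = diag.sum() / n
    ua = diag / mapped_totals           # 1 - commission error, per class
    pa = diag / reference_totals        # 1 - omission error, per class
    # Agreement expected by chance, from the marginal totals.
    pe = np.sum(mapped_totals * reference_totals) / n ** 2
    kappa = (oa - pe) / (1.0 - pe)
    return oa, ua, pa, kappa


# Hypothetical 3-class example (e.g., water, marsh, crops); values are invented.
cm = np.array([
    [45, 5, 0],
    [3, 40, 7],
    [2, 5, 43],
])

oa, ua, pa, kappa = accuracy_metrics(cm)
```

A class that never appears in the map or in the reference data would produce a division by zero in UA or PA, so such classes need to be handled separately.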

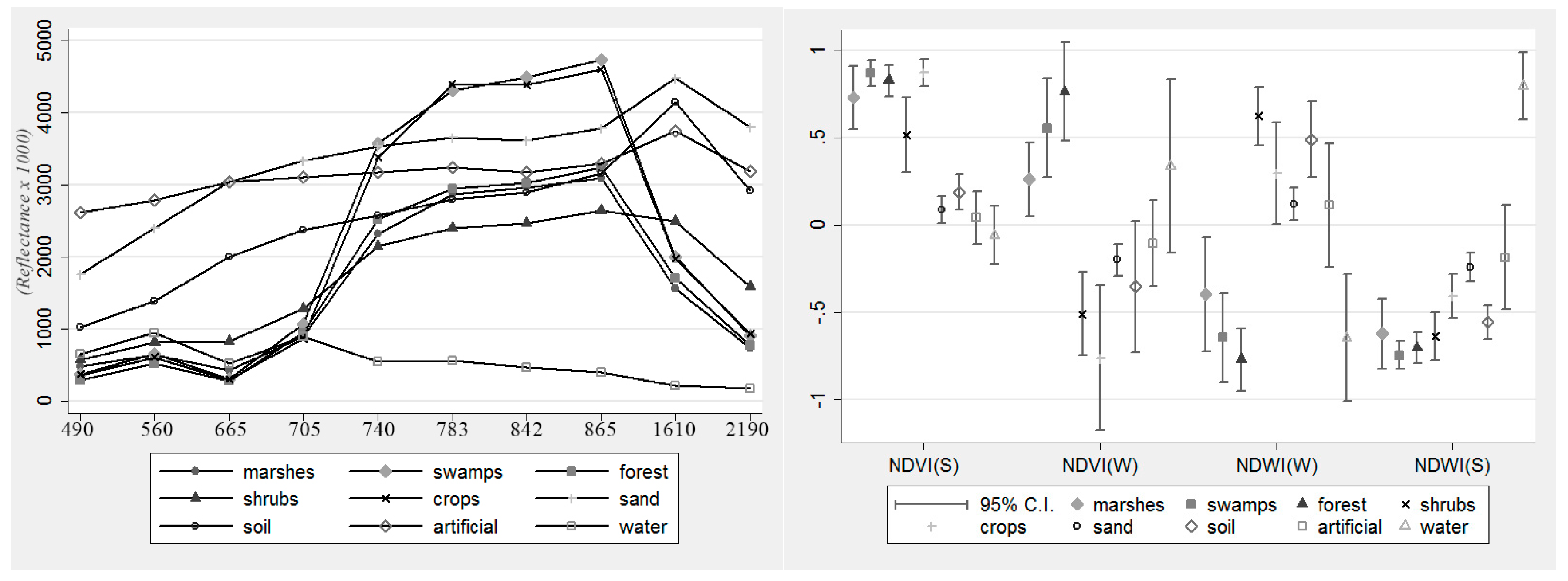

Although vegetation classes have a similar spectral response, the spectral signatures of some LULC types can be distinguished in the red-edge region of the spectrum. This may explain the significant contribution of the S2 red bands to the improvement in classification accuracy. UA and PA for vegetation classes (marshes, swamps and crops) decreased significantly when S2 bands were removed (v2.1–T). Moreover, the phenological cycle of vegetation helped to separate different types in the multi-seasonal approach. Aquatic and inland vegetation also proved difficult to distinguish. Texture features helped in this respect, as indicated by several previous studies [35,56,57,58]. Crops were successfully separated from natural vegetation using shape features, which is readily explained by the fact that cultivated land has more regular patterns and shapes than natural vegetation areas.

Spectral features proved less effective in separating the artificial, soil and sand classes, possibly due to their low spectral separability (refer to Figure 2). As the first image was acquired in mid-summer, ground moisture was very low, which can lead to high reflectance values and, thus, confusion between artificial surfaces and natural surfaces with no vegetation (soil, sand). Specifically, according to data collected from the Meteorological Station of Lagkadas (http://www.meteo.gr/meteoplus/index.cfm), the cumulative rainfall for the previous month was only 6.0 mm, with temperature ranging between 14 °C (nighttime) and 38.8 °C (daytime). These weather conditions cause severe droughts in the area during the summer (see Section 2.1).

The findings reported herein also align with other studies conducted independently using different multispectral satellite data, which likewise underline the promising capabilities of SVMs [23,24,59,60,61,62,63,64,65]. Evidently, proper parameterisation can strongly affect SVMs performance [66,67]. The technique we used in this study to parameterize the SVMs has been successfully implemented in other LULC investigations [27,68,69]. The RBF kernel was used due to its promising capabilities, as suggested by previous studies [23,50,68,70]. It is possible that another kernel function might have been more appropriate for the particular study site and data. The SVMs algorithm achieved high classification accuracies, especially in wetland classes.
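To illustrate why parameterisation matters for an RBF-kernel SVM, the sketch below computes the RBF kernel, K(x, y) = exp(−γ‖x − y‖²), for a handful of feature vectors at two values of γ. The symbol γ follows the usual convention; the sample points and the two γ values are arbitrary assumptions and do not reflect the parameters or the tuning technique used in this study.

```python
import numpy as np


def rbf_kernel(X, Y, gamma):
    """RBF kernel matrix K[i, j] = exp(-gamma * ||X[i] - Y[j]||^2)."""
    X = np.asarray(X, dtype=np.float64)
    Y = np.asarray(Y, dtype=np.float64)
    # Squared Euclidean distances via ||x||^2 + ||y||^2 - 2 x.y
    sq_dist = (
        np.sum(X ** 2, axis=1)[:, None]
        + np.sum(Y ** 2, axis=1)[None, :]
        - 2.0 * X @ Y.T
    )
    # Clip tiny negative values caused by floating-point round-off.
    np.maximum(sq_dist, 0.0, out=sq_dist)
    return np.exp(-gamma * sq_dist)


# Three made-up 2-D feature vectors (e.g., two scaled band values per pixel).
samples = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 2.0]])

k_smooth = rbf_kernel(samples, samples, gamma=0.5)   # wider kernel
k_sharp = rbf_kernel(samples, samples, gamma=5.0)    # narrower kernel
```

With a larger γ the similarity between distinct samples drops towards zero much faster, so the decision boundary follows individual training pixels more closely. This is one reason why γ, together with the SVM penalty parameter, is usually tuned rather than fixed in advance.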

A number of studies have also indicated that SAR data may improve classification accuracy, especially in extracting artificial classes (roads, buildings, etc.) [71,72] and in change detection (wetland delineation, flood, etc.) [67,71,72,73]. However, in our study, S1 data improved the classification accuracy only slightly, by about 1%. As mentioned above, weather conditions were not ideal at the time of image acquisition, with high temperature and very low rainfall having caused severe drought in the area. Although SAR data are only affected by wind speed, which in our case did not exceed 1.8 km/h (WSW direction), the severe drought conditions may have affected the data quality. Finally, the differences in spatial resolution between the combined datasets, and the image processing implemented to unify this spatial resolution, could be another source of the misclassifications reported herein.

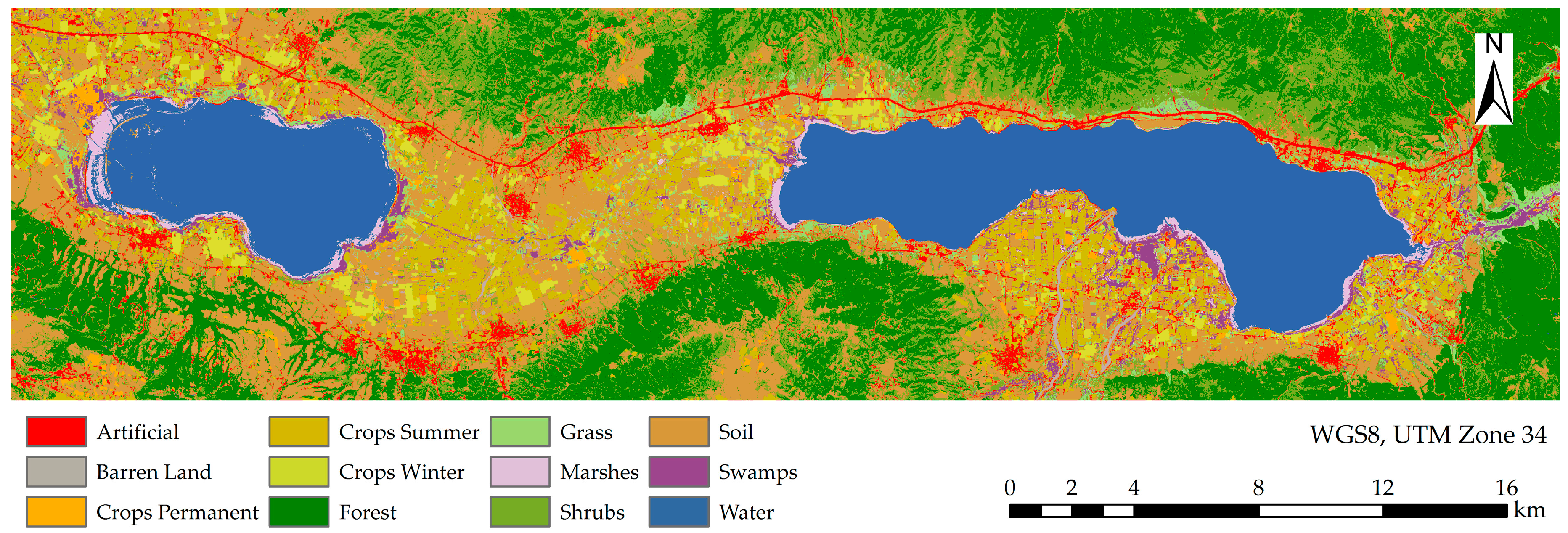

Previous studies have indicated that the use of knowledge rules improves the accuracy of land cover classification [31,32,33]. In our study, post-classification results achieved very high accuracy, indicating the efficiency of this method in producing a high-accuracy LULC map (Figure 9).
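As a purely illustrative sketch of how a knowledge rule can be applied after classification, the example below relabels pixels of one class wherever an ancillary condition holds. The class codes, the elevation threshold and the rule itself are hypothetical and are not the rules used in this study. They only show the general mechanism of combining a classified map with additional information such as elevation.

```python
import numpy as np

# Hypothetical class codes, for illustration only.
WATER, MARSH, CROPS = 0, 1, 2


def apply_rule(labels, condition, from_class, to_class):
    """Return a copy of labels where from_class pixels meeting condition become to_class."""
    refined = labels.copy()
    refined[(labels == from_class) & condition] = to_class
    return refined


# Made-up classified map and co-registered elevation grid (metres).
labels = np.array([
    [WATER, WATER, MARSH],
    [CROPS, WATER, MARSH],
])
elevation = np.array([
    [75.0, 210.0, 76.0],
    [90.0, 74.0, 300.0],
])

# Hypothetical rule: pixels labelled marsh well above the lake level are relabelled.
refined = apply_rule(labels, elevation > 150.0, MARSH, CROPS)
```

Because each rule is a boolean mask over the map, rules can be chained and adapted to a new study site by changing thresholds or class pairs without retraining the classifier.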

More broadly, the proposed methodology includes image analysis, extraction of key characteristics (e.g., texture) and the use of additional information (e.g., elevation) to compose a new dataset for use in the classification process. In this respect, the findings of our study support the development of relevant EO-based products not only in Greece (where the method was tested herein) but potentially elsewhere, allowing cost-effective and consistent long-term monitoring of wetlands and non-wetlands at different scales based on the latest EO sensing technology. With the provision of continuous, reliable spectral information from ESA’s Sentinel-1 and Sentinel-2 missions, the continuity of the methodology proposed herein has to some extent been assured. The proposed methodology may be readily transferred to other locations and to different wetland types (e.g., coastal wetlands, river estuaries, etc.). For this to be done successfully, the post-classification rules should be appropriately adapted to each new setting. This adjustment may both further improve the accuracy of the current technique and lead to a more detailed classification hierarchy. Finally, the present study also improves upon existing EU international figures, assisting efforts towards the development of operational products via the EU’s Copernicus service.

7. Conclusions

This study is, to our knowledge, among the first to investigate the combined use of Sentinel data with advanced machine learning algorithms for LULC mapping with an emphasis on wetlands, particularly in a Mediterranean setting. Results showed that the spatial, spectral and temporal resolutions of S2 data are suitable for classifying wetland areas. Overall accuracy using S2 bands alone reached 90.83%, with a kappa coefficient of 0.894, which indicates strong agreement with reality. The inclusion of S1 data did not significantly improve overall classification accuracy (<1%), at least in our study. However, the inclusion of additional spectral (NDVI, NDWI, PCA, and MNF), texture (GLCM) and shape indicators improved classification results, although in some cases not significantly. The largest improvement (3%) was achieved when texture features were included, and the smallest (<0.5%) when only selected transformed components were used. Cultivated land was successfully separated from natural vegetation using shape features. Finally, post-classification techniques achieved very high overall accuracy (94.82%), with individual class accuracies above 75% for all classes, thus providing an accurate map of the specific study site.

Wetlands are complex systems with a high presence of water and both natural and artificial surfaces. Mapping wetlands and monitoring their changes over time is therefore a challenging procedure, especially when using multispectral imagery. The Sentinel missions can provide researchers and decision-makers with invaluable data at a satisfactory spatial and temporal resolution and, most importantly, free of charge. S2 proved to be appropriate for mapping such complex areas, thanks to the high spectral and spatial resolution of the data. Further work is required to verify the results by testing the methods on other sites. Extending the techniques investigated herein to multi-seasonal and multi-annual datasets would be another direction for future investigation. In addition, the use of other classifiers (e.g., random forests, artificial neural networks, etc.) would be an interesting avenue to explore, as it may improve the accuracy of thematic information extraction from S1 and S2 data for mapping wetlands worldwide.