A Novel Pixel-Level Image Matching Method for Mars Express HRSC Linear Pushbroom Imagery Using Approximate Orthophotos

Abstract

1. Introduction

2. Materials and Methods

2.1. Terrain Characteristics of the Martian Surface

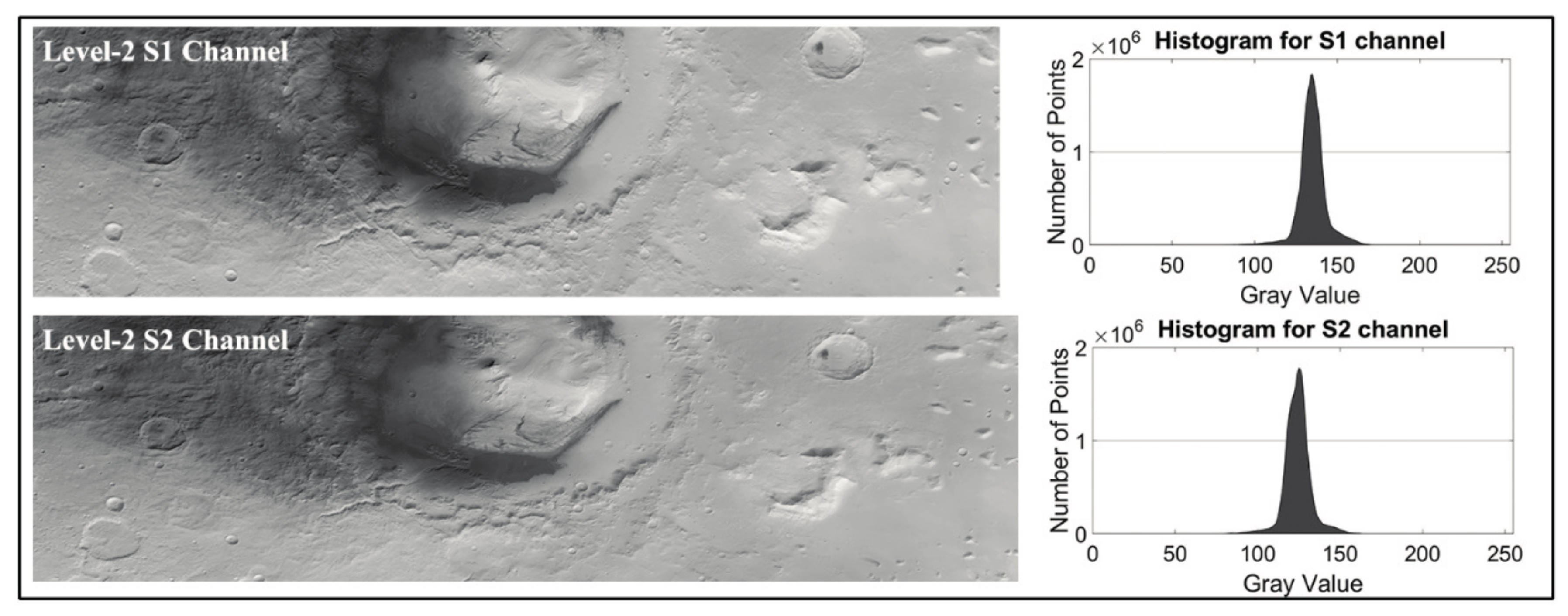

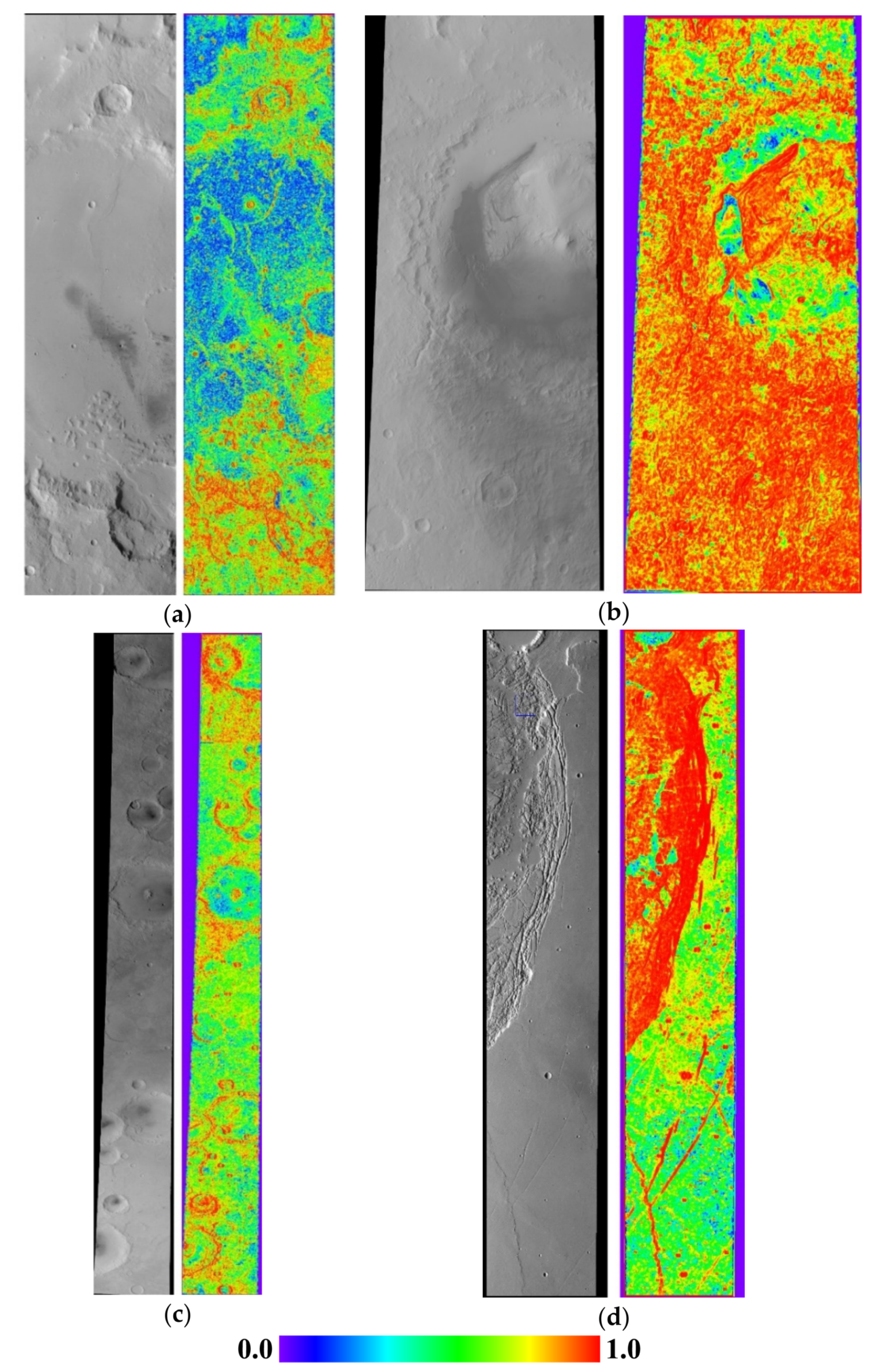

- (1) Low contrast. As observed in the left parts of Figure 1, Martian surface images generally have low contrast compared with the rich texture of Earth observation images. The right parts of Figure 1 show that the histograms are concentrated in a narrow range of gray values. Such low-contrast images have a low signal-to-noise ratio, which decreases the success rate of image matching.

- (2) Insufficient feature points. Feature-based matching methods such as the scale-invariant feature transform (SIFT) and speeded up robust features (SURF) are more robust than area-based matching methods. Unfortunately, Martian surface images yield very few feature points. Such sparse feature points can serve as tie points for bundle adjustment, but they are not dense enough for DEM generation.

- (3) Poor image quality. Image quality is influenced by many factors, such as the imaging instrument, the atmospheric environment, and the incidence and emission angles. Martian surface images are generally of lower quality than Earth observation images.

- (4) Repetitive patterns. Repetitive patterns cause wrongly matched points, especially when an area-based matching method is used. To address this issue, constraints such as the camera geometry need to be applied.

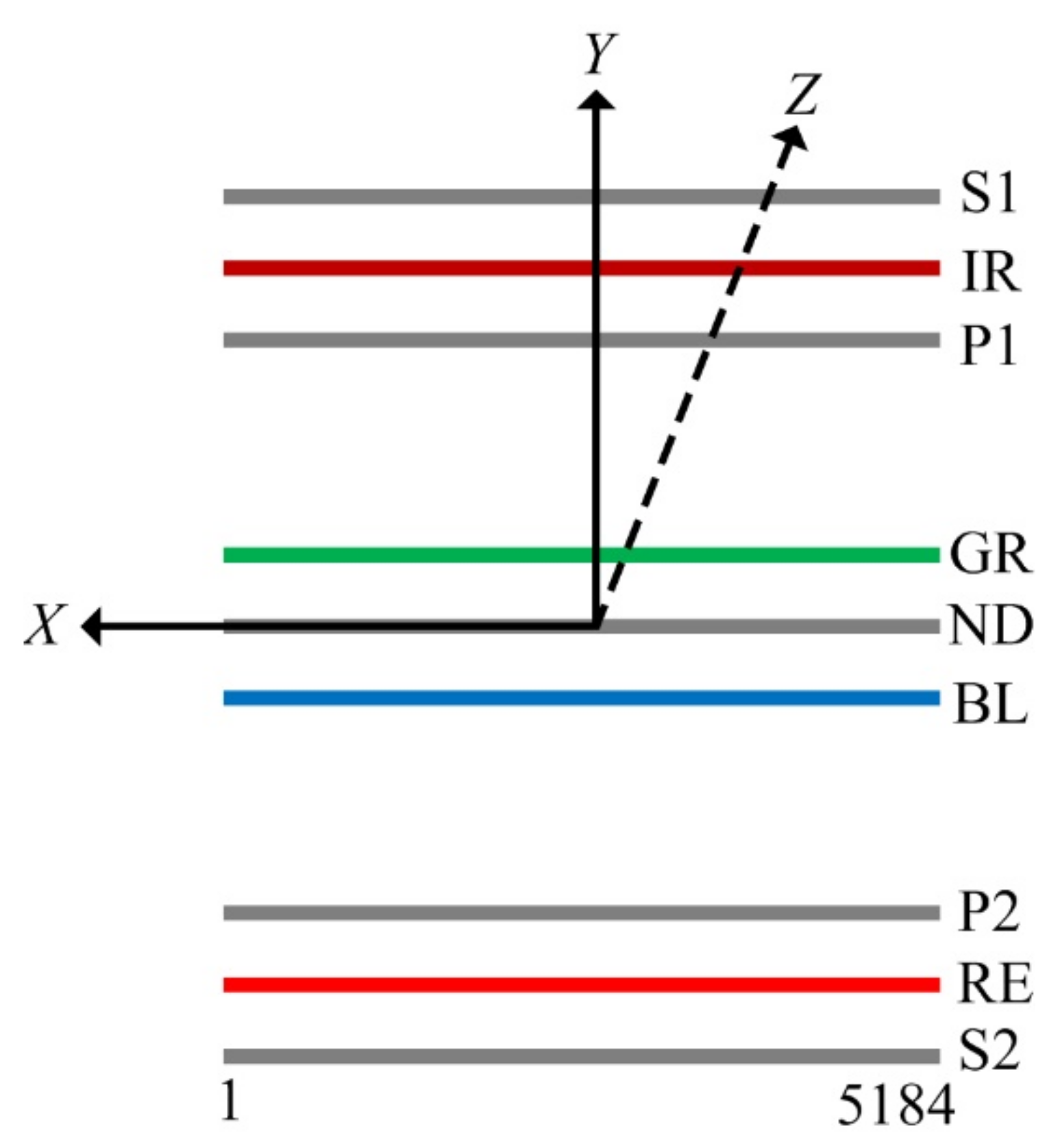

2.2. Image Geometry of HRSC

2.2.1. HRSC Linear Pushbroom Imagery Overview

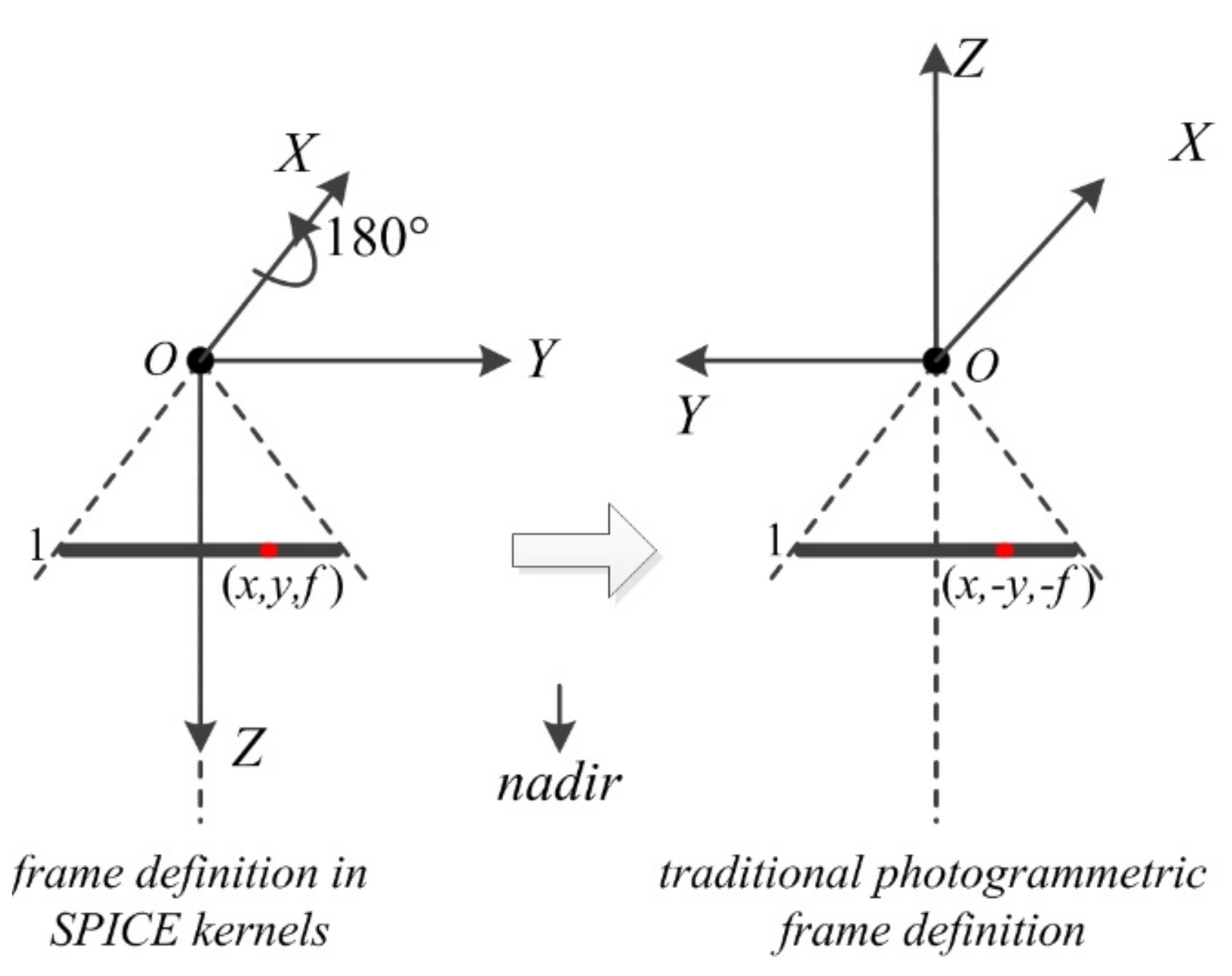

2.2.2. Rigorous Geometric Model

2.2.3. Fast Back-Projection Algorithm

2.3. Pixel-Level Image Matching Strategies

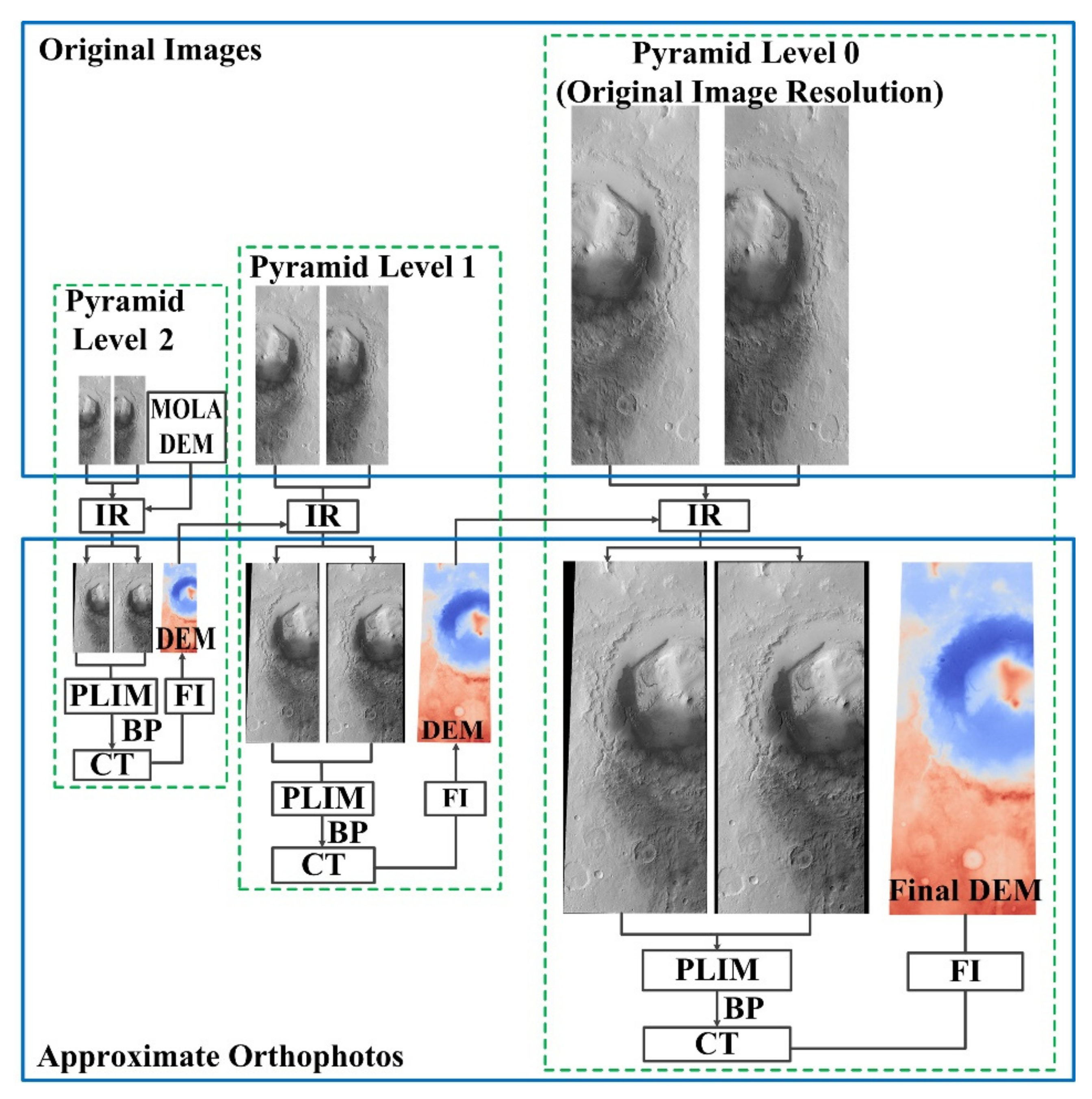

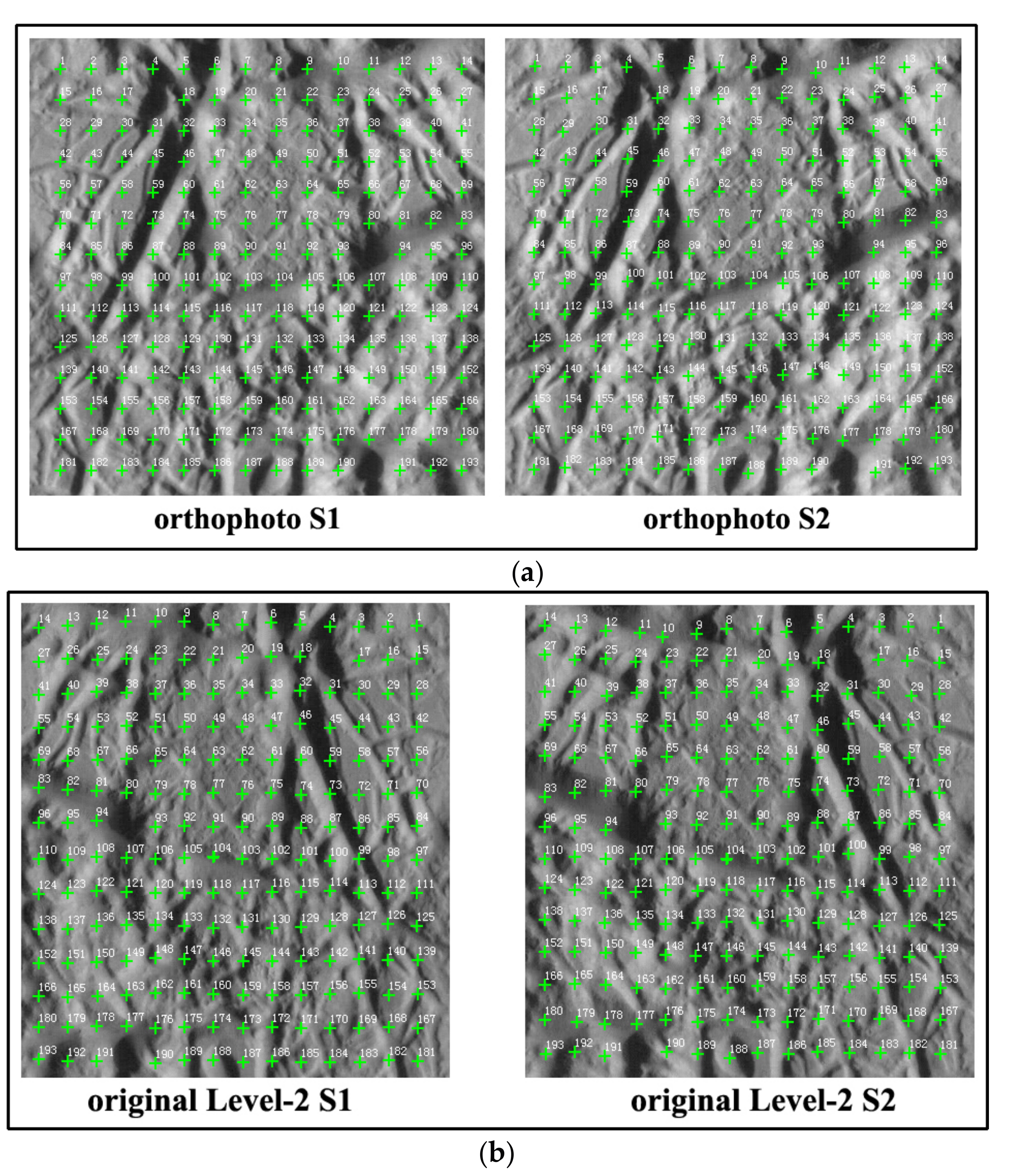

- (1)

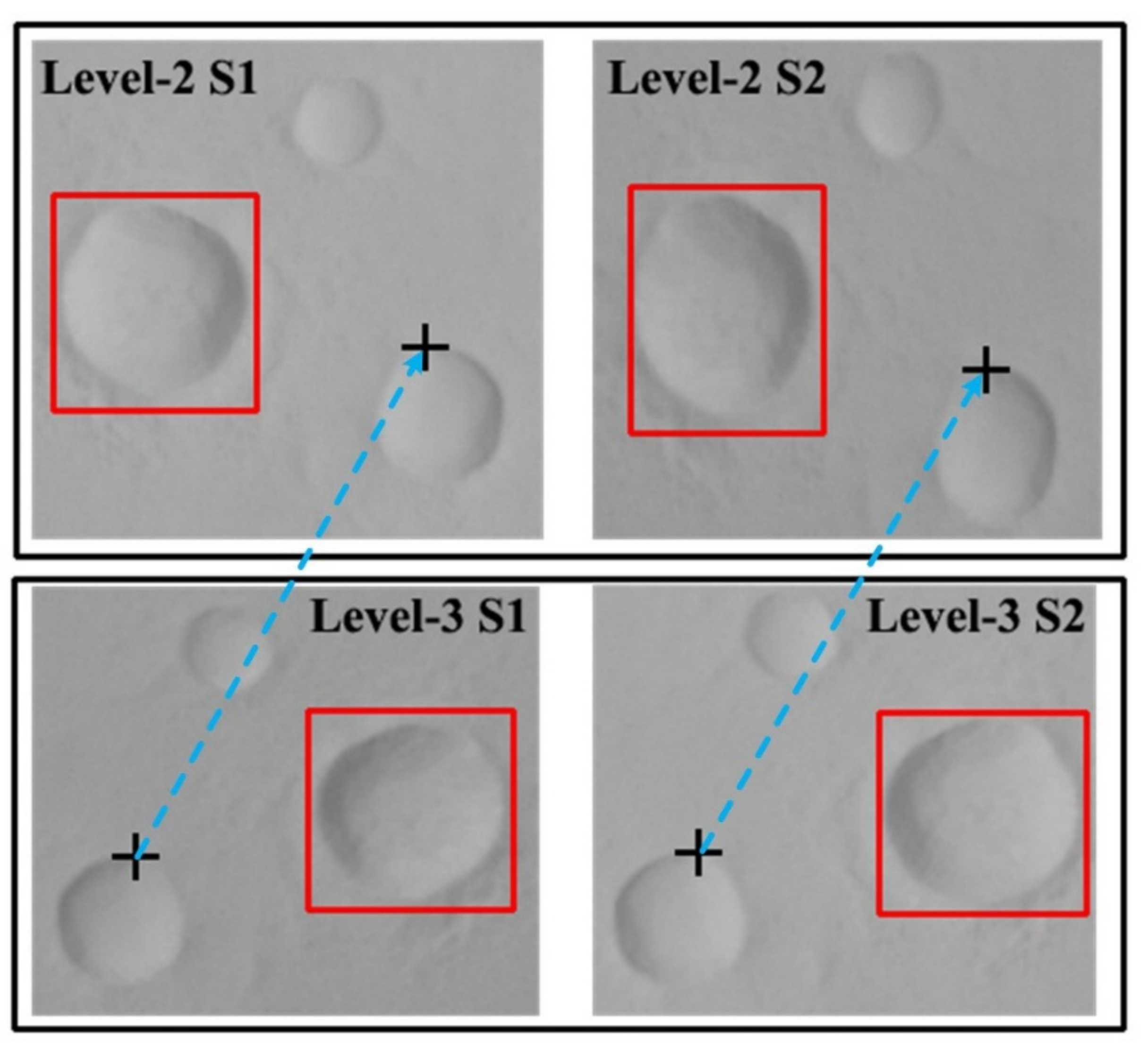

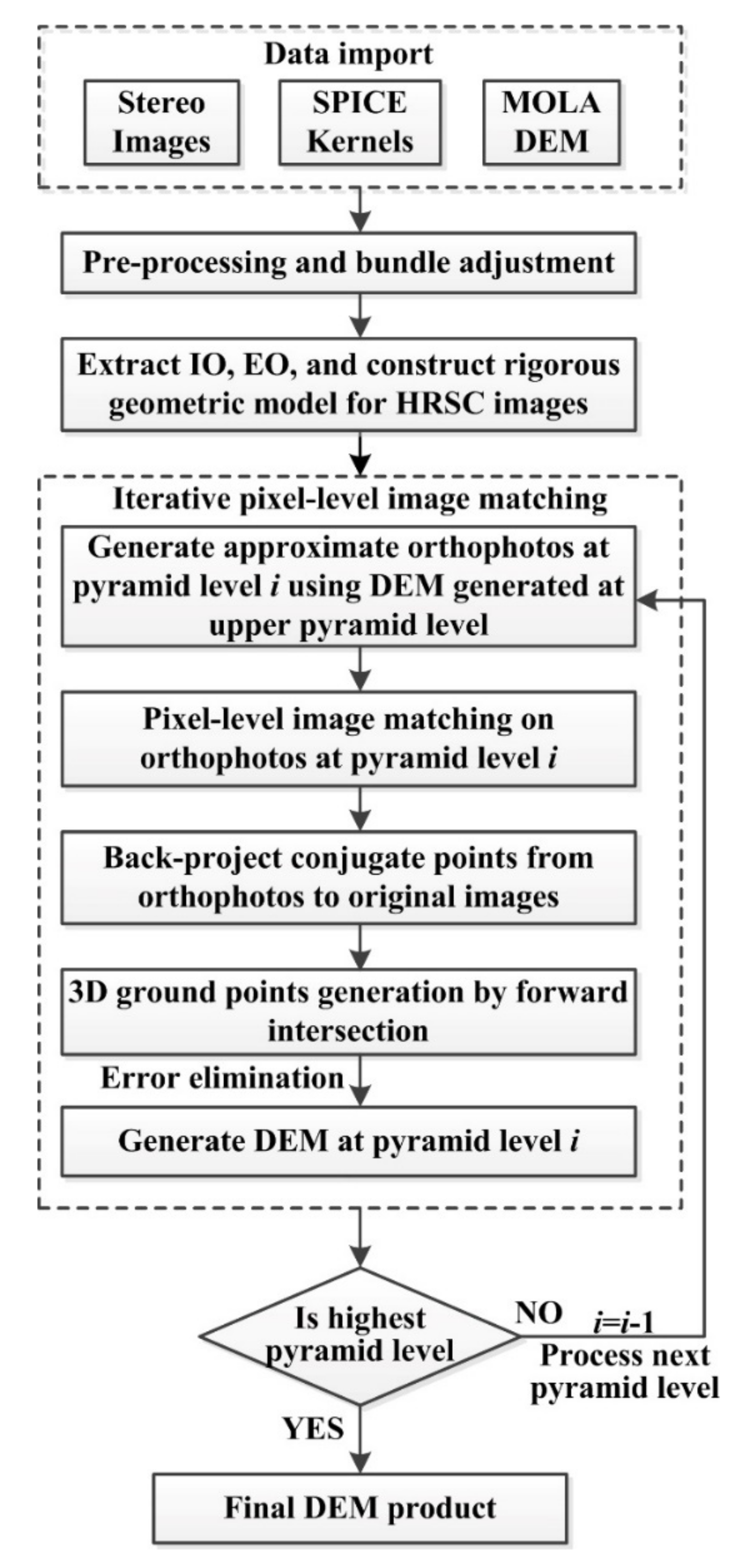

- Suppose that we start from the second pyramid level. Firstly, a rough MOLA DEM is used to perform image rectification, and two approximate orthophotos can be generated using the original HRSC Level-2 S1 and S2 images. Then, the pixel-level image matching is performed on approximate orthophotos instead of original images. The matched points on the approximate orthophotos are converted to the original images. Both image rectification and coordinate transformation of the matched points can use the fast back-projection algorithm described previously.

- (2)

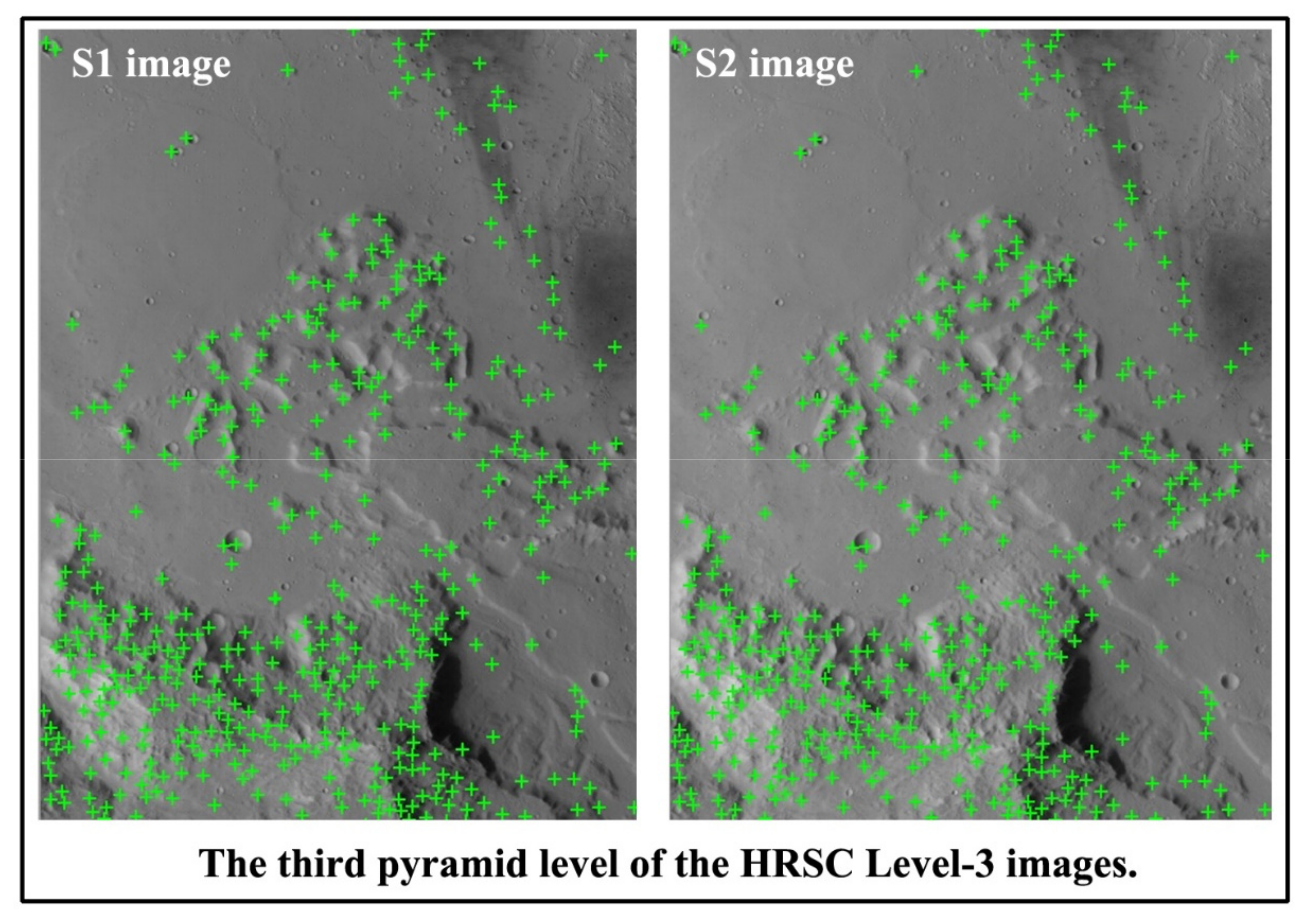

- As shown in Figure 5, the hierarchical image matching strategy is utilized. The matched points at each pyramid level are used to generate DEM through forward intersection.

- (3) The DEM generated at the second pyramid level is used as the reference DEM for image rectification at the first pyramid level. Similarly, the DEM generated at the first pyramid level is used as the reference DEM to rectify the original images. In this way, the refined DEM removes the image distortions caused by perspective projection and terrain relief, which helps to reduce the search range considerably.

- (4) In the image matching procedure, the approximate positions of conjugate points are estimated from the ground coordinates of the orthophoto pixels, which efficiently and accurately determines the starting point for template matching.
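The coarse-to-fine loop in steps (1) to (4) can be illustrated with a toy one-dimensional analogue. In this sketch, which is an illustration under simplifying assumptions and not the authors' implementation, a single global shift between two synthetic signals stands in for the DEM-predicted position of conjugate points: the shift found at a coarse pyramid level, scaled by the pyramid factor, becomes the starting point at the next finer level, so only a small search radius is needed there. The function names (`downsample`, `best_shift`, `coarse_to_fine`) and all parameter values are hypothetical, and a sum-of-squared-differences cost replaces NCC for brevity.

```python
import random


def downsample(signal, factor):
    """Build the next pyramid level by averaging non-overlapping blocks."""
    return [sum(signal[i:i + factor]) / factor
            for i in range(0, len(signal) - factor + 1, factor)]


def best_shift(a, b, center, radius):
    """Return the shift s in [center - radius, center + radius] that minimises
    the mean squared difference between a[i] and b[i + s]."""
    margin = abs(center) + radius  # keeps every b[i + s] inside the signal
    idx = range(margin, len(a) - margin)
    best_s, best_err = center, float("inf")
    for s in range(center - radius, center + radius + 1):
        err = sum((a[i] - b[i + s]) ** 2 for i in idx) / len(idx)
        if err < best_err:
            best_s, best_err = s, err
    return best_s


def coarse_to_fine(a, b, levels=2, factor=2, coarse_radius=6, fine_radius=2):
    """Wide search at the coarsest level, then narrow searches around the
    prediction propagated from the previous (coarser) level."""
    pyr_a, pyr_b = [a], [b]
    for _ in range(levels):
        pyr_a.append(downsample(pyr_a[-1], factor))
        pyr_b.append(downsample(pyr_b[-1], factor))
    shift = best_shift(pyr_a[-1], pyr_b[-1], 0, coarse_radius)
    for level in range(levels - 1, -1, -1):
        # The scaled estimate plays the role of the refined reference DEM.
        shift = best_shift(pyr_a[level], pyr_b[level], shift * factor, fine_radius)
    return shift


if __name__ == "__main__":
    rng = random.Random(42)
    true_shift, n = 13, 400
    base = [rng.random() for _ in range(n + true_shift)]
    a = base[true_shift:true_shift + n]  # a[i] == base[i + true_shift]
    b = base[:n]                         # so a[i] is conjugate to b[i + true_shift]
    print(coarse_to_fine(a, b))
```

The design point is that the full-resolution level only tests five candidate shifts; the wide search happens once, on a signal four times shorter.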

2.3.1. Image Matching on Approximate Orthophotos

- (1) The original stereo images are resampled to an identical ground sample distance (GSD), which helps to improve the matching accuracy and success rate.

- (2) As previously described, the Martian surface has continuous topography. Moreover, image rectification removes the image distortions caused by perspective projection and terrain variation. Therefore, an area-based image matching method using normalized cross-correlation (NCC) can obtain better matching results on approximate orthophotos than on the original images.

- (3) The coordinate displacements of conjugate points on the stereo approximate orthophotos are very small. Therefore, epipolar resampling is unnecessary, and conjugate points can be determined within a small search range. In other words, image rectification implicitly introduces strong geometric constraints for image matching.

- (4)

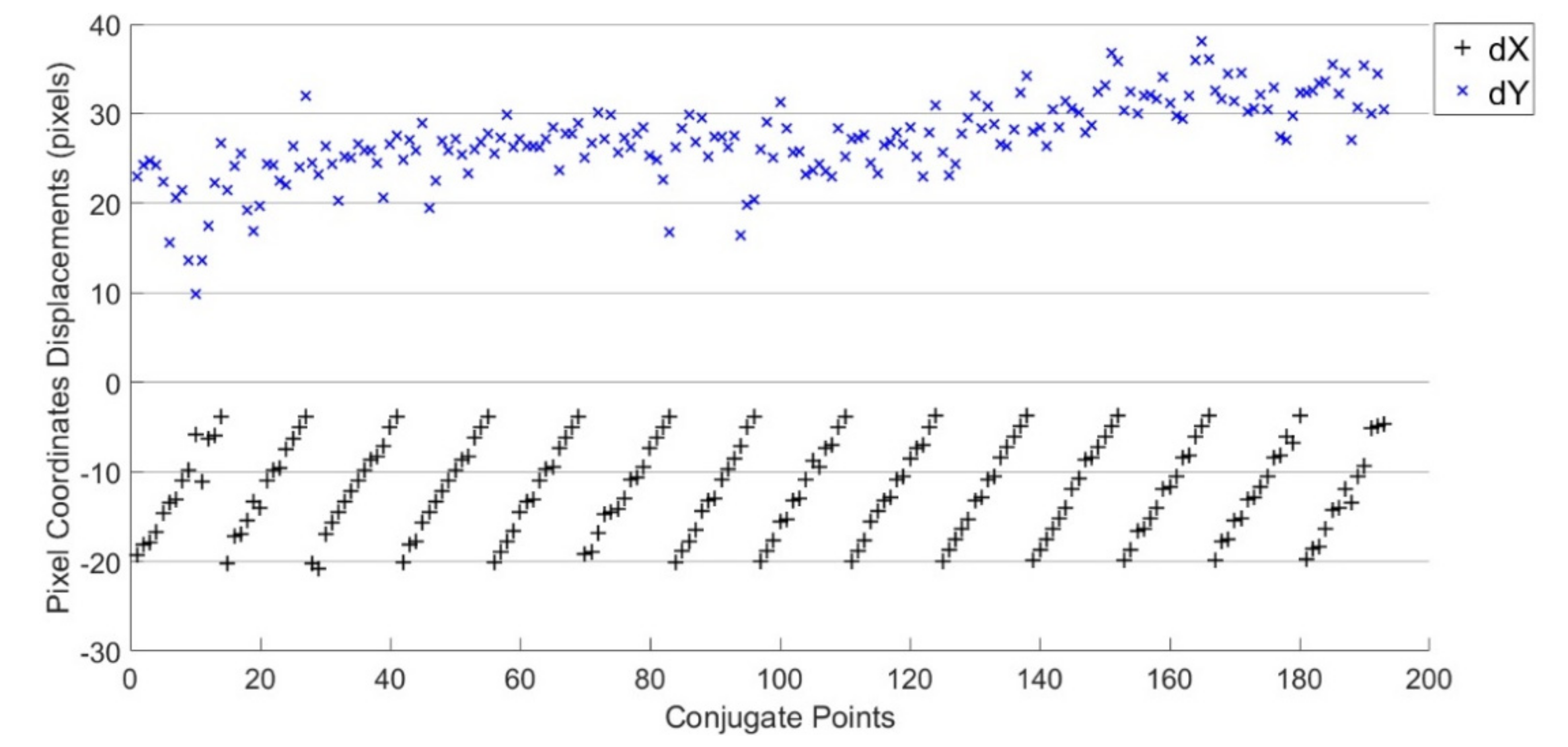

- For the HRSC stereo pairs, due to the fact that the stereo images (such as S1 and S2 channels) are acquired by identical optical cameras, a high relative accuracy can be achieved. Hence the conjugate points on the approximate orthophotos will show small pixel coordinate displacements (PCDs).
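Items (1) to (4) imply that, on approximate orthophotos sharing one ground grid, the conjugate point of a left-orthophoto pixel can be sought around the same row and column in the right orthophoto within a small search window. The following pure-Python sketch shows NCC template matching under that assumption; the window half-size, the ±2-pixel search range and the function names are illustrative choices, not values taken from the paper. Because NCC subtracts the window mean and divides by the window energy, it is unaffected by a linear brightness change with positive gain, which is relevant for the low-contrast Martian images described in Section 2.1.

```python
import math
import random


def ncc(a, b):
    """Normalized cross-correlation of two equally sized, flattened windows."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    num = sum(x * y for x, y in zip(da, db))
    den = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    return num / den if den > 0 else 0.0  # flat (textureless) window: no match


def window(img, row, col, half):
    """Flatten the (2*half+1) x (2*half+1) window centred on (row, col)."""
    return [img[r][c]
            for r in range(row - half, row + half + 1)
            for c in range(col - half, col + half + 1)]


def match_on_orthophotos(left, right, row, col, half=3, search=2):
    """Search the right orthophoto around the predicted position (row, col),
    i.e. the same ground cell, and return (best_ncc, row, col)."""
    template = window(left, row, col, half)
    best = (-1.0, row, col)
    for dr in range(-search, search + 1):
        for dc in range(-search, search + 1):
            r, c = row + dr, col + dc
            if (r - half < 0 or c - half < 0 or
                    r + half >= len(right) or c + half >= len(right[0])):
                continue  # candidate window would leave the image
            score = ncc(template, window(right, r, c, half))
            if score > best[0]:
                best = (score, r, c)
    return best


if __name__ == "__main__":
    rng = random.Random(1)
    size, pad, dy, dx = 40, 4, 1, -1
    base = [[rng.random() for _ in range(size + 2 * pad)]
            for _ in range(size + 2 * pad)]
    left = [[base[r + pad][c + pad] for c in range(size)] for r in range(size)]
    # Right orthophoto: residual offset (dy, dx), low gain and a brightness offset.
    right = [[50 + 0.2 * base[r + pad - dy][c + pad - dx] for c in range(size)]
             for r in range(size)]
    print(match_on_orthophotos(left, right, 20, 20))
```

In the usage example the conjugate of left pixel (20, 20) sits at (21, 19) in the right image, and it is found with an NCC of essentially 1 even though the right image has one fifth of the contrast.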

2.3.2. Hierarchical Image Matching with Iteratively-Refined DEM

2.3.3. Estimating Approximate Positions of Conjugate Points

2.4. DEM Generation

2.4.1. DEM Generation Procedure

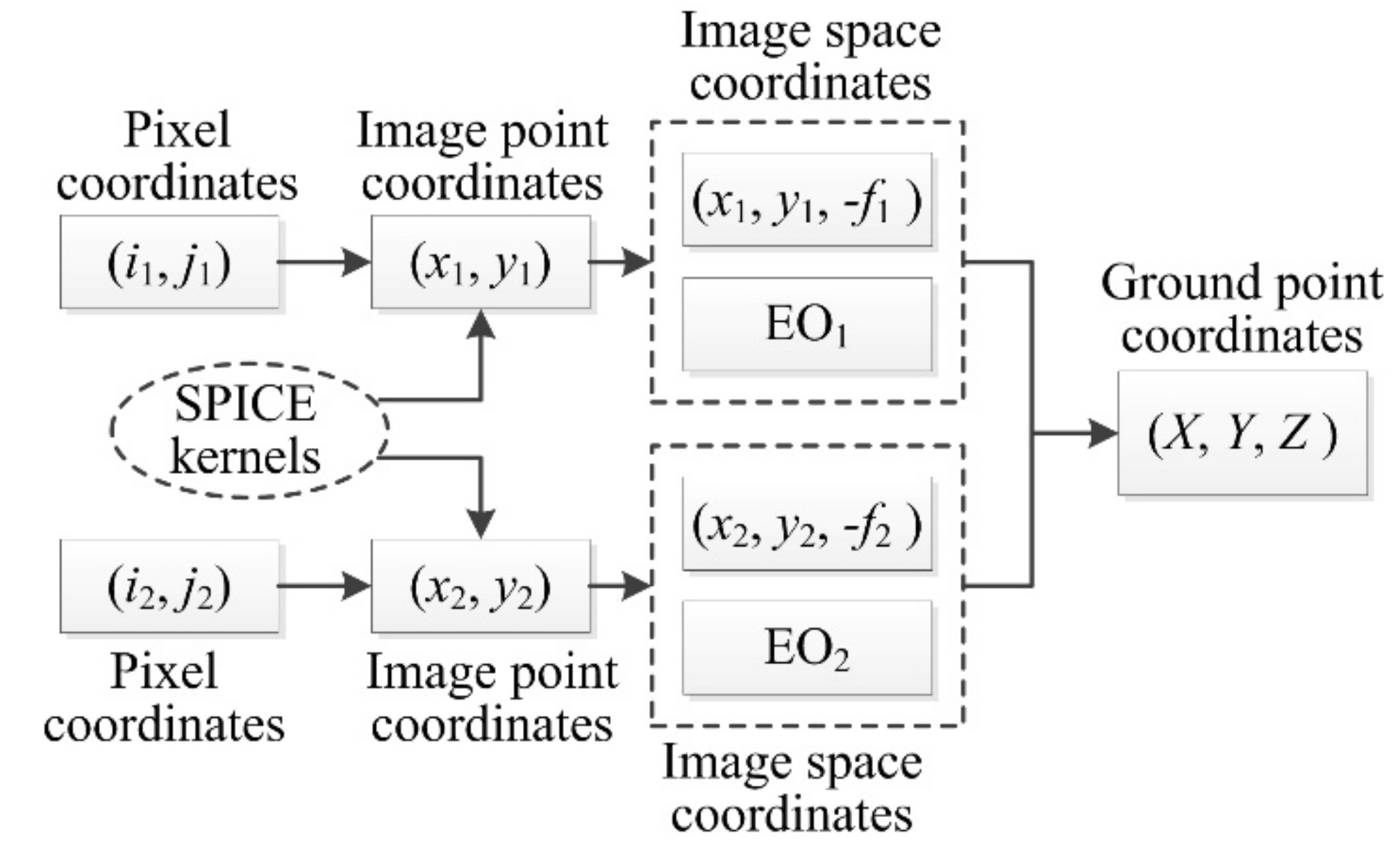

- (1) ISIS and SPICE kernels are used to perform data pre-processing. Several ISIS programs are required, including hrsc2isis, spiceinit, tabledump and jigsaw. The HRSC Level-2 images in raw Planetary Data System (PDS) format are imported into ISIS using hrsc2isis, and the SPICE kernels corresponding to the images are determined with spiceinit.

- (2) Further pre-processing, including contrast enhancement and image pyramid generation, is carried out to improve the image matching results. Bundle adjustment is performed with jigsaw.

- (3) The interior orientation (IO) and exterior orientation (EO) data are extracted from the SPICE kernels, and the scan line exposure time parameters are obtained with tabledump. The rigorous geometric model for HRSC linear pushbroom images is then constructed using Equation (2).

- (4) The DEM is refined iteratively. At the coarsest pyramid level, approximate orthophotos are generated using a rough DEM (the MOLA DEM) as reference data. The matched points on the orthophotos are converted to the original Level-2 images, and a DEM is generated through forward intersection. The DEM generated at the current pyramid level then serves as the reference data for generating the approximate orthophotos at the next pyramid level.
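Forward intersection turns each pair of back-projected conjugate points into a ground point. The paper performs it with the rigorous HRSC model of Equation (2), which is not reproduced in this section; as a hedged, generic sketch, assume that for each image point the projection centre and a look direction in a Mars body-fixed frame have already been computed from the SPICE-derived IO/EO data. Two such lines of sight rarely meet exactly, so the sketch returns the midpoint of their shortest connecting segment together with the gap length, which can serve as a simple quality indicator for a match. The function names and numbers are illustrative.

```python
import math


def dot(u, v):
    return sum(x * y for x, y in zip(u, v))


def sub(u, v):
    return tuple(x - y for x, y in zip(u, v))


def add_scaled(p, d, t):
    return tuple(x + t * y for x, y in zip(p, d))


def intersect_two_rays(s1, d1, s2, d2):
    """Midpoint intersection of the lines s1 + t1*d1 and s2 + t2*d2.

    Returns (ground_point, gap), where gap is the distance between the two
    lines at their closest approach (0 for a perfect intersection).
    """
    w0 = sub(s1, s2)
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12 * a * c:
        raise ValueError("lines of sight are (nearly) parallel")
    # Parameters of the closest points, from the two normal equations.
    t1 = (b * e - c * d) / denom
    t2 = (a * e - b * d) / denom
    p1, p2 = add_scaled(s1, d1, t1), add_scaled(s2, d2, t2)
    midpoint = tuple((x + y) / 2 for x, y in zip(p1, p2))
    return midpoint, math.dist(p1, p2)


if __name__ == "__main__":
    ground = (10.0, 20.0, -5.0)
    s1, s2 = (0.0, -100000.0, 300000.0), (500.0, 110000.0, 305000.0)
    point, gap = intersect_two_rays(s1, sub(ground, s1), s2, sub(ground, s2))
    print(point, gap)
```

The midpoint method is the simplest two-ray solution; a least-squares intersection on image-space residuals, which is the usual photogrammetric formulation, would weight the two observations differently.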

2.4.2. Forward Intersection for HRSC Linear Pushbroom Imagery

2.4.3. Wrongly Matched Points Elimination

2.4.4. Comparison with the HRSC Team’s Method

- (1) Both methods recognize the importance of image rectification for image matching of HRSC linear pushbroom imagery. However, the proposed method does not use epipolar or quasi-epipolar geometric constraints; as previously described, it restricts the search range with the geometric constraints introduced by image rectification.

- (2) The HRSC team uses a multi-image matching method, which has the merit of generating more accurate DEMs. However, for computational efficiency, we suggest using the S1 and S2 channels to generate DEMs.

- (3) Both methods require the matched points to be converted to the original images, a step referred to as back-projection in the proposed method. However, the HRSC team’s method does not present its back-projection algorithm in detail. Recognizing the significance of back-projection for image matching efficiency, we use a fast back-projection method based on geometric constraints in object space.

- (4) Because the proposed method uses a pixel-level image matching strategy, it is not necessary to refine the generated DEM further with the shape-from-shading (SfS) method.
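Back-projection, mentioned in item (3), has to find, for a given ground point, the scan line whose exposure imaged it. The paper's fast algorithm relies on object-space geometric constraints whose details are not given in this section, so the following is only a generic sketch of the iterative idea behind conventional scan-line searches: it seeks the exposure time t at which the ground point lies in the instantaneous scan plane of the detector line, using a secant iteration. The circular toy orbit, its radius and angular rate, and the 18.9° along-track look angle are hypothetical placeholders standing in for SPICE-derived positions and attitudes.

```python
import math

# Hypothetical toy geometry standing in for SPICE-derived orbit and attitude.
R_ORBIT = 3.7e6              # orbit radius about the Mars centre (m), assumed
OMEGA = 9.0e-4               # orbital angular rate (rad/s), assumed
THETA = math.radians(18.9)   # along-track look angle of the channel, assumed
CROSS_TRACK = (0.0, 1.0, 0.0)


def dot(u, v):
    return sum(x * y for x, y in zip(u, v))


def position(t):
    """Projection centre at time t on a circular orbit in the x-z plane."""
    return (R_ORBIT * math.sin(OMEGA * t), 0.0, R_ORBIT * math.cos(OMEGA * t))


def frame(t):
    """Along-track and nadir unit vectors at time t."""
    along = (math.cos(OMEGA * t), 0.0, -math.sin(OMEGA * t))
    nadir = (-math.sin(OMEGA * t), 0.0, -math.cos(OMEGA * t))
    return along, nadir


def look_direction(t):
    """Central look direction of the tilted detector line."""
    along, nadir = frame(t)
    return tuple(math.cos(THETA) * n + math.sin(THETA) * a
                 for a, n in zip(along, nadir))


def scan_plane_normal(t):
    """Normal of the plane spanned by the look direction and the cross-track axis."""
    along, nadir = frame(t)
    return tuple(math.cos(THETA) * a - math.sin(THETA) * n
                 for a, n in zip(along, nadir))


def scan_plane_offset(ground, t):
    """Signed distance (m) of the ground point from the scan plane at time t."""
    rel = tuple(g - p for g, p in zip(ground, position(t)))
    return dot(rel, scan_plane_normal(t))


def backproject_time(ground, t0, t1, tol=1e-9, max_iter=50):
    """Secant iteration for the exposure time at which the offset vanishes."""
    f0, f1 = scan_plane_offset(ground, t0), scan_plane_offset(ground, t1)
    for _ in range(max_iter):
        if f1 == 0.0:
            return t1
        if f1 == f0:
            break
        t2 = t1 - f1 * (t1 - t0) / (f1 - f0)
        t0, f0 = t1, f1
        t1, f1 = t2, scan_plane_offset(ground, t2)
        if abs(t1 - t0) < tol:
            return t1
    raise RuntimeError("scan-line search did not converge")


if __name__ == "__main__":
    t_true = 12.345
    ground = tuple(p + 270e3 * l + 5e3 * y for p, l, y in
                   zip(position(t_true), look_direction(t_true), CROSS_TRACK))
    print(backproject_time(ground, 0.0, 1.0))
```

Each iteration re-evaluates the sensor position and attitude, which is exactly the cost that faster scan-line search strategies try to reduce. Converting the recovered time into a line number then requires inverting the image's scan-line exposure-time table.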

3. Results

3.1. Experimental Datasets

- (a) spacecraft’s position data: ORMM__070401000000_00387.BSP for orbits 4165 and 4235, ORMM__080201000000_00474.BSP for orbit 5273, and ORMM__071201000000_00457.BSP for orbit 5124;

- (b) spacecraft’s orientation data: ATNM_P060401000000_01122.BC;

- (c) spacecraft’s clock coefficients: MEX_150108_STEP.TSC;

- (d) HRSC’s geometric parameters: MEX_HRSC_V03.TI and hrscAddendum004.ti;

- (e) reference frame specifications: MEX_V12.TF;

- (f) leapseconds tabulation: naif0010.tls; and

- (g) target body (Mars) size, shape and orientation: pck00009.tpc.

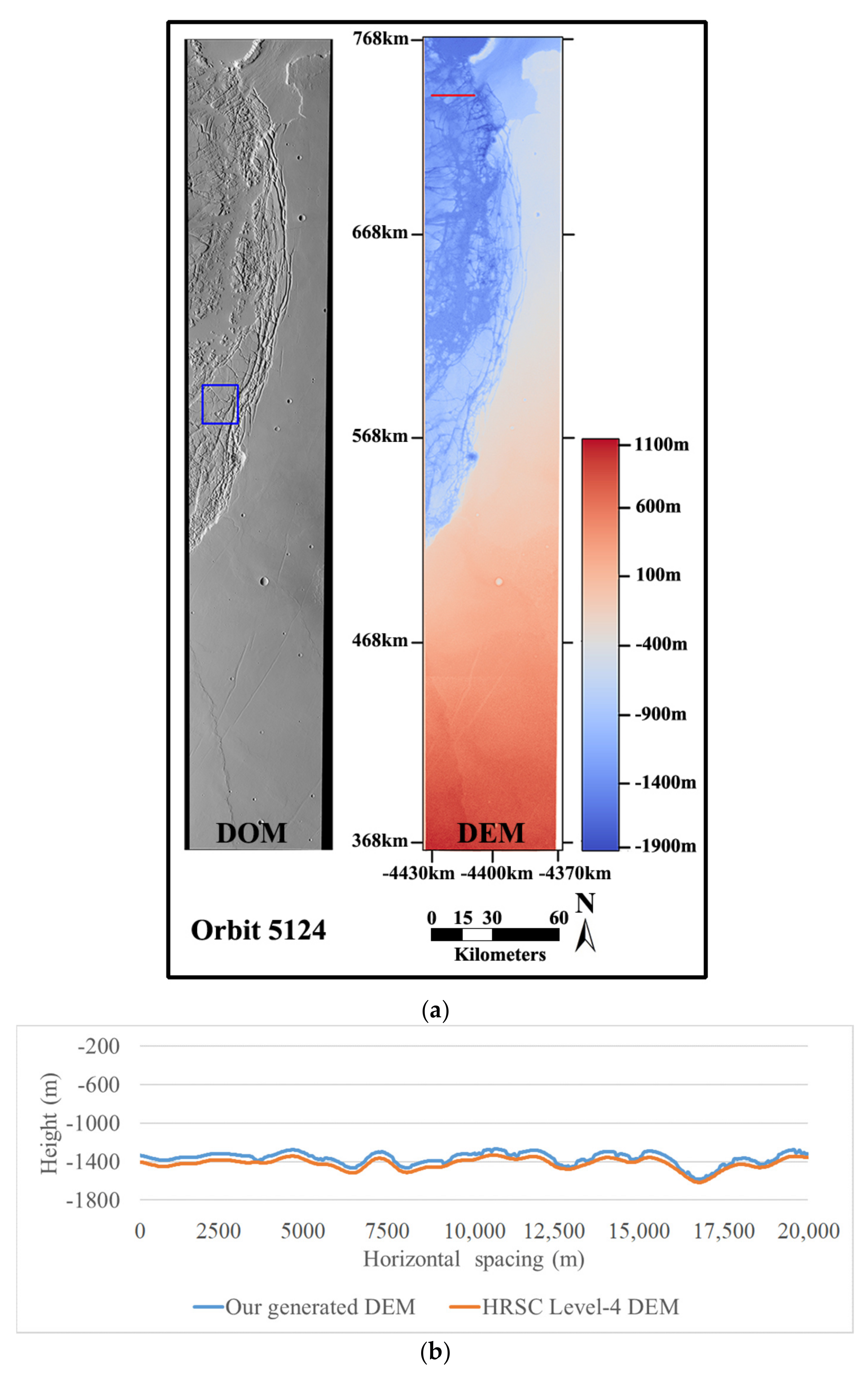

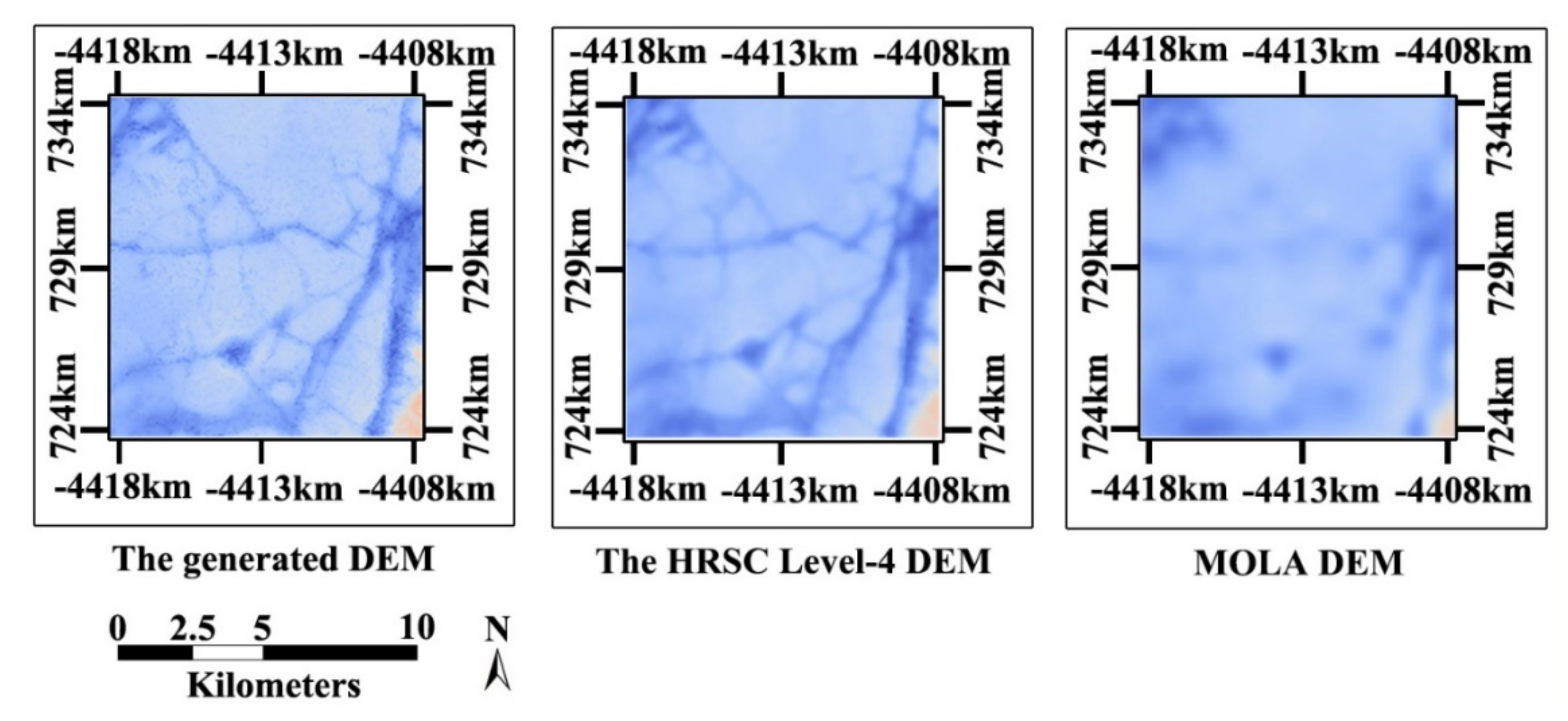

3.2. Experimental Results

3.2.1. Image Matching Results

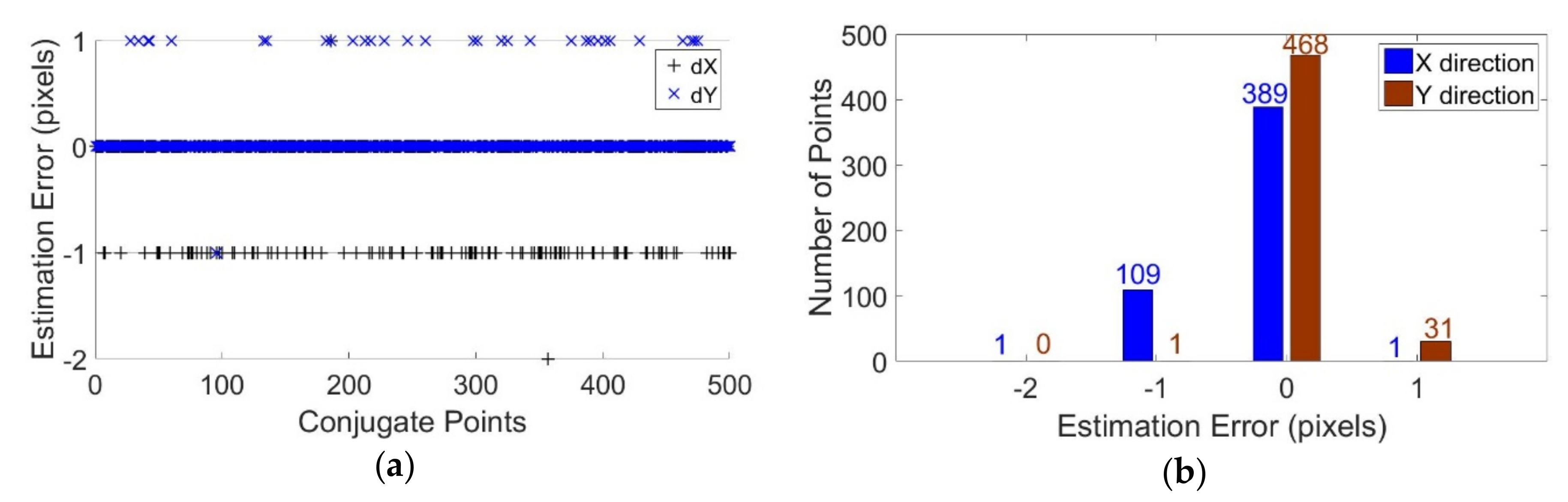

3.2.2. Estimation Accuracy for Approximate Positions of Conjugate Points

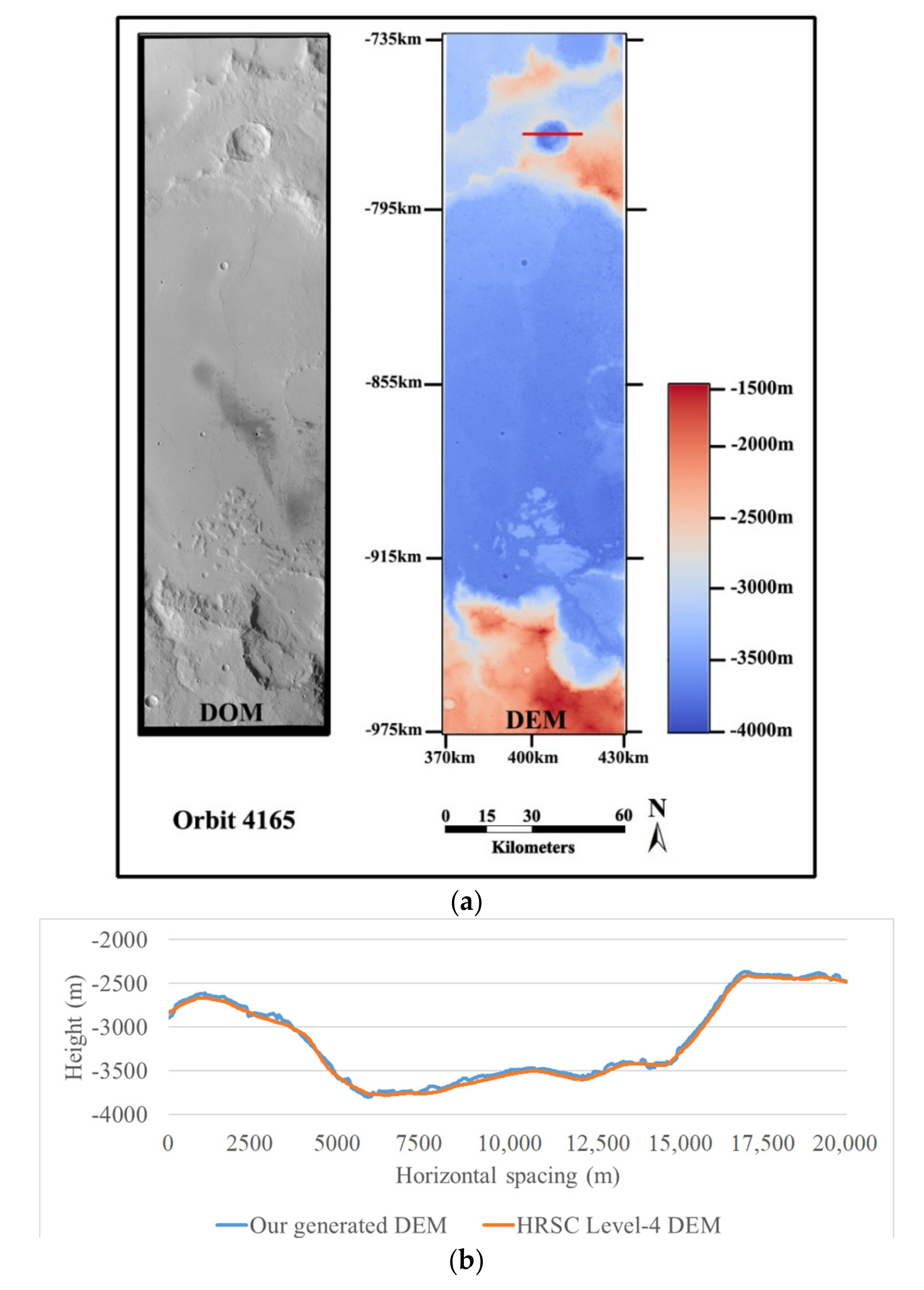

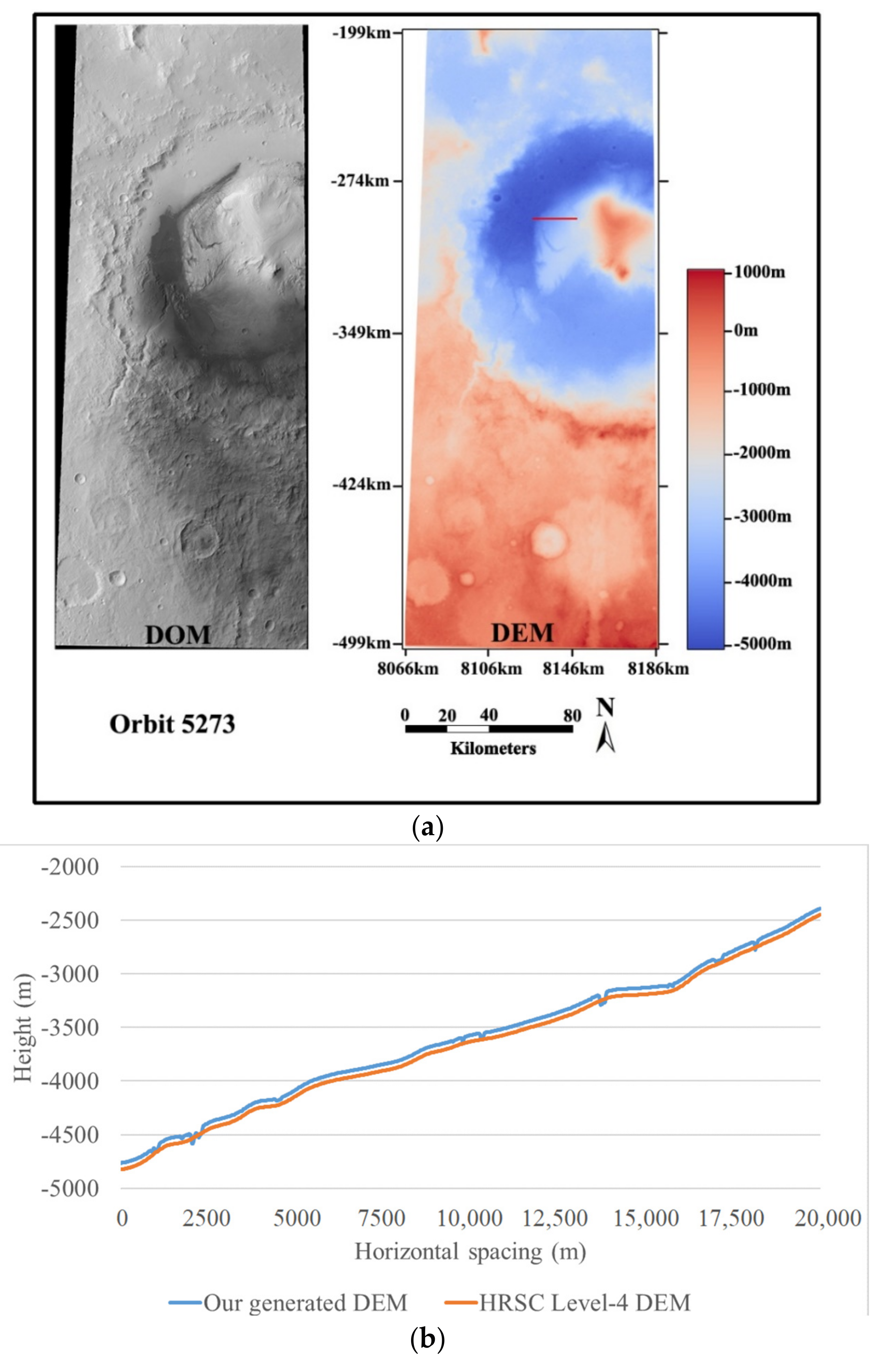

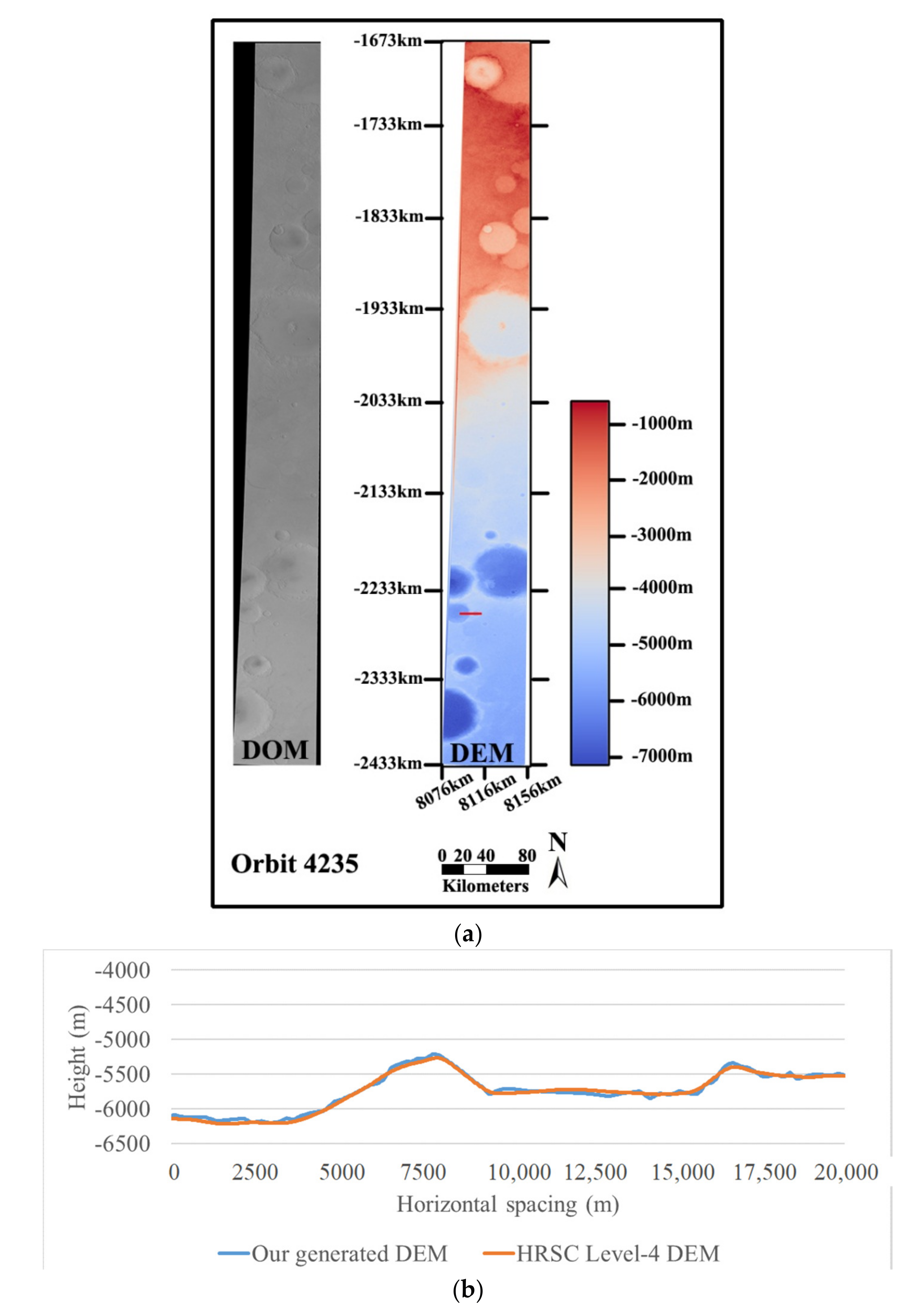

3.2.3. DEM Results

3.2.4. DEM Accuracy Analysis

4. Discussion

5. Conclusions

- (1) We use the DEM derived at the current pyramid level to generate orthophotos at the next pyramid level. Hence, the proposed image matching method makes the fullest possible use of the a priori knowledge available at each pyramid level. This strategy greatly improves the image matching efficiency and accuracy.

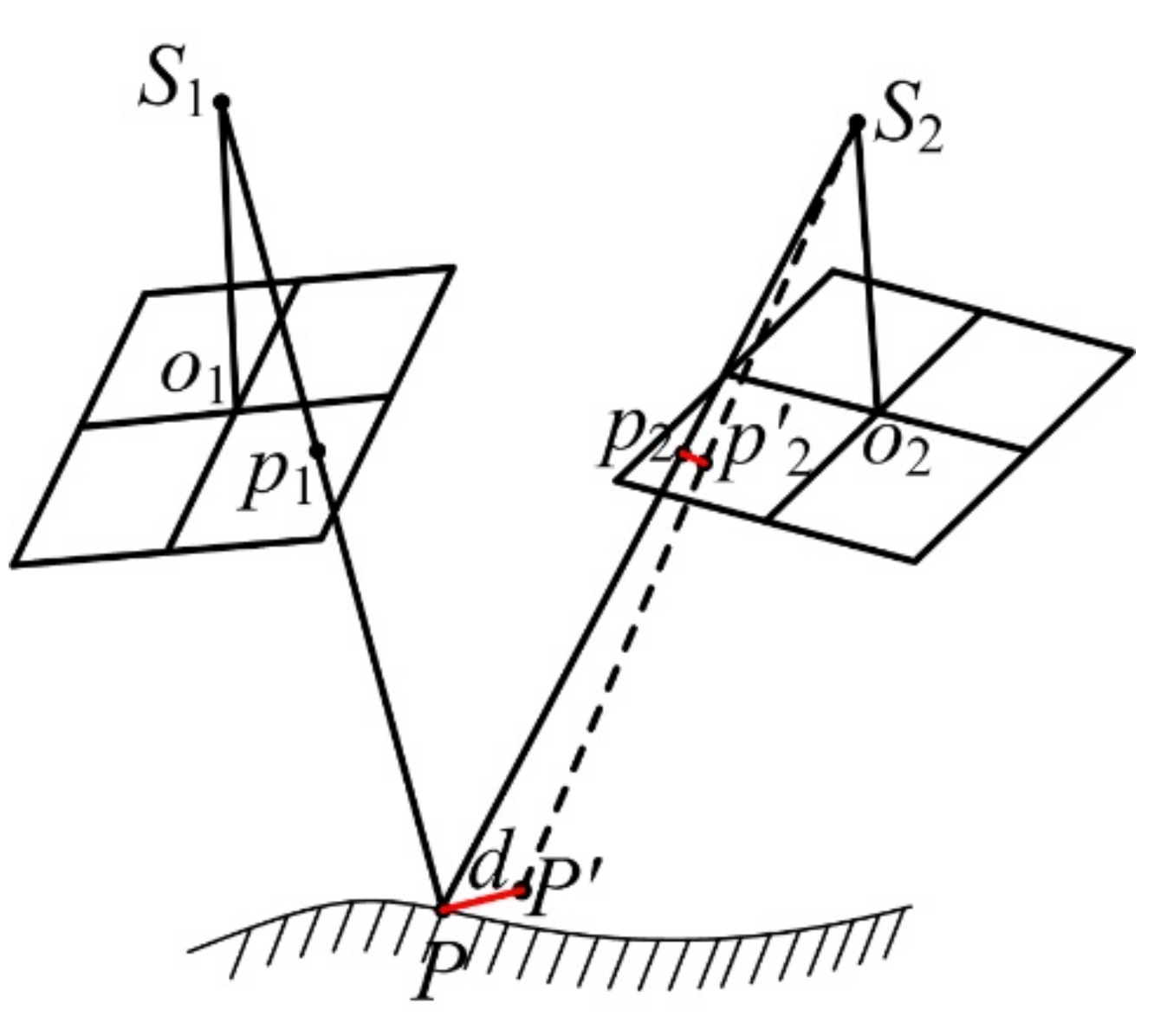

- (2) Although the proposed image matching method does not use epipolar resampling, strong geometric constraints are introduced through image rectification. The results verify that using the pixel coordinates of orthophotos to estimate the approximate positions of conjugate points is a practical method.

- (3) Pixel-level image matching generally requires long processing times. For a typical HRSC stereo pair with about 20,000 scan lines, pixel-level image matching can be completed within three hours on an ordinary personal computer. Hence, the computational efficiency of the proposed method is satisfactory for pixel-level image matching.

- (4) We also noted that the generated DEM has some deficiencies. In low-contrast areas, area-based image matching may fail, resulting in regions without matched points. Additionally, some noise caused by inaccurately matched points needs to be removed by a small amount of manual editing.

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Wu, S.S.C.; Elassal, A.A.; Jordan, R.; Schafer, F.J. Photogrammetric application of Viking orbital photography. Planet. Space Sci. 1982, 30, 45–53.

- Kirk, R.L.; Kraus, E.H.; Rosiek, M. Recent planetary topographic mapping at the USGS, Flagstaff: Moon, Mars, Venus, and beyond. In Proceedings of the International Archives of Photogrammetry, Remote Sensing and Spatial Information Sciences, Amsterdam, The Netherlands, 16–22 July 2000; pp. 476–490.

- Kirk, R.L.; Kraus, E.H.; Redding, B.; Galuszka, D.; Hare, T.M.; Archinal, B.A.; Soderblom, L.A.; Barrett, J.M. High-resolution topomapping of candidate MER landing sites with Mars Orbiter Camera narrow-angle images. J. Geophys. Res. Planets 2003, 108, 8088.

- Kirk, R.L.; Kraus, E.H.; Rosiek, R.M.; Anderson, J.A.; Archinal, B.A.; Becker, K.J.; Cook, D.A.; Galuszka, D.M.; Geissler, P.E.; Hare, T.M.; et al. Ultrahigh resolution topographic mapping of Mars with MRO HiRISE stereo images: Meter-scale slopes of candidate Phoenix landing sites. J. Geophys. Res. Planets 2008, 113, 5578–5579.

- Di, K.C.; Xu, F.; Wang, J.; Agarwal, S.; Brodyagina, E.; Li, R.X.; Matthies, L. Photogrammetric processing of rover imagery of the 2003 Mars Exploration Rover mission. ISPRS J. Photogramm. Remote Sens. 2008, 63, 181–201.

- Wu, S.S.C. Extraterrestrial photogrammetry. In Manual of Photogrammetry, 6th ed.; McGlone, J.C., Ed.; American Society for Photogrammetry and Remote Sensing: Bethesda, MD, USA, 2013; pp. 1139–1152.

- Jones, E.; Caprarelli, G.; Mills, F.P.; Doran, B.; Clarke, J. An alternative approach to mapping thermophysical units from Martian thermal inertia and albedo data using a combination of unsupervised classification techniques. Remote Sens. 2014, 6, 5184–5237.

- Price, M.A.; Ramsey, M.S.; Crown, D.A. Satellite-based thermophysical analysis of volcaniclastic deposits: A terrestrial analog for mantled lava flows on Mars. Remote Sens. 2016, 8, 152.

- Edmundson, K.L.; Cook, D.A.; Thomas, O.H.; Archinal, B.A.; Kirk, R.L. Jigsaw: The ISIS3 bundle adjustment for extraterrestrial photogrammetry. In Proceedings of the International Archives of Photogrammetry, Remote Sensing and Spatial Information Sciences, Melbourne, Australia, 25 August–1 September 2012; pp. 203–208.

- Shean, D.E.; Alexandrov, O.; Moratto, Z.M.; Smith, B.E.; Joughin, I.R.; Porter, C.; Morin, P. An automated, open-source pipeline for mass production of digital elevation models (DEMs) from very-high-resolution commercial stereo satellite imagery. ISPRS J. Photogramm. Remote Sens. 2016, 116, 101–117.

- Albertz, J.; Attwenger, M.; Barrett, J.; Casley, S.; Dorninger, P.; Dorrer, E.; Ebner, H.; Gehrke, S.; Giese, B.; Gwinner, K.; et al. HRSC on Mars Express—Photogrammetric and cartographic research. Photogramm. Eng. Remote Sens. 2005, 71, 1153–1166.

- Scholten, F.; Gwinner, K.; Roatsch, T.; Matz, K.D.; Wahlisch, M.; Giese, B.; Oberst, J.; Jaumann, R.; Neukum, G. The HRSC Co-Investigator Team. Mars Express HRSC data processing—Methods and operational aspects. Photogramm. Eng. Remote Sens. 2005, 71, 1143–1152.

- Rosiek, M.R.; Kirk, R.L.; Archinal, B. Utility of Viking Orbiter images and products for Mars mapping. Photogramm. Eng. Remote Sens. 2005, 71, 1187–1195.

- Shan, J.; Yoon, J.; Lee, D.S.; Kirk, R.L.; Neumann, G.A.; Acton, C.H. Photogrammetric analysis of the Mars Global Surveyor mapping data. Photogramm. Eng. Remote Sens. 2005, 71, 97–108.

- Li, R.X.; Hwangbo, J.; Chen, Y.H.; Di, K.C. Rigorous photogrammetric processing of HiRISE stereo imagery for Mars topographic mapping. IEEE Trans. Geosci. Remote Sens. 2011, 49, 2558–2572.

- Hirschmüller, H.; Mayer, H.; Neukum, G. Stereo processing of HRSC Mars Express images by Semi-Global Matching. In Proceedings of the International Archives of Photogrammetry, Remote Sensing and Spatial Information Sciences, Goa, India, 25–30 September 2006.

- Heipke, C.; Oberst, J.; Albertz, J.; Attwenger, M.; Dorninger, P.; Dorrer, E.; Ewe, M.; Gehrke, S.; Gwinner, K.; Hirschmuller, H. Evaluating planetary digital terrain models—The HRSC DTM test. Planet. Space Sci. 2007, 55, 2173–2191.

- Gwinner, K.; Scholten, F.; Spiegel, M.; Schmidt, R.; Giese, B.; Oberst, J.; Heipke, C.; Jaumann, R.; Neukum, G. Derivation and validation of high-resolution digital terrain models from mars express HRSC data. Photogramm. Eng. Remote Sens. 2009, 75, 1127–1142.

- Sidiropoulos, P.; Muller, J. Batch co-registration of Mars high-resolution images to HRSC MC11-E mosaic. In Proceedings of the International Archives of Photogrammetry, Remote Sensing and Spatial Information Sciences, Prague, Czech Republic, 12–19 July 2016; Volume XLI, Part B4, pp. 491–495.

- Geng, X.; Xu, Q.; Lan, C.Z.; Xing, S. An iterative pixel-level image matching method for Mars mapping using approximate orthophotos. In Proceedings of the International Archives of Photogrammetry, Remote Sensing and Spatial Information Sciences, Hong Kong, China, 13–16 August 2017; pp. 41–48.

- Gruen, A. Development and status of image matching in photogrammetry. Photogramm. Rec. 2012, 27, 36–57.

- Haala, N. The landscape of dense image matching algorithms. In Proceedings of the Photogrammetric Week ’13, Stuttgart, Germany, 9–13 September 2013; pp. 271–284.

- Zhang, Y.F.; Zhang, Y.J.; Mo, D.L.; Zhang, Y.; Li, X. Direct digital surface model generation by semi-global vertical line locus matching. Remote Sens. 2017, 9, 214.

- Shao, Z.; Yang, N.; Xiao, X.; Zhang, L.; Peng, Z. A multi-view dense point cloud generation algorithm based on low-altitude remote sensing images. Remote Sens. 2016, 8, 381–397.

- Zhang, L.; Gruen, A. Multi-image matching for DSM generation from IKONOS imagery. ISPRS J. Photogramm. Remote Sens. 2006, 60, 195–211.

- Hirschmüller, H. Stereo processing by Semi-Global Matching and mutual information. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 328–341.

- Furukawa, Y.; Ponce, J. Accurate, dense, and robust multiview stereopsis. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1362–1376.

- Wenzel, K.; Rothermel, M.; Haala, N.; Fritsch, D. SURE—The ifp software for dense image matching. In Proceedings of the Photogrammetric Week ’13, Stuttgart, Germany, 9–13 September 2013; pp. 59–70.

- Wu, B.; Liu, W.C.; Grumpe, A.; Wohler, C. Shape and albedo from shading (SAfS) for pixel-level DEM generation from monocular images constrained by low-resolution DEM. In Proceedings of the International Archives of Photogrammetry, Remote Sensing and Spatial Information Sciences, Prague, Czech Republic, 12–19 July 2016; Volume XLI, pp. 521–527.

- Acton, C.; Bachman, N.; Semenov, B.; Wright, E. SPICE tools supporting planetary remote sensing. In Proceedings of the International Archives of Photogrammetry, Remote Sensing and Spatial Information Sciences, Prague, Czech Republic, 12–19 July 2016; pp. 357–359.

- Ogohara, K.; Kouyama, T.; Yamamoto, H.; Sato, N.; Takagi, M.; Imamura, T. Automated cloud tracking system for the Akatsuki Venus Climate Orbiter data. Icarus 2012, 217, 661–668.

- Kim, T.; Lee, Y.; Shin, D. Development of a robust algorithm for transformation of a 3D object point onto a 2D image point for linear pushbroom imagery. Photogramm. Eng. Remote Sens. 2001, 67, 449–452.

- Wang, M.; Hu, F.; Li, J.; Pan, J. A fast approach to best scanline search of airborne linear pushbroom images. Photogramm. Eng. Remote Sens. 2009, 75, 1059–1067.

- Zhao, S.M.; Li, D.R.; Mou, L.L. Inconsistency analysis of CE-1 stereo camera images and laser altimeter data. Acta Geod. Cartogr. Sin. 2011, 40, 751–755.

- Geng, X.; Xu, Q.; Xing, S.; Lan, C.Z.; Hou, Y.F. Differential rectification of linear pushbroom imagery based on the fast algorithm for best scan line searching. Acta Geod. Cartogr. Sin. 2013, 42, 861–868.

- Kim, T. A study on the epipolarity of linear pushbroom images. Photogramm. Eng. Remote Sens. 2000, 66, 961–966.

- Morgan, M.; Kim, K.; Jeong, S.; Habib, A. Epipolar resampling of space-borne linear array scanner scenes using parallel projection. Photogramm. Eng. Remote Sens. 2006, 72, 1255–1263.

- Wang, M.; Hu, F.; Li, J. Epipolar resampling of linear pushbroom satellite imagery by a new epipolarity model. ISPRS J. Photogramm. Remote Sens. 2011, 66, 347–355.

- Afsharnia, H.; Arefi, H.; Sharifi, M.A. Optimal weight design approach for the geometrically-constrained matching of satellite stereo images. Remote Sens. 2017, 9, 965.

- Liu, B.; Jia, M.N.; Di, K.C.; Oberst, J.; Xu, B.; Wan, W.H. Geopositioning precision analysis of multiple image triangulation using LROC NAC lunar images. Planet. Space Sci. 2017, in press.

- DGAP Software. Available online: http://www.ifp.uni-stuttgart.de/publications/software/openbundle/index.en.html (accessed on 10 November 2017).

| Instrument Name | Focal Length (mm) | Boresight Sample (Pixels) |
|---|---|---|
| MEX_HRSC_S2 | 174.80 | 2588.7635 |
| MEX_HRSC_RED | 174.61 | 2585.3891 |
| MEX_HRSC_P2 | 174.74 | 2589.3140 |
| MEX_HRSC_BLUE | 175.01 | 2602.0075 |
| MEX_HRSC_NADIR | 175.01 | 2597.3376 |
| MEX_HRSC_GREEN | 175.23 | 2598.8432 |
| MEX_HRSC_P1 | 174.80 | 2597.1421 |
| MEX_HRSC_IR | 174.82 | 2595.9317 |
| MEX_HRSC_S1 | 174.87 | 2588.7635 |

| ET Time (s) | Line Exposure Duration (s) | Line Start |
|---|---|---|
| 255818927.127810 | 0.005013 | 1 |
| 255818938.197460 | 0.005120 | 2209 |
| 255818950.649500 | 0.005227 | 4641 |
| 255818962.691930 | 0.005333 | 6945 |
| 255818974.638750 | 0.005440 | 9185 |
| 255818986.128180 | 0.005547 | 11,297 |
| 255818997.487880 | 0.005653 | 13,345 |
| 255819008.704190 | 0.005760 | 15,329 |
| 255819019.394850 | 0.005867 | 17,185 |
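The table above lists HRSC scan-line timing segments of the kind obtained with tabledump: each row gives an ephemeris time (ET), the exposure duration of every line in that segment, and the first line of the segment. A minimal sketch of the time-of-line lookup that the rigorous model needs is shown below, assuming that the ET value refers to the first line of each segment and that lines are numbered from 1; any half-line (pixel-centre) convention used by ISIS is ignored, and the function names are hypothetical. As a consistency check, 2208 lines × 0.005013 s ≈ 11.069 s, close to the roughly 11.070 s between the first two ET values.

```python
import bisect

# (ET at segment start in s, line exposure duration in s, first line of segment)
LINE_SCAN_TIMES = [
    (255818927.127810, 0.005013, 1),
    (255818938.197460, 0.005120, 2209),
    (255818950.649500, 0.005227, 4641),
    (255818962.691930, 0.005333, 6945),
    (255818974.638750, 0.005440, 9185),
    (255818986.128180, 0.005547, 11297),
    (255818997.487880, 0.005653, 13345),
    (255819008.704190, 0.005760, 15329),
    (255819019.394850, 0.005867, 17185),
]
_FIRST_LINES = [row[2] for row in LINE_SCAN_TIMES]
_START_ETS = [row[0] for row in LINE_SCAN_TIMES]


def line_to_et(line):
    """ET (s) at which a (possibly fractional) 1-based line was exposed."""
    i = bisect.bisect_right(_FIRST_LINES, line) - 1
    if i < 0:
        raise ValueError("line precedes the first timing segment")
    et0, duration, first_line = LINE_SCAN_TIMES[i]
    return et0 + (line - first_line) * duration


def et_to_line(et):
    """Inverse lookup: fractional line number exposed at ephemeris time et."""
    i = bisect.bisect_right(_START_ETS, et) - 1
    if i < 0:
        raise ValueError("time precedes the first timing segment")
    et0, duration, first_line = LINE_SCAN_TIMES[i]
    return first_line + (et - et0) / duration
```

The last segment has no end marker in the table, so neither function checks an upper bound; a real implementation would also need the image's line count. Python floats resolve ET values of this size to about 3e-8 s, far below one line duration.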

| Channel | Traditional ISI: Maximum Error (pixels) | Traditional ISI: Number of Iterations | Traditional ISI: CT1 (ms) | Fast Back-Projection: Maximum Error (pixels) | Fast Back-Projection: Number of Iterations | Fast Back-Projection: CT2 (ms) | Speed-Up Ratio (CT1/CT2) |
|---|---|---|---|---|---|---|---|
| S1 | 0.00087 | 12–15 | 16,399 | 0.00086 | 3–7 | 832 | 19.71 |
| S2 | 0.00090 | 12–15 | 16,407 | 0.00093 | 3–7 | 844 | 19.44 |

| Matching Strategies | The Proposed Method | HRSC Team’s Method |
|---|---|---|
| Is matching performed on orthophotos? | Yes | Yes |
| Is area-based image matching used? | Yes | Yes |
| Is multi-view image matching used? | No | Yes |
| Is pixel-level image matching used? | Yes | No |
| Geometric constraints | Image rectification | Quasi-epipolar line |
| Back-projection method | A fast back-projection method | Not presented in detail |

| Orbit Number | Date and Time | GSD (m/Pixel) | Scan Lines (Samples × Lines) | Incidence Angle | Emission Angle | Matching Time (Hours) |
|---|---|---|---|---|---|---|
| 4165 | 3 April 2007 (22:34:32–22:36:29) | 12.8 | S1: 5176 × 22,000; S2: 5176 × 21,920 | S1: 64.87°; S2: 64.67° | S1: 20.35°; S2: 21.12° | 2.58 |
| 5273 | 9 February 2008 (08:47:41–08:51:39) | 23.1 | S1: 5176 × 18,544; S2: 5176 × 19,184 | S1: 50.95°; S2: 51.39° | S1: 21.74°; S2: 23.13° | 1.96 |
| 4235 | 23 April 2007 (12:51:12–12:55:26) | 25.7 | S1: 2584 × 32,672; S2: 2584 × 32,920 | S1: 49.93°; S2: 49.75° | S1: 20.77°; S2: 21.55° | 1.85 |
| 5124 | 28 December 2007 (21:14:42–21:17:28) | 27.5 | S1: 2584 × 16,976; S2: 2584 × 16,816 | S1: 68.65°; S2: 68.89° | S1: 20.58°; S2: 21.11° | 0.89 |

| Orbit Number | Height Displacement, Maximum (m) | Height Displacement, Mean (m) | Height Displacement, RMSE (m) | Maximum (Pixels of GSD) | Mean (Pixels of GSD) | RMSE (Pixels of GSD) |
|---|---|---|---|---|---|---|
| 4165 | 81.6 | 25.4 | 31.3 | 6.3 | 2.0 | 2.4 |
| 5273 | 76.3 | 30.5 | 34.5 | 3.1 | 1.2 | 1.4 |
| 4235 | 93.8 | 25.1 | 30.2 | 3.8 | 1.0 | 1.2 |
| 5124 | 79.2 | 40.9 | 28.6 | 3.2 | 1.6 | 1.1 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Geng, X.; Xu, Q.; Xing, S.; Lan, C.; Xu, J. A Novel Pixel-Level Image Matching Method for Mars Express HRSC Linear Pushbroom Imagery Using Approximate Orthophotos. Remote Sens. 2017, 9, 1262. https://doi.org/10.3390/rs9121262
