Supervised Sub-Pixel Mapping for Change Detection from Remotely Sensed Images with Different Resolutions

Abstract

1. Introduction

2. The Fractional Difference Image

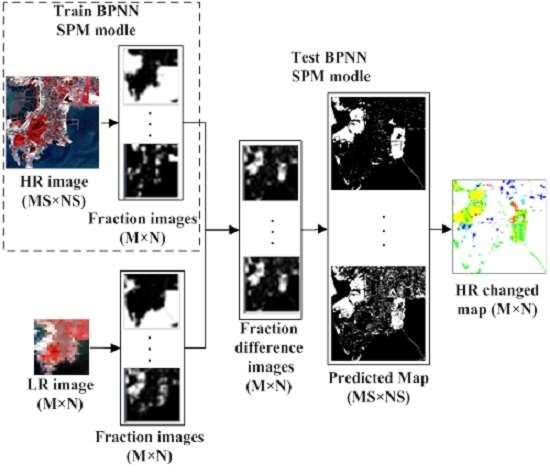

2.1. The Endmember Combination of the LR Image

2.2. Computation of the Differences in the Proportions

3. Sub-Pixel Mapping for SLCCD_DR Based on BPNN

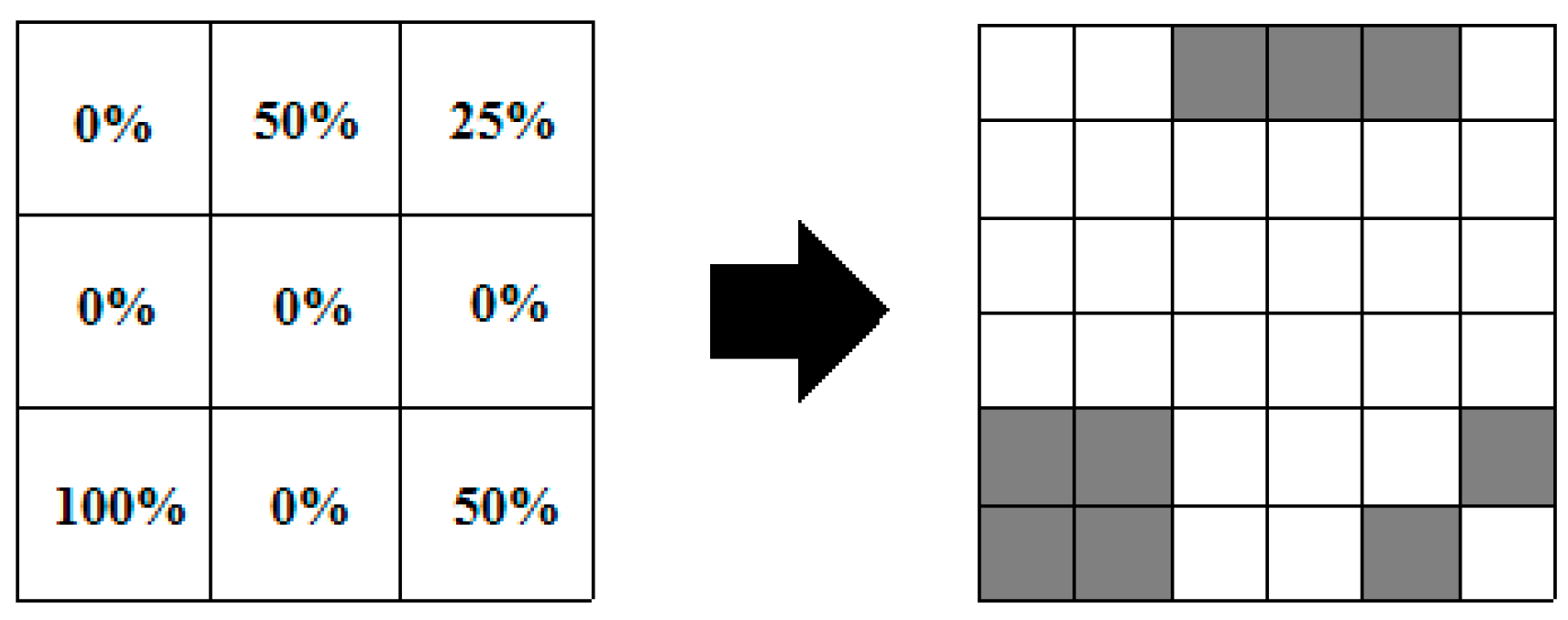

3.1. Sub-Pixel Mapping

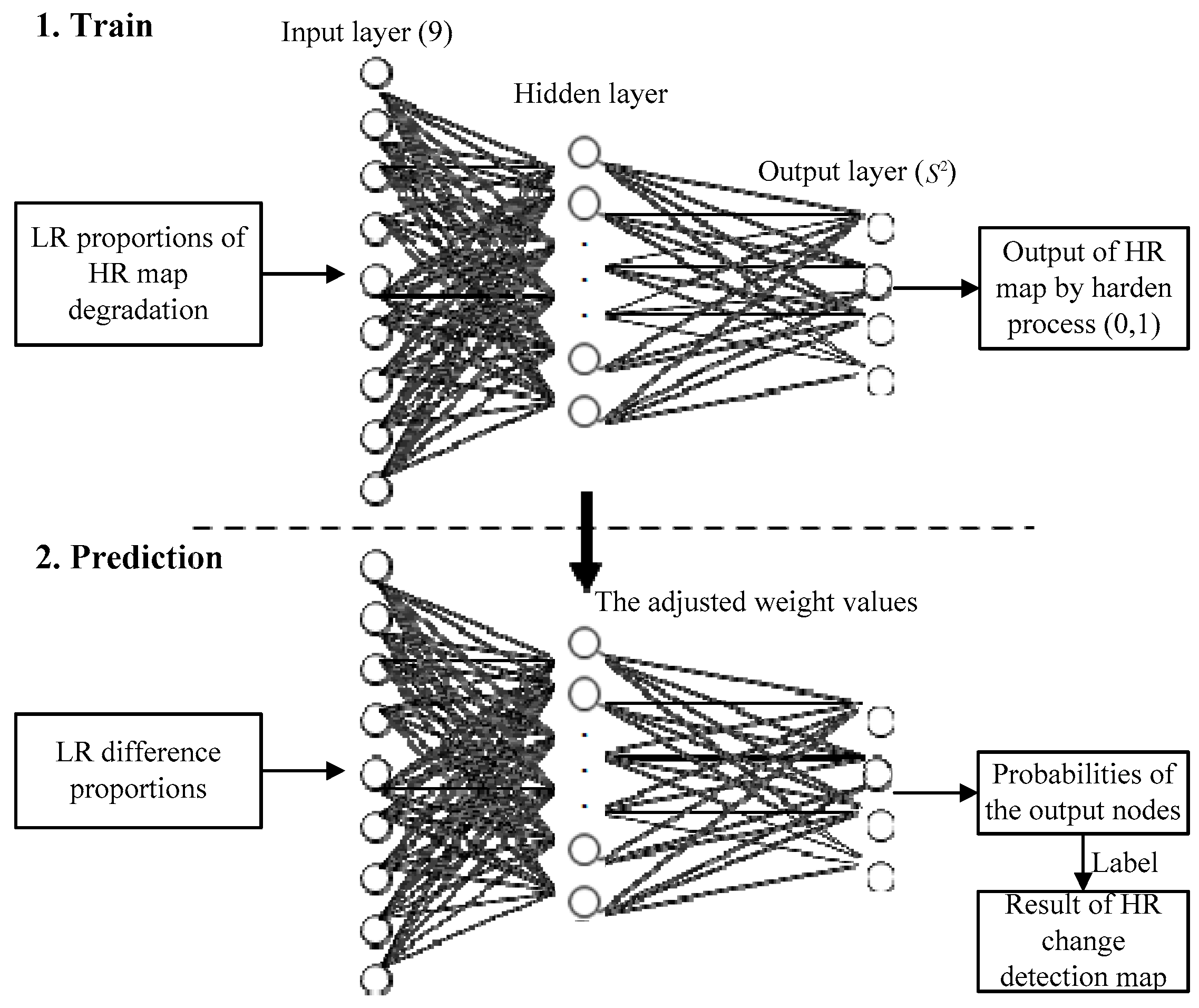

3.2. BPNN-Based Sub-Pixel Mapping Model

3.3. The Architecture of BPNN for SLCCD_DR

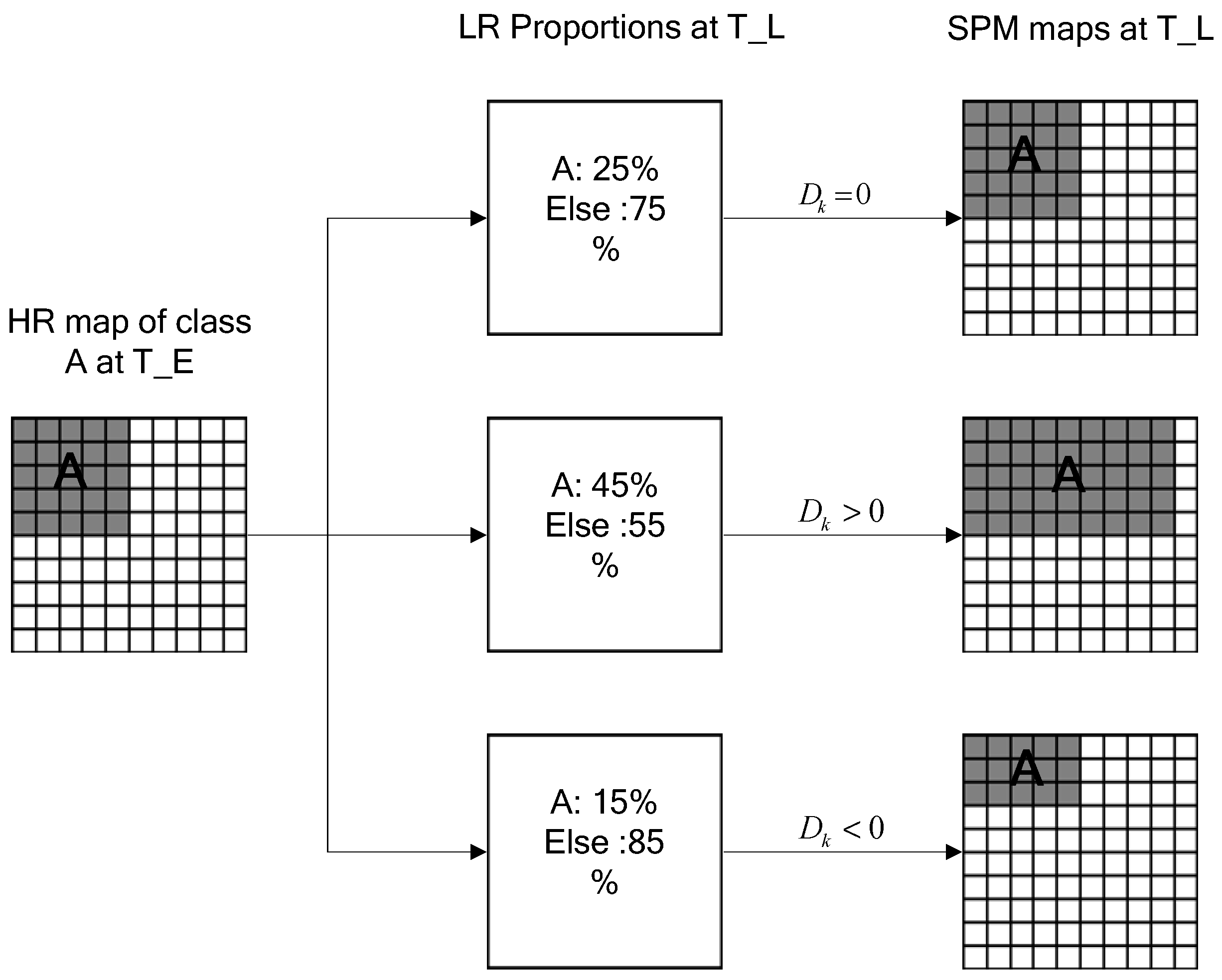

3.4. The SLCCD_DR Hypothesis

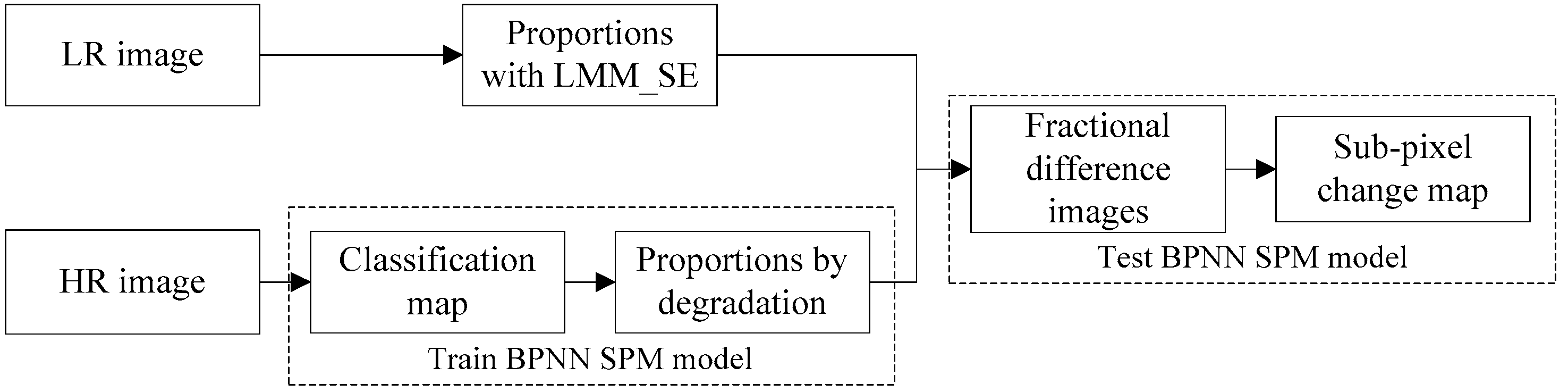

4. The Flowchart of the Proposed Algorithm

5. Experiments and Analysis

5.1. Synthetic Data Test

5.1.1. Effects of the LMM_SE

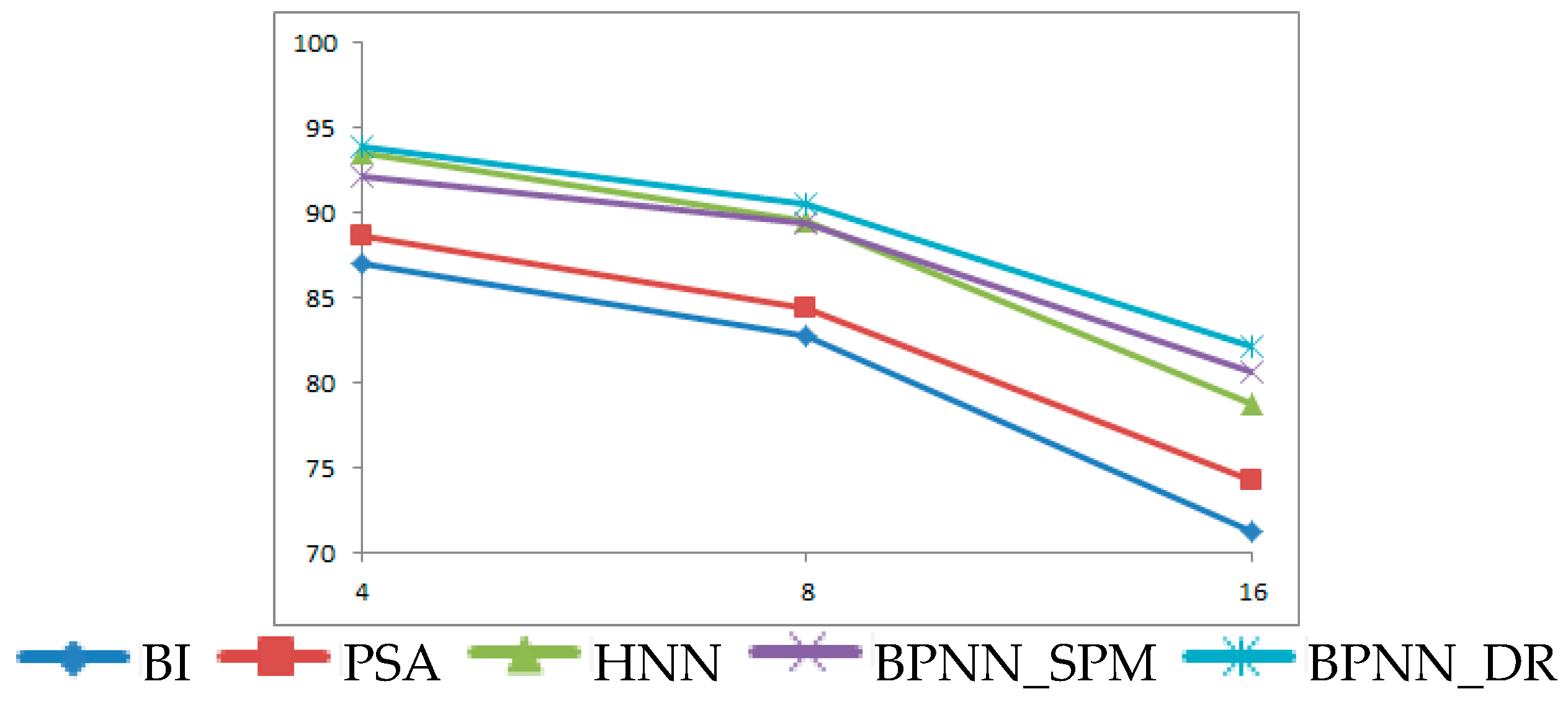

5.1.2. Comparison of the Different SLCCD_DR Methods

5.1.3. Different Scale and Computational Efficiency

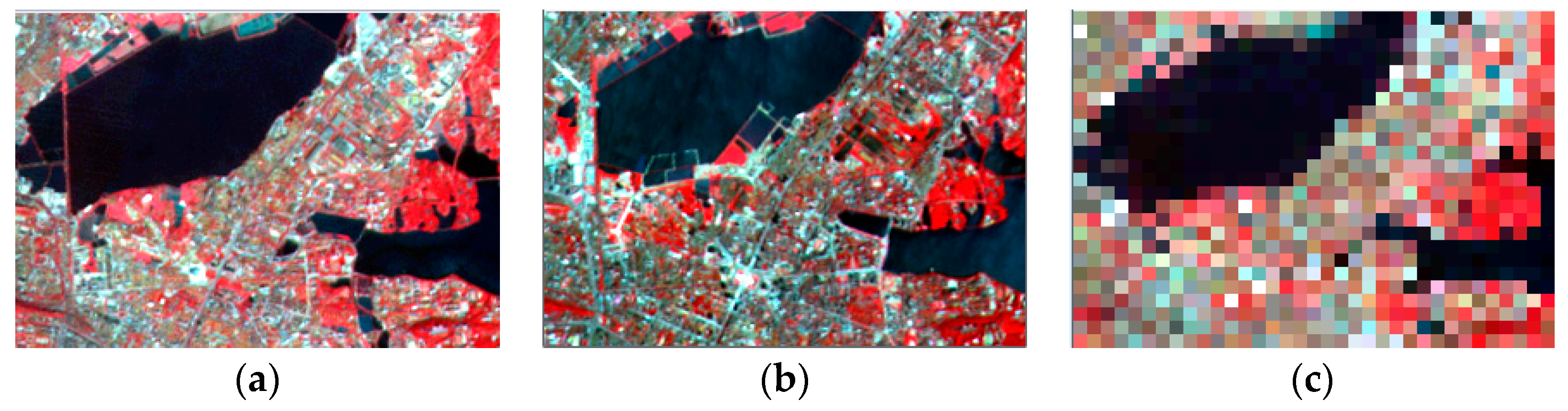

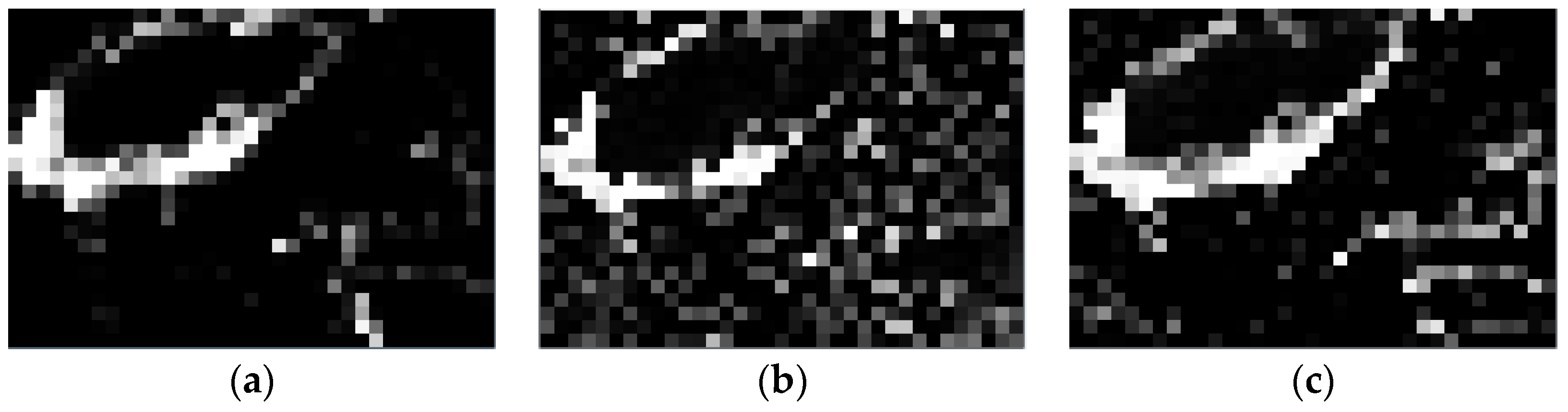

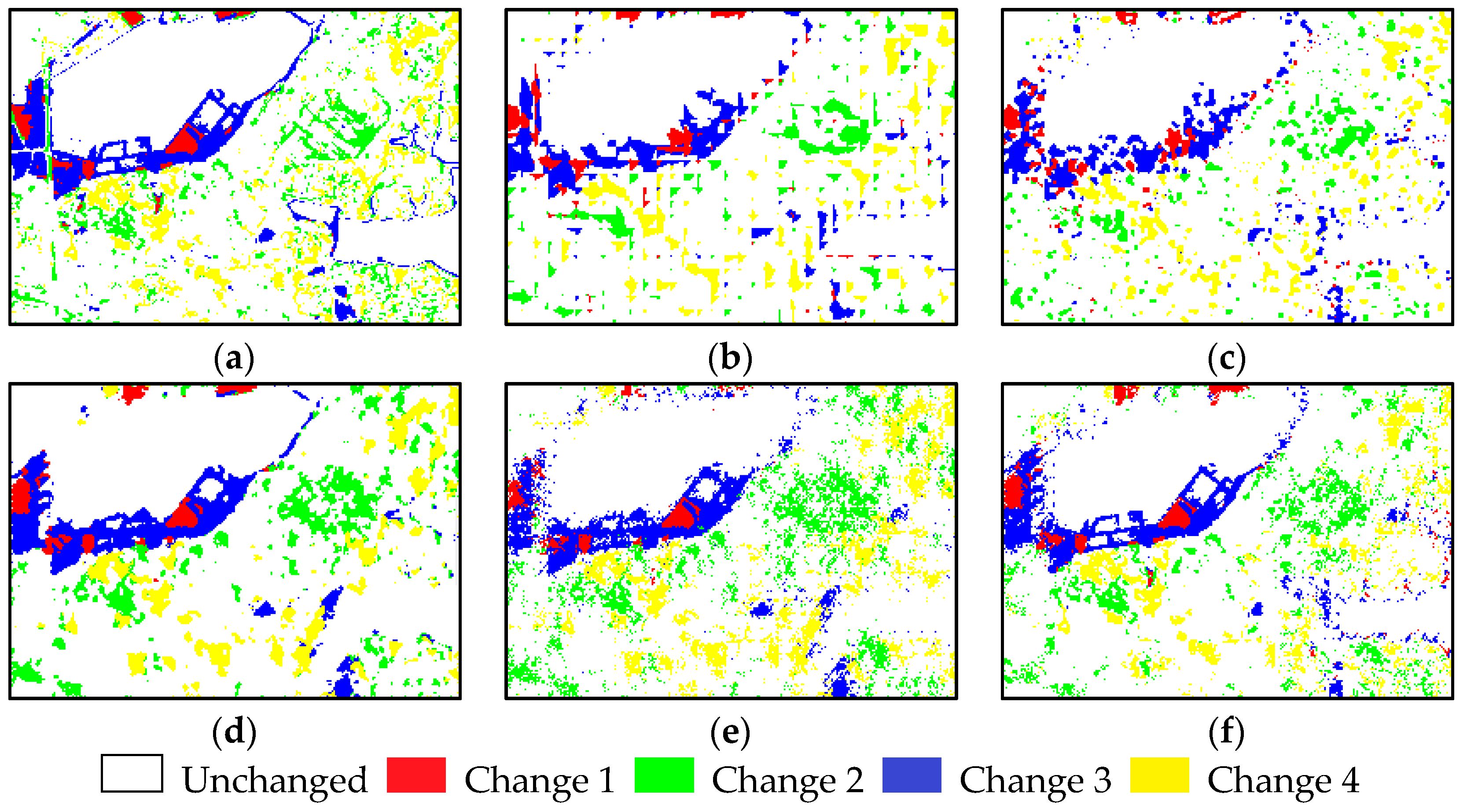

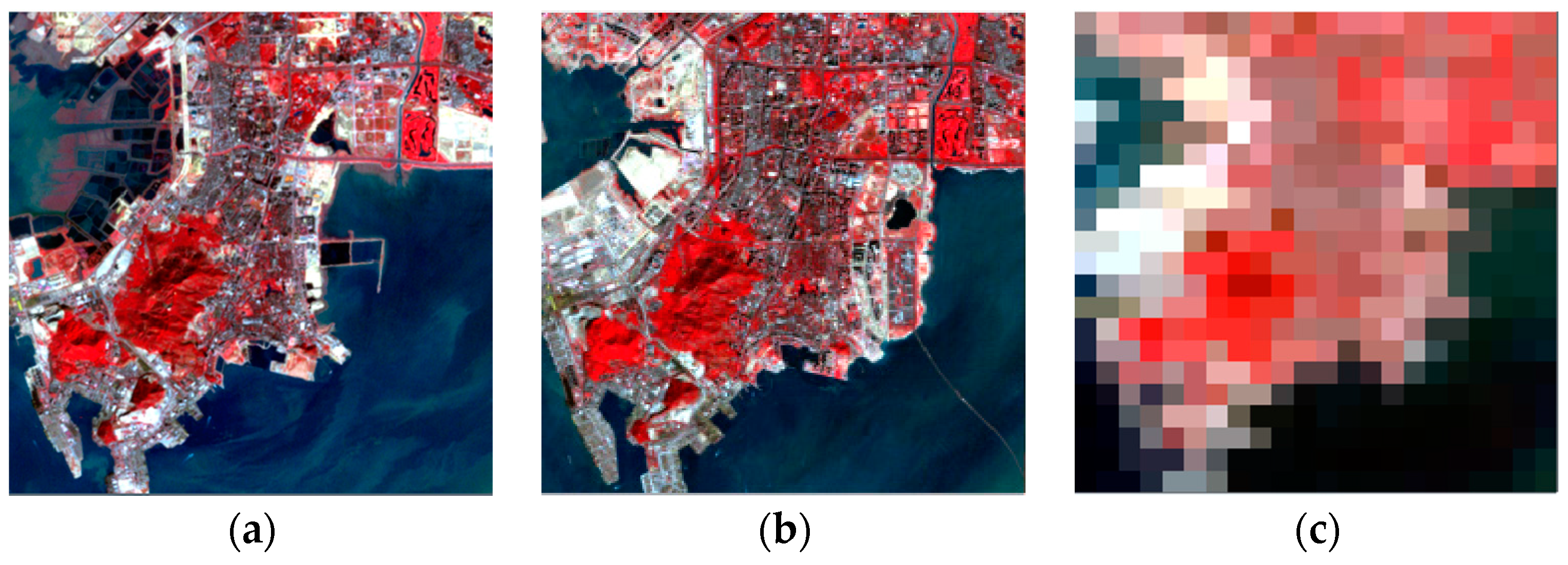

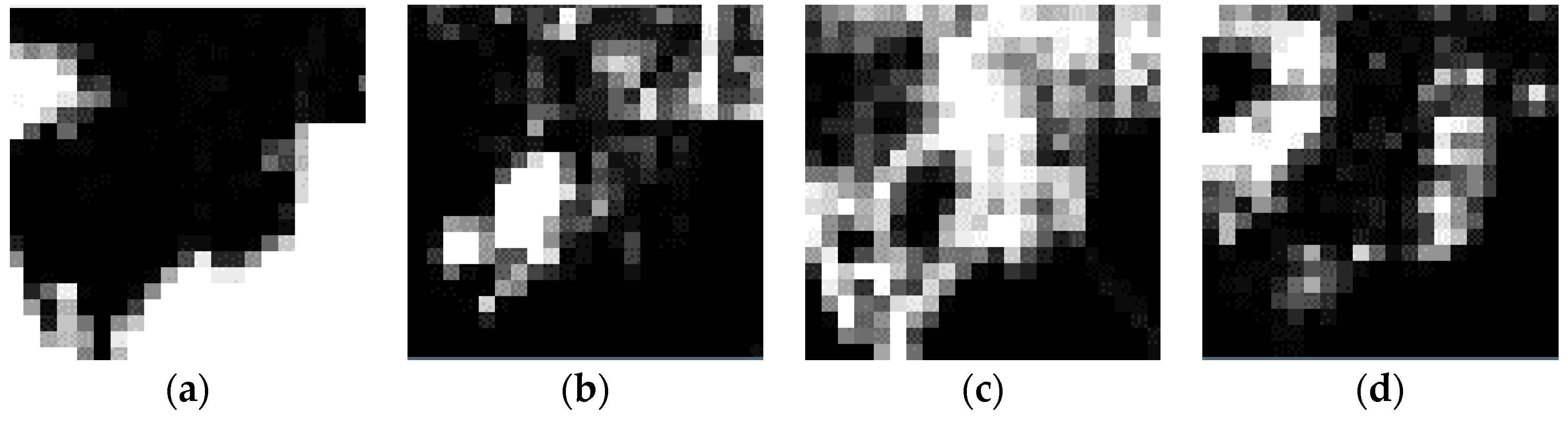

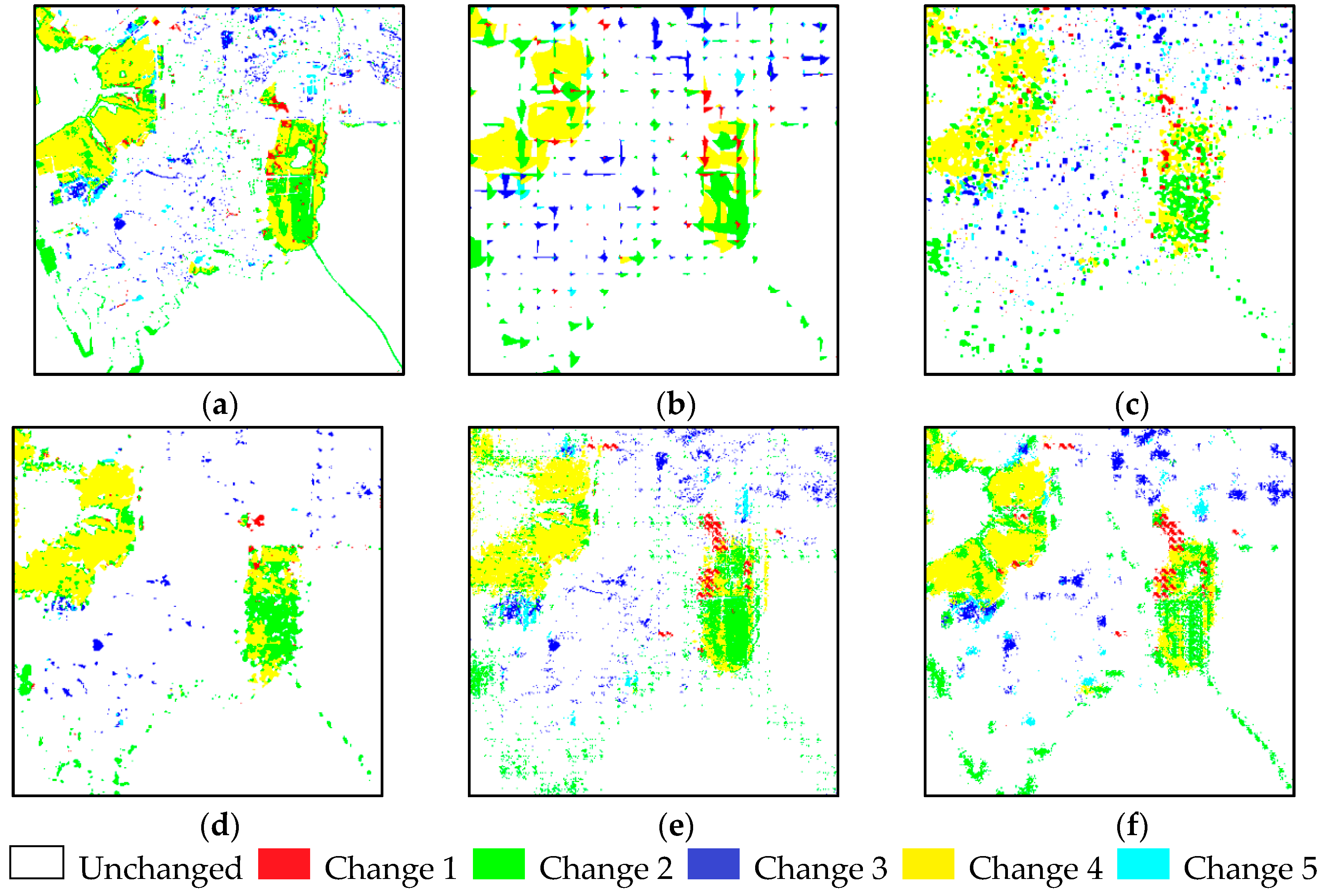

5.2. Real Data Experiment

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| BI | Bilinear interpolation-based method |
| BPNN_DR | SLCCD approach based on BPNN with different resolution images |
| BPNN_SPM | SLCCD approach based on the SPM result of BPNN |
| BPNN | Back propagation neural network |
| CCSM | Cross-correlogram spectral matching |
| CD | Change detection |
| EM | Expectation maximization |
| HNN | Hopfield neural network |
| HR | High resolution |
| LCCD | Land-cover change detection |
| LMM | Linear mixture model |
| LR | Low resolution |
| PSA | Pixel swapping attraction |
| RMSE | Root mean squared error |
| SC | Soft classification |
| SE | Selective endmember |
| LMM_SE | Linear mixture model with selective endmember |
| SLCCD | Sub-pixel land-cover change detection |
| SLCCD_DR | Sub-pixel land-cover change detection with different resolution images |
| SPM | Sub-pixel mapping |

| | Water | Vegetation | Urban |
|---|---|---|---|
| LMM | 0.345 | 0.304 | 0.307 |
| LMM_SE | 0.168 | 0.299 | 0.293 |

| | | BI | PSA | HNN | BPNN_SPM | BPNN_DR |
|---|---|---|---|---|---|---|
| Omission Error | Change 1 | 23.1% | 26.8% | 14.9% | 19.4% | 11.9% |
| | Change 2 | 25.1% | 18.4% | 13.4% | 15.1% | 14.4% |
| | Change 3 | 17.8% | 16.6% | 7.9% | 8.8% | 7.3% |
| | Change 4 | 7.9% | 7.4% | 9.9% | 7.9% | 7.1% |
| Commission Error | Change 1 | 51.9% | 48.8% | 31.5% | 26.9% | 28.7% |
| | Change 2 | 8.5% | 5.8% | 3.5% | 6.4% | 6.1% |
| | Change 3 | 12.9% | 12.2% | 7.5% | 8.7% | 5.3% |
| | Change 4 | 5.5% | 6.3% | 13.4% | 12.8% | 10.0% |
| OA | | 82.8% | 84.4% | 89.5% | 89.4% | 90.5% |
| Kappa | | 0.78 | 0.80 | 0.85 | 0.85 | 0.87 |

| Time (ms) | BI | PSA | HNN | BPNN_SPM | BPNN_DR |
|---|---|---|---|---|---|
| S = 4 | 9,142 | 28,172 | 37,532 | 42,932 | 41,874 |
| S = 8 | 15,652 | 41,723 | 57,623 | 68,234 | 61,234 |
| S = 16 | 23,872 | 68,987 | 88,234 | 92,342 | 84,623 |

| | | BI | PSA | HNN | BPNN_SPM | BPNN_DR |
|---|---|---|---|---|---|---|
| Omission Error | Change 1 | 82.6% | 75.2% | 73.1% | 50.6% | 46.8% |
| | Change 2 | 41.3% | 33.9% | 36.1% | 30.5% | 17.3% |
| | Change 3 | 28.6% | 20.6% | 23.4% | 7.2% | 5.3% |
| | Change 4 | 26.0% | 23.8% | 16.4% | 18.4% | 16.2% |
| | Change 5 | 73.5% | 72.7% | 73.8% | 49.2% | 36.1% |
| Commission Error | Change 1 | 79.0% | 79.8% | 69.1% | 66.0% | 62.0% |
| | Change 2 | 51.8% | 45.7% | 38.2% | 33.4% | 29.8% |
| | Change 3 | 31.9% | 33.4% | 18.7% | 12.6% | 14.7% |
| | Change 4 | 19.4% | 15.7% | 14.8% | 14.9% | 7.6% |
| | Change 5 | 52.9% | 59.8% | 81.9% | 40.8% | 30.9% |
| OA | | 67.3% | 71.3% | 75.8% | 77.6% | 80.2% |
| Kappa | | 0.62 | 0.67 | 0.71 | 0.73 | 0.76 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wu, K.; Du, Q.; Wang, Y.; Yang, Y. Supervised Sub-Pixel Mapping for Change Detection from Remotely Sensed Images with Different Resolutions. Remote Sens. 2017, 9, 284. https://doi.org/10.3390/rs9030284
