Segment-before-Detect: Vehicle Detection and Classification through Semantic Segmentation of Aerial Images

Abstract

1. Introduction

2. Related Work

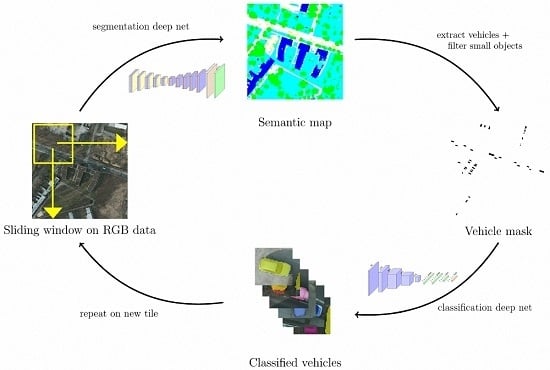

3. Proposed Method

- Semantic segmentation to infer pixel-level class masks using a fully convolutional network;

- Vehicle detection by regressing the bounding boxes of connected components;

- Object-level classification with a traditional convolutional neural network.
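
The second step above can be illustrated with a minimal sketch: given a binary vehicle mask (assumed here to come from the segmentation step), each connected component is enclosed in an axis-aligned box. The function name `connected_component_boxes`, the choice of 4-connectivity, and the toy mask are assumptions made for illustration, not the authors' implementation. The sketch simply takes the tight box around each component, which is a simplification of the bounding-box regression named in the list.

```python
from collections import deque


def connected_component_boxes(mask):
    """Return one (row_min, col_min, row_max, col_max) box per 4-connected blob."""
    height = len(mask)
    width = len(mask[0]) if height else 0
    seen = [[False] * width for _ in range(height)]
    boxes = []
    for r in range(height):
        for c in range(width):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first flood fill over the blob that contains (r, c).
            queue = deque([(r, c)])
            seen[r][c] = True
            r0, c0, r1, c1 = r, c, r, c
            while queue:
                y, x = queue.popleft()
                r0, c0 = min(r0, y), min(c0, x)
                r1, c1 = max(r1, y), max(c1, x)
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    inside = 0 <= ny < height and 0 <= nx < width
                    if inside and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            boxes.append((r0, c0, r1, c1))
    return boxes


# Toy mask (hypothetical): 1 marks pixels labelled "vehicle".
vehicle_mask = [
    [0, 1, 1, 0, 0, 0],
    [0, 1, 1, 0, 0, 1],
    [0, 0, 0, 0, 0, 1],
    [1, 0, 0, 0, 0, 0],
]
boxes = connected_component_boxes(vehicle_mask)
print(boxes)  # [(0, 1, 1, 2), (1, 5, 2, 5), (3, 0, 3, 0)]
```

Each box could then be cropped from the image and passed to the object-level classifier of the third step.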

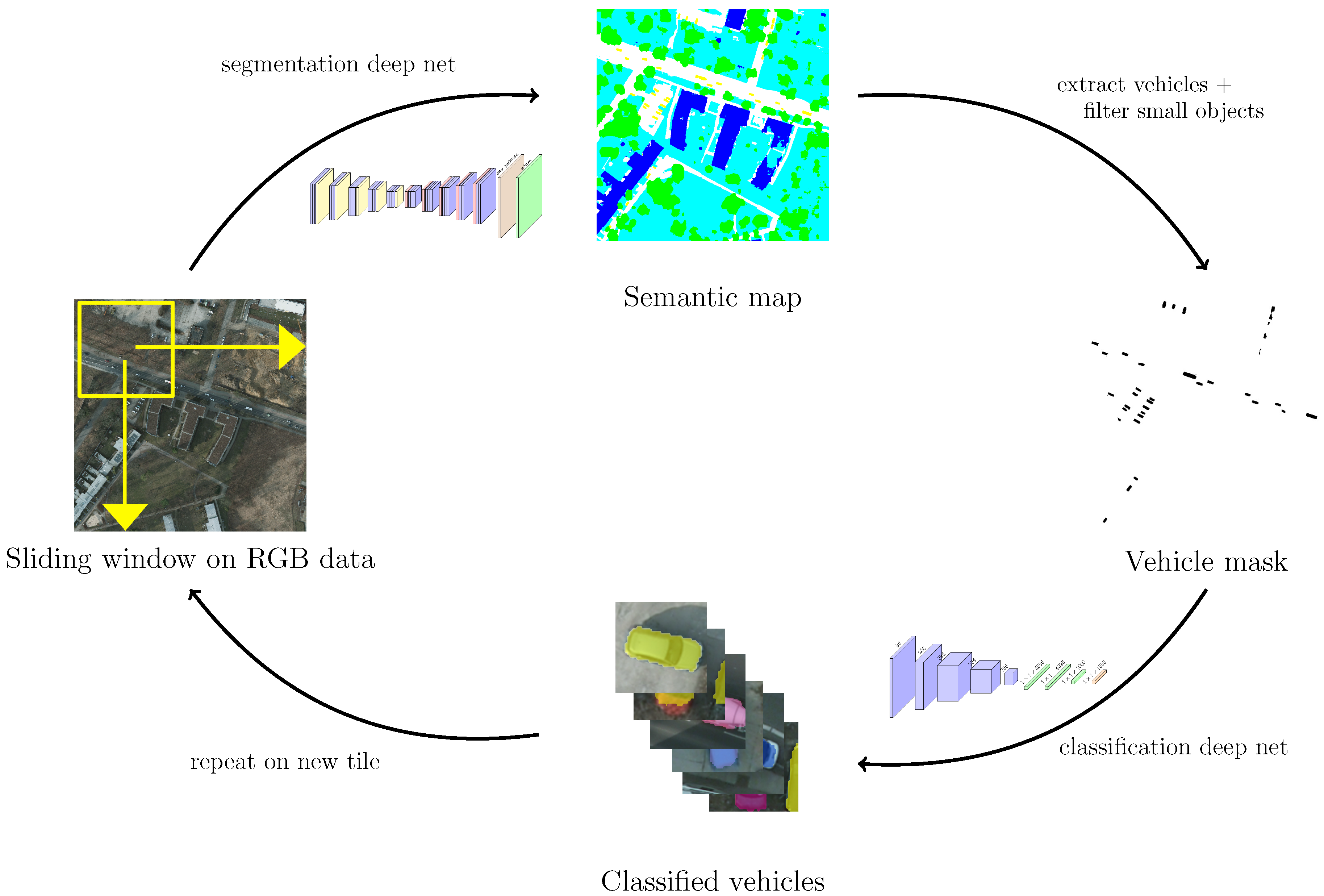

3.1. SegNet for Semantic Segmentation

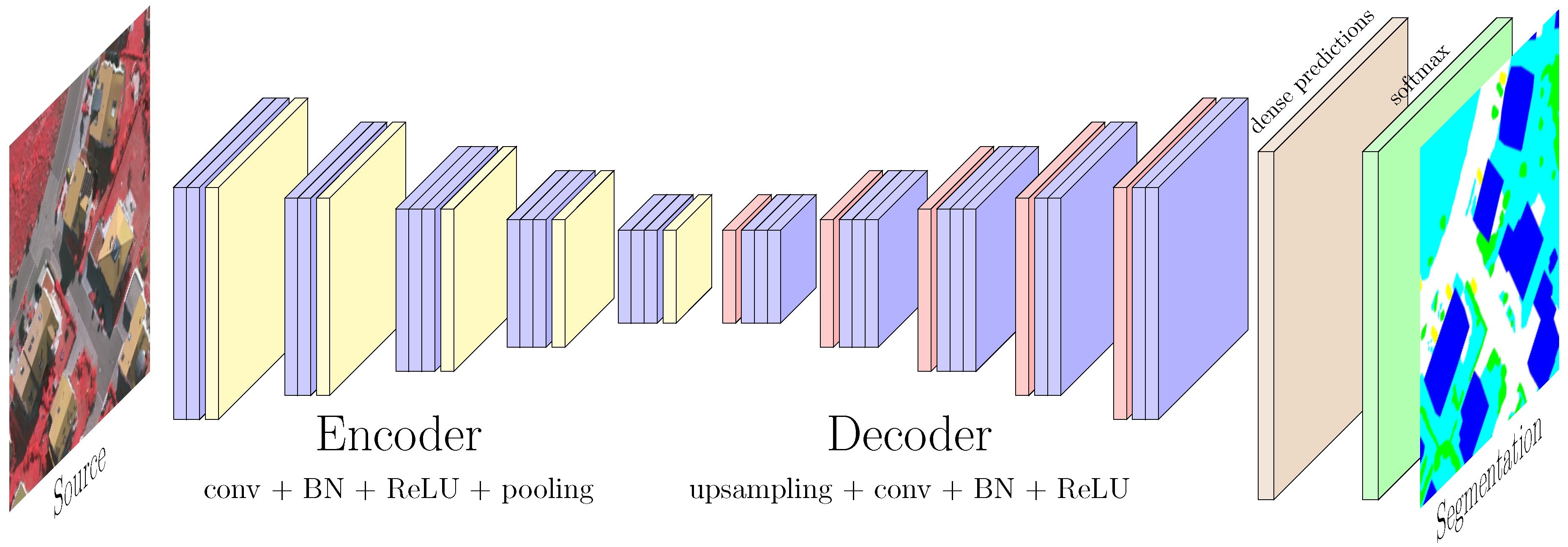

3.2. Small Object Detection

3.3. CNN-Based Vehicle Classification

4. Experiments

4.1. Datasets

4.1.1. VEDAI

4.1.2. ISPRS Potsdam

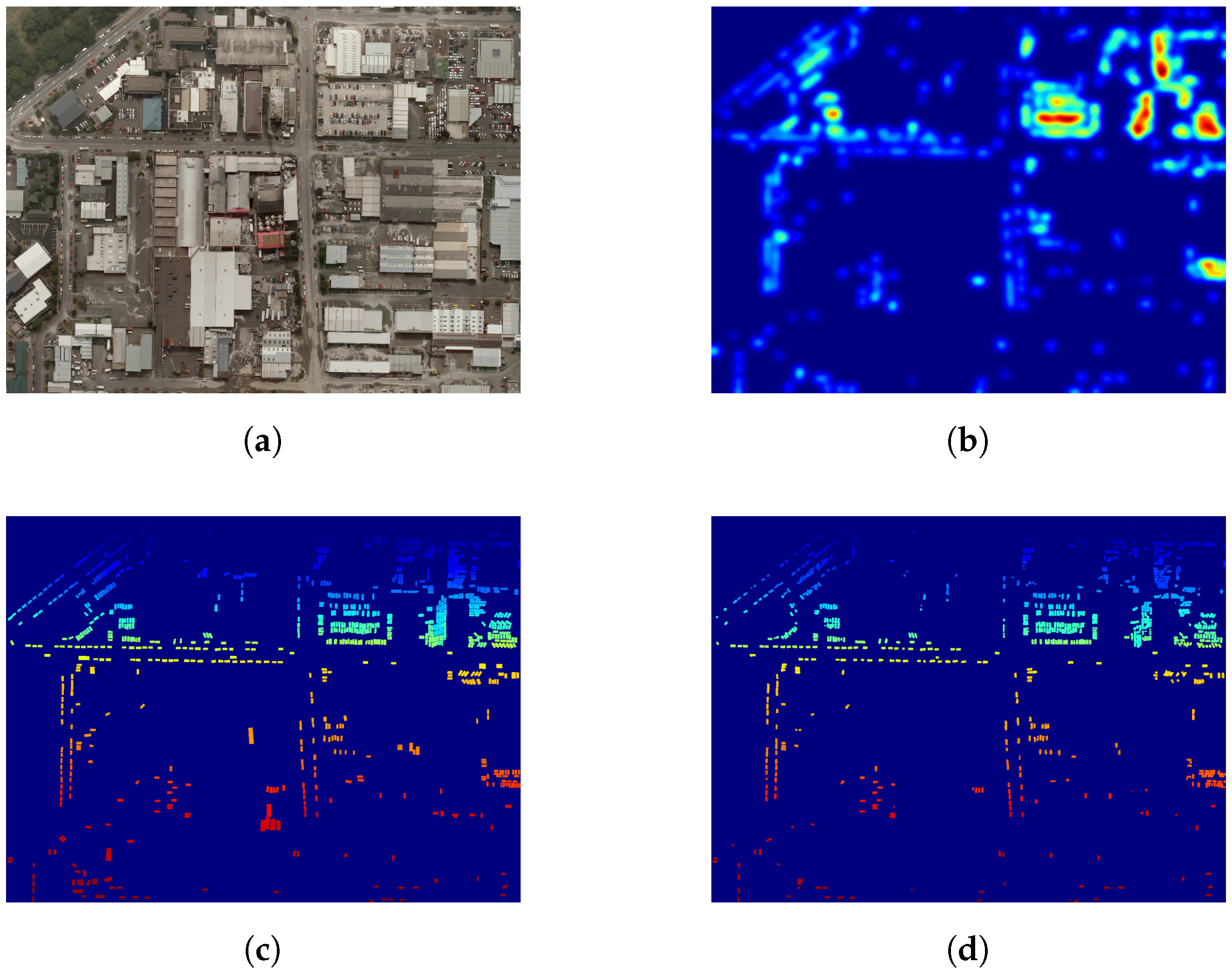

4.1.3. NZAM/ONERA Christchurch

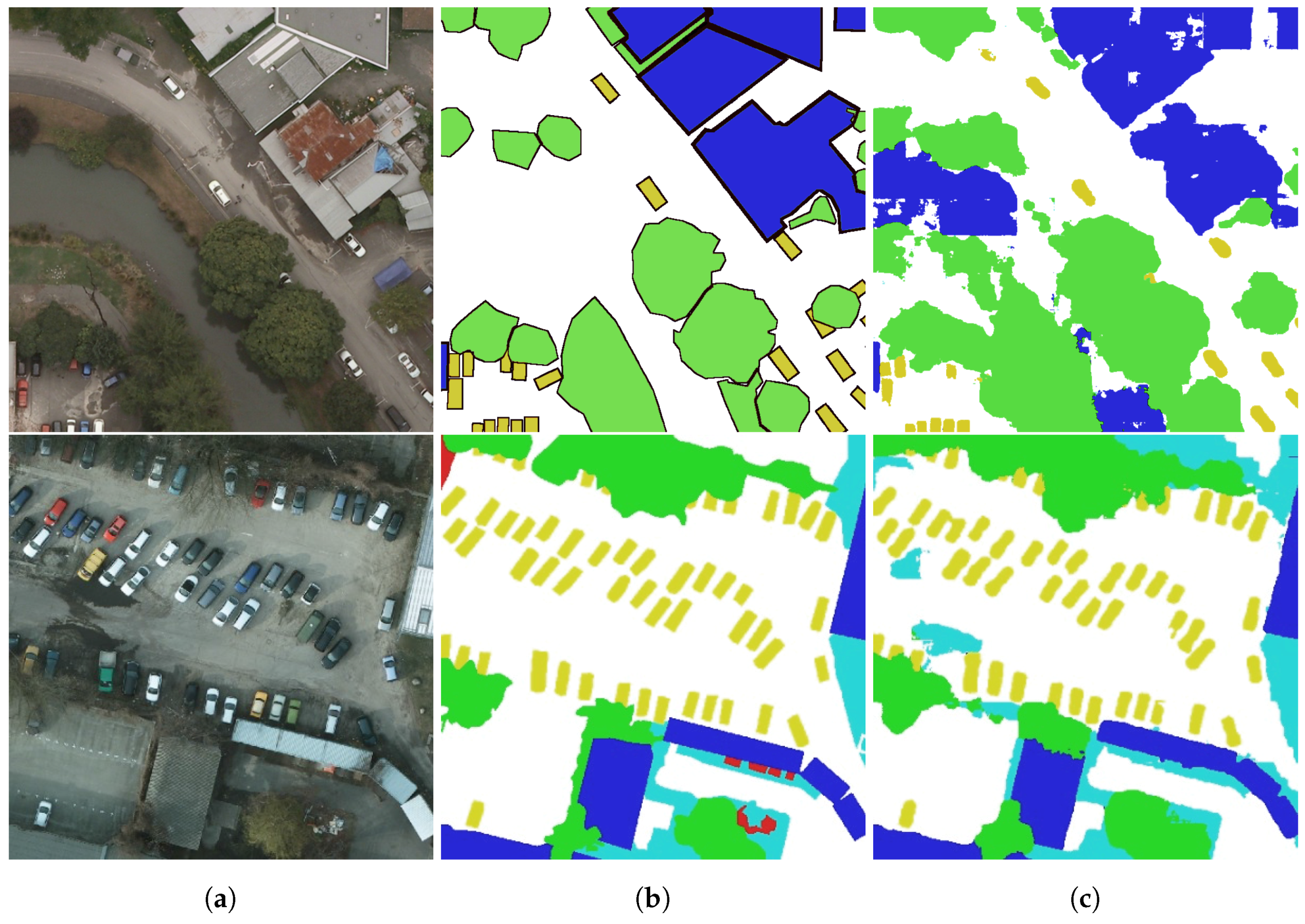

4.2. Semantic Segmentation

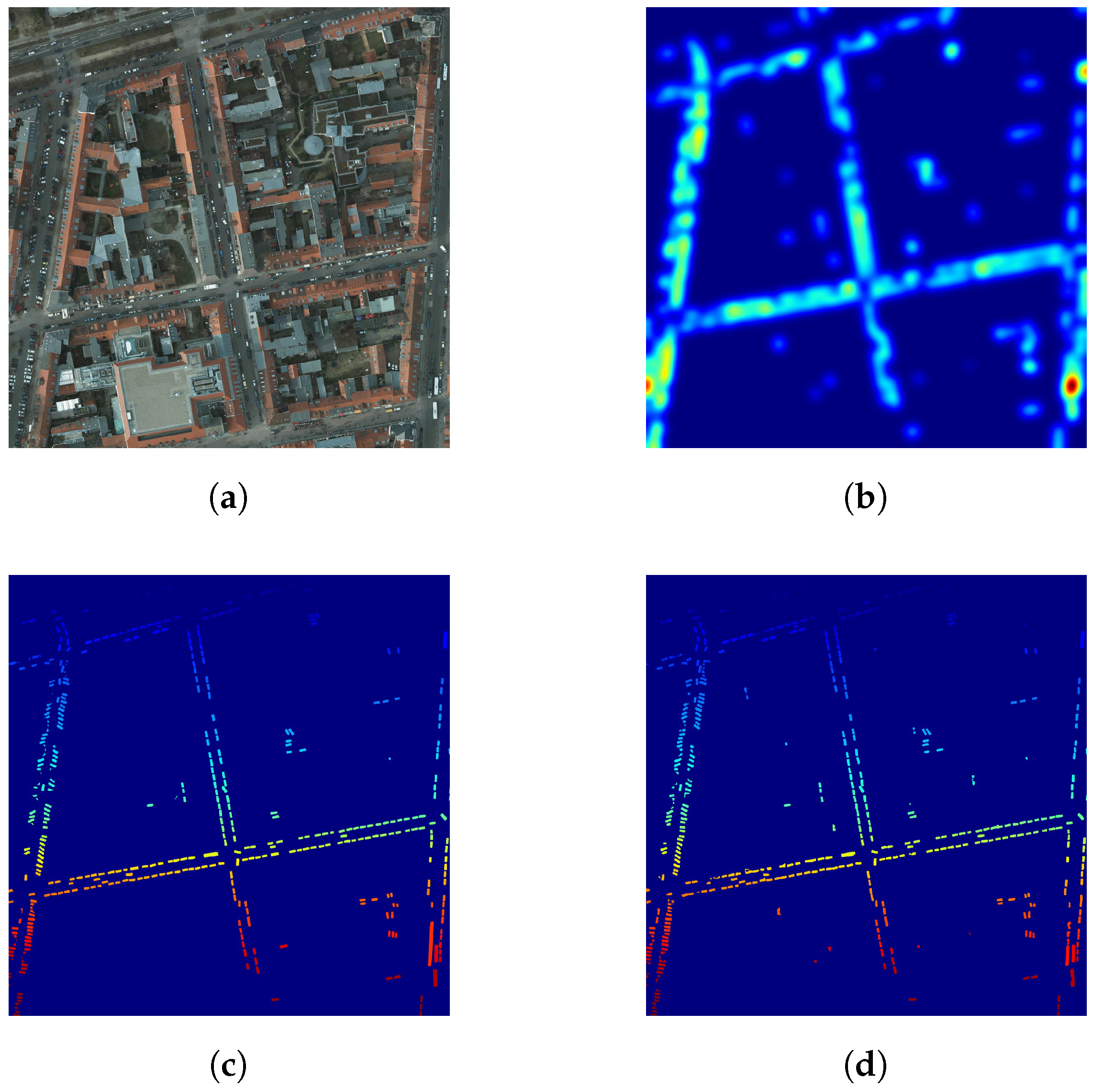

4.2.1. ISPRS Potsdam

4.2.2. NZAM/ONERA Christchurch

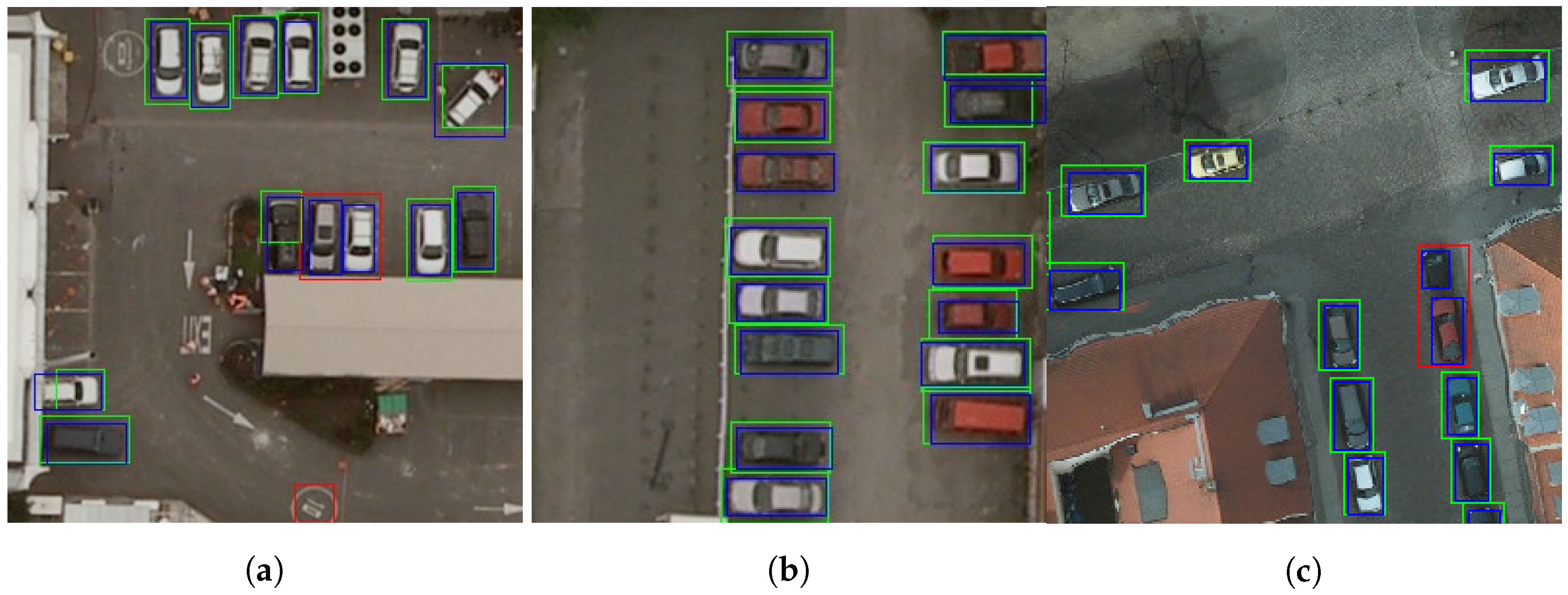

4.3. Detection Results

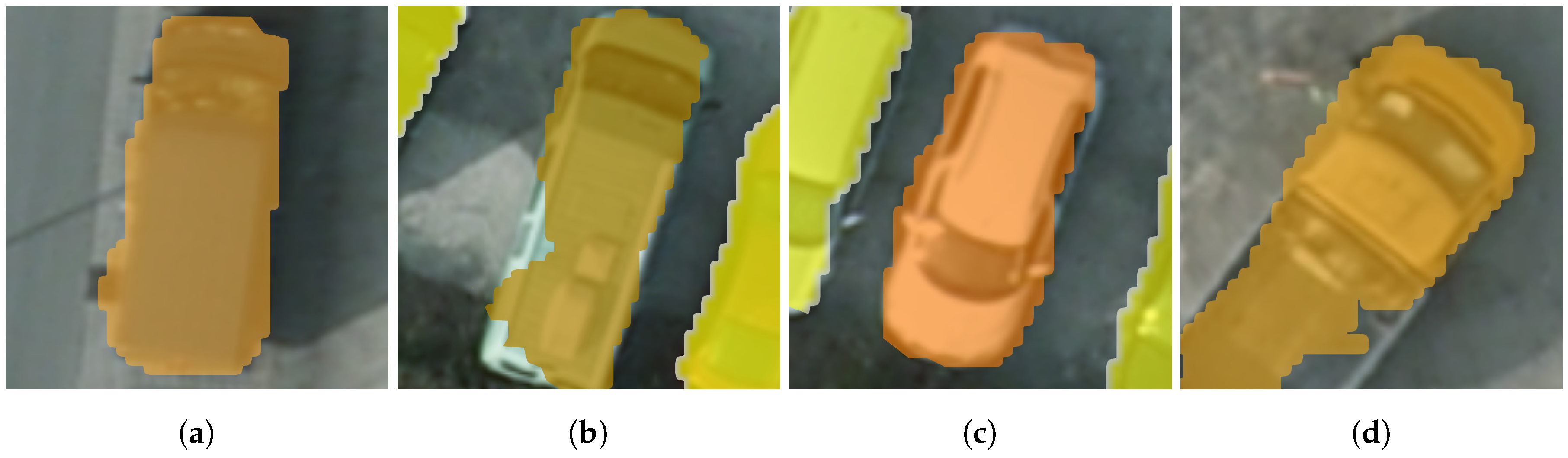

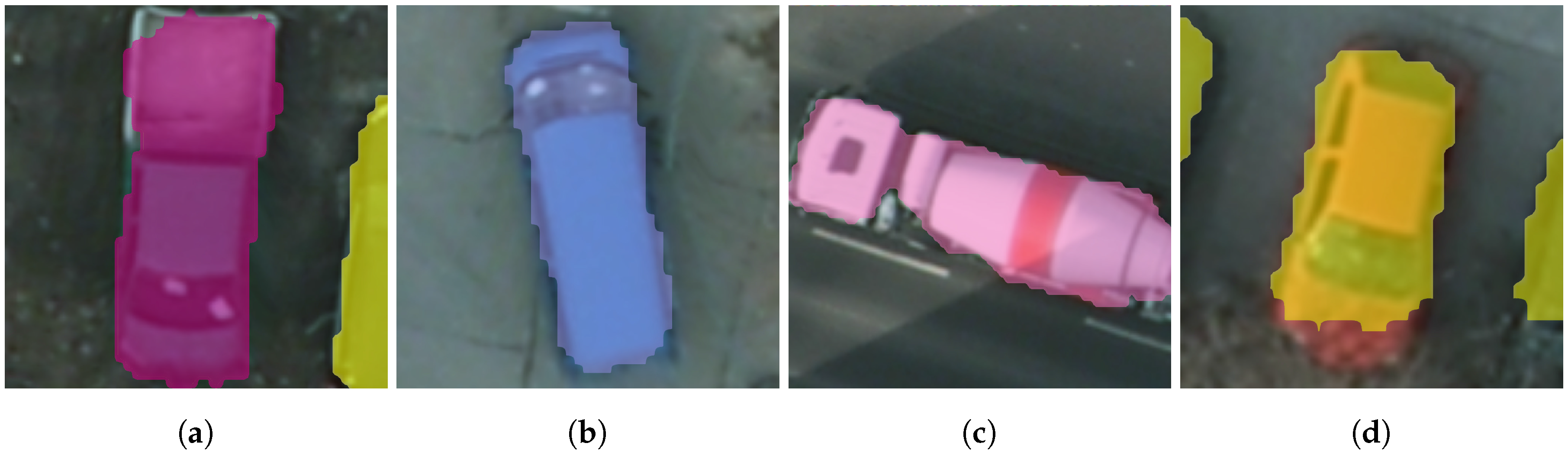

4.4. Learning a Vehicle Classifier

4.5. Transfer Learning for Vehicle Classification

4.6. Traffic Density Estimation

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AA | Average Accuracy |
| CNN | Convolutional Neural Network |
| COCO | Common Objects in Context |
| CRF | Conditional Random Field |
| DTIS | Département de Traitement de l’Information et Systèmes |
| DtMM | Discriminatively-trained Mixture of Models |
| FCN | Fully Convolutional Network |
| GRSS | Geoscience & Remote Sensing Society |
| HOG | Histogram of Oriented Gradients |
| IEEE | Institute of Electrical and Electronics Engineers |
| ILSVRC | ImageNet Large Scale Visual Recognition Challenge |
| IR | Infrared |
| IRRGB | Infrared-Red-Green-Blue |
| ISPRS | International Society for Photogrammetry and Remote Sensing |
| NZAM | New Zealand Assets Management |
| OA | Overall Accuracy |
| ONERA | Office national d’études et de recherches aérospatiales |
| RGB | Red-Green-Blue |
| ReLU | Rectified Linear Unit |
| VEDAI | Vehicle Detection in Aerial Imagery |
| VGG | Visual Geometry Group |
| VHR | Very High Resolution |
| VOC | Visual Object Classes |
| SGD | Stochastic Gradient Descent |
| SVM | Support Vector Machine |

References

1. Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

2. Everingham, M.; Eslami, S.M.A.; Gool, L.V.; Williams, C.K.I.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes Challenge: A Retrospective. Int. J. Comput. Vis. 2014, 111, 98–136.

3. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 1–15.

4. Campos-Taberner, M.; Romero-Soriano, A.; Gatta, C.; Camps-Valls, G.; Lagrange, A.; Le Saux, B.; Beaupère, A.; Boulch, A.; Chan-Hon-Tong, A.; Herbin, S.; et al. Processing of Extremely High-Resolution LiDAR and RGB Data: Outcome of the 2015 IEEE GRSS Data Fusion Contest Part A: 2-D Contest. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 1–13.

5. Audebert, N.; Le Saux, B.; Lefèvre, S. How Useful is Region-Based Classification of Remote Sensing Images in a Deep Learning Framework? In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 5091–5094.

6. Nogueira, K.; Penatti, O.A.B.; Dos Santos, J.A. Towards Better Exploiting Convolutional Neural Networks for Remote Sensing Scene Classification. arXiv, 2016; arXiv:1602.01517.

7. Marmanis, D.; Wegner, J.D.; Galliani, S.; Schindler, K.; Datcu, M.; Stilla, U. Semantic Segmentation of Aerial Images with an Ensemble of CNNs. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 3, 473–480.

8. Paisitkriangkrai, S.; Sherrah, J.; Janney, P.; Hengel, A.V.D. Effective Semantic Pixel Labelling with Convolutional Networks and Conditional Random Fields. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Boston, MA, USA, 7–12 June 2015; pp. 36–43.

9. Rottensteiner, F.; Sohn, G.; Jung, J.; Gerke, M.; Baillard, C.; Benitez, S.; Breitkopf, U. The ISPRS benchmark on urban object classification and 3D building reconstruction. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, 1, 293–298.

10. Cramer, M. The DGPF test on digital aerial camera evaluation—Overview and test design. Photogramm. Fernerkund. Geoinf. 2010, 2, 73–82.

11. Penatti, O.A.B.; Nogueira, K.; dos Santos, J.A. Do Deep Features Generalize from Everyday Objects to Remote Sensing and Aerial Scenes Domains? In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Boston, MA, USA, 7–12 June 2015; pp. 44–51.

12. Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. arXiv, 2015; arXiv:1511.00561.

13. Razakarivony, S.; Jurie, F. Vehicle Detection in Aerial Imagery: A small target detection benchmark. J. Vis. Commun. Image Represent. 2016, 34, 187–203.

14. Lagrange, A.; Le Saux, B.; Beaupère, A.; Boulch, A.; Chan-Hon-Tong, A.; Herbin, S.; Randrianarivo, H.; Ferecatu, M. Benchmarking Classification of Earth-Observation Data: From Learning Explicit Features to Convolutional Networks. In Proceedings of the 2015 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Milan, Italy, 26–31 July 2015; pp. 4173–4176.

15. Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A. Semantic Image Segmentation with Deep Convolutional Nets and Fully Connected CRFs. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

16. Zheng, S.; Jayasumana, S.; Romera-Paredes, B.; Vineet, V.; Su, Z.; Du, D.; Huang, C.; Torr, P.H.S. Conditional Random Fields as Recurrent Neural Networks. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1529–1537.

17. Sherrah, J. Fully Convolutional Networks for Dense Semantic Labelling of High-Resolution Aerial Imagery. arXiv, 2016; arXiv:1606.02585.

18. Maggiori, E.; Tarabalka, Y.; Charpiat, G.; Alliez, P. Fully Convolutional Neural Networks for Remote Sensing Image Classification. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 5071–5074.

19. Audebert, N.; Le Saux, B.; Lefèvre, S. Semantic Segmentation of Earth Observation Data Using Multimodal and Multi-scale Deep Networks. In Proceedings of the Computer Vision—ACCV, Taipei, Taiwan, 20–24 November 2016; Springer: Cham, Switzerland, 2016; pp. 180–196.

20. Michel, J.; Grizonnet, M.; Inglada, J.; Malik, J.; Bricier, A.; Lahlou, O. Local Feature Based Supervised Object Detection: Sampling, Learning and Detection Strategies. In Proceedings of the 2011 IEEE International Geoscience and Remote Sensing Symposium, Vancouver, BC, Canada, 24–29 July 2011; pp. 2381–2384.

21. Gleason, J.; Nefian, A.V.; Bouyssounousse, X.; Fong, T.; Bebis, G. Vehicle Detection from Aerial Imagery. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation (ICRA), Shanghai, China, 9–13 May 2011; pp. 2065–2070.

22. Randrianarivo, H.; Le Saux, B.; Ferecatu, M. Urban Structure Detection with Deformable Part-Based Models. In Proceedings of the 2013 IEEE International Geoscience and Remote Sensing Symposium—IGARSS, Melbourne, Australia, 21–26 July 2013; pp. 200–203.

23. Janney, P.; Booth, D. Pose-invariant vehicle identification in aerial electro-optical imagery. Mach. Vis. Appl. 2015, 26, 575–591.

24. Randrianarivo, H.; Le Saux, B.; Ferecatu, M.; Crucianu, M. Contextual Discriminatively Trained Model Mixture for Object Detection in Aerial Images. In Proceedings of the International Conference on Big Data from Space (BiDS’16), Santa Cruz de Tenerife, Spain, 15–17 March 2016.

25. Kamenetsky, D.; Sherrah, J. Aerial Car Detection and Urban Understanding. In Proceedings of the 2015 International Conference on Digital Image Computing: Techniques and Applications (DICTA), Adelaide, Australia, 23–25 November 2015; pp. 1–8.

26. Chen, X.; Xiang, S.; Liu, C.L.; Pan, C.H. Vehicle Detection in Satellite Images by Hybrid Deep Convolutional Neural Networks. IEEE Geosci. Remote Sens. Lett. 2014, 11, 1797–1801.

27. Holt, A.C.; Seto, E.Y.; Rivard, T.; Gong, P. Object-based detection and classification of vehicles from high-resolution aerial photography. Photogramm. Eng. Remote Sens. 2009, 75, 871–880.

28. Eikvil, L.; Aurdal, L.; Koren, H. Classification-based vehicle detection in high-resolution satellite images. ISPRS J. Photogramm. Remote Sens. 2009, 64, 65–72.

29. Audebert, N.; Le Saux, B.; Lefèvre, S. On the Usability of Deep Networks for Object-Based Image Analysis. In Proceedings of the International Conference on Geo-Object based Image Analysis (GEOBIA16), Enschede, The Netherlands, 22 September 2016.

30. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv, 2014; arXiv:1409.1556.

31. Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; Berg, A.C.; Fei-Fei, L. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

32. Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 448–456.

33. Noh, H.; Hong, S.; Han, B. Learning Deconvolution Network for Semantic Segmentation. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1520–1528.

34. Marmanis, D.; Schindler, K.; Wegner, J.D.; Galliani, S.; Datcu, M.; Stilla, U. Classification With an Edge: Improving Semantic Image Segmentation with Boundary Detection. arXiv, 2016; arXiv:1612.01337.

35. Lecun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

36. Zhou, W.; Shao, Z.; Cheng, Q. Deep Feature Representations for High-Resolution Remote Sensing Scene Classification. In Proceedings of the 2016 4th International Workshop on Earth Observation and Remote Sensing Applications (EORSA), Guangzhou, China, 4–6 July 2016; pp. 338–342.

37. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Advances in Neural Information Processing Systems 25; Pereira, F., Burges, C.J.C., Bottou, L., Weinberger, K.Q., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2012; pp. 1097–1105.

38. Beucher, S.; Meyer, F. The Morphological Approach to Segmentation: The Watershed Transformation; Optical Engineering New York-Marcel Dekker Inc.: New York, NY, USA, 1992; Volume 34, pp. 433–481.

39. Dai, J.; He, K.; Sun, J. Instance-aware Semantic Segmentation via Multi-task Network Cascades. arXiv, 2015; arXiv:1512.04412.

40. Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A Simple Way to Prevent Neural Networks from Overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

41. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 27–30 June 2016; pp. 770–778.

42. Tuia, D.; Persello, C.; Bruzzone, L. Domain Adaptation for the Classification of Remote Sensing Data: An Overview of Recent Advances. IEEE Geosci. Remote Sens. Mag. 2016, 4, 41–57.

43. Courty, N.; Flamary, R.; Tuia, D.; Rakotomamonjy, A. Optimal Transport for Domain Adaptation. IEEE Trans. Pattern Anal. Mach. Intell. 2016; arXiv:1507.00504.

44. Ren, M.; Zemel, R.S. End-to-End Instance Segmentation and Counting with Recurrent Attention. arXiv, 2016; arXiv:1605.09410.

45. Firat, O.; Can, G.; Vural, F.T.Y. Representation Learning for Contextual Object and Region Detection in Remote Sensing. In Proceedings of the 2014 22nd International Conference on Pattern Recognition, Stockholm, Sweden, 24–28 August 2014; pp. 3708–3713.

| Dataset/Class | Car | Truck | Van | Pickup | Boat | Camping Car | Other | Plane | Tractor |
|---|---|---|---|---|---|---|---|---|---|
| VEDAI | 1340 | 300 | 100 | 950 | 170 | 390 | 200 | 47 | 190 |
| NZAM/ONERA Christchurch | 2267 | 73 | 120 | 90 | - | - | - | - | - |
| ISPRS Potsdam | 1990 | 33 | 181 | 40 | - | - | - | - | - |

| Dataset | Method | Imp. Surfaces | Building | Low veg. | Tree | Cars | OA |
|---|---|---|---|---|---|---|---|
| Validation 12.5 cm/px | SegNet RGB | 92.4% ± 0.6 | 95.8% ± 1.9 | 85.8% ± 1.3 | 83.0% ± 2.1 | 95.7% ± 0.3 | 90.6% ± 0.6 |
| Test 5 cm/px | SegNet IRRG | 92.4% | 95.8% | 86.7% | 87.4% | 95.1% | 90.0% |
| Test 5 cm/px | FCN + CRF [17] | 91.8% | 95.9% | 86.3% | 87.7% | 89.2% | 89.7% |
| Test 5 cm/px | ResNet-101 [CASIA] | 92.8% | 96.9% | 86.0% | 88.2% | 94.2% | 89.6% |

| Source | Background | Building | Vegetation | Vehicle | OA |
|---|---|---|---|---|---|
| RGB | 75.6% ± 8.9 | 91.7% ± 1.3 | 55.2% ± 11.6 | 61.9% ± 2.4 | 84.4% ± 2.6 |

| Dataset | Preprocessing | mIoU | Precision | Recall |
|---|---|---|---|---|
| NZAM/ONERA Christchurch | ∅ | 60.0% | 0.597 | 0.797 |
| NZAM/ONERA Christchurch | Opening | 69.8% | 0.817 | 0.791 |
| NZAM/ONERA Christchurch | Opening + remove small objects | 70.7% | 0.833 | 0.791 |
| ISPRS Potsdam | ∅ | 70.1% | 0.748 | 0.842 |
| ISPRS Potsdam | Opening | 73.3% | 0.866 | 0.842 |
| ISPRS Potsdam | Opening + remove small objects | 74.2% | 0.907 | 0.841 |
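
The two preprocessing steps named in the table can be sketched as follows. This is a minimal pure-Python illustration under stated assumptions: a 3×3 square structuring element, 4-connectivity, and a hypothetical `min_area` threshold, none of which are given in this section. The helper names (`erode`, `dilate`, `opening`, `remove_small_objects`) are likewise illustrative rather than the authors' code.

```python
def erode(mask):
    """Keep a pixel only if its whole 3x3 neighbourhood is foreground."""
    h, w = len(mask), len(mask[0])
    return [[int(all(0 <= y + dy < h and 0 <= x + dx < w and mask[y + dy][x + dx]
                     for dy in (-1, 0, 1) for dx in (-1, 0, 1)))
             for x in range(w)] for y in range(h)]


def dilate(mask):
    """Set a pixel if any pixel of its 3x3 neighbourhood is foreground."""
    h, w = len(mask), len(mask[0])
    return [[int(any(0 <= y + dy < h and 0 <= x + dx < w and mask[y + dy][x + dx]
                     for dy in (-1, 0, 1) for dx in (-1, 0, 1)))
             for x in range(w)] for y in range(h)]


def opening(mask):
    """Morphological opening: erosion followed by dilation."""
    return dilate(erode(mask))


def remove_small_objects(mask, min_area):
    """Erase 4-connected components with fewer than min_area pixels."""
    h, w = len(mask), len(mask[0])
    out = [row[:] for row in mask]
    seen = [[False] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            if not mask[r][c] or seen[r][c]:
                continue
            stack, blob = [(r, c)], []
            seen[r][c] = True
            while stack:  # depth-first flood fill collecting the blob's pixels
                y, x = stack.pop()
                blob.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        stack.append((ny, nx))
            if len(blob) < min_area:
                for y, x in blob:
                    out[y][x] = 0
    return out


# Toy mask (hypothetical): a 3x4 blob, a 3x3 blob and an isolated speck.
mask = [[0] * 10 for _ in range(6)]
for y in range(1, 4):
    for x in range(1, 5):
        mask[y][x] = 1
    for x in range(6, 9):
        mask[y][x] = 1
mask[5][0] = 1

opened = opening(mask)                       # the speck disappears
cleaned = remove_small_objects(opened, 10)   # the 3x3 blob (9 px) is dropped
print(sum(map(sum, mask)), sum(map(sum, opened)), sum(map(sum, cleaned)))  # 22 21 12
```

On this toy input the opening removes isolated noise while leaving compact blobs intact, which is consistent with the pattern in the table: precision rises after each step while recall stays nearly unchanged.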

| Dataset | Method | Precision | Recall |
|---|---|---|---|
| NZAM/ONERA Christchurch | HOG + SVM [20] | 0.402 | 0.398 |
| NZAM/ONERA Christchurch | DtMM (5 models) [24] | 0.743 | 0.737 |
| NZAM/ONERA Christchurch | Ours | 0.833 | 0.791 |
| ISPRS Potsdam | Ours | 0.907 | 0.841 |

| Model | Car | Truck | Ship | Tractor | Camping Car | Van | Pickup | Plane | Vehicle | OA | Time (ms) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| LeNet | 74.3 | 54.4 | 31.0 | 61.1 | 85.9 | 38.3 | 67.7 | 13.0 | 47.5 | 66.3 ± 1.7 | 2.1 |
| AlexNet | 91.0 | 84.8 | 81.4 | 83.3 | 98.0 | 71.1 | 85.2 | 91.4 | 77.8 | 87.5 ± 1.5 | 5.7 |
| VGG-16 | 90.2 | 86.9 | 86.9 | 86.5 | 99.6 | 71.1 | 91.4 | 100.0 | 77.2 | 89.7 ± 1.5 | 31.7 |

| Model | Car | Truck | Ship | Tractor | Camping Car | Van | Pickup | Plane | Vehicle | OA | AA |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Baseline | 90.4 | 66.7 | 80.4 | 89.5 | 96.6 | 63.3 | 78.7 | 92.6 | 75.0 | 83.9 ± 2.7 | 81.5 ± 1.9 |
| DA | 88.2 | 82.2 | 78.4 | 82.5 | 97.4 | 63.3 | 85.1 | 66.7 | 73.3 | 85.6 ± 1.4 | 77.3 ± 8.7 |
| R | 87.9 | 71.1 | 86.3 | 84.2 | 97.4 | 73.3 | 87.2 | 100.0 | 75.0 | 86.1 ± 0.9 | 84.7 ± 1.7 |
| DA + R | 91.4 | 85.6 | 88.2 | 87.6 | 97.4 | 70.0 | 87.2 | 100.0 | 81.7 | 89.0 ± 0.5 | 87.7 ± 1.5 |

| Dataset | Classifier | Car | Van | Truck | Pickup | OA | AA |
|---|---|---|---|---|---|---|---|
| Potsdam | Cars only | 100% | 0% | 0% | 0% | 94% | 25% |
| Potsdam | AlexNet | 98% | 66% | 67% | 0% | 95% | 58% |
| Potsdam | VGG-16 | 92% | 66% | 75% | 33% | 89% | 67% |
| Christchurch | Cars only | 100% | 0% | 0% | 0% | 94% | 25% |
| Christchurch | AlexNet | 94% | 40% | 67% | 89% | 93% | 73% |
| Christchurch | VGG-16 | 97% | 80% | 67% | 78% | 96% | 80% |
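
The gap between OA and AA in the "Cars only" rows can be reproduced with a small worked example. The AA values in the table are consistent with the unweighted mean of the four per-class accuracies (for instance, (100 + 0 + 0 + 0) / 4 = 25%). The sketch below assumes hypothetical class counts, chosen only so that cars make up 94% of the samples; they are not the actual test-set counts.

```python
def overall_accuracy(correct, total):
    """Fraction of all samples that are classified correctly."""
    return sum(correct.values()) / sum(total.values())


def average_accuracy(correct, total):
    """Unweighted mean of the per-class accuracies."""
    return sum(correct[k] / total[k] for k in total) / len(total)


# Hypothetical counts: 94% of the vehicles are cars.
total = {"car": 94, "van": 2, "truck": 2, "pickup": 2}
# A trivial "cars only" classifier labels every vehicle as a car.
correct = {"car": 94, "van": 0, "truck": 0, "pickup": 0}

oa = overall_accuracy(correct, total)
aa = average_accuracy(correct, total)
print(f"OA = {oa:.0%}, AA = {aa:.0%}")  # OA = 94%, AA = 25%
```

Because cars dominate both datasets, OA rewards the trivial classifier, whereas AA exposes that three of the four classes are never recognised.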

| Dataset | ISPRS Potsdam | NZAM/ONERA Christchurch |
|---|---|---|
| Absolute error (average error/ground truth total) | 3/52 | 6/66 |
| Relative error | 7.9% | 9.1% |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Audebert, N.; Le Saux, B.; Lefèvre, S. Segment-before-Detect: Vehicle Detection and Classification through Semantic Segmentation of Aerial Images. Remote Sens. 2017, 9, 368. https://doi.org/10.3390/rs9040368
