Terrestrial Hyperspectral Image Shadow Restoration through Lidar Fusion

Abstract

1. Introduction

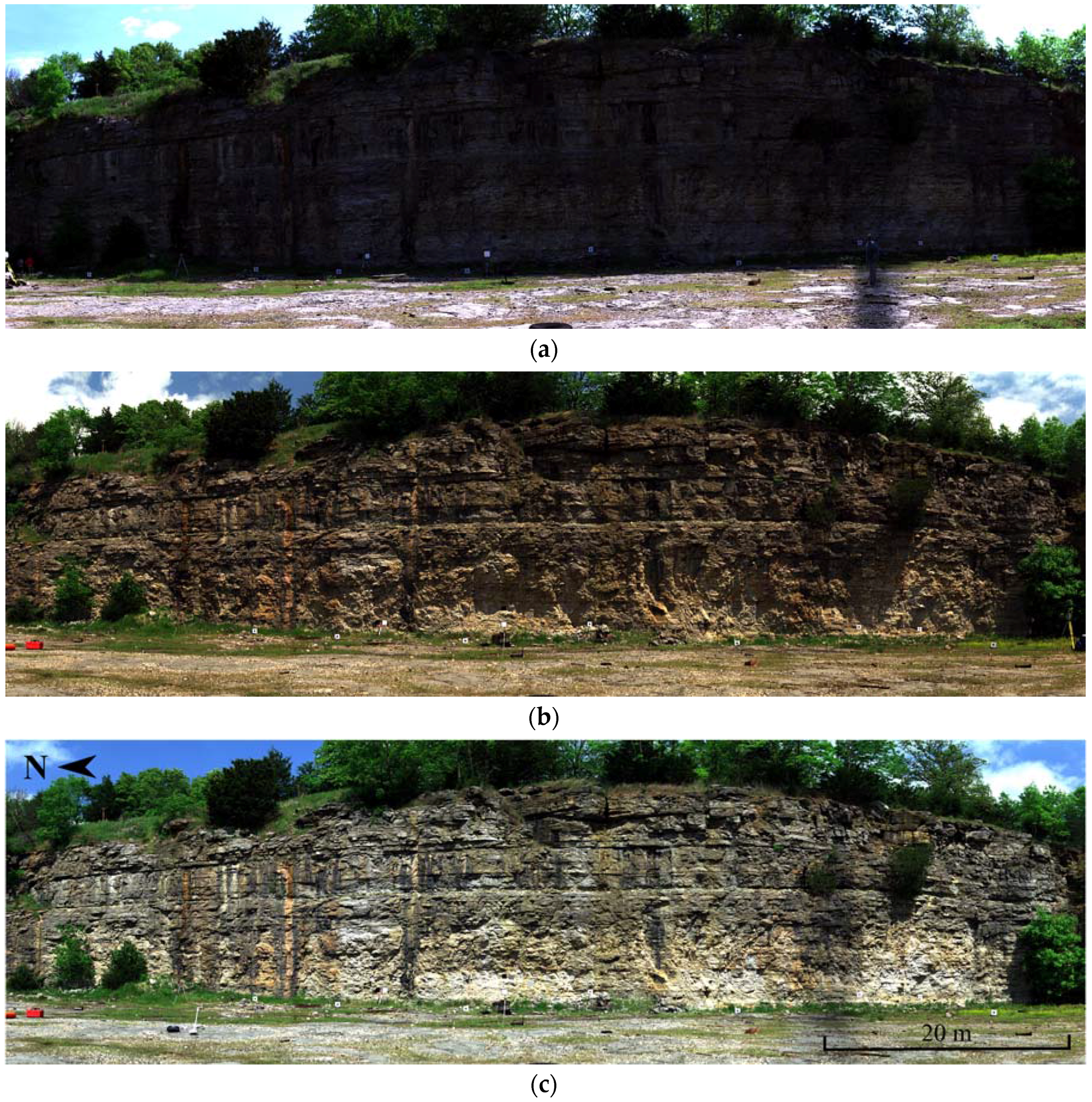

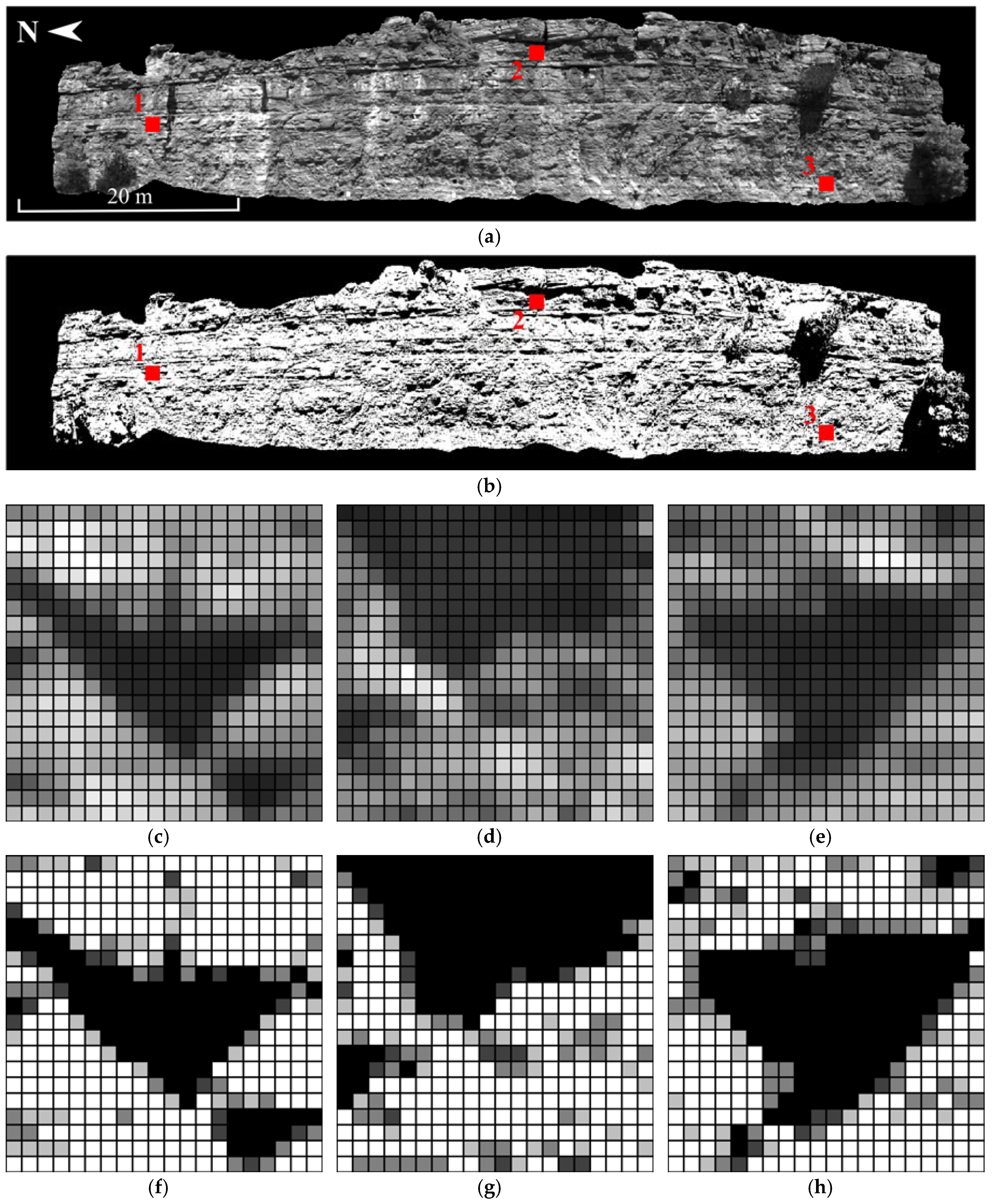

2. Data Description

2.1. TLS

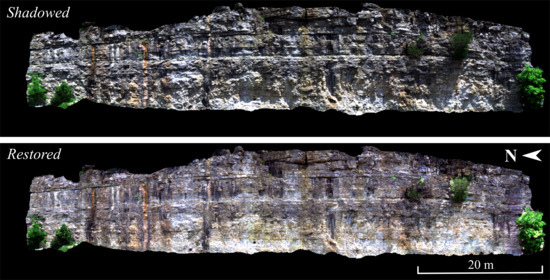

2.2. HSI

2.3. TLS and HSI Fusion

2.3.1. Camera Model

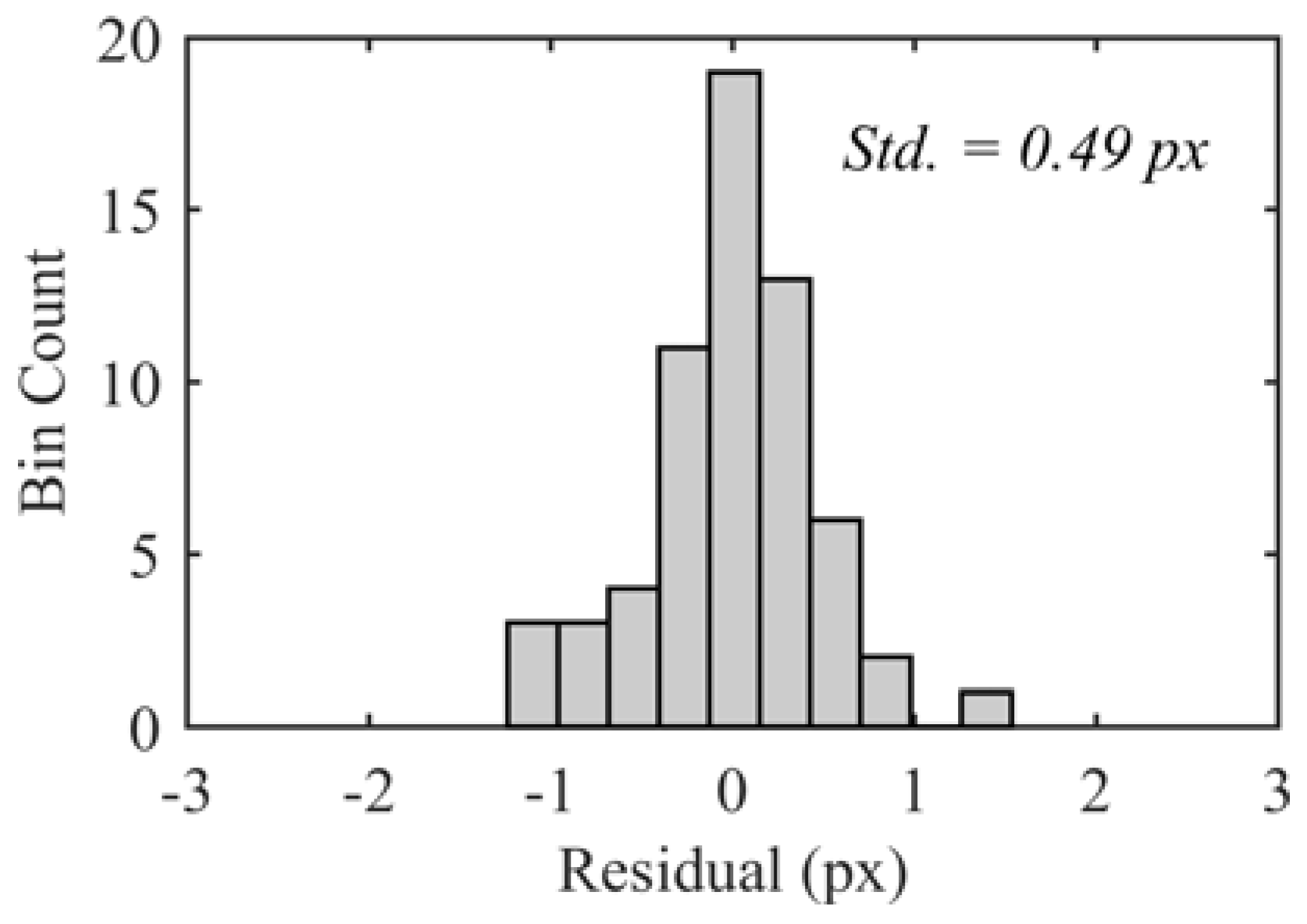

2.3.2. HSI Registration

2.3.3. TLS Intensity Registration and Segmentation

3. Methodology

3.1. HSI Pixel Shadow Determination

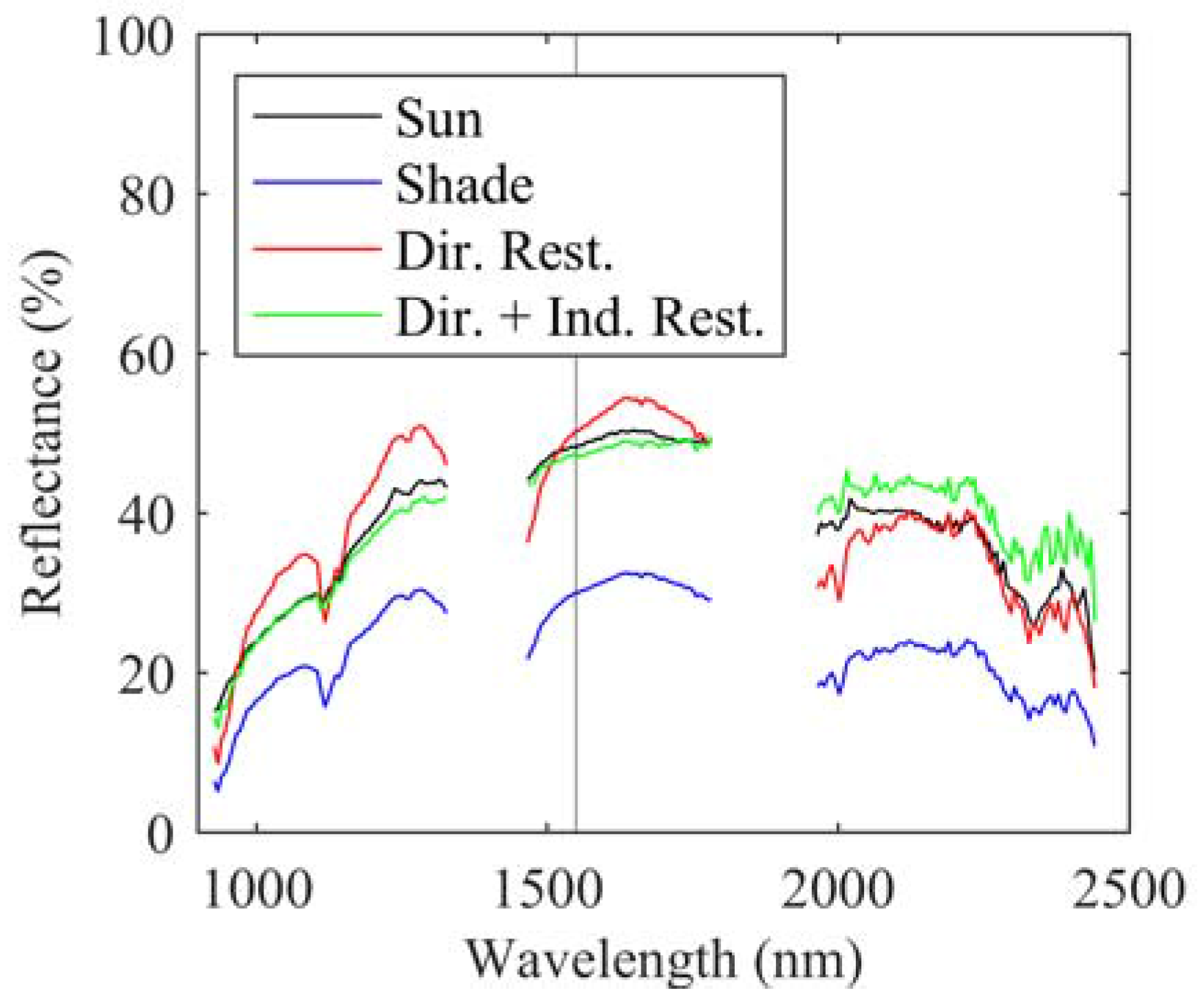

3.2. HSI Shadow Restoration

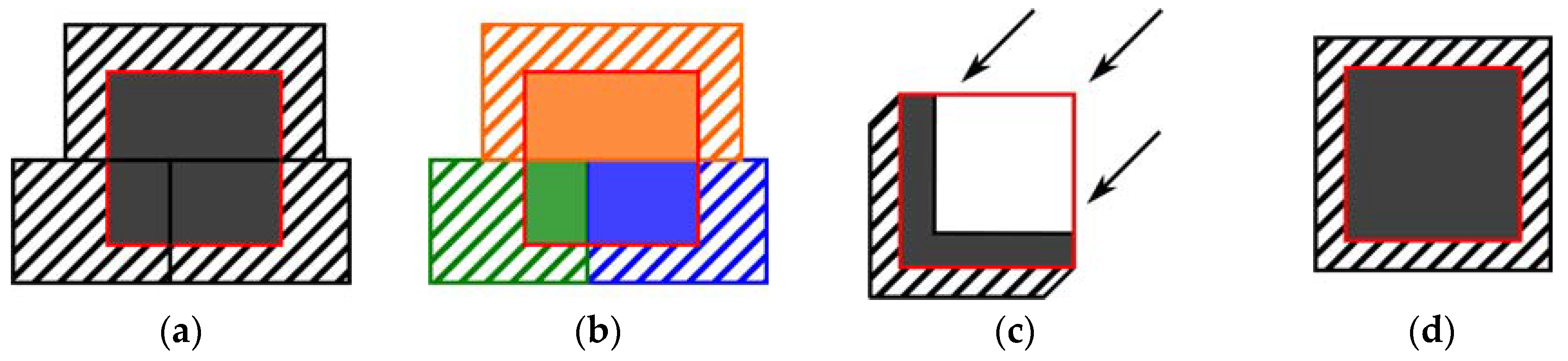

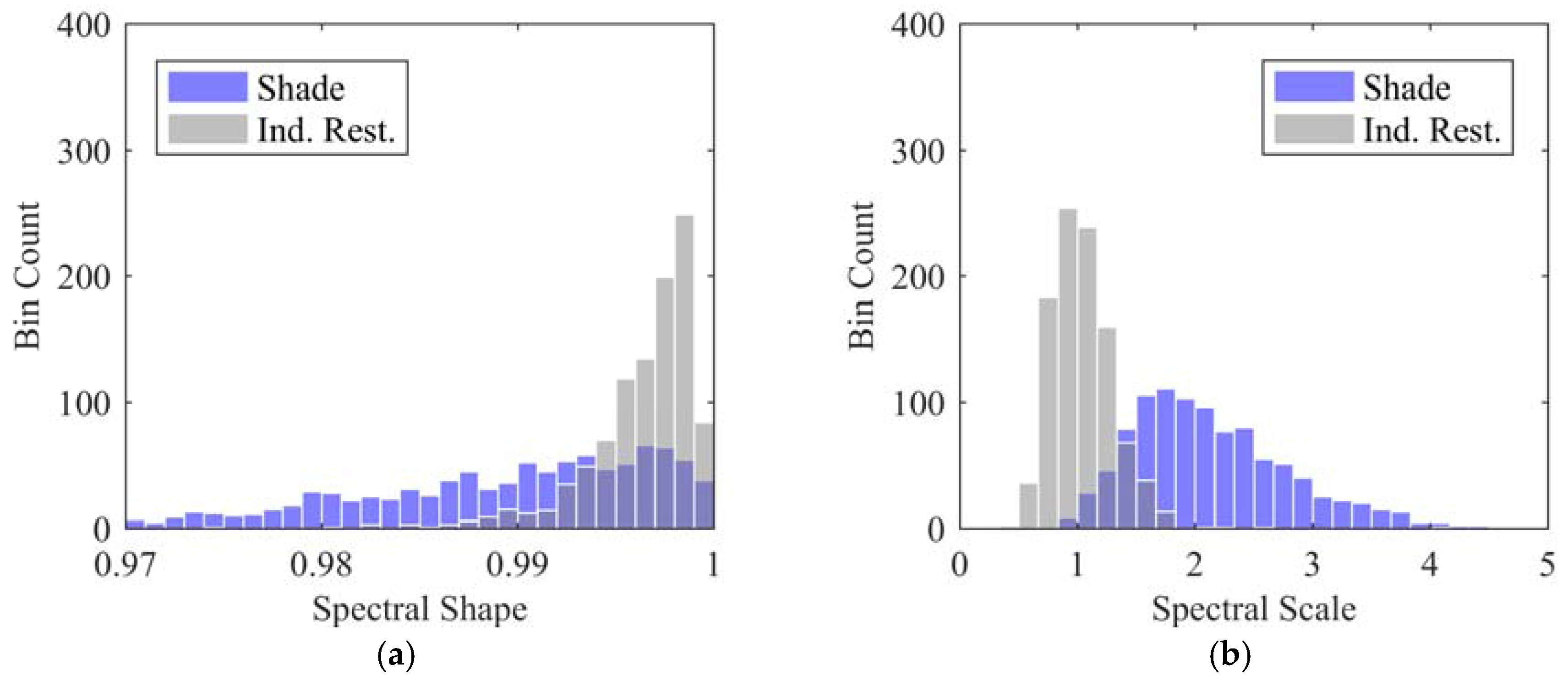

3.2.1. Indirect Shadow Restoration

- Selection and restoration are applied to the union of all segments intersecting a shadow area, where the matching sun and shade regions are built by combining the contiguous active reflectance segments that intersect the shadow area of interest.

- Selection and restoration are applied segment by segment, where the matching sun and shade regions are restricted to lie within a common segment.

- Two buffers of pixels along the far edge of the cast shadow, as in [36]: one extending into the sunlit area and the other into the shadowed area.

- The complete shadow area and a buffer of sunlit pixels around the entire boundary of the shadow area.
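The last region option above (the complete shadow area paired with a buffer of sunlit pixels around its boundary) can be sketched as follows. This is a minimal illustration and not the authors' implementation: the helper names `dilate`, `sunlit_buffer`, and `restore_by_gain`, the 4-connected buffer growth, and the per-band mean-ratio gain are all assumptions introduced here for clarity, and the data are synthetic.

```python
import numpy as np

def dilate(mask, iterations=1):
    """Grow a boolean mask by `iterations` pixels (4-connected neighbours)."""
    out = mask.copy()
    for _ in range(iterations):
        p = np.pad(out, 1, mode="constant", constant_values=False)
        out = (p[1:-1, 1:-1] | p[:-2, 1:-1] | p[2:, 1:-1]
               | p[1:-1, :-2] | p[1:-1, 2:])
    return out

def sunlit_buffer(shadow, width):
    """Sunlit pixels lying within `width` pixels of the shadow boundary."""
    return dilate(shadow, width) & ~shadow

def restore_by_gain(cube, shadow, sun_region, shade_region):
    """Scale shadowed pixels, band by band, by mean(sun) / mean(shade).

    Assumption: a simple multiplicative gain per band; the paper's actual
    restoration model is not reproduced here.
    """
    cube = cube.astype(float)            # astype returns a copy
    gain = cube[sun_region].mean(axis=0) / cube[shade_region].mean(axis=0)
    cube[shadow] *= gain
    return cube

# Synthetic 6 x 6 scene with 3 bands of uniform material (hypothetical values).
rows, cols = 6, 6
truth = np.tile(np.array([0.2, 0.4, 0.6]), (rows, cols, 1))
shadow = np.zeros((rows, cols), dtype=bool)
shadow[2:4, 2:4] = True

# Simulate a wavelength-dependent loss of illumination inside the shadow.
observed = truth.copy()
observed[shadow] *= np.array([0.5, 0.25, 0.1])

sun = sunlit_buffer(shadow, width=1)     # ring of sunlit pixels around shadow
restored = restore_by_gain(observed, shadow, sun, shadow)
print(np.allclose(restored, truth))
```

To mimic the segment-by-segment variant, the same functions could be called once per segment with `sun_region` and `shade_region` each intersected with that segment's mask, so that the matched regions share a common material.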

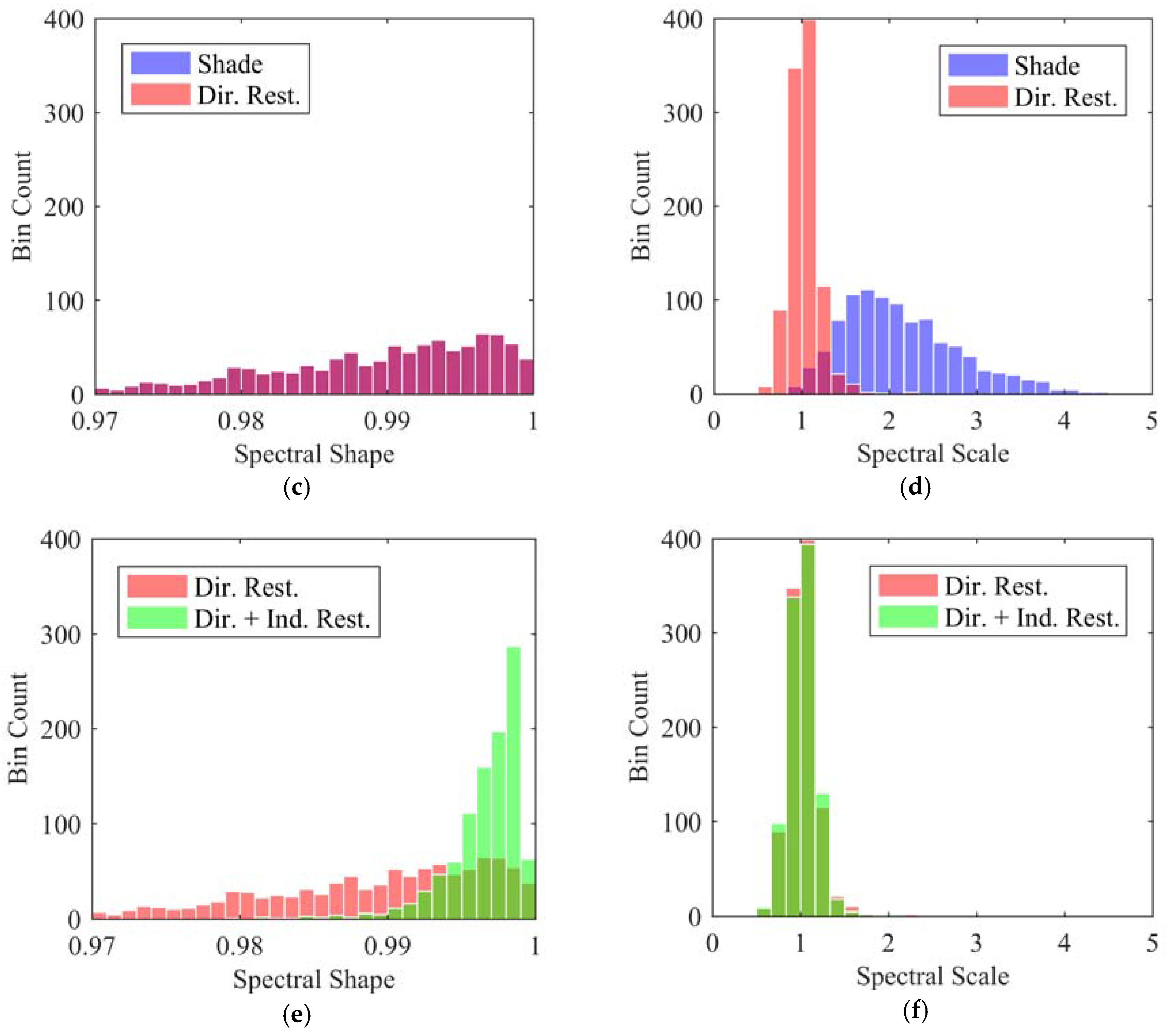

3.2.2. Direct Shadow Restoration

3.2.3. Restoration Metrics

4. Results and Discussion

4.1. Shadow Determination

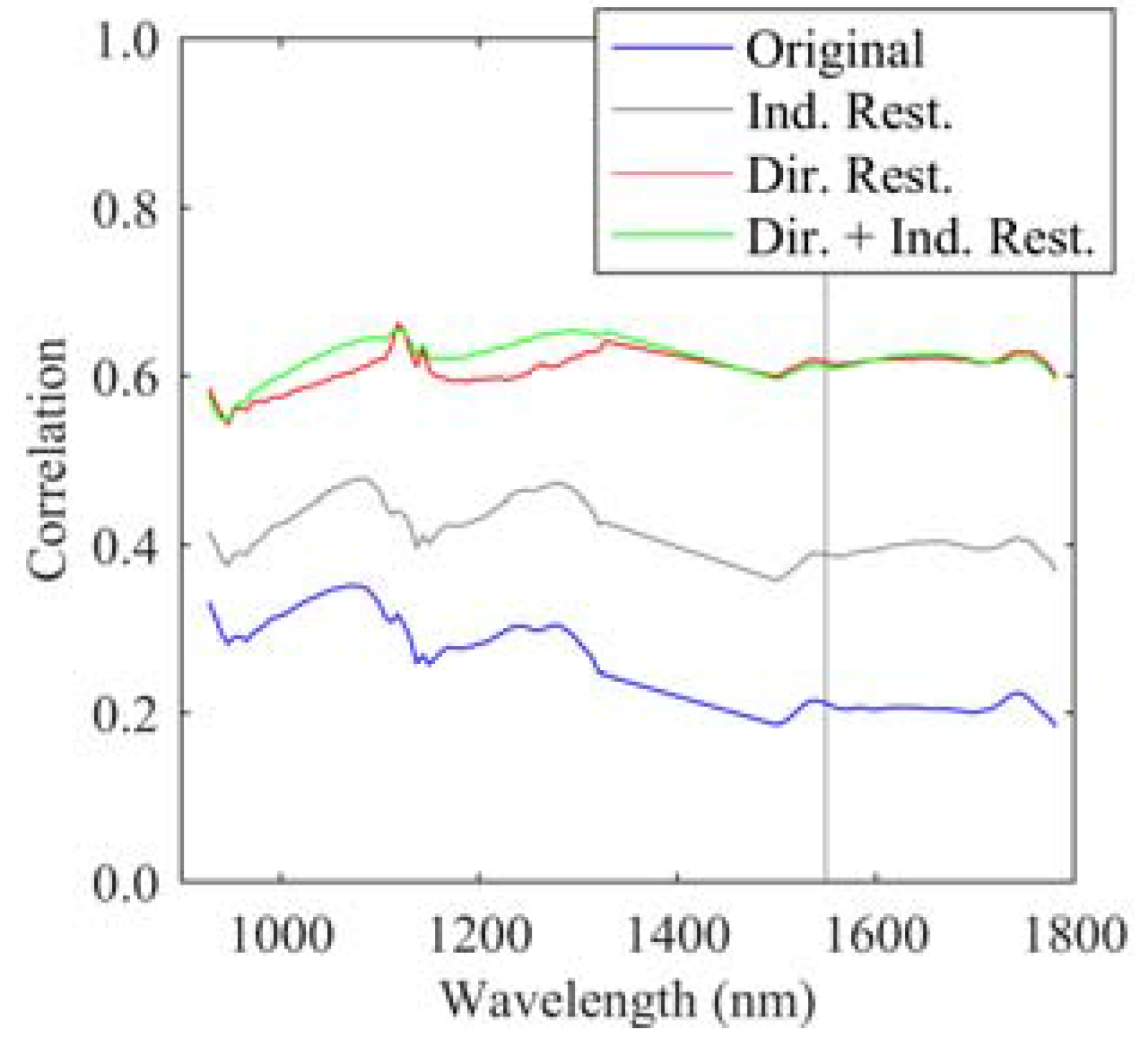

4.2. Shadow Restoration

4.2.1. Indirect Restoration

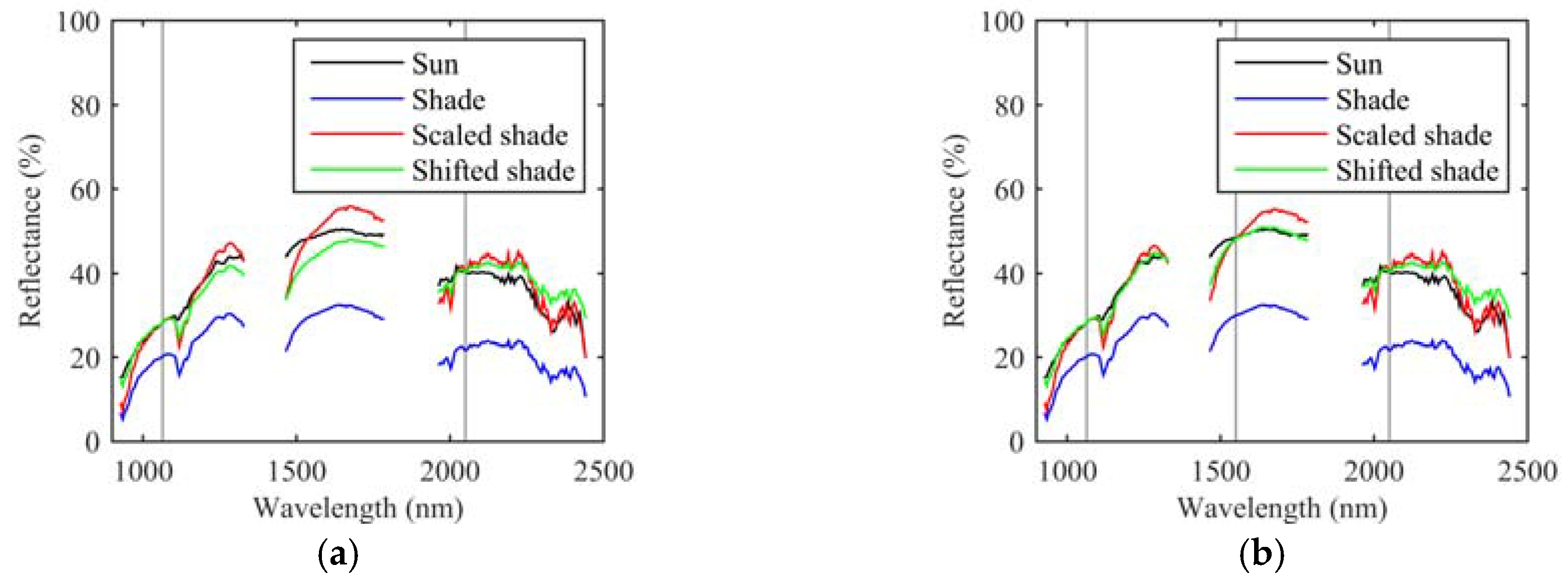

4.2.2. Single Wavelength Direct Restoration

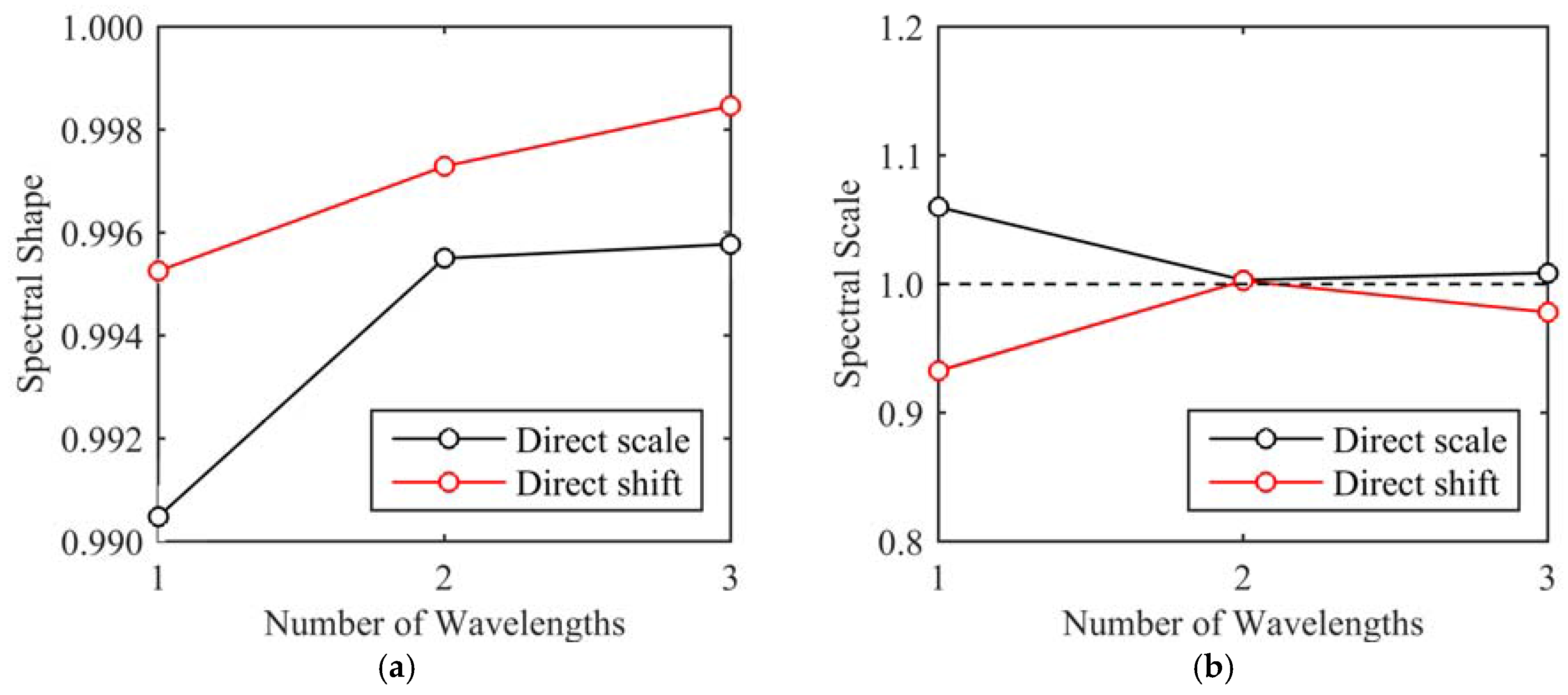

4.2.3. Multiple Wavelength Direct Restoration Simulation

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Schaepman, M.E.; Ustin, S.L.; Plaza, A.J.; Painter, T.H.; Verrelst, J.; Liang, S. Earth system science related imaging spectroscopy—An assessment. Remote Sens. Environ. 2009, 113, S123–S137.

- Goetz, A.F.H. Three decades of hyperspectral remote sensing of the Earth: A personal view. Remote Sens. Environ. 2009, 113, S5–S16.

- Buckley, S.J.; Kurz, T.H.; Howell, J.A.; Schneider, D. Terrestrial lidar and hyperspectral data fusion products for geological outcrop analysis. Comput. Geosci. 2013, 54, 249–258.

- Kurz, T.H.; Buckley, S.J.; Howell, J.A. Close-range hyperspectral imaging for geological field studies: Workflow and methods. Int. J. Remote Sens. 2013, 34, 1798–1822.

- Kurz, T.H.; Buckley, S.J.; Howell, J.A.; Schneider, D. Integration of panoramic hyperspectral imaging with terrestrial lidar data. Photogramm. Rec. 2011, 26, 212–228.

- Kurz, T.H.; Dewit, J.; Buckley, S.J.; Thurmond, J.B.; Hunt, D.W.; Swennen, R. Hyperspectral image analysis of different carbonate lithologies (limestone, karst and hydrothermal dolomites): The Pozalagua Quarry case study (Cantabria, North-west Spain). Sedimentology 2012, 59, 623–645.

- Murphy, R.J.; Schneider, S.; Taylor, Z.; Nieto, J. Mapping clay minerals in an open-pit mine using hyperspectral imagery and automated feature extraction. In Proceedings of the Vertical Geology Conference, Lausanne, Switzerland, 6–7 February 2014.

- Murphy, R.J.; Monteiro, S.T. Mapping the distribution of ferric iron minerals on a vertical mine face using derivative analysis of hyperspectral imagery (430–970 nm). ISPRS J. Photogramm. Remote Sens. 2013, 75, 29–39.

- Murphy, R.J.; Monteiro, S.T.; Schneider, S. Evaluating Classification Techniques for Mapping Vertical Geology Using Field-Based Hyperspectral Sensors. IEEE Trans. Geosci. Remote Sens. 2012, 50, 3066–3080.

- Clark, R.N. Chapter 1: Spectroscopy of Rocks and Minerals, and Principles of Spectroscopy. In Manual of Remote Sensing, Remote Sensing for the Earth Sciences; Rencz, A.N., Ed.; John Wiley & Sons: New York, NY, USA, 1999; Volume 3, pp. 3–58.

- Van der Meer, F.D.; van der Werff, H.M.A.; van Ruitenbeek, F.J.A.; Hecker, C.A.; Bakker, W.H.; Noomen, M.F.; van der Meijde, M.; Carranza, E.J.M.; de Smeth, J.B.; Woldai, T. Multi- and hyperspectral geologic remote sensing: A review. Int. J. Appl. Earth Obs. Geoinform. 2012, 14, 112–128.

- Buckley, S.J.; Enge, H.D.; Carlsson, C.; Howell, J.A. Terrestrial laser scanning for use in virtual outcrop geology. Photogramm. Rec. 2010, 25, 225–239.

- Alexander, J. A discussion on the use of analogues for reservoir geology. Geol. Soc. Lond. Spec. Publ. 1992, 69, 175–194.

- Ientilucci, E.J. SHARE 2012: Analysis of illumination differences on targets in hyperspectral imagery. Proc. SPIE 2013.

- Adler-Golden, S.M.; Matthew, M.W.; Anderson, G.P.; Felde, G.W.; Gardner, J.A. An Algorithm for De-Shadowing Spectral Imagery. In Proceedings of the 11th JPL Airborne Earth Science Workshop, Pasadena, CA, USA, 5–8 March 2002.

- Friman, O.; Tolt, G.; Ahlberg, J. Illumination and shadow compensation of hyperspectral images using a digital surface model and non-linear least squares estimation. In Proceedings of the SPIE 8180, Image and Signal Processing for Remote Sensing XVII, Prague, Czech Republic, 19 September 2011.

- Schläpfer, D.; Richter, R.; Damm, A. Correction of Shadowing in Imaging Spectroscopy Data by Quantification of the Proportion of Diffuse Illumination. In Proceedings of the 8th SIG-IS EARSeL Imaging Spectroscopy Workshop, Nantes, France, 8–10 April 2013.

- Anderson, G.P.; Felde, G.W.; Hoke, M.L.; Ratkowski, A.J.; Cooley, T.; Chetwynd, J.H.; Gardner, J.A.; Adler-Golden, S.M.; Matthew, M.W.; Berk, A.; et al. MODTRAN4-based atmospheric correction algorithm: FLAASH (fast line-of-sight atmospheric analysis of spectral hypercubes). In Proceedings of the SPIE 4725, Algorithms and Technologies for Multispectral, Hyperspectral, and Ultraspectral Imagery VIII, Orlando, FL, USA, 1 April 2002.

- Richter, R.; Schläpfer, D. Geo-atmospheric processing of airborne imaging spectrometry data. Part 2: Atmospheric/topographic correction. Int. J. Remote Sens. 2002, 23, 2631–2649.

- Hartzell, P.J.; Glennie, C.L.; Finnegan, D.C. Empirical Waveform Decomposition and Radiometric Calibration of a Terrestrial Full-Waveform Laser Scanner. IEEE Trans. Geosci. Remote Sens. 2015, 53, 162–172.

- Kazhdan, M.; Hoppe, H. Screened Poisson surface reconstruction. ACM Trans. Graph. 2013, 32, 1–13.

- CloudCompare (Version: 2.6.1). Available online: http://www.cloudcompare.org/ (accessed on 15 May 2015).

- Han, T.; Goodenough, D.G.; Dyk, A.; Love, J. Detection and correction of abnormal pixels in Hyperion images. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Toronto, ON, Canada, 23–28 July 2002.

- Schneider, D.; Maas, H.G. A geometric model for linear-array-based terrestrial panoramic cameras. Photogramm. Rec. 2006, 21, 198–210.

- Scheibe, K.; Huang, F.; Klette, R. Calibration of Rotating Sensors. In Proceedings of Computer Analysis of Images and Patterns, Münster, Germany, 2–4 September 2009.

- Hartzell, P.J. Active and Passive Sensor Fusion for Terrestrial Hyperspectral Image Shadow Detection and Restoration. Ph.D. Dissertation, University of Houston, Houston, TX, USA, May 2016.

- Comaniciu, D.; Meer, P. Mean shift: A robust approach toward feature space analysis. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 603–619.

- Dare, P.M. Shadow Analysis in High-Resolution Satellite Imagery of Urban Areas. Photogramm. Eng. Remote Sens. 2005, 71, 169–177.

- Xia, H.; Chen, X.; Guo, P. A shadow detection method for remote sensing images using Affinity Propagation algorithm. In Proceedings of the IEEE International Conference on Systems, Man and Cybernetics, San Antonio, TX, USA, 5–8 October 2009.

- Tsai, V.J.D. A comparative study on shadow compensation of color aerial images in invariant color models. IEEE Trans. Geosci. Remote Sens. 2006, 44, 1661–1671.

- Tolt, G.; Shimoni, M.; Ahlberg, J. A shadow detection method for remote sensing images using VHR hyperspectral and LIDAR data. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Vancouver, BC, Canada, 23–28 July 2011.

- Adeline, K.R.M.; Chen, M.; Briottet, X.; Pang, S.K.; Paparoditis, N. Shadow detection in very high spatial resolution aerial images: A comparative study. ISPRS J. Photogramm. Remote Sens. 2013, 80, 21–38.

- Fredembach, C.; Finlayson, G. Simple Shadow Removal. In Proceedings of the 18th International Conference on Pattern Recognition, Hong Kong, China, 20–24 August 2006.

- Sarabandi, P.; Yamazaki, F.; Matsuoka, M.; Kiremidjian, A. Shadow detection and radiometric restoration in satellite high resolution images. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Anchorage, AK, USA, 20–24 September 2004.

- Zhou, W.; Huang, G.; Troy, A.; Cadenasso, M.L. Object-based land cover classification of shaded areas in high spatial resolution imagery of urban areas: A comparison study. Remote Sens. Environ. 2009, 113, 1769–1777.

- Li, Y.; Gong, P.; Sasagawa, T. Integrated shadow removal based on photogrammetry and image analysis. Int. J. Remote Sens. 2005, 26, 3911–3929.

- Hakala, T.; Suomalainen, J.; Kaasalainen, S.; Chen, Y. Full waveform hyperspectral LiDAR for terrestrial laser scanning. Opt. Express 2012, 20, 7119.

- Junttila, S.; Kaasalainen, S.; Vastaranta, M.; Hakala, T.; Nevalainen, O.; Holopainen, M. Investigating bi-temporal hyperspectral lidar measurements from declined trees-Experiences from laboratory test. Remote Sens. 2015, 7, 13863–13877.

- Powers, M.A.; Davis, C.C. Spectral LADAR: Active range-resolved three-dimensional imaging spectroscopy. Appl. Opt. 2012, 51, 1468–1478.

- Vauhkonen, J.; Hakala, T.; Suomalainen, J.; Kaasalainen, S.; Nevalainen, O.; Vastaranta, M.; Holopainen, M.; Hyyppa, J. Classification of Spruce and Pine Trees Using Active Hyperspectral LiDAR. IEEE Geosci. Remote Sens. Lett. 2013, 10, 1138–1141.

| TLS: Riegl VZ-400 | | HSI: Spectral Imaging, Ltd. Spectral Camera SWIR | |
|---|---|---|---|
| Property | Value | Property | Value |
| Laser wavelength | 1550 nm | Wavelength range | 970–2500 nm |
| Beam divergence | 0.35 mrad | Spectral pixel count | 240 |
| Maximum range | 400 m | Spectral sampling | 6.3 nm/pixel |
| Pulse repetition | 300 kHz | Spatial pixel count | 320 |
| Range accuracy | 5 mm at 100 m | Camera output | 14 bit |
| | | Lens focal length | 22.5 mm |
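As a rough consistency check on the tabulated sensor properties, the sketch below derives two quantities from them: the spectral span implied by the HSI spectral pixel count and sampling interval, and an approximate TLS beam footprint at a given range. The footprint formula (divergence times range, neglecting the beam's exit diameter) is a simplifying assumption made here, and all variable and function names are illustrative.

```python
# Values copied from the sensor table above.
wavelength_min_nm, wavelength_max_nm = 970.0, 2500.0
spectral_pixels = 240
sampling_nm_per_pixel = 6.3
beam_divergence_mrad = 0.35

# Spectral span implied by pixel count and sampling, versus the stated range.
implied_span_nm = spectral_pixels * sampling_nm_per_pixel
stated_span_nm = wavelength_max_nm - wavelength_min_nm

def footprint_mm(range_m, divergence_mrad=beam_divergence_mrad):
    """Approximate beam diameter in mm at `range_m` metres.

    Small-angle approximation; the exit beam diameter is neglected
    (an assumption of this sketch). Note that mrad * m = mm.
    """
    return divergence_mrad * range_m

print(f"implied span: {implied_span_nm:.0f} nm, stated span: {stated_span_nm:.0f} nm")
print(f"footprint at 100 m: {footprint_mm(100.0):.0f} mm")
```

Under these assumptions the implied span (about 1512 nm) is close to the stated 970–2500 nm range (1530 nm), and the beam footprint at 100 m is about 35 mm.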

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Hartzell, P.; Glennie, C.; Khan, S. Terrestrial Hyperspectral Image Shadow Restoration through Lidar Fusion. Remote Sens. 2017, 9, 421. https://doi.org/10.3390/rs9050421