Revealing Implicit Assumptions of the Component Substitution Pansharpening Methods

Abstract

1. Introduction

2. Concepts and Methodology

2.1. Bayesian Fusion Framework

2.2. CS Methods from a Bayesian Perspective
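The CS methods compared in the tables (GIHS, GS and GSA) are commonly written in one generic form. An intensity component I is built as a weighted sum of the upsampled MS bands, and the detail P - I is then injected into each band with a band-specific gain. The sketch below shows only that generic form and is not the paper's Bayesian derivation. It assumes plain Python lists for images, with each band stored as a flat list of pixels, and the names `cs_fuse`, `weights` and `gains` are hypothetical. With equal weights 1/N and unit gains it reduces to GIHS. GS and GSA differ in how the weights and gains are chosen.

```python
def cs_fuse(ms_up, pan, weights, gains):
    """Generic component-substitution (CS) fusion, illustrative sketch.

    ms_up   -- list of MS bands, each a flat list of pixels, already
               resampled to the PAN grid
    pan     -- flat list of PAN pixels, assumed already histogram-matched
    weights -- spectral weights w_k used to synthesize the intensity I
    gains   -- injection gains g_k, one per band
    """
    n_pix = len(pan)
    # Intensity component: I = sum_k w_k * M_k, computed pixel by pixel
    intensity = [sum(w * band[i] for w, band in zip(weights, ms_up))
                 for i in range(n_pix)]
    # Spatial detail to inject: D = P - I
    detail = [p - i for p, i in zip(pan, intensity)]
    # Fused band k: F_k = M_k + g_k * D
    return [[m + g * d for m, d in zip(band, detail)]
            for g, band in zip(gains, ms_up)]


# GIHS special case: equal weights 1/N and unit gains (hypothetical toy data)
ms = [[10.0, 20.0], [30.0, 40.0]]
pan = [25.0, 35.0]
fused = cs_fuse(ms, pan, weights=[0.5, 0.5], gains=[1.0, 1.0])
print(fused)  # [[15.0, 25.0], [35.0, 45.0]]
```

With equal weights and unit gains, the average of the fused bands at each pixel equals the PAN value (25 and 35 in this toy example).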

2.3. Best Practices in Histogram Matching
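Histogram matching in CS pansharpening is often implemented as a linear adjustment. The PAN image is rescaled so that it takes on the mean and standard deviation of the component it replaces. The following is a minimal sketch under that assumption. It uses plain Python lists, and `match_mean_std` is a hypothetical name. Because the matching target is passed in as an argument, the same function covers both Phist variants compared in the tables. The sketch does not decide which target is preferable.

```python
from statistics import fmean, pstdev


def match_mean_std(pan, target):
    """Linear histogram matching of PAN to a target component (sketch).

    Returns P_hist = (P - mean(P)) * std(T) / std(P) + mean(T), so the
    output has the mean and (population) standard deviation of target T.
    """
    mu_p, sd_p = fmean(pan), pstdev(pan)
    mu_t, sd_t = fmean(target), pstdev(target)
    if sd_p == 0:
        # Constant PAN: only the mean can be matched
        return [mu_t for _ in pan]
    scale = sd_t / sd_p
    return [(p - mu_p) * scale + mu_t for p in pan]


# Hypothetical toy data: match a 4-pixel PAN to a 4-pixel intensity component
p_hist = match_mean_std([0.0, 2.0, 4.0, 6.0], [10.0, 11.0, 12.0, 13.0])
print([round(v, 6) for v in p_hist])  # [10.0, 11.0, 12.0, 13.0]
```

This sketch makes two passes over the pixels to collect statistics and one pass to rescale them, so its running time is linear in the image size.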

3. Experiments and Results

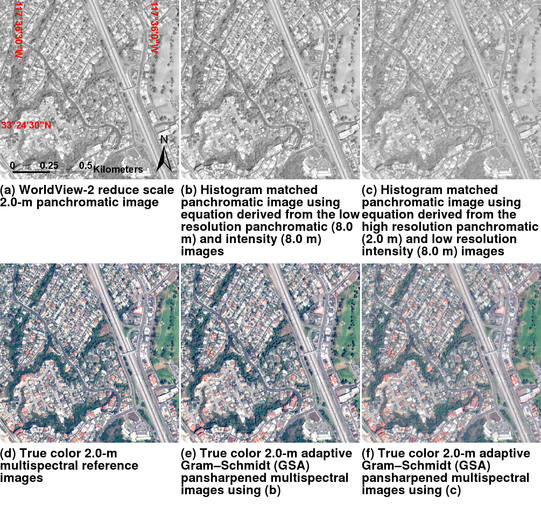

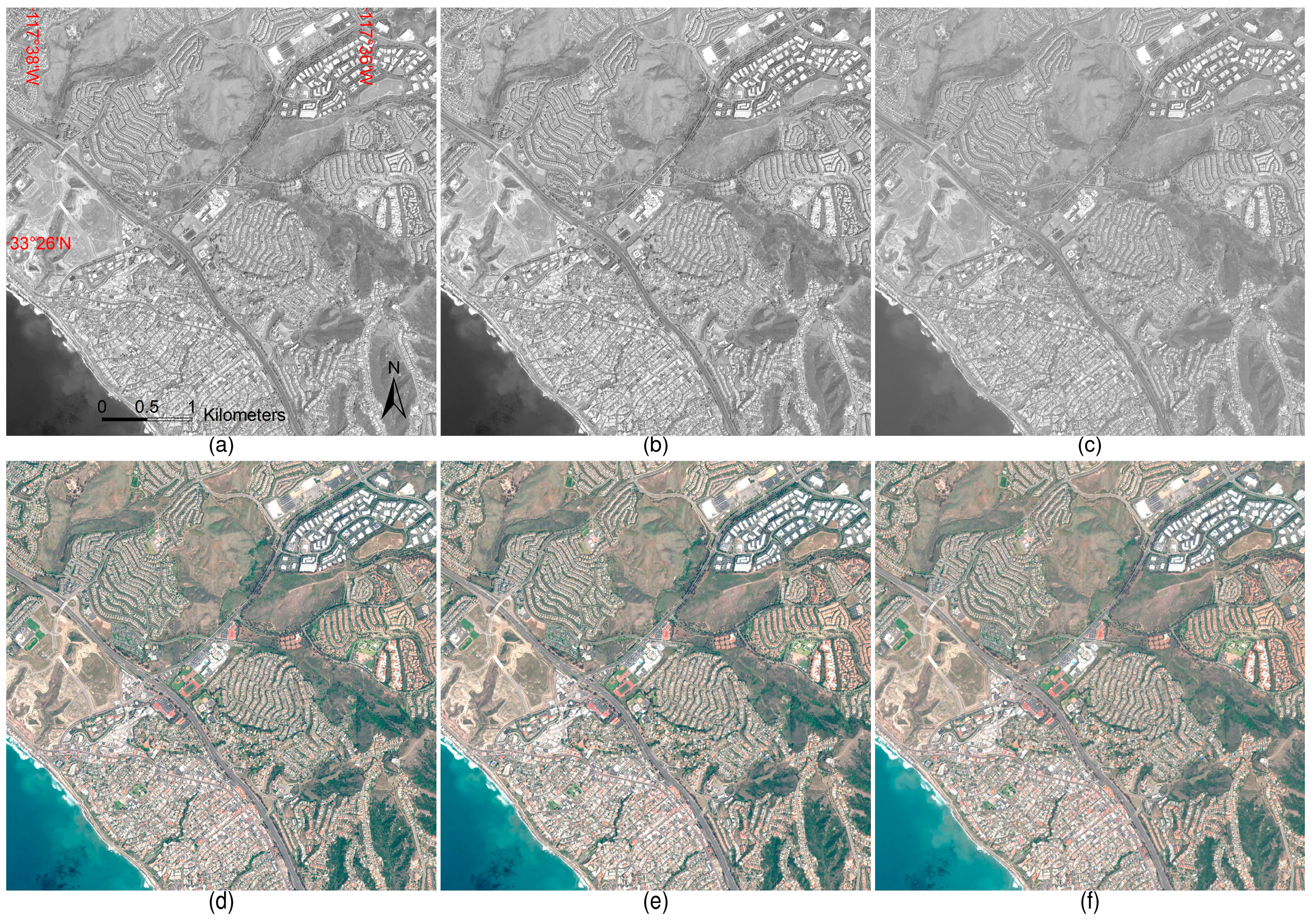

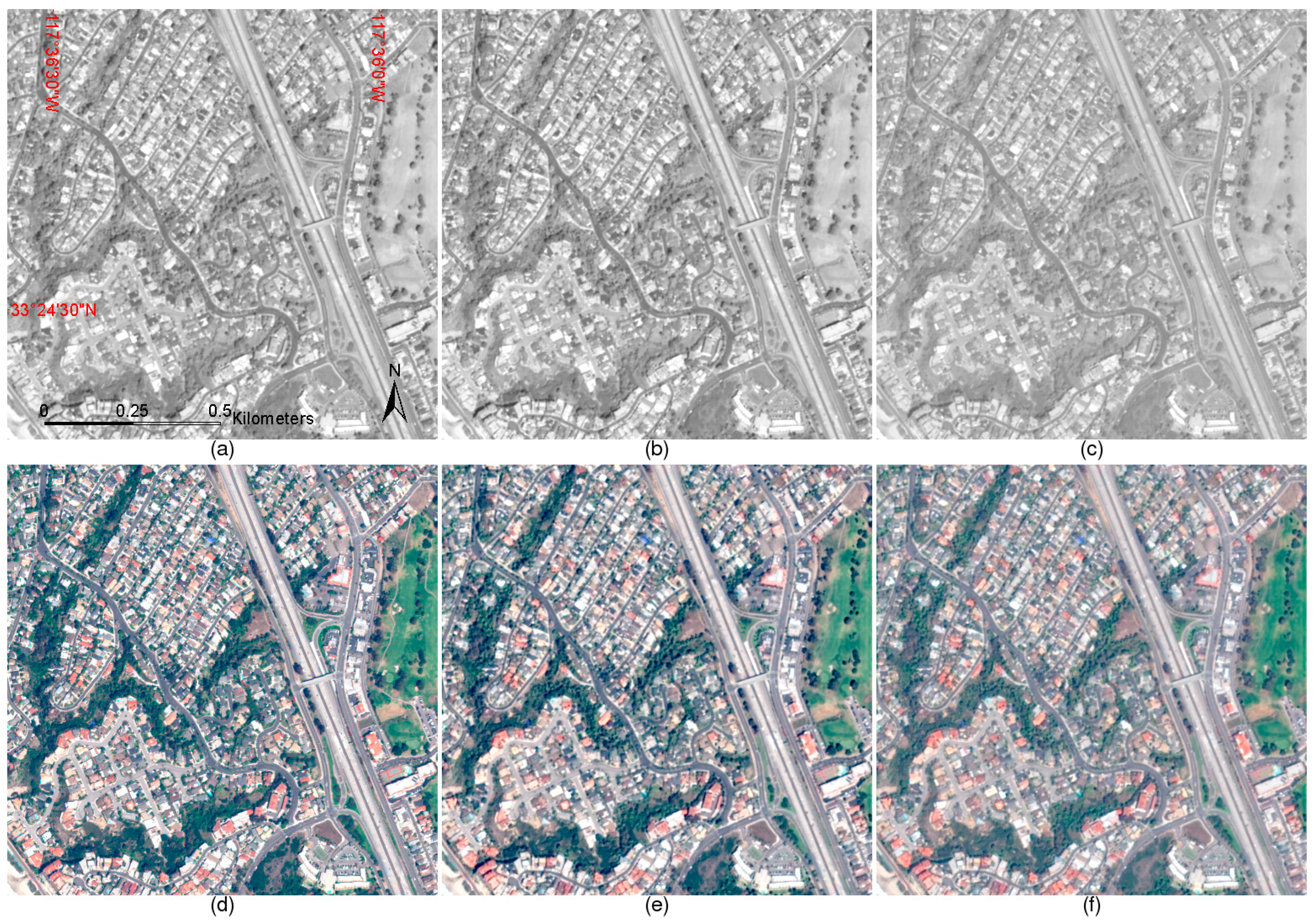

3.1. Data and Experimental Settings

3.2. Quantitative Evaluation of the Experimental Results
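SAM and ERGAS, two of the indices reported in the tables, have standard definitions that can be sketched directly. The code below assumes plain Python lists, with each band stored as a flat list of pixels, and reports SAM in degrees. For ERGAS it uses a PAN-to-MS pixel-size ratio of 0.25, which corresponds to a 1:4 resolution ratio. The names `sam_degrees` and `ergas` and these defaults are assumptions and are not the paper's code. Lower values are better for both indices, and both are 0 for a perfect reconstruction. Q4 is omitted because it requires quaternion arithmetic.

```python
import math
from statistics import fmean


def sam_degrees(ref, fused):
    """Mean spectral angle mapper (SAM) in degrees.

    ref, fused -- lists of bands, each a flat list of pixels on the same grid.
    Pixels whose spectra are all zero are skipped (angle undefined); if every
    pixel is skipped, fmean raises StatisticsError.
    """
    angles = []
    for px in range(len(ref[0])):
        v = [band[px] for band in ref]
        w = [band[px] for band in fused]
        dot = sum(a * b for a, b in zip(v, w))
        norm = math.sqrt(sum(a * a for a in v) * sum(b * b for b in w))
        if norm == 0:
            continue
        cos = max(-1.0, min(1.0, dot / norm))  # clamp floating-point overshoot
        angles.append(math.degrees(math.acos(cos)))
    return fmean(angles)


def ergas(ref, fused, ratio=0.25):
    """ERGAS = 100 * ratio * sqrt(mean_k (RMSE_k / mean(ref_k))**2).

    ratio -- PAN-to-MS pixel-size ratio h/l; 0.25 (a 1:4 ratio) is an assumption.
    """
    terms = []
    for r_band, f_band in zip(ref, fused):
        rmse = math.sqrt(fmean([(r - f) ** 2 for r, f in zip(r_band, f_band)]))
        terms.append((rmse / fmean(r_band)) ** 2)
    return 100.0 * ratio * math.sqrt(fmean(terms))


# Hypothetical 2-band, 2-pixel example
ref = [[10.0, 10.0], [20.0, 20.0]]
fused = [[11.0, 11.0], [20.0, 20.0]]
print(round(sam_degrees(ref, fused), 3), round(ergas(ref, fused), 3))
```

SAM depends only on the angle between spectra, so scaling every band of a pixel by the same factor leaves it at 0. ERGAS penalizes such radiometric changes.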

3.3. Results

4. Discussion

4.1. The Usefulness of the Revealed Statistical Assumptions

4.2. Time Complexity of the Suggested Histogram Matching Method

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


Columns 2 to 5 report the synthesis property at reduced scale; columns 6 to 9 report the consistency property at full scale.

| Method | Q4 | SAM | ERGAS |  | Q4 | SAM | ERGAS |  |
|---|---|---|---|---|---|---|---|---|
| EXP () | 0.630 | 4.440 | 4.627 | NA | 0.973 | 1.188 | 1.165 | NA |
| GIHS Phist(p→i) | 0.827 | 5.406 | 4.192 | 0.0051 | 0.973 | 1.899 | 2.115 | 0.0079 |
| GIHS Phist(P→ĩ) | 0.780 | 5.111 | 4.237 | 0.0071 | 0.966 | 1.696 | 2.228 | 0.0089 |
| GS Phist(p→i) | 0.850 | 4.801 | 3.929 | 0.0051 | 0.976 | 1.514 | 1.872 | 0.0079 |
| GS Phist(P→ĩ) | 0.805 | 4.680 | 4.076 | 0.0071 | 0.969 | 1.426 | 2.011 | 0.0089 |
| GSA Phist(p→i) | 0.853 | 4.491 | 3.608 | 0.0024 | 0.976 | 1.256 | 1.241 | 0.0068 |
| GSA Phist(P→ĩ) | 0.807 | 4.474 | 3.845 | 0.0074 | 0.967 | 1.276 | 1.494 | 0.0092 |

Columns 2 to 5 report the synthesis property at reduced scale; columns 6 to 9 report the consistency property at full scale.

| Method | Q4 | SAM | ERGAS |  | Q4 | SAM | ERGAS |  |
|---|---|---|---|---|---|---|---|---|
| EXP () | 0.571 | 5.310 | 5.184 | NA | 0.733 | 1.745 | 1.526 | NA |
| GIHS Phist(p→i) | 0.607 | 5.563 | 3.540 | 0.0099 | 0.679 | 2.318 | 2.636 | 0.0145 |
| GIHS Phist(P→ĩ) | 0.583 | 5.730 | 3.821 | 0.0113 | 0.657 | 2.413 | 2.665 | 0.0146 |
| GS Phist(p→i) | 0.624 | 5.553 | 3.478 | 0.0099 | 0.667 | 2.646 | 2.485 | 0.0145 |
| GS Phist(P→ĩ) | 0.599 | 5.871 | 3.789 | 0.0113 | 0.649 | 2.822 | 2.523 | 0.0146 |
| GSA Phist(p→i) | 0.626 | 5.000 | 3.149 | 0.0023 | 0.764 | 1.807 | 1.828 | 0.0088 |
| GSA Phist(P→ĩ) | 0.592 | 5.335 | 3.542 | 0.0072 | 0.723 | 1.999 | 1.959 | 0.0097 |

Columns 2 to 5 report the synthesis property at reduced scale; columns 6 to 9 report the consistency property at full scale.

| Method | Q4 | SAM | ERGAS |  | Q4 | SAM | ERGAS |  |
|---|---|---|---|---|---|---|---|---|
| EXP () | 0.317 | 6.545 | 5.458 | NA | 0.557 | 2.051 | 1.542 | NA |
| GIHS Phist(p→i) | 0.341 | 6.679 | 3.797 | 1.266 | 0.465 | 2.495 | 2.924 | 1.7855 |
| GIHS Phist(P→ĩ) | 0.303 | 6.688 | 4.291 | 1.414 | 0.398 | 2.508 | 2.929 | 1.7876 |
| GS Phist(p→i) | 0.362 | 6.501 | 3.725 | 1.266 | 0.434 | 2.766 | 2.657 | 1.7855 |
| GS Phist(P→ĩ) | 0.308 | 6.648 | 4.287 | 1.414 | 0.385 | 2.838 | 2.684 | 1.7876 |
| GSA Phist(p→i) | 0.356 | 6.350 | 3.209 | 0.243 | 0.636 | 2.123 | 1.882 | 0.9771 |
| GSA Phist(P→ĩ) | 0.320 | 6.436 | 3.930 | 0.980 | 0.550 | 2.209 | 2.088 | 1.1309 |

| Method | 4-Band QuickBird Dataset (Figure 1) | 8-Band WorldView-2 Urban 1 Dataset (Figure 2) | 8-Band WorldView-2 Urban 2 Dataset (Figure 3) |
|---|---|---|---|
| EXP () | 0.11 | 0.56 | 0.22 |
| GIHS Phist(p→i) | 0.31 | 5.98 | 0.47 |
| GIHS Phist(P→ĩ) | 0.26 | 5.10 | 0.37 |
| GS Phist(p→i) | 0.37 | 6.49 | 0.50 |
| GS Phist(P→ĩ) | 0.29 | 5.17 | 0.41 |
| GSA Phist(p→i) | 0.40 | 6.52 | 0.55 |
| GSA Phist(P→ĩ) | 0.41 | 6.50 | 0.56 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Xie, B.; Zhang, H.K.; Huang, B. Revealing Implicit Assumptions of the Component Substitution Pansharpening Methods. Remote Sens. 2017, 9, 443. https://doi.org/10.3390/rs9050443
