1. Introduction

Urban areas are the main areas of human activity, and they have a great impact on the Earth’s land-surface change and the ecological environment. An urban distribution map can reflect the degree of regional urbanization, and is also the most basic geographic data source for urban planning, development, and change monitoring [1]. In recent decades, remote sensing has been the main approach to efficient urban land-cover mapping. Considerable efforts have been made to improve the accuracy of urban land-cover mapping [2,3,4,5,6,7,8]. A number of different approaches have been proposed in recent years for automatically detecting urban areas from remotely sensed images [9,10].

In the early years, only low or medium spatial resolution images were available to the general public and researchers [11,12]. In these images, the characteristics of urban areas are mainly identified by impervious surfaces [9] or built-up areas [10]. Previous research relied on traditional pattern recognition and classification methods, such as spectral unmixing [13], artificial neural networks [14], support vector machine, and random forest [15,16,17]. However, the results of these (supervised) methods depend on the quality of the selected training samples, and collecting these samples is a time-consuming task.

Many different indices for impervious surfaces and built-up areas have been presented, including the urban index (UI) [18], the normalized difference built-up index (NDBI) [19], the normalized difference impervious surface index (NDISI) [20], the enhanced built-up and bareness index (EBBI) [21], and the combinational build-up index (CBI) [9]. These indices are quick, simple, and convenient in practical applications. However, these indices face a variety of challenges. For example, they are subject to seasonal variation, and it can be difficult to distinguish between built-up and bare land areas. These indices may also require special bands, or they may be sensor-dependent [9]. In [22], multitemporal synthetic aperture radar (SAR) data were used to define the built-up index.

With the increase of the spatial resolution of images, the significant spatial structure information of the land surface can be used to improve the accuracy of urban area extraction [23,24,25]. Urban scenes have unique textures when compared to natural scenes, and this can be exploited for classification using texture descriptors such as the gray-level co-occurrence matrix (GLCM) [26], the normalized gray-level histogram [27], and the Gabor wavelet [28]. Pesaresi et al. [10] proposed a robust built-up area presence index, namely, the PanTex index, which is an anisotropic rotation-invariant textural measure derived from panchromatic satellite data. In [29], morphological multiscale operators were used to undertake urban texture recognition.

In all the references reviewed, it was found that most of the images (e.g., Moderate Resolution Imaging Spectroradiometer (MODIS), Landsat 8, Landsat Thematic Mapper (TM), and SPOT-5) used for urban area extraction have a spatial resolution coarser than 5 m [9,11]. In addition, with the increase of the resolution, the extraction accuracy of urban areas is reduced [10], for the following reasons. Urban areas are a complex combination of various land-cover types (vegetation, buildings with different colors, roads, water, parking lots, etc.), and they show a variety of land-use structures and spatial layouts. Furthermore, impervious surfaces (buildings, roads, parking lots, and so on) dominate urban areas. Thus, in low- and medium-resolution images, urban areas are mainly manifested by impervious surfaces with similar spectral information. With the increase of the image resolution, the small objects of the non-impervious surface land-cover types, such as vegetation, water, grass, soil, and so on, are clearly displayed in the images, which brings great challenges to the urban area extraction, especially for high-resolution remote sensing images containing only four channels (red (R), green (G), blue (B), and near-infrared (NIR)).

An urban place can be regarded as a spatial concentration of people whose lives are organized around nonagricultural activities [30]. Urban areas are created through urbanization, and are categorized by urban morphology as cities, towns, conurbations, or suburbs. “Built-up area” is a term used primarily in urban planning, real estate development, building design, and the construction industry. It encompasses the following: firstly, a developed area, i.e., any land on which buildings are present, normally as part of a larger development; and, secondly, a “gross building area” (construction area). Impervious surfaces are mainly artificial structures (pavements, roads, sidewalks, driveways, and parking lots) that are covered by impenetrable materials such as asphalt, concrete, brick, and stone. It is clear that urban areas partly equate to built-up areas, and impervious surfaces are not equivalent to urban areas, which also contain green spaces. Due to one pixel covering a large area of ground in low- and medium-resolution images, and the fact that impervious surfaces dominate urban zones, urban areas and impervious surfaces have similar spectral information. The extraction of urban areas, built-up areas, and impervious surfaces is indistinguishable when using low- and medium-resolution images. However, in all the references reviewed, we found that these concepts of urban areas, built-up areas, and impervious surface are usually not distinguished in high spatial resolution imagery [10,31].

The aim of this paper is to extract urban areas from high spatial resolution images, and to simultaneously analyze the spatial structure distribution of the urban areas. This task is faced with many challenges:

Firstly, urban areas contain various land-cover types (vegetation, buildings, roads, water bodies, parking lots, green spaces, bare soil, shadow, etc.) at microscopic (local spatial units) spatial extent, and complex combinations of the many land-cover types can be clearly seen in high spatial resolution images.

Secondly, many of the impervious land-cover types of urban areas, such as buildings, have similar spectral information to the impervious land-cover types of non-urban areas, such as bare land, sand, or rock, especially for the images obtained in dry and cold seasons or high-latitude regions.

Thirdly, urban morphology shows diversity in the macroscopic spatial extent. Urban areas are categorized by urban morphology as cities, towns, conurbations, or suburbs, which show a wide variety of land-use structures and spatial layouts. Furthermore, with the development of urban planning, the greening of urban areas is becoming more common, which can result in confusion with rural areas.

Wilkinson [32] and Li et al. [33] demonstrated that new algorithms can improve the accuracy of land-cover and land-use mapping, but the improvement is limited. Gong et al. [34] suggested that more effort should be made to include new features to improve the accuracy of land-cover mapping. In addition, recent studies of deep learning have demonstrated that a combination of low-level features can form a more abstract high-level representation of the attribute categories or characteristics, and can significantly improve the recognition accuracy [35,36].

The aim of this study is to extract the urban areas from high spatial resolution images. In order to solve the above problems, a new approach is proposed based on small spatial units and high-level features extracted from the primitive features, such as regional features and line segments. This approach is much easier than the fusion of features at the land-use level. Classification and urban spatial structure analysis are then performed for all the non-overlapping small spatial units of the entire image (the flow chart of the proposed approach is shown in Section 3). The contributions of this paper are as follows:

(1) The proposed method has the advantage of strong fault tolerance. The multiple high-level features, which are extracted from the primitive features in microscopic spatial extents, are used to describe the complex spectral and spatial properties of urban areas.

(2) Line segments and their spatial relationship with regional features are used to describe the urban area.

(3) Urban morphology analysis is undertaken at a macroscopic spatial extent, based on morphological spatial pattern analysis (MSPA).

The rest of this paper is organized as follows. The study areas and remotely sensed data are introduced in Section 2. Section 3 introduces the proposed method, including the low-level feature extraction, the chessboard segmentation of the entire image into non-overlapping small spatial units, the high-level feature extraction, the random forest classification, and the spatial structure analysis. The results of the experiments are reported in Section 4. Finally, a discussion and conclusions are provided in Section 5 and Section 6.

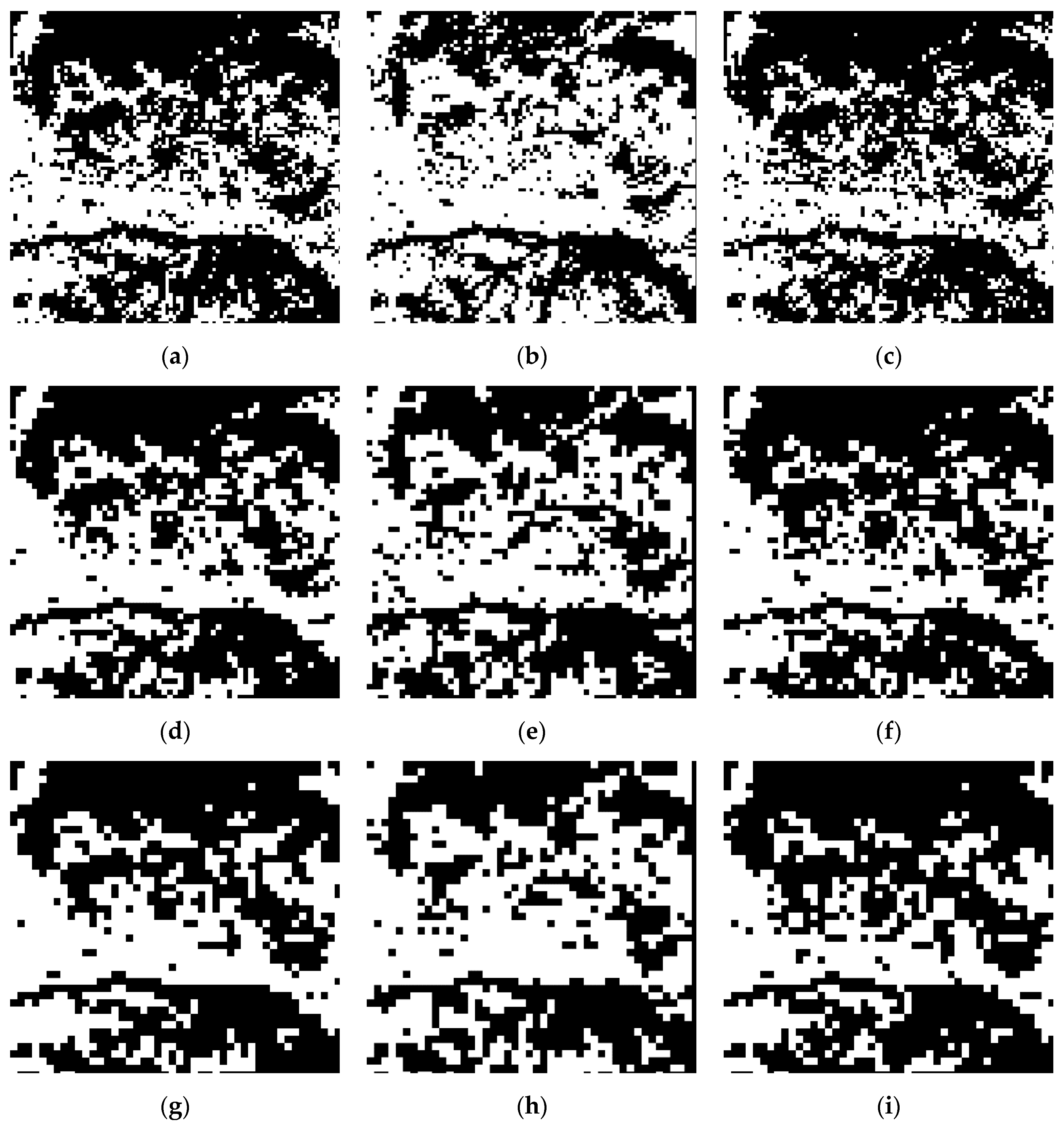

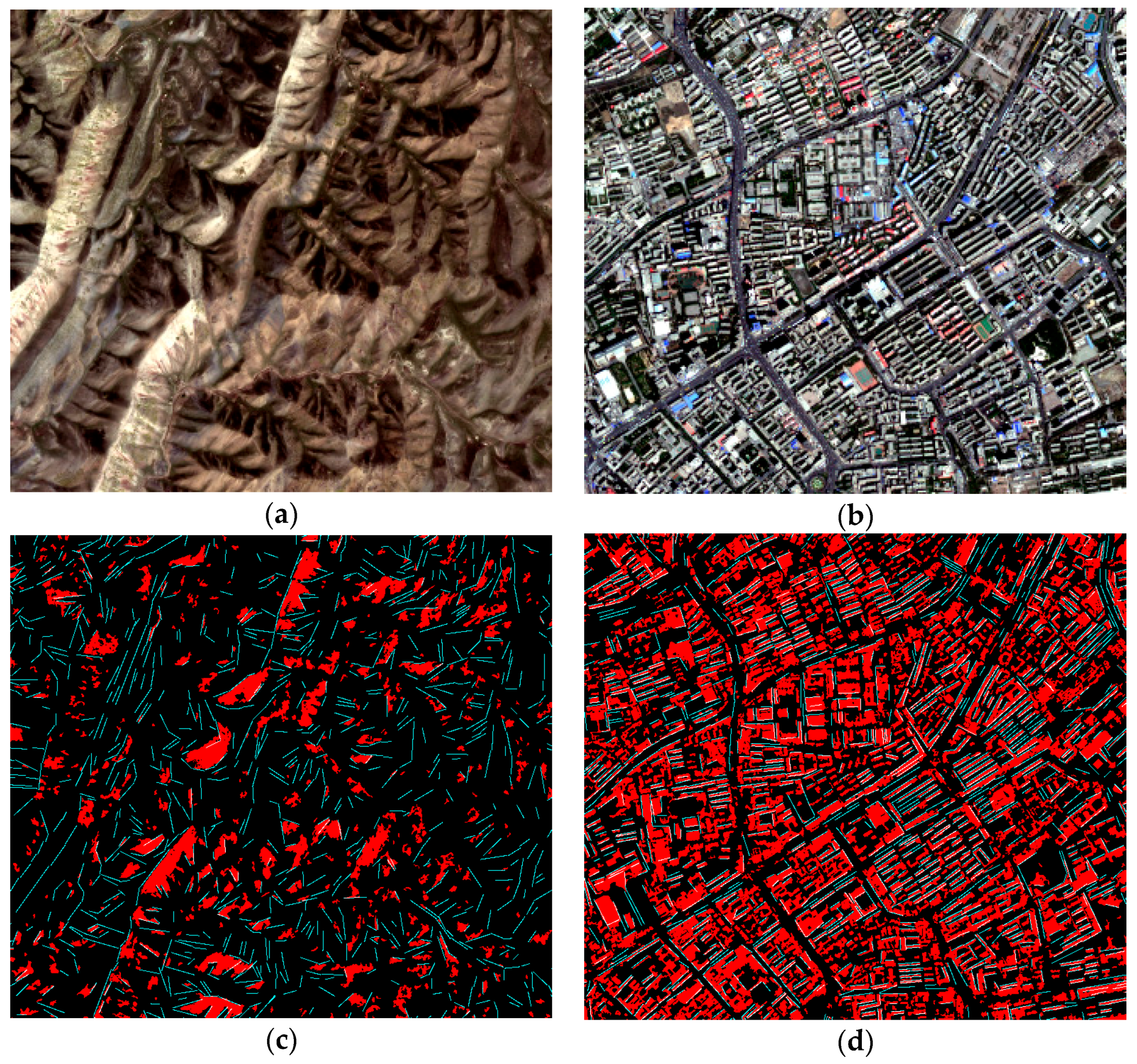

2. Study Areas and Data

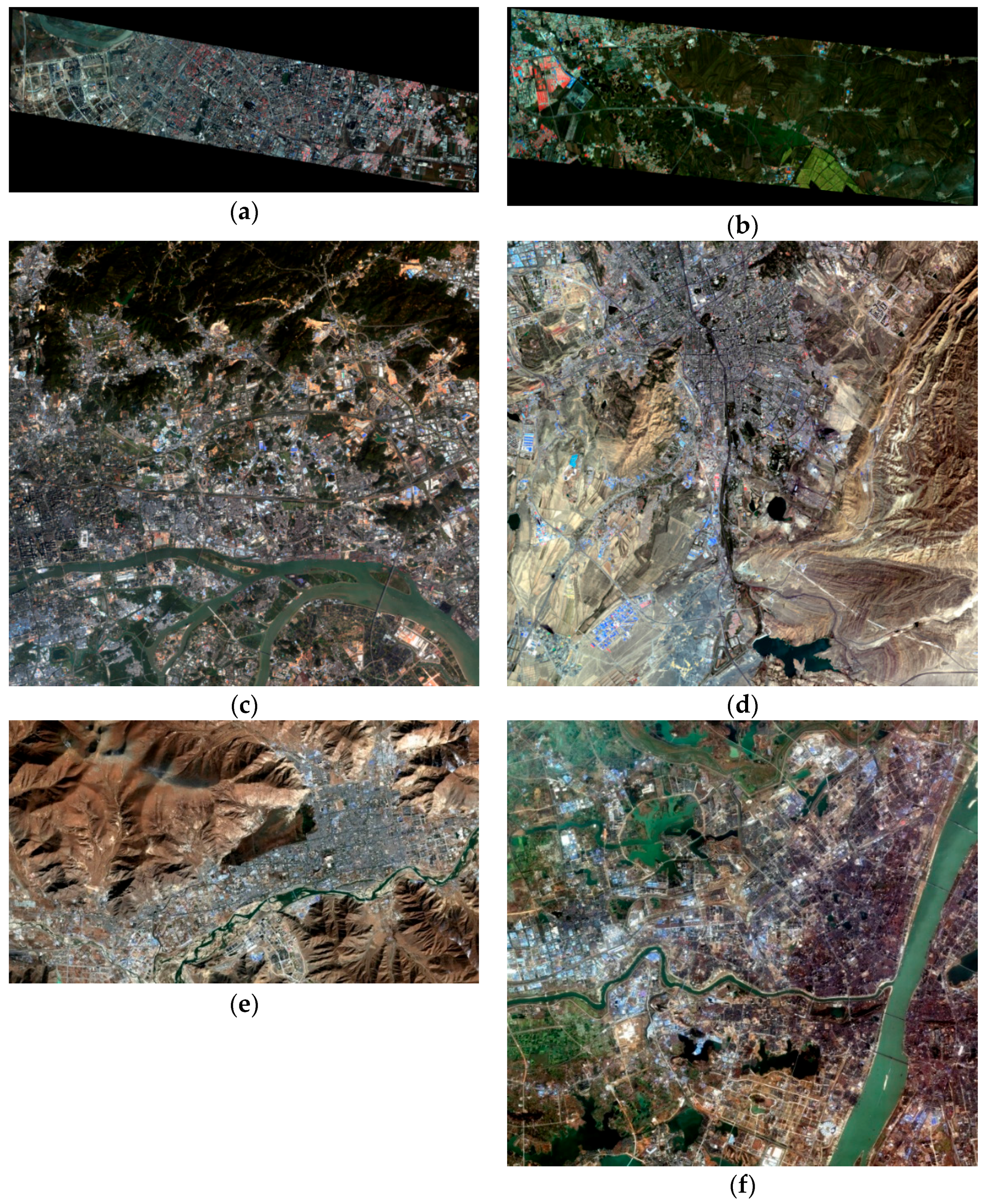

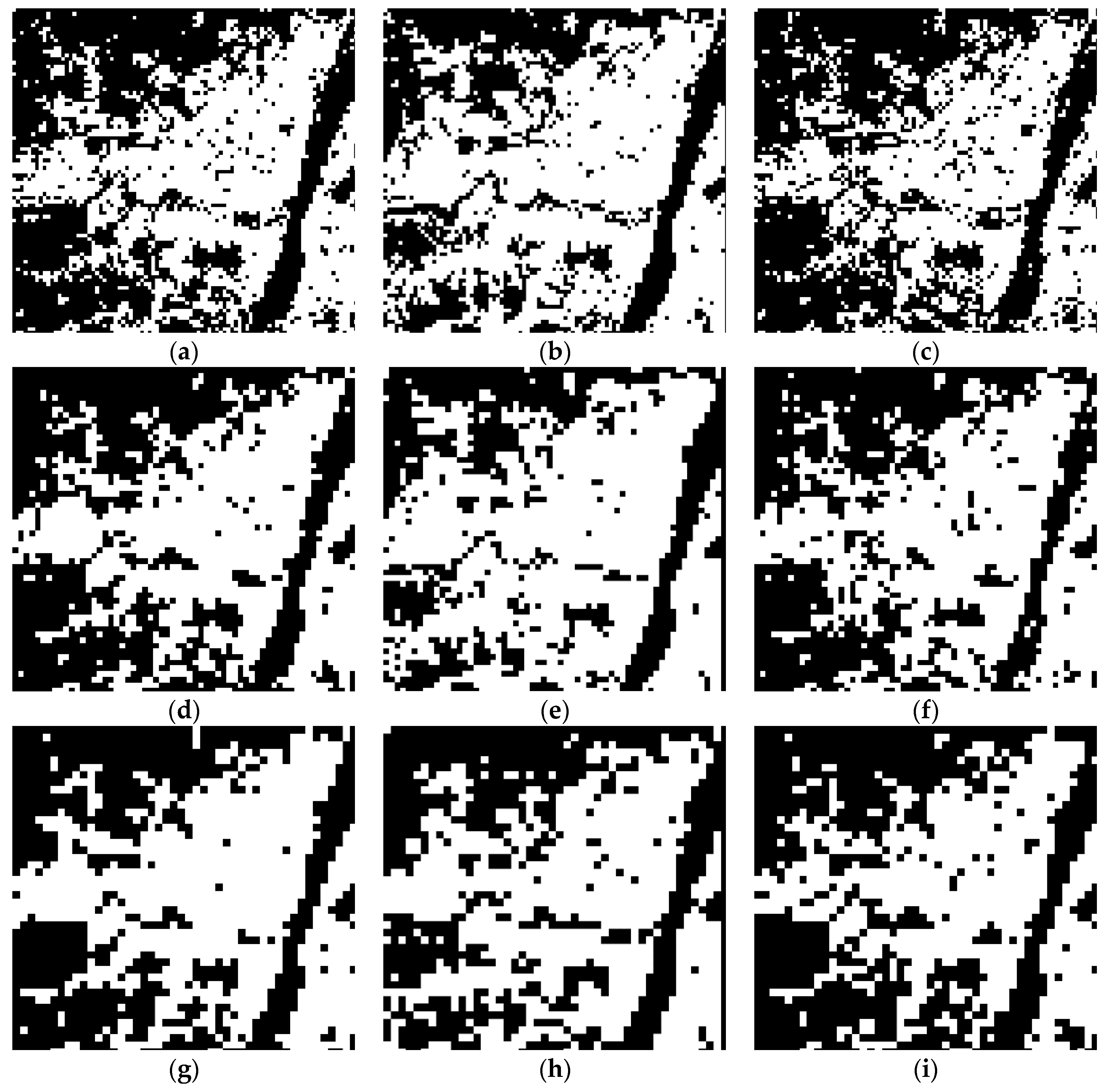

In order to test the robustness of the proposed method, six different test sites in China were selected, for which the data were obtained by different satellites and in different seasons. The images are denoted as R1–R6, and are shown in Table 1 and Figure 1. The images comprise two WorldView-2 images with a 2-m resolution (R1 and R2) and four Gaofen 2 (GF-2) images with a 4-m resolution (R3–R6). All the images have four bands, namely, R, G, B, and NIR.

The two WorldView-2 (WV-2) images, denoted as R1 and R2, cover Harbin, Heilongjiang province, and were acquired in September 2011 during the autumn (Figure 1a,b). Harbin features the highest latitude and the lowest temperature of all of China’s big cities, and has a temperate continental monsoon climate. There is less than 5% overlap between the R1 and R2 images. The vegetation in the R1 and R2 images is very lush. The R1 image mainly contains the city center and the surrounding towns, regions under development, and some rural areas. Image R2 comprises a small urban area, sparsely scattered villages, and large tracts of farmland.

Image R3 (Figure 1c) mainly covers the Tianhe and Huangpu districts of Guangzhou, Guangdong province, and was acquired in January 2015, during the winter. Guangzhou is a rapidly developing city, and image R3 contains many bright patches of bare land that have been prepared for building. Guangzhou is a hilly area that is located on the coast. The climate is subtropical monsoon.

Images R4 and R5 (Figure 1d,e) are of Urumqi (Wulumuqi), Xinjiang, and Lhasa, Tibet, respectively. Urumqi is the world’s farthest city from the ocean, and features a temperate continental arid climate. Image R4 was obtained in May 2015, during the early spring, and both the farmland and hills feature bright dry soil with sparse vegetation, which is difficult to separate from the Urumqi city areas. The bare hills feature a distinctive and unique texture characteristic, as shown in the right part of Figure 1d. Image R5 covers the city of Lhasa and its surrounding hills. The climate in this area is temperate semi-arid monsoon. Image R5 was obtained in November 2014, during the winter. As a result, the image contains bare vegetation, gravel, and dry soil, which have similar spectral characteristics to the buildings and roads in the city areas.

Image R6 is of Wuhan, China. The image was obtained in February 2015, during the winter. Wuhan has a humid subtropical monsoon climate. The Yangtze River and the Han River intersect in the center of the city of Wuhan, and many lakes and water bodies are found on both sides of the Yangtze River. It can be seen from Figure 1f that this image is very challenging and contains very complex and diverse land-cover types, e.g., water, river, old city with dense old buildings, newly developed areas, blocks of paddy fields, bare land, vegetation, areas to be developed with bright bare soil, and so on.

In general, images R3 and R6 contain much more complex land-cover and land-use types than the other test images. Clearly, images R4 and R5 are similar, containing large areas of bare mountain and bare soil, covered with very little vegetation. Lush vegetation is found on most of the non-built-up areas of images R1, R2, R3, and R6. The many patches of bright bare land with bright soil found in images R1, R2, R3, and R6 have a negative impact on the urban area extraction.

3. Methodology

Urban areas are complex compositions of multiple objects of multiple material types, especially in the impervious areas, which often leads to confusion with non-urban areas. This complexity of the urban areas not only refers to the spectra of the urban objects, but also the size, spatial structure, and layout, especially in high spatial resolution images. The spatial layout and the relationship between buildings and vegetation reflect the essential characteristics of the city.

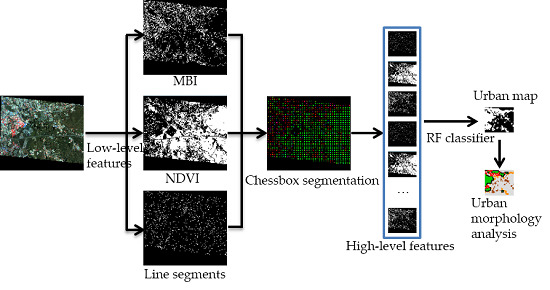

Therefore, in this study, a new approach is proposed to extract urban areas in high spatial resolution images. The flow chart of the proposed approach is shown in Figure 2. The primitive features reflecting the characteristics of buildings (building regions), vegetation, and line segments are first extracted.

In this study, the morphological building index (MBI) and the NDVI are used to represent the regional features of buildings and vegetation. However, the NDVI cannot always detect vegetation areas correctly, due to seasonal variations. The bare land is then mistakenly extracted as buildings, and is considered as an urban area. The line segments are used to solve this problem. The line segment characteristics of urban areas are significant, due to the dominant urban objects, such as buildings and roads, having regular shapes. Because it is quick and easy to implement, a chessboard segmentation algorithm is used to segment the entire image to obtain non-overlapping small spatial units. In each spatial unit, high-level features are abstracted from the MBI, the NDVI, and the line segment map. The random forest (RF) classifier is then employed for the classification of each spatial unit. Then, based on the spatial units, the spatial structure of the urban area is analyzed. The following sections describe the details.

3.1. Morphological Building Index (MBI)

The state-of-the-art MBI [37,38] building extraction method is used because it is free of parameters, multiscale, multidirectional, and unsupervised. The MBI has also been proved to be effective for automatic building extraction from high spatial resolution images [39,40]. The basic principle of the MBI is that buildings are brighter than their surroundings (especially building shadow). The MBI uses a set of morphological operators (e.g., top-hat by reconstruction, granulometry, and directionality) to represent the spectral–spatial regional properties of buildings (e.g., brightness, size, contrast, directionality, and shape). The calculation of the MBI is briefly described as follows.

Step 1: Brightness image. The maximum value for each pixel in the visible bands is kept as the brightness, since the visible bands make the most significant contribution to the spectral property of buildings.

Step 2: Top-hat morphological profiles. The differential morphological profiles of the top-hat transformation ($\mathrm{DMP}_{TH}$) represent the spectral–structural property of the buildings:

$$\mathrm{DMP}_{TH} = \{\mathrm{DMP}_{TH}(d, s) : d \in D,\ s \in S\}, \qquad \mathrm{DMP}_{TH}(d, s) = \left| TH(d, s + \Delta s) - TH(d, s) \right| \tag{1}$$

where $TH$ represents the top-hat by reconstruction of the brightness image; $s$ and $d$ indicate the scale and direction of a linear structural element (SE), respectively; $S$ and $D$ represent the sets of scales and directions, respectively; and $\Delta s$ is the interval of the profiles. The top-hat transformation can highlight the locally bright structures with a size up to a predefined value, and is used to measure the contrast.

Step 3: Calculation of the MBI. The MBI is defined by Equation (2):

$$\mathrm{MBI} = \frac{\sum_{d \in D} \sum_{s \in S} \mathrm{DMP}_{TH}(d, s)}{N_d \times N_s} \tag{2}$$

where $N_d$ and $N_s$ are the number of directions and scales, respectively. The building index is defined as the average of the multiscale and multidirectional $\mathrm{DMP}_{TH}$, since building structures have larger feature values in most of the scales and directions in the morphological profiles, due to their local contrast and isotropy.
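The three steps can be illustrated with a small pure-Python sketch. It rests on several simplifying assumptions that are not part of the method described above: a plain white top-hat (image minus opening) stands in for the top-hat by reconstruction, only two directions (0° and 90°) are used, the scale set `(3, 5, 7)` is hypothetical, and the average is taken over scale intervals rather than scales. All function names are illustrative.

```python
def brightness(r, g, b):
    """Step 1: per-pixel maximum of the visible bands."""
    return [[max(px) for px in zip(rr, gr, br)] for rr, gr, br in zip(r, g, b)]

def _filter_1d(row, length, op):
    """Apply op (min or max) over a centered window; windows are clipped at the ends."""
    half = length // 2
    n = len(row)
    return [op(row[max(0, j - half):min(n, j + half + 1)]) for j in range(n)]

def _opening_rows(img, length):
    """Opening with a horizontal linear SE: erosion followed by dilation."""
    eroded = [_filter_1d(row, length, min) for row in img]
    return [_filter_1d(row, length, max) for row in eroded]

def _transpose(img):
    return [list(col) for col in zip(*img)]

def top_hat(img, length, direction):
    """White top-hat for direction 0 (horizontal) or 90 (vertical).

    Assumption: plain opening, not opening by reconstruction.
    """
    src = img if direction == 0 else _transpose(img)
    opened = _opening_rows(src, length)
    if direction != 0:
        opened = _transpose(opened)
    return [[img[i][j] - opened[i][j] for j in range(len(img[0]))]
            for i in range(len(img))]

def mbi(img, scales=(3, 5, 7), directions=(0, 90)):
    """Steps 2 and 3: mean of |TH(d, s + ds) - TH(d, s)| over directions and scale steps."""
    h, w = len(img), len(img[0])
    total = [[0.0] * w for _ in range(h)]
    count = 0
    for d in directions:
        profiles = [top_hat(img, s, d) for s in scales]
        for lower, upper in zip(profiles, profiles[1:]):
            for i in range(h):
                for j in range(w):
                    total[i][j] += abs(upper[i][j] - lower[i][j])
            count += 1
    return [[v / count for v in row] for row in total]
```

On a dark image containing a single bright 3 × 3 block, this sketch responds only inside the block, because the block survives the opening at length 3 but is removed at length 5.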

3.2. Normalized Difference Vegetation Index (NDVI)

The NDVI is used to represent the vegetation components, such as the grass and trees of urban areas, and farmland and forest in non-urban areas. Its calculation is based on the physical–chemical characteristic of vegetation, which has a strong reflectance in the NIR channel, but strong absorption in the red channel:

$$\mathrm{NDVI} = \frac{NIR - R}{NIR + R} \tag{3}$$

The vegetation map is obtained by applying Otsu thresholding [41] to the NDVI index.
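A minimal sketch of this step is given below, assuming the bands are available as nested lists of reflectance values. The histogram size of 256 bins, the handling of zero denominators, and the function names are illustrative choices, not details taken from the study.

```python
def ndvi(nir, red):
    """Per-pixel (NIR - R) / (NIR + R); 0.0 where the denominator is zero (assumption)."""
    return [[(n - r) / (n + r) if (n + r) != 0 else 0.0
             for n, r in zip(nir_row, red_row)]
            for nir_row, red_row in zip(nir, red)]

def otsu_threshold(values, bins=256):
    """Return the threshold that maximises the between-class variance."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return lo
    width = (hi - lo) / bins
    hist = [0] * bins
    for v in values:
        hist[min(int((v - lo) / width), bins - 1)] += 1
    total = len(values)
    sum_all = sum(k * c for k, c in enumerate(hist))
    best_k, best_var = 0, -1.0
    w0, sum0 = 0, 0
    for k in range(bins):
        w0 += hist[k]           # pixels at or below bin k
        sum0 += k * hist[k]
        w1 = total - w0         # pixels above bin k
        if w0 == 0 or w1 == 0:
            continue
        between = w0 * w1 * (sum0 / w0 - (sum_all - sum0) / w1) ** 2
        if between > best_var:
            best_k, best_var = k, between
    return lo + (best_k + 1) * width  # upper edge of the best bin

def vegetation_mask(ndvi_img):
    """Binary vegetation map: NDVI above the Otsu threshold."""
    flat = [v for row in ndvi_img for v in row]
    t = otsu_threshold(flat)
    return [[v > t for v in row] for row in ndvi_img]
```

Because the threshold is derived from each image's own NDVI histogram, no per-image tuning is needed, although the result still inherits the seasonal sensitivity of the NDVI itself.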

3.3. Line Segments

Linear features are one of the most important characteristics of buildings, and are a good indicator of the existence of candidate buildings. Many studies have considered shadow as the proof of the existence of buildings [42,43]. Unfortunately, there are many factors that are beyond the control of the data user in very high resolution (VHR) satellite image acquisition, such as the sensor viewing angle, the solar angles, the season, the time, and the atmospheric conditions. Consequently, the shadow characteristics, such as size, shape, width, and length, are different in different images. Furthermore, in addition to buildings, many other objects, such as trees, vehicles, garden walls, pools, and bridges, cast shadows. Thus, the process of building shadow detection and extraction is time-consuming, and the accuracy of the results is not guaranteed.

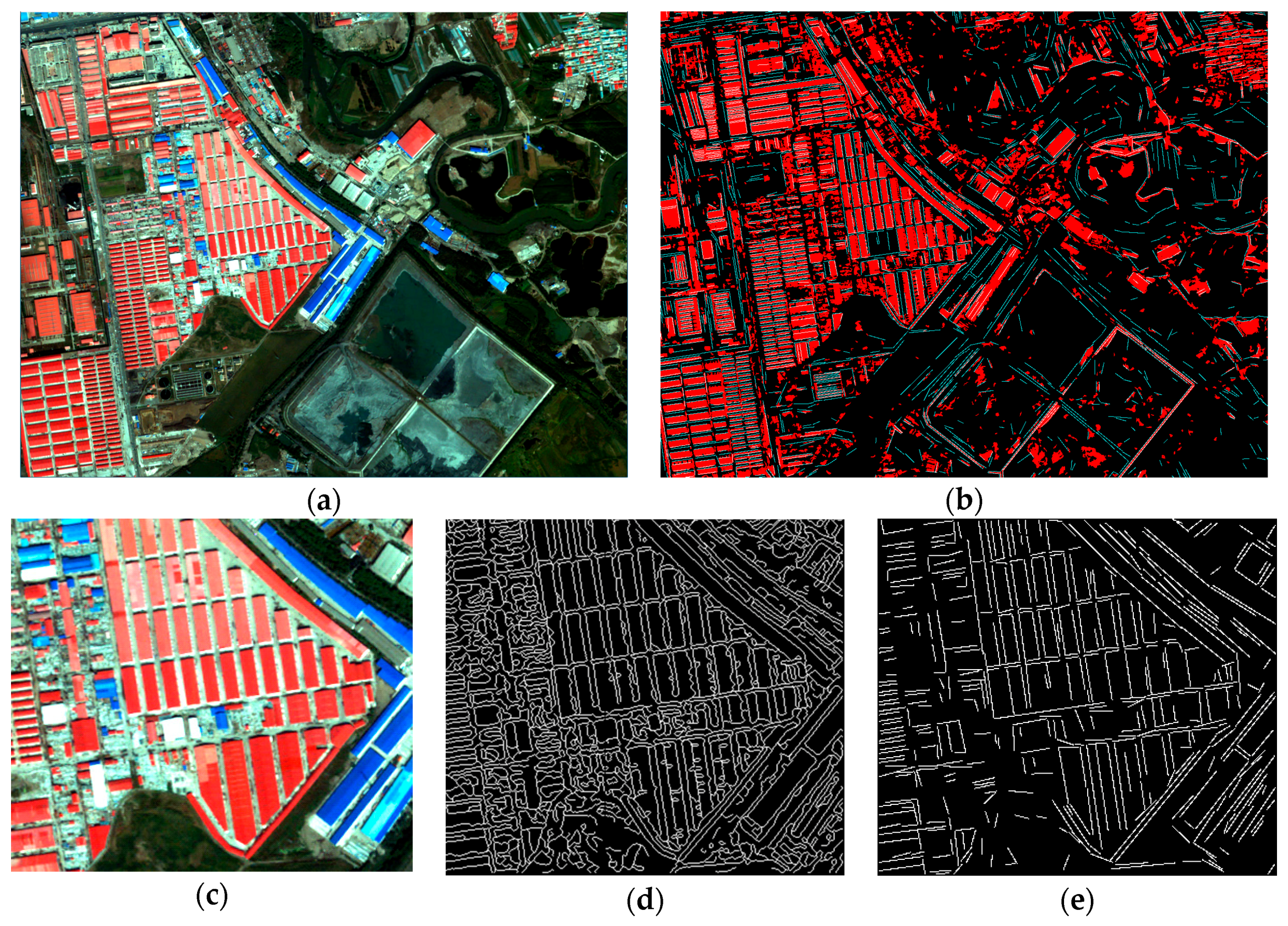

Figure 3b shows the MBI and line segment features of Figure 3a. The line segments of the built-up areas are very different to those of the bare land. Because the bare land spectrum changes slowly, the MBI features of bare land are generally far away from the line segments. However, due to the relatively high luminance contrast between buildings and shadow, straight lines always appear on the sides of buildings in the immediate vicinity of shadow, as shown in Figure 3b. Such a line is called a dominant line (DL). In general, the length of the DL is similar to the length of the real building, and its inclination angle is also similar to the orientation of the real building.

In this study, the line segments are extracted by a fast, parameterless line segment detector named EDLines [44], based on the grayscale image. The grayscale image is obtained by forming a weighted sum of the R, G, and B bands of the original image (0.2989 × R + 0.5870 × G + 0.1140 × B [45]). The process consists of three steps: edge segment detection, line segment extraction, and line validation.

Step 1: Edge segments. Clean, contiguous chains of pixels are extracted by the edge drawing (ED) algorithm [46], based on the gradient magnitude and direction. The ED algorithm is very quick and accurate compared to the other existing edge detectors (e.g., Hough transform). The ED algorithm comprises four steps: denoising and smoothing of the images by a Gaussian filter, gradient magnitude and direction extraction for each pixel, detecting anchors which have the local maximum gradient values, and connecting anchors to obtain edge segments.

Step 2: Line segments. The least-squares line fitting method is used as the straightness criterion to extract line segments from the edge segments produced in the first step.

Step 3: Line validation. Line validation is adopted based on the Helmholtz principle to eliminate the false line segments.
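The straightness test in Step 2 can be illustrated with a simplified least-squares check. This is only a sketch of the idea, not the EDLines implementation: it fits a single line to a whole pixel chain, measures residuals along one axis rather than perpendicular to the line, and uses a hypothetical tolerance of 1 pixel. The function names are illustrative.

```python
def line_fit_error(points):
    """Fit a line to a non-empty list of (x, y) points by least squares; return the RMS residual.

    Mostly horizontal chains are fitted as y = a*x + b, mostly vertical ones
    as x = a*y + b, so that vertical segments do not need an infinite slope.
    """
    n = len(points)
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    if max(xs) - min(xs) < max(ys) - min(ys):
        xs, ys = ys, xs  # swap roles for steep chains
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx if sxx else 0.0
    intercept = mean_y - slope * mean_x
    squared = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))
    return (squared / n) ** 0.5

def is_straight(points, tol=1.0):
    """Accept a pixel chain as a line segment if its RMS fit error is within tol pixels.

    tol=1.0 is an assumed value for illustration.
    """
    return line_fit_error(points) <= tol
```

A chain that turns a corner, such as the outline of a building seen as one edge segment, fails this test and would have to be split into shorter pieces before each piece is accepted as a line segment.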

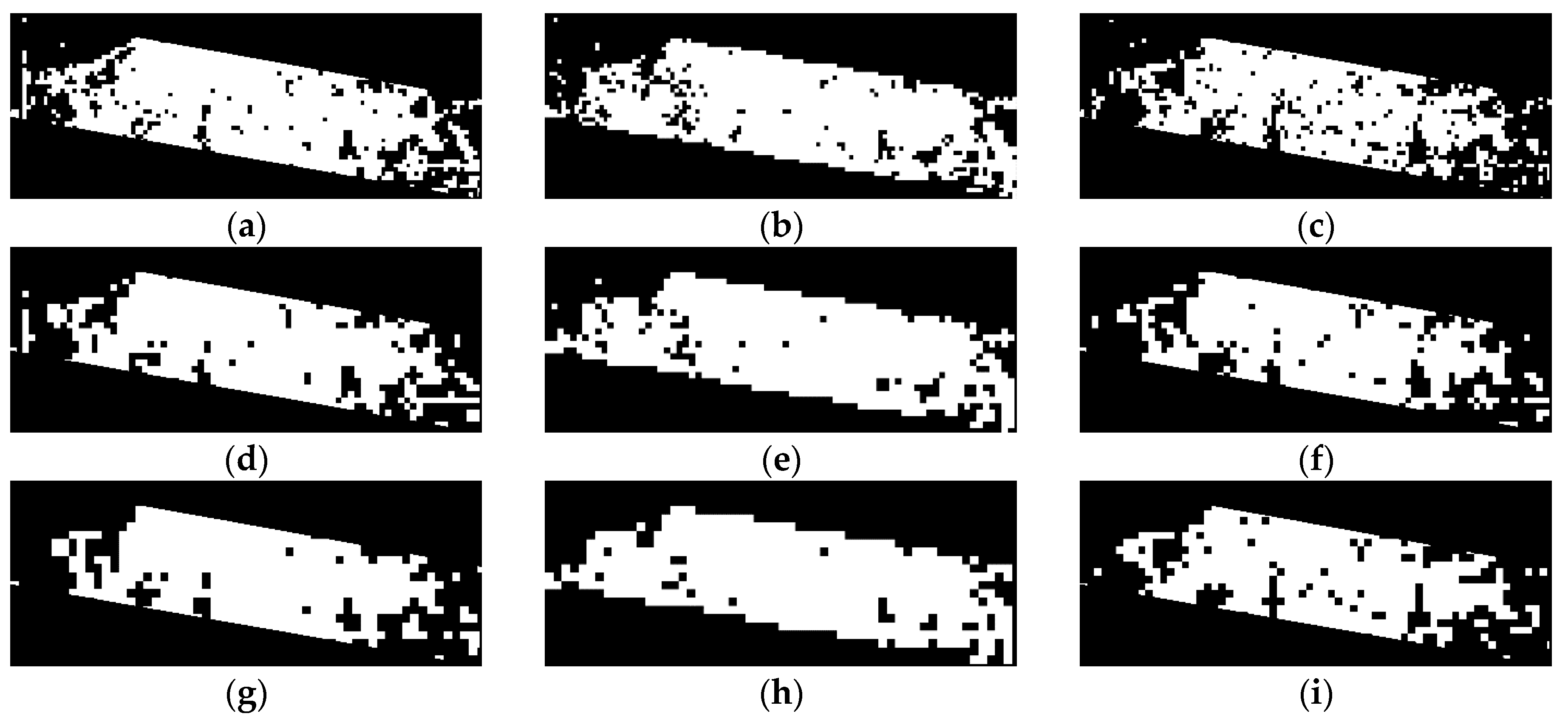

Figure 3c–e shows an example of EDLines extraction. Figure 3d shows the ED results of Figure 3c, where the boundaries of the image are extracted. The boundaries of the interior of building objects are also extracted, and the boundaries are not smooth and neat. Figure 3e shows the final result of the EDLines algorithm, where it can be seen that the line segments are much smoother than the edge segments (Figure 3d), and many of the trivial short boundaries are removed.

3.4. Chessboard Segmentation

Chessboard segmentation is the simplest segmentation method as it involves splitting the image into non-overlapping square objects with a size predefined by the user. Chessboard segmentation does not consider the underlying data (e.g., the MBI, the NDVI, and the line segment feature maps in this study) and, therefore, the features within the data are not delineated. This method is very suitable for segmenting complex urban areas.
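As a minimal sketch, chessboard segmentation amounts to enumerating the bounds of square tiles. The function name is illustrative, and clipping the tiles at the right and bottom image borders is an assumption, since the handling of partial tiles is not described.

```python
def chessboard(height, width, size):
    """Yield (row0, row1, col0, col1) bounds of non-overlapping square units.

    Units on the right and bottom borders are clipped to the image extent
    (an assumption; partial units could also be discarded).
    """
    for r in range(0, height, size):
        for c in range(0, width, size):
            yield r, min(r + size, height), c, min(c + size, width)

def crop(img, bounds):
    """Extract one spatial unit from a 2-D feature map given its bounds."""
    r0, r1, c0, c1 = bounds
    return [row[c0:c1] for row in img[r0:r1]]
```

The same bounds can be applied to the MBI, NDVI, and line segment maps, so that the features of each spatial unit are computed over identical pixels.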

3.5. High-Level Feature Extraction

High-level features reflecting the intrinsic characteristics of the urban areas are extracted from the primitive features for each spatial unit obtained by the chessboard segmentation.

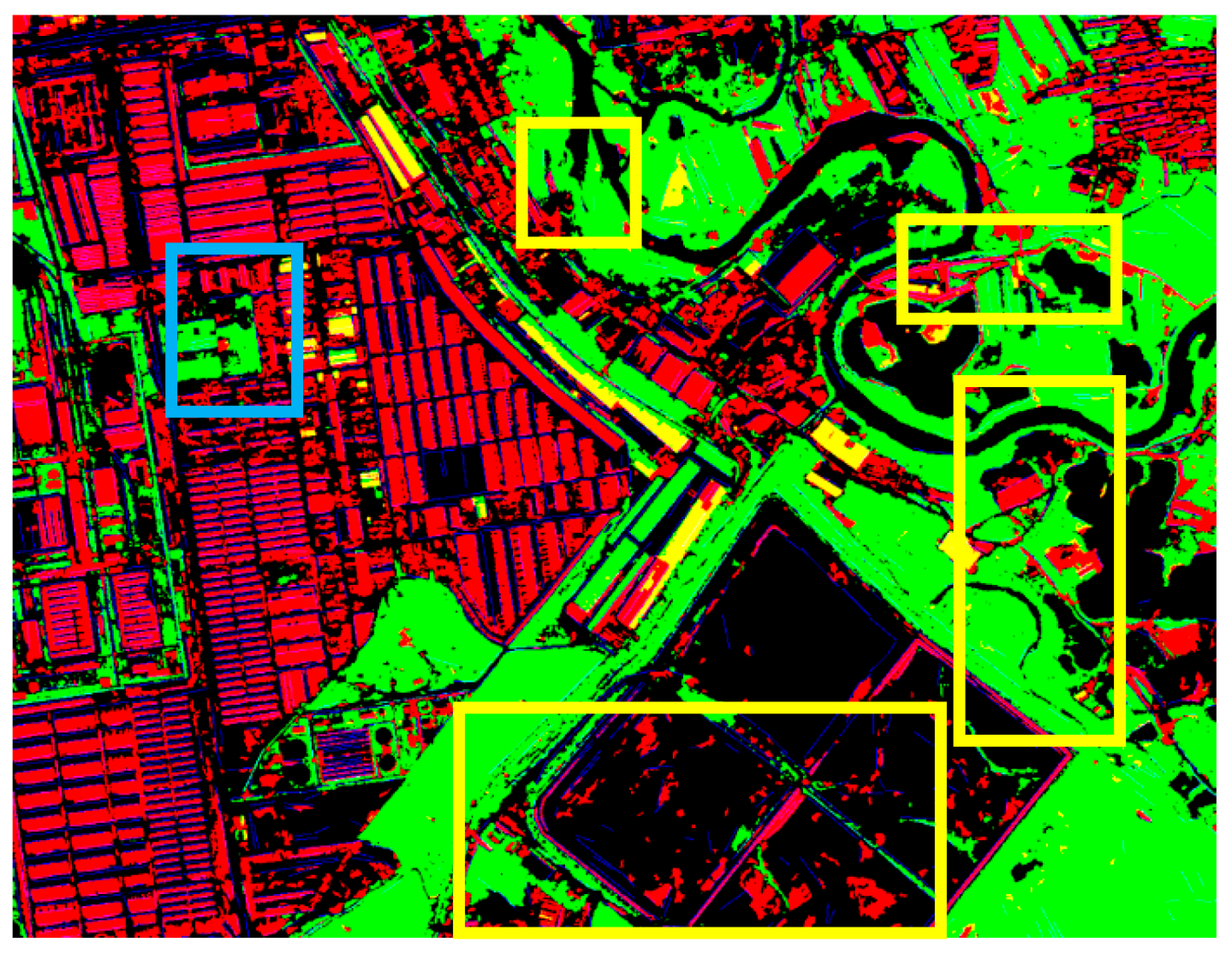

Figure 4 is the combination of the MBI, the NDVI, and the line segment feature maps of Figure 3a. The line segments of the built-up areas are very different to those of the bare land. Clearly, the MBI feature is the main factor to distinguish urban and non-urban areas. However, some bare soil is detected by the MBI, and some blue buildings are detected by both the MBI and the NDVI.

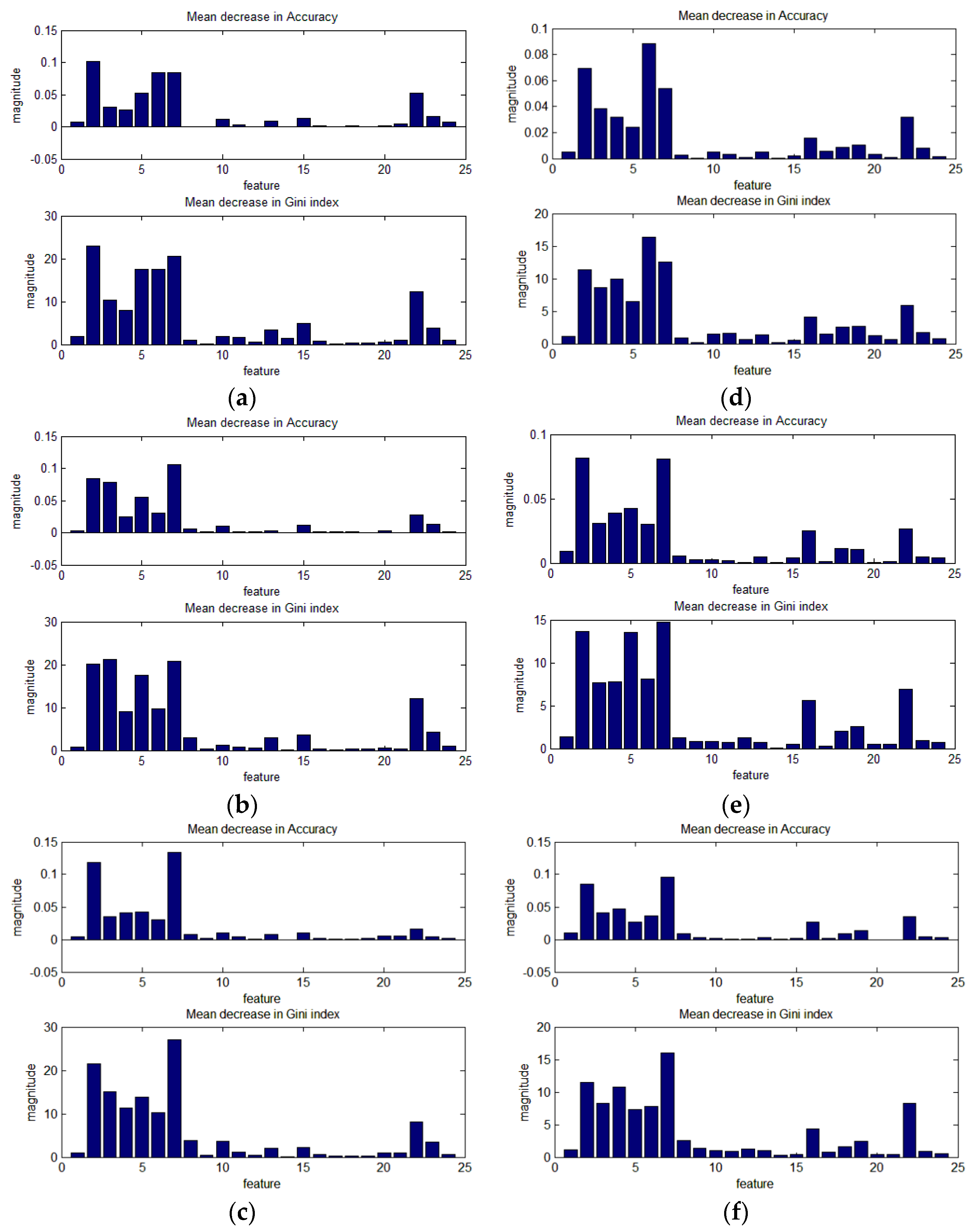

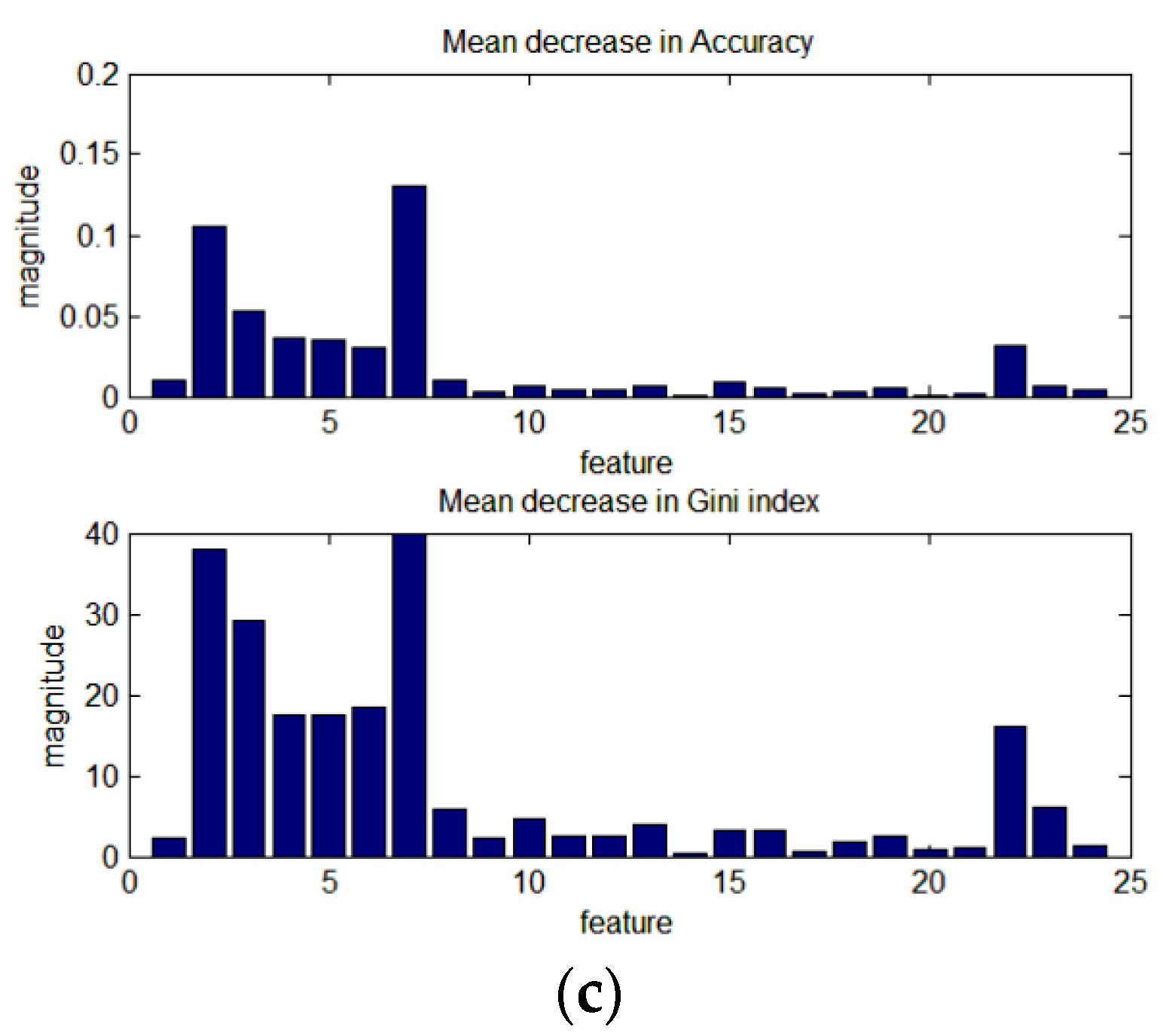

In each spatial unit, the high-level features of the three primitive feature maps of the MBI, the NDVI, and the line segments are extracted, respectively. In addition, the relationship between the MBI, the NDVI, and the line segments is also considered. In total, 24 high-level features are extracted from each spatial unit, as listed in Table 2.

For the MBI and the NDVI, the sum of the area percentage of the MBI and the NDVI is calculated. In general, water bodies, shadow, and some bare land (semi-arid and sub wetness land) appear black in Figure 4. Usually, in urban areas, buildings are distributed densely. Thus, in the MBI feature map, the area percentage is calculated both for the whole spatial unit and for each of its four quadrants. To distinguish the large and small areas of bare soil detected by the MBI, the number and area percentage of the MBI plots with different areas are calculated. A large-area MBI plot is defined as one larger than 500 pixels in this study, a threshold determined by the resolution of the images and the average areas of the buildings. In the NDVI feature map, the total area percentage is calculated. In general, urban area vegetation distribution is fragmented, and non-urban-area vegetation usually occurs in large-area blocks. Thus, the number and area percentage of the NDVI plots (area >500 pixels) are calculated.
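The area-based features can be sketched as follows for a single spatial unit given as a binary mask. The function names are illustrative, and two choices are assumptions rather than details from the text: plots are taken to be 4-connected regions, and the percentage of large plots is expressed relative to the whole unit.

```python
from collections import deque

def area_percent(mask):
    """Percentage (0-100) of True pixels in a 2-D boolean mask."""
    cells = [v for row in mask for v in row]
    return 100.0 * sum(cells) / len(cells) if cells else 0.0

def quadrant_percents(mask):
    """Area percentage in the top-left, top-right, bottom-left, bottom-right quadrants."""
    h, w = len(mask), len(mask[0])
    hh, hw = h // 2, w // 2
    quads = [(0, hh, 0, hw), (0, hh, hw, w), (hh, h, 0, hw), (hh, h, hw, w)]
    return [area_percent([row[c0:c1] for row in mask[r0:r1]])
            for r0, r1, c0, c1 in quads]

def plot_areas(mask):
    """Pixel counts of the connected True regions (4-connectivity is an assumption)."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    areas = []
    for i in range(h):
        for j in range(w):
            if mask[i][j] and not seen[i][j]:
                seen[i][j] = True
                queue, area = deque([(i, j)]), 0
                while queue:
                    y, x = queue.popleft()
                    area += 1
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                        if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                areas.append(area)
    return areas

def large_plot_features(mask, min_area=500):
    """Number of plots larger than min_area pixels and their share of the unit area."""
    big = [a for a in plot_areas(mask) if a > min_area]
    total = len(mask) * len(mask[0])
    return len(big), 100.0 * sum(big) / total
```

Called with the MBI mask, `large_plot_features` separates extensive bright bare-soil patches from building-sized plots; called with the NDVI mask, it separates large vegetation blocks from fragmented urban greenery.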

In the line segment map, the number of line segments and the mean and variance of all the line segment lengths are calculated. It can be seen in Figure 3b and Figure 4 that the directional distribution of the line segments is significantly different between the urban and non-urban areas. In this study, the direction of the line segments is defined as 0–180° with a step of 30°, namely, six directions. The histogram is sorted in descending order according to the number of line segments. The direction with the maximum number of line segments, namely, the first direction, is called the dominant direction. Three features are extracted from the direction histogram of the line segments, namely, the percentage of dominant direction line segments (DLR), the angle difference between the top two dominant directions (difv), and the percentage of the top two dominant direction line segments.
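A sketch of the three direction features is given below, assuming each line segment is stored as its two endpoints `(x1, y1, x2, y2)`. The function name is illustrative. How difv treats the wrap-around between 0° and 180° is not specified in the text, so the circular difference used here is an assumption.

```python
import math

def direction_features(segments, step=30):
    """Return (DLR, difv, top-two percentage) from a 0-180 degree direction histogram."""
    nbins = 180 // step
    hist = [0] * nbins
    for x1, y1, x2, y2 in segments:
        # Orientation is undirected, so angles are folded into [0, 180).
        angle = math.degrees(math.atan2(y2 - y1, x2 - x1)) % 180.0
        hist[int(angle // step) % nbins] += 1
    n = len(segments)
    if n == 0:
        return 0.0, 0.0, 0.0
    # Sort the bins in descending order of segment count.
    order = sorted(range(nbins), key=lambda k: hist[k], reverse=True)
    first, second = order[0], order[1]
    dlr = 100.0 * hist[first] / n
    top2 = 100.0 * (hist[first] + hist[second]) / n
    diff = abs(first - second) * step
    difv = min(diff, 180 - diff)  # assumption: circular angle difference
    return dlr, difv, top2
```

In a regular street grid most segments fall into two directions roughly 90° apart, which would give a high top-two percentage and a difv near 90, whereas segments on bare hills tend to spread over many bins.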

Many bright non-building areas are detected as buildings by the MBI, especially the large areas of bare land and bare mountain, which seriously hinders the urban area extraction accuracy. Through observation of the experimental data, it can be seen that the spatial relationships between the MBI, the NDVI, and the line segments are different in different spatial contexts. In general, the MBI features of buildings overlap or are in close proximity to the line segments, due to the sudden significant spectral change between buildings and their surroundings (especially building shadow). However, the MBI features of bare land are generally far away from the line segments. This is because the bare land spectrum changes slowly (as shown in Figure 3b). Thus, the spatial relationships between the MBI, the NDVI, and the line segments are extracted in the neighborhood of the line segments, namely, the percentage of the MBI, the NDVI, and others (water, shadow, and so on) in the line segment buffer with a 1-pixel width.
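The buffer statistics can be sketched as follows, assuming the line segments have already been rasterized into a binary mask of the same size as the MBI and NDVI masks. Several details are assumptions made for illustration: the buffer is a square neighborhood that includes the line pixels themselves, and a pixel flagged by both the MBI and the NDVI is counted as MBI. The function name is illustrative.

```python
def buffer_percents(line_mask, mbi_mask, ndvi_mask, width=1):
    """Percentages of MBI, NDVI, and other pixels inside the line segment buffer."""
    h, w = len(line_mask), len(line_mask[0])
    buffer_pixels = set()
    for i in range(h):
        for j in range(w):
            if line_mask[i][j]:
                # Grow each line pixel by `width` pixels in every direction.
                for di in range(-width, width + 1):
                    for dj in range(-width, width + 1):
                        y, x = i + di, j + dj
                        if 0 <= y < h and 0 <= x < w:
                            buffer_pixels.add((y, x))
    n = len(buffer_pixels)
    if n == 0:
        return 0.0, 0.0, 0.0
    n_mbi = sum(1 for y, x in buffer_pixels if mbi_mask[y][x])
    # Assumption: pixels detected by both indices are counted once, as MBI.
    n_ndvi = sum(1 for y, x in buffer_pixels
                 if ndvi_mask[y][x] and not mbi_mask[y][x])
    return (100.0 * n_mbi / n,
            100.0 * n_ndvi / n,
            100.0 * (n - n_mbi - n_ndvi) / n)
```

For a unit dominated by buildings, a large share of the buffer is expected to be MBI; for bare land wrongly detected by the MBI, the buffer around the few line segments would contain little MBI.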

The values of all the 24 features are greater than or equal to zero. The scales of all the features’ values are similar, i.e., 0–100, except for features 16, 17, 20, and 21.

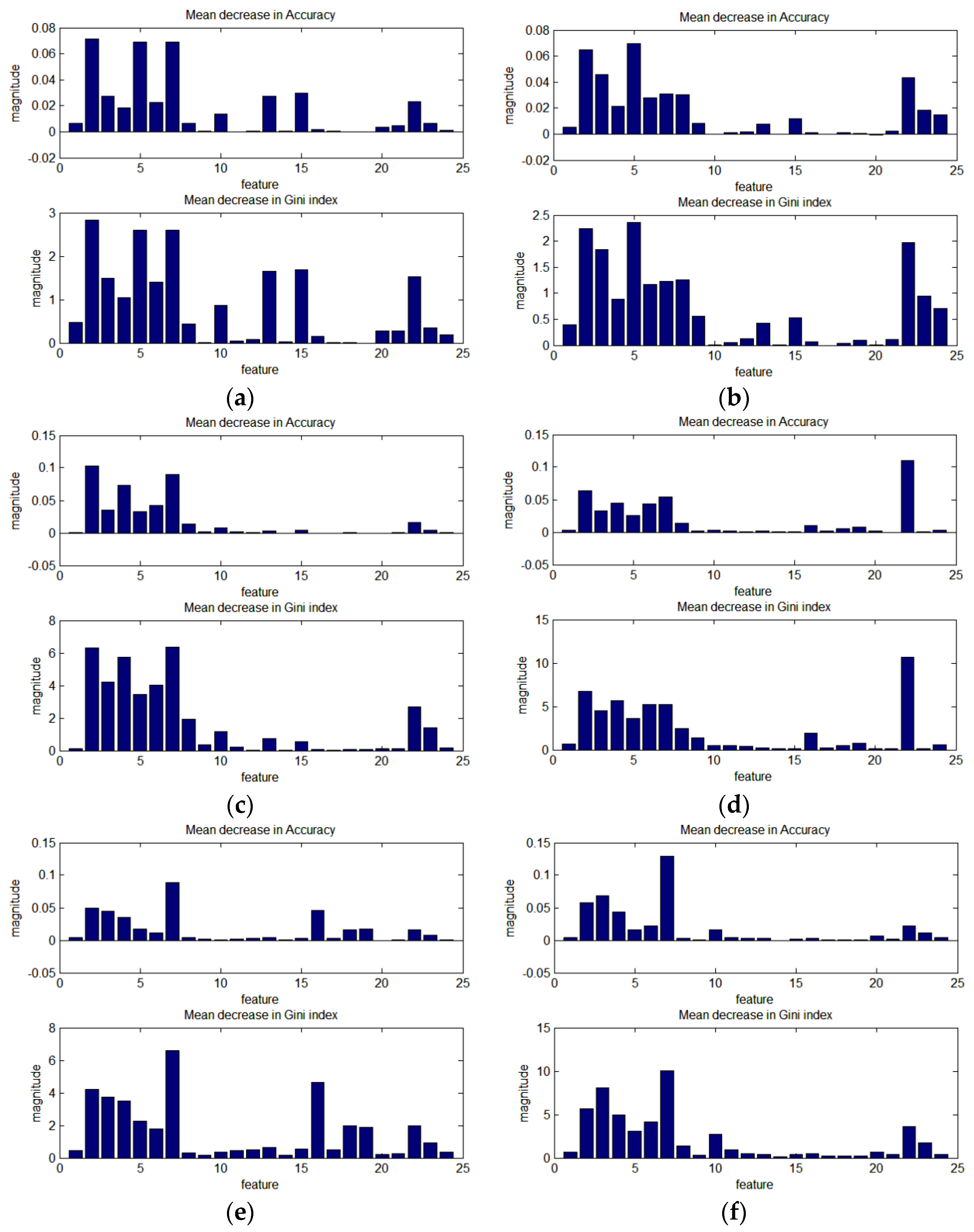

3.6. Classification

Recognizing urban areas from the extracted high-dimensional features is a binary classification problem. A nonparametric classifier is required, i.e., a classifier that does not assume any particular statistical distribution for the input. The classifier also has to be able to handle high-dimensional feature spaces, since the high-level features are composed of 24 bands. Although the high-dimensional features are manually designed for the urban area extraction, they may contain redundancies in the feature space. It is also important to understand the importance of each feature. Therefore, the random forest (RF) classifier is used in this study for the urban area mapping, and to analyze the importance of each feature. The RF classifier can manage a high-dimensional feature space, and is both quick and robust [16].

The RF classifier constructs a multitude of decision trees, and is an approach that has been shown to perform very well [15,16]. A large number of trees (classifiers) are generated, and majority voting is finally used to assign an unknown sample to a class. Each decision tree of RF chooses a subset of features randomly, and creates a classifier with a bootstrap sample from the original training samples. After the training, RF can assign a quality measure to each feature. In this study, the Gini index [12] is used as the feature selection measure in the decision tree design. The Gini index measures the impurity of a feature with respect to the classes:

$$\mathrm{Gini}(T) = \sum_{i} \sum_{j \neq i} \frac{f(C_i, T)}{|T|} \cdot \frac{f(C_j, T)}{|T|} \tag{4}$$

where $T$ represents the training set, and $C_i$ represents a class. $f(C_i, T)/|T|$ is the probability of the selected pixel belonging to class $C_i$.
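As a small illustration, the impurity measure can be computed from class labels as the sum, over distinct class pairs, of the product of their class probabilities. The function names are illustrative, and the size-weighted score for a candidate split follows the usual decision-tree convention, which is an assumption here because the text does not spell it out.

```python
from collections import Counter

def gini_index(labels):
    """Sum over i != j of p_i * p_j, which equals 1 - sum(p_i ** 2)."""
    n = len(labels)
    if n == 0:
        return 0.0
    probs = [count / n for count in Counter(labels).values()]
    return sum(p_i * p_j
               for a, p_i in enumerate(probs)
               for b, p_j in enumerate(probs)
               if a != b)

def split_gini(left, right):
    """Size-weighted Gini index of the two child nodes of a candidate split.

    Assumption: standard CART-style weighting by child size.
    """
    n = len(left) + len(right)
    return (len(left) * gini_index(left) + len(right) * gini_index(right)) / n
```

A split that sends all urban units to one child and all non-urban units to the other scores 0, the lowest possible value, while a split that leaves both children evenly mixed scores 0.5 in the two-class case.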

At each node of the decision tree, the feature with the lowest Gini index value is selected from the randomly selected features as the best split, and is used to split the corresponding node. About one-third of the observed training samples (the “out-of-bag” samples) are not used when growing a tree, due to each tree of RF being grown from a bootstrapped sample. The variable importance is represented by the decrease in accuracy using the out-of-bag observations when permuting the values of the corresponding variables.
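The "about one-third" figure follows from a standard bootstrap argument, sketched here under the assumption that each tree draws $n$ samples with replacement from the $n$ training samples (the bootstrap size is not stated in the text). The probability that a given training sample is never drawn for a tree is

$$P(\text{out-of-bag}) = \left(1 - \frac{1}{n}\right)^{n} \xrightarrow{\; n \to \infty \;} e^{-1} \approx 0.368,$$

so roughly 37% of the training samples are left out of any single tree and can serve as its out-of-bag test set.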

3.7. Morphological Spatial Pattern Analysis (MSPA)

Urban areas are created through urbanization and are categorized by urban morphology as cities, towns, conurbations, or suburbs. In general, conurbations and suburbs are interconnected with cities or towns, and the remote, sparse villages are not part of the urban areas. The RF classification map cannot clearly distinguish between villages and urban areas based on the local spatial and spectral information. Furthermore, some bare land with rich texture information can be misclassified as urban areas. MSPA [3,47,48] is therefore introduced to refine the urban map, and to further analyze the urban morphology.

In the binary or thresholded map (foreground and background), MSPA is used to analyze the shape, form, geometry, and connectivity of the map components. This consists of a series of sequential mathematical morphological operators such as erosion, geodesic dilation, reconstruction by dilation, and anchored skeletonisation. MSPA borrows the notion of path connectivity to determine the eight-connected or four-connected regions of the foreground. Each foreground pixel is classified as one of a mutually exclusive set of structural classes, i.e., Core, Islet, Edge, Loop, Perforation, Bridge, or Branch. In different applications, the corresponding objects in the generic MSPA categories are different [3,49]. There are two key parameters in MSPA, i.e., the foreground connectivity (FGconn) and the size parameter (Ew). Parameter Ew defines the width or thickness of the non-core classes in pixels. In this study, FGconn is set to four-connected, and Ew is set to 1 pixel.
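Two of the MSPA classes, Core and Islet, can be illustrated with a heavily simplified sketch. This is not the MSPA algorithm: Core is approximated by a square erosion of half-width Ew (an assumption), pixels outside the image are treated as background (an assumption), and the remaining classes are not derived at all. The function names are illustrative.

```python
from collections import deque

def core_mask(fg, ew=1):
    """Foreground pixels whose whole (2*ew+1) x (2*ew+1) neighborhood is foreground."""
    h, w = len(fg), len(fg[0])

    def all_foreground(i, j):
        for y in range(i - ew, i + ew + 1):
            for x in range(j - ew, j + ew + 1):
                # Pixels outside the image count as background (assumption).
                if not (0 <= y < h and 0 <= x < w and fg[y][x]):
                    return False
        return True

    return [[bool(fg[i][j]) and all_foreground(i, j) for j in range(w)]
            for i in range(h)]

def islet_mask(fg, ew=1):
    """Mark 4-connected foreground components that contain no Core pixel."""
    h, w = len(fg), len(fg[0])
    core = core_mask(fg, ew)
    seen = [[False] * w for _ in range(h)]
    out = [[False] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            if fg[i][j] and not seen[i][j]:
                seen[i][j] = True
                queue, component = deque([(i, j)]), []
                while queue:
                    y, x = queue.popleft()
                    component.append((y, x))
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                        if 0 <= ny < h and 0 <= nx < w and fg[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                if not any(core[y][x] for y, x in component):
                    for y, x in component:
                        out[y][x] = True
    return out
```

Removing the pixels flagged by `islet_mask` from the urban map corresponds to discarding small, isolated foreground patches that are too small to contain any Core.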

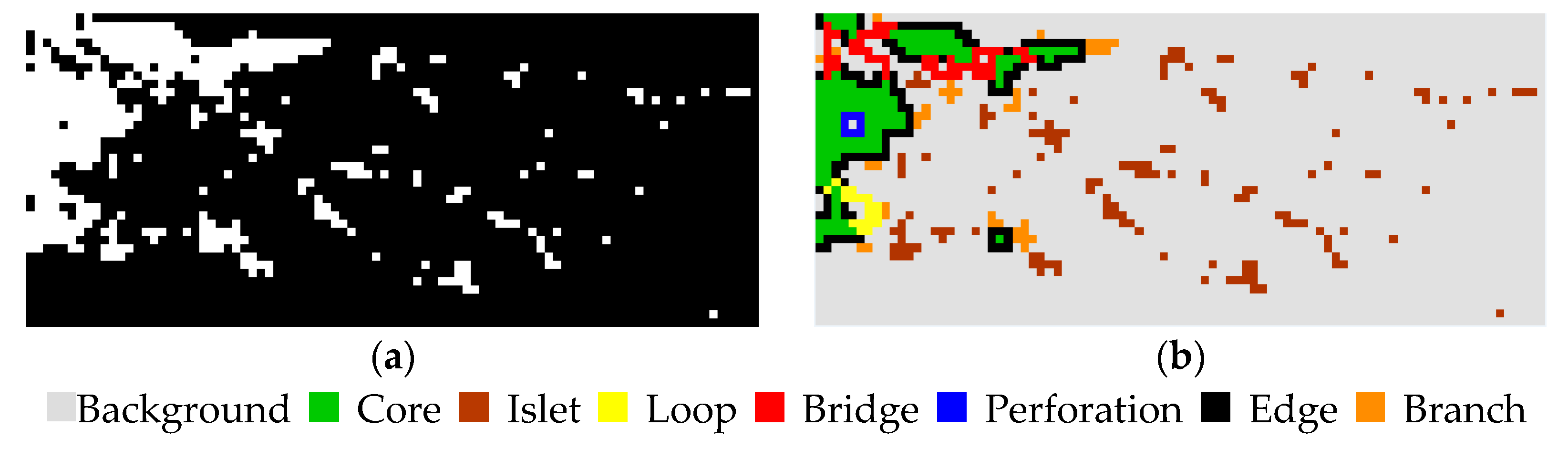

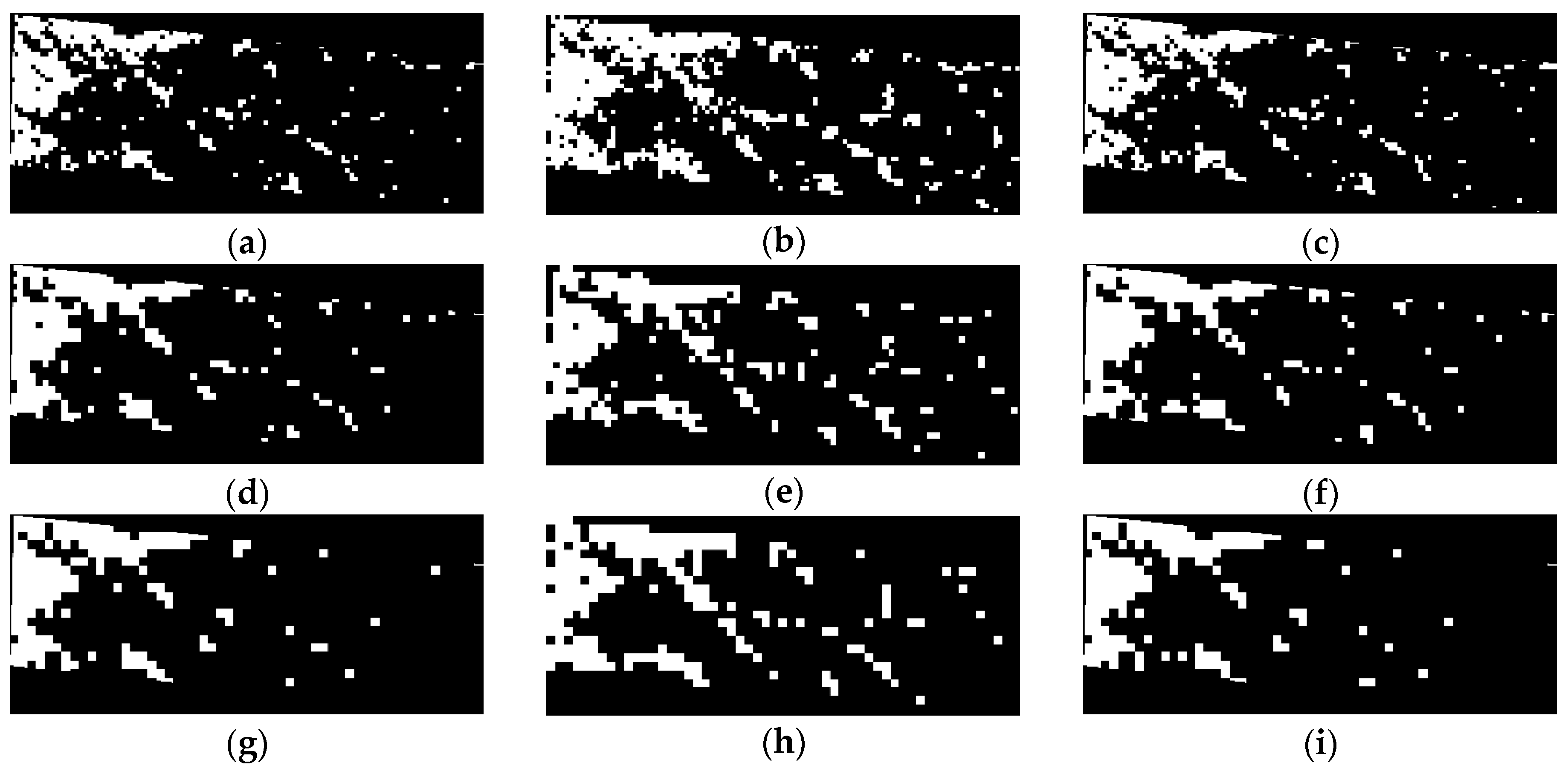

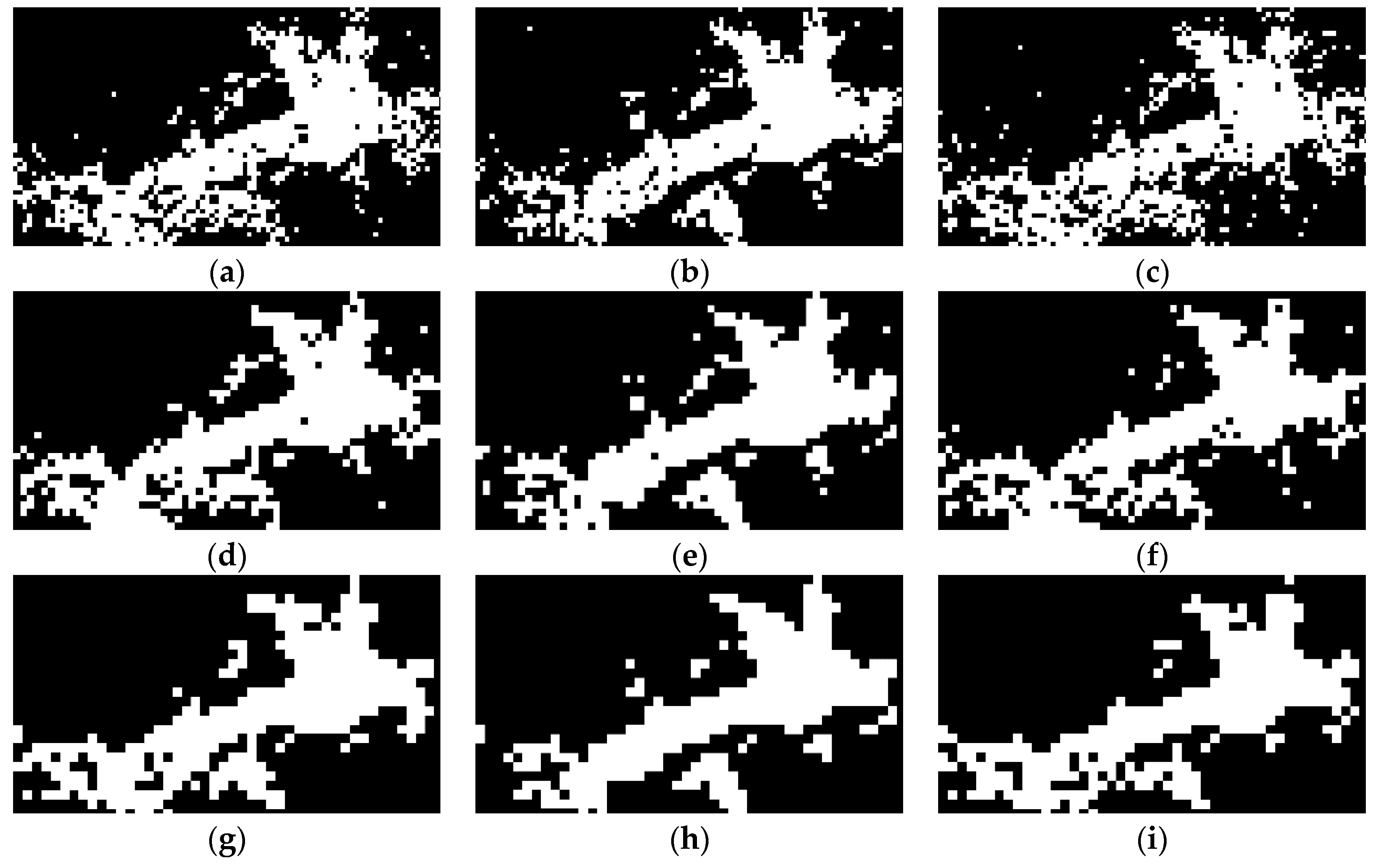

As shown in Figure 5, the urban map of the test image R2 (Figure 5a) is input into MSPA. All the pixels of urban areas are foreground, and the others are background. Figure 5b shows the MSPA result. The output classes of MSPA are as follows: Core, the interior of the urban areas, city or town, excluding the urban perimeter edge; Islet, disjoint cities or towns that are too small to contain Core, which are mainly sparse villages; Loop, connected at more than one end to the same Core area, which occurs due to a river or wide road splitting the city or town; Bridge, connected at more than one end to different Core areas; and Branch, connected at one end to Edge, Bridge, or Loop, which shows the trail of the conurbations or suburbs that link the cities or towns. In this paper, for urban area extraction and urban morphology analysis, some of the Islets need to be abandoned. These Islets are mainly small villages far away from the city or built-up areas.

5. Discussion

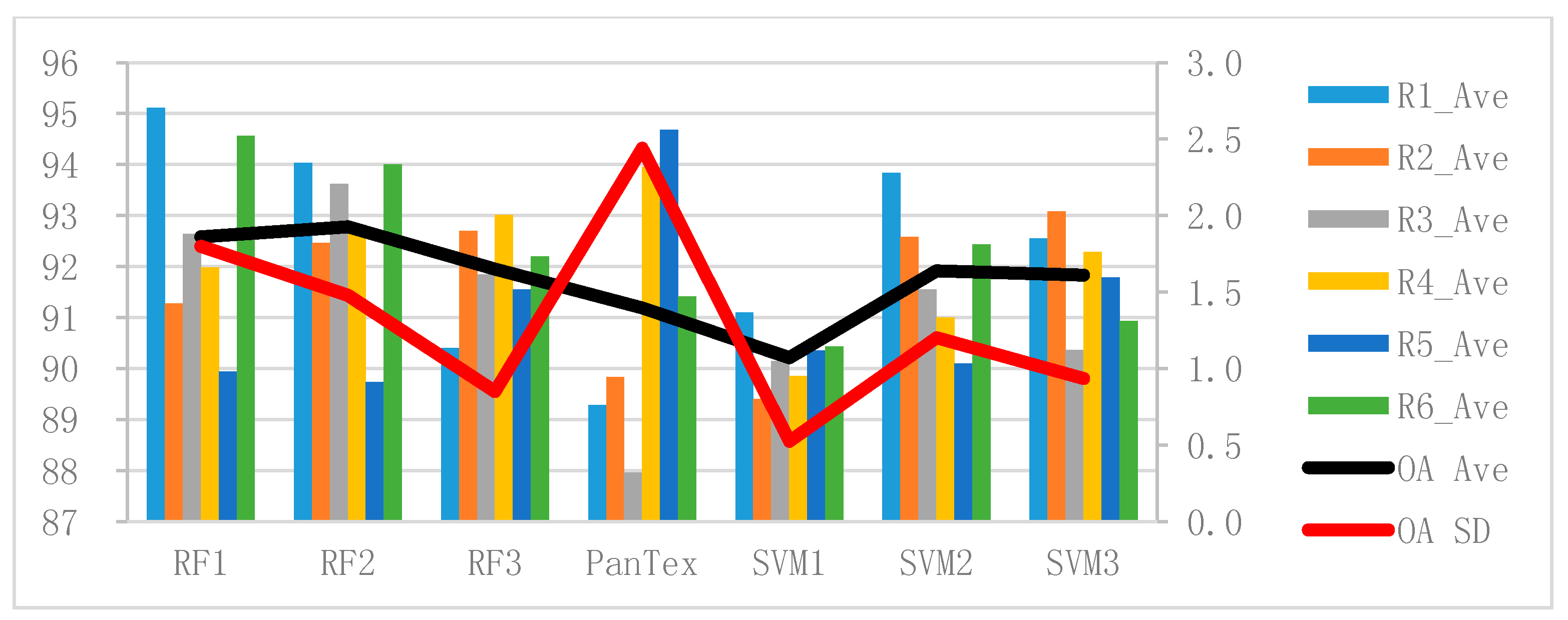

In this study, experiments were conducted with six very challenging images acquired by different satellites and in different seasons. According to the results of the experiments, the following conclusions can be drawn:

(1) The low-level primitive features used are the MBI, the NDVI, and line segments. The MBI and the NDVI are efficient proxies of regional features of buildings and vegetation, and the line segments are very different between urban and non-urban areas.

(2) The fast chessboard segmentation method is used to split the image into non-overlapping square objects, and does not consider the underlying data. This approach is very suitable for segmenting complex urban areas.

(3) The high-level feature extraction is conducted in the segmented square objects. The results of the experiments proved that the advanced features are well described and can reflect the characteristics of urban and non-urban areas. The feature analysis experiments with all six test images showed that the spatial relationship between the MBI, the NDVI, and the neighborhood of the line segments has a great effect on the improvement of the classification accuracy.

(4) The proposed urban area extraction framework was compared with the state-of-the-art PanTex method. The proposed framework was also tested using SVM methods. The experiments proved that the proposed urban area extraction framework is much more robust than PanTex. In general, both the RF- and SVM-based methods can obtain much higher OAs than PanTex, especially when the image is complex and challenging. However, PanTex can obtain better results when the image is simple and the scale is smaller. Overall, the RF-based method performs better than the SVM-based method. Furthermore, the optimal value of the RF parameter is much easier to find than those of SVM. In addition, the training schemes proved that when the training samples are sufficient and accurate, RF and SVM can reach their full potential. This study also proved that the number and abstraction level of the features play a very important role for object recognition, which will be further studied in our follow-up research.

(5) The urban morphology is well recognized, and is consistent with the visual interpretation. In this study, the MSPA was proven to be a very effective tool for the analysis of urban morphology at a macroscopic spatial extent.