Image Fusion-Based Land Cover Change Detection Using Multi-Temporal High-Resolution Satellite Images

Abstract

1. Introduction

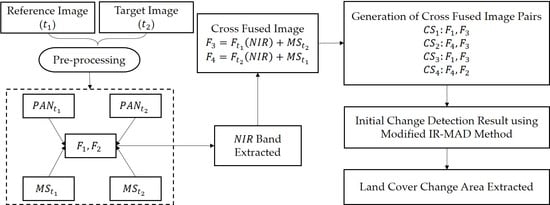

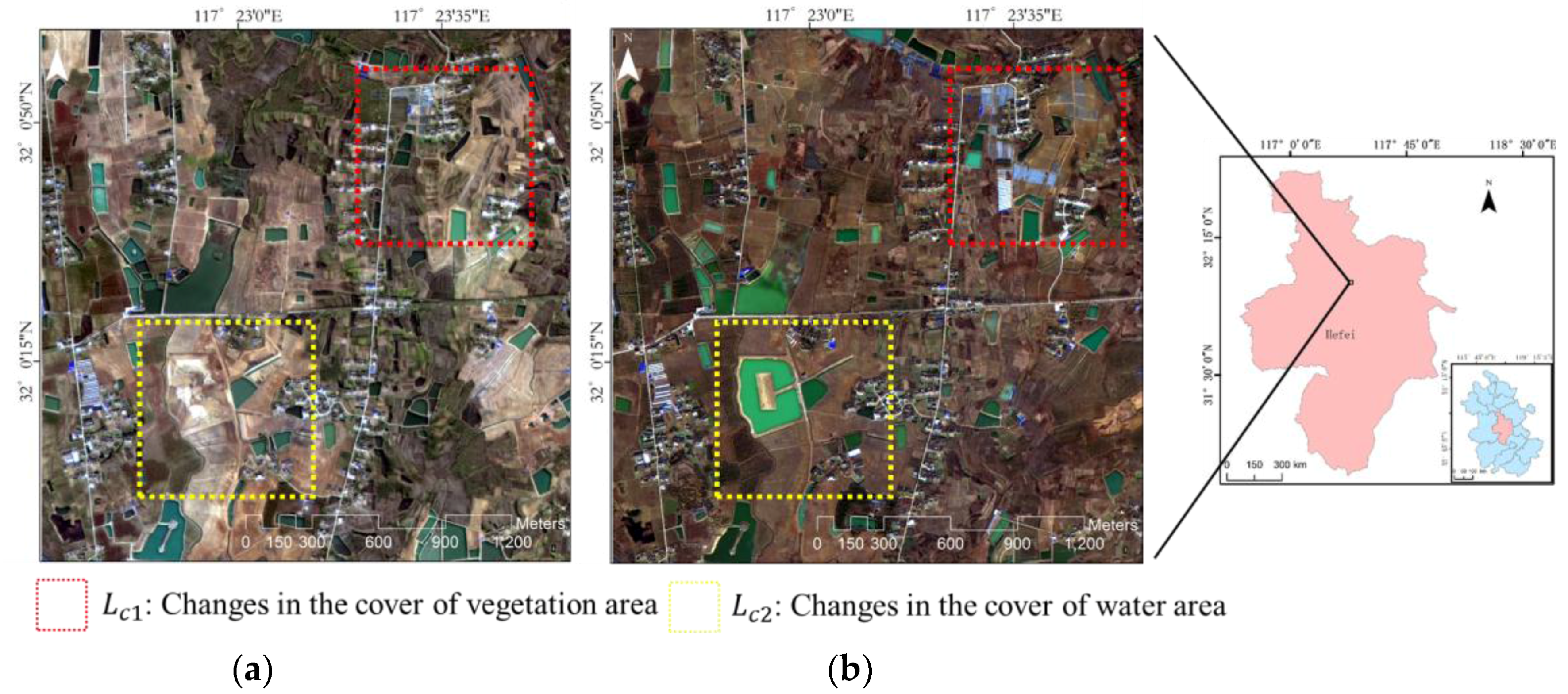

2. Cross Fusion-Based Change Detection Method

2.1. Gram–Schmidt Adaptive (GSA) Image Fusion
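
A minimal NumPy sketch of Gram–Schmidt adaptive (GSA) component substitution follows. It makes three assumptions:

- The MS image has already been resampled to the PAN grid.
- The image size is a multiple of the resolution ratio.
- The helper name `gsa_fuse` and the block-average low-pass filter are illustrative choices, not the exact procedure used in this paper.

The synthetic intensity I is a least-squares combination of the MS bands fitted to a low-pass PAN image. Each band then receives the PAN detail scaled by its Gram–Schmidt gain, cov(MS_k, I)/var(I).

```python
import numpy as np


def gsa_fuse(ms_up: np.ndarray, pan: np.ndarray, ratio: int = 4) -> np.ndarray:
    """Sketch of GSA pansharpening (hypothetical helper).

    ms_up: (bands, H, W) multispectral image already resampled to the PAN grid.
    pan:   (H, W) panchromatic image; H and W must be multiples of `ratio`.
    """
    b, h, w = ms_up.shape
    # Crude low-pass PAN (assumption): block-average to MS scale, then
    # nearest-neighbour upsampling back to the PAN grid.
    pan_lp = pan.reshape(h // ratio, ratio, w // ratio, ratio).mean(axis=(1, 3))
    pan_lp = np.repeat(np.repeat(pan_lp, ratio, axis=0), ratio, axis=1)

    # Adaptive intensity: least-squares band weights (plus bias) so that I ~ low-pass PAN.
    design = np.column_stack([ms_up.reshape(b, -1).T, np.ones(h * w)])
    coef, *_ = np.linalg.lstsq(design, pan_lp.ravel(), rcond=None)
    intensity = (design @ coef).reshape(h, w)

    # Match the PAN mean and standard deviation to the synthetic intensity.
    pan_m = (pan - pan.mean()) * intensity.std() / pan.std() + intensity.mean()
    detail = pan_m - intensity

    fused = np.empty(ms_up.shape, dtype=float)
    var_i = intensity.var(ddof=1)
    for k in range(b):
        # Gram-Schmidt injection gain for band k: cov(MS_k, I) / var(I).
        gain = np.cov(ms_up[k].ravel(), intensity.ravel())[0, 1] / var_i
        fused[k] = ms_up[k] + gain * detail
    return fused
```

Published GSA variants estimate the weights at the reduced (MS) scale, usually with a more careful low-pass filter. The block average above only stands in for that step.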

2.2. Cross-Fused Image Generation
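
Cross-fusion, as in cross-sharpening approaches, pairs the MS image of one date with the PAN image of the other date. Unchanged areas then fuse consistently, while changed areas show spectral distortion. The sketch below only builds the four MS/PAN pairings. The following names are hypothetical:

- `cross_fuse`
- the keys `F11` to `F21`
- the toy `additive_fuse`

Any pansharpening function (for instance a GSA implementation) could be passed as `fuse`.

```python
from typing import Callable, Dict

import numpy as np

# A fusion function maps (ms_up, pan) -> fused image of shape (bands, H, W).
Fuse = Callable[[np.ndarray, np.ndarray], np.ndarray]


def cross_fuse(ms1_up: np.ndarray, pan1: np.ndarray,
               ms2_up: np.ndarray, pan2: np.ndarray,
               fuse: Fuse) -> Dict[str, np.ndarray]:
    """Fuse every MS/PAN pairing of two co-registered dates (hypothetical helper)."""
    return {
        "F11": fuse(ms1_up, pan1),  # ordinary fusion, date 1
        "F22": fuse(ms2_up, pan2),  # ordinary fusion, date 2
        "F12": fuse(ms1_up, pan2),  # cross-fused: date-1 spectra, date-2 PAN detail
        "F21": fuse(ms2_up, pan1),  # cross-fused: date-2 spectra, date-1 PAN detail
    }


def additive_fuse(ms_up: np.ndarray, pan: np.ndarray) -> np.ndarray:
    """Toy stand-in for a real pansharpener: inject PAN minus the MS band mean."""
    return ms_up + (pan - ms_up.mean(axis=0))[None]


# Toy scene: identical MS on both dates, PAN changed only in a 2 x 2 corner.
rng = np.random.default_rng(0)
ms = rng.uniform(size=(3, 8, 8))
pan1 = rng.uniform(size=(8, 8))
pan2 = pan1.copy()
pan2[:2, :2] += 1.0
out = cross_fuse(ms, pan1, ms, pan2, additive_fuse)
diff = np.abs(out["F12"] - out["F11"]).sum(axis=0)  # nonzero only where PAN changed
```

In this toy case the cross-fused product differs from the ordinary one only where the PAN image changed. That is the property a change detector can exploit.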

2.3. Application of Modified IR-MAD Using Cross-Fused Images
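
For reference, the standard iteratively reweighted MAD (IR-MAD) transformation can be sketched with NumPy and SciPy. This is not the modified version proposed here; that modification is not reproduced. Using cross-fused images as the inputs `x` and `y` is an assumption, and `ir_mad` is a hypothetical name. Each iteration of the sketch does four things:

1. Solves a weighted canonical correlation analysis.
2. Forms the MAD variates as differences of canonical variates.
3. Sums their standardized squares into a chi-square statistic `z`, with as many degrees of freedom as bands.
4. Reweights each pixel by its no-change probability.

```python
import numpy as np
from scipy.linalg import eigh
from scipy.stats import chi2


def ir_mad(x: np.ndarray, y: np.ndarray, max_iter: int = 50, tol: float = 1e-6):
    """Iteratively reweighted MAD for two co-registered images.

    x, y: (n_pixels, n_bands) arrays with the same number of bands.
    Returns the MAD variates, the chi-square statistic per pixel and the
    canonical correlations (ascending, so the first MAD variate has the
    largest variance).
    """
    n, p = x.shape
    w = np.ones(n)
    rho_old = np.zeros(p)
    for _ in range(max_iter):
        # Weighted joint covariance of the stacked bands [x | y].
        s = np.cov(np.hstack([x, y]), rowvar=False, aweights=w)
        sxx, syy, sxy = s[:p, :p], s[p:, p:], s[:p, p:]
        # Generalized eigenproblem Sxy Syy^-1 Syx a = rho^2 Sxx a;
        # eigh returns ascending eigenvalues and a.T @ sxx @ a = I.
        c = sxy @ np.linalg.solve(syy, sxy.T)
        lam, a = eigh(0.5 * (c + c.T), sxx)
        rho = np.sqrt(np.clip(lam, 0.0, 1.0))
        # Matching y-side vectors, scaled so that b.T @ syy @ b = I.
        b = np.linalg.solve(syy, sxy.T @ a)
        b /= np.sqrt(np.sum(b * (syy @ b), axis=0))
        mx = np.average(x, axis=0, weights=w)
        my = np.average(y, axis=0, weights=w)
        mad = (x - mx) @ a - (y - my) @ b
        # Var(MAD_i) = 2 (1 - rho_i); standardized squares sum to ~chi2(p) under no change.
        var = np.maximum(2.0 * (1.0 - rho), 1e-12)
        z = np.sum(mad**2 / var, axis=1)
        # Reweight by the probability of no change for the next iteration.
        w = 1.0 - chi2.cdf(z, p)
        if np.max(np.abs(rho - rho_old)) < tol:
            break
        rho_old = rho
    return mad, z, rho
```

Pixels with a large `z` (low no-change probability) are change candidates. The reweighting progressively removes them from the covariance estimate, so the canonical transformation is fitted mainly to unchanged pixels.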

2.4. Extraction of the Final Land Cover Change Area
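
A common way to turn a continuous change-intensity map (such as the IR-MAD chi-square statistic) into a binary change map is Otsu's threshold (Otsu, 1979). Whether this paper applies it exactly this way is an assumption. In the minimal NumPy version below, the helper `otsu_threshold` is hypothetical and the 256-bin histogram is an arbitrary default.

```python
import numpy as np


def otsu_threshold(values: np.ndarray, bins: int = 256) -> float:
    """Return the histogram cut that maximizes Otsu's between-class variance."""
    hist, edges = np.histogram(np.ravel(values), bins=bins)
    centers = 0.5 * (edges[:-1] + edges[1:])
    p = hist / hist.sum()
    omega = np.cumsum(p)            # probability of the lower class for each cut
    mu = np.cumsum(p * centers)     # first moment of the lower class
    mu_total = mu[-1]
    denom = omega * (1.0 - omega)
    sigma_b = np.zeros_like(omega)
    valid = denom > 0
    # Between-class variance: (mu_T * omega - mu)^2 / (omega * (1 - omega)).
    sigma_b[valid] = (mu_total * omega[valid] - mu[valid]) ** 2 / denom[valid]
    k = int(np.argmax(sigma_b))
    return float(edges[k + 1])      # upper edge of the last lower-class bin
```

A binary change map then follows as `change_mask = z > otsu_threshold(z)`.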

2.5. Validation and Accuracy Evaluation
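
The accuracy tables report OA, KC, CR, FAR, CE and OE. The sketch below computes four of these, with two assumptions:

- The usual binary confusion-matrix definitions apply, with change as the positive class.
- KC is Cohen's kappa coefficient.

`change_accuracy` is a hypothetical helper. CR and FAR are omitted. Multiply OA, CE and OE by 100 to obtain percentages.

```python
import numpy as np


def change_accuracy(pred, ref) -> dict:
    """OA, kappa, CE and OE for a binary change map against reference data."""
    pred = np.asarray(pred, dtype=bool).ravel()
    ref = np.asarray(ref, dtype=bool).ravel()
    tp = np.sum(pred & ref)     # change detected as change
    fp = np.sum(pred & ~ref)    # no-change detected as change
    fn = np.sum(~pred & ref)    # change missed
    tn = np.sum(~pred & ~ref)   # no-change correctly left out
    n = tp + fp + fn + tn
    oa = (tp + tn) / n
    # Chance agreement from the row and column marginals of the 2 x 2 matrix.
    pe = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / n**2
    return {
        "OA": float(oa),
        "KC": float((oa - pe) / (1.0 - pe)),
        "CE": float(fp / (tp + fp)),   # commission error
        "OE": float(fn / (tp + fn)),   # omission error
    }
```

For example, with `pred = [1, 1, 0, 0, 1, 0, 0, 0]` and `ref = [1, 0, 0, 0, 1, 1, 0, 0]` there are 2 true positives, 1 false positive, 1 false negative and 4 true negatives. This gives OA = 0.75, CE = OE = 1/3 and kappa = 7/15.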

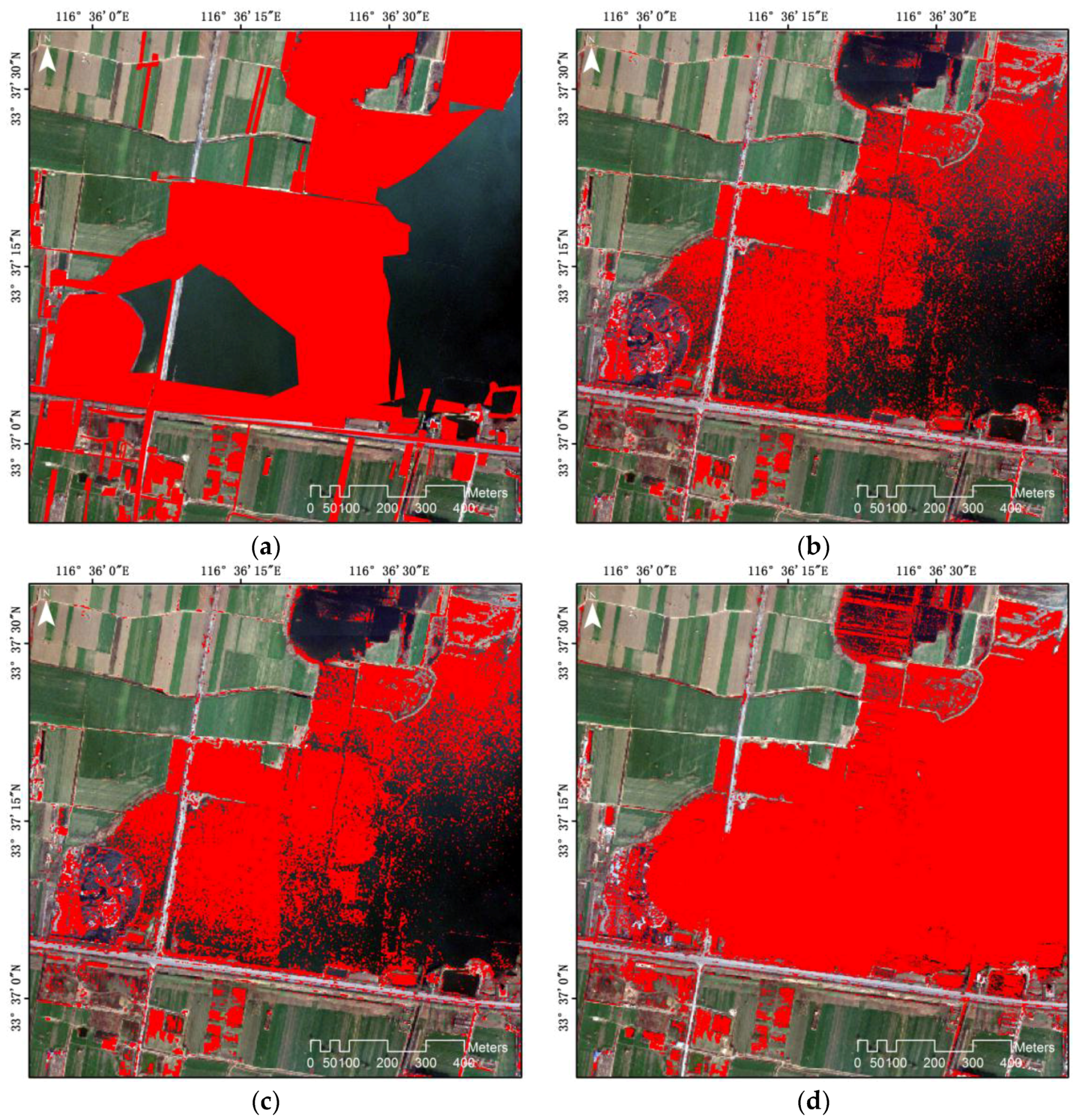

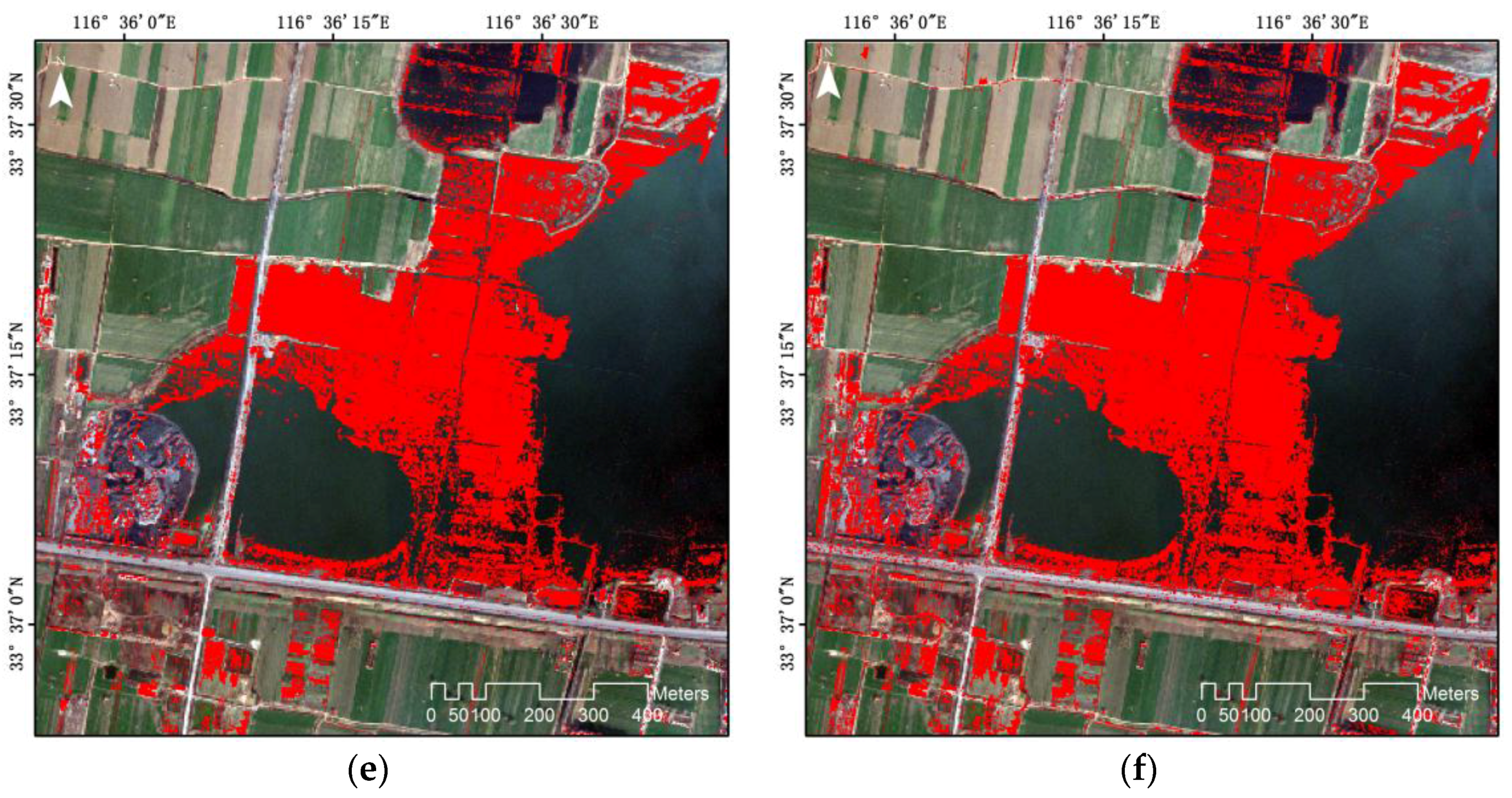

3. Experimental Results and Discussion

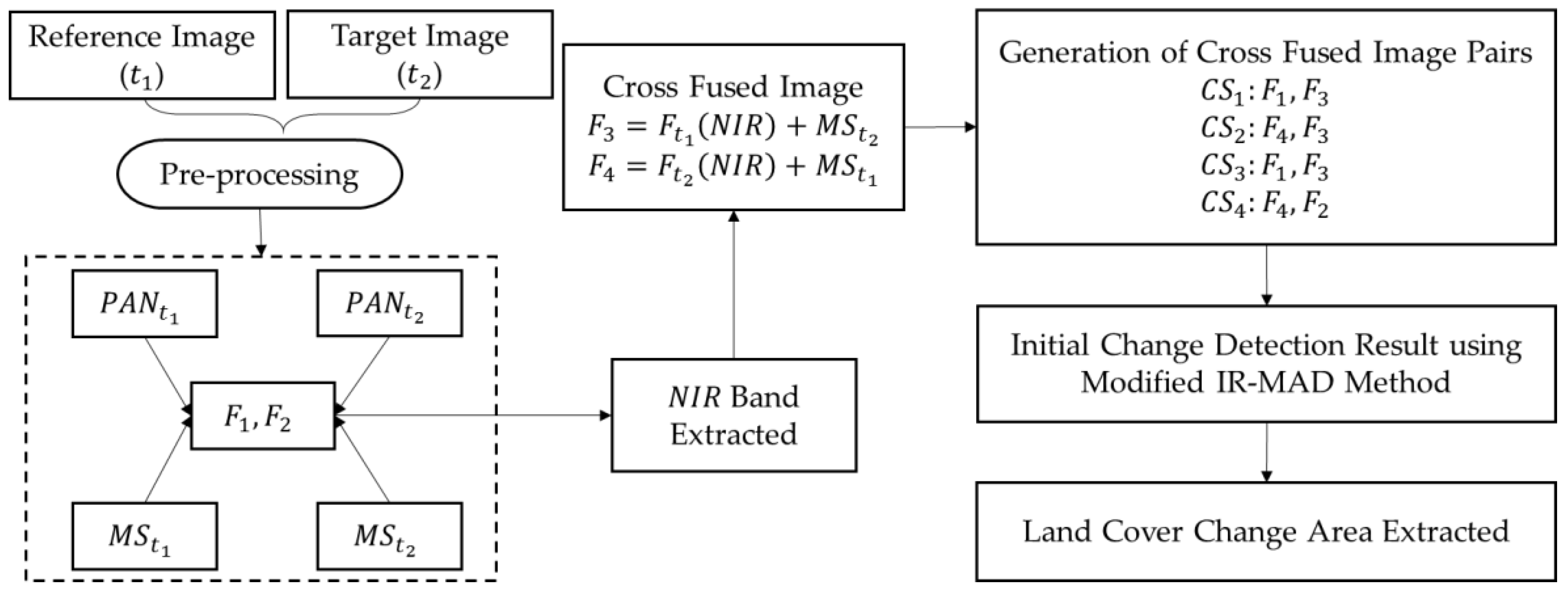

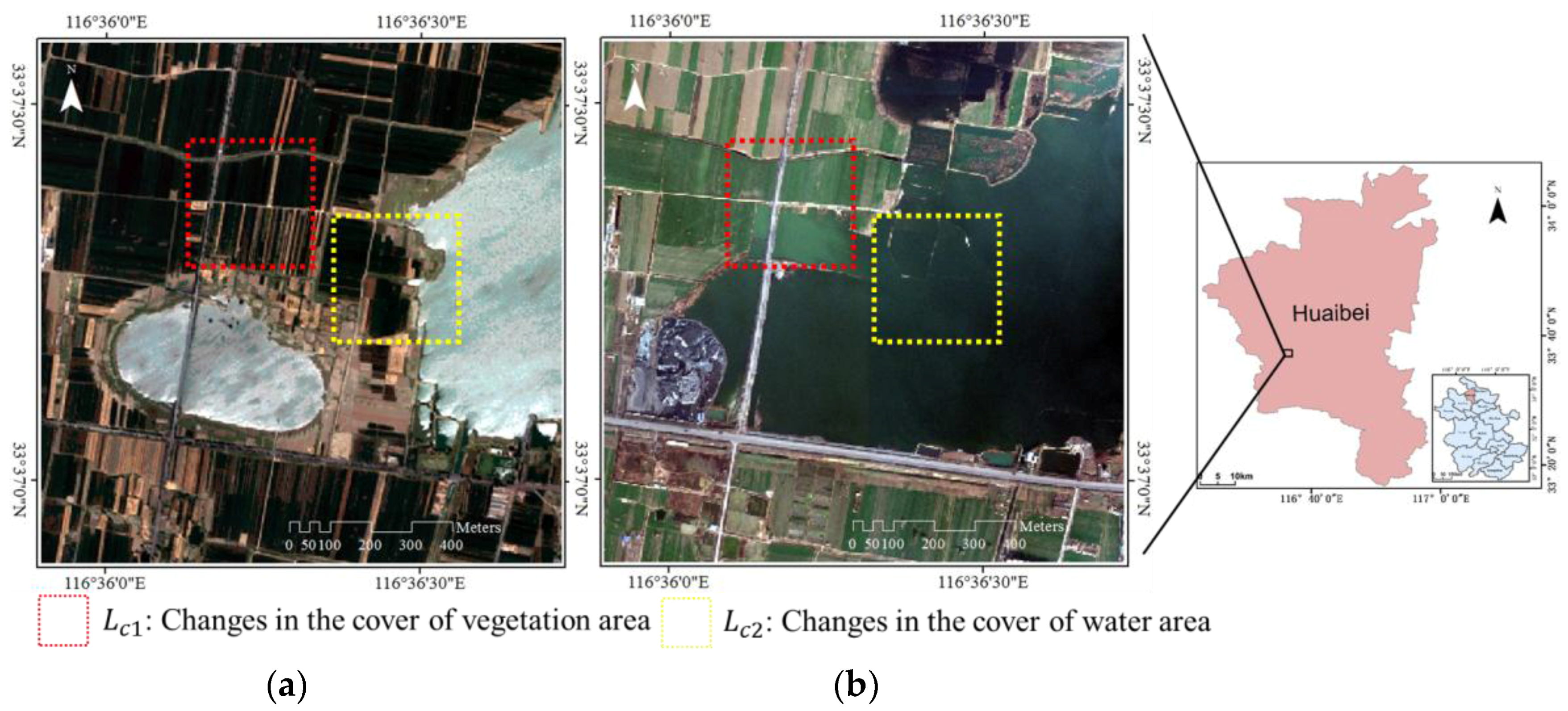

3.1. Image Preparation

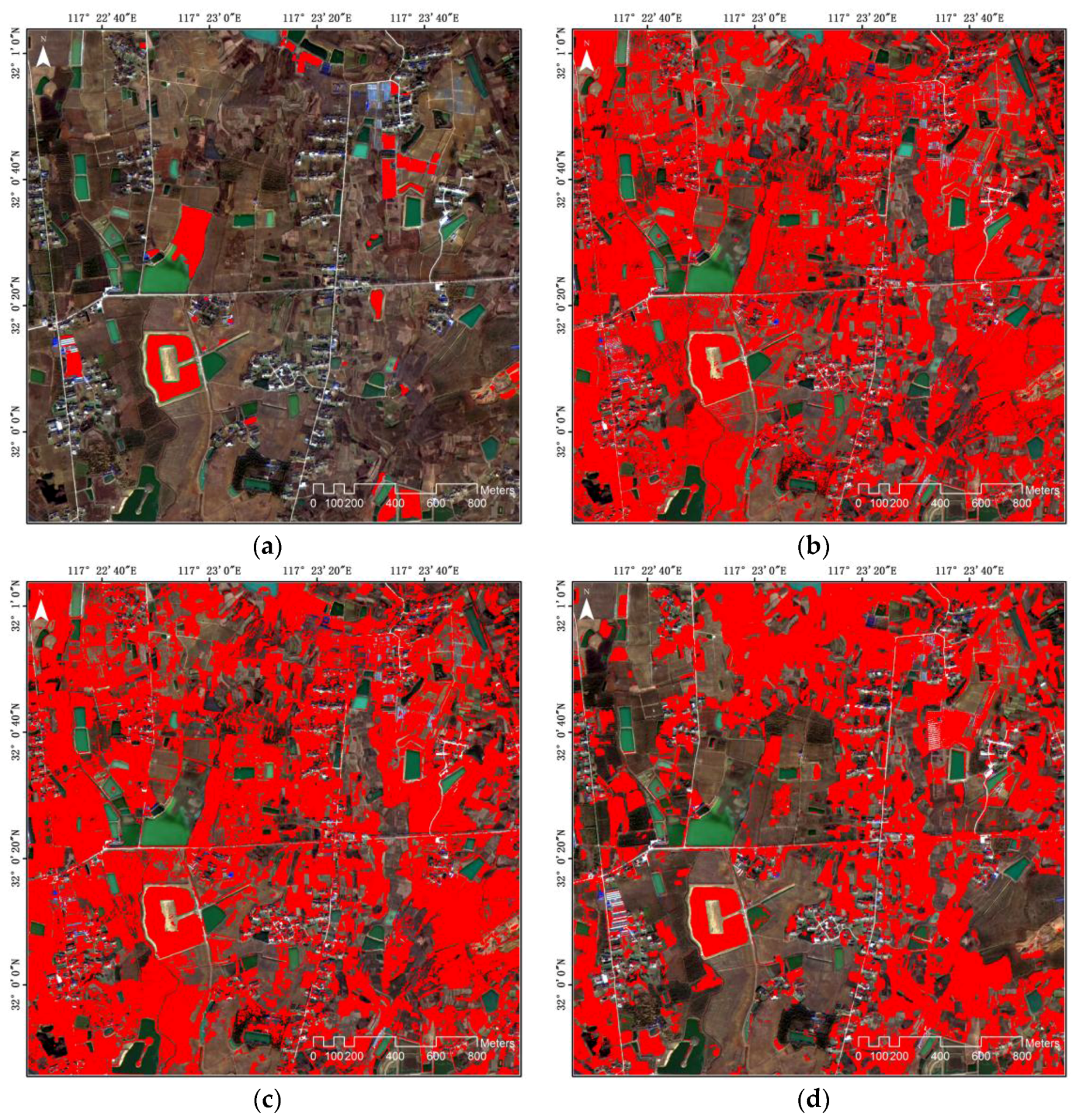

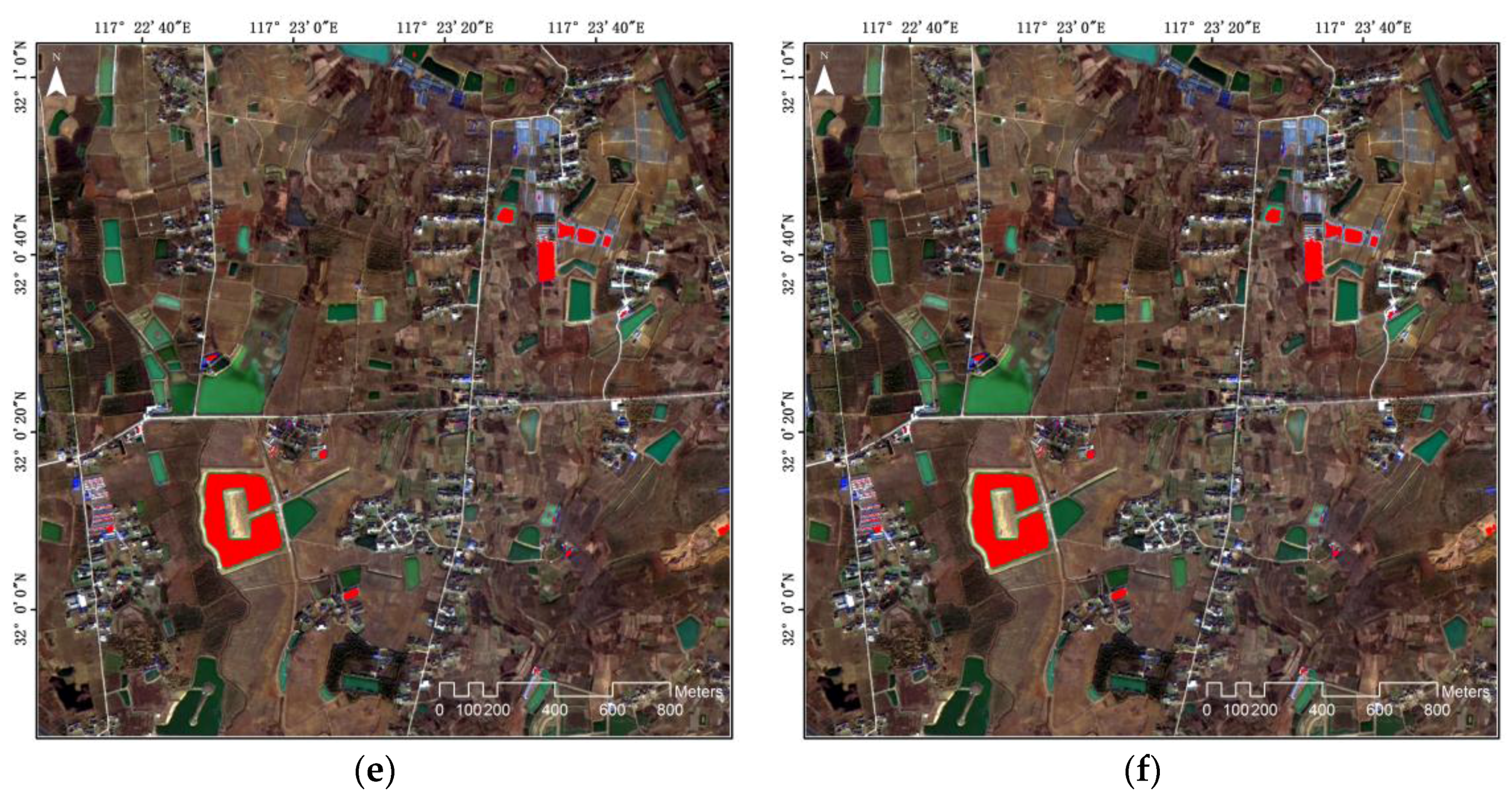

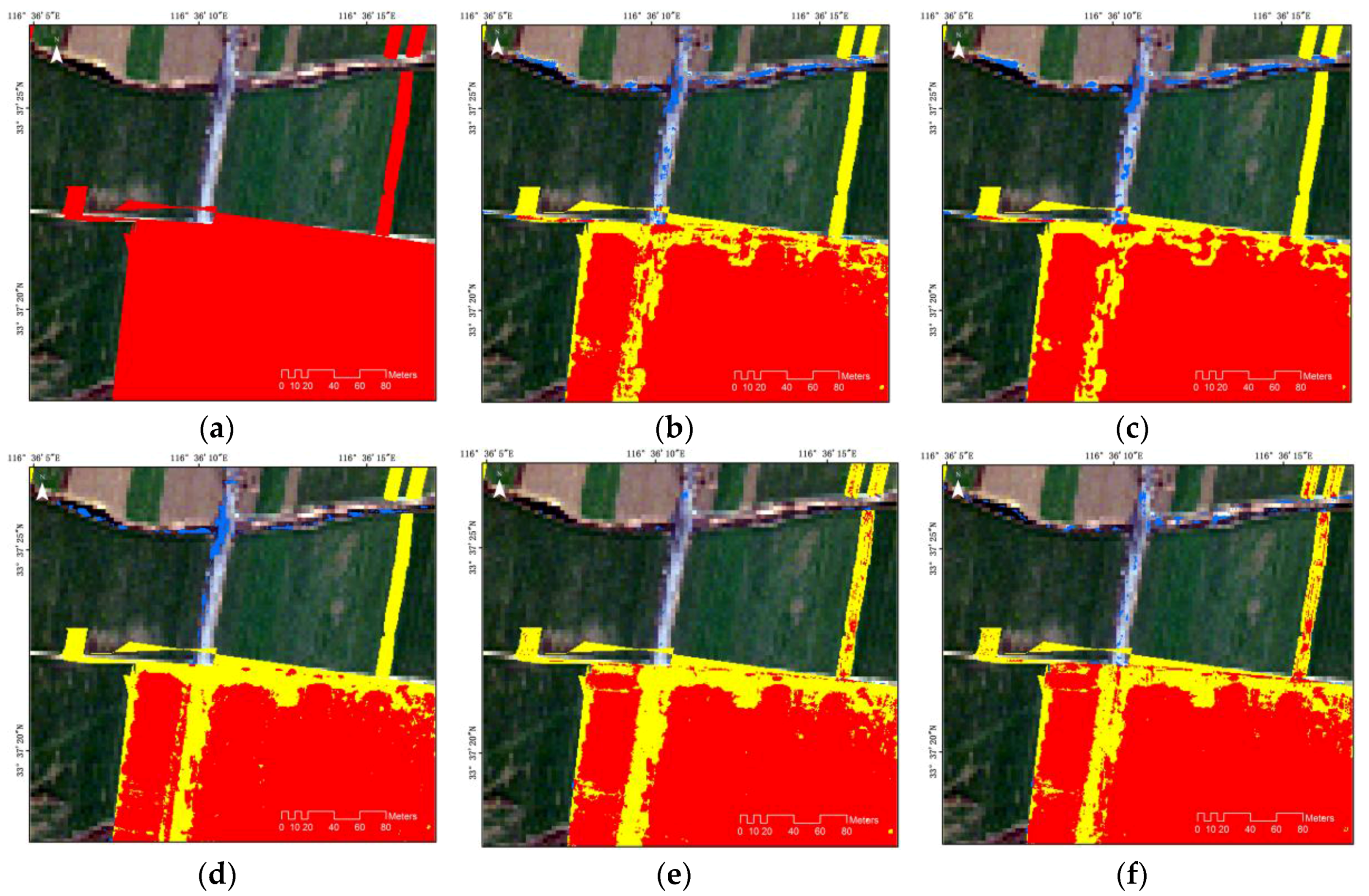

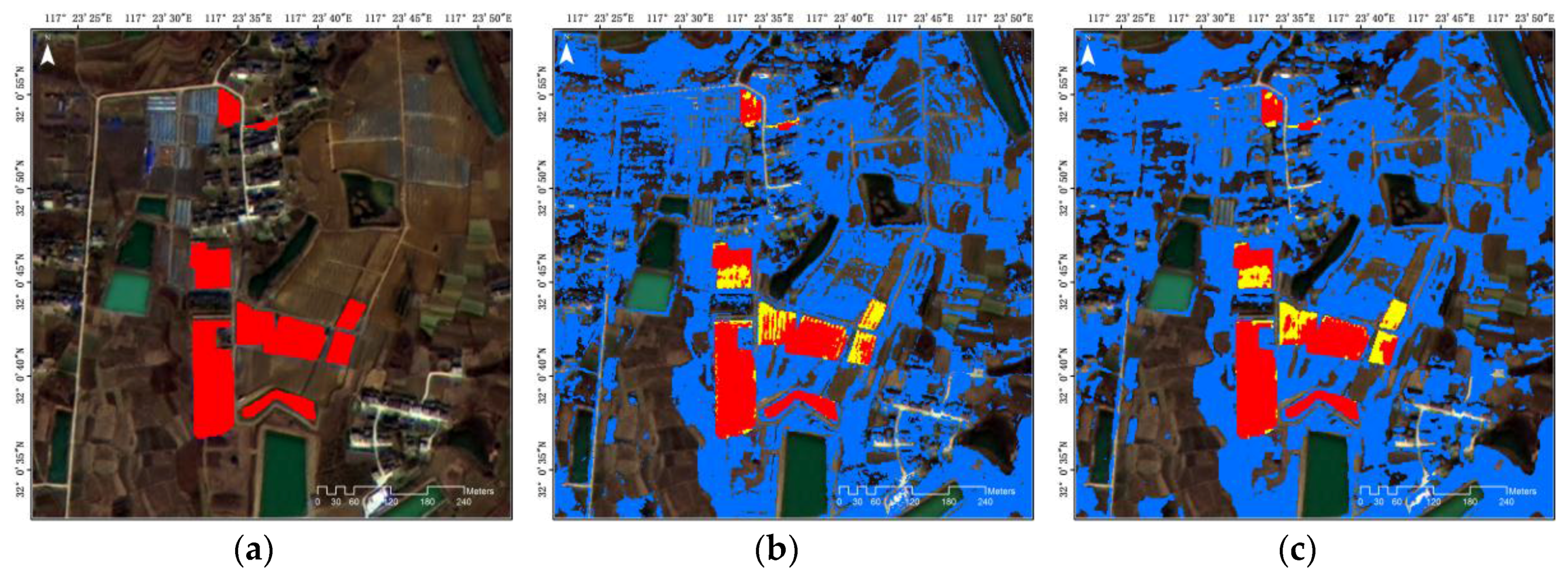

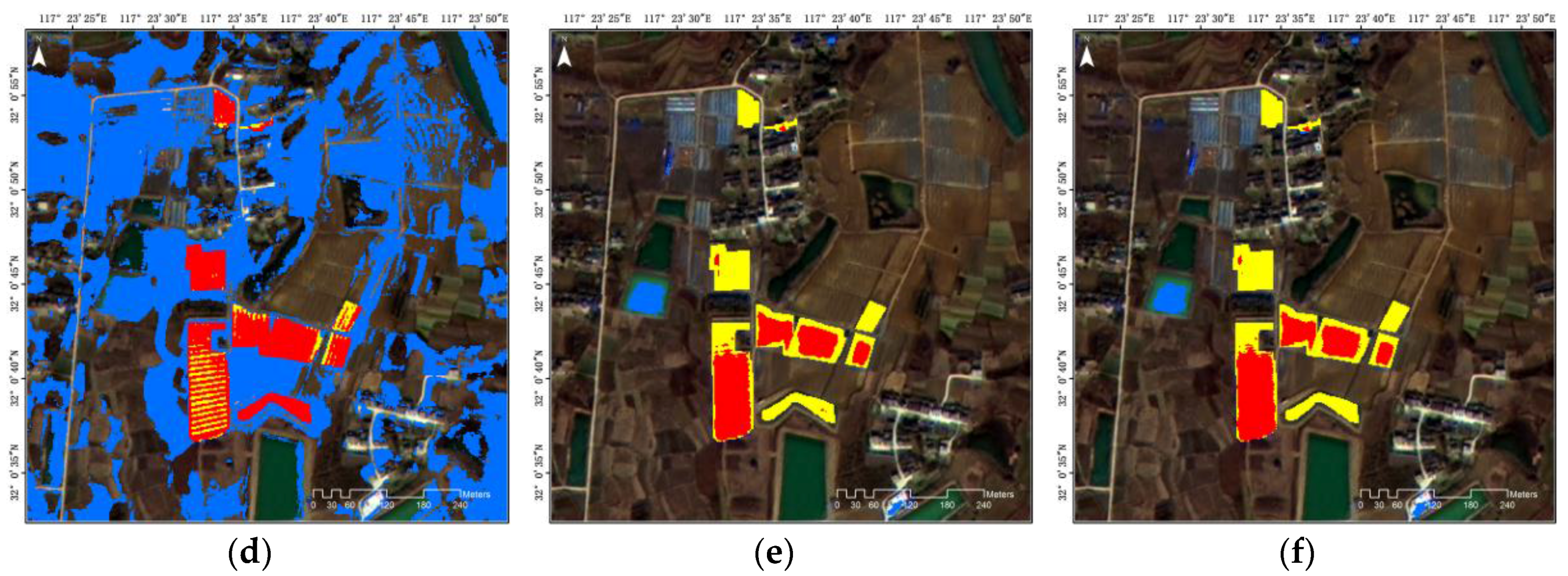

3.2. Procedures and Results

3.3. Analysis and Discussion of the Results

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| | Reference Image | Target Image |
|---|---|---|
| Sensor | IKONOS-2 | WorldView-3 |
| Date | 17/05/2004 | 07/12/2014 |
| Spatial resolution | PAN: 1 m; MS: 4 m | PAN: 0.31 m; MS: 1.24 m |
| Image size (pixels) | PAN: 1600; MS: 400 | PAN: 1600; MS: 400 |
| Radiometric resolution | 11 bit | 11 bit |
| Wavelength (μm) | Band 1: 0.45–0.52; Band 2: 0.51–0.60; Band 3: 0.63–0.70; Band 4: 0.76–0.85 | Band 1: 0.400–0.450; Band 2: 0.450–0.510; Band 3: 0.510–0.580; Band 4: 0.585–0.625; Band 5: 0.630–0.690; Band 6: 0.705–0.745; Band 7: 0.770–0.895; Band 8: 0.860–1.040 |

| | Reference Image | Target Image |
|---|---|---|
| Sensor | GF-1 | GF-1 |
| Date | 14/04/2015 | 26/01/2016 |
| Spatial resolution | PAN: 2 m; MS: 8 m | PAN: 2 m; MS: 8 m |
| Image size (pixels) | PAN: 1200; MS: 1200 | PAN: 1200; MS: 1200 |
| Radiometric resolution | 10 bit | 10 bit |
| Wavelength (μm) | Band 1: 0.45–0.52; Band 2: 0.52–0.59; Band 3: 0.63–0.69; Band 4: 0.77–0.89 | Band 1: 0.45–0.52; Band 2: 0.52–0.59; Band 3: 0.63–0.69; Band 4: 0.77–0.89 |

| Method | OA (%) | KC | CR | FAR | CE (%) | OE (%) |
|---|---|---|---|---|---|---|
| CVA | 69.74 | 0.33 | 0.66 | 0.15 | 34.04 | 53.90 |
| ERGAS | 70.29 | 0.35 | 0.66 | 0.16 | 34.18 | 50.66 |
| SAM | 64.36 | 0.28 | 0.53 | 0.37 | 46.60 | 33.35 |
| Original IR-MAD | 78.47 | 0.50 | 0.93 | 0.02 | 7.30 | 51.46 |
| Proposed Method | 80.51 | 0.56 | 0.89 | 0.04 | 11.42 | 42.66 |

| Method | OA (%) | KC | CR | FAR | CE (%) | OE (%) |
|---|---|---|---|---|---|---|
| CVA | 47.94 | 0.01 | 0.03 | 0.52 | 0.97 | 0.44 |
| ERGAS | 47.73 | 0.01 | 0.03 | 0.53 | 0.97 | 0.42 |
| SAM | 64.10 | 0.03 | 0.05 | 0.36 | 0.96 | 0.46 |
| Original IR-MAD | 97.86 | 0.52 | 0.91 | 0.01 | 0.10 | 0.63 |
| Proposed Method | 97.87 | 0.52 | 0.92 | 0.01 | 0.11 | 0.43 |

| Method | OA (%) | KC | OA (%) | KC |
|---|---|---|---|---|
| CVA | 89.42 | 0.77 | 70.65 | 0.34 |
| ERGAS | 89.93 | 0.78 | 70.10 | 0.39 |
| SAM | 88.56 | 0.75 | 71.56 | 0.02 |
| Original IR-MAD | 88.82 | 0.75 | 89.74 | 0.77 |
| Proposed Method | 89.78 | 0.78 | 92.68 | 0.83 |

| Method | OA (%) | KC | OA (%) | KC |
|---|---|---|---|---|
| CVA | 52.81 | 0.06 | 54.52 | 0.14 |
| ERGAS | 52.64 | 0.06 | 54.11 | 0.14 |
| SAM | 58.21 | 0.08 | 87.68 | 0.51 |
| Original IR-MAD | 96.67 | 0.56 | 99.12 | 0.94 |
| Proposed Method | 96.70 | 0.56 | 99.16 | 0.94 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wang, B.; Choi, J.; Choi, S.; Lee, S.; Wu, P.; Gao, Y. Image Fusion-Based Land Cover Change Detection Using Multi-Temporal High-Resolution Satellite Images. Remote Sens. 2017, 9, 804. https://doi.org/10.3390/rs9080804
