On-Board Ortho-Rectification for Images Based on an FPGA

Abstract

1. Introduction

2. FPGA Implementation for the Ortho-Rectification Algorithm

2.1. A Brief Review of the Ortho-Rectification Algorithm

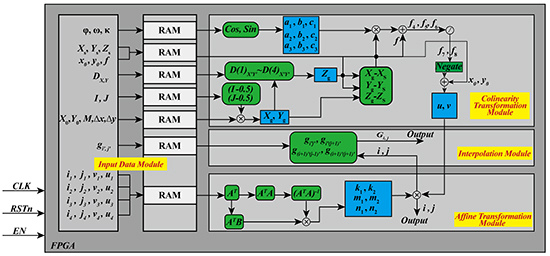

2.2. FPGA-Based Implementation for Ortho-Rectification Algorithms

- (1) The original data and parameters are stored in the RAM of the input data module. When the enable signal is received, they are sent to the CETM, ATM, and IM in the same clock cycle;

- (2) The CETM calculates the coefficients of the collinearity condition equation, the geodetic coordinates of the ortho-photo, and the photo coordinates. In the same clock cycle, the photo coordinates are sent to the ATM;

- (3) The ATM calculates the coefficients of the affine transformation and combines them with the photo coordinates to calculate the scanning coordinates, which are sent to the IM and output in the same clock cycle;

- (4) The IM obtains the gray-scale values of the ortho-photo from the scanning coordinates and the cached gray-scale values of the original image. In the same clock cycle, the obtained gray-scale values are output to the external memory.
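The data flow of steps (2) to (4) can be sketched in software as follows. This is only an illustrative Python model, not the authors' hardware design: the function names, the row layout of the rotation matrix `R`, the ordering of the six affine coefficients, and the clamping at the image border are all assumptions made for the example.

```python
import math


def collinearity(X, Y, Z, Xs, Ys, Zs, R, f, x0=0.0, y0=0.0):
    """Step (2): project a ground point (X, Y, Z) to photo coordinates (x, y).

    R is assumed to be a 3x3 rotation matrix given as rows
    (a1, a2, a3), (b1, b2, b3), (c1, c2, c3).
    """
    dX, dY, dZ = X - Xs, Y - Ys, Z - Zs
    (a1, a2, a3), (b1, b2, b3), (c1, c2, c3) = R
    den = a3 * dX + b3 * dY + c3 * dZ
    x = x0 - f * (a1 * dX + b1 * dY + c1 * dZ) / den
    y = y0 - f * (a2 * dX + b2 * dY + c2 * dZ) / den
    return x, y


def affine(x, y, coeffs):
    """Step (3): map photo coordinates to scanning coordinates (col, row).

    coeffs = (p0, p1, p2, q0, q1, q2) is a hypothetical ordering.
    """
    p0, p1, p2, q0, q1, q2 = coeffs
    return p0 + p1 * x + p2 * y, q0 + q1 * x + q2 * y


def bilinear(image, col, row):
    """Step (4): bilinear interpolation of the four neighbouring pixels.

    image is a list of rows; (col, row) is assumed to lie inside the image.
    """
    c0, r0 = int(math.floor(col)), int(math.floor(row))
    dc, dr = col - c0, row - r0
    # Clamp the far neighbours so the last row and column stay in range.
    c1 = min(c0 + 1, len(image[0]) - 1)
    r1 = min(r0 + 1, len(image) - 1)
    top = (1 - dc) * image[r0][c0] + dc * image[r0][c1]
    bottom = (1 - dc) * image[r1][c0] + dc * image[r1][c1]
    return (1 - dr) * top + dr * bottom


def rectify_pixel(X, Y, Z, exterior, R, f, coeffs, image):
    """Chain the three stages for one ortho-photo cell."""
    Xs, Ys, Zs = exterior
    x, y = collinearity(X, Y, Z, Xs, Ys, Zs, R, f)
    col, row = affine(x, y, coeffs)
    return bilinear(image, col, row)


if __name__ == "__main__":
    identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
    tiny_image = [[0, 10], [20, 30]]
    gray = rectify_pixel(100.0, 50.0, 0.0, (0.0, 0.0, 1000.0), identity,
                         150.0, (0.0, 1 / 30, 0.0, 0.0, 0.0, 1 / 15), tiny_image)
    print(gray)
```

In hardware the three stages run concurrently as a pipeline, one result per clock cycle once the pipeline is full; the sequential calls above only mirror the order in which data passes through the modules.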

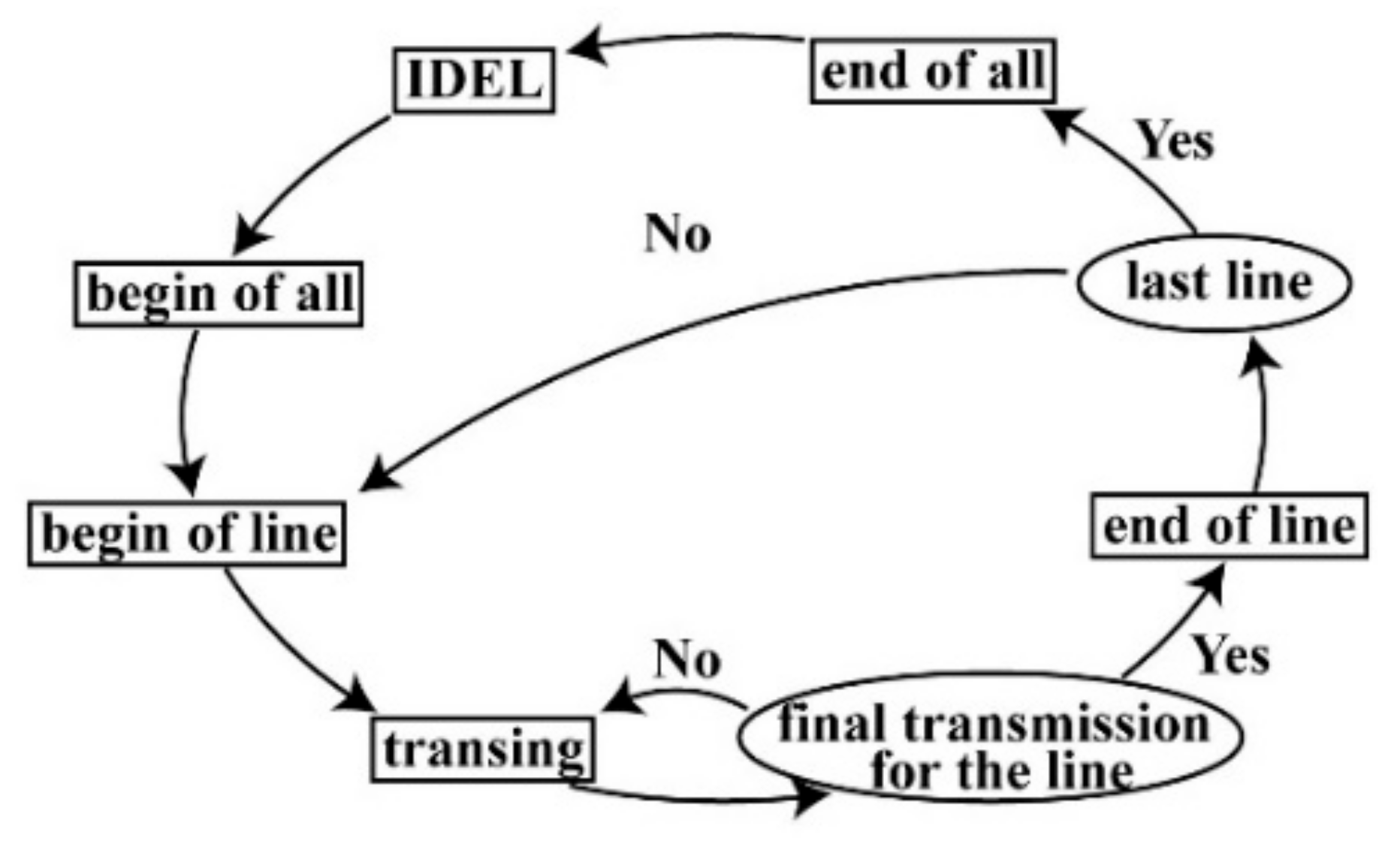

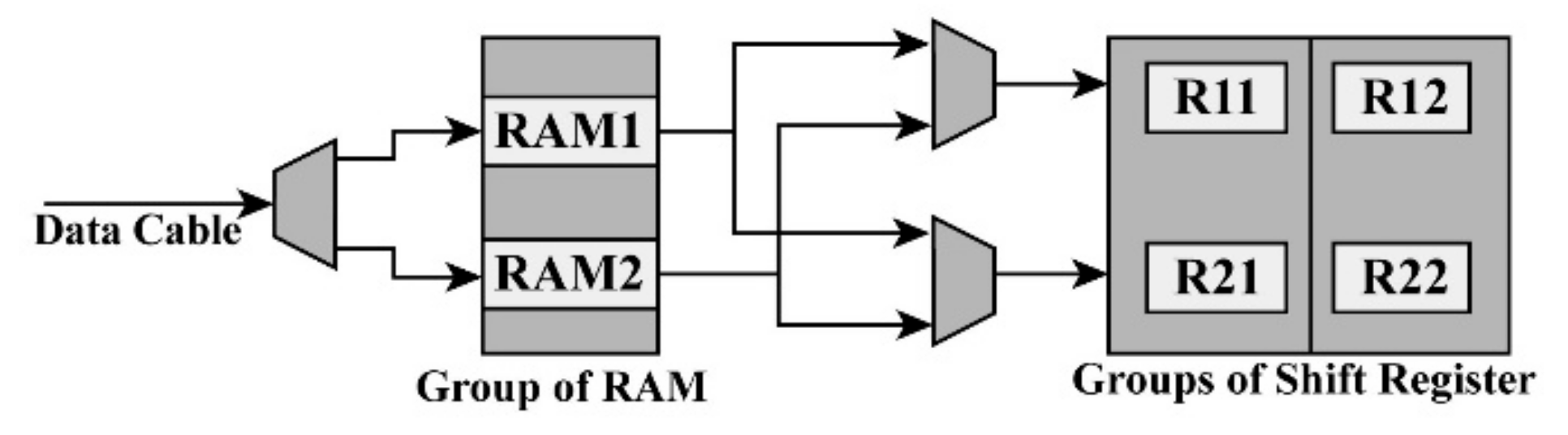

2.2.1. FPGA-Based Implementation for a Two-Row Buffer

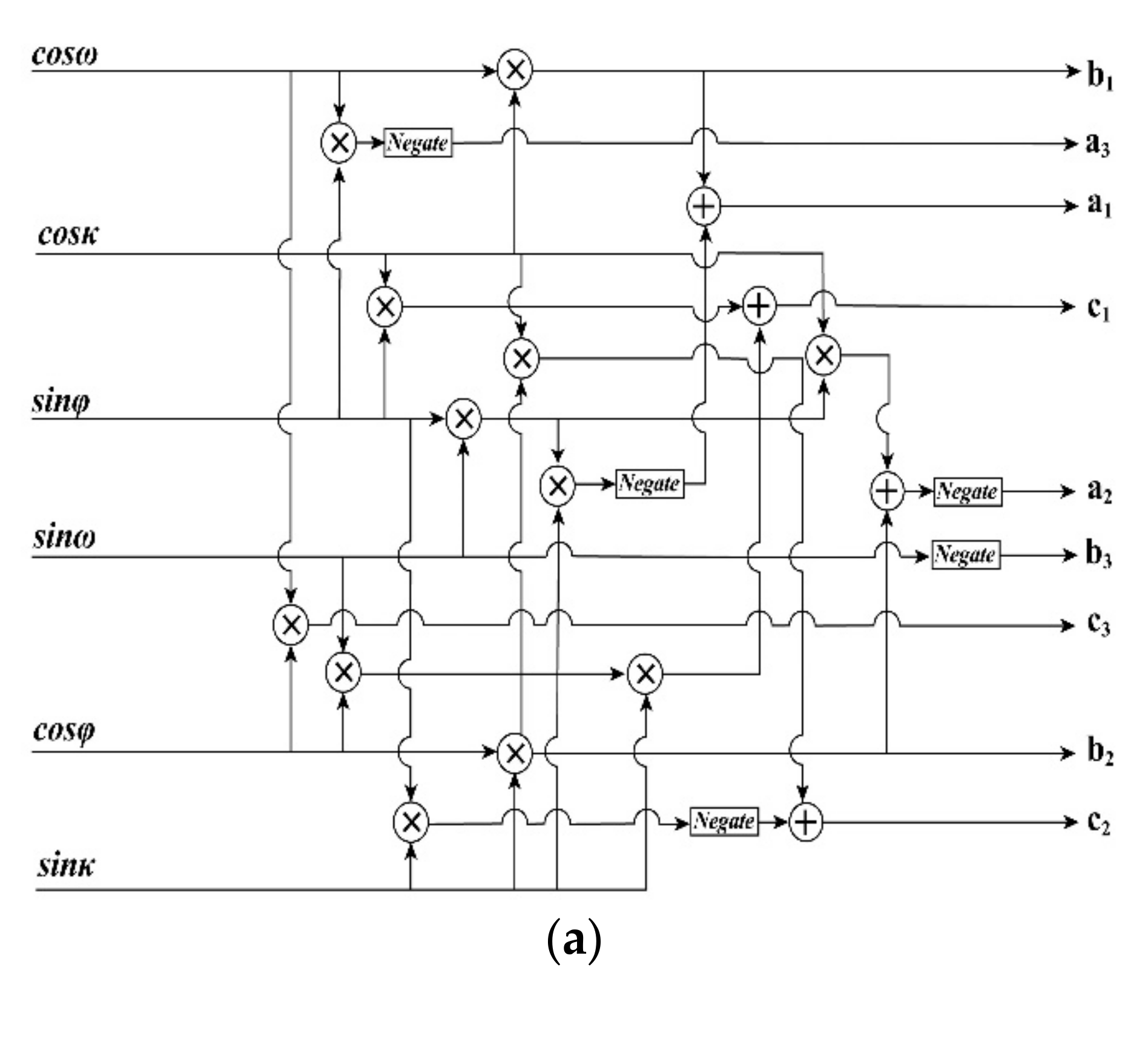

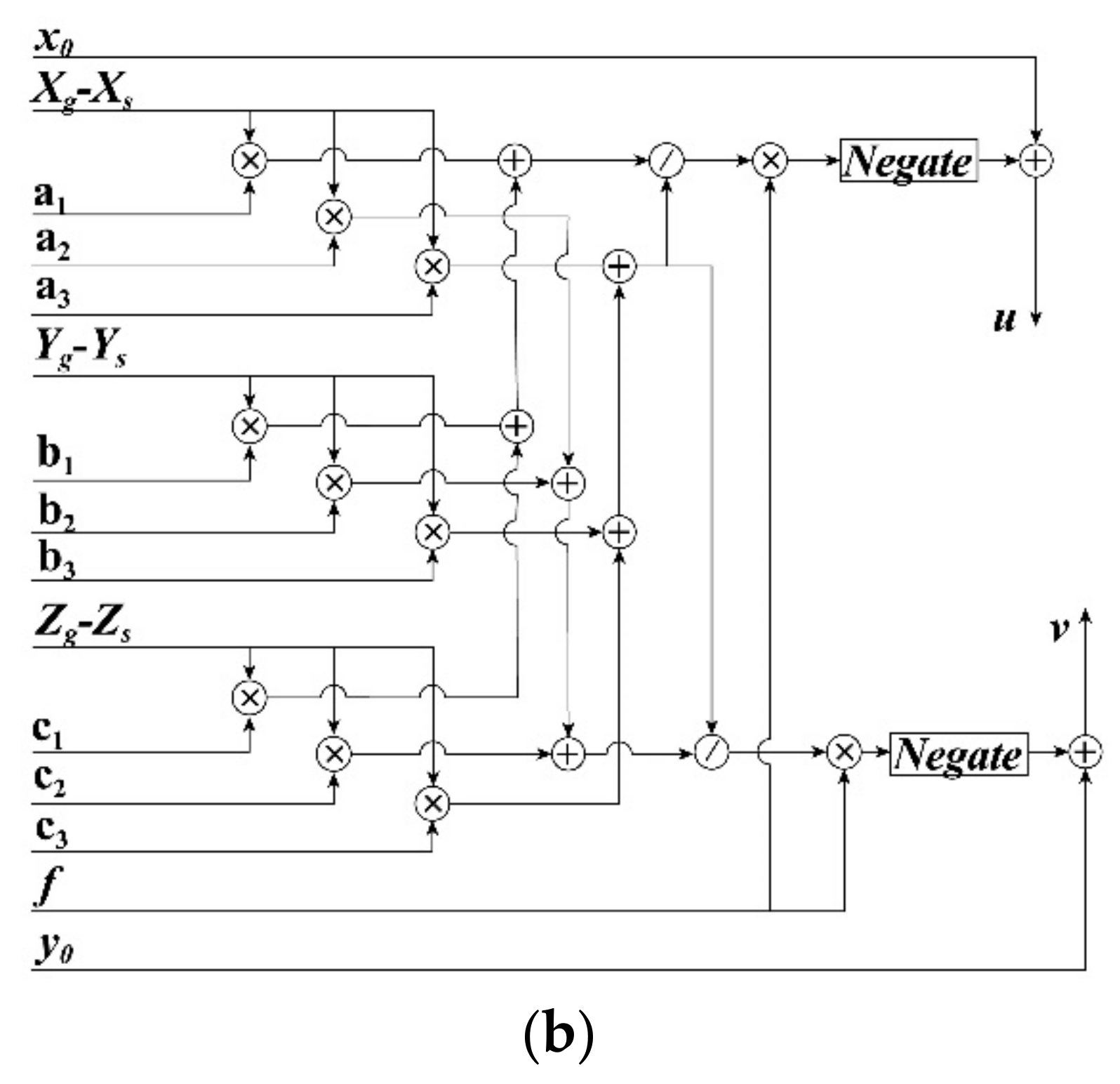

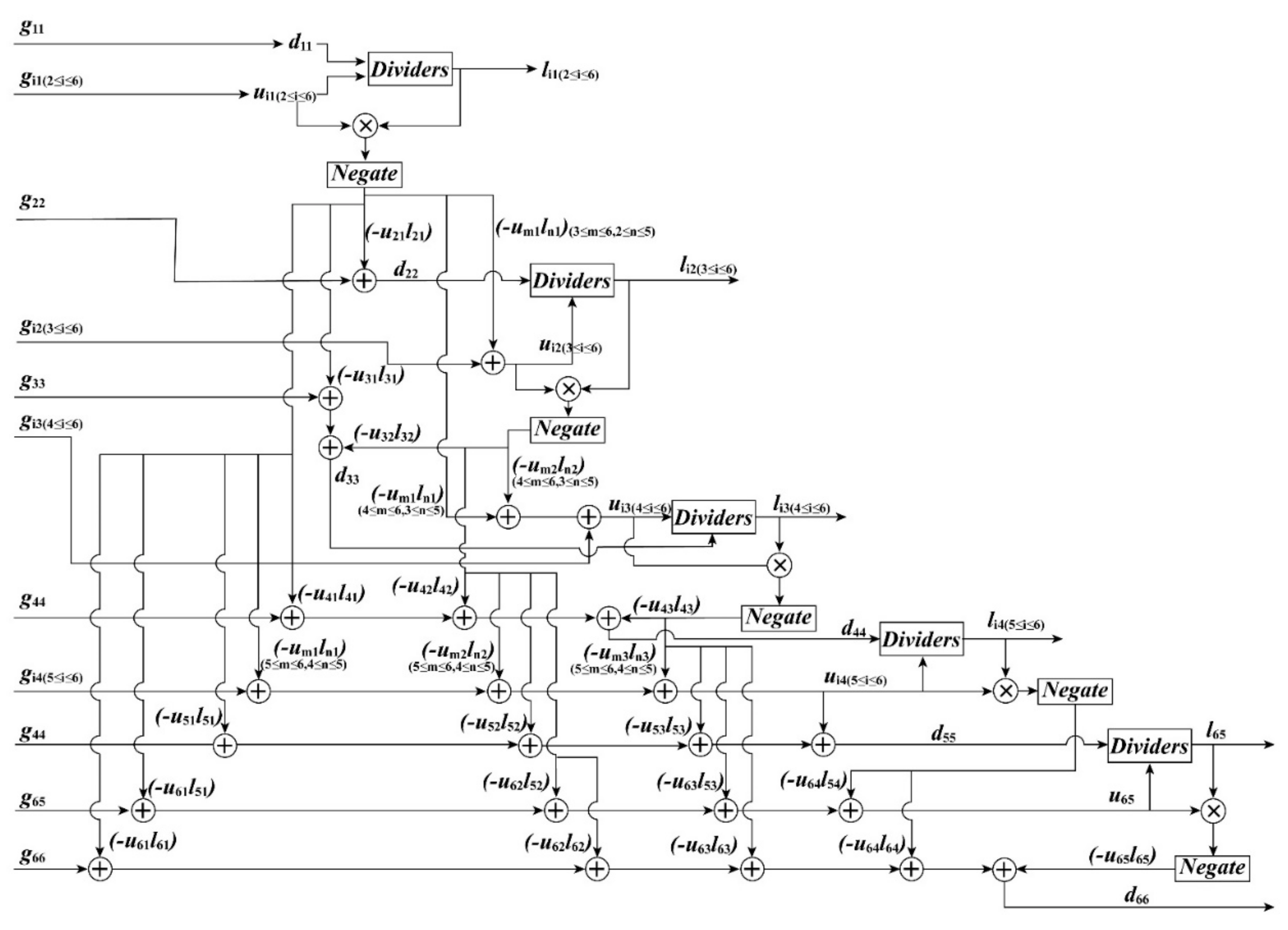

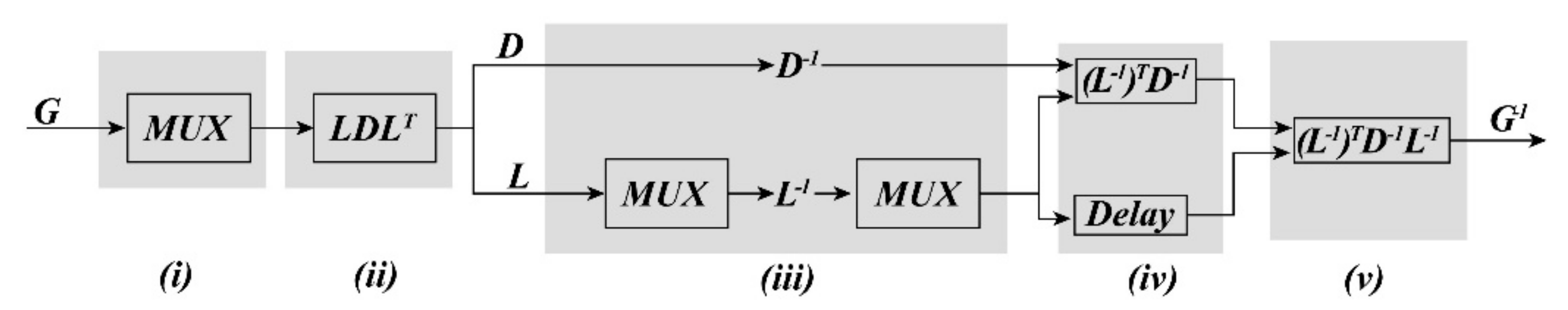

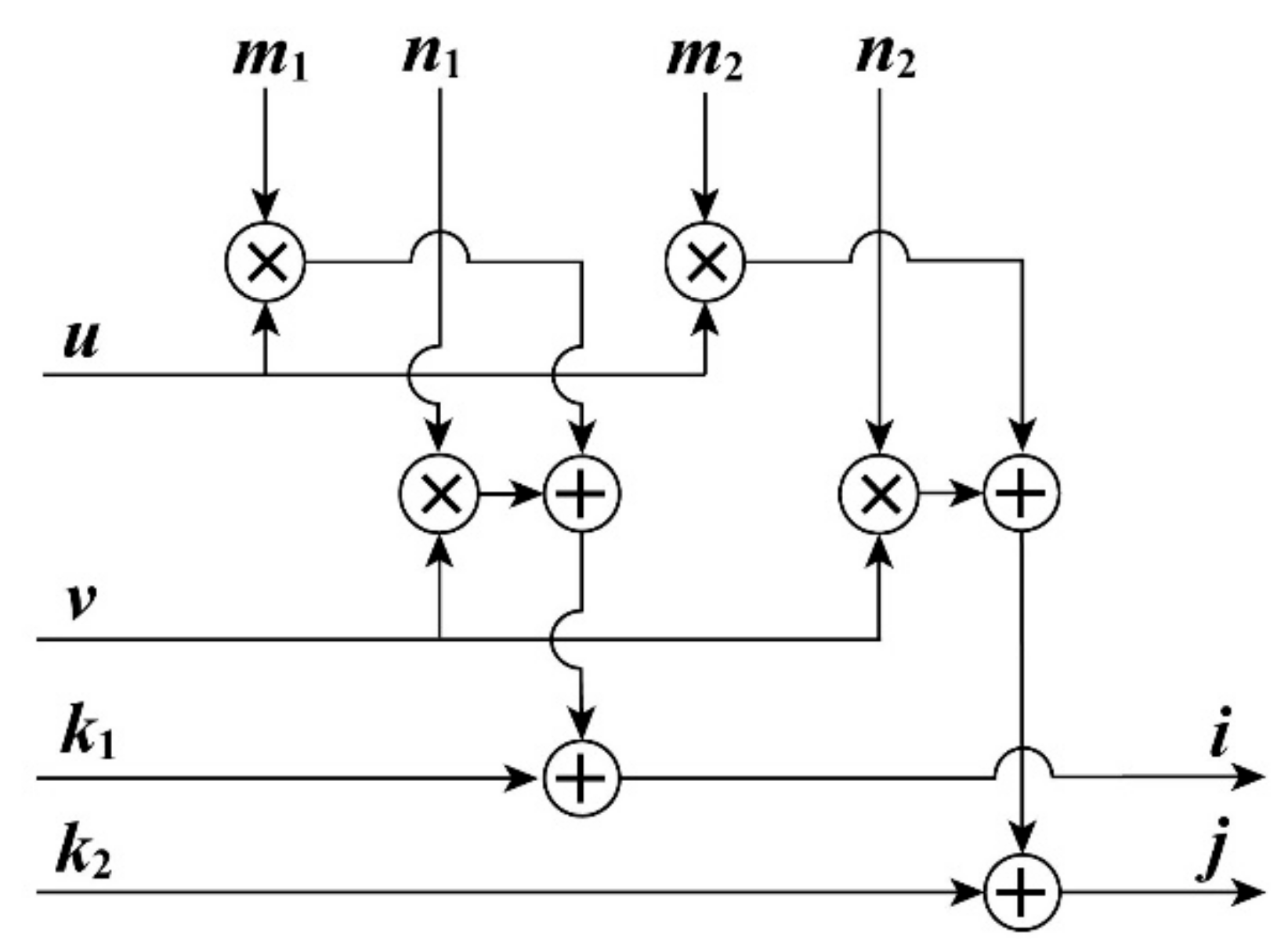

2.2.2. FPGA-Based Implementation for Coordinate Transformation

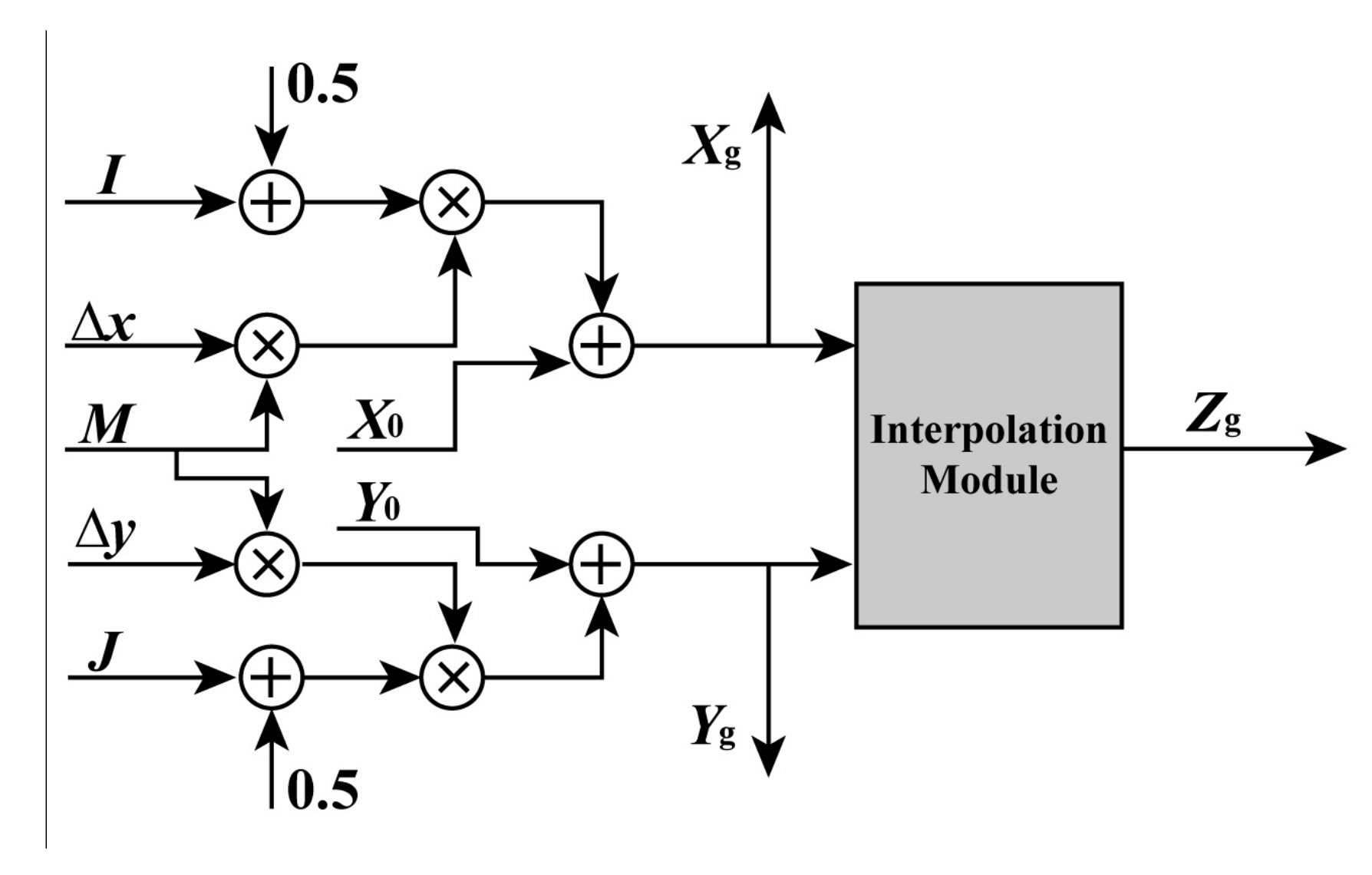

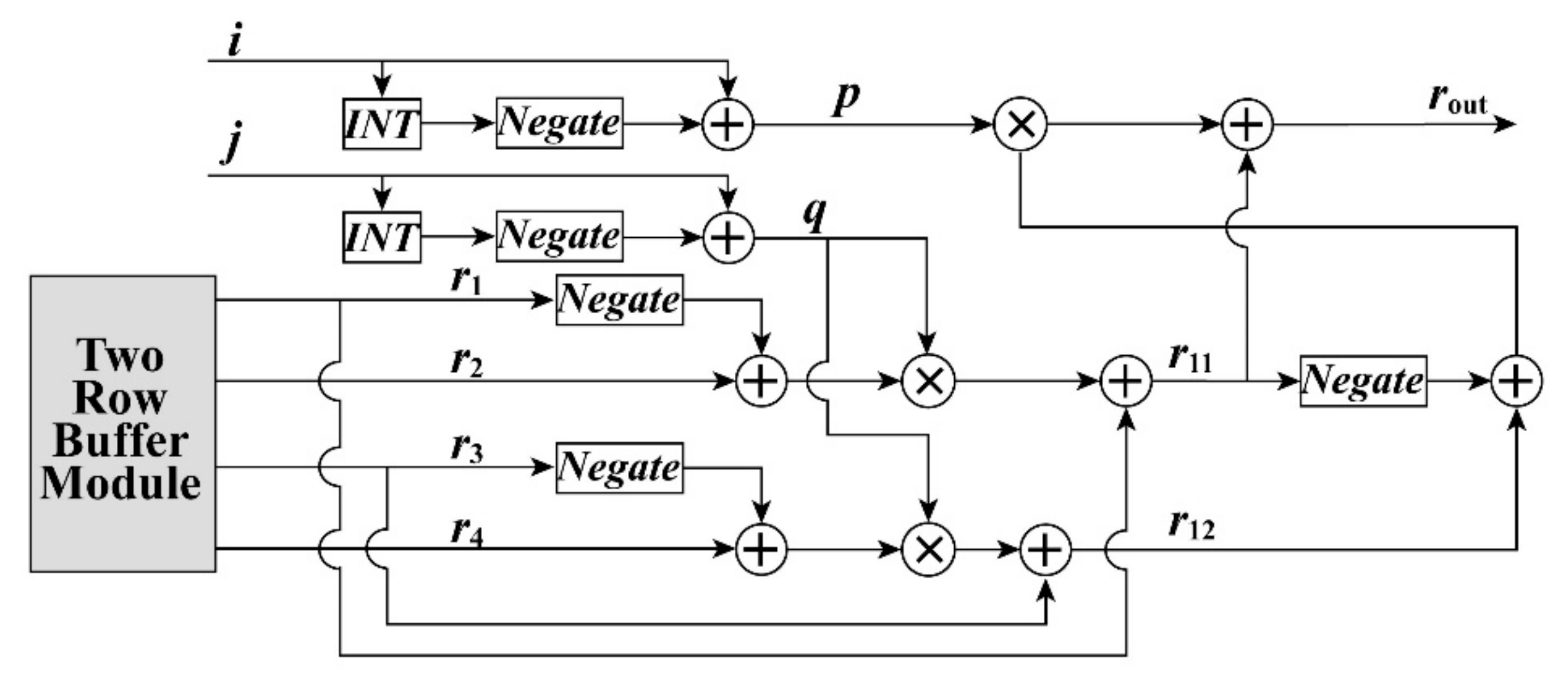

2.2.3. FPGA-Based Implementation for Bilinear Interpolation

3. Experiment

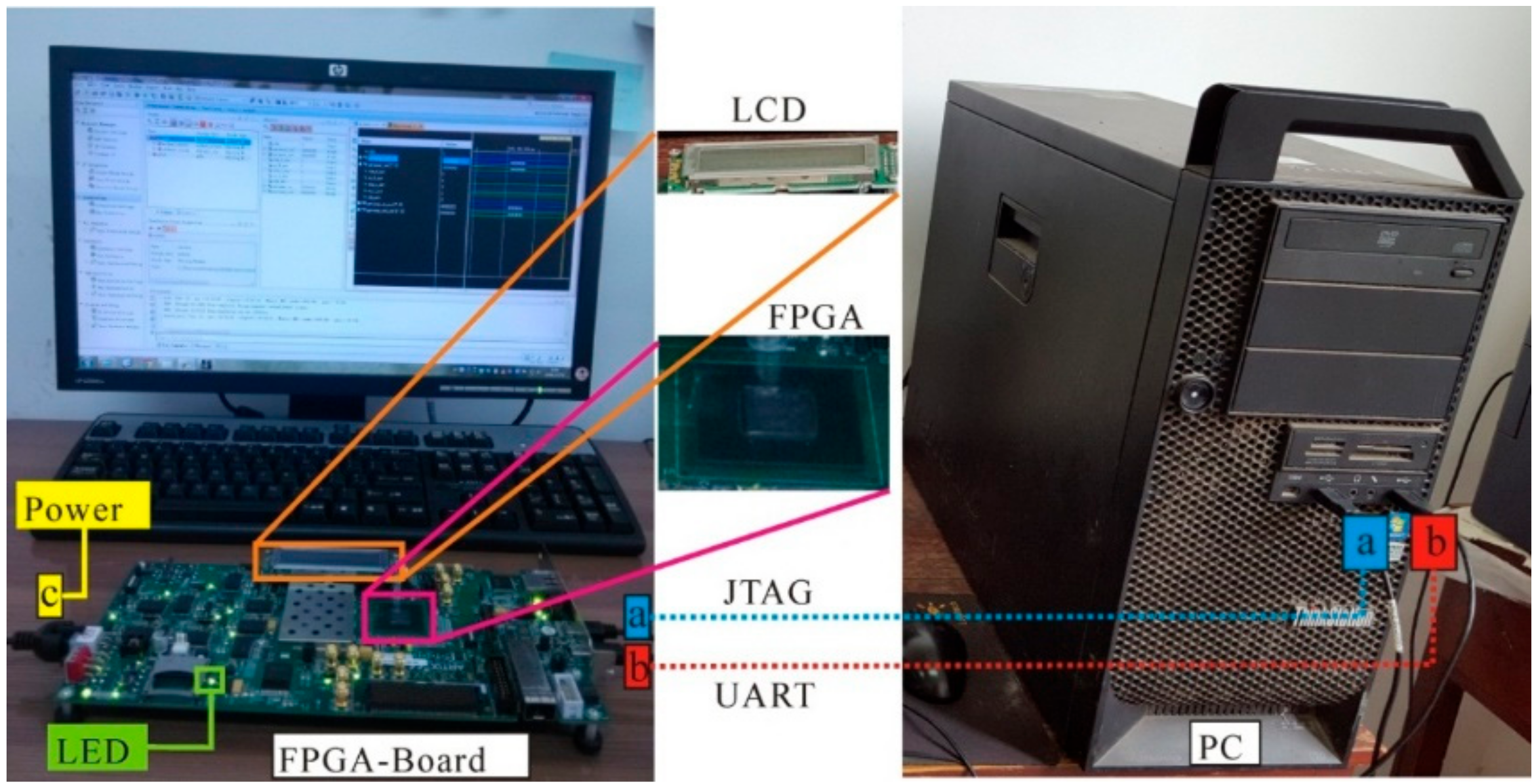

3.1. The Software and Hardware Environment

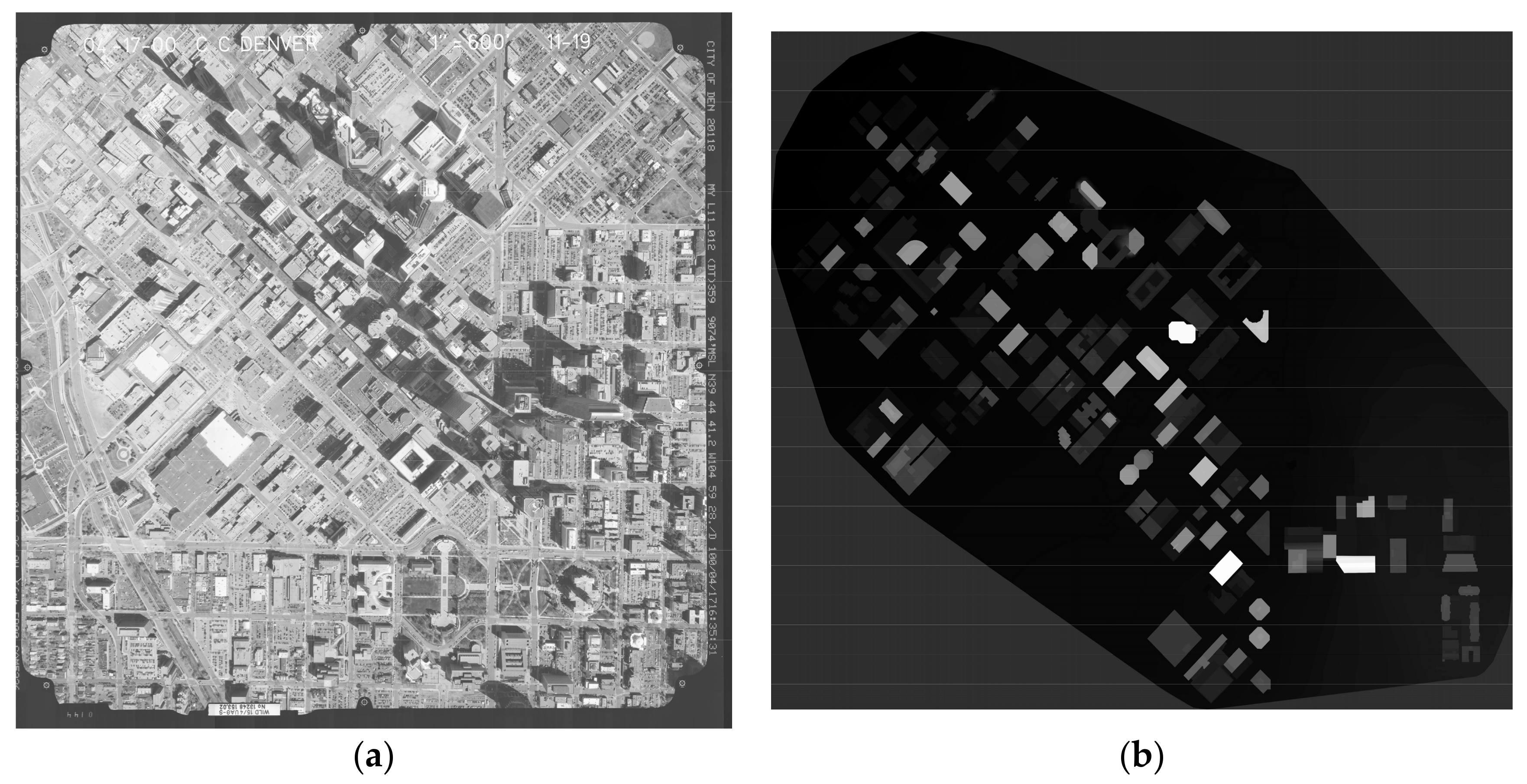

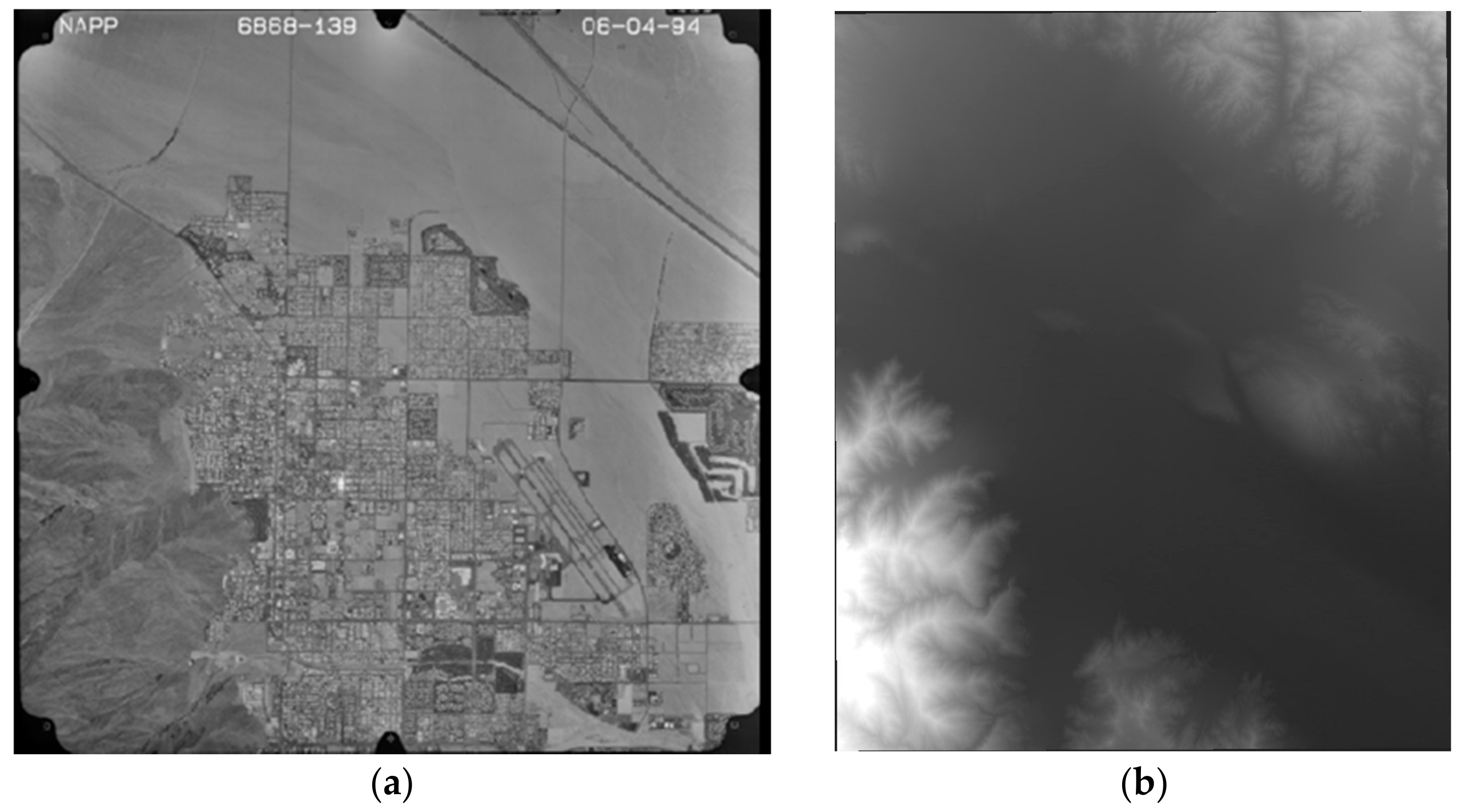

3.2. Data

4. Discussion

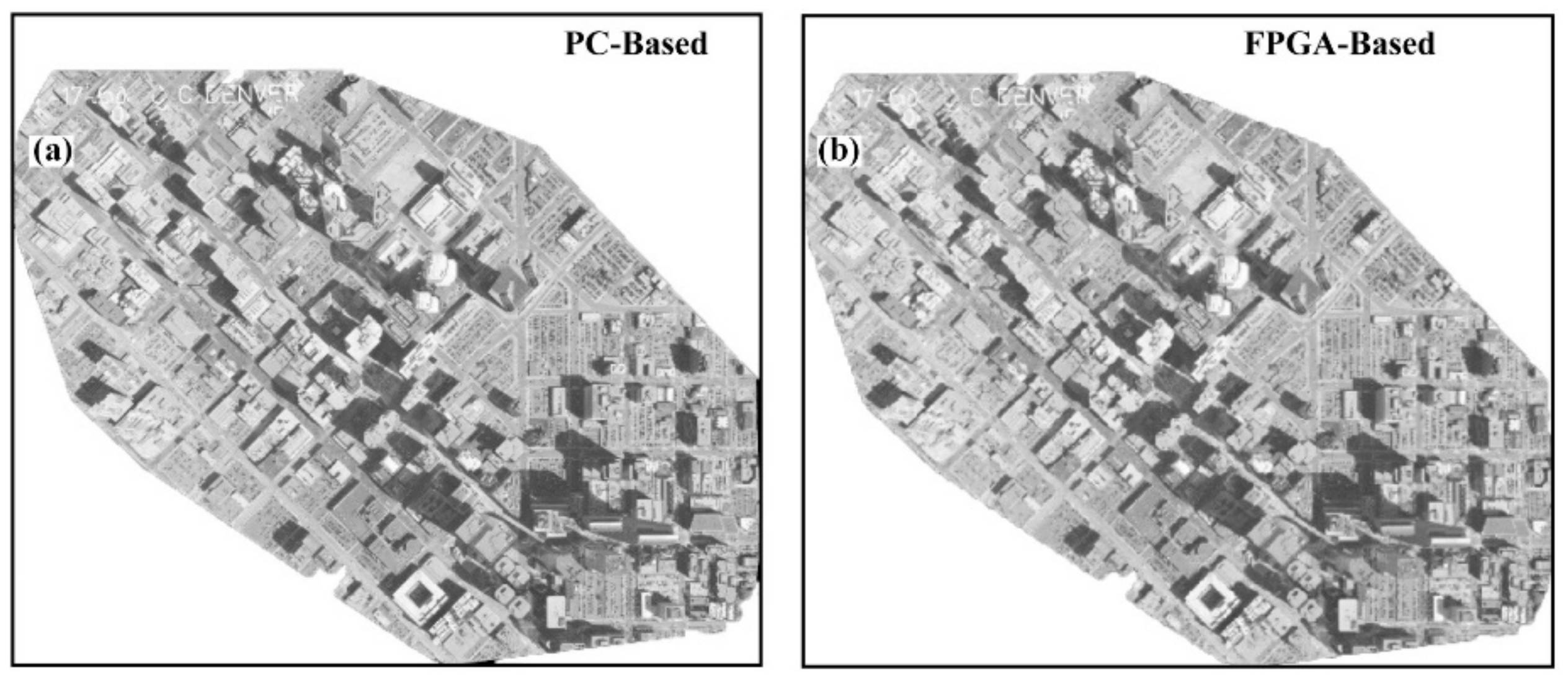

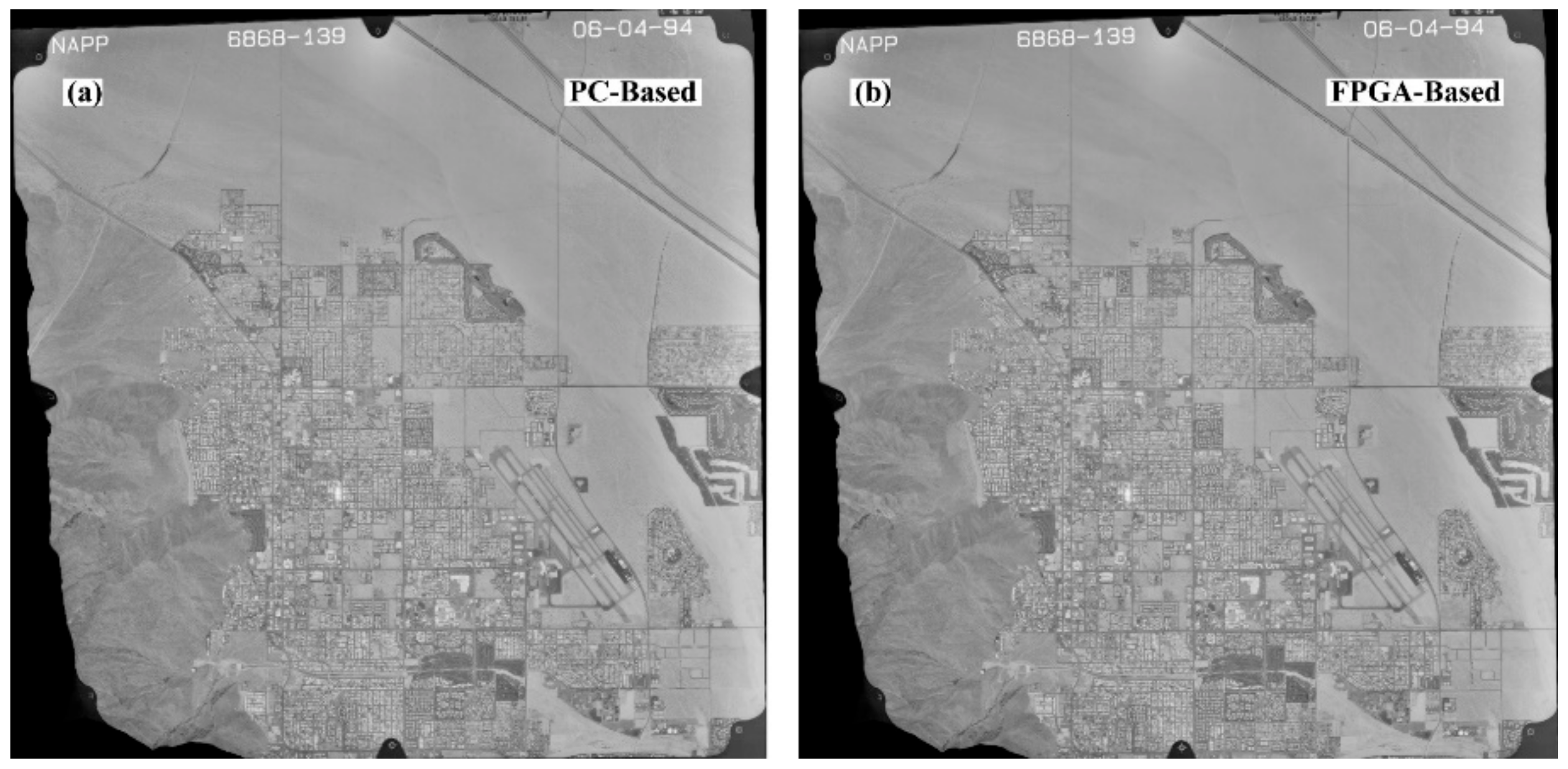

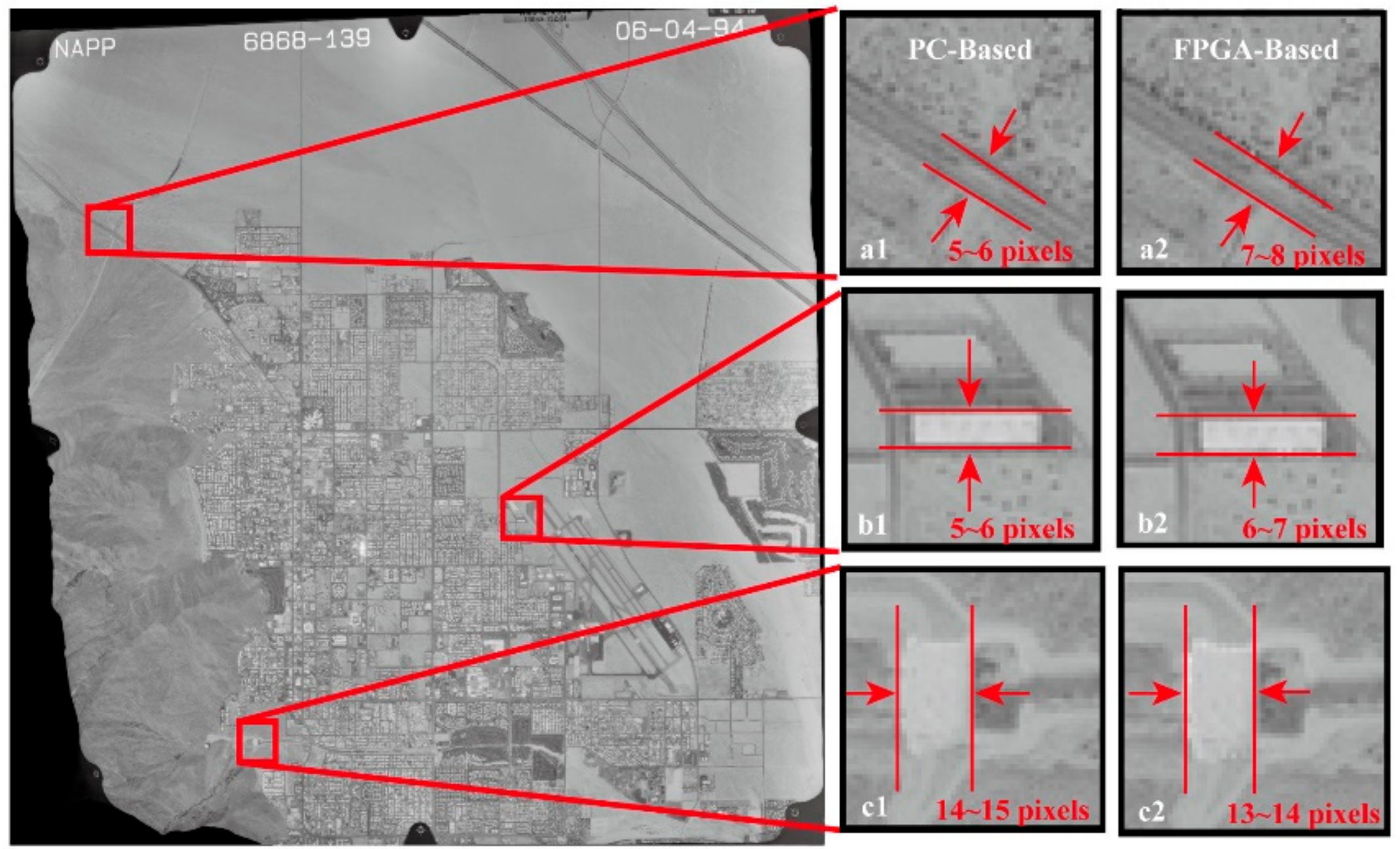

4.1. Visual Check

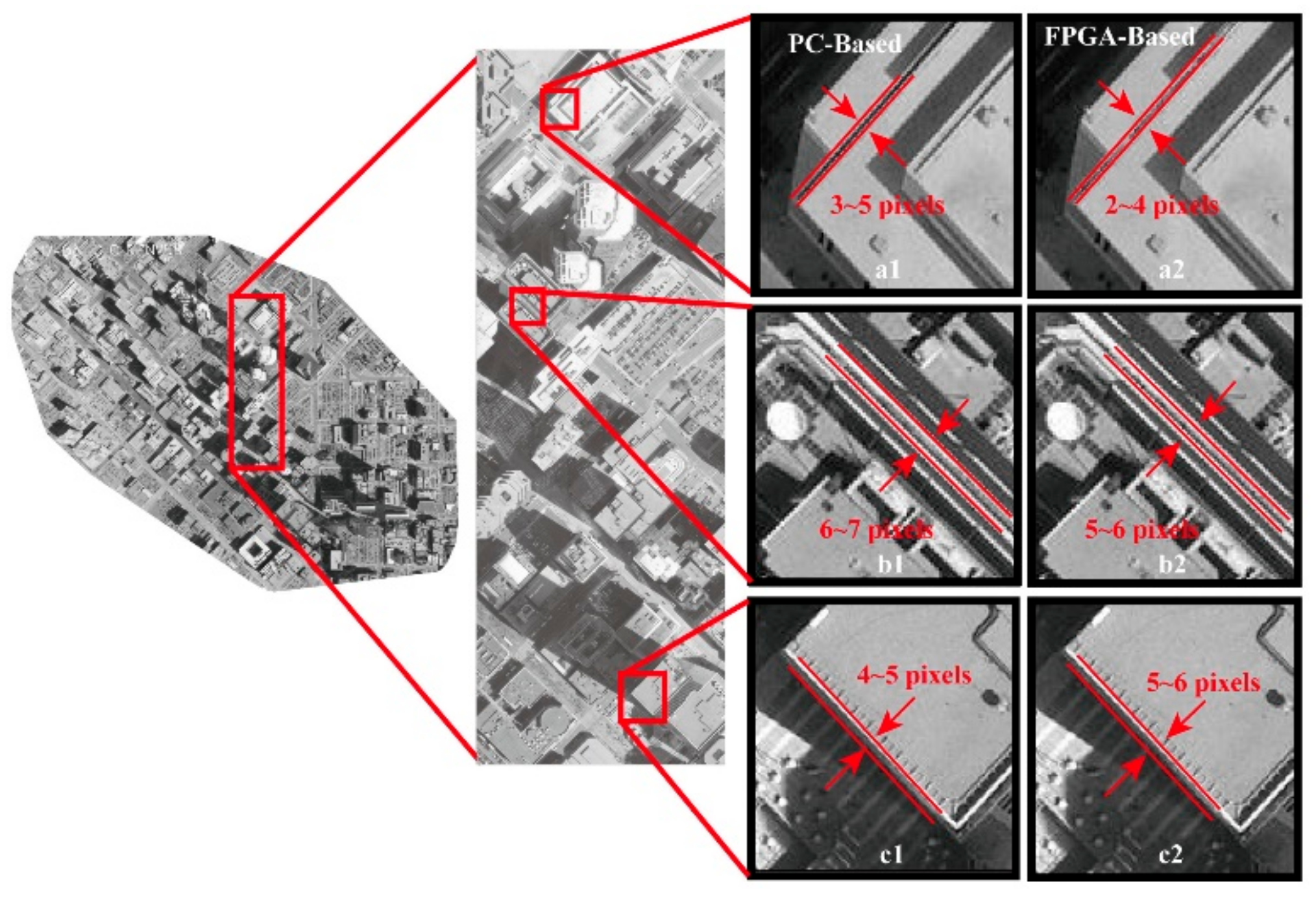

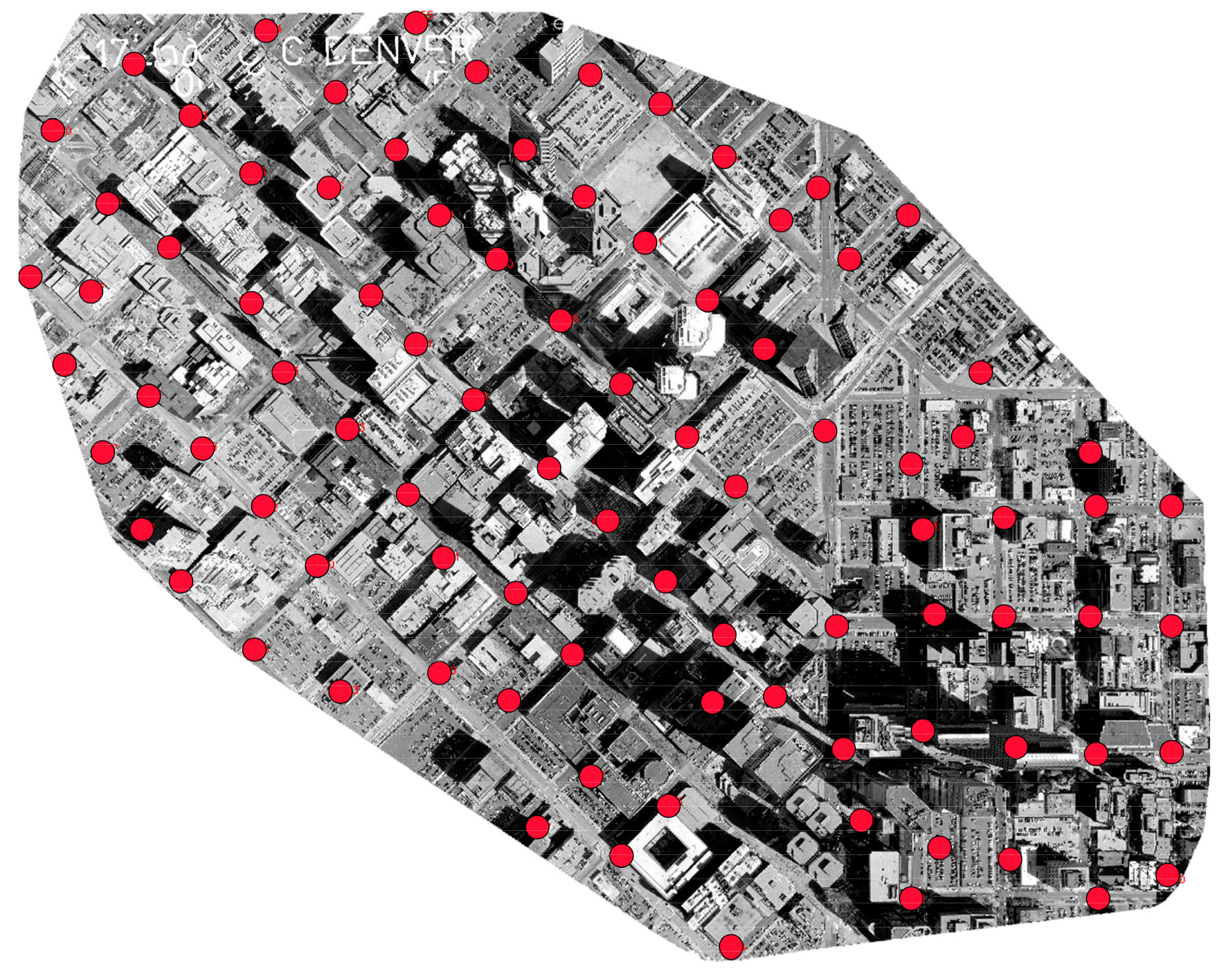

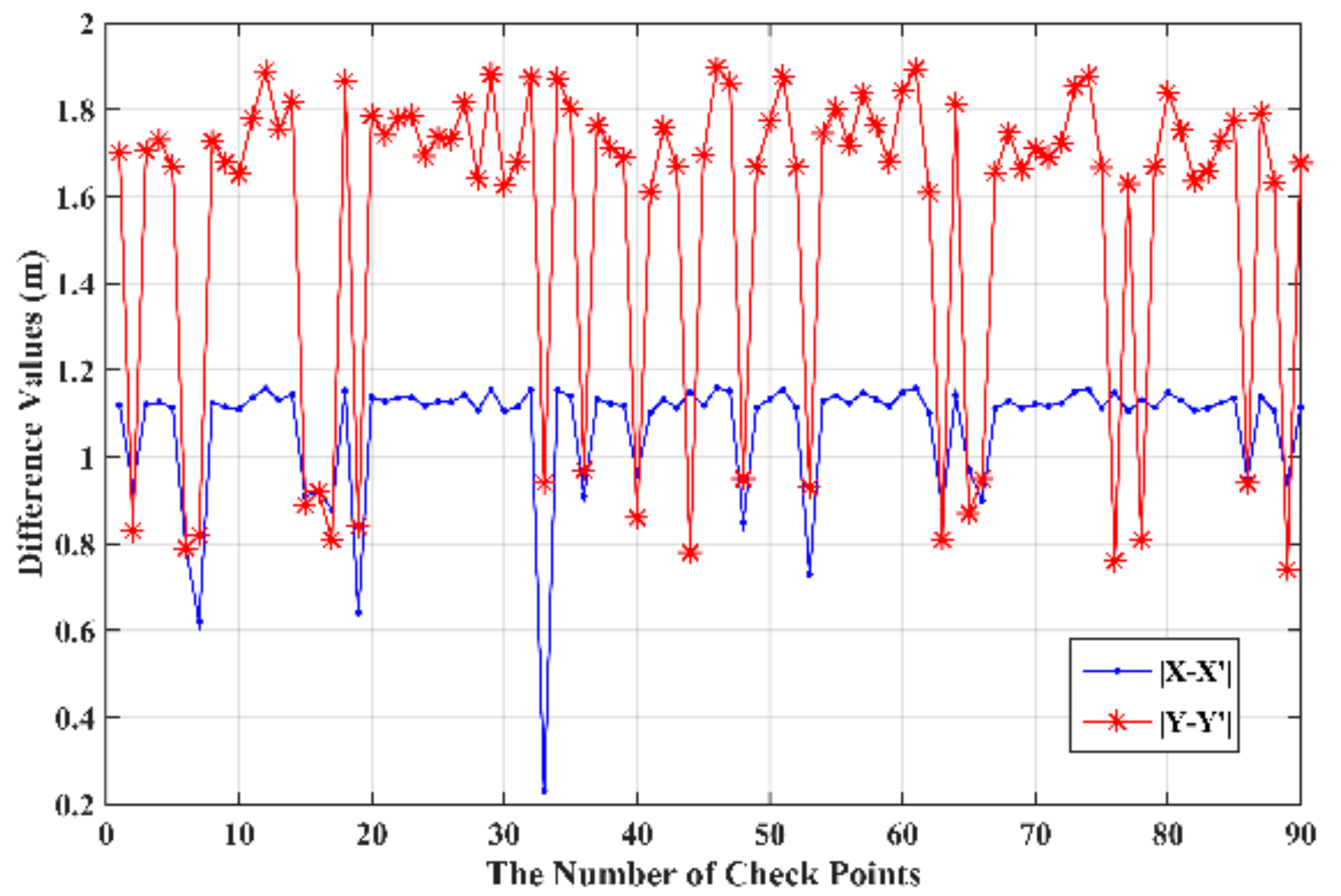

4.2. Error Analysis

4.3. Processing Speed Comparison

4.4. Resource Consumption

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Known Parameters | First Study Area | Second Study Area |
|---|---|---|
| x0 | 0.002 | −0.004 |
| y0 | −0.004 | 0.000 |
| f (mm) | 153.022 | 152.8204 |
| XS (m) | 3,143,040.5560 | 543,427.1886 |
| YS (m) | 1,696,520.9258 | 3,744,740.3247 |
| ZS (m) | 9072.2729 | 6743.2730 |
| ω (rad) | −0.02985539 | 0.63985182 |
| φ (rad) | −0.00160606 | −0.65999005 |
| κ (rad) | −1.55385318 | 0.86709830 |
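The three angles in the table enter the collinearity equations through a rotation matrix. The sketch below builds that matrix under an assumed φ-ω-κ rotation order (φ about the Y axis first), a convention common in photogrammetry textbooks. The convention actually used by the authors is not stated in this section, and `rotation_matrix` is a hypothetical helper name.

```python
import math


def rotation_matrix(phi, omega, kappa):
    """Return R = R_phi * R_omega * R_kappa as rows
    (a1, a2, a3), (b1, b2, b3), (c1, c2, c3).

    Assumption: phi-omega-kappa order with phi about the Y axis.
    """
    sp, cp = math.sin(phi), math.cos(phi)
    so, co = math.sin(omega), math.cos(omega)
    sk, ck = math.sin(kappa), math.cos(kappa)
    return [
        [cp * ck - sp * so * sk, -cp * sk - sp * so * ck, -sp * co],
        [co * sk, co * ck, -so],
        [sp * ck + cp * so * sk, -sp * sk + cp * so * ck, cp * co],
    ]


def max_orthogonality_error(R):
    """Largest deviation of R * R^T from the identity matrix."""
    worst = 0.0
    for i in range(3):
        for j in range(3):
            dot = sum(R[i][k] * R[j][k] for k in range(3))
            worst = max(worst, abs(dot - (1.0 if i == j else 0.0)))
    return worst


if __name__ == "__main__":
    # Angles of the first study area, taken from the table (radians).
    R = rotation_matrix(phi=-0.00160606, omega=-0.02985539, kappa=-1.55385318)
    for row in R:
        print(row)
    print("orthogonality error:", max_orthogonality_error(R))
```

The orthogonality check is a cheap sanity test for any fixed-point or hardware version of the same computation: a valid rotation matrix must satisfy R·Rᵀ = I regardless of the angle convention.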

| # | i (first study area) | j (first study area) | u (first study area) | v (first study area) | i (second study area) | j (second study area) | u (second study area) | v (second study area) |
|---|---|---|---|---|---|---|---|---|
| FP1 | 683.403 | 881.001 | −196.100 | 191.150 | 87.500 | 88.501 | −106.000 | 106.000 |
| FP2 | 15,820.521 | 835.103 | 182.325 | 192.300 | 2208.499 | 83.501 | 105.999 | 105.994 |
| FP3 | 15,868.602 | 15,970.971 | 183.525 | −186.075 | 2213.503 | 2204.504 | 105.998 | −105.999 |
| FP4 | 730.452 | 16,019.980 | −194.925 | −187.300 | 92.500 | 2209.501 | −106.008 | −105.998 |
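The four point pairs in each half of the table are enough to estimate a six-parameter affine transformation by least squares. The sketch below does this for the second study area, assuming that (u, v) are photo coordinates and (i, j) are scanning coordinates, and that the model is i = c0 + c1·u + c2·v (likewise for j). These roles, the model form, and the helper names `solve3` and `fit_affine` are assumptions for illustration, not the authors' implementation.

```python
def solve3(A, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    n = 3
    for k in range(n):
        p = max(range(k, n), key=lambda r: abs(M[r][k]))
        M[k], M[p] = M[p], M[k]
        for r in range(k + 1, n):
            factor = M[r][k] / M[k][k]
            for c in range(k, n + 1):
                M[r][c] -= factor * M[k][c]
    x = [0.0] * n
    for k in reversed(range(n)):
        s = sum(M[k][c] * x[c] for c in range(k + 1, n))
        x[k] = (M[k][n] - s) / M[k][k]
    return x


def fit_affine(uv, target):
    """Least-squares fit of target = c0 + c1*u + c2*v via the normal equations."""
    rows = [(1.0, u, v) for u, v in uv]
    N = [[sum(r[a] * r[b] for r in rows) for b in range(3)] for a in range(3)]
    t = [sum(r[a] * y for r, y in zip(rows, target)) for a in range(3)]
    return solve3(N, t)


# Second study area, values copied from the table.
uv = [(-106.000, 106.000), (105.999, 105.994),
      (105.998, -105.999), (-106.008, -105.998)]
i_obs = [87.500, 2208.499, 2213.503, 92.500]
j_obs = [88.501, 83.501, 2204.504, 2209.501]

coef_i = fit_affine(uv, i_obs)
coef_j = fit_affine(uv, j_obs)


def predict(coef, u, v):
    return coef[0] + coef[1] * u + coef[2] * v


max_residual = max(
    max(abs(predict(coef_i, u, v) - i), abs(predict(coef_j, u, v) - j))
    for (u, v), i, j in zip(uv, i_obs, j_obs)
)

if __name__ == "__main__":
    print("i coefficients:", coef_i)
    print("j coefficients:", coef_j)
    print("max residual:", max_residual)
```

With four points and three unknowns per coordinate there is one redundant observation per axis, so the residual gives a small consistency check on the measured points.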

| # | Max | Min | Mean | Standard Deviation |
|---|---|---|---|---|
| X coordinates | 1.16 m | 0.23 m | 1.07 m | 0.14 m |
| Y coordinates | 1.89 m | 0.74 m | 1.55 m | 0.38 m |

| # | Name of Logic Unit | Utilization Ratio (%) |
|---|---|---|
| Logic unit resource | Register | 34 |
| Distribution of logic unit | Flip Flop | 12 |
| | LUT | 27 |
| | LUT-FF Pairs | 58 |
| | Control Sets | 2 |
| Input and output (IO) | IOs | 78 |
| | IOBs | 54 |

| # | Name of Logic Unit | Utilization Ratio (%) |
|---|---|---|
| Use ratio of logic unit | Register | 24 |
| Distribution of logic unit | Flip Flop | 17 |
| | LUT | 56 |
| | LUT-FF Pairs | 64 |
| | Control Sets | 7 |
| Input and output (IO) | IOs | 72 |
| | IOBs | 65 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhou, G.; Zhang, R.; Liu, N.; Huang, J.; Zhou, X. On-Board Ortho-Rectification for Images Based on an FPGA. Remote Sens. 2017, 9, 874. https://doi.org/10.3390/rs9090874
