2.1. Convolutional Neural Network

The architecture of the CNN model used is shown in Figure 2. We implemented the CNN using the Caffe deep learning framework [24]. A CNN is usually composed of convolutional layers and pooling layers (denoted as Conv and pool here). These layers help the network extract hierarchical features from the original inputs and are followed by several fully connected layers (denoted as FC here) that perform the classification.

Considering a CNN with $K$ layers, we denote the output of the $k$th layer as $H_k$, where $k \in \{1, \dots, K\}$, and the input data are denoted as $H_0$. In each layer, there are two sets of parameters to train: the weight matrix $W_k$, which connects the previous layer $H_{k-1}$ with $H_k$, and the bias vector $b_k$.

As shown in Figure 2, the input data is usually followed by a convolution layer. The convolution layer first performs a convolution operation with the kernels $W_k$. Then, we add the bias vector $b_k$ to the resulting feature maps. After obtaining the features, a pointwise non-linear activation operation $g(\cdot)$ is usually performed. The size of the convolution kernels in our model is 3 × 3. A pooling layer (e.g., taking the average or maximum of adjacent locations) follows, which uses non-overlapping square windows per feature map to select the dominant features. The pooling layer can reduce the number of network parameters and improve robustness to translation. This layer offers invariance by reducing the resolution of the feature maps, and each pooling layer corresponds to the previous convolutional layer. Each neuron in the pooling layer combines a small N × 1 patch of the convolution layer. The most common pooling operation is max pooling, which we chose for our experiments. The entire process can be formulated as

$$H_k = \mathrm{pool}\left(g\left(H_{k-1} * W_k + b_k\right)\right)$$

where $*$ denotes the convolution operator and pool denotes the pooling operator.
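The convolution, bias, activation and pooling steps described above can be sketched for a single input channel and a single kernel. This is only a minimal illustration in plain Python, not the Caffe implementation used in this work; the function names are hypothetical, the "convolution" is computed as a cross-correlation without padding (as most CNN frameworks do), and the 2 × 2 pooling window is an assumed value.

```python
def conv2d_valid(image, kernel):
    """2D cross-correlation without padding ('valid' mode)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[r + a][c + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]


def max_pool(fmap, size):
    """Max pooling over non-overlapping size x size windows.

    Trailing rows/columns that do not fill a whole window are dropped.
    """
    rows = len(fmap) // size
    cols = len(fmap[0]) // size
    return [[max(fmap[r * size + a][c * size + b]
                 for a in range(size) for b in range(size))
             for c in range(cols)]
            for r in range(rows)]


def conv_block(image, kernel, bias, pool_size=2):
    """pool(relu(conv(image, kernel) + bias)) for one feature map."""
    fmap = conv2d_valid(image, kernel)
    activated = [[max(0.0, v + bias) for v in row] for row in fmap]
    return max_pool(activated, pool_size)


# A 6 x 6 input with a 3 x 3 kernel gives a 4 x 4 feature map,
# which 2 x 2 max pooling reduces to 2 x 2.
image = [[r * 6 + c for c in range(6)] for r in range(6)]
kernel = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]
pooled = conv_block(image, kernel, bias=-100)
```

Stacking several such blocks, each fed with the output of the previous one, gives the hierarchical feature extractor described in the text.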

The hierarchical feature extraction architecture is formed by stacking several convolution layers and pooling layers one by one. Then, we combine the resultant features with the 1D features using the FC layer. A fully connected layer takes all neurons in the previous layer and connects them to every single neuron it contains. It first processes its inputs with a linear transformation by the weight $W_k$ and the bias vector $b_k$, and then the pointwise non-linear activation is performed as follows:

$$H_k = g\left(W_k H_{k-1} + b_k\right)$$

For the non-linear activation operation $g(\cdot)$, we use rectified linear units. Unlike binary units, the rectified linear units (ReLU) [25] preserve information about the relative intensities as information travels through multiple layers of feature detectors. It uses a fast approximation where the sampled value of the rectified linear unit is not constrained to be an integer. The function can be written as

$$g(x) = \max(0, x)$$
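As a small illustration of the fully connected layer followed by the ReLU activation, the following sketch applies an affine map and then clips negative values to zero. The function names and the row-per-neuron weight layout are assumptions made for this example only.

```python
def relu(values):
    """Pointwise rectified linear unit: max(0, x) for each entry."""
    return [max(0.0, v) for v in values]


def fully_connected(inputs, weights, biases):
    """Affine map W x + b followed by ReLU.

    weights[j] holds the weights feeding output neuron j, so every
    output neuron is connected to every input neuron.
    """
    pre_activation = [sum(w * x for w, x in zip(row, inputs)) + b
                      for row, b in zip(weights, biases)]
    return relu(pre_activation)


# Two inputs, two output neurons; the first pre-activation is negative
# and is therefore set to zero by the ReLU.
outputs = fully_connected([1.0, 2.0],
                          [[1.0, -1.0], [0.5, 0.5]],
                          [0.0, 1.0])
```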

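Training deep neural networks is complicated by the fact that the distribution of each layer’s inputs changes during training. Batch Normalization (BN) [26] allows us to use much higher learning rates, to be less careful about initialization, and to reduce overfitting. Consider a mini-batch $B = \{x_1, \dots, x_m\}$ of size $m$. The BN transform is performed as follows:

$$\mu_B = \frac{1}{m}\sum_{i=1}^{m} x_i, \qquad \sigma_B^2 = \frac{1}{m}\sum_{i=1}^{m} \left(x_i - \mu_B\right)^2$$

$$\hat{x}_i = \frac{x_i - \mu_B}{\sqrt{\sigma_B^2 + \epsilon}}, \qquad y_i = \gamma \hat{x}_i + \beta$$

where $\gamma$ and $\beta$ are parameters to be learned and $\epsilon$ is a constant added for numerical stability.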
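The BN transform for a single activation over one mini-batch can be sketched as follows. This is a training-time illustration only (no running statistics for inference); the function name and the default values of `gamma`, `beta` and `eps` are assumptions for the example.

```python
import math


def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize a mini-batch of scalars, then scale by gamma and shift by beta."""
    m = len(batch)
    mean = sum(batch) / m
    # Biased (divide-by-m) variance of the mini-batch.
    var = sum((x - mean) ** 2 for x in batch) / m
    normalized = [(x - mean) / math.sqrt(var + eps) for x in batch]
    return [gamma * x_hat + beta for x_hat in normalized]


normalized = batch_norm([1.0, 2.0, 3.0, 4.0])
shifted = batch_norm([1.0, 2.0, 3.0, 4.0], gamma=2.0, beta=3.0)
```

With `gamma = 1` and `beta = 0` the output has approximately zero mean and unit variance; the learned parameters let the network undo the normalization where that is useful.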

The last classification layer is always a softmax layer, with the number of neurons equaling the number of classes to be classified. For binary classification, a logistic regression layer with a single neuron can be used instead, in which case the activation value represents the probability that the input belongs to the positive class. The softmax layer guarantees that the output is a probability distribution, as the value for each label is between 0 and 1 and all the values add up to 1. The multi-label problem is then seen as a regression on the desired output label vectors.

If there is a set $T$ of all possible classes, for the $n$ training samples $i = 1, \dots, n$, the loss function $L$ quantifies the misclassification by comparing the target label vectors $y^{(i)}$ and the predicted label vectors $\hat{y}^{(i)}$. We use the common cross-entropy loss in this work, which is defined as

$$L = -\frac{1}{n}\sum_{i=1}^{n}\sum_{t=1}^{|T|} y_t^{(i)} \log \hat{y}_t^{(i)}$$

The cross-entropy loss is numerically stable when coupled with the softmax normalization [27] and has a fast convergence rate when training neural networks.
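The numerical stability gained by coupling the cross-entropy loss with the softmax normalization can be illustrated with a log-softmax that subtracts the largest score before exponentiating. The sketch below is an assumption-laden example rather than the loss layer used in this work: the function names are hypothetical, targets are one-hot vectors, and the loss is averaged over the samples.

```python
import math


def log_softmax(logits):
    """Log of the softmax probabilities, computed without overflow."""
    shift = max(logits)  # subtracting the maximum keeps exp() bounded by 1
    log_sum = math.log(sum(math.exp(z - shift) for z in logits))
    return [z - shift - log_sum for z in logits]


def cross_entropy(logit_rows, target_rows):
    """Mean cross-entropy between target vectors and softmax(logits)."""
    total = 0.0
    for logits, targets in zip(logit_rows, target_rows):
        log_probs = log_softmax(logits)
        total -= sum(t * lp for t, lp in zip(targets, log_probs))
    return total / len(logit_rows)


# Equal scores for two classes give a loss of log(2); a very large score
# for the correct class gives a loss near zero without overflowing.
uniform_loss = cross_entropy([[0.0, 0.0]], [[1.0, 0.0]])
confident_loss = cross_entropy([[1000.0, 0.0]], [[1.0, 0.0]])
```

Computing `math.exp(1000.0)` directly would raise an overflow error, which is why the softmax and the logarithm are evaluated together.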

Once the loss function is defined, the model parameters that minimize the loss must be found. The model parameters are composed of the weights $W$ and the biases $b$. The back propagation algorithm is widely used to optimize these parameters. It propagates the prediction error from the last layer to the first layer and optimizes the parameters according to the propagated error at each layer. The derivatives of the loss with respect to the parameters $W$ and $b$ can be described as $\partial L / \partial W$ and $\partial L / \partial b$, respectively. The loss function can then be minimized using the gradients of the parameters.
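To make the role of the gradients concrete, the following sketch computes the loss and its derivatives with respect to the weights and biases for a single softmax layer and applies one gradient descent step. It covers only the output layer, not the full back propagation through convolution and pooling layers, and the function names and the learning rate are assumptions for this example.

```python
import math


def softmax(logits):
    shift = max(logits)
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def loss_and_gradients(W, b, x, y):
    """Cross-entropy loss of softmax(W x + b) and its gradients dL/dW, dL/db."""
    logits = [sum(w * xi for w, xi in zip(row, x)) + bj
              for row, bj in zip(W, b)]
    probs = softmax(logits)
    loss = -sum(t * math.log(p) for t, p in zip(y, probs) if t > 0)
    delta = [p - t for p, t in zip(probs, y)]       # error at the output layer
    grad_W = [[d * xi for xi in x] for d in delta]  # dL/dW = delta x^T
    grad_b = delta                                  # dL/db = delta
    return loss, grad_W, grad_b


def sgd_step(W, b, x, y, learning_rate=0.1):
    """One gradient descent update; returns new parameters and the old loss."""
    loss, grad_W, grad_b = loss_and_gradients(W, b, x, y)
    new_W = [[w - learning_rate * g for w, g in zip(row, grad_row)]
             for row, grad_row in zip(W, grad_W)]
    new_b = [bj - learning_rate * g for bj, g in zip(b, grad_b)]
    return new_W, new_b, loss


W0 = [[0.0, 0.0], [0.0, 0.0]]
b0 = [0.0, 0.0]
x, y = [1.0, 2.0], [1.0, 0.0]
W1, b1, loss_before = sgd_step(W0, b0, x, y)
loss_after, _, _ = loss_and_gradients(W1, b1, x, y)
```

In a multi-layer network the same output error `delta` is propagated backwards through each layer to obtain the gradients of the earlier weights and biases.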

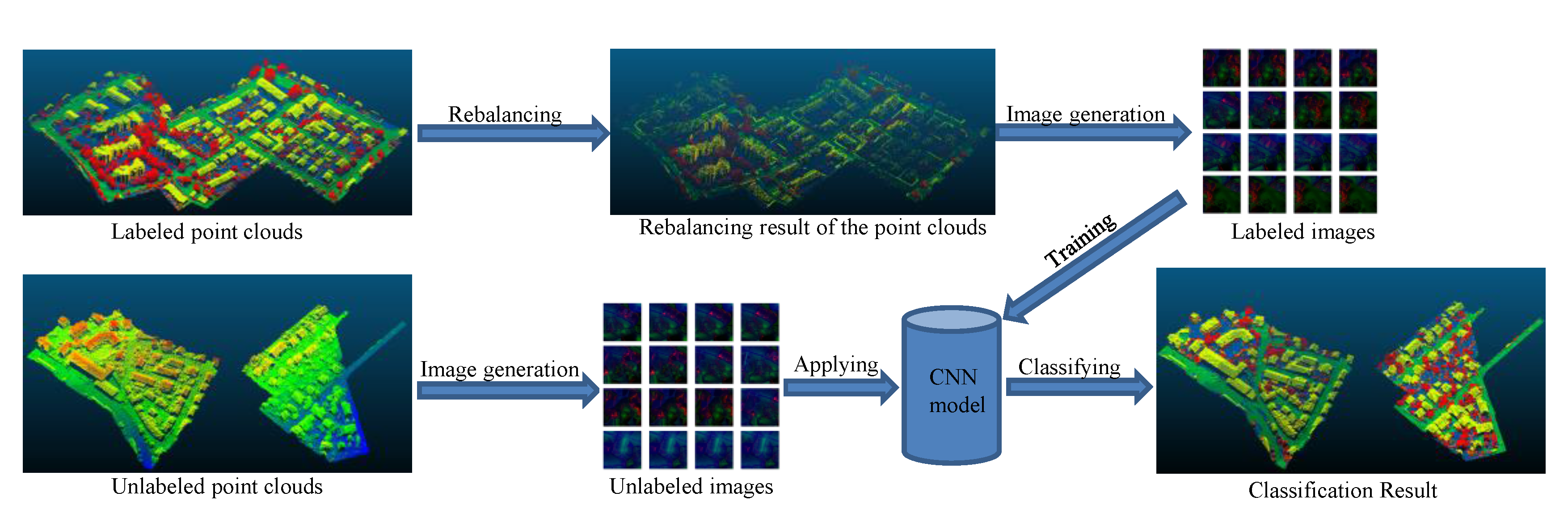

2.2. Feature Image Generation

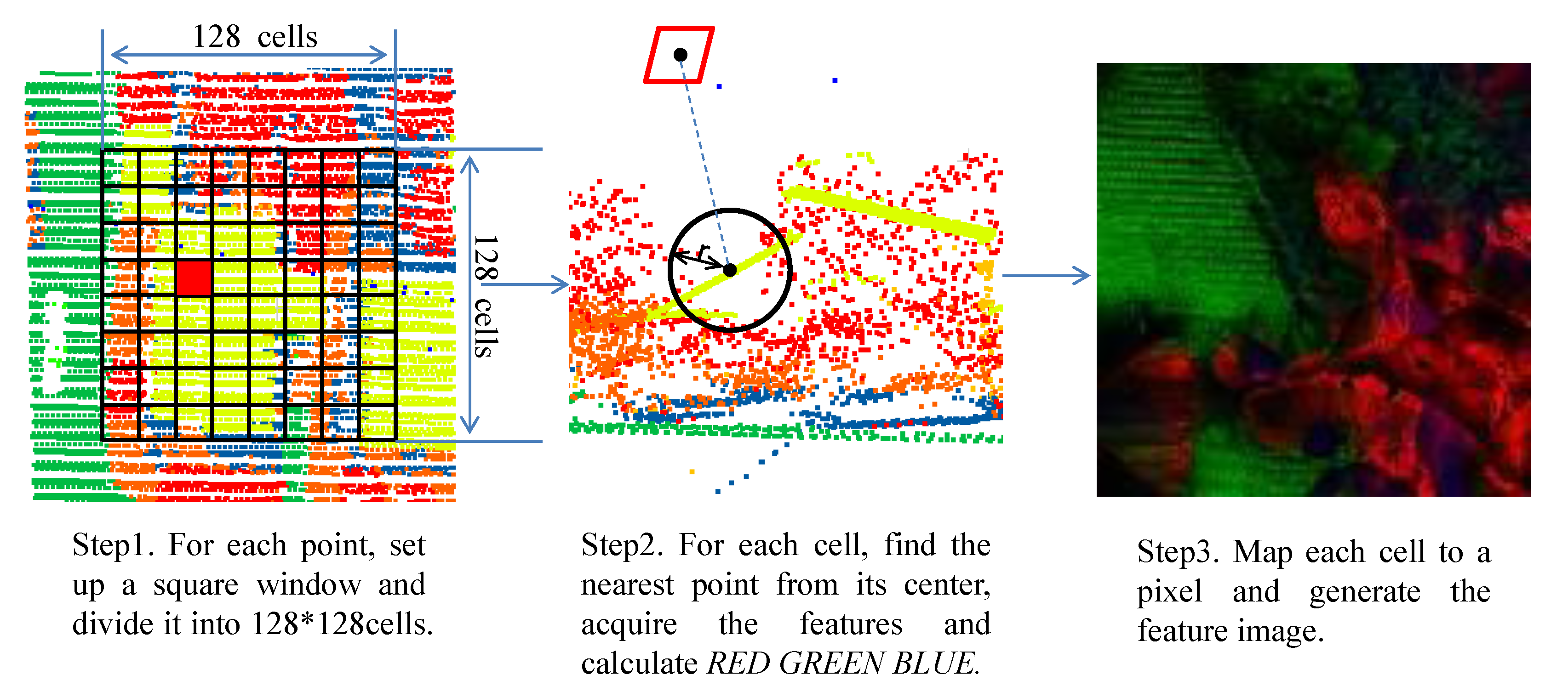

To match the CNN with our ALS data, a feature image-generation method was proposed. In Hu’s method, each point $P_k$ and its surrounding points within a “square window” were divided into 128 × 128 cells. The height relations among all the points within each cell are obtained and used to generate images, which are then used to train the CNN model. This area-based feature image-generation method performed well in the DTM-generation task. However, the method may have problems when facing the multi-object semantic labeling task, because objects such as trees and roofs are difficult to distinguish. We therefore propose a single point-based feature image-generation method.

Like Hu’s method, we set up a square window for each ALS point $P_k$ (coordinates are $X_k$, $Y_k$ and $Z_k$) and divide the window into 128 × 128 cells. Based on the ALS dataset we choose the width of the cell to be

. For each cell, instead of using the height relations between all points, we choose a unique point and calculate point-based features. To find this unique point, we first need to calculate the center coordinates of the cell. The coordinates $X_{ij}$, $Y_{ij}$ and $Z_{ij}$ are calculated as follows:

where $i$ denotes the row number, $j$ denotes the column number and $w$ is the width of the cell.
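One way to lay out the window and locate the cell centers is sketched below. The conventions are assumptions made for this example and are not stated in the text: the window is centered on the point, rows and columns are counted from 0, columns grow with x, rows grow with y, and only the horizontal position of the center is computed. The function names and the brute-force search are hypothetical; a spatial index would be used for real point clouds.

```python
import math

GRID = 128  # cells per side of the square window, as in the text


def cell_center(point_xy, row, col, cell_width, grid=GRID):
    """Horizontal center (x, y) of cell (row, col) in a window centered on point_xy."""
    x0, y0 = point_xy
    half = grid / 2.0
    x = x0 + (col + 0.5 - half) * cell_width
    y = y0 + (row + 0.5 - half) * cell_width
    return x, y


def nearest_point(center_xy, points):
    """Point (x, y, z) with the smallest horizontal distance to the cell center.

    Using the horizontal rather than the 3D distance is an assumption.
    """
    cx, cy = center_xy
    return min(points, key=lambda p: math.hypot(p[0] - cx, p[1] - cy))


corner = cell_center((0.0, 0.0), 0, 0, 1.0)
middle = cell_center((10.0, 20.0), 64, 64, 0.5)
chosen = nearest_point((0.0, 0.0),
                       [(1.0, 1.0, 5.0), (0.2, -0.1, 7.0), (3.0, 0.0, 1.0)])
```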

The unique point is the nearest point from the center. We calculate three types of features based on this point, which are the local geometric features, global geometric features and full-waveform LiDAR features as follows:

- (1) We calculate the local geometric features. All the features are extracted in a sphere of radius $r$. For a point $p$, based on its $n$ neighboring points $p_1, \dots, p_n$, we obtain a center of gravity $\bar{p}$. We then calculate the vector $M = (p_1 - \bar{p}, \dots, p_n - \bar{p})^T$. The variance–covariance matrix is written as

$$C = \frac{1}{n} M^T M$$

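The eigenvalues $\lambda_1 > \lambda_2 > \lambda_3$ are calculated from the matrix. Using these eigenvalues, we acquire additional features [6,28] as follows:

$$P_\lambda = \frac{\lambda_2 - \lambda_3}{\lambda_1}, \qquad S_\lambda = \frac{\lambda_3}{\lambda_1}$$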

Chehata [6] confirmed that $\lambda_3$ is the most important eigenvalue. Planar objects such as roofs and roads have low values of $\lambda_3$, while non-planar objects have high values. Therefore, for planar objects the planarity $P_\lambda$ takes high values. In contrast, the sphericity $S_\lambda$ gives high values for an isotropically distributed 3D neighborhood. These two features help to distinguish planar objects such as roofs, façades, and roads from objects such as vegetation and cars.

The planarity of the local neighborhood is also a local geometric feature that helps discriminate buildings from vegetation. The local plane $\Pi_P$ is estimated using a robust M-estimator with norm $L_{1.2}$ [29]. For each point, we obtain a normal vector from the plane. Then, the angle between the normal vector and the vertical direction can be calculated. We can obtain several angles from the neighboring points within a sphere of radius $r$. The variance of these angles helps us to discriminate planar surfaces such as roads from vegetation.

- (2) We extract the global geometric features. We generate the DTM for the feature height above DTM based on robust filtering [30], which is implemented in the commercial software package SCOP++. The height above DTM represents the global distribution for a point and helps to classify the data. Based on the analysis by Niemeyer [15], this feature is by far the most important since it is the strongest and most discernible feature for all the classes and relations. For instance, this feature can distinguish whether a pair of related points lies on a roof or at road level.

- (3) The full-waveform LiDAR features are also needed [31]. The chosen echo intensity values are high on building roofs, on gravel roads, and on cars, while low values occur on asphalt roads and tar streets [6], which makes these objects easy to distinguish.
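The eigenvalue-based local geometric features in item (1) can be sketched as follows. The example builds the variance–covariance matrix of a neighborhood, computes the eigenvalues of the symmetric 3 × 3 matrix in closed form, and derives planarity and sphericity. The definitions used here, planarity as (λ2 − λ3)/λ1 and sphericity as λ3/λ1, are the commonly used ones and are assumed rather than confirmed for this work; the function names are hypothetical.

```python
import math


def covariance_matrix(points):
    """3 x 3 variance-covariance matrix of a list of (x, y, z) points."""
    n = len(points)
    centroid = [sum(p[k] for p in points) / n for k in range(3)]
    centered = [[p[k] - centroid[k] for k in range(3)] for p in points]
    return [[sum(c[a] * c[b] for c in centered) / n for b in range(3)]
            for a in range(3)]


def symmetric_eigenvalues(m):
    """Eigenvalues of a symmetric 3 x 3 matrix, in descending order."""
    off = m[0][1] ** 2 + m[0][2] ** 2 + m[1][2] ** 2
    if off == 0.0:  # already diagonal
        return sorted((m[0][0], m[1][1], m[2][2]), reverse=True)
    q = (m[0][0] + m[1][1] + m[2][2]) / 3.0
    p = math.sqrt((sum((m[k][k] - q) ** 2 for k in range(3)) + 2.0 * off) / 6.0)
    b = [[(m[r][c] - (q if r == c else 0.0)) / p for c in range(3)]
         for r in range(3)]
    det = (b[0][0] * (b[1][1] * b[2][2] - b[1][2] * b[2][1])
           - b[0][1] * (b[1][0] * b[2][2] - b[1][2] * b[2][0])
           + b[0][2] * (b[1][0] * b[2][1] - b[1][1] * b[2][0]))
    # Clamp to [-1, 1] to guard against rounding before acos.
    phi = math.acos(max(-1.0, min(1.0, det / 2.0))) / 3.0
    lam1 = q + 2.0 * p * math.cos(phi)
    lam3 = q + 2.0 * p * math.cos(phi + 2.0 * math.pi / 3.0)
    return [lam1, 3.0 * q - lam1 - lam3, lam3]


def planarity_sphericity(points):
    """Return (planarity, sphericity) of a neighborhood; (0, 0) if degenerate."""
    lam1, lam2, lam3 = symmetric_eigenvalues(covariance_matrix(points))
    if lam1 <= 0.0:
        return 0.0, 0.0
    return (lam2 - lam3) / lam1, lam3 / lam1


# Points on the tilted plane z = x versus points spread equally along all axes.
plane = [(float(x), float(y), float(x)) for x in range(3) for y in range(3)]
blob = [(1.0, 0.0, 0.0), (-1.0, 0.0, 0.0), (0.0, 1.0, 0.0),
        (0.0, -1.0, 0.0), (0.0, 0.0, 1.0), (0.0, 0.0, -1.0)]
plane_features = planarity_sphericity(plane)
blob_features = planarity_sphericity(blob)
```

The planar neighborhood has a sphericity close to zero, whereas the isotropic neighborhood has a sphericity of one and zero planarity, which matches the behavior described in the text.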

After calculating the features from the unique point of each cell, we then convert these features into three integers from 0 to 255 as follows:

Here, the two eigenvalue-based features (planarity and sphericity) are normalized between 0 and 1, the variance of the normal vector angle from the vertical direction is normalized between 0 and 1, the height above DTM is normalized between 0 and 1, and Intensity is the echo intensity value normalized between 0 and 255.

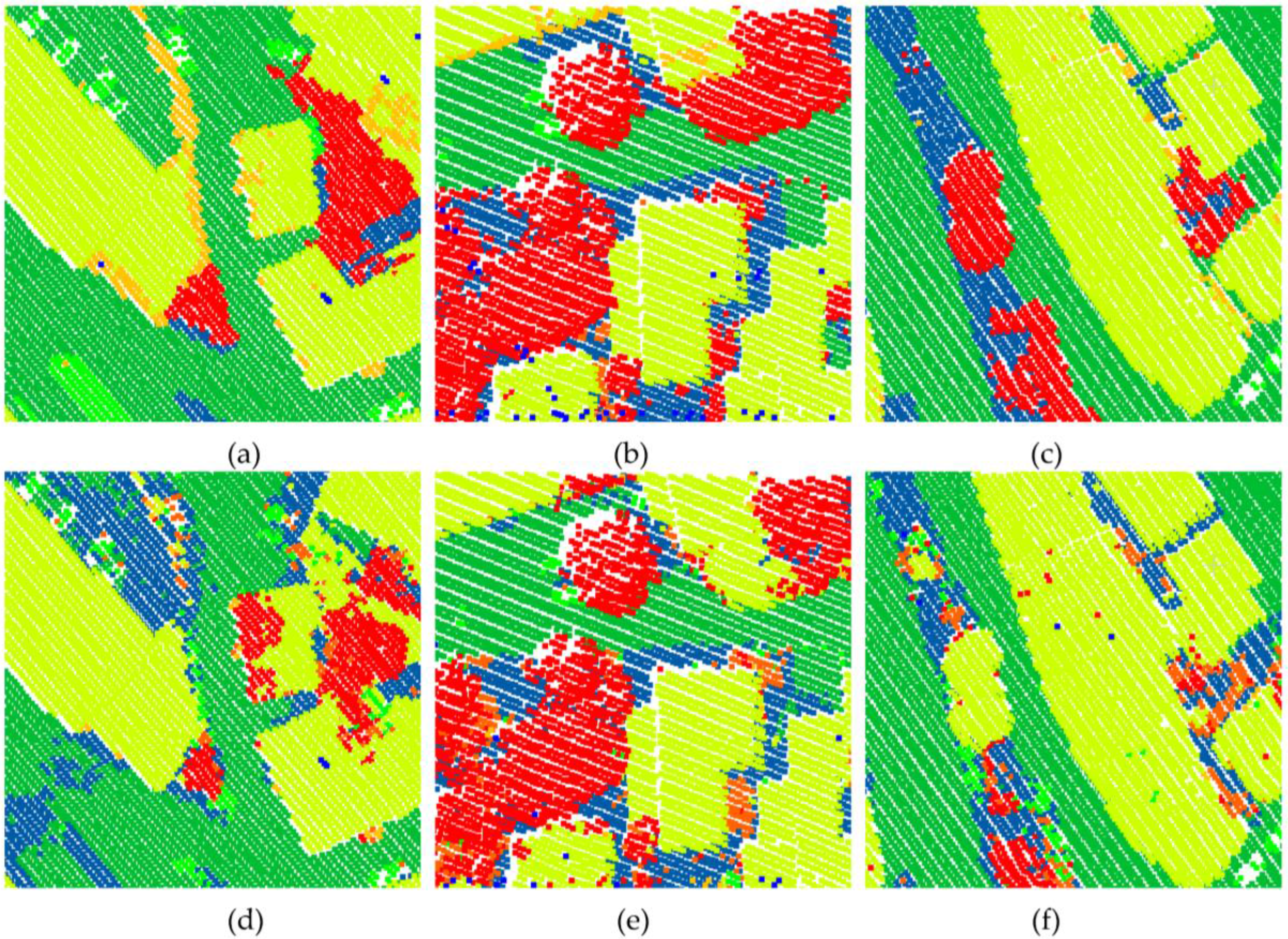

For each cell, the RED, GREEN and BLUE values are mapped to the red, green and blue channels of a pixel. The square window is thereby converted into a 128 × 128 image. The steps of the feature image generation are shown in Figure 3.
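The final assembly step, turning one color triple per cell into a 128 × 128 image, can be sketched as below. How the three channel values are computed from the features is deliberately left to a caller-supplied function, since this sketch does not assume any particular combination of features; `to_byte`, `build_feature_image` and `cell_colors` are hypothetical names.

```python
def to_byte(value):
    """Scale a feature normalized to [0, 1] to an integer in 0..255.

    Values outside [0, 1] are clamped.
    """
    return max(0, min(255, int(round(value * 255))))


def build_feature_image(cell_colors, grid=128):
    """Build a grid x grid image as nested lists of (red, green, blue) tuples.

    cell_colors(row, col) must return three integers in 0..255 for that cell.
    """
    return [[tuple(cell_colors(r, c)) for c in range(grid)]
            for r in range(grid)]


# Placeholder coloring: red encodes the row, green the column, blue is zero.
image = build_feature_image(lambda r, c: (r, c, 0))
```

The resulting nested list can be passed to any image library that accepts rows of RGB tuples before being fed to the CNN.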

The spatial context of the point is thus transferred into an image through the distribution of the points and their three types of features. The CNN model extracts high-level representations from these limited low-level feature images and performs well in the ALS point cloud semantic labeling task.