Static Human Detection and Scenario Recognition via Wearable Thermal Sensing System

Abstract

1. Introduction

- (1) It can detect static targets.

- (2) It is wearable, so it travels with the user.

- (3) It can help blind and visually impaired people perceive the human scenarios around them.

2. System Setup

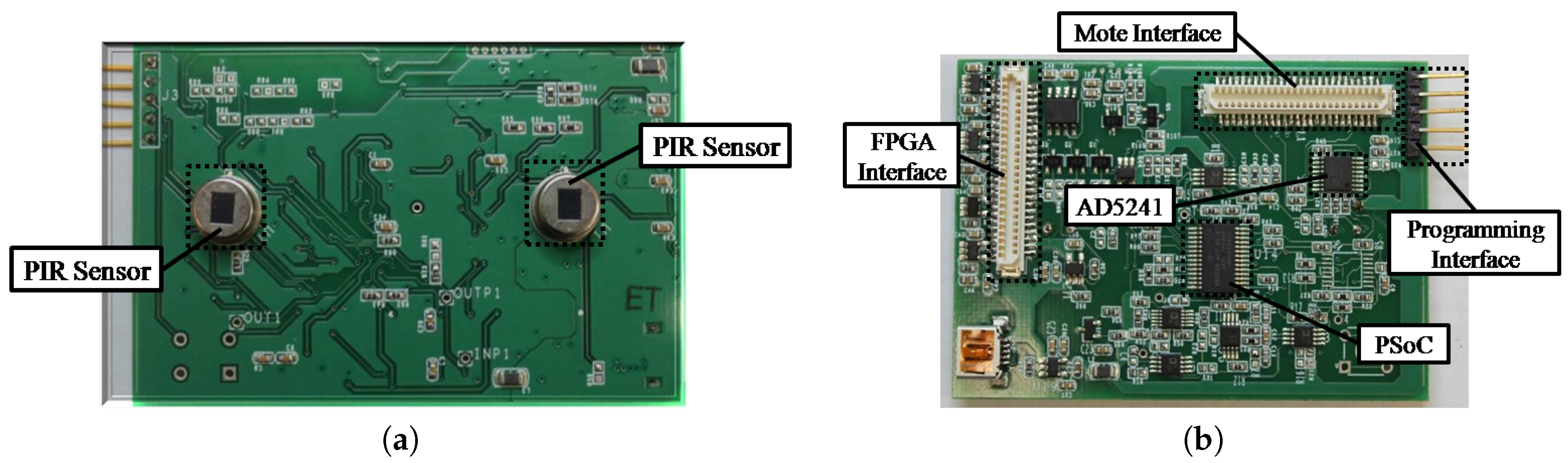

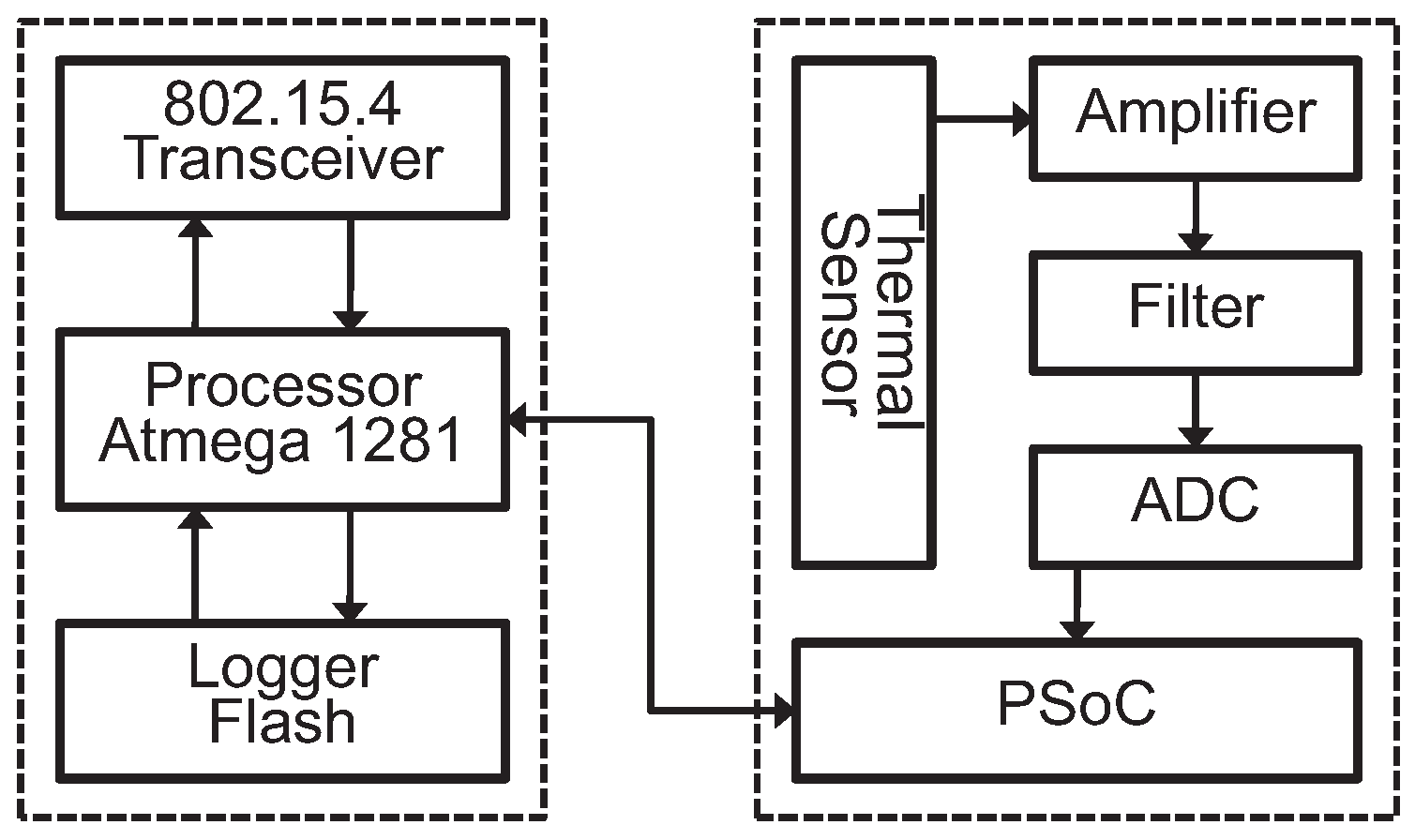

2.1. PIR Sensor Circuit Design

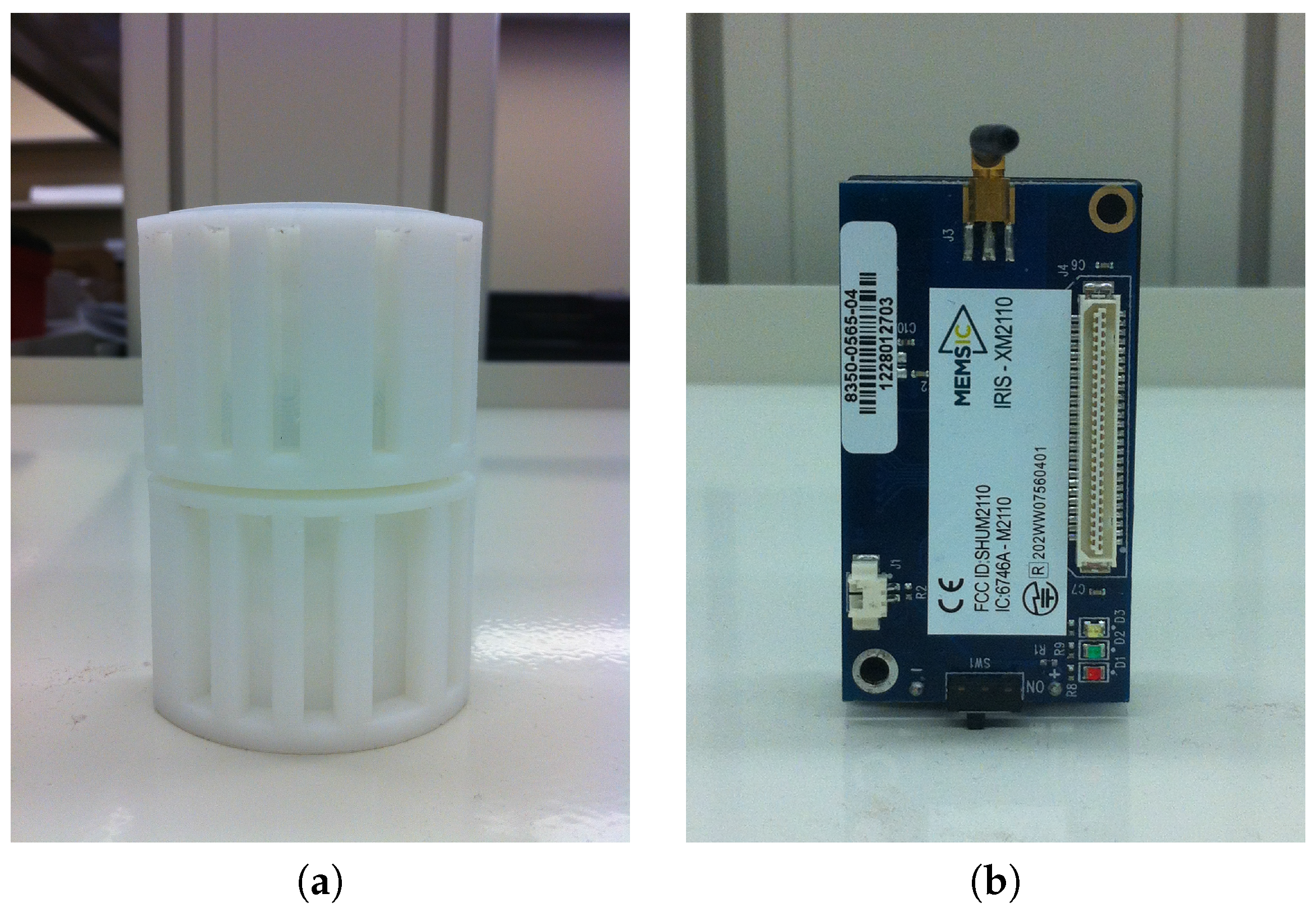

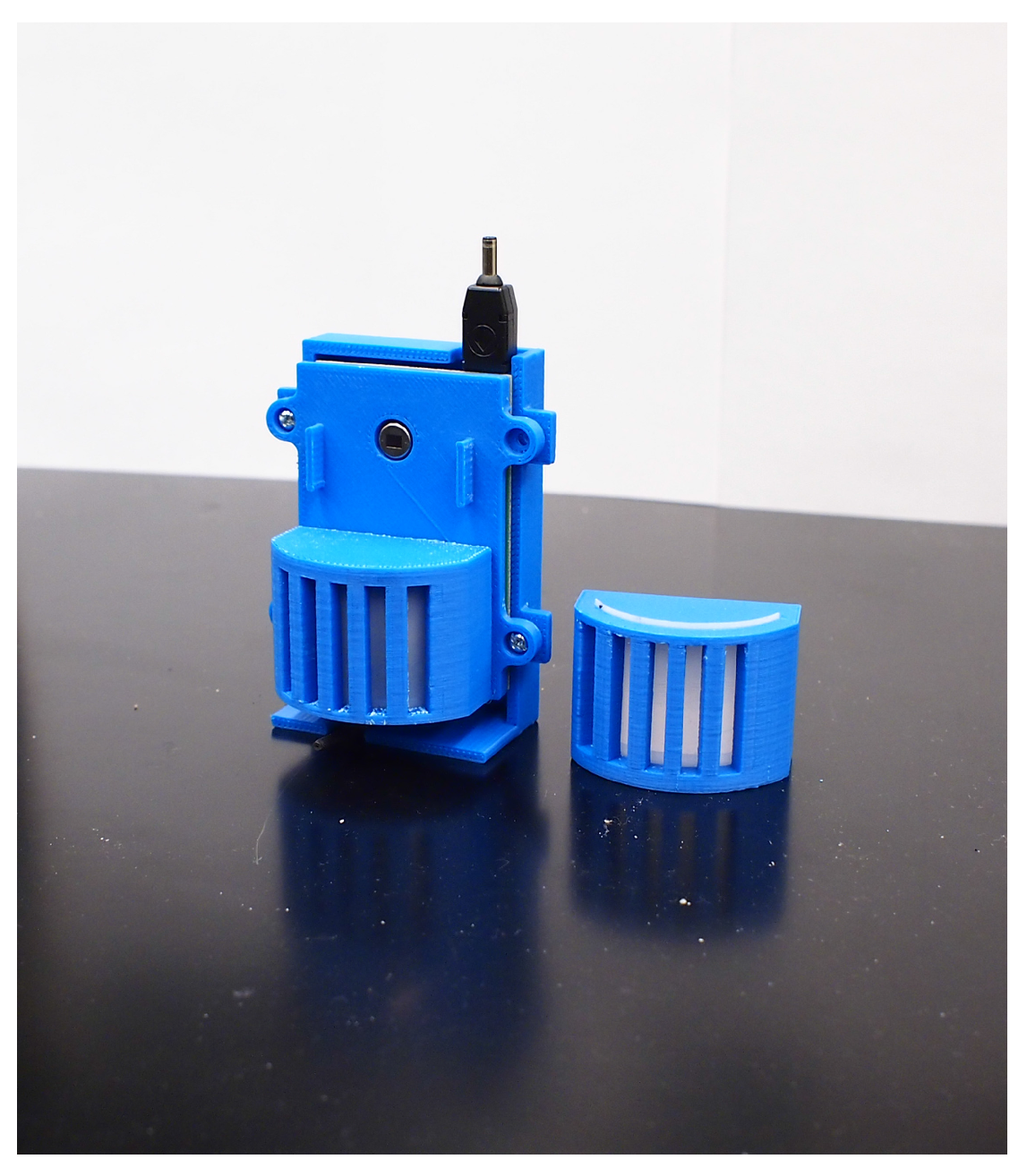

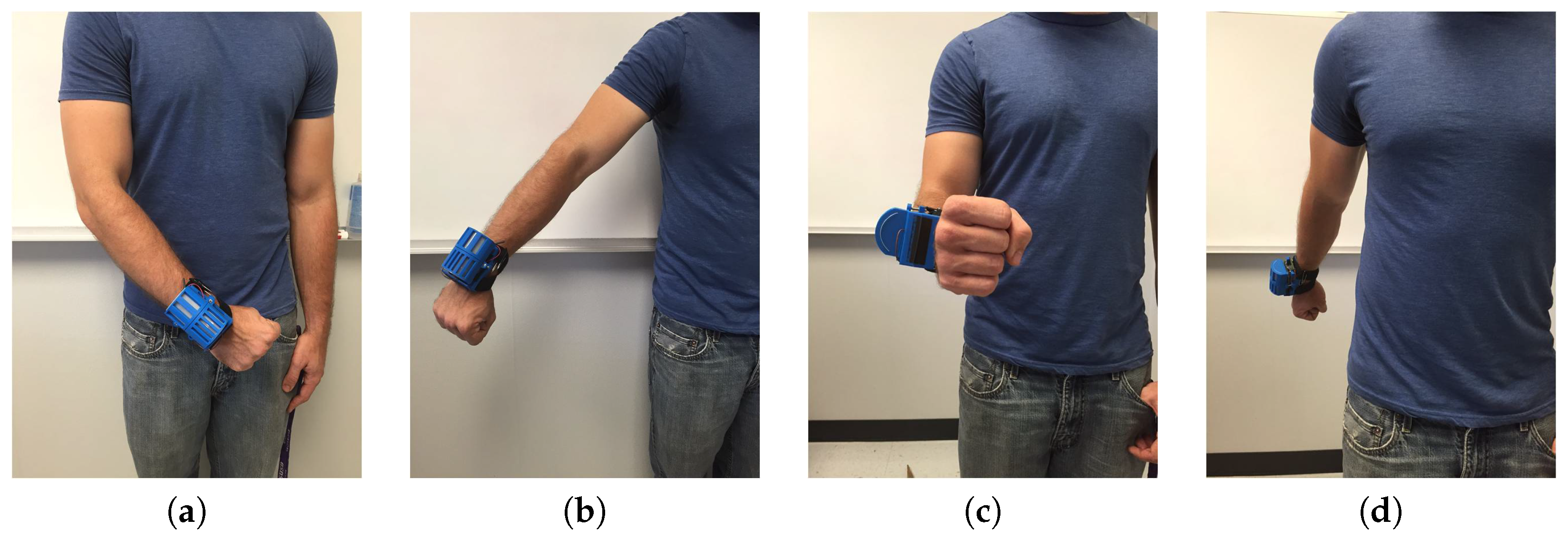

2.2. Wearable Sensor Node Design

2.3. Wireless Platform

2.4. Active Sensing Protocol

3. Wearable PIR Sensing and Statistical Feature Extraction

3.1. Wearable PIR Sensing for Static Targets

3.2. Wearable PIR Sensing for Scenario Perception

3.3. Buffon’s Needle-Based Target Detection
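This section names Buffon's needle as the basis of the detection step. For reference, the classical result is that a needle of length $l$ dropped at random onto a floor ruled with parallel lines a distance $d$ apart ($l \le d$) crosses a line with probability $P = 2l/(\pi d)$. The Python sketch below checks that formula by simulation. It illustrates only the textbook geometry; how the authors map needle, lines and crossings onto PIR detection regions and targets is not described here, so no such mapping is assumed. The function names are hypothetical.

```python
import math
import random


def buffon_probability(needle_len: float, line_gap: float) -> float:
    """Closed-form crossing probability for a short needle (needle_len <= line_gap)."""
    if not 0 < needle_len <= line_gap:
        raise ValueError("formula assumes 0 < needle_len <= line_gap")
    return 2.0 * needle_len / (math.pi * line_gap)


def buffon_monte_carlo(needle_len: float, line_gap: float, trials: int, seed: int = 0) -> float:
    """Estimate the crossing probability by dropping random needles."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        # Distance from the needle's centre to the nearest line, uniform on [0, d/2].
        centre_dist = rng.uniform(0.0, line_gap / 2.0)
        # Acute angle between the needle and the lines, uniform on [0, pi/2].
        angle = rng.uniform(0.0, math.pi / 2.0)
        # The needle crosses a line if its half-length projection reaches that line.
        if centre_dist <= (needle_len / 2.0) * math.sin(angle):
            hits += 1
    return hits / trials


if __name__ == "__main__":
    exact = buffon_probability(1.0, 2.0)
    approx = buffon_monte_carlo(1.0, 2.0, trials=200_000, seed=42)
    print(f"closed form: {exact:.4f}, Monte Carlo: {approx:.4f}")
```

With $l = 1$ and $d = 2$ the closed form is $1/\pi \approx 0.318$, and the simulated estimate should agree to within a few thousandths.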

3.4. Target Feature Extraction

3.4.1. Probability of Intersection Process

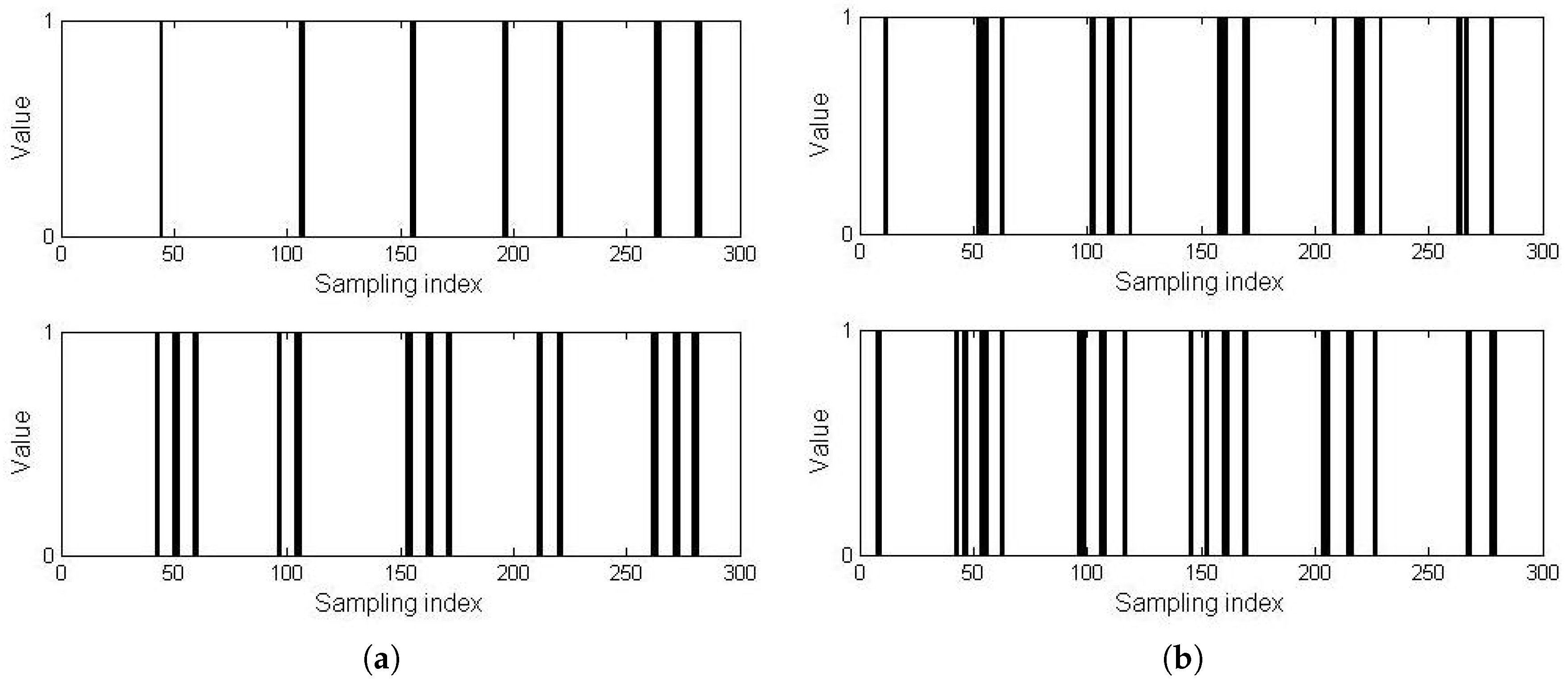

3.4.2. Temporal Statistical Feature

3.4.3. Spatial Statistical Feature

4. Experiment and Evaluation

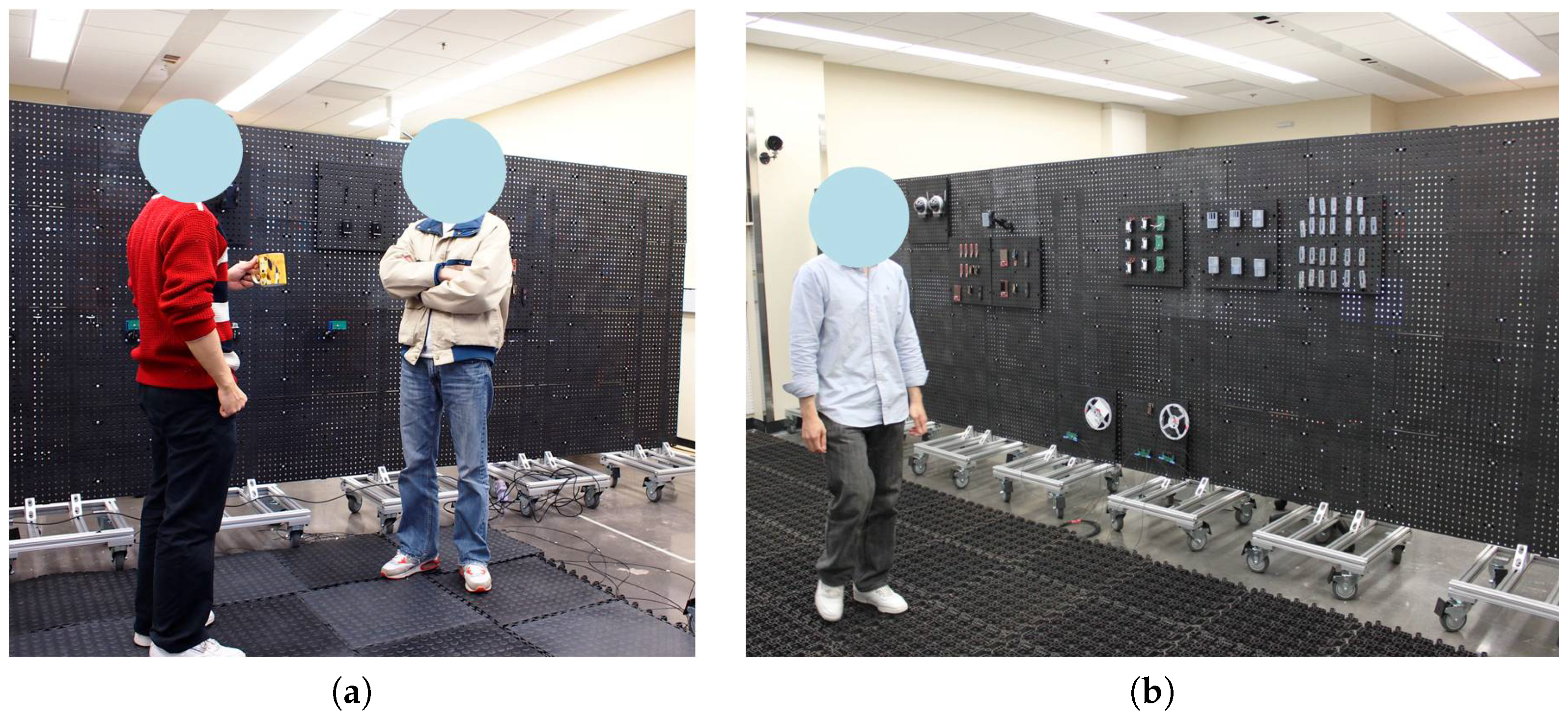

4.1. Experiment Setup

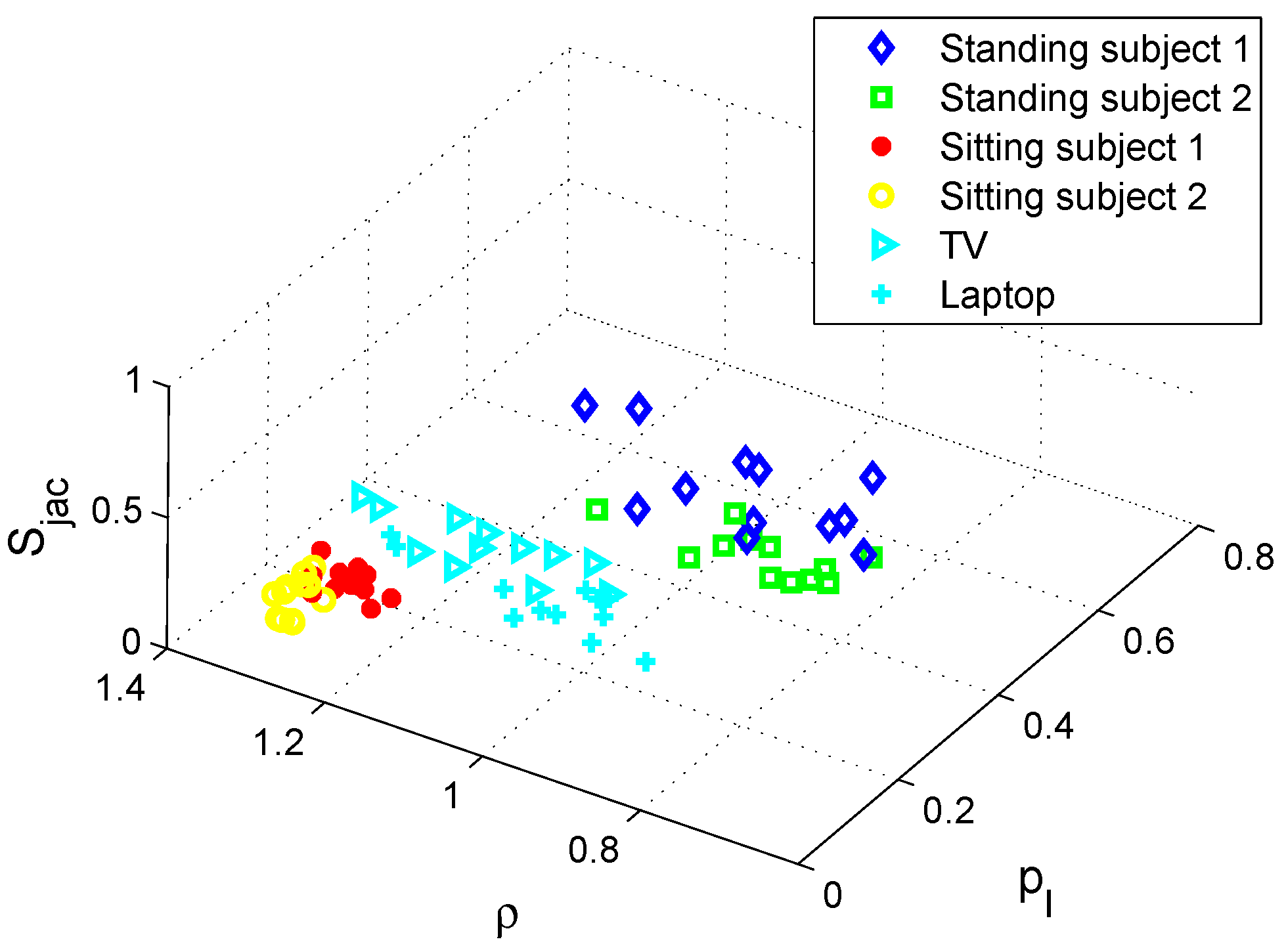

4.2. Static Human Subject Recognition

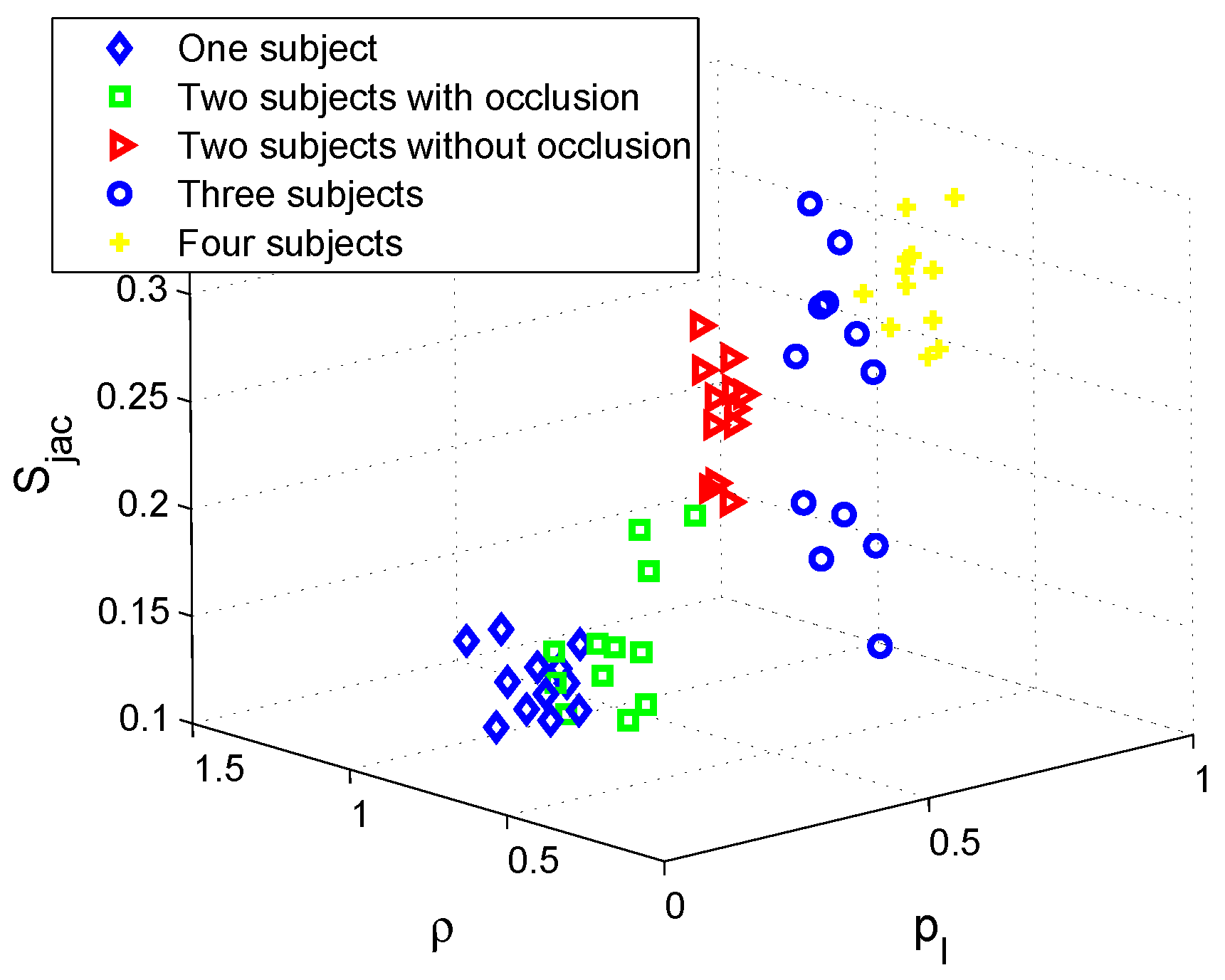

4.3. Human Scenario Recognition

5. Conclusions

Author Contributions

Conflicts of Interest


| Classifier | Standing Subject | Sitting Subject | Electrical Device | Accuracy (%) |
|---|---|---|---|---|
| k-centroid | 30/36 | 34/36 | 36/36 | 90.84 |
| k-NN | 33/36 | 33/36 | 36/36 | 94.44 |
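The tables compare a k-centroid classifier with k-NN on three classes (standing subject, sitting subject, electrical device). Neither the feature vectors nor the exact definition of "k-centroid" is given in this section, so the Python sketch below assumes plain Euclidean k-nearest-neighbour voting and, for k-centroid, a nearest-class-mean rule. The function names and the toy 2-D features are hypothetical and are not the authors' implementation or data.

```python
import math
from collections import Counter
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def knn_predict(train: List[Tuple[Vector, str]], query: Vector, k: int = 3) -> str:
    """Majority label among the k training samples closest to `query` (Euclidean)."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    # Ties keep first-encountered order, so the label of the closer sample wins.
    return votes.most_common(1)[0][0]


def centroid_predict(train: List[Tuple[Vector, str]], query: Vector) -> str:
    """Label of the class whose mean feature vector is closest to `query`.

    Assumption: "k-centroid" is treated here as a nearest-class-centroid rule.
    """
    sums: Dict[str, List[float]] = {}
    counts: Counter = Counter()
    for features, label in train:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] += 1
    centroids = {label: [s / counts[label] for s in acc] for label, acc in sums.items()}
    return min(centroids, key=lambda label: math.dist(centroids[label], query))


if __name__ == "__main__":
    # Hypothetical 2-D feature vectors; not data from the experiments above.
    train = [
        ((0.9, 0.1), "standing"), ((0.8, 0.2), "standing"),
        ((0.4, 0.5), "sitting"), ((0.5, 0.4), "sitting"),
        ((0.1, 0.9), "device"), ((0.2, 0.8), "device"),
    ]
    print(knn_predict(train, (0.85, 0.15)))    # standing
    print(centroid_predict(train, (0.15, 0.85)))  # device
```

k-NN keeps every training sample and votes locally, while the centroid rule compresses each class to a single mean, which is cheaper at query time but assumes roughly compact classes.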

| Classifier | One Subject | Two Subjects (Occlusion) | Two Subjects | Three Subjects | Four Subjects | Accuracy (%) |
|---|---|---|---|---|---|---|
| k-centroid | 27/36 | 24/36 | 36/36 | 35/36 | 35/36 | 87.22 |
| k-NN | 33/36 | 27/36 | 36/36 | 35/36 | 35/36 | 92.22 |
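Every class in these tables has 36 trials, so the Accuracy column can be read as total correct decisions over total trials; with balanced classes this equals the mean of the per-class rates. The Python sketch below (function name hypothetical) reproduces the k-NN rows and both scenario-recognition rows from their counts.

```python
from typing import Sequence


def pooled_accuracy(correct: Sequence[int], trials_per_class: int = 36) -> float:
    """Overall accuracy in percent: total correct over total trials, equal trials per class."""
    total_correct = sum(correct)
    total_trials = trials_per_class * len(correct)
    return 100.0 * total_correct / total_trials


if __name__ == "__main__":
    print(round(pooled_accuracy([33, 33, 36]), 2))          # 94.44, k-NN, static subjects
    print(round(pooled_accuracy([27, 24, 36, 35, 35]), 2))  # 87.22, k-centroid, scenarios
    print(round(pooled_accuracy([33, 27, 36, 35, 35]), 2))  # 92.22, k-NN, scenarios
```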

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Sun, Q.; Shen, J.; Qiao, H.; Huang, X.; Chen, C.; Hu, F. Static Human Detection and Scenario Recognition via Wearable Thermal Sensing System. Computers 2017, 6, 3. https://doi.org/10.3390/computers6010003
