Data Partitioning Technique for Improved Video Prioritization †

Abstract

1. Introduction

2. Source Coding Context

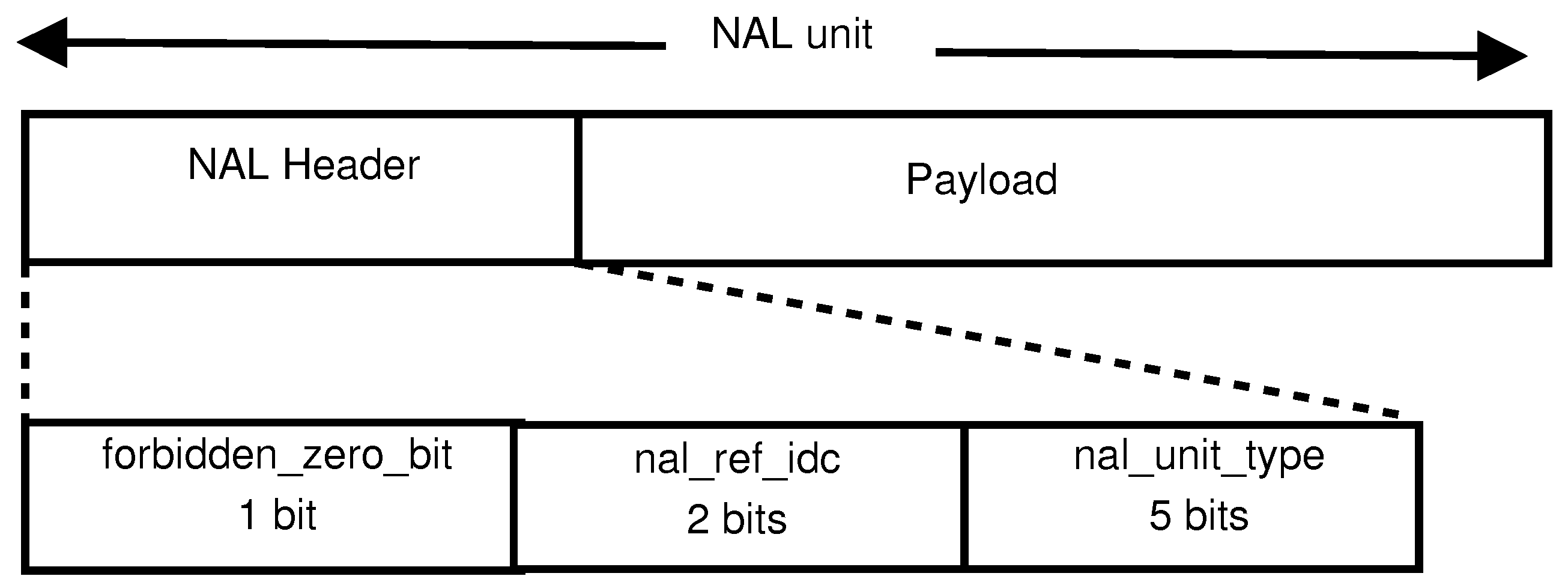

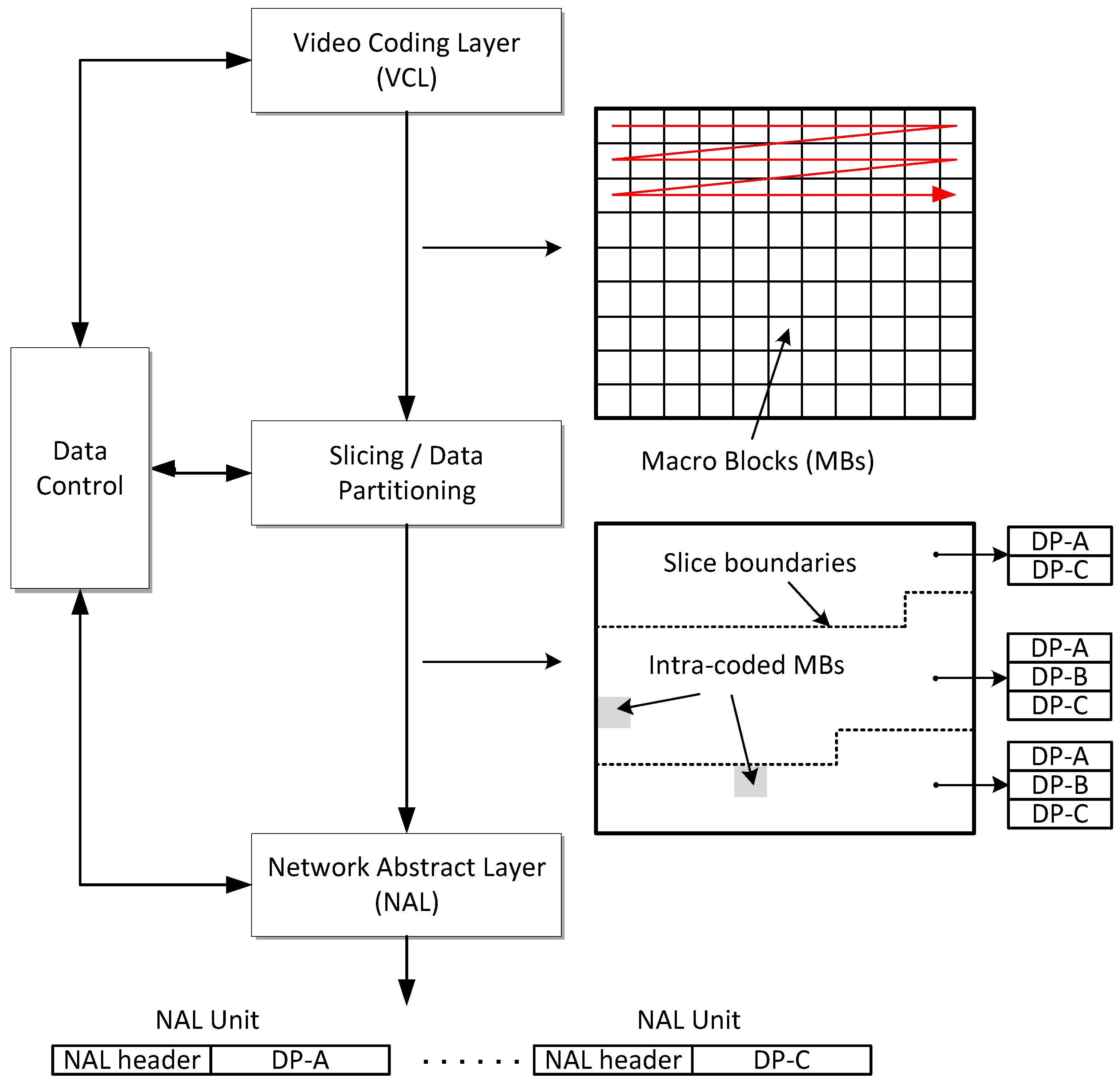

2.1. H.264/AVC Coding

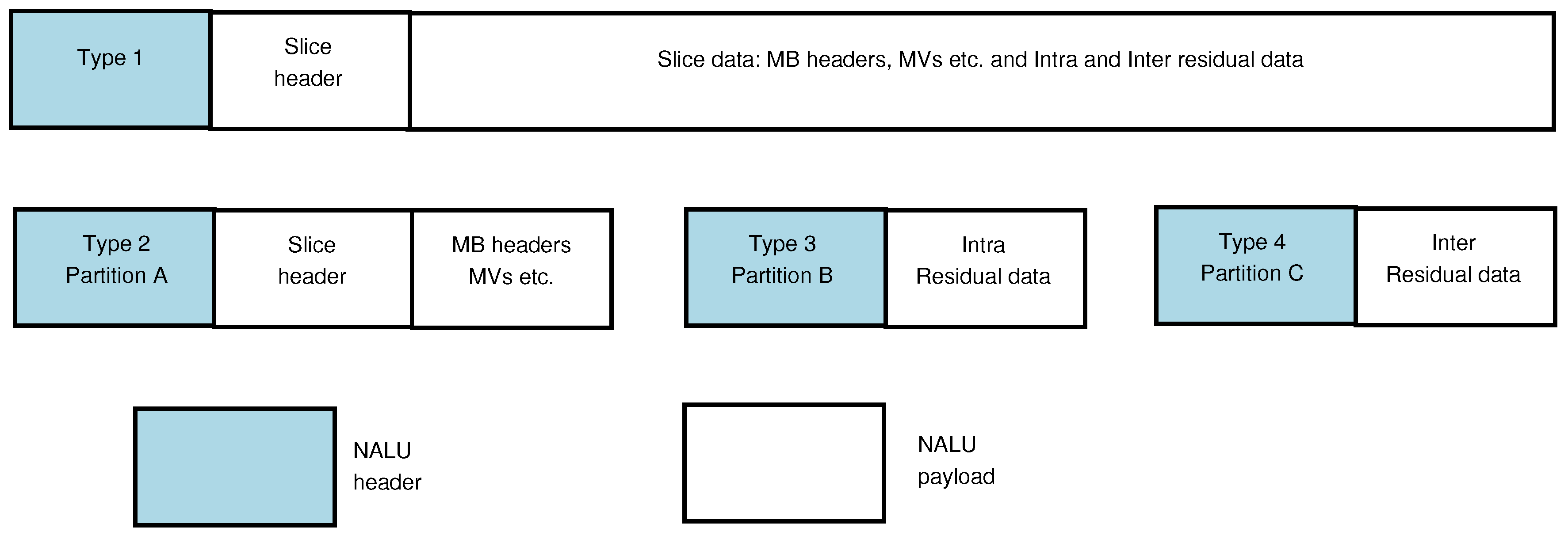

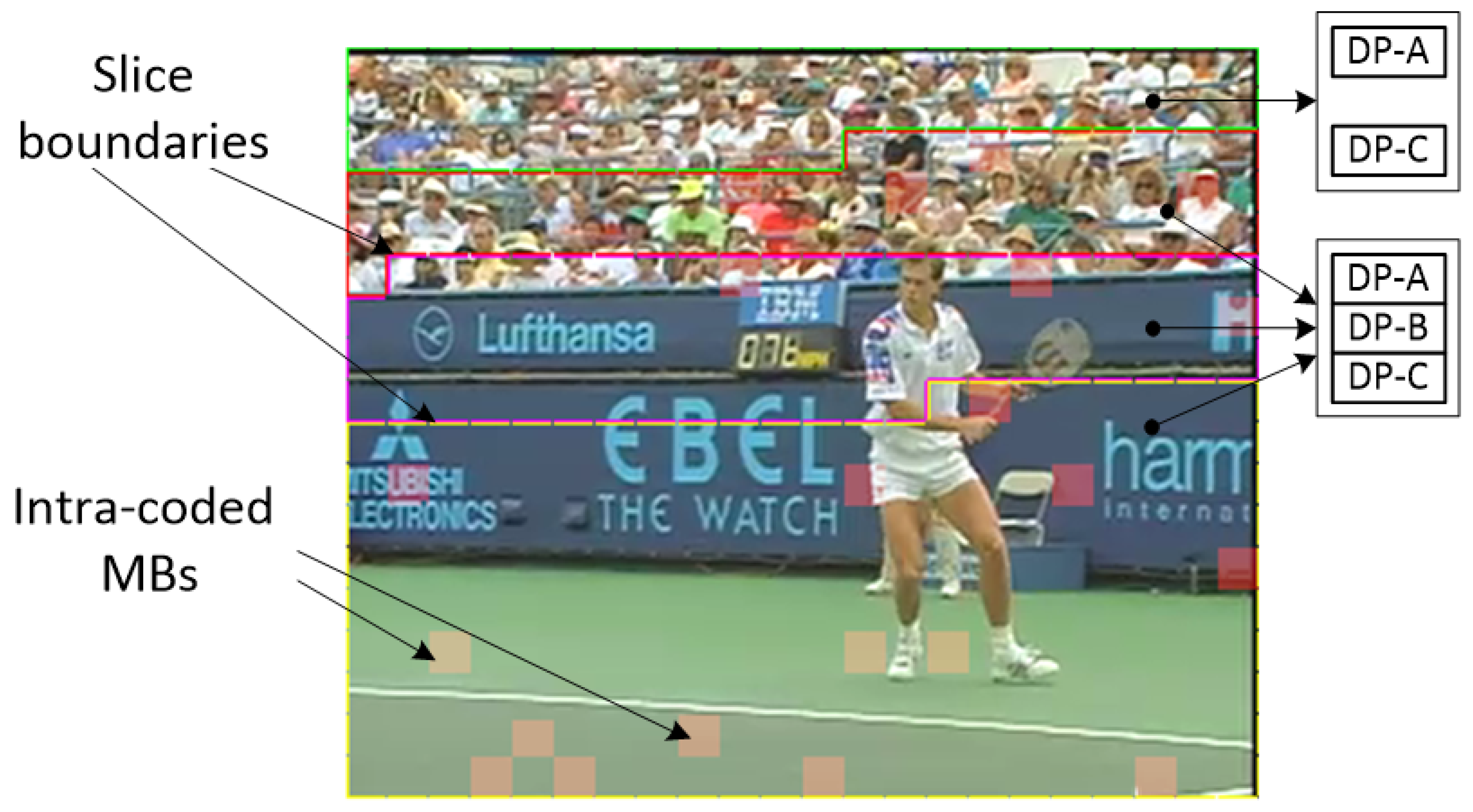

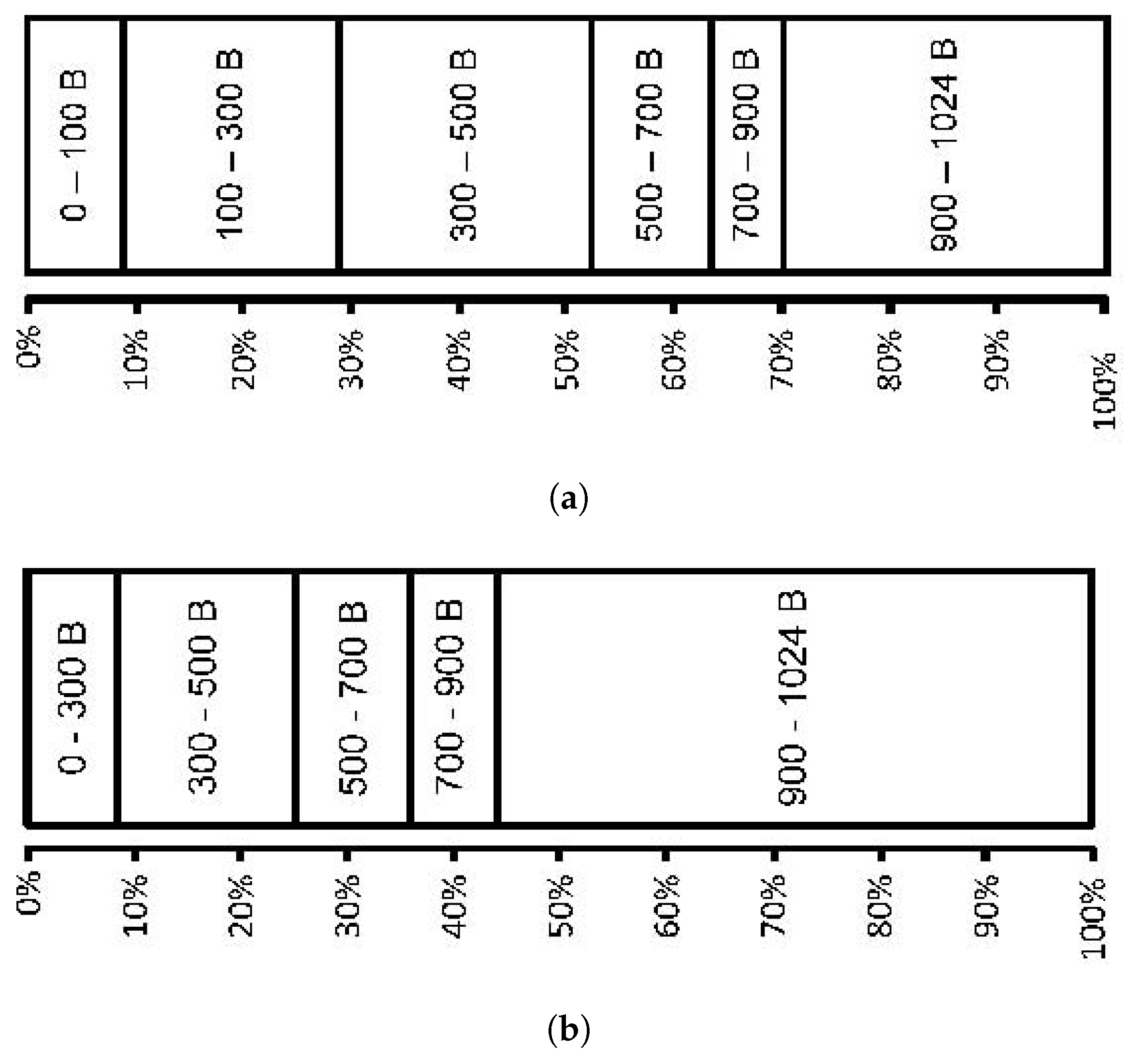

2.2. Data Partitioning

2.3. Concealing Lost Packets

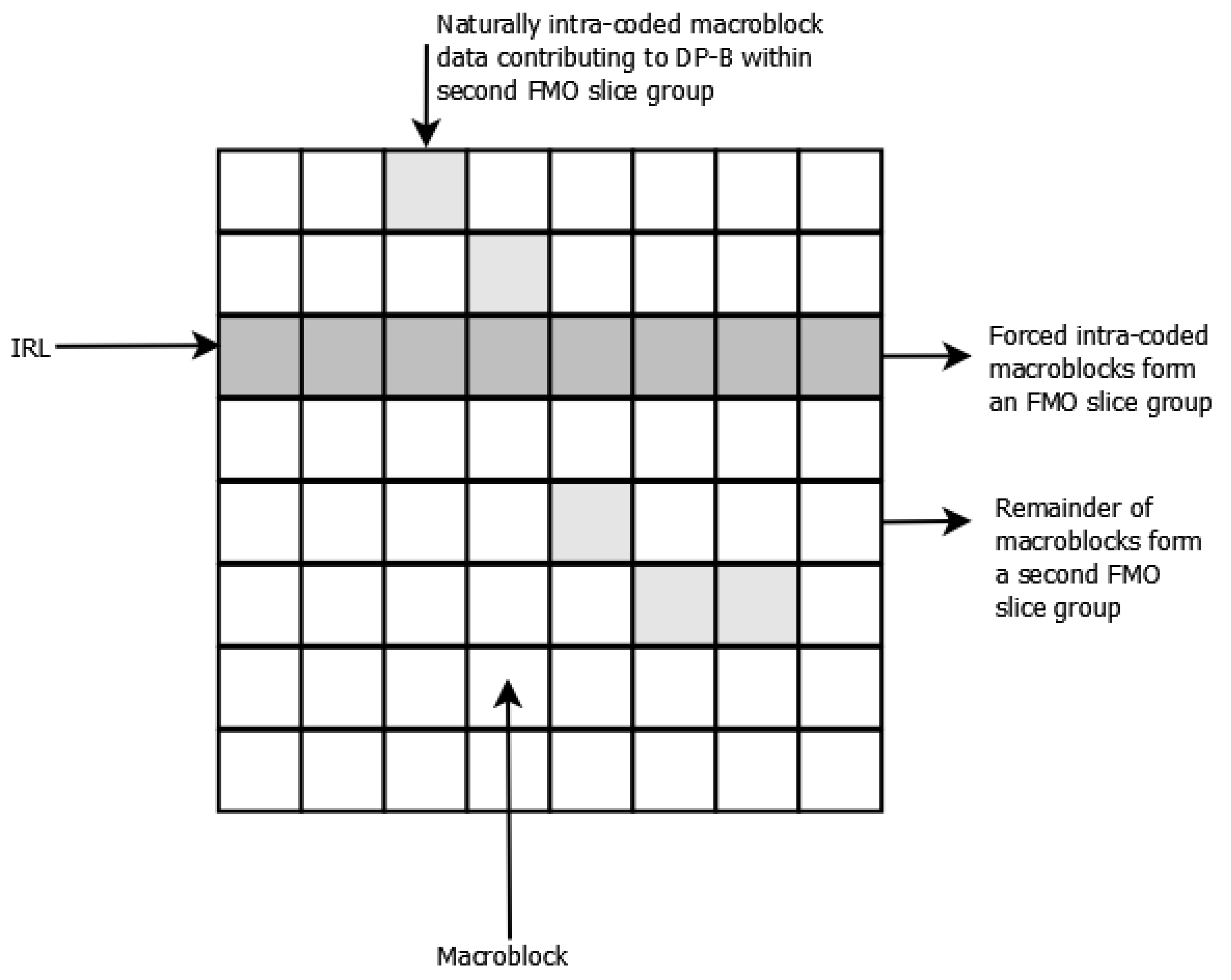

3. Implementation with FMO

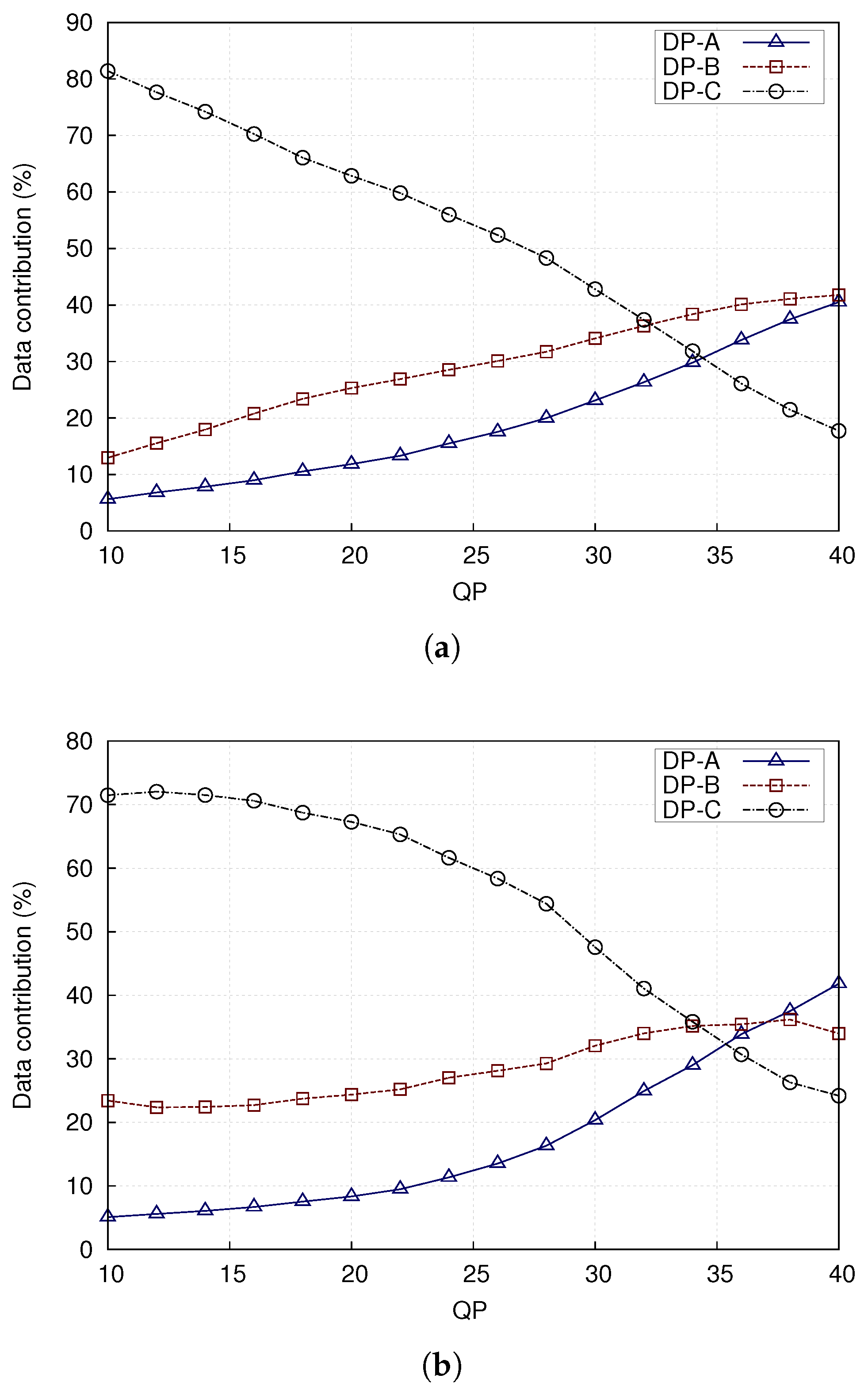

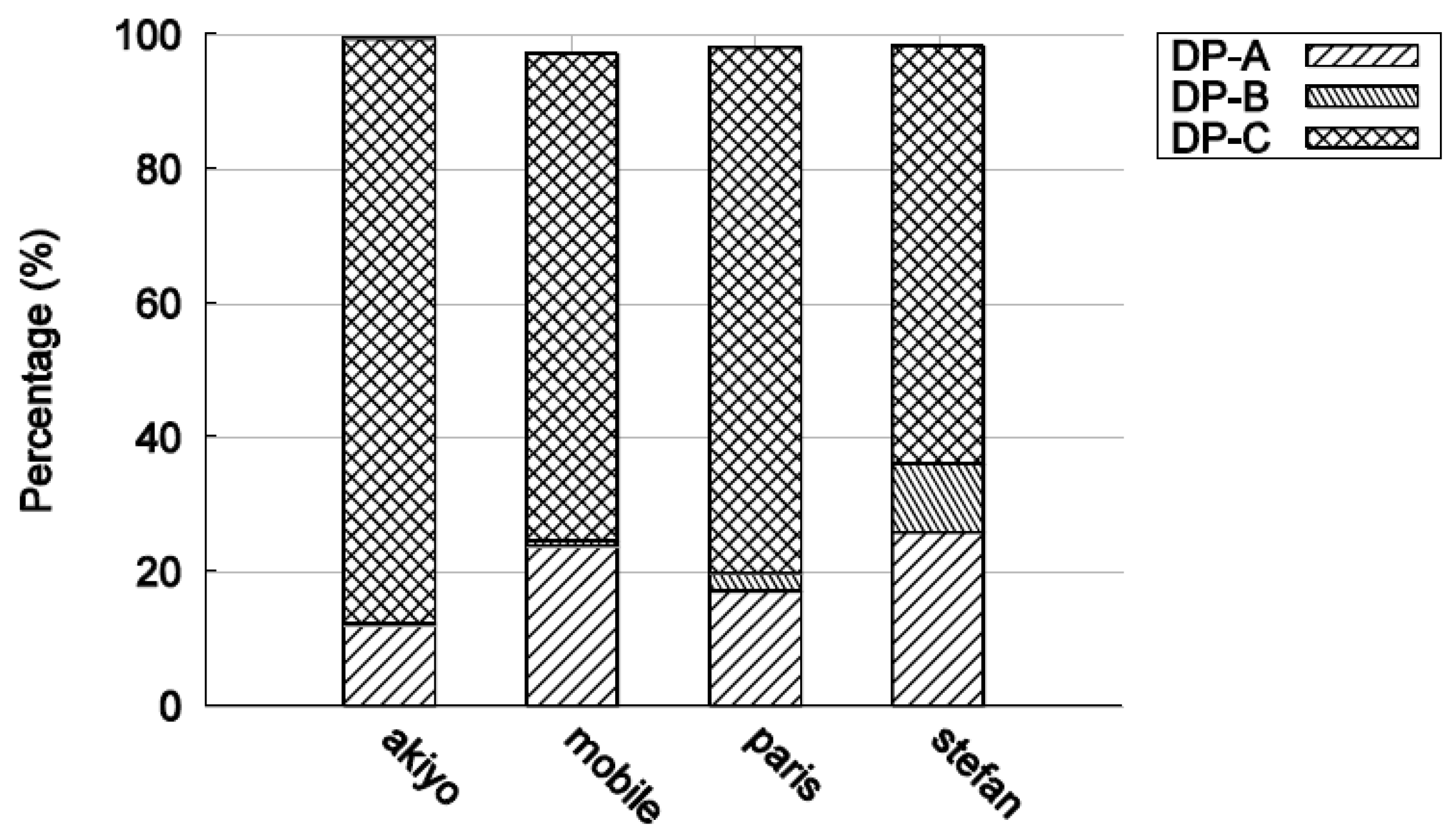

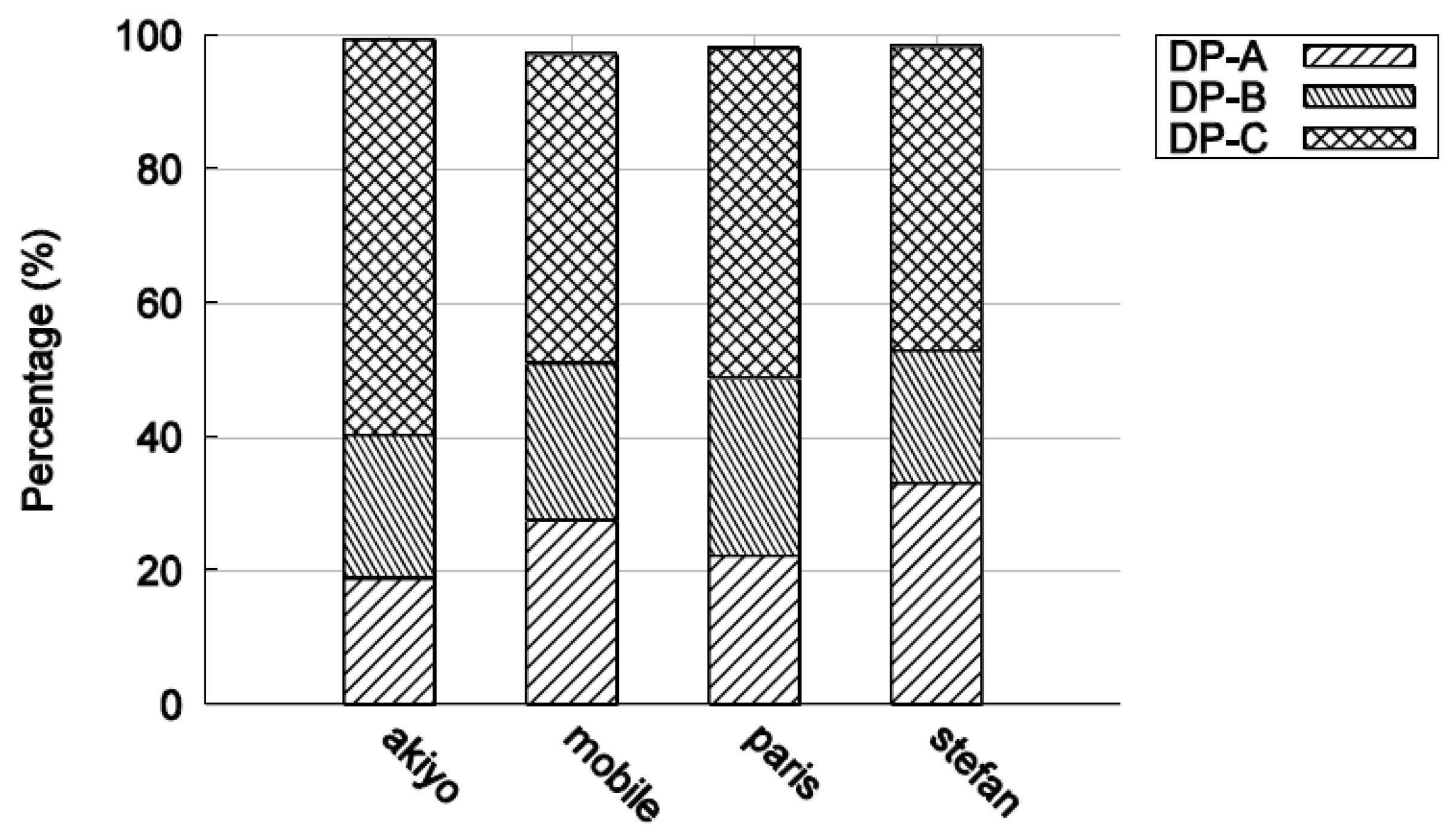

4. Performance Analysis

5. Evaluation

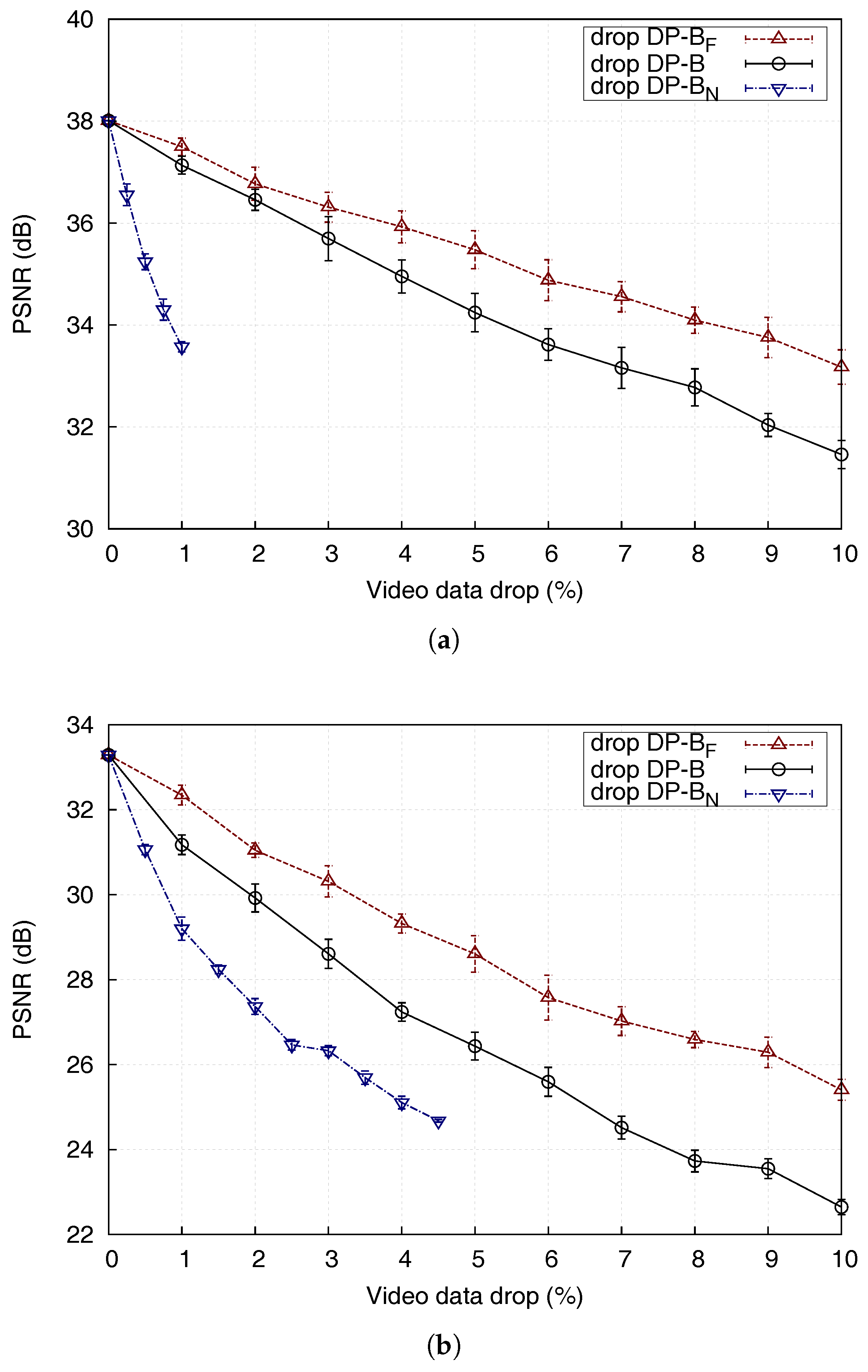

5.1. Effect of Packet Drops

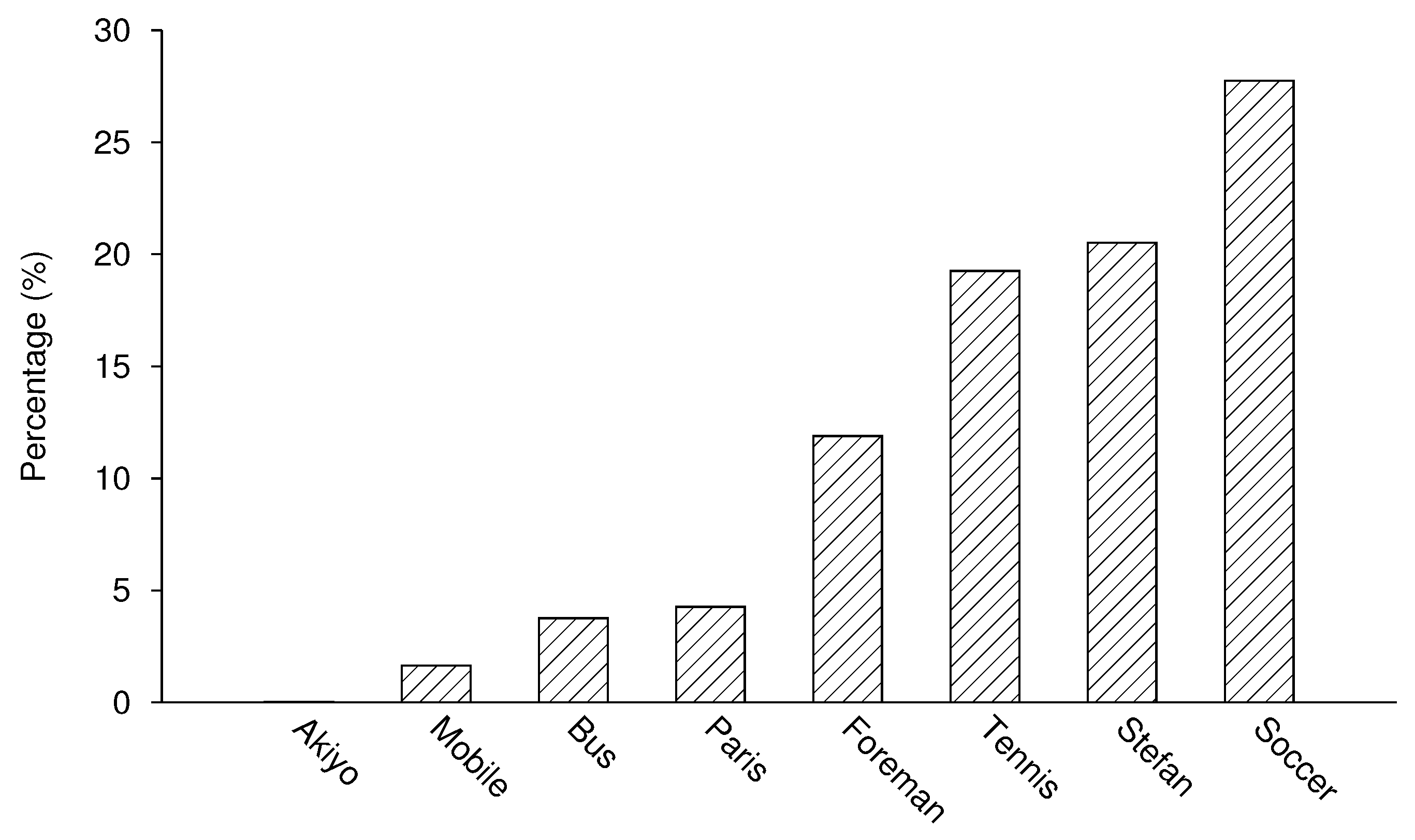

5.2. Intra-Refresh

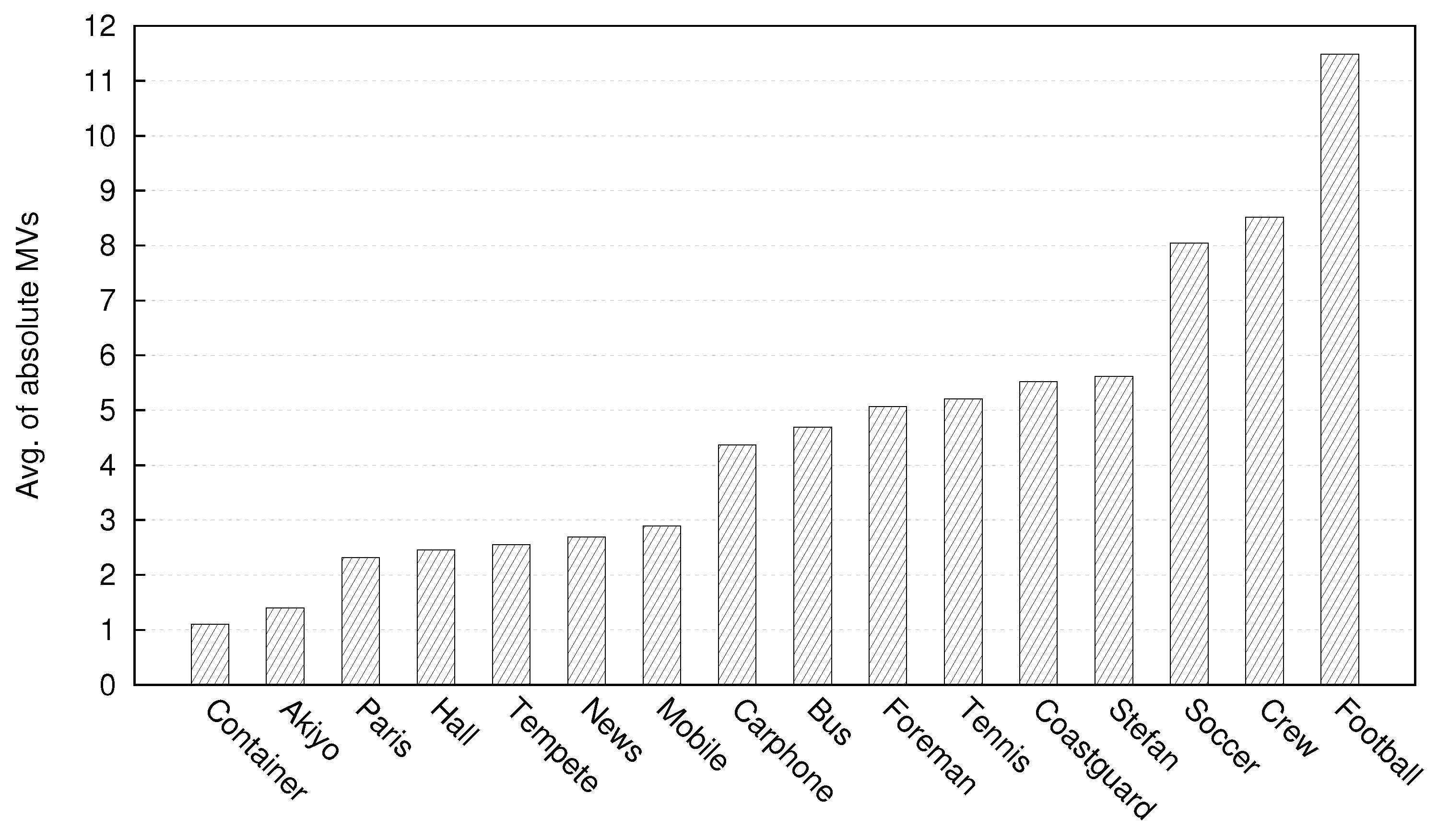

5.3. Impact of Data Loss

6. Conclusions

Author Contributions

Conflicts of Interest

| NALU Type | Class | Content of NALU |
|---|---|---|
| 0 | - | Unspecified |
| 1 | VCL | Coded slice |
| 2 | VCL | Coded slice partition A |
| 3 | VCL | Coded slice partition B |
| 4 | VCL | Coded slice partition C |
| 5 | VCL | Coded slice of an IDR picture |
| 6–12 | Non-VCL | Supplementary information, parameter sets, etc. |
| 13–23 | - | Reserved |
| 24–31 | - | Unspecified |
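As a minimal sketch of how the NALU types in the table above might be read in practice, the following Python snippet extracts the type from the one-byte H.264/AVC NAL unit header (the type occupies the five least significant bits) and maps it to the class and content listed in the table. The function names `nal_unit_type` and `classify_nalu` are hypothetical and chosen only for illustration.

```python
from typing import Tuple


def nal_unit_type(header_byte: int) -> int:
    """Return the NALU type held in the low 5 bits of the NAL header byte."""
    if not 0 <= header_byte <= 0xFF:
        raise ValueError("header_byte must fit in one byte")
    return header_byte & 0x1F


def classify_nalu(nalu_type: int) -> Tuple[str, str]:
    """Map a NALU type (0-31) to (class, content), following the table."""
    if not 0 <= nalu_type <= 31:
        raise ValueError("NALU type must be in the range 0-31")
    vcl_types = {
        1: "Coded slice",
        2: "Coded slice partition A",
        3: "Coded slice partition B",
        4: "Coded slice partition C",
        5: "Coded slice of an IDR picture",
    }
    if nalu_type in vcl_types:
        return ("VCL", vcl_types[nalu_type])
    if 6 <= nalu_type <= 12:
        return ("Non-VCL", "Supplementary information, parameter sets, etc.")
    if 13 <= nalu_type <= 23:
        return ("-", "Reserved")
    # Type 0 and types 24-31 are both listed as unspecified.
    return ("-", "Unspecified")
```

A prioritizing sender could, for example, call `classify_nalu(nal_unit_type(packet[0]))` on each NAL unit and treat types 2, 3 and 4 as separate priority classes; how the priorities are then assigned is outside the scope of this sketch.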

| Available Partitions | Error Concealment Method |
|---|---|
| A and B | Conceal missing MBs with MVs from partition-A and texture from partition-B; optionally, where appropriate, perform intra-concealment |
| A and C | Conceal missing MBs with MVs from partition-A and texture from partition-C; optionally, where appropriate, perform inter-concealment |
| A | Conceal missing MBs using MVs from partition-A |
| B and/or C | Drop the partitions and either employ whole-frame concealment or use the MVs from the MB row spatially above the missing MBs |
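The decision logic in the table above can be sketched as a small lookup on the set of partitions that arrived for a slice. This is only an illustrative outline: the function name `select_concealment` and the returned labels are hypothetical, and the two cases the table does not list (all three partitions received, or none received) are handled here by assumption.

```python
from typing import Iterable


def select_concealment(received: Iterable[str]) -> str:
    """Pick a concealment strategy label from the partitions received."""
    parts = frozenset(received)
    unknown = parts - {"A", "B", "C"}
    if unknown:
        raise ValueError(f"unknown partitions: {sorted(unknown)}")

    if parts == {"A", "B", "C"}:
        # Assumption: nothing is missing, so no concealment is needed.
        return "decode_normally"
    if "A" in parts:
        if "B" in parts:
            return "mvs_from_A_texture_from_B"
        if "C" in parts:
            return "mvs_from_A_texture_from_C"
        return "mvs_from_A_only"
    if parts:
        # B and/or C arrived without A: drop them and conceal the frame
        # (or reuse MVs from the MB row above the missing MBs).
        return "drop_partitions_and_conceal"
    # Assumption: the table has no row for a slice with no partitions.
    return "nothing_received"
```

For instance, `select_concealment(["A", "C"])` yields the label for the second table row, while `select_concealment(["B"])` falls through to the last row because partition A is absent.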

| Video Quality Gain (dB) at Data Loss Rate: | 2% | 4% | 6% | 8% | 10% |
|---|---|---|---|---|---|
| Foreman | 1.29 | 1.91 | 2.29 | 3.17 | 2.54 |
| Bus | 0.88 | 2.27 | 3.51 | 2.96 | 3.89 |
| Tennis | 0.95 | 2.06 | 3.19 | 3.50 | 4.44 |
| Soccer | 3.46 | 3.72 | 5.02 | 5.43 | 5.47 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ali, I.A.; Moiron, S.; Fleury, M.; Ghanbari, M. Data Partitioning Technique for Improved Video Prioritization. Computers 2017, 6, 23. https://doi.org/10.3390/computers6030023