1. Introduction

Why do people cooperate? Economists usually assume that people actively pursue their material self-interest, carefully processing information and judiciously weighing their options. Obviously, this is not always the case. Some people choose to ignore information about others and cooperate unconditionally. Some governments, for example, commit themselves to reducing their CO₂ emissions regardless of the commitments of others. Such decisions are sometimes pejoratively described as naive or ignorant and as inviting exploitation by others. We show in this paper that unconditional cooperators (UCs) can actually survive in the presence of two other types of agents: defectors (who never cooperate) and conditional cooperators (CCs). By simulating interactions between these three types of agents on graphs, we show that a small fraction of UCs helps to sustain and stabilize cooperation in environments where there would be no cooperation without them.

Why is it surprising that UCs survive and even help stabilize cooperation in the population when they are competing for resources with CCs and defectors? In most interactions, cooperation is costly. If using information about others comes at low cost, it can prevent agents from wasting resources, because they can avoid cooperating with those who would not reciprocate (e.g., defectors). Furthermore, others might read cooperation with a defector as a signal that the cooperating agent considers that defector trustworthy. This lowers the quality of the information on which agents like CCs condition their behavior. From an evolutionary point of view, UCs thus seem to favor defectors.

Although ignoring information that comes at no cost seems irrational, experiments show that humans are even willing to pay in order to receive no information about others in strategic interactions [1]. One explanation for this strategic ignorance may be that it offers an excuse to behave egoistically without the psychological costs that come with knowing how one’s actions affect others [2]. In the context of self-commitment, Carrillo and Mariotti [3] argue that people may prefer not to receive information, fearing the impact that a change in their preconceived ideas could have on their behavior. Complementing these studies, we show that strategic ignorance need not serve only selfish interests: it can also increase the long-run welfare of an individual and of other agents. More specifically, by ignoring information about defectors in their interactions, UCs can help promote and stabilize cooperation.

Evolutionary game theorists have done much of the research on the interplay between strategies like (un)conditional cooperation and defection, mostly by studying games that model a social dilemma (e.g., the prisoner’s dilemma game). Most studies ask two questions. First, can a strategy like UC survive when competing with other strategies, usually no more than two or three? Second, can any cooperation be sustained at the population level?¹

Although we use a typical model from this area [9,10], our research question differs from those usually studied in evolutionary game theory. Within a range of parameters, the model we use is known to sustain cooperation among conditional cooperators and defectors. Our question is how inserting a fraction of UCs affects (1) the outcomes for UCs, (2) the behavior of agents with other strategies and (3) the performance of the entire system of agents. Hence, our analysis will not focus on whether there is any cooperation in a population of agents, but on how much cooperation increases or decreases in the population. Furthermore, evolutionary game theorists usually assume that a strategy like UC can enter the population through mutation at any point in time. We instead introduce UCs into the population all at once, in a way similar to an intervention by a policy maker or an event that turns a fraction of conditional cooperators into unconditional cooperators. Although we frame our study from the perspective of an external decision-maker, agents can nevertheless still explore or mutate. In this light, the work of Han et al. [11] on finite population dynamics bears mentioning. They show cycling among cooperation, defection and a third strategy, which is either (generous) tit-for-tat, win-stay-lose-shift or a generalized version of generous tit-for-tat (intention recognition). Han et al. [12] ask a related question when they study a decision-maker who can interfere in a system to achieve preferred behavioral patterns. This decision-maker aims to optimize the trade-off between the cost of interference and the gain from achieving the desired system state. In Han et al.’s study, intervention takes the form of a decision-maker who has fixed resources to reward cooperation. In our study, intervention takes the form of introducing unconditional cooperators. Phelps et al. [13] also discuss a more general version of the problem by analyzing the optimal infrastructure design of a multi-agent system from the viewpoint of a policy maker.

Unconditional cooperation seems disadvantageous in a three-strategy setting where CCs use a strictly reciprocal (tit-for-tat) strategy alongside UCs and defectors. In this setting, defectors might first exploit UCs and benefit from them. Eventually, UCs vanish because cooperating with defectors consumes their resources. Defectors then receive no further benefits from cooperation, since no agents are left to cooperate with them. CCs then take over the population, since they do not cooperate with defectors and increase their fitness by cooperating with other CCs. The model of Nowak and Sigmund [9,14], which we use, allows for more sophisticated CC strategies based on indirect reciprocity.²

CCs using tit-for-tat operate on direct reciprocity, basing their cooperation on previous interactions with a specific agent. CCs that apply indirect reciprocity instead base their behavior on the other agent’s reputation, i.e., the behavior that agent has shown towards others in general. Lotem et al. [26] argue that under indirect reciprocity, unconditional altruism or unconditional cooperation can thrive. They interpret unconditional cooperation as a costly signal towards CCs. This signal boosts the UCs’ reputation and demonstrates their trustworthiness, which ensures future cooperation with CCs. Furthermore, Ohtsuki and Nowak [10] and Santos et al. [27] find that when agents interact according to a network structure, UCs may survive in larger networks when there are at least some small clusters.

Nowak and Sigmund [28] show that when agents’ behavior includes a small chance of unintended defection, strategies incorporating what they refer to as forgiveness or goodwill outperform strictly (in)direct reciprocal strategies. In groups where all agents cooperate according to indirect reciprocity, an unintended defection immediately gives an agent a bad reputation. In this scenario, cooperation breaks down easily, and distrust can spread quickly among agents and cannot be restored. As Nowak and Sigmund show, mild degrees of goodwill rebuild trust and keep up cooperation while still preventing invasion by defectors. In this light, UCs can inject goodwill or forgiveness into the system by cooperating regardless of other players’ previous actions, thereby restoring trust.

Although unconditional cooperation seems disadvantageous at first glance, its consequences for an agent and the population turn out to be less clear once we enter worlds with indirect reciprocity. Our question, therefore, is how a small fraction of UCs placed into a population of CCs and defectors affects the evolution of cooperation. In the following sections, we present a formal model based on the work of Nowak and Sigmund (Section 2) and its implementation for simulation (Section 3). In Section 4, we compare worlds with small fractions of UCs with worlds without UCs. We identify in which kinds of environments unconditional cooperation persists and whether it has positive or negative effects on cooperation. Section 5 concludes by elaborating upon the implications of the results in a broader context.

4. Results

4.1. General Results

In this paper, we test how the introduction of a tiny fraction of unconditional cooperators (UCs) affects cooperation in a population where there are also conditional cooperators (CCs) and defectors. We introduce this “tiny fraction” of UCs by replacing 20% of the CCs in the initial population with UCs. Note that 20% of the CCs may be as little as 2% of the whole population in cases where the starting population of CCs is 10%. Does this lead to more cooperation by encouraging CCs to cooperate more often? Or does it decrease cooperation by enabling defectors to benefit from the ignorance of UCs? To answer this, we look at the ratio of defectors during the last iteration of a simulation.⁹

A high ratio of defectors implies less cooperation in the population. Cooperation is the only way for the system to generate resources. The ratio of defectors can therefore be read as the inverse of the population's efficiency: the more defectors there are in the system, the fewer resources are generated.
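The arithmetic behind the “tiny fraction” can be made explicit. The following is a minimal sketch, assuming that the initial population consists only of CCs and defectors before UCs are introduced. The function name `initial_composition` and its parameters are hypothetical. `con_coops` is an assumed reading of the `conCoops` setting mentioned in the results, taken to be the share of CCs that stay CCs.

```python
def initial_composition(cc_share, con_coops=0.8):
    """Split an initial population into CC, UC and defector shares.

    cc_share: fraction of agents that start as conditional cooperators
              before any UCs are introduced (hypothetical parameter).
    con_coops: fraction of those CCs that remain CCs; the rest become UCs.
               con_coops = 1.0 means no UCs (assumed reading of 'conCoops').
    """
    if not 0.0 <= cc_share <= 1.0 or not 0.0 <= con_coops <= 1.0:
        raise ValueError("shares must lie in [0, 1]")
    uc = cc_share * (1.0 - con_coops)  # CCs converted into UCs
    cc = cc_share * con_coops          # CCs left unchanged
    defectors = 1.0 - cc_share         # everyone else defects
    return {"CC": cc, "UC": uc, "D": defectors}


comp = initial_composition(cc_share=0.10)
print({k: round(v, 3) for k, v in comp.items()})
# → {'CC': 0.08, 'UC': 0.02, 'D': 0.9}
```

With 10% initial CCs, replacing 20% of them yields UCs making up 2% of the whole population, as stated above.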

Surprisingly, the simulations in which a tiny fraction of UCs was initially present had, on average, a lower ratio of defectors and were thus more efficient. On average, worlds with UCs had about 8% fewer defectors during the final iteration than worlds without UCs. A Wilcoxon rank sum test comparing all simulations with and without UCs shows that this difference is clearly significant (). This indicates that UCs can actually decrease the fitness of defectors.
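For readers unfamiliar with the test, here is a minimal sketch of a two-sided Wilcoxon rank sum test. It uses the large-sample normal approximation, gives tied values their average rank and applies no tie correction to the variance. This is a textbook illustration, not the authors' analysis code; in practice one would use a library routine such as `scipy.stats.ranksums`. The sample values are made up.

```python
import math


def average_ranks(values):
    """Return 1-based ranks, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of tied values starting at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank sum test (normal approximation).

    Returns (z, p). No tie correction is applied to the variance.
    """
    n1, n2 = len(x), len(y)
    ranks = average_ranks(list(x) + list(y))
    w = sum(ranks[:n1])  # rank sum of the first sample
    expected = n1 * (n1 + n2 + 1) / 2
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - expected) / sd
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p


# Hypothetical final defector ratios (not data from this study).
with_ucs = [0.0, 0.1, 0.0, 0.2, 0.05, 0.0]
without_ucs = [0.9, 1.0, 0.3, 1.0, 0.8, 0.6]
z, p = rank_sum_test(with_ucs, without_ucs)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A negative z means the first sample (here, runs with UCs) tends to have lower defector ratios.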

To provide a more detailed analysis, we split all simulations into groups. The smallest such group is defined by unique combinations of values of b, k, q, , and . Henceforth, we will refer to these smallest groups as worlds.

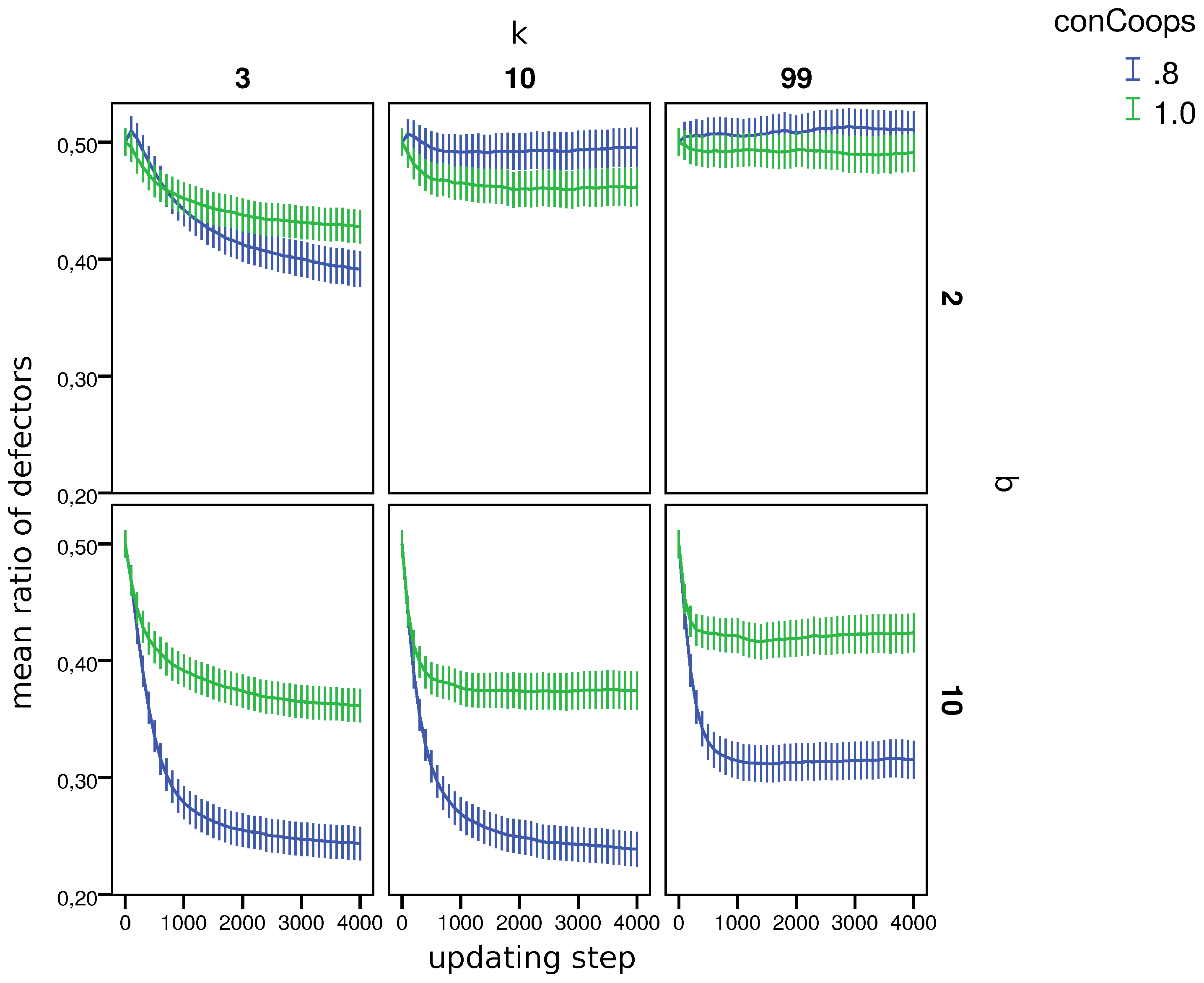

Figure 1 shows that in worlds with , the initial presence of UCs led to more defectors. In 13 out of 162 worlds, the ratio of defectors was higher in worlds with UCs, and in 69 worlds, it was lower. In the remaining worlds, the difference in the ratio of defectors was not significant according to a two-sided Wilcoxon rank sum test ().¹⁰

To identify the parameters that had the largest effect on the ratio of defectors in worlds with and without UCs, we used a step-wise regression builder (based on the AIC). The regression builder used the variables b, k, q, and  to predict the difference in the last-round ratio of defectors between worlds with and without UCs. We also calculated the evolutionary status of CCs: for every world, we determined whether conditional cooperation is an evolutionarily stable state [46]. Whether a strategy is evolutionarily stable is often used as a main predictor of the survival chances of a strategy [42,47].¹¹ This status of conditional cooperators () was added as a possible predictor to the step-wise regression builder. We used an ANOVA to test whether a model with more predictors performs significantly better, in terms of the AIC, than a model with fewer predictors. It shows that only two predictors (b and ) contribute significantly to the prediction, with b being the more important of the two. The predictive power of  thus seems to be negligible.
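Step-wise model building by AIC can be sketched as greedy forward selection. Start from an intercept-only linear model. At each step, add the predictor that lowers the AIC the most, and stop when no addition helps. The sketch below makes several assumptions. It uses ordinary least squares with the Gaussian AIC up to an additive constant, AIC = n ln(RSS/n) + 2p. The authors' regression builder may search in a different direction (e.g., backward or both ways). The data are synthetic, and `init` is a hypothetical stand-in for the initial-cooperator parameter.

```python
import math

import numpy as np


def ols_aic(X, y):
    """Gaussian AIC (up to a constant) of an OLS fit: n*ln(RSS/n) + 2p."""
    n, p = X.shape
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    rss = float(np.sum((y - X @ coef) ** 2))
    return n * math.log(rss / n) + 2 * p


def forward_stepwise(predictors, y):
    """Greedy forward selection by AIC.

    predictors: dict mapping a name to a 1-D array of length n.
    Returns the selected names in the order they were added.
    """
    n = len(y)
    columns = [np.ones(n)]  # start with the intercept only
    selected = []
    best_aic = ols_aic(np.column_stack(columns), y)
    remaining = dict(predictors)
    while remaining:
        # Score every candidate that could be added next.
        scores = {
            name: ols_aic(np.column_stack(columns + [col]), y)
            for name, col in remaining.items()
        }
        name = min(scores, key=scores.get)
        if scores[name] >= best_aic:
            break  # no candidate improves the AIC any further
        best_aic = scores[name]
        columns.append(remaining.pop(name))
        selected.append(name)
    return selected


# Synthetic data: the response depends on b and init, not on k or q.
rng = np.random.default_rng(0)
n = 200
data = {name: rng.normal(size=n) for name in ("b", "k", "q", "init")}
y = -2.0 * data["b"] + 0.8 * data["init"] + rng.normal(scale=0.5, size=n)
print(forward_stepwise(data, y))
```

Pure-noise predictors can occasionally slip in after the real ones, because AIC penalizes each extra parameter only mildly. Such chance inclusions are one reason to confirm a selection with a separate test, as the ANOVA above does.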

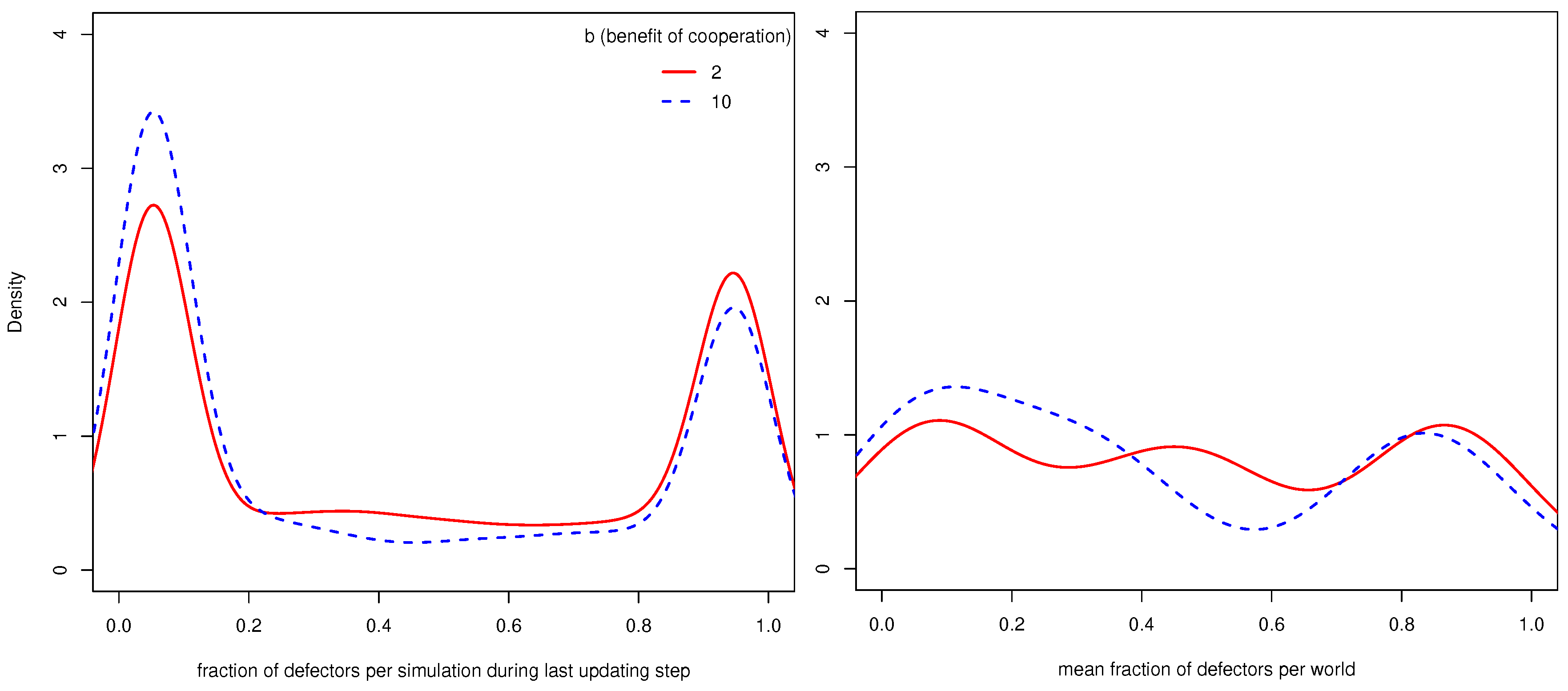

Figure 2 (left) shows the distribution of the fraction of defectors during the last updating step in all simulations where conCoops was one (i.e., there were no UCs). Most simulations converged to containing only defectors or only CCs. Simulations where b (the benefit of cooperation) is 10 converge less often to a state with a high fraction of defectors.

Figure 2 shows on the right the distribution of worlds with regard to the mean fraction of defectors across the 110 simulations per world. While the left density plot shows that most simulations converge to states with only defectors or CCs, the right density plot shows that most worlds allow the population to end up in both states.

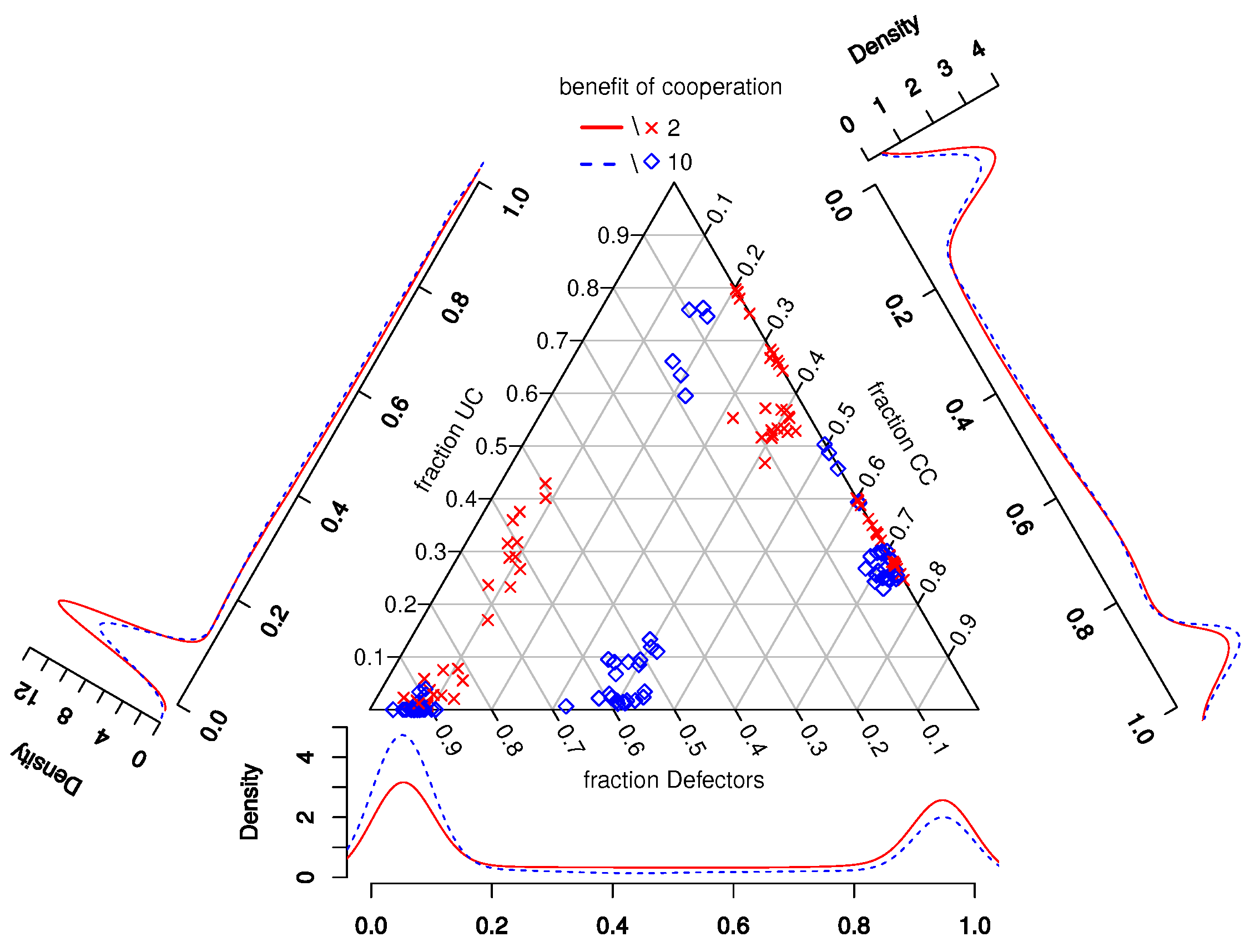

Figure 3 also shows, for the last updating step, the distribution of strategies among all simulations (density plots) and the per-world mean fraction of strategies (points in the simplex). Comparing the distributions of the fraction of defectors in Figure 2 and Figure 3 reveals two patterns. Worlds with UCs generally lead to a lower fraction of defectors. In addition, the differences between worlds with b = 2 and b = 10 become more pronounced.

4.2. Regression Tree

While the step-wise regression builder indicates which parameters are most important, we discuss in this paragraph how various parameters interact and which of their values make UCs good or bad for defectors. For this purpose, we used the per-world comparison of worlds with

= 0.8 and

= 1 discussed in

Section 4.1 and trained a non-parametric regression tree with the same predictors as those used in the step-wise regression builder to predict the results of the per-world comparison, i.e., whether UCs lead to significantly more or less defectors.

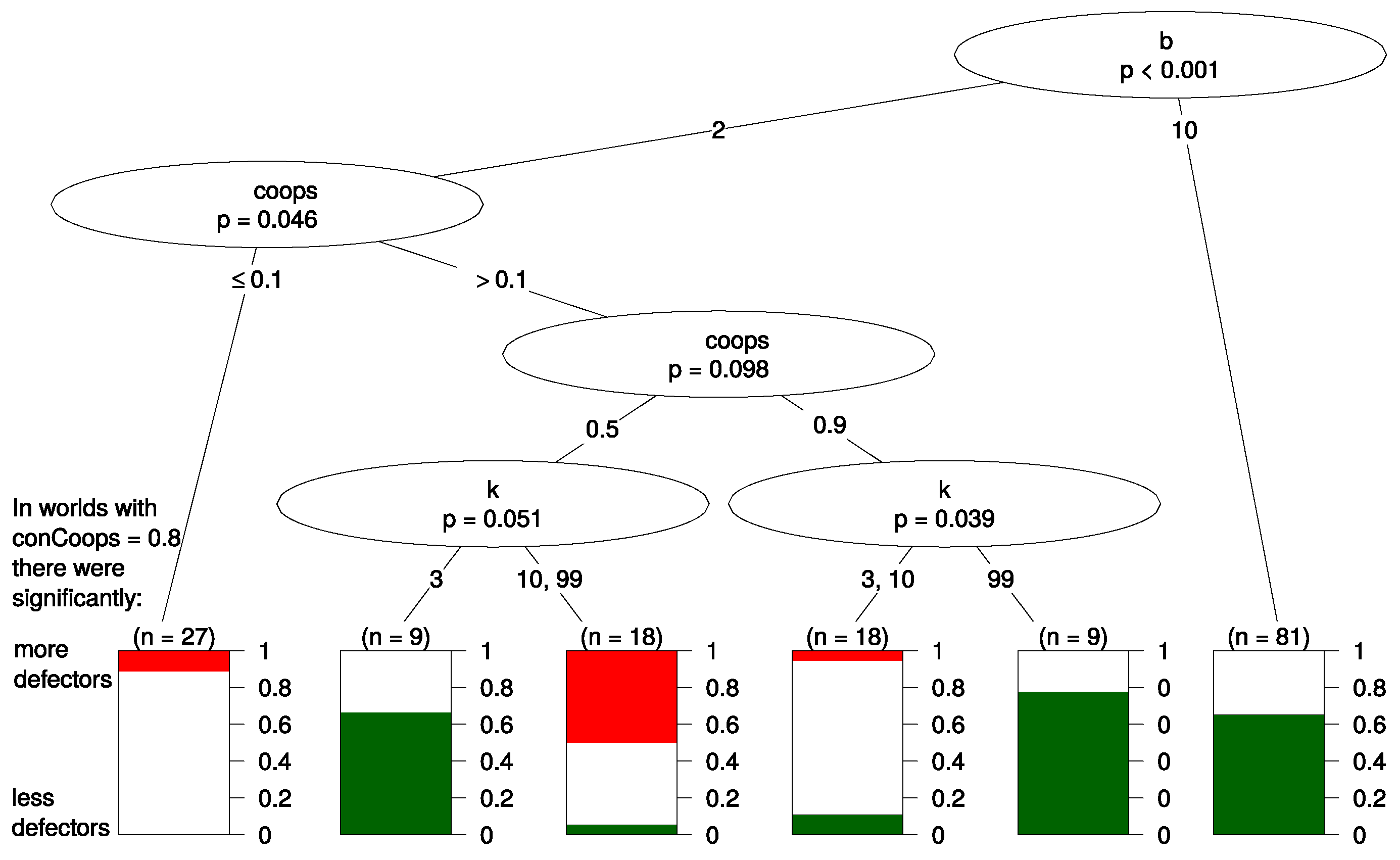

12 Figure 4 shows the resulting tree. Again

b and

appear the most important predictors since they appear in the top splits of the tree. Below, we discuss the most important splits in the tree.
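A regression tree is grown by recursive binary splitting. At each node, the procedure picks the predictor and threshold that most reduce the squared error of the response, then repeats in each branch. The sketch below shows only the first step, finding the best single split. It makes several assumptions, none of them the authors' tree-fitting settings: the function names, the plain sum-of-squares criterion, the numeric outcome coding and the toy worlds are all illustrative. Tree libraries typically also add pruning and minimum node sizes.

```python
def sse(values):
    """Sum of squared deviations from the mean (0 for an empty list)."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)


def best_split(rows, target):
    """Find the (predictor, threshold) pair minimising total child SSE.

    rows: list of dicts mapping predictor names to numeric values.
    target: list of numeric responses, one per row.
    Rows with value <= threshold go left, the rest go right.
    Returns (name, threshold, cost); (None, None, parent SSE) if no split helps.
    """
    best = (None, None, sse(target))  # no split: SSE of the parent node
    for name in rows[0]:
        # The largest value is skipped: it would leave the right child empty.
        for threshold in sorted({r[name] for r in rows})[:-1]:
            left = [t for r, t in zip(rows, target) if r[name] <= threshold]
            right = [t for r, t in zip(rows, target) if r[name] > threshold]
            cost = sse(left) + sse(right)
            if cost < best[2]:
                best = (name, threshold, cost)
    return best


# Toy worlds: outcome -1 = UCs led to fewer defectors, 0 = no significant
# difference, +1 = more defectors (coding is an illustrative assumption).
worlds = [
    {"b": 2, "k": 4}, {"b": 2, "k": 8}, {"b": 2, "k": 4}, {"b": 2, "k": 8},
    {"b": 10, "k": 4}, {"b": 10, "k": 8}, {"b": 10, "k": 4}, {"b": 10, "k": 8},
]
outcome = [1, 0, 0, 1, -1, -1, -1, 0]
print(best_split(worlds, outcome))  # → ('b', 2, 1.75)
```

In this toy example, splitting on b separates the worlds far better than splitting on k does (child SSE 1.75 versus 4.75). A predictor that is chosen near the root in the same way is what makes it look "most important" in a tree such as Figure 4.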

The first split of the tree is made according to parameter b. In the right branch of the tree, where the benefit of cooperation is high (), no other parameter helped to predict the effect of initial UCs. In  of all worlds in this branch, UCs had a negative effect on defectors (i.e., they led to more cooperation). In the other worlds in this branch, UCs had no significant effect. This effect seems robust, as it does not depend on any of the other parameters, such as the updating mechanism or k.

The left branch of the tree has a more complex structure. If the benefit of cooperation was rather low (), the effect of UCs on defectors depends mainly on the value of the parameter ; i.e., in these worlds, what mattered most was how many agents started as cooperators.

On the very left part of the tree (), there are only very few cooperators, and the benefit of cooperation is rather low. Here, UCs led to more defectors in 10% of all worlds and made no difference in the remaining 90%. We interpret this as follows: in worlds where the relative benefit of cooperation is small and cooperators are initially in the minority, UCs will, if they make any difference at all, benefit the evolution of defectors. The right branch of the tree, discussed above, contained worlds where the parameter values favored cooperation (). If the relative benefit of cooperation is high, UCs harm the evolution of defection and benefit cooperators.

The middle part of the tree () classifies worlds where the parameter settings are ambivalent towards cooperation. On the one hand, a large fraction of the population (at least 50%) initially consists of cooperators. On the other hand, the relative benefit of cooperation is small. Here, only k predicts whether UCs benefit or harm defectors. It seems that when there are only very few defectors (), UCs harm defectors when agents are highly connected (). In contrast, UCs have hardly any effect on defectors when connectedness is low. If there is a considerable number of defectors (), high connectedness makes the presence of UCs beneficial for defectors, but low connectedness makes UCs harmful to defectors. A possible explanation for this finding is that a low k leads to small isolated clusters of agents. Cooperators can better withstand invasion by defectors if these clusters contain few defectors, or have very few connections to other clusters with defectors. UCs “fortify” these clusters of cooperation against defection. If there are hardly any defectors, this isolation of cooperators from defectors neither helps nor harms overall cooperation. However, this part of the tree should be interpreted with caution. It seems to depend on a very specific initialization of parameters, and results in evolutionary games are known to be sensitive to the exact parametrization [40].
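The cluster argument can be illustrated by counting connected components in a random graph. Purely for illustration, the sketch below assumes an Erdős–Rényi graph G(n, p) whose average degree is k. The interaction graph in the paper's model may be generated differently. A union-find structure counts the components and the size of the largest one. The function names are hypothetical.

```python
import random


def find(parent, i):
    """Return the root of i, halving the path along the way."""
    while parent[i] != i:
        parent[i] = parent[parent[i]]
        i = parent[i]
    return i


def components(n, avg_degree, seed=0):
    """Count connected components of a G(n, p) graph with p = k / (n - 1).

    Requires n >= 2. Returns (number_of_components, largest_component_size).
    """
    rng = random.Random(seed)
    p = avg_degree / (n - 1)
    parent = list(range(n))
    for i in range(n):
        for j in range(i + 1, n):
            if rng.random() < p:  # edge (i, j) exists: merge the two sets
                ri, rj = find(parent, i), find(parent, j)
                if ri != rj:
                    parent[ri] = rj
    sizes = {}
    for i in range(n):
        root = find(parent, i)
        sizes[root] = sizes.get(root, 0) + 1
    return len(sizes), max(sizes.values())


for k in (0.5, 1.0, 4.0):
    count, largest = components(n=500, avg_degree=k)
    print(f"k = {k}: {count} clusters, largest has {largest} of 500 agents")
```

With a low average degree, the graph breaks into many small clusters that a few defectors cannot reach. With a higher average degree, almost all agents belong to one connected component.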

4.3. Robustness Check

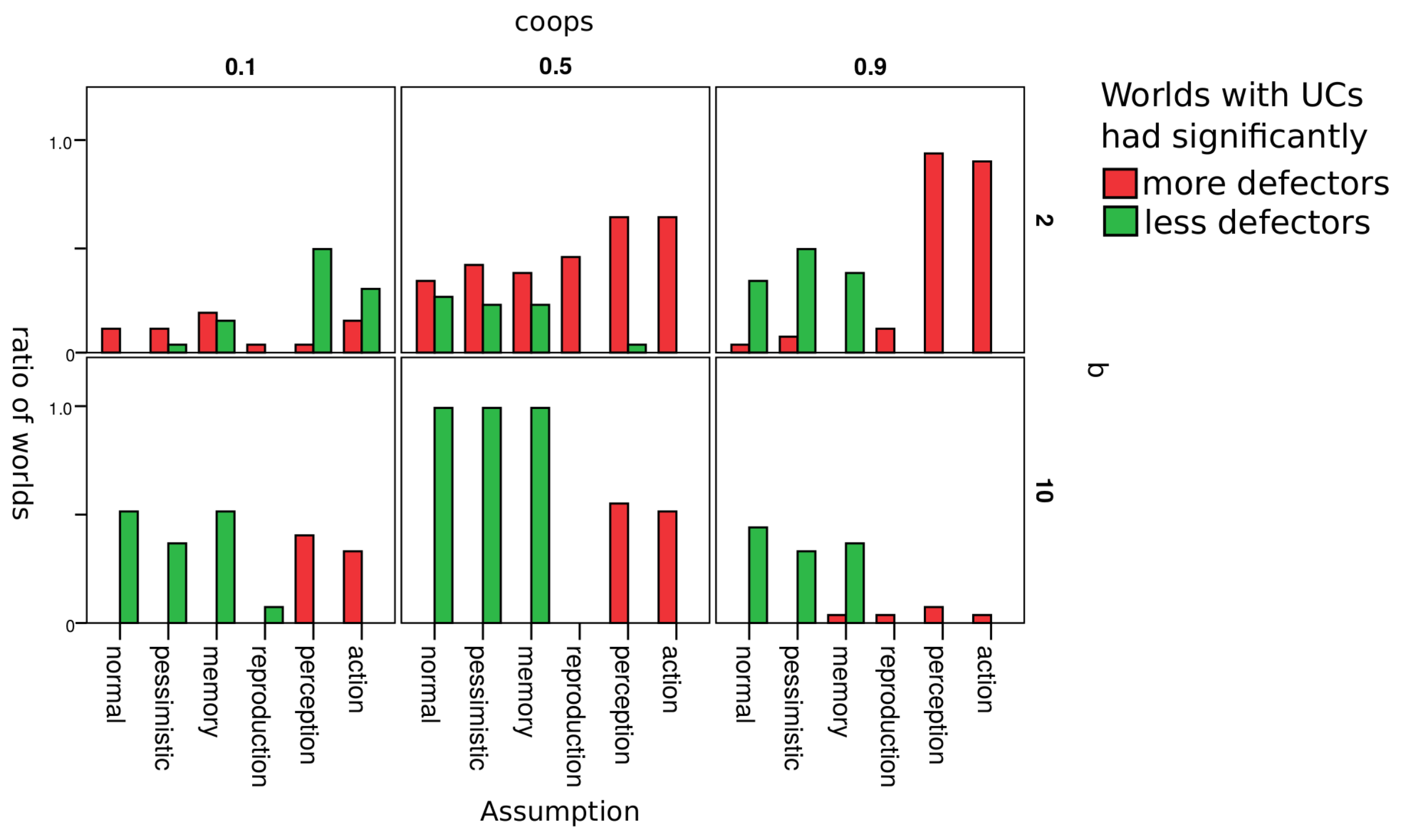

To check the robustness of our results, we changed various assumptions in our model. We then analyzed how each change affects the influence of UCs on the final ratio of defectors. For each changed assumption, we reran all worlds another 110 times. Table 4 lists and describes the changed assumptions, and Appendix C discusses the changes and their effects in detail.

Figure 5 gives a rough overview of how changing an assumption altered the influence of UCs on the ratio of defectors. Red bars indicate the number of worlds where UCs led to more defectors; green bars indicate the ratio of worlds where UCs led to fewer defectors. Each cell in the figure represents worlds with a unique combination of the parameters  and b. As discussed in the previous section, these turned out to be the most relevant parameters w.r.t. our research question.

Results are hardly affected by whether agents are optimistic or pessimistic about agents whose reputation they do not know. Results are also robust to whether an agent’s reputation depends only on its last action or on its last three actions (assumption memory). As soon as we introduced errors in reproduction, there were hardly any differences between worlds with and without initial UCs. We explain this by the fact that, with errors in reproduction, UCs can enter the population even if there initially were none: they appear as errors in reproduction. It thus does not seem to matter whether UCs are present from the beginning or enter the population later as mutants. The impact of UCs differs clearly from the baseline when agents make errors either in perceiving another agent's reputation or in choosing whether to defect or cooperate (action). With these two types of errors, worlds with conCoops = 0.8 never lead to fewer defectors and often lead to more defectors.
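Two of the changed assumptions concern how reputation is read. One is how many past actions count (memory). The other is what an agent assumes about others whose reputation it does not know (optimistic or pessimistic). The sketch below shows one plausible encoding of both, together with a perception error. It assumes an agent counts as "good" if it cooperated in the majority of its last `memory` recorded actions, and that a perceived reputation is flipped with probability `perception_error`. The majority rule, the class and function names and the error mechanism are illustrative assumptions, not the specification in Table 4.

```python
import random
from collections import deque


class Agent:
    """Agent whose reputation is derived from its recent actions."""

    def __init__(self, memory=1):
        # True = cooperated, False = defected; only the last `memory` kept.
        self.history = deque(maxlen=memory)

    def record(self, cooperated):
        self.history.append(cooperated)


def perceived_good(target, optimistic=True, perception_error=0.0, rng=random):
    """Return whether an observer perceives `target` as having a good reputation.

    Unknown reputation (empty history) is read as good if `optimistic`,
    otherwise as bad. With probability `perception_error` the perceived
    reputation is flipped.
    """
    if not target.history:
        good = optimistic
    else:
        # Majority rule over the remembered actions (an assumption).
        good = sum(target.history) * 2 > len(target.history)
    if rng.random() < perception_error:
        good = not good
    return good


a1, a3 = Agent(memory=1), Agent(memory=3)
for action in (True, True, False):  # cooperate, cooperate, then defect
    a1.record(action)
    a3.record(action)
print(perceived_good(a1), perceived_good(a3))  # → False True
print(perceived_good(Agent(), optimistic=False))  # → False
```

With one remembered action, a single defection immediately spoils the agent's reputation. With three, the same defection is outweighed by the two earlier cooperative acts.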

5. Discussion

We tested how the presence of a tiny fraction of unconditional cooperators (UCs) affects cooperation in a population where there are also conditional cooperators (CCs) and defectors. We introduced this “tiny fraction” of UCs by replacing 20% of the CCs in the initial population with UCs. The effect of unconditional cooperation in these populations is twofold: it allows defectors to benefit from cooperation in their interactions, and it stops the spread of distrust among CCs. These two mechanisms can, in part, explain our results. Which of the two effects dominates depends on the specific world in which agents interact.

In summary, we find that in worlds where gains from cooperation are high, unconditional cooperation (in small doses) helps sustain cooperation. In worlds where the gains from cooperation are small and where there are initially many defectors, UCs encourage defection. In worlds where cooperation is only mildly beneficial, but there are relatively few defectors, the effect of UCs on cooperation depends on the social structure, which defines interactions among agents.

One can think of unconditional cooperation as a form of strategic ignorance, i.e., ignoring free information about other agents. On that view, one would expect the strategy to open an agent to exploitation by defectors and thus to erode overall cooperation in the population. We find that this is not always the dominant effect. In our model, cooperation can even be boosted by the presence of a tiny fraction of unconditional cooperators (UCs). Under most of the parameter settings examined in our simulations, conditional cooperators (CCs) benefited more from the presence of UCs than defectors could gain by exploiting the naivete of UCs. However, this was not true for all parameter settings we examined: in about 8% of all cases, defectors benefited more from the presence of UCs.
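The “about 8%” can be traced back to the per-world comparison in Section 4.1, where UCs led to a higher ratio of defectors in 13 of 162 worlds. The sketch below tallies labelled per-world outcomes. The labels and the function `tally_worlds` are illustrative. It assumes that the worlds not counted as "more" (13) or "fewer" (69) showed no significant difference, giving 80 = 162 - 13 - 69.

```python
from collections import Counter


def tally_worlds(outcomes):
    """Summarise per-world test outcomes as counts and shares.

    outcomes: iterable of labels, one per world, e.g. "more" (UCs led to
    significantly more defectors), "fewer" (significantly fewer) or
    "n.s." (no significant difference). Labels are illustrative.
    """
    counts = Counter(outcomes)
    total = sum(counts.values())
    shares = {label: n / total for label, n in counts.items()}
    return counts, shares


# Reconstructs the reported per-world counts (80 = 162 - 13 - 69).
outcomes = ["more"] * 13 + ["fewer"] * 69 + ["n.s."] * 80
counts, shares = tally_worlds(outcomes)
for label in ("more", "fewer", "n.s."):
    print(f"{label:>5}: {counts[label]:3d} worlds ({shares[label]:.1%})")
#  more:  13 worlds (8.0%)
# fewer:  69 worlds (42.6%)
#  n.s.:  80 worlds (49.4%)
```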

It is obvious that unconditional cooperation can be exploited by defectors. However, it is easy to overlook the positive effects that unconditional cooperators can have on conditional cooperators. We think that the presence of unconditional cooperators has two opposing effects on the evolution of cooperation in a three-strategy setting with UCs, CCs and defectors. On the one hand, UCs benefit defectors, as any agent that cooperates with defectors increases the defectors’ fitness; CCs share with defectors less often, since they condition their cooperation. On the other hand, unconditional cooperators rebuild trust among CCs through the following mechanism. Defectors immediately receive a bad reputation, since they never cooperate. CCs meeting agents with a bad reputation also get a bad reputation, because they do not cooperate with those agents. Therefore, one defector can set off a chain of conditional cooperators getting a bad reputation. This dynamic can lead to a world with very little cooperation even when the initial ratio of defectors is rather low. In such worlds, defectors would have a higher average fitness, as they never “lose” fitness by sharing with others. Unconditional cooperators can stop this spread of bad reputation: because UCs always share, they never get a bad reputation, so CCs always share with UCs and thereby get a good reputation. As a consequence, more CCs cooperate with each other.
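The reputation chain described above can be traced in a toy example. The sketch makes three assumptions. Reputation follows a simple image-scoring rule: an agent's reputation is bad exactly when its last action was a defection. Encounters follow a fixed left-to-right order in which each agent donates (or not) to its left neighbour. Defectors are assumed to have defected once already. The encounter order and the function name `run_chain` are hypothetical. They serve only to show the mechanism, not to reproduce the paper's full model.

```python
def run_chain(strategies):
    """Let agent i act as donor toward agent i - 1, left to right.

    strategies: list of "D" (defector), "CC" (conditional cooperator) or
    "UC" (unconditional cooperator). Every agent starts with a good
    reputation; an agent's reputation becomes bad exactly when it defects
    and good when it cooperates (simple image-scoring assumption).
    Returns the list of final reputations (True = good).
    """
    good = [True] * len(strategies)
    # Assume the defector(s) have already acted once and defected.
    for i, s in enumerate(strategies):
        if s == "D":
            good[i] = False
    for i in range(1, len(strategies)):
        s, recipient_good = strategies[i], good[i - 1]
        if s == "D":
            cooperates = False
        elif s == "CC":
            cooperates = recipient_good  # cooperate only with good agents
        else:  # "UC"
            cooperates = True  # ignore the recipient's reputation
        good[i] = cooperates
    return good


print(run_chain(["D", "CC", "CC", "CC", "CC"]))
# → [False, False, False, False, False]
print(run_chain(["D", "CC", "UC", "CC", "CC"]))
# → [False, False, True, True, True]
```

Without a UC, the single defector's bad reputation is passed down the whole chain of CCs. With one UC in the chain, the spread stops there, and every CC after it keeps a good reputation.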

The model we presented in this paper is rather abstract and simplified. Nevertheless, some real-world phenomena share a dynamic similar to the one depicted in our model. Generally, our findings apply to “missing hero stories”, and we elaborate on this by looking at vaccination and herd immunity. Herd immunity against a disease can be seen as a public good: it is in the interest of society that a certain number of people are immunized to prevent the spread of diseases. The cost to each individual of getting vaccinated is the risk of rare adverse reactions to the vaccine. Obviously, this situation presents a social dilemma. Everybody benefits if all others are vaccinated, but each individual is better off not taking the risk of adverse reactions. One can view individuals who fundamentally resist vaccination as defectors. Most other people are conditional vaccinators, who get vaccinated if sufficiently many others are vaccinated. In this context, all people who get vaccinated irrespective of what others do can be seen as unconditional vaccinators. Defectors benefit from people who vaccinate unconditionally and thereby decrease the chances of an outbreak. Some cases correspond to worlds in our model where b is high: an outbreak is imminent for the population. In those cases, it is crucial that conditional vaccinators observe others getting vaccinated, so that they get vaccinated themselves. Hence, the benefits of rebuilding trust dominate the disadvantages of supporting defectors.

Unconditional cooperation on its own should not be deemed immediately disadvantageous for the individual agent or for the population. There is merit in ignoring another agent’s history of defection and in acting in good will in spite of that agent’s consistent betrayal. Whether unconditional cooperation turns out to be advantageous depends on factors such as the social structure and the ratios at which other strategies are present in the population. Most importantly, it depends on the gains from cooperating.