Application of Artificial Neural Networks for Catalysis: A Review

Abstract

1. Introduction

2. Principle of ANN

2.1. Schematic Structure of an ANN

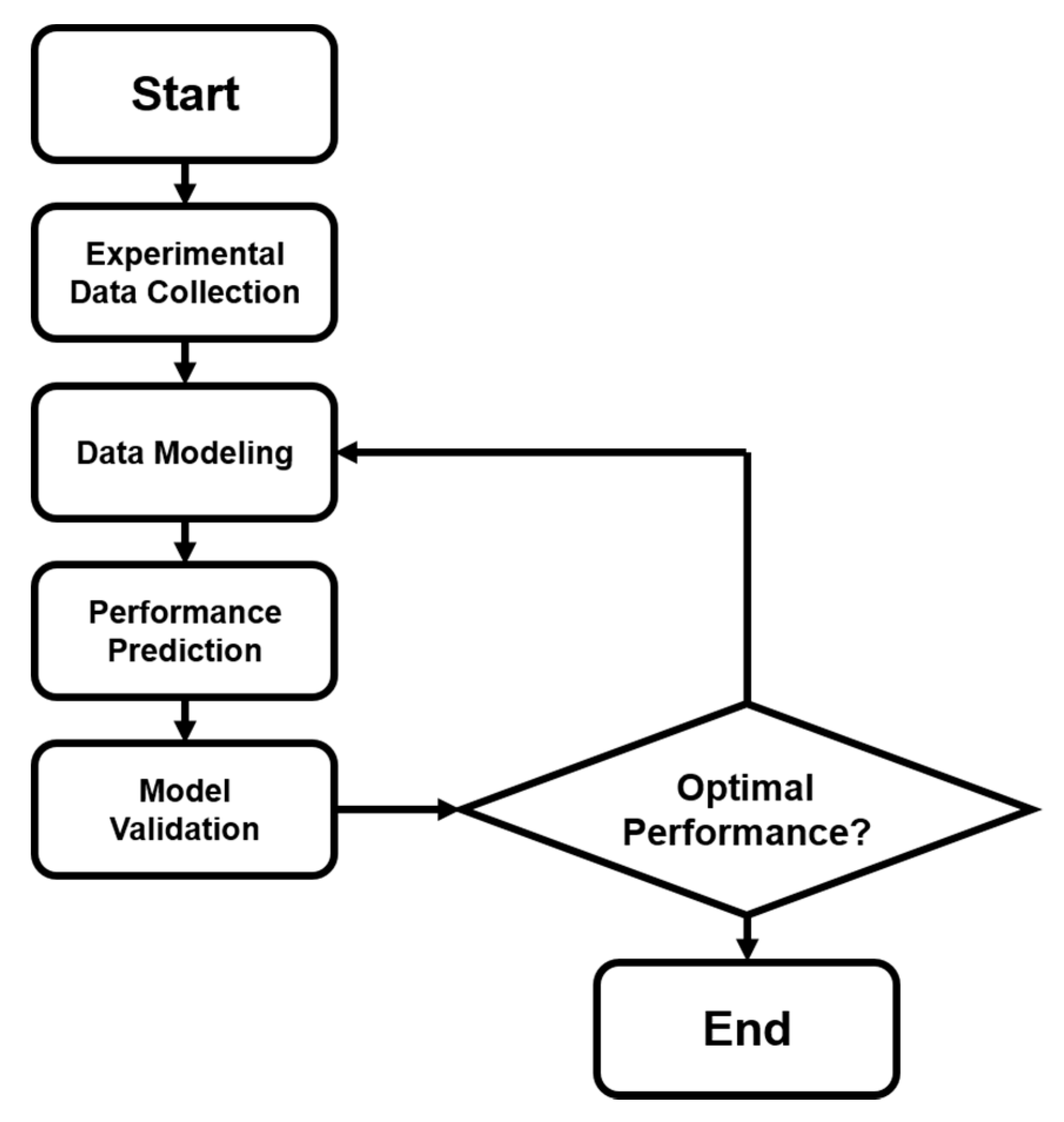

2.2. Model Development

2.2.1. Model Training

2.2.2. Model Testing

3. Applications of ANN for Catalysis: Experiment

3.1. Prediction of Catalytic Activity

3.2. Optimization of Catalysis

4. Applications of ANN for Catalysis: Theory

4.1. Prediction of Reaction Descriptors

4.2. Prediction of Potential Energy Surface

5. Remarks and Prospects

- (1)

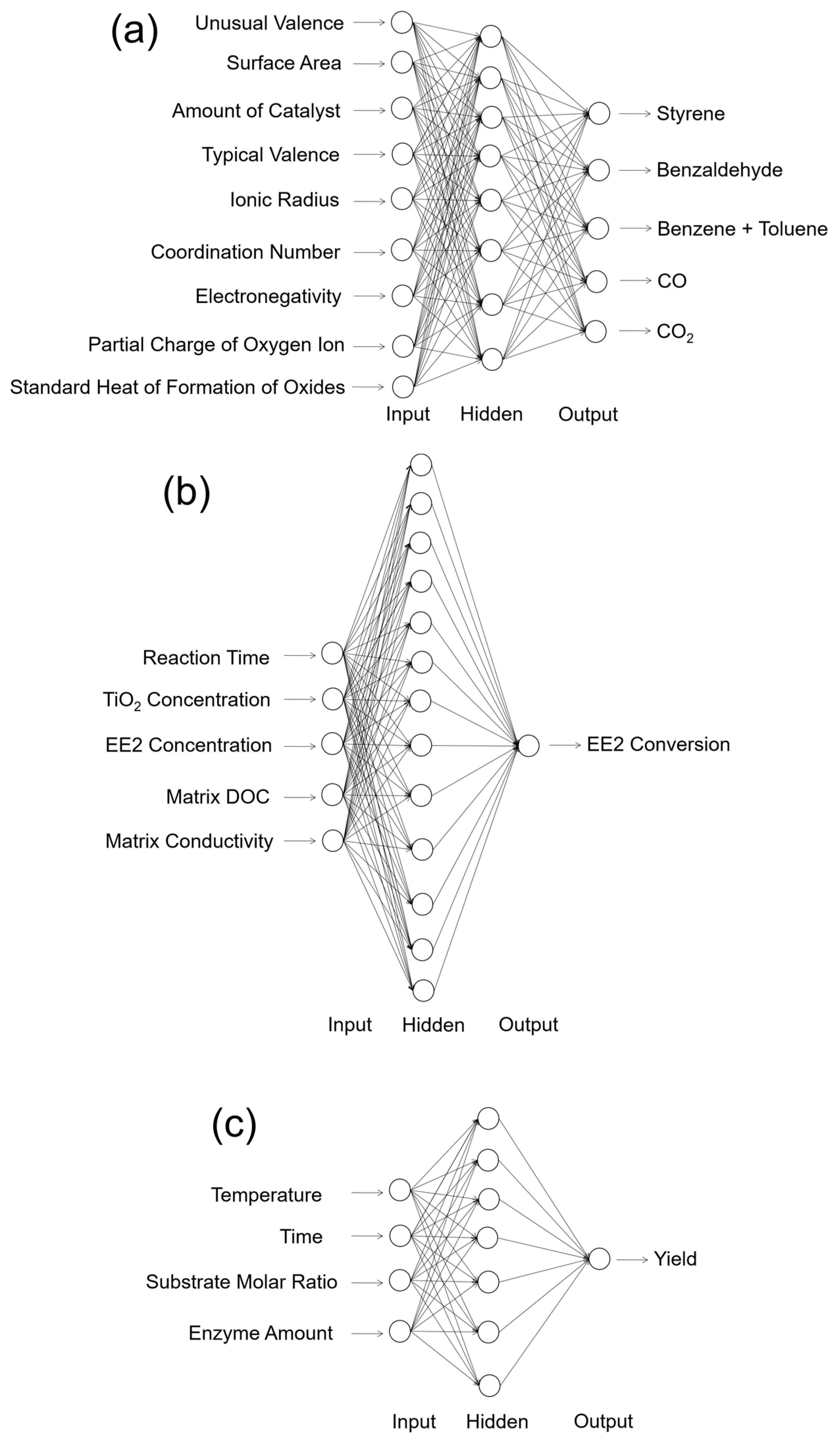

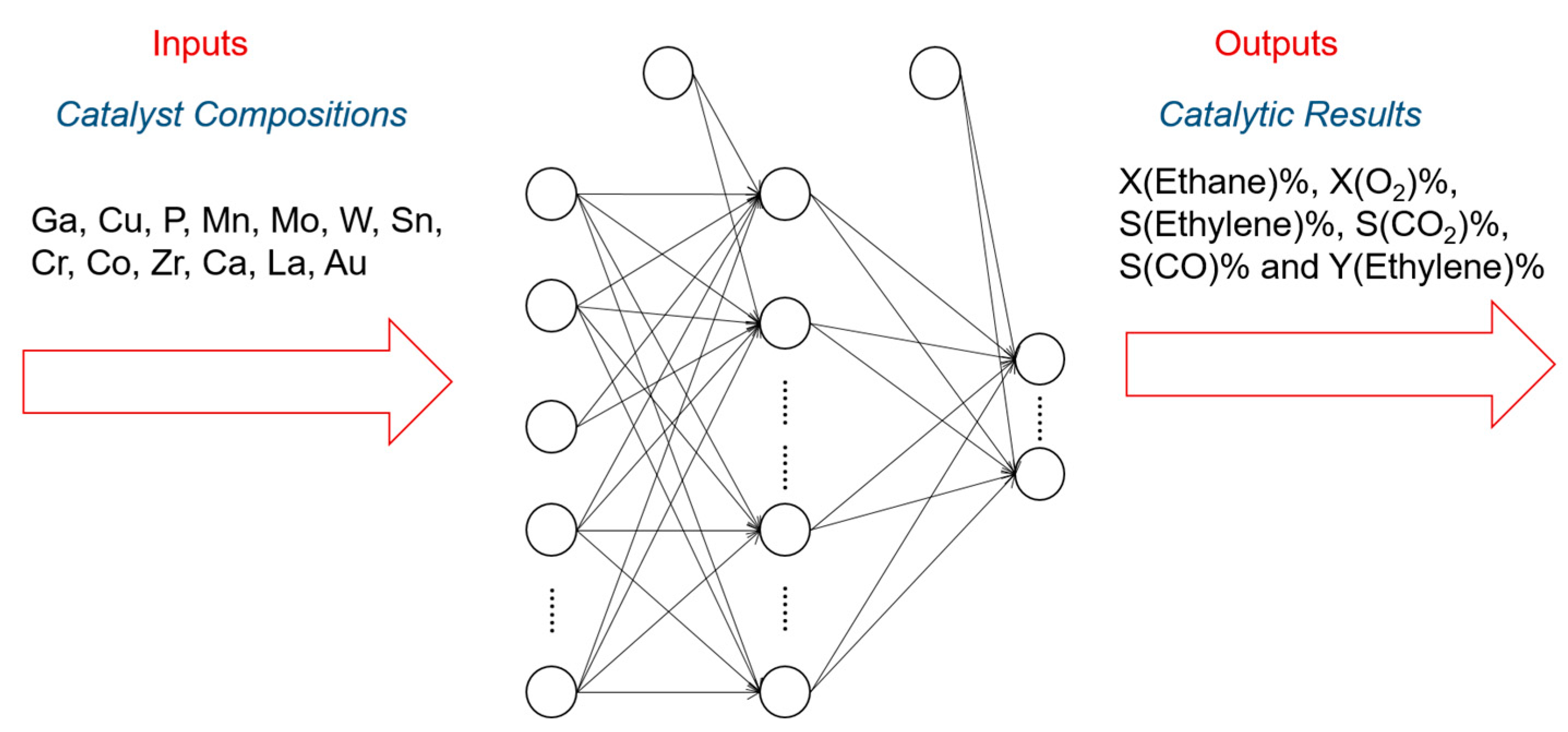

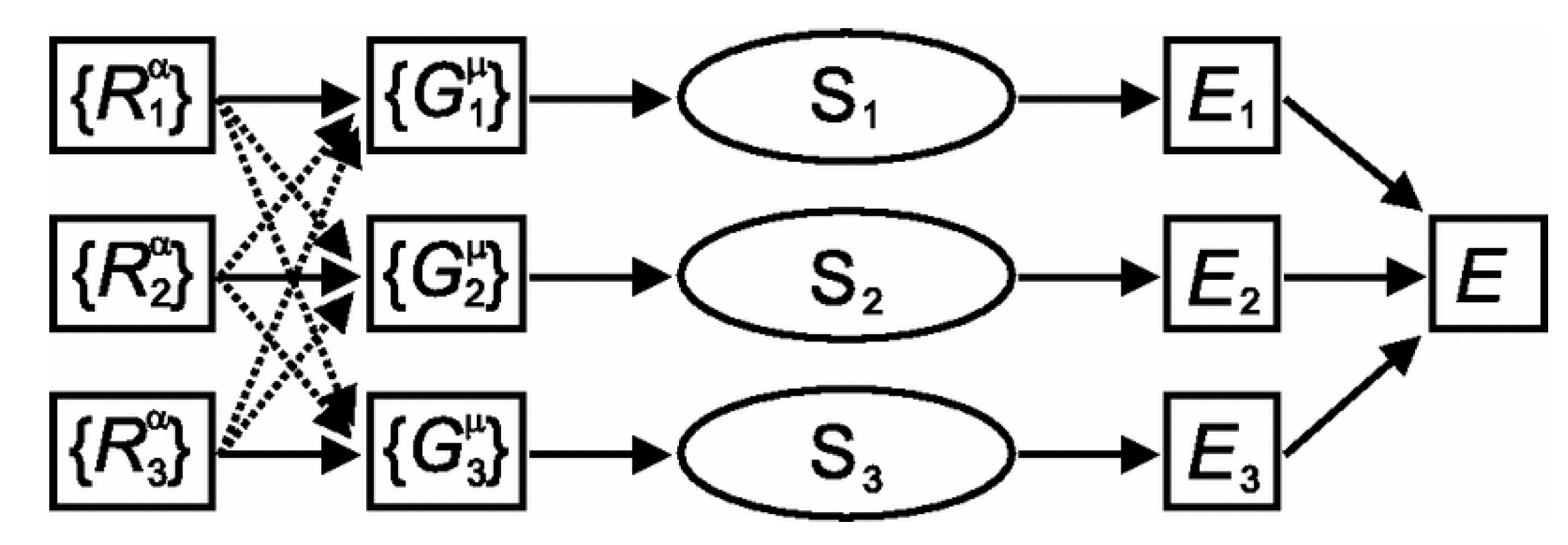

- As the most straightforward application, ANN has been widely used for the prediction of catalytic performance during the past two decades. Though there are various relevant studies, the motifs are quite similar: setting the experimental conditions and/or the properties of the catalytic system as the inputs, and the catalytic activities as the output of the model. Figure 2 summarizes three typical examples of such an application. It shows that the number of output variables can be more than one. That means an ANN with a sufficiently large database is able to perform multiple outputs to predict the product distribution and reaction selectivity.

- (2) In the catalysis community, the optimization and design of catalysts are usually more important than prediction alone. In addition to predicting catalytic activities, some studies generated new input combinations for a well-trained ANN model and obtained the predicted output activities. For generating new input combinations, GA is the most popular and (so far) the most successful strategy, and it is also less time-consuming. It is expected that, in addition to the GA method, machine learning-assisted HTS can be more efficient for generating inputs in future studies [37,104].

- (3)

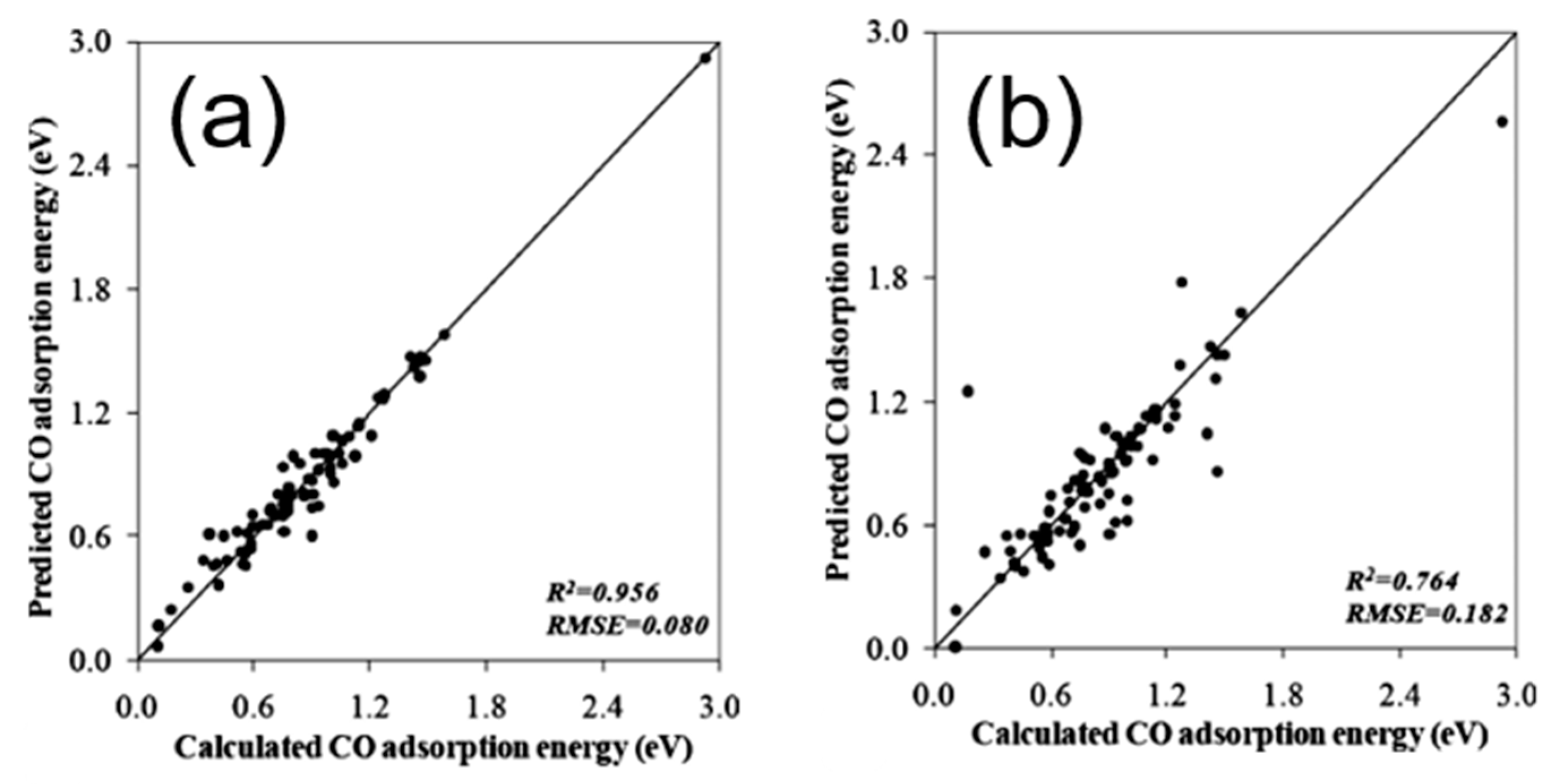

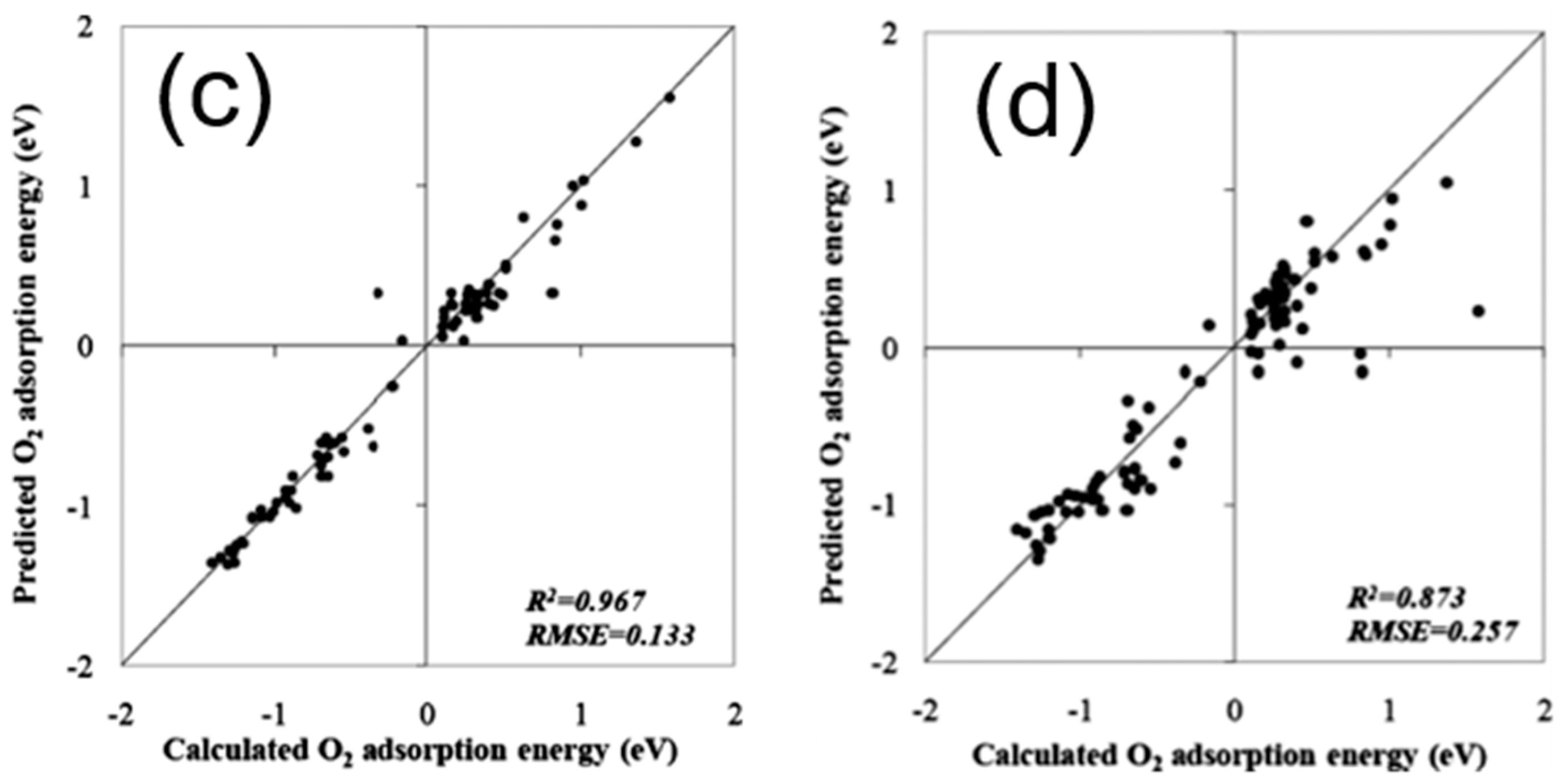

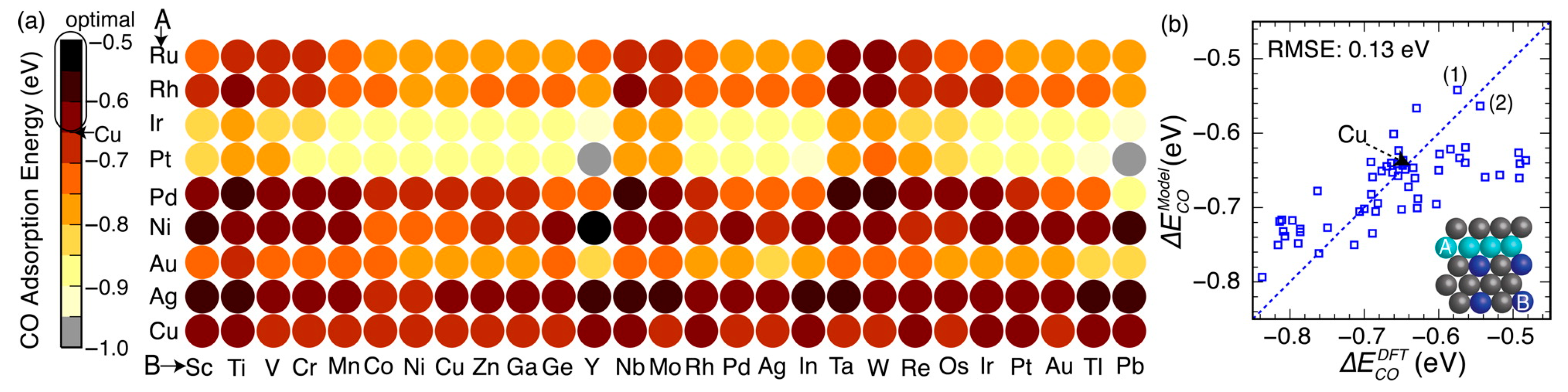

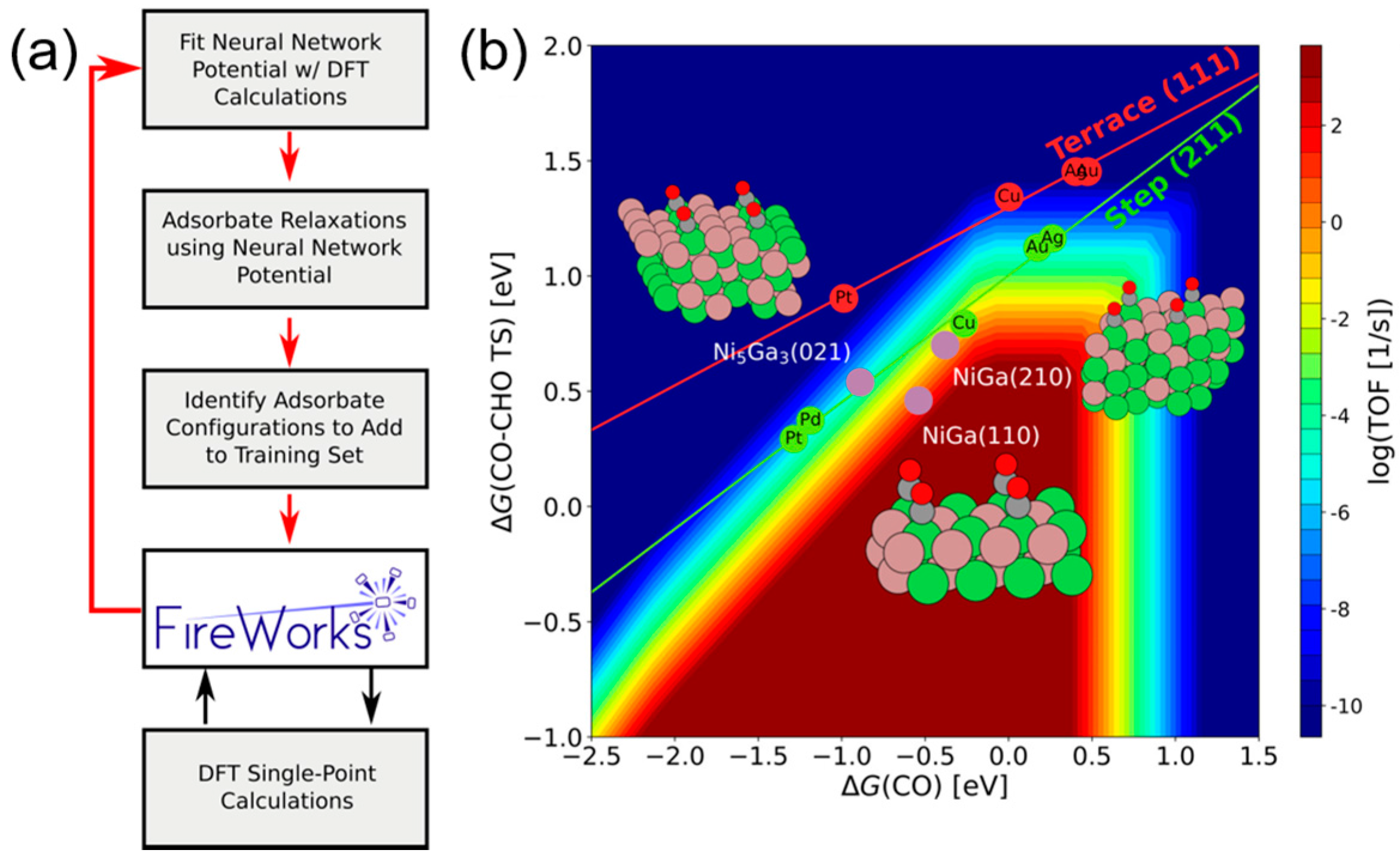

- In terms of the theoretical catalysis study, ANN has proven to be a good tool for catalytic descriptor prediction (e.g., binding energy of adsorbate on a catalytic surface). Based on a DFT-calculated database with proper independent variables as the inputs, ANN is able to “learn” the highly-complicated intrinsic properties via a non-linear fitting process. Several successful studies such as the research done by Ulissi and Nørskov et al. [84] have shown that machine learning can be a good choice to reduce the computational cost of theoretical catalysis study, and meanwhile, provides precise and ultra-fast screening for new catalyst discovery.

- (4)

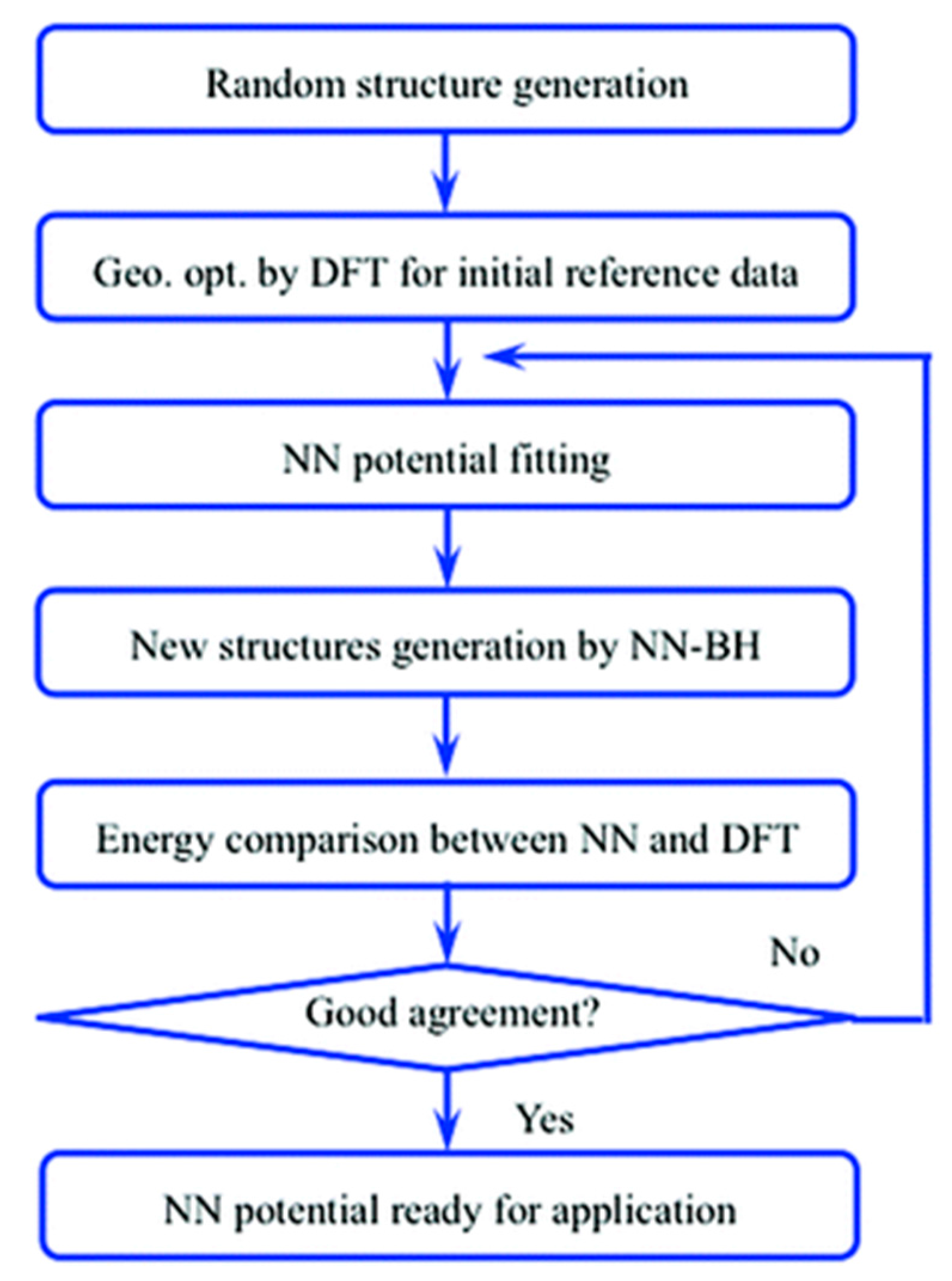

- To theoretically study the PES for a catalytic system and perform global optimizations, ANN has shown its capacity for mapping the PES for a specific reaction and/or a specific type of catalyst, combined with the MC methods. To reduce the inputs, Behler and Parrinello [92] developed a new ANN representation for atomic systems, which dramatically reduces the number of inputs for network training and saves much time and manpower. It is expected that this method would be more widely used for discovering the favorable structures of the catalytic systems (especially the metallic catalytic systems) and screening good potential catalysts.

- (5) It should be noted that although the development of machine learning has boosted ANN applications in both experimental and theoretical catalysis studies, many previous studies failed to use an appropriate training-and-testing method. Many of them did not optimize the ANN structure before using the model for further applications. This practice is incorrect and could be risky for real applications. To define the optimal ANN structure, different numbers of hidden neurons and (even) hidden layers should be tried over multiple rounds of training and testing. Failing to do so carries a severe risk of under- or over-fitting.
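The structure search described in point (5) can be illustrated with a minimal sketch. It is not taken from any of the reviewed studies: the tiny one-hidden-layer network, the synthetic data, the candidate sizes, the learning rate, and the fold scheme are all assumptions chosen only to keep the example self-contained. The idea is simply to train each candidate structure on part of the data, test it on the held-out part, and keep the structure with the lowest averaged test RMSE.

```python
import math
import random


def train_mlp(xs, ys, n_hidden, epochs=300, lr=0.02, seed=0):
    """Train a 1-input, 1-output network with one tanh hidden layer by SGD."""
    rng = random.Random(seed)
    w1 = [rng.uniform(-1, 1) for _ in range(n_hidden)]  # input -> hidden weights
    b1 = [rng.uniform(-1, 1) for _ in range(n_hidden)]  # hidden biases
    w2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]  # hidden -> output weights
    b2 = 0.0                                            # output bias
    for _ in range(epochs):
        for x, y in zip(xs, ys):
            h = [math.tanh(w1[j] * x + b1[j]) for j in range(n_hidden)]
            err = sum(w2[j] * h[j] for j in range(n_hidden)) + b2 - y
            for j in range(n_hidden):
                # Back-propagate the error through the tanh activation.
                grad_z = err * w2[j] * (1.0 - h[j] ** 2)
                w2[j] -= lr * err * h[j]
                w1[j] -= lr * grad_z * x
                b1[j] -= lr * grad_z
            b2 -= lr * err
    return w1, b1, w2, b2


def predict(model, x):
    w1, b1, w2, b2 = model
    return sum(w2[j] * math.tanh(w1[j] * x + b1[j]) for j in range(len(w1))) + b2


def rmse(model, xs, ys):
    squared = [(predict(model, x) - y) ** 2 for x, y in zip(xs, ys)]
    return math.sqrt(sum(squared) / len(squared))


def cross_validated_rmse(xs, ys, n_hidden, k=5):
    """Average test RMSE over k folds (every k-th sample is held out)."""
    scores = []
    for fold in range(k):
        train = [(x, y) for i, (x, y) in enumerate(zip(xs, ys)) if i % k != fold]
        test = [(x, y) for i, (x, y) in enumerate(zip(xs, ys)) if i % k == fold]
        model = train_mlp([x for x, _ in train], [y for _, y in train], n_hidden)
        scores.append(rmse(model, [x for x, _ in test], [y for _, y in test]))
    return sum(scores) / k


def select_hidden_neurons(xs, ys, candidates=(1, 2, 4, 8)):
    """Return the candidate hidden-layer size with the lowest averaged test RMSE."""
    results = {n: cross_validated_rmse(xs, ys, n) for n in candidates}
    best = min(results, key=results.get)
    return best, results


# Synthetic stand-in for an experimental or DFT database (assumption).
noise = random.Random(1)
xs = [-2.0 + 4.0 * i / 39 for i in range(40)]
ys = [math.sin(2.0 * x) + noise.gauss(0.0, 0.05) for x in xs]

best, results = select_hidden_neurons(xs, ys)
for n, score in results.items():
    print(f"hidden neurons = {n}: averaged test RMSE = {score:.4f}")
print("selected structure:", best, "hidden neurons")
```

A test error that stays high for every candidate suggests under-fitting, whereas a test error that rises again as neurons are added suggests over-fitting. The same loop can be extended to the number of hidden layers.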

- (1) So far, most of the relevant studies have used a conventional ANN (e.g., BPNN). However, with the development of machine learning, conventional ANNs are sometimes no longer the best choice. For example, when fitting the PES of a metallic cluster, a BPNN with regular activation functions sometimes cannot provide a smooth fit, leading to incorrect forces. The training time required by a conventional ANN is another challenge: with a larger database and a higher number of hidden neurons and layers, training takes much longer, which in turn makes it harder to find the optimal ANN structure. In fact, with recent algorithm developments, there are many machine learning methods that are sometimes more precise and much faster than the conventional ANN (e.g., GRNN, support vector machine (SVM) [105], and ELM [31]). Several comparative studies have examined the speed and accuracy of different algorithms [18,34,35]. It is expected that an increasing number of machine learning algorithms will be applied to catalysis studies in the future.

- (2) Similarly, with the rapid development of big data analysis and deep learning techniques [32], it is expected that these techniques could be widely applied to catalytic activity prediction and global structural optimization of catalytic systems. Although, so far, only a few relevant studies have focused on deep learning techniques (e.g., Zhai et al. [100]), more applied studies should emerge in the near future. It is also expected that some of the current challenges, such as CO2 electroreduction selectivity and machine learning-assisted MD simulations, could be well addressed and understood by state-of-the-art data-mining analysis and deep learning techniques.

- (3) Compared to other research areas, applications of machine learning in the catalysis community are still not popular and not well studied. The main reasons include: (i) acquiring the original database for model training is expensive; (ii) too many input variables have to be considered for model training; and (iii) there is a lack of user-friendly platforms. The first two points can be addressed by the development of experimental and computational techniques and devices. The development of good atomic representations can also help reduce the input variables. As for the third point, although some chemical packages can help speed up machine learning fitting, very few of them are effective and user-friendly enough, owing to the complexity of chemical systems. It is expected that future interdisciplinary studies will help address this issue and that more well-developed software platforms will be provided for more complicated catalytic studies.
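To make the remark about atomic representations more concrete, the following is a minimal sketch of a radial symmetry function of the kind used in Behler–Parrinello-type descriptors [92]. It maps the neighborhood of one atom to a short, fixed-length vector that does not change when the structure is translated, rotated, or when neighbors are re-ordered. The function names, the parameter values (`etas`, `r_s`, `r_c`), and the three-atom example are assumptions made for illustration; production packages use larger sets of radial and angular functions.

```python
import math


def cutoff(r, r_c):
    """Cosine cutoff: decays smoothly from 1 at r = 0 to 0 at r = r_c."""
    if r >= r_c:
        return 0.0
    return 0.5 * (math.cos(math.pi * r / r_c) + 1.0)


def radial_symmetry_function(positions, i, eta, r_s, r_c):
    """Sum over neighbors j of exp(-eta * (R_ij - r_s)^2) * f_c(R_ij)."""
    total = 0.0
    for j, other in enumerate(positions):
        if j == i:
            continue
        r = math.dist(positions[i], other)  # interatomic distance R_ij
        total += math.exp(-eta * (r - r_s) ** 2) * cutoff(r, r_c)
    return total


def fingerprint(positions, i, etas=(0.5, 1.0, 2.0), r_s=0.0, r_c=6.0):
    """Fixed-length descriptor of atom i, one entry per eta value (illustrative)."""
    return [radial_symmetry_function(positions, i, eta, r_s, r_c) for eta in etas]


# Hypothetical three-atom cluster (coordinates in arbitrary length units).
cluster = [(0.0, 0.0, 0.0), (2.5, 0.0, 0.0), (1.25, 2.1, 0.0)]
for index in range(len(cluster)):
    print(index, [round(value, 4) for value in fingerprint(cluster, index)])
```

Because the descriptor length depends only on the number of chosen parameter sets and not on the number of atoms, the same network can be trained on structures of different sizes.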

Acknowledgments

Author Contributions

Conflicts of Interest

Nomenclature

| Abbreviation | Definition |
| --- | --- |
| AI | artificial intelligence |
| IT | information technology |
| ANN | artificial neural network |
| BPNN | back-propagation neural network |
| GRNN | general regression neural network |
| ELM | extreme learning machine |
| DNN | deep neural network |
| RMSE | root mean square error |
| EE2 | 17α-ethynylestradiol |
| DOC | dissolved organic carbon |
| ODHE | oxidative dehydrogenation of ethane |
| GA | genetic algorithm |
| AC | active carbon |
| HTS | high-throughput screening |
| WGS | water-gas shift |
| RSM | response surface methodology |
| DFT | density functional theory |
| MD | molecular dynamics |
| TST | transition state theory |
| MC | Monte Carlo |
| PES | potential energy surface |
| BH | basin hopping |
| Amp | atomistic machine-learning package |
| SVM | support vector machine |

References

- Witten, I.H.; Frank, E. Data Mining: Practical Machine Learning Tools and Techniques; Morgan Kaufmann: Burlington, MA, USA, 2005.

- Mair, C.; Kadoda, G.; Le, M.; Phalp, K.; Scho, C.; Shepperd, M.; Webster, S. An investigation of machine learning based prediction systems. J. Syst. Softw. 2000, 53, 23–29.

- Kotsiantis, S.B. Supervised Machine Learning: A Review of Classification Techniques. Informatica 2007, 31, 249–268.

- Carpenter, G.A.; Grossberg, S.; Reynolds, J.H. ARTMAP: Supervised real-time learning and classification of nonstationary data by a self-organizing neural network. Neural Netw. 1991, 4, 565–588.

- Bishop, C.M. Pattern Recognition and Machine Learning; Springer: Berlin, Germany, 2006; Volume 4.

- Raudys, S.J.; Jain, A.K. Small sample size effects in statistical pattern recognition: Recommendations for practitioners. IEEE Trans. Pattern Anal. Mach. Intell. 1991, 13, 252–264.

- Chen, F.; Li, H.; Xu, Z.; Hou, S.; Yang, D. User-friendly optimization approach of fed-batch fermentation conditions for the production of iturin A using artificial neural networks and support vector machine. Electron. J. Biotechnol. 2015, 18.

- Sommer, C.; Gerlich, D.W. Machine learning in cell biology – Teaching computers to recognize phenotypes. J. Cell Sci. 2013, 126, 5529–5539.

- Tarca, A.L.; Carey, V.J.; Chen, X.; Romero, R.; Drăghici, S. Machine Learning and Its Applications to Biology. PLoS Comput. Biol. 2007, 3, e116.

- Wernick, M.; Yang, Y.; Brankov, J.; Yourganov, G.; Strother, S. Machine learning in medical imaging. IEEE Signal Proc. Mag. 2010, 27, 25–38.

- Khan, J.; Wei, J.S.; Ringnér, M.; Saal, L.H.; Ladanyi, M.; Westermann, F.; Berthold, F.; Schwab, M.; Antonescu, C.R.; Peterson, C.; et al. Classification and diagnostic prediction of cancers using gene expression profiling and artificial neural networks. Nat. Med. 2001, 7, 673–679.

- Deo, R.C. Machine learning in medicine. Circulation 2015, 132, 1920–1930.

- Kalogirou, S. Applications of artificial neural networks in energy systems. Energy Convers. Manag. 1999, 40, 1073–1087.

- Sanaye, S.; Asgari, H. Thermal modeling of gas engine driven air to water heat pump systems in heating mode using genetic algorithm and Artificial Neural Network methods. Int. J. Refrig. 2013, 36, 2262–2277.

- Kalogirou, S.A.; Panteliou, S.; Dentsoras, A. Artificial neural networks used for the performance prediction of a thermosiphon solar water heater. Renew. Energy 1999, 18, 87–99.

- Liu, Z.; Liu, K.; Li, H.; Zhang, X.; Jin, G.; Cheng, K. Artificial Neural Networks-Based Software for Measuring Heat Collection Rate and Heat Loss Coefficient of Water-in-Glass Evacuated Tube Solar Water Heaters. PLoS ONE 2015, 10, e0143624.

- Liu, Z.; Li, H.; Tang, X.; Zhang, X.; Lin, F.; Cheng, K. Extreme learning machine: A new alternative for measuring heat collection rate and heat loss coefficient of water-in-glass evacuated tube solar water heaters. Springerplus 2016, 5.

- Liu, Z.; Li, H.; Zhang, X.; Jin, G.; Cheng, K. Novel method for measuring the heat collection rate and heat loss coefficient of water-in-glass evacuated tube solar water heaters based on artificial neural networks and support vector machine. Energies 2015, 8, 8814–8834.

- Jung, H.C.; Kim, J.S.; Heo, H. Prediction of building energy consumption using an improved real coded genetic algorithm based least squares support vector machine approach. Energy Build. 2015, 90, 76–84.

- Gardner, M.; Dorling, S. Artificial neural networks (the multilayer perceptron) – A review of applications in the atmospheric sciences. Atmos. Environ. 1998, 32, 2627–2636.

- Liu, Z.; Li, H.; Cao, G. Quick Estimation Model for the Concentration of Indoor Airborne Culturable Bacteria: An Application of Machine Learning. Int. J. Environ. Res. Public Health 2017, 14, 857.

- Recknagel, F. Applications of machine learning to ecological modelling. Ecol. Modell. 2001, 146, 303–310.

- Peng, H.; Ling, X. Optimal design approach for the plate-fin heat exchangers using neural networks cooperated with genetic algorithms. Appl. Therm. Eng. 2008, 28, 642–650.

- Kalogirou, S.A. Designing and Modeling Solar Energy Systems. In Solar Energy Engineering; Academic Press: Cambridge, MA, USA, 2014; pp. 583–699.

- Liu, Z.; Li, H.; Liu, K.; Yu, H.; Cheng, K. Design of high-performance water-in-glass evacuated tube solar water heaters by a high-throughput screening based on machine learning: A combined modeling and experimental study. Sol. Energy 2017.

- Gao, J.; Jamidar, R. Machine Learning Applications for Data Center Optimization. Google White Pap. 2014, 1–13.

- Li, M. Scaling Distributed Machine Learning with the Parameter Server. In Proceedings of the 2014 International Conference on Big Data Science and Computing – BigDataScience’14, Broomfield, CO, USA, 6–8 October 2014.

- Hopfield, J.J. Artificial neural networks. IEEE Circuits Devices Mag. 1988, 4, 3–10.

- Nawi, N.M.; Khan, A.; Rehman, M.Z. A New Back-Propagation Neural Network Optimized. ICCSA 2013, 2013, 413–426.

- Specht, D.F. A general regression neural network. IEEE Trans. Neural Netw. 1991, 2, 568–576.

- Huang, G.; Huang, G.B.; Song, S.; You, K. Trends in extreme learning machines: A review. Neural Netw. 2015, 61, 32–48.

- Schmidhuber, J. Deep Learning in neural networks: An overview. Neural Netw. 2015, 61, 85–117.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Adv. Neural Inf. Process. Syst. 2012, 1106–1114.

- Li, H.; Tang, X.; Wang, R.; Lin, F.; Liu, Z.; Cheng, K. Comparative Study on Theoretical and Machine Learning Methods for Acquiring Compressed Liquid Densities of 1,1,1,2,3,3,3-Heptafluoropropane (R227ea) via Song and Mason Equation, Support Vector Machine, and Artificial Neural Networks. Appl. Sci. 2016, 6, 25.

- Li, H.; Chen, F.; Cheng, K.; Zhao, Z.; Yang, D. Prediction of Zeta Potential of Decomposed Peat via Machine Learning: Comparative Study of Support Vector Machine and Artificial Neural Networks. Int. J. Electrochem. Sci. 2015, 10, 6044–6056.

- Paxton, A.T.; Gumbsch, P.; Methfessel, M. A quantum mechanical calculation of the theoretical strength of metals. Philos. Mag. Lett. 1991, 63, 267–274.

- Li, H.; Liu, Z.; Liu, K.; Zhang, Z. Predictive Power of Machine Learning for Optimizing Solar Water Heater Performance: The Potential Application of High-Throughput Screening. Int. J. Photoenergy 2017.

- Huang, G.-B.; Zhu, Q.-Y.; Siew, C.-K. Extreme learning machine: Theory and applications. Neurocomputing 2006, 70, 489–501.

- Browne, M.W. Cross-Validation Methods. J. Math. Psychol. 2000, 44, 108–132.

- Tetko, I.V.; Livingstone, D.J.; Luik, A.I. Neural Network Studies. 1. Comparison of Overfitting and Overtraining. J. Chem. Inf. Comput. Sci. 1995, 35, 826–833.

- Kito, S.; Hattori, T.; Murakami, Y. Estimation of catalytic performance by neural network – Product distribution in oxidative dehydrogenation of ethylbenzene. Appl. Catal. A Gen. 1994, 114.

- Sasaki, M.; Hamada, H.; Kintaichi, Y.; Ito, T. Application of a neural network to the analysis of catalytic reactions Analysis of NO decomposition over Cu/ZSM-5 zeolite. Appl. Catal. A Gen. 1995, 132, 261–270.

- Mohammed, M.L.; Patel, D.; Mbeleck, R.; Niyogi, D.; Sherrington, D.C.; Saha, B. Optimisation of alkene epoxidation catalysed by polymer supported Mo(VI) complexes and application of artificial neural network for the prediction of catalytic performances. Appl. Catal. A Gen. 2013, 466, 142–152.

- Frontistis, Z.; Daskalaki, V.M.; Hapeshi, E.; Drosou, C.; Fatta-Kassinos, D.; Xekoukoulotakis, N.P.; Mantzavinos, D. Photocatalytic (UV-A/TiO2) degradation of 17α-ethynylestradiol in environmental matrices: Experimental studies and artificial neural network modeling. J. Photochem. Photobiol. A Chem. 2012, 240, 33–41.

- Abdul Rahman, M.B.; Chaibakhsh, N.; Basri, M.; Salleh, A.B.; Abdul Rahman, R.N.Z.R. Application of artificial neural network for yield prediction of lipase-catalyzed synthesis of dioctyl adipate. Appl. Biochem. Biotechnol. 2009, 158, 722–735.

- Günay, M.E.; Yildirim, R. Neural network analysis of selective CO oxidation over copper-based catalysts for knowledge extraction from published data in the literature. Ind. Eng. Chem. Res. 2011, 50.

- Raccuglia, P.; Elbert, K.C.; Adler, P.D.F.; Falk, C.; Wenny, M.B.; Mollo, A.; Zeller, M.; Friedler, S.A.; Schrier, J.; Norquist, A.J. Machine-learning-assisted materials discovery using failed experiments. Nature 2016, 533, 73–76.

- Maldonado, A.G.; Rothenberg, G. Predictive modeling in catalysis – From dream to reality. Chem. Eng. Prog. 2009, 105, 26–32.

- Corma, A.; Serra, J.M.; Argente, E.; Botti, V.; Valero, S. Application of artificial neural networks to combinatorial catalysis: Modeling and predicting ODHE catalysts. ChemPhysChem 2002, 3, 939–945.

- Kumar, M.; Husian, M.; Upreti, N.; Gupta, D. Genetic Algorithm: Review and Application. Int. J. Inf. Technol. Knowl. Manag. 2010, 2, 451–454.

- Yamada, M.; Omata, K. Prediction of Effective Additives to a Ni/Active Carbon Catalyst for Vapor-Phase Carbonylation of Methanol by an Artificial Neural Network. Ind. Eng. Chem. Res. 2004, 43, 6622–6625.

- Hou, Z.-Y.; Dai, Q.; Wu, X.-Q.; Chen, G.-T. Artificial neural network aided design of catalyst for propane ammoxidation. Appl. Catal. A Gen. 1997, 161, 183–190.

- Zhao, Y.; Cundari, T.; Deng, J. Design of a Propane Ammoxidation Catalyst Using Artificial Neural Networks and Genetic Algorithms. Ind. Eng. Chem. Res. 2001, 40, 5475–5480.

- Umegaki, T.; Watanabe, Y.; Nukui, N.; Omata, K.; Yamada, M. Optimization of catalyst for methanol synthesis by a combinatorial approach using a parallel activity test and genetic algorithm assisted by a neural network. Energy Fuels 2003, 17, 850–856.

- Rodemerck, U.; Baerns, M.; Holena, M.; Wolf, D. Application of a genetic algorithm and a neural network for the discovery and optimization of new solid catalytic materials. Appl. Surf. Sci. 2004, 223, 168–174.

- Baumes, L.; Farrusseng, D.; Lengliz, M.; Mirodatos, C. Using artificial neural networks to boost high-throughput discovery in heterogeneous catalysis. QSAR Comb. Sci. 2004, 23, 767–778.

- Kasiri, M.B.; Aleboyeh, H.; Aleboyeh, A. Modeling and optimization of heterogeneous photo-fenton process with response surface methodology and artificial neural networks. Environ. Sci. Technol. 2008, 42, 7970–7975.

- Basri, M.; Rahman, R.N.Z.R.A.; Ebrahimpour, A.; Salleh, A.B.; Gunawan, E.R.; Rahman, M.B.A. Comparison of estimation capabilities of response surface methodology (RSM) with artificial neural network (ANN) in lipase-catalyzed synthesis of palm-based wax ester. BMC Biotechnol. 2007, 7, 53.

- Prakash Maran, J.; Priya, B. Comparison of response surface methodology and artificial neural network approach towards efficient ultrasound-assisted biodiesel production from muskmelon oil. Ultrason. Sonochem. 2015, 23, 192–200.

- Betiku, E.; Taiwo, A.E. Modeling and optimization of bioethanol production from breadfruit starch hydrolyzate vis-a-vis response surface methodology and artificial neural network. Renew. Energy 2015, 74, 87–94.

- Talebian-Kiakalaieh, A.; Amin, N.A.S.; Zarei, A.; Noshadi, I. Transesterification of waste cooking oil by heteropoly acid (HPA) catalyst: Optimization and kinetic model. Appl. Energy 2013, 102, 283–292.

- Rajković, K.M.; Avramović, J.M.; Milić, P.S.; Stamenković, O.S.; Veljković, V.B. Optimization of ultrasound-assisted base-catalyzed methanolysis of sunflower oil using response surface and artifical neural network methodologies. Chem. Eng. J. 2013, 215–216, 82–89.

- Mohammad Fauzi, A.H.; Saidina Amin, N.A. Optimization of oleic acid esterification catalyzed by ionic liquid for green biodiesel synthesis. Energy Convers. Manag. 2013, 76, 818–827.

- Stamenković, O.S.; Rajković, K.; Veličković, A.V.; Milić, P.S.; Veljković, V.B. Optimization of base-catalyzed ethanolysis of sunflower oil by regression and artificial neural network models. Fuel Process. Technol. 2013, 114, 101–108.

- Ayodele, O.B.; Auta, H.S.; Md Nor, N. Artificial neural networks, optimization and kinetic modeling of amoxicillin degradation in photo-fenton process using aluminum pillared montmorillonite-supported ferrioxalate catalyst. Ind. Eng. Chem. Res. 2012, 51, 16311–16319.

- Pople, J.A.; Gill, P.M.W.; Johnson, B.G. Kohn–Sham density-functional theory within a finite basis set. Chem. Phys. Lett. 1992, 199, 557–560.

- Grimme, S.; Antony, J.; Ehrlich, S.; Krieg, H. A consistent and accurate ab initio parametrization of density functional dispersion correction (DFT-D) for the 94 elements H-Pu. J. Chem. Phys. 2010, 132.

- Van Gunsteren, W.F.; Berendsen, H.J.C. Computer Simulation of Molecular Dynamics: Methodology, Applications, and Perspectives in Chemistry. Angew. Chem. Int. Ed. Engl. 1990, 29, 992–1023.

- Rapaport, D.C. Molecular dynamics simulation. Comput. Sci. Eng. 1999, 1, 537–542.

- Pechukas, P. Transition State Theory. Annu. Rev. Phys. Chem. 1981, 32, 159–177.

- Nørskov, J.K.; Rossmeisl, J.; Logadottir, A.; Lindqvist, L.; Kitchin, J.R.; Bligaard, T.; Jónsson, H. Origin of the overpotential for oxygen reduction at a fuel-cell cathode. J. Phys. Chem. B 2004, 108, 17886–17892.

- Nørskov, J.K.; Bligaard, T.; Logadottir, A.; Kitchin, J.R.; Chen, J.G.; Pandelov, S.; Stimming, U. Trends in the Exchange Current for Hydrogen Evolution. J. Electrochem. Soc. 2005, 152, J23.

- Falsig, H.; Hvolbæk, B.; Kristensen, I.S.; Jiang, T.; Bligaard, T.; Christensen, C.H.; Nørskov, J.K. Trends in the catalytic CO oxidation activity of nanoparticles. Angew. Chem. Int. Ed. 2008, 47, 4835–4839.

- Evans, M.G.; Polanyi, M. Inertia and driving force of chemical reactions. Trans. Faraday Soc. 1938, 34, 11.

- Logadottir, A.; Rod, T.; Nørskov, J.; Hammer, B.; Dahl, S.; Jacobsen, C.J. The Brønsted–Evans–Polanyi Relation and the Volcano Plot for Ammonia Synthesis over Transition Metal Catalysts. J. Catal. 2001, 197, 229–231.

- Loffreda, D.; Delbecq, F.; Vigné, F.; Sautet, P. Fast prediction of selectivity in heterogeneous catalysis from extended brønsted-evans-polanyi relations: A theoretical insight. Angew. Chem. Int. Ed. 2009, 48, 8978–8980.

- Fernández, E.M.; Moses, P.G.; Toftelund, A.; Hansen, H.A.; Martínez, J.I.; Abild-Pedersen, F.; Kleis, J.; Hinnemann, B.; Rossmeisl, J.; Bligaard, T.; et al. Scaling relationships for adsorption energies on transition metal oxide, sulfide, and nitride surfaces. Angew. Chem. Int. Ed. 2008, 47, 4683–4686.

- Fields, M.; Tsai, C.; Chen, L.D.; Abild-Pedersen, F.; Nørskov, J.K.; Chan, K. Scaling Relations for Adsorption Energies on Doped Molybdenum Phosphide Surfaces. ACS Catal. 2017, 7, 2528–2534.

- Ma, X.; Li, Z.; Achenie, L.E.K.; Xin, H. Machine-Learning-Augmented Chemisorption Model for CO2 Electroreduction Catalyst Screening. J. Phys. Chem. Lett. 2015, 6, 3528–3533.

- Greeley, J.; Jaramillo, T.F.; Bonde, J.; Chorkendorff, I.B.; Nørskov, J.K. Computational high-throughput screening of electrocatalytic materials for hydrogen evolution. Nat. Mater. 2006, 5, 909–913.

- Greeley, J.; Stephens, I.E.L.; Bondarenko, A.S.; Johansson, T.P.; Hansen, H.A.; Jaramillo, T.F.; Rossmeisl, J.; Chorkendorff, I.; Nørskov, J.K. Alloys of platinum and early transition metals as oxygen reduction electrocatalysts. Nat. Chem. 2009, 1, 552–556.

- Yu, W.Y.; Mullen, G.M.; Flaherty, D.W.; Mullins, C.B. Selective hydrogen production from formic acid decomposition on Pd-Au bimetallic surfaces. J. Am. Chem. Soc. 2014, 136, 11070–11078.

- Davran-Candan, T.; Günay, M.E.; Yildirim, R. Structure and activity relationship for CO and O2 adsorption over gold nanoparticles using density functional theory and artificial neural networks. J. Chem. Phys. 2010, 132, 174113.

- Ulissi, Z.W.; Tang, M.T.; Xiao, J.; Liu, X.; Torelli, D.A.; Karamad, M.; Cummins, K.; Hahn, C.; Lewis, N.S.; Jaramillo, T.F.; et al. Machine-Learning Methods Enable Exhaustive Searches for Active Bimetallic Facets and Reveal Active Site Motifs for CO2 Reduction. ACS Catal. 2017, 7b01648.

- Jain, A.; Ong, S.P.; Chen, W.; Medasani, B.; Qu, X.; Kocher, M.; Brafman, M.; Petretto, G.; Rignanese, G.M.; Hautier, G.; et al. FireWorks: A dynamic workflow system designed for high-throughput applications. Concurr. Comput. 2015, 27, 5037–5059.

- Wolf, U.; Arkhipov, V.I.; Bässler, H. Current injection from a metal to a disordered hopping system. I. Monte Carlo simulation. Phys. Rev. B 1999, 59, 7507–7513.

- Sumpter, B.G.; Noid, D.W. Potential energy surfaces for macromolecules. A neural network technique. Chem. Phys. Lett. 1992, 192, 455–462.

- Lorenz, S.; Scheffler, M.; Gross, A. Descriptions of surface chemical reactions using a neural network representation of the potential-energy surface. Phys. Rev. B 2006, 73, 115431.

- Tai No, K. Description of the potential energy surface of the water dimer with an artificial neural network. Chem. Phys. Lett. 1997, 271, 152–156.

- Ouyang, R.; Xie, Y.; Jiang, D. Global minimization of gold clusters by combining neural network potentials and the basin-hopping method. Nanoscale 2015, 7, 14817–14821.

- Wales, D.J. Global Optimization of Clusters, Crystals, and Biomolecules. Science 1999, 285, 1368–1372.

- Behler, J.; Parrinello, M. Generalized neural-network representation of high-dimensional potential-energy surfaces. Phys. Rev. Lett. 2007, 98.

- Behler, J. Neural network potential-energy surfaces in chemistry: A tool for large-scale simulations. Phys. Chem. Chem. Phys. 2011, 13, 17930.

- Behler, J. Constructing high-dimensional neural network potentials: A tutorial review. Int. J. Q. Chem. 2015, 115, 1032–1050.

- Morawietz, T.; Behler, J. A density-functional theory-based neural network potential for water clusters including van der waals corrections. J. Phys. Chem. A 2013, 117, 7356–7366.

- Natarajan, S.K.; Behler, J. Neural network molecular dynamics simulations of solid–liquid interfaces: Water at low-index copper surfaces. Phys. Chem. Chem. Phys. 2016, 18, 28704–28725.

- Artrith, N.; Behler, J. High-dimensional neural network potentials for metal surfaces: A prototype study for copper. Phys. Rev. B – Condens. Matter Mater. Phys. 2012, 85.

- Chiriki, S.; Jindal, S.; Bulusu, S.S. Neural network potentials for dynamics and thermodynamics of gold nanoparticles. J. Chem. Phys. 2017, 146.

- Boes, J.R.; Groenenboom, M.C.; Keith, J.A.; Kitchin, J.R. Neural network and ReaxFF comparison for Au properties. Int. J. Q. Chem. 2016, 116, 979–987.

- Zhai, H.; Alexandrova, A.N. Ensemble-Average Representation of Pt Clusters in Conditions of Catalysis Accessed through GPU Accelerated Deep Neural Network Fitting Global Optimization. J. Chem. Theory Comput. 2016, 12, 6213–6226.

- Khorshidi, A.; Peterson, A.A. Amp: A modular approach to machine learning in atomistic simulations. Comput. Phys. Commun. 2016, 207, 310–324.

- Boes, J.R.; Kitchin, J.R. Neural network predictions of oxygen interactions on a dynamic Pd surface. Mol. Simul. 2017, 43, 346–354.

- Boes, J.R.; Kitchin, J.R. Modeling Segregation on AuPd(111) Surfaces with Density Functional Theory and Monte Carlo Simulations. J. Phys. Chem. C 2017, 121, 3479–3487.

- Hautier, G.; Fischer, C.C.; Jain, A.; Mueller, T.; Ceder, G. Finding nature's missing ternary oxide compounds using machine learning and density functional theory. Chem. Mater. 2010, 22, 3762–3767.

- Suykens, J.A.; Vandewalle, J. Least Squares Support Vector Machine Classifiers. Neural Process. Lett. 1999, 9, 293–300.

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Li, H.; Zhang, Z.; Liu, Z. Application of Artificial Neural Networks for Catalysis: A Review. Catalysts 2017, 7, 306. https://doi.org/10.3390/catal7100306
