Dynamic Evaluation of Photochemical Grid Model Response to Emission Changes in the South Coast Air Basin in California

Abstract

1. Introduction

Previous Dynamic Evaluation Studies

- Operational evaluation: generate statistics of the deviations between model estimates for a simulation year and corresponding observations, and compare the magnitudes of those deviations to selected criteria

- Diagnostic evaluation: test the ability of the model to simulate each of the interacting processes that govern the system

- Dynamic evaluation: test the model’s ability to predict changes in air quality concentrations in response to changes in either source emissions or meteorological conditions

- Probabilistic evaluation: focus on the modeled distributions of selected variables rather than individual model estimates at specific times and locations
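The contrast between operational and dynamic evaluation can be illustrated with a minimal Python sketch. It assumes paired lists of modeled and observed ozone values for two years. The function names (`mean_bias`, `normalized_mean_bias`, `change_ratio`) and all numbers are hypothetical and are not taken from the study.

```python
from statistics import mean


def mean_bias(model, obs):
    """Operational metric: average of (model - observation) over paired values."""
    return mean(m - o for m, o in zip(model, obs))


def normalized_mean_bias(model, obs):
    """Operational metric: summed deviation divided by summed observations."""
    return sum(m - o for m, o in zip(model, obs)) / sum(obs)


def change_ratio(model_base, model_other, obs_base, obs_other):
    """Dynamic metric: modeled change between two years divided by observed change."""
    modeled_change = mean(model_other) - mean(model_base)
    observed_change = mean(obs_other) - mean(obs_base)
    return modeled_change / observed_change


# Illustrative values only (ppb); assumed for this sketch, not from the study.
obs_2000 = [120.0, 110.0, 130.0]
mod_2000 = [105.0, 100.0, 116.0]
obs_2008 = [100.0, 90.0, 110.0]
mod_2008 = [95.0, 92.0, 104.0]

if __name__ == "__main__":
    # Operational view: how far is the model from observations in one year?
    print("2008 mean bias:", mean_bias(mod_2008, obs_2008))
    print("2008 normalized mean bias:", normalized_mean_bias(mod_2008, obs_2008))
    # Dynamic view: does the model reproduce the observed change between years?
    print("change ratio:", change_ratio(mod_2000, mod_2008, obs_2000, obs_2008))
```

In this made-up case the model looks acceptable under the operational metrics for 2008, yet it captures only half of the observed change between the two years. That is the kind of discrepancy a dynamic evaluation is designed to expose.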

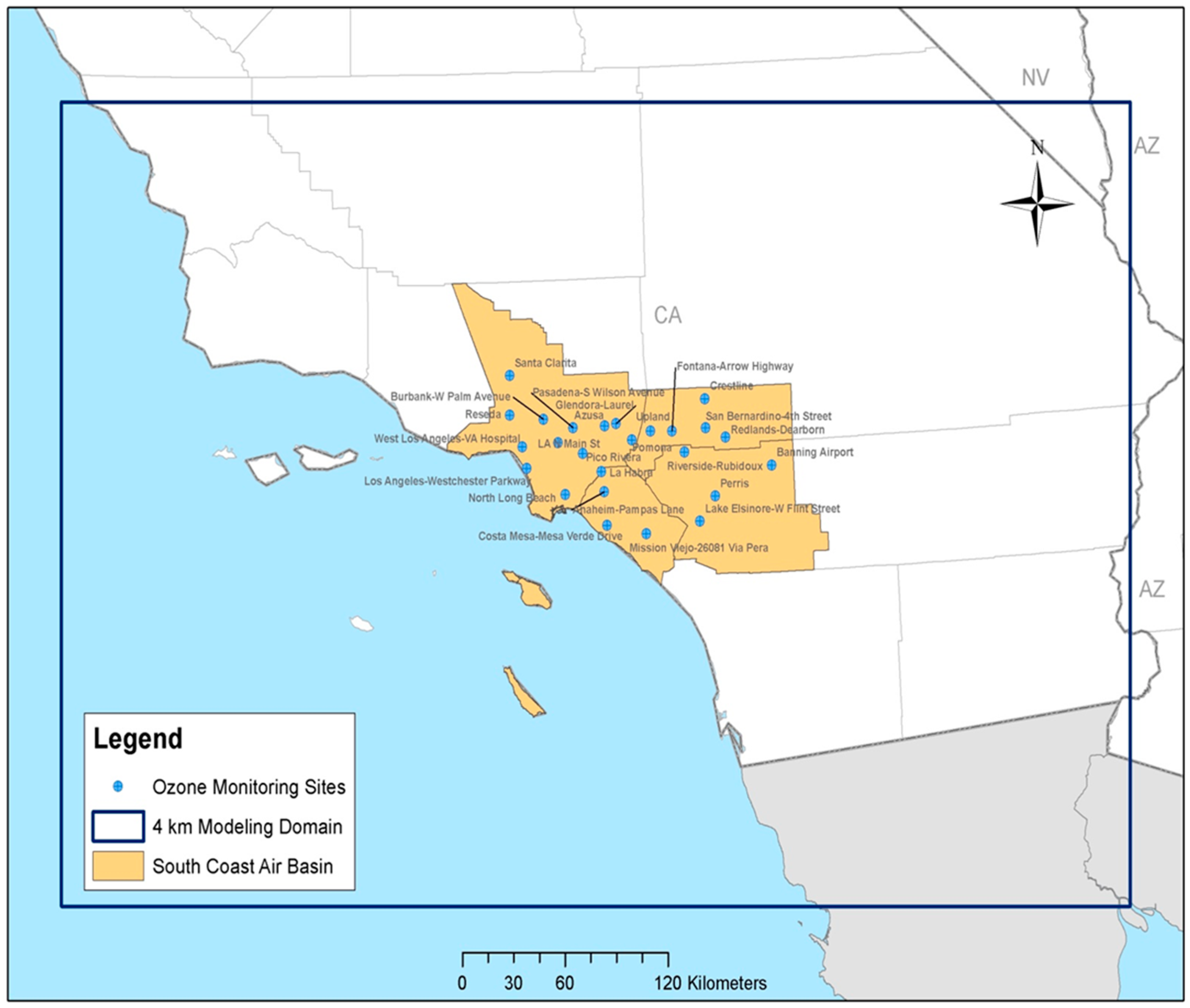

2. Experiments

2.1. The 2012 AQMP and 2016 AQMP Modeling

- CMAQ model versions: v4.7.1 for the 2012 AQMP, and v5.0.2 for the 2016 AQMP.

- Base year (for meteorology and emissions): 2008 for the 2012 AQMP, and 2012 for the 2016 AQMP.

- Ozone season: June through August for the 2012 AQMP, and May through September for the 2016 AQMP.

- Meteorological model versions: the Weather Research and Forecasting Model (WRF) v3.3 was used in the 2012 AQMP, and WRF v3.6 was used in the 2016 AQMP.

- Relative response factor (RRF) calculations for 8-h ozone attainment demonstrations: the 2012 AQMP modeling followed the EPA (2007) guidance [11], while the 2016 AQMP modeling followed the EPA (2014) guidance [12]. In the 2012 AQMP, all days that met the selection criteria were included in the analysis, whereas in the 2016 AQMP, only the top ten days were selected. Because ozone episodes are frequent in the Basin, the number of days used for the attainment calculations in the 2012 AQMP was substantially greater than ten. For example, the Crestline site, which often determines the Basin design value, typically experiences 50 or more days that would satisfy the selection criteria [23], and all such days were used in the 8-h ozone attainment demonstrations in the 2012 AQMP. The focus on the top ten days in the 2016 AQMP was expected to produce future-year design values that are more responsive to emission reductions [23].
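The difference between the two day-selection approaches can be sketched in Python. This is a simplified illustration, assuming that the relative response factor is the ratio of the mean future-year to the mean base-year modeled daily maximum 8-h ozone over the selected days, and that the future design value is the base design value scaled by that ratio. The threshold, the function names, and the data are hypothetical. Details of the EPA guidance, such as the choice of grid cells near a monitor and minimum day counts, are omitted.

```python
from statistics import mean


def rrf_all_days(base, future, threshold):
    """RRF over every day whose base-year modeled value meets the threshold."""
    pairs = [(b, f) for b, f in zip(base, future) if b >= threshold]
    return mean(f for _, f in pairs) / mean(b for b, _ in pairs)


def rrf_top_n(base, future, n=10):
    """RRF over the n days with the highest base-year modeled values."""
    pairs = sorted(zip(base, future), key=lambda p: p[0], reverse=True)[:n]
    return mean(f for _, f in pairs) / mean(b for b, _ in pairs)


def future_design_value(base_dv, rrf):
    """Scale an observed base-year design value by the modeled RRF."""
    return base_dv * rrf


# Hypothetical modeled daily maximum 8-h ozone (ppb) for four days.
# The highest days are assumed to respond more strongly to emission cuts.
base_days = [100.0, 90.0, 80.0, 70.0]
future_days = [80.0, 81.0, 76.0, 68.6]

if __name__ == "__main__":
    all_days = rrf_all_days(base_days, future_days, threshold=70.0)
    top_two = rrf_top_n(base_days, future_days, n=2)
    print("RRF, all qualifying days:", round(all_days, 3))
    print("RRF, top two days:", round(top_two, 3))
    print("Future DV from a 100 ppb base DV:", round(future_design_value(100.0, top_two), 1))
```

With these assumed inputs, restricting the calculation to the highest days gives a lower RRF than averaging over all qualifying days, which is the sense in which a top-days selection can make future-year design values more responsive to emission reductions. Both functions raise `statistics.StatisticsError` if no day is selected.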

2.2. Dynamic Evaluation Approach

2.3. Historical-Year Emissions

3. Results and Discussion

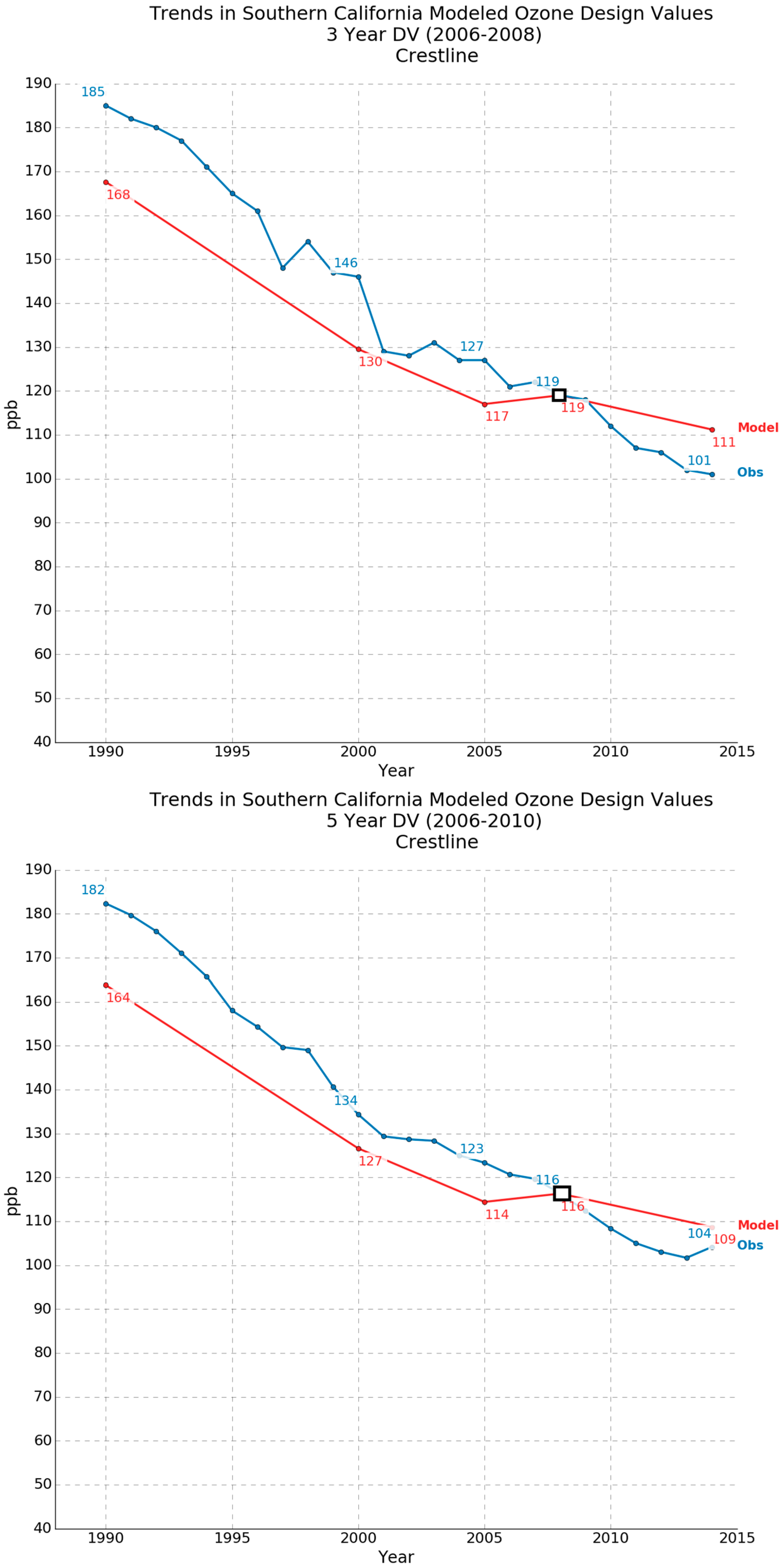

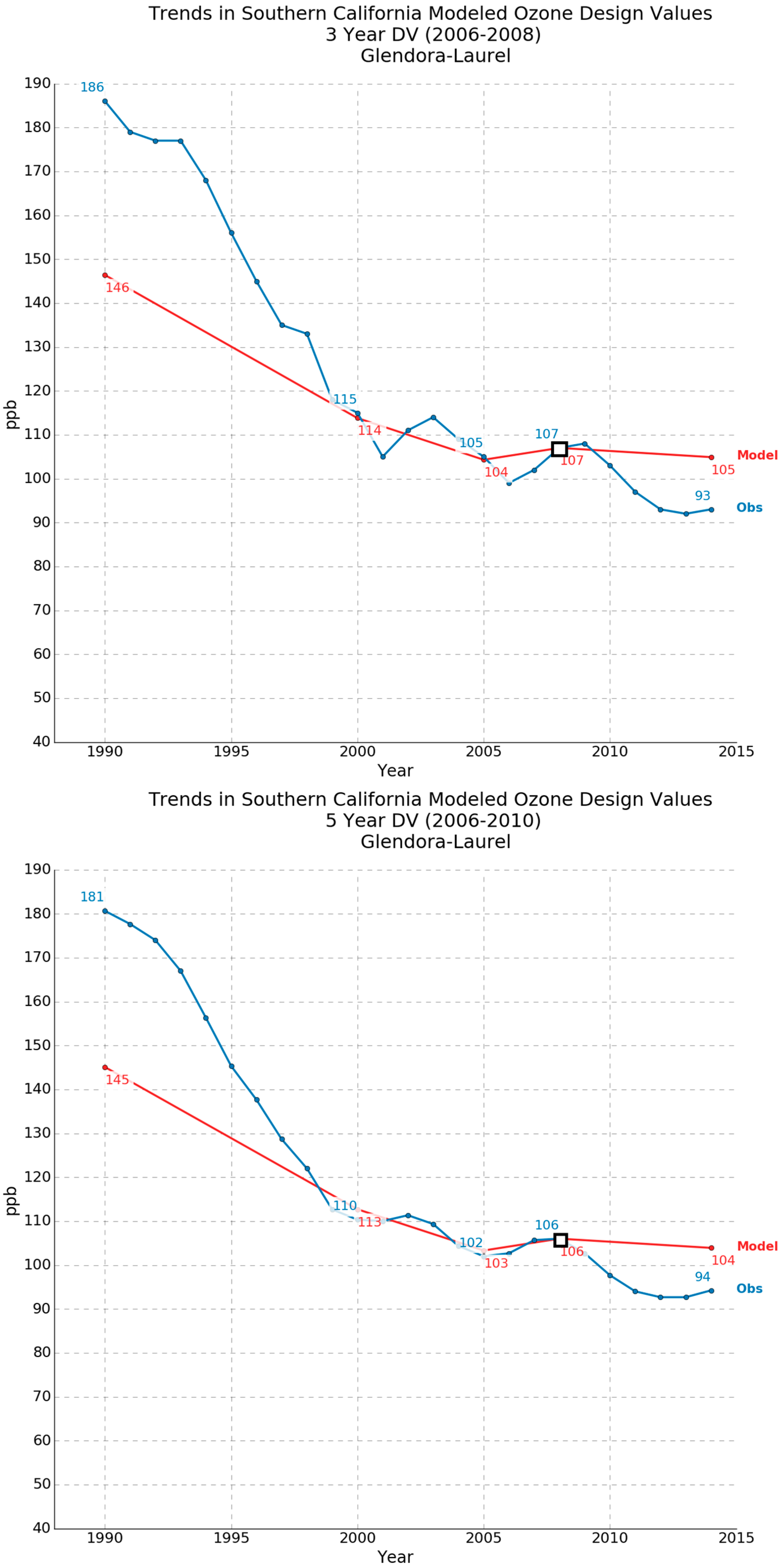

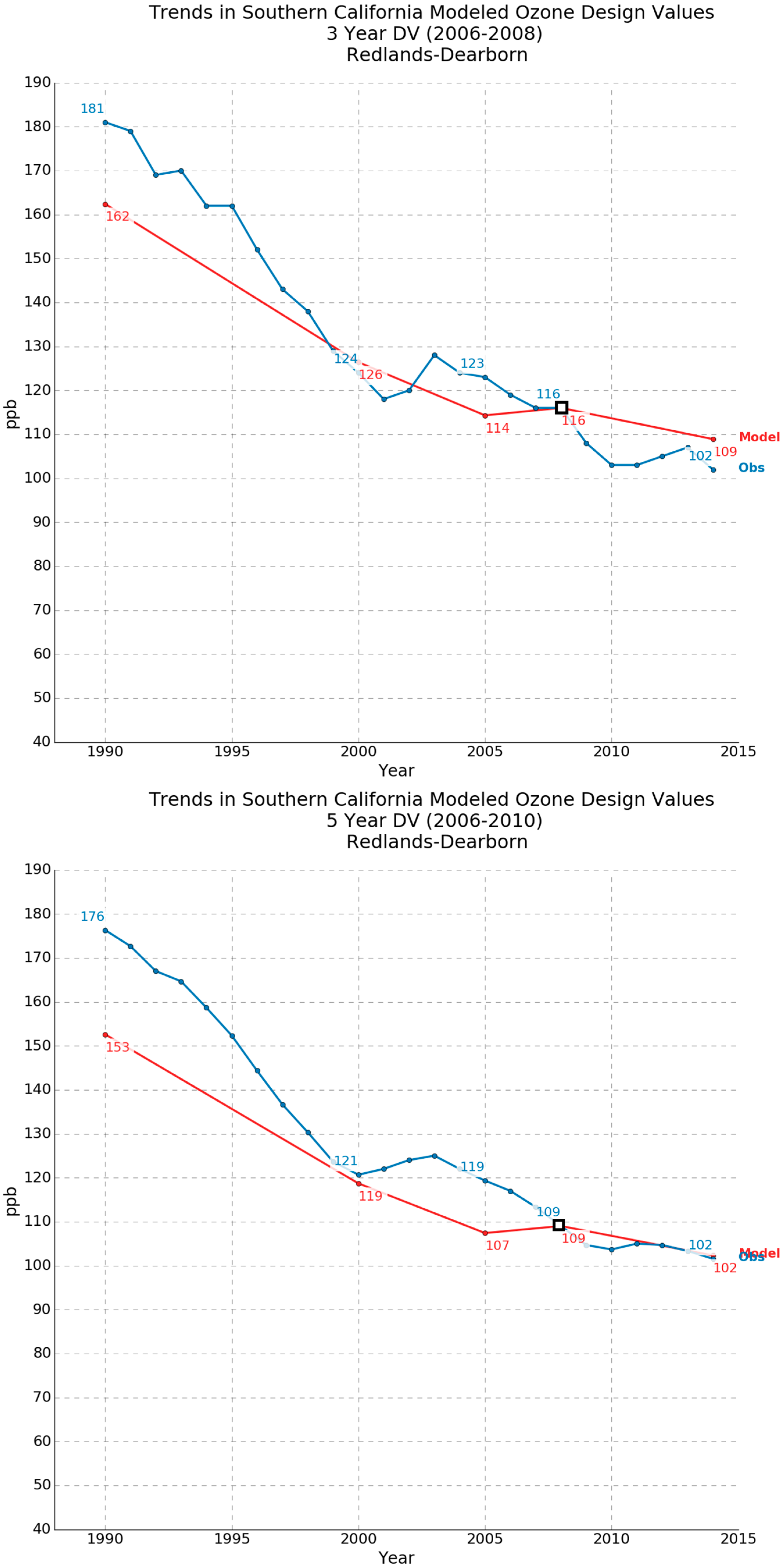

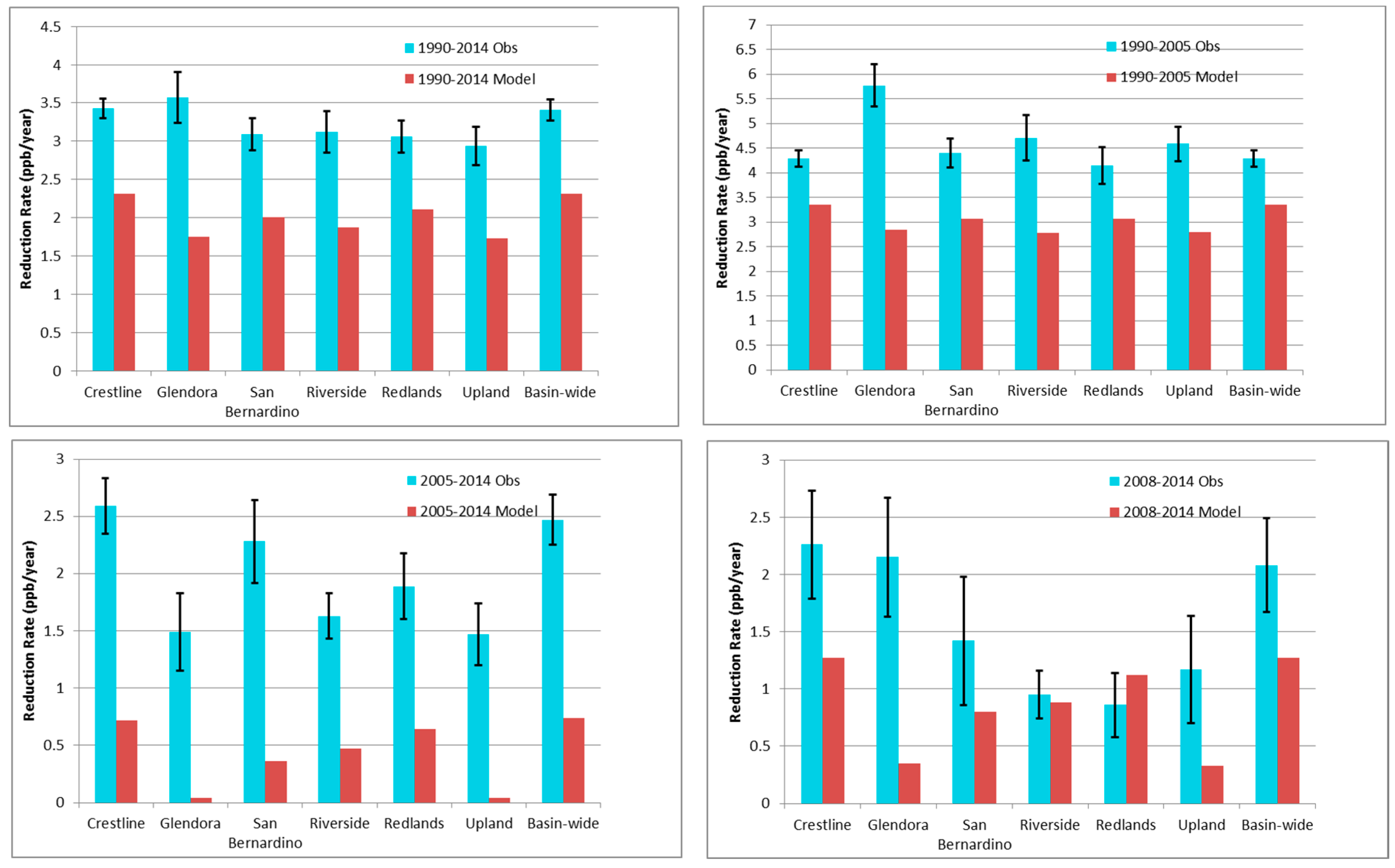

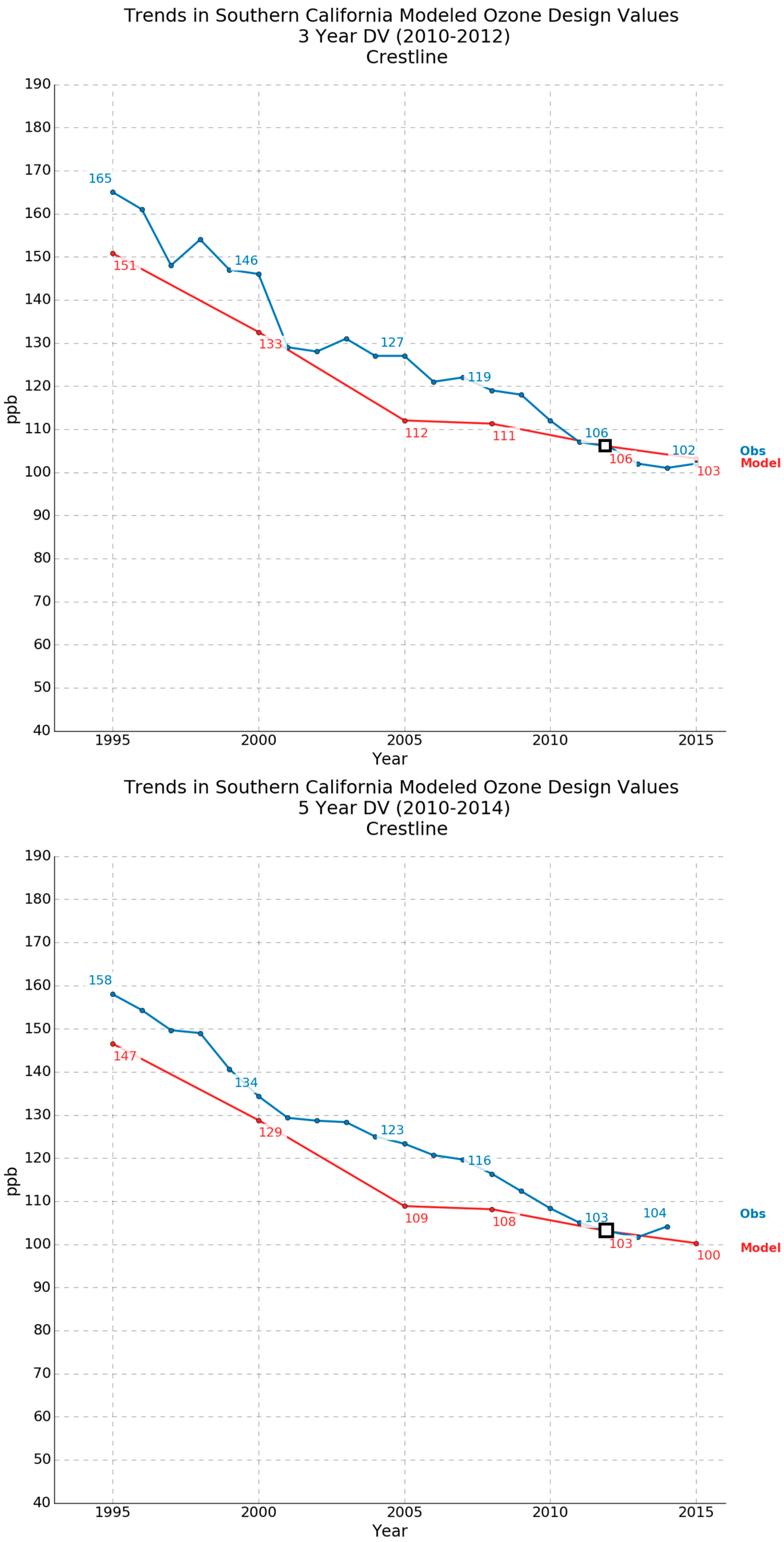

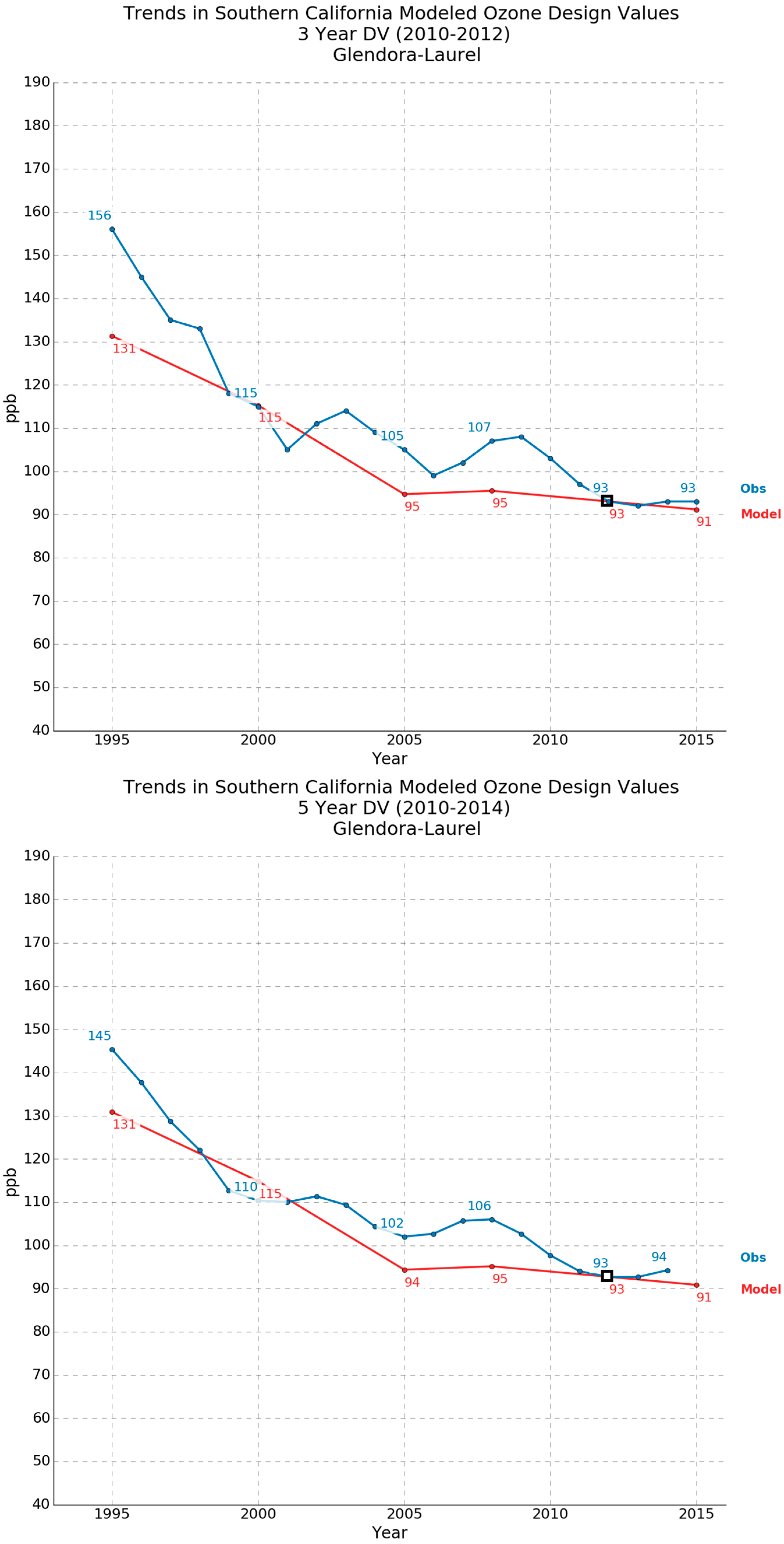

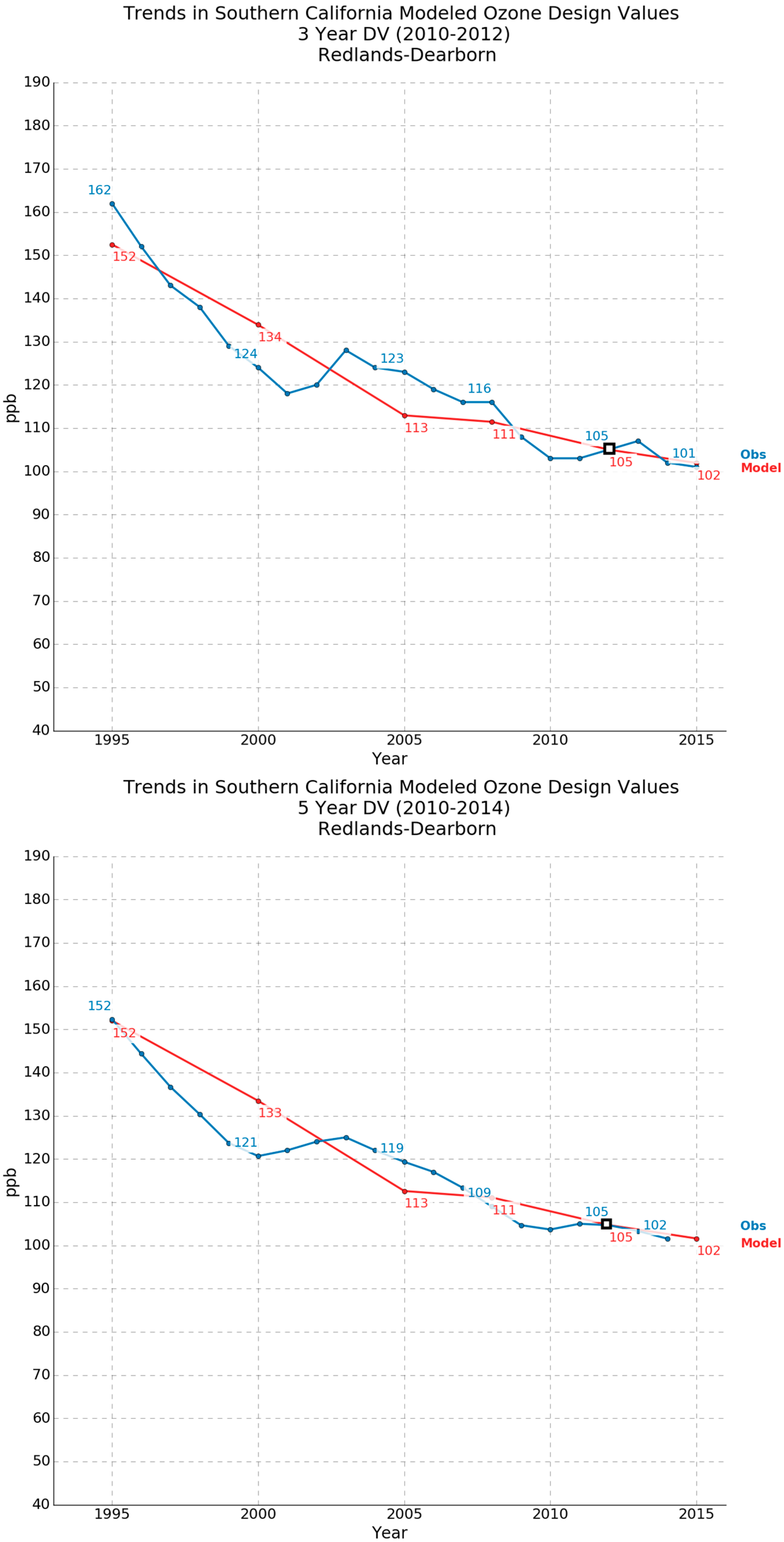

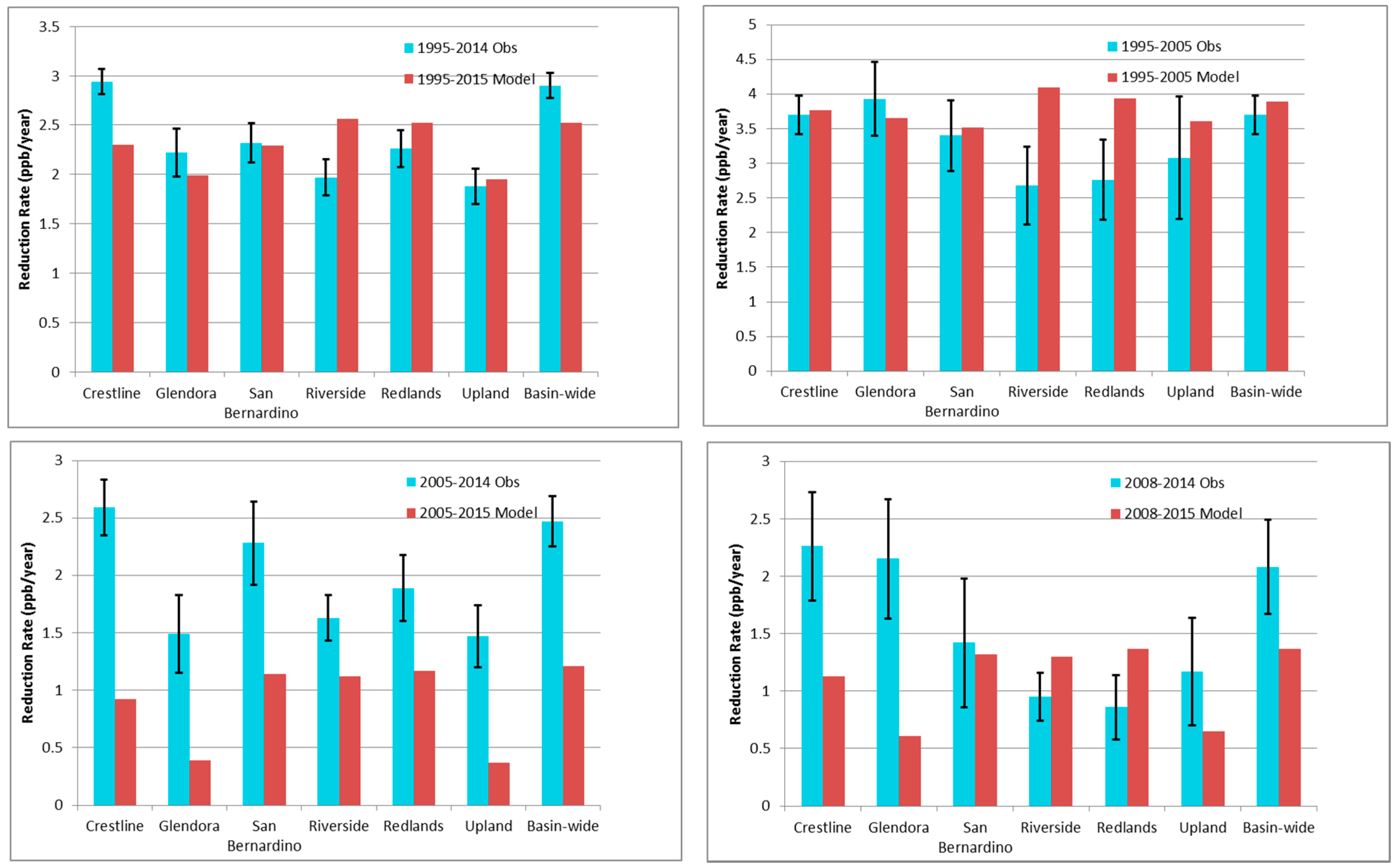

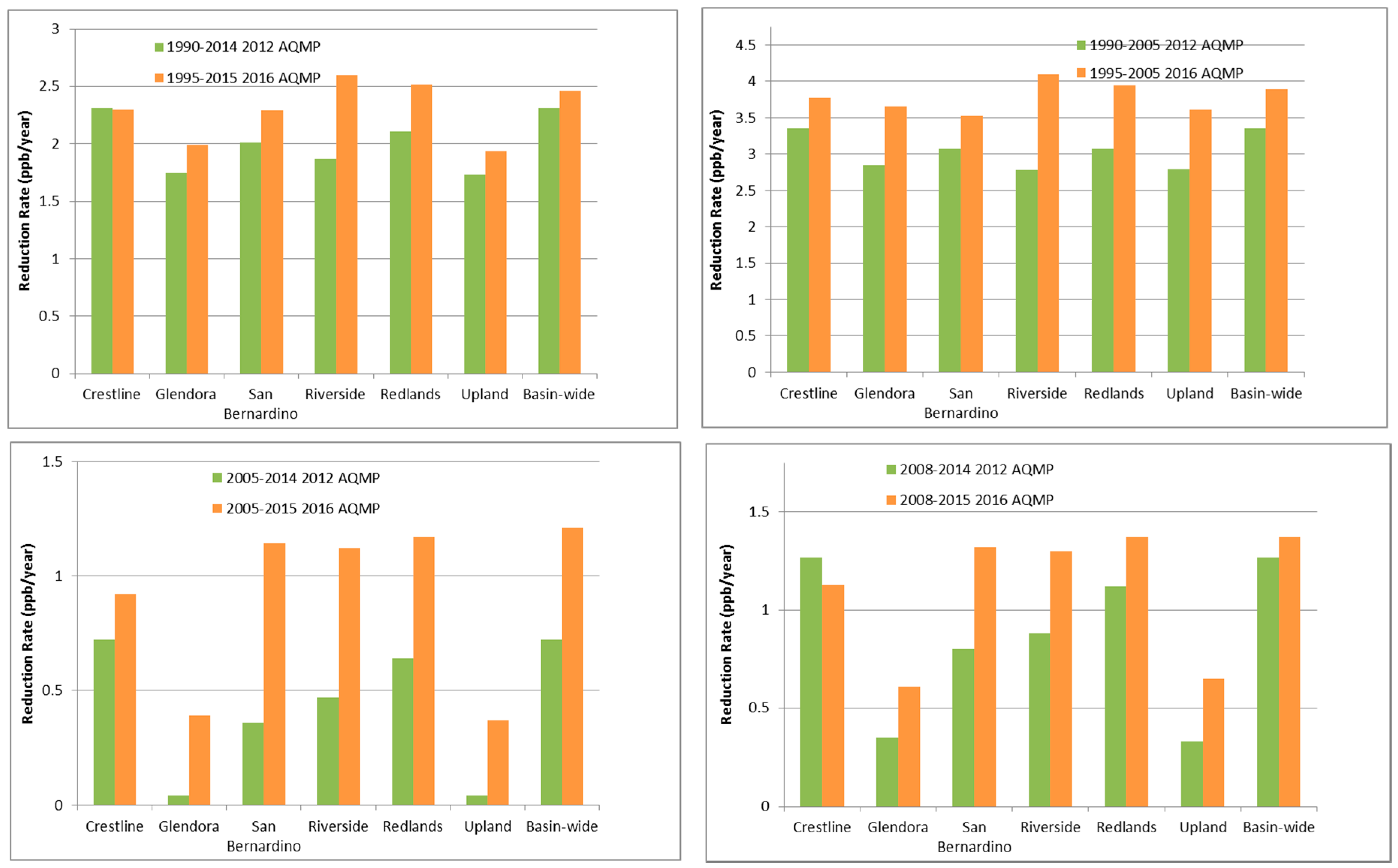

3.1. Dynamic Evaluation Using 2012 AQMP Database

3.2. Dynamic Evaluation Using 2016 AQMP Database

3.3. Meteorology and Boundary Condition Sensitivity Studies

3.4. Emissions Sensitivity Study

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Foley, K.M.; Dolwick, P.; Hogrefe, C.; Simon, H.; Timin, B.; Possiel, N. Dynamic evaluation of CMAQ part II: Evaluation of relative response factor metrics for ozone attainment demonstrations. Atmos. Environ. 2015, 103, 188–195.

2. Napelenok, S.L.; Foley, K.M.; Kang, D.; Mathur, R.; Pierce, T.; Rao, S.T. Dynamic evaluation of regional air quality model’s response to emission reductions in the presence of uncertain emission inventories. Atmos. Environ. 2011, 45, 4091–4098.

3. Cohan, D.S.; Napelenok, S.L. Air quality response modeling for decision support. Atmosphere 2011, 2, 407–425.

4. Hogrefe, C.; Civerolo, K.L.; Winston, H.; Ku, J.-Y.; Zalewsky, E.E.; Sistla, G. Rethinking the assessment of photochemical modeling systems in air quality planning applications. J. Air Waste Manag. Assoc. 2008, 58, 1086–1099.

5. Fujita, E.M.; Campbell, D.E.; Stockwell, W.R.; Lawson, D.R. Past and future ozone trends in California's South Coast Air Basin: Reconciliation of ambient measurements with past and projected emission inventories. J. Air Waste Manag. Assoc. 2013, 63, 54–69.

6. Croes, B.E.; Fujita, E.M. Overview of the 1997 southern California ozone study (SCOS97-NARSTO). Atmos. Environ. 2003, 37, 6.

7. Pollack, I.B.; Ryerson, T.B.; Trainer, M.; Neuman, J.A.; Roberts, J.M.; Parrish, D.D. Trends in ozone, its precursors, and related secondary oxidation products in Los Angeles, California: A synthesis of measurements from 1960 to 2010. J. Geophys. Res. Atmos. 2013, 118, 5893–5911.

8. Fujita, E.M.; Campbell, D.E.; Stockwell, W.R.; Saunders, E.; Fitzgerald, R.; Perea, R. Projected ozone trends and changes in the ozone-precursor relationship in the South Coast Air Basin in response to varying reductions of precursor emissions. J. Air Waste Manag. Assoc. 2016, 66, 201–214.

9. Huang, M.; Bowman, K.W.; Carmichael, G.R.; Chai, T.; Pierce, R.B.; Worden, J.R.; Luo, M.; Pollack, I.B.; Ryerson, T.B.; Nowak, J.B.; et al. Changes in nitrogen oxides emissions in California during 2005–2010 indicated from top-down and bottom-up emission estimates. J. Geophys. Res. Atmos. 2014, 119, 12928–12952.

10. Dennis, R.; Fox, T.; Fuentes, M.; Gilliland, A.; Hanna, S.; Hogrefe, C.; Irwin, J.; Rao, S.T.; Scheffe, R.; Schere, K.; et al. A framework for evaluating regional-scale numerical photochemical modeling systems. Environ. Fluid Mech. 2010, 10, 471–489.

11. U.S. EPA. Guidance on the Use of Models and Other Analyses for Demonstrating Attainment of Air Quality Goals for Ozone, PM2.5 and Regional Haze; U.S. Environmental Protection Agency: Research Triangle Park, NC, USA; EPA-454/B-07-002; April 2007. Available online: https://www3.epa.gov/scram001/guidance/guide/final-03-pm-rh-guidance.pdf (accessed on 9 August 2017).

12. U.S. EPA. Draft Modeling Guidance for Demonstrating Attainment of Air Quality Goals for Ozone, PM2.5 and Regional Haze; U.S. Environmental Protection Agency: Research Triangle Park, NC, USA, December 2014. Available online: https://www3.epa.gov/scram001/guidance/guide/Draft_O3-PM-RH_Modeling_Guidance-2014.pdf (accessed on 9 August 2017).

13. Stehr, J.W. Reality Check: Evaluating the Model the Way It Is Used. In Proceedings of the MARAMA Workshop on Weight of Evidence Demonstrations for Ozone SIPs, Cape May, NJ, USA, 5 February 2007; Available online: http://www.marama.org/calendar/events/presentations/2007_02Annual/Stehr_WoE_ModelResponse.pdf (accessed on 9 August 2017).

14. Thunis, P.; Clappier, A. Indicators to support the dynamic evaluation of air quality models. Atmos. Environ. 2014, 98, 402–409.

15. Byun, D.; Schere, K.L. Review of the governing equations, computational algorithms, and other components of the Models-3 Community Multiscale Air Quality (CMAQ) modeling system. Appl. Mech. Rev. 2006, 59, 51–77.

16. Gilliland, A.B.; Hogrefe, C.; Pinder, R.W.; Godowitch, J.M.; Foley, K.L.; Rao, S.T. Dynamic evaluation of regional air quality models: Assessing changes in O3 stemming from changes in emissions and meteorology. Atmos. Environ. 2008, 42, 5110–5123.

17. Pierce, T.; Hogrefe, C.; Rao, S.T.; Porter, S.P.; Ku, J.-Y. Dynamic evaluation of a regional air quality model: Assessing the emissions-induced weekly ozone cycle. Atmos. Environ. 2010, 44, 3583–3596.

18. Zhou, W.; Cohan, D.S.; Napelenok, S.L. Reconciling NOx emissions reductions and ozone trends in the U.S., 2002–2006. Atmos. Environ. 2013, 70, 236–244.

19. Foley, K.M.; Hogrefe, C.; Pouliot, G.; Possiel, N.; Roselle, S.J.; Simon, H.; Timin, B. Dynamic evaluation of CMAQ part I: Separating the effects of changing emissions and changing meteorology on ozone levels between 2002 and 2005 in the eastern US. Atmos. Environ. 2015, 103, 247–255.

20. Xing, J.; Mathur, R.; Pleim, J.; Hogrefe, C.; Gan, C.-M.; Wong, D.C.; Wei, C.; Gilliam, R.; Pouliot, G. Observations and modeling of air quality trends over 1990–2010 across the Northern Hemisphere: China, the United States and Europe. Atmos. Chem. Phys. 2015, 15, 2723–2747.

21. Appel, K.W.; Napelenok, S.L.; Foley, K.M.; Pye, H.O.T.; Hogrefe, C.; Luecken, D.J.; Bash, J.O.; Roselle, S.J.; Pleim, J.E.; Foroutan, H.; et al. Description and evaluation of the Community Multiscale Air Quality (CMAQ) modeling system version 5.1. Geosci. Model Dev. 2017, 10, 1703–1732.

22. South Coast Air Quality Management District. Final 2012 Air Quality Management Plan. South Coast Air Quality Management District: Diamond Bar, CA, USA, February 2013. Available online: http://www.aqmd.gov/docs/default-source/clean-air-plans/air-quality-management-plans/2012-air-quality-management-plan/final-2012-aqmp-(february-2013)/main-document-final-2012.pdf (accessed on 9 August 2017).

23. South Coast Air Quality Management District. Final 2016 Air Quality Management Plan. South Coast Air Quality Management District: Diamond Bar, CA, USA, June 2016. Available online: http://www.aqmd.gov/docs/default-source/clean-air-plans/air-quality-management-plans/2016-air-quality-management-plan/final-2016-aqmp/final2016aqmp.pdf?sfvrsn=15 (accessed on 9 August 2017).

24. Emmons, L.K.; Walters, S.; Hess, P.G.; Lamarque, J.F.; Pfister, G.G.; Fillmore, D.; Granier, C.; Guenther, A.; Kinnison, D.; Laepple, T. Description and evaluation of the Model for Ozone and Related chemical Tracers, version 4 (MOZART-4). Geosci. Model Dev. 2010, 3, 43–67.

25. Carter, W.P.L. Documentation of the SAPRC-99 Chemical Mechanism for VOC Reactivity Assessment, Final Report to California Air Resources Board Contract No. 929, and 908. Air Pollution Research Center and College of Engineering, Center for Environmental Research and Technology, University of California: Riverside, CA, USA, May 2000. Available online: http://www.engr.ucr.edu/~carter/pubs/s99txt.pdf (accessed on 9 August 2017).

26. Carter, W.P.L. Development of the SAPRC-07 chemical mechanism. Atmos. Environ. 2010, 44, 5324–5335.

27. Carter, W.P.L. Development of a condensed SAPRC-07 chemical mechanism. Atmos. Environ. 2010, 44, 5336–5345.

28. Abt Associates Inc. Modeled Attainment Test Software User’s Manual, prepared for U.S. EPA OAQPS. Abt Associates, Inc.: Bethesda, MD, USA, April 2014. Available online: https://www3.epa.gov/ttn/scram/guidance/guide/MATS_2-6-1_manual.pdf (accessed on 9 August 2017).

29. Air Quality Trend Summaries. Available online: https://www.arb.ca.gov/adam/trends/trends1.php (accessed on 9 August 2017).

30. CEPAM: 2009 Almanac—Standard Emissions Tool. Available online: https://www.arb.ca.gov/app/emsinv/fcemssumcat2009.php (accessed on 9 August 2017).

31. CEPAM: 2013 Almanac—Standard Emissions Tool. Available online: https://www.arb.ca.gov/app/emsinv/fcemssumcat2013.php (accessed on 9 August 2017).

32. Speciation Profiles Used in Modeling. Available online: https://www.arb.ca.gov/ei/speciate/speciate.htm (accessed on 9 August 2017).

33. Porter, S.; Rao, S.T.; Hogrefe, C.; Mathur, R. A reduced form model for ozone based on two decades of CMAQ simulations for the continental United States. Atmos. Pollut. Res. 2017, 8, 275–284.

| Pollutant | 1990 | 2000 | 2005 | 2008 |
|---|---|---|---|---|
| NOx | 2759 | 1889 | 1528 | 1473 |
| CO | 21,192 | 9388 | 6500 | 6560 |
| TOG | 8872 | 11,555 | 10,390 | 4552 |
| NH3 | 360 | 360 | 360 | 360 |
| SO2 | 355 | 223 | 211 | 160 |
| PM2.5 | 356 | 234 | 228 | 280 |
| PM10 | 1209 | 683 | 671 | 987 |

| Pollutant | 1995 | 2000 | 2005 | 2008 | 2012 | 2015 |
|---|---|---|---|---|---|---|
| NOx | 3086 | 2472 | 1999 | 1614 | 1158 | 1022 |
| CO | 14,747 | 8599 | 5954 | 5185 | 4066 | 3546 |
| TOG | 7989 | 8952 | 6363 | 5637 | 4676 | 4433 |
| NH3 | 308 | 308 | 308 | 308 | 308 | 308 |
| SO2 | 240 | 213 | 202 | 127 | 71 | 71 |
| PM2.5 | 350 | 270 | 262 | 239 | 217 | 211 |
| PM10 | 1964 | 995 | 987 | 955 | 906 | 937 |

| Monitor | Observed | 2016 AQMP: Baseline | 2016 AQMP: 2008 Meteorology | 2016 AQMP: O3 BCs Reduced by 20% |
|---|---|---|---|---|
| Crestline | 2.8 | 1.2 | 1.0 | 1.4 |
| Glendora | 2.5 | 0.6 | 0.3 | 0.7 |
| Redlands | 1.4 | 1.4 | 1.3 | 1.4 |
| Basin-wide | 2.6 | 1.2 | 1.1 | 1.4 |

| Monitor | Observed | 2016 AQMP: Baseline | 2016 AQMP: Double VOC Emissions |
|---|---|---|---|
| Crestline | 2.8 | 1.2 | 2.3 |
| Glendora | 2.5 | 0.6 | 2.2 |
| Redlands | 1.4 | 1.4 | 2.7 |
| Basin-wide | 2.6 | 1.2 | 2.5 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Karamchandani, P.; Morris, R.; Wentland, A.; Shah, T.; Reid, S.; Lester, J. Dynamic Evaluation of Photochemical Grid Model Response to Emission Changes in the South Coast Air Basin in California. Atmosphere 2017, 8, 145. https://doi.org/10.3390/atmos8080145