Comprehensive Evaluation of Machine Learning Techniques for Estimating the Responses of Carbon Fluxes to Climatic Forces in Different Terrestrial Ecosystems

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Sites

2.2. Data Preparation

2.3. Machine Learning Methods

2.3.1. Adaptive Neuro-Fuzzy Inference System

2.3.2. Extreme Learning Machine

2.3.3. Artificial Neural Network

2.3.4. Support Vector Machine

2.4. Model Development and Software Availability

2.5. Model Performance Evaluation
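
The result tables report R2, IA, RMSE and MAE for each model. A minimal sketch of how these four statistics could be computed from paired observed and predicted flux values is given below. It assumes that R2 is the squared Pearson correlation and that IA is Willmott's index of agreement; the function name `evaluate` and the sample values are illustrative and are not taken from the study's software.

```python
import math


def evaluate(observed, predicted):
    """Return R2, IA, RMSE and MAE for paired observed/predicted values."""
    n = len(observed)
    if n == 0 or n != len(predicted):
        raise ValueError("inputs must be non-empty and of equal length")
    pairs = list(zip(observed, predicted))
    obs_mean = sum(observed) / n
    pred_mean = sum(predicted) / n

    # Sums of squares and cross-products around the means.
    sxy = sum((o - obs_mean) * (p - pred_mean) for o, p in pairs)
    sxx = sum((o - obs_mean) ** 2 for o in observed)
    syy = sum((p - pred_mean) ** 2 for p in predicted)
    # Assumption: R2 is the squared Pearson correlation coefficient.
    r2 = sxy ** 2 / (sxx * syy)

    # Squared and absolute prediction errors.
    sq_err = sum((p - o) ** 2 for o, p in pairs)
    abs_err = sum(abs(p - o) for o, p in pairs)

    # Assumption: IA is Willmott's index of agreement (1 = perfect match).
    potential = sum((abs(p - obs_mean) + abs(o - obs_mean)) ** 2 for o, p in pairs)
    ia = 1.0 - sq_err / potential

    return {
        "R2": r2,
        "IA": ia,
        "RMSE": math.sqrt(sq_err / n),
        "MAE": abs_err / n,
    }


# Illustrative values only (not data from the study).
metrics = evaluate([1.0, 2.0, 3.0, 4.0], [2.0, 3.0, 4.0, 5.0])
print(metrics)
```

Constant observed or predicted series make the denominators zero, so a real workflow would need to guard against that case.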

3. Results

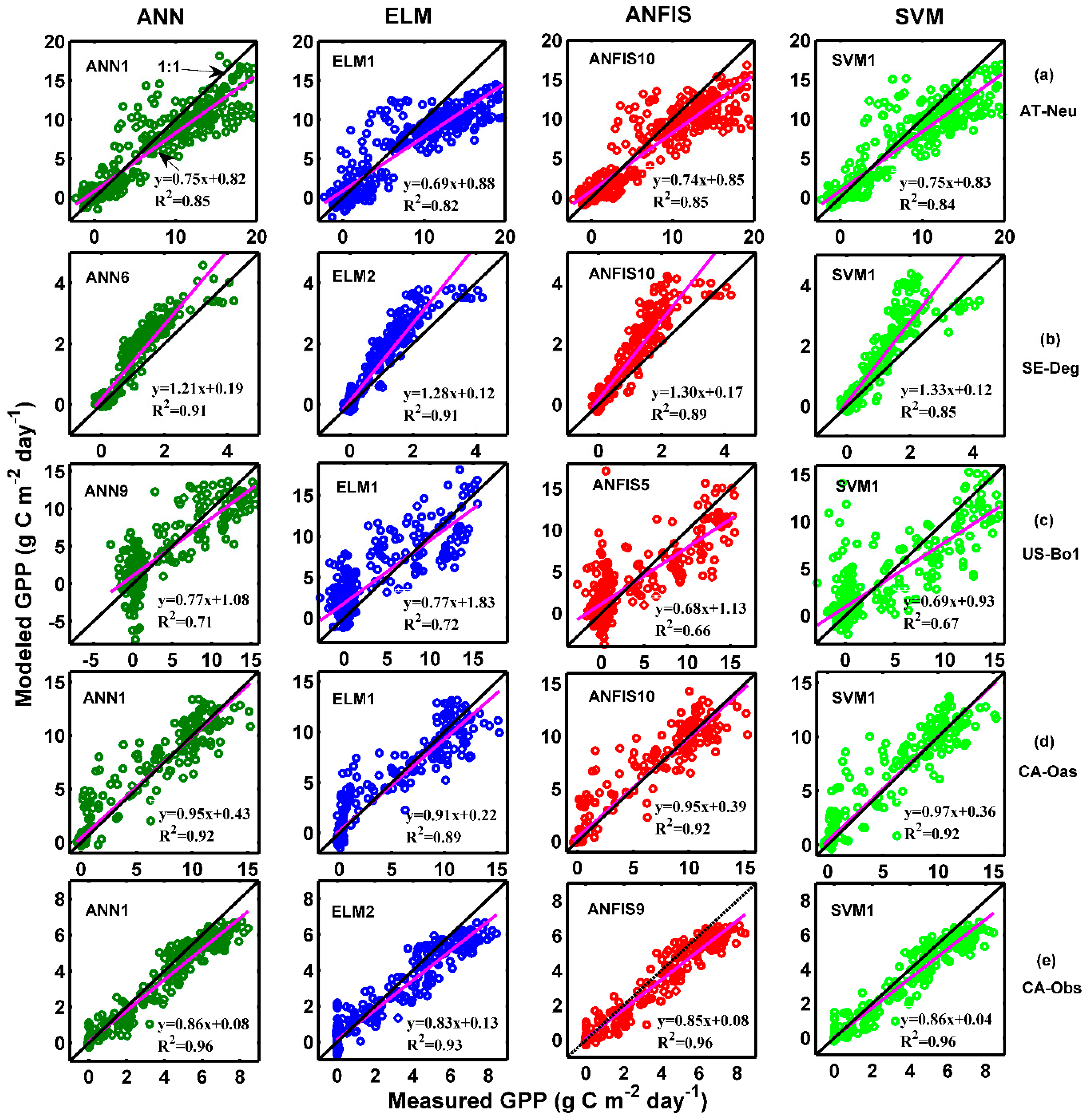

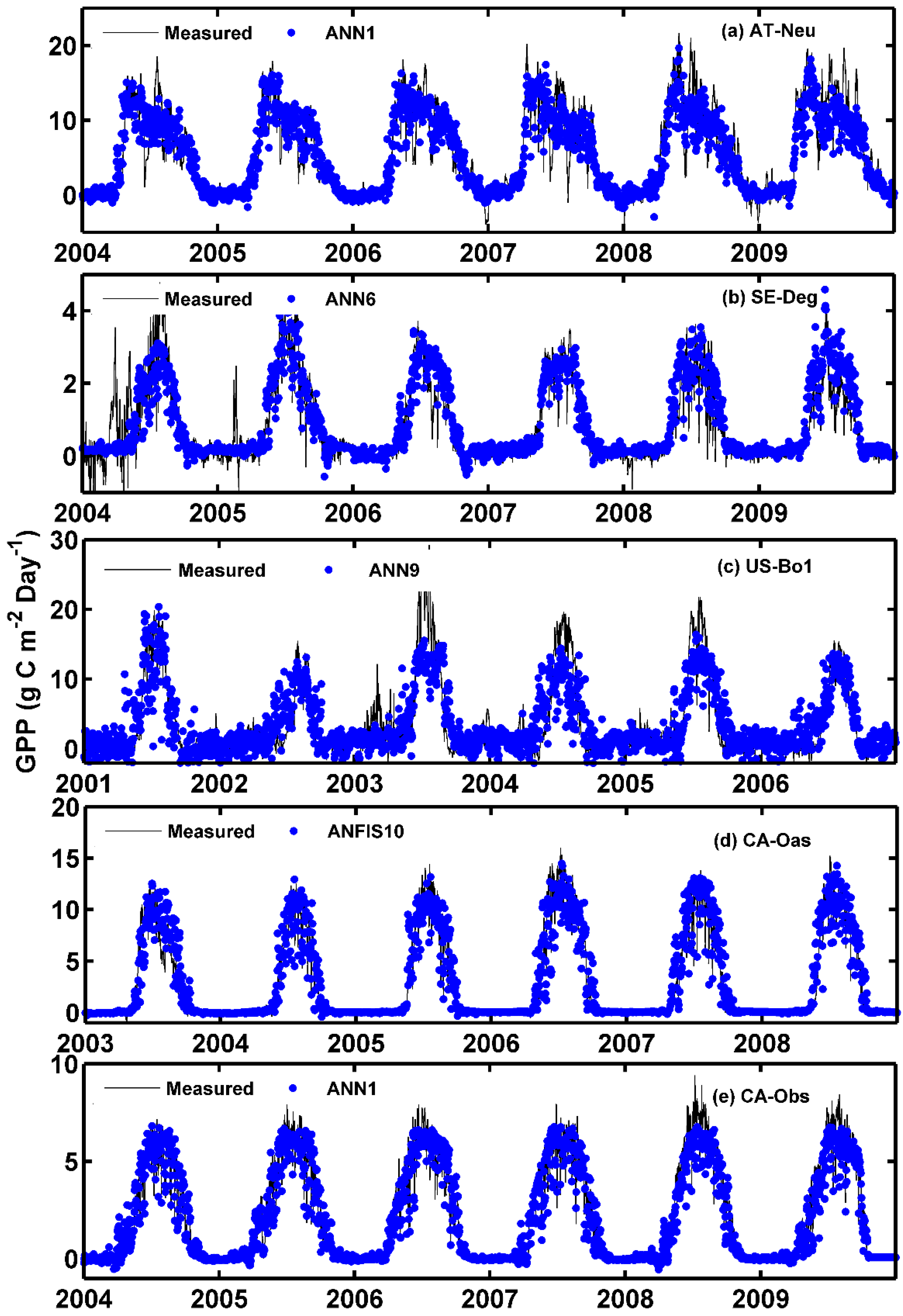

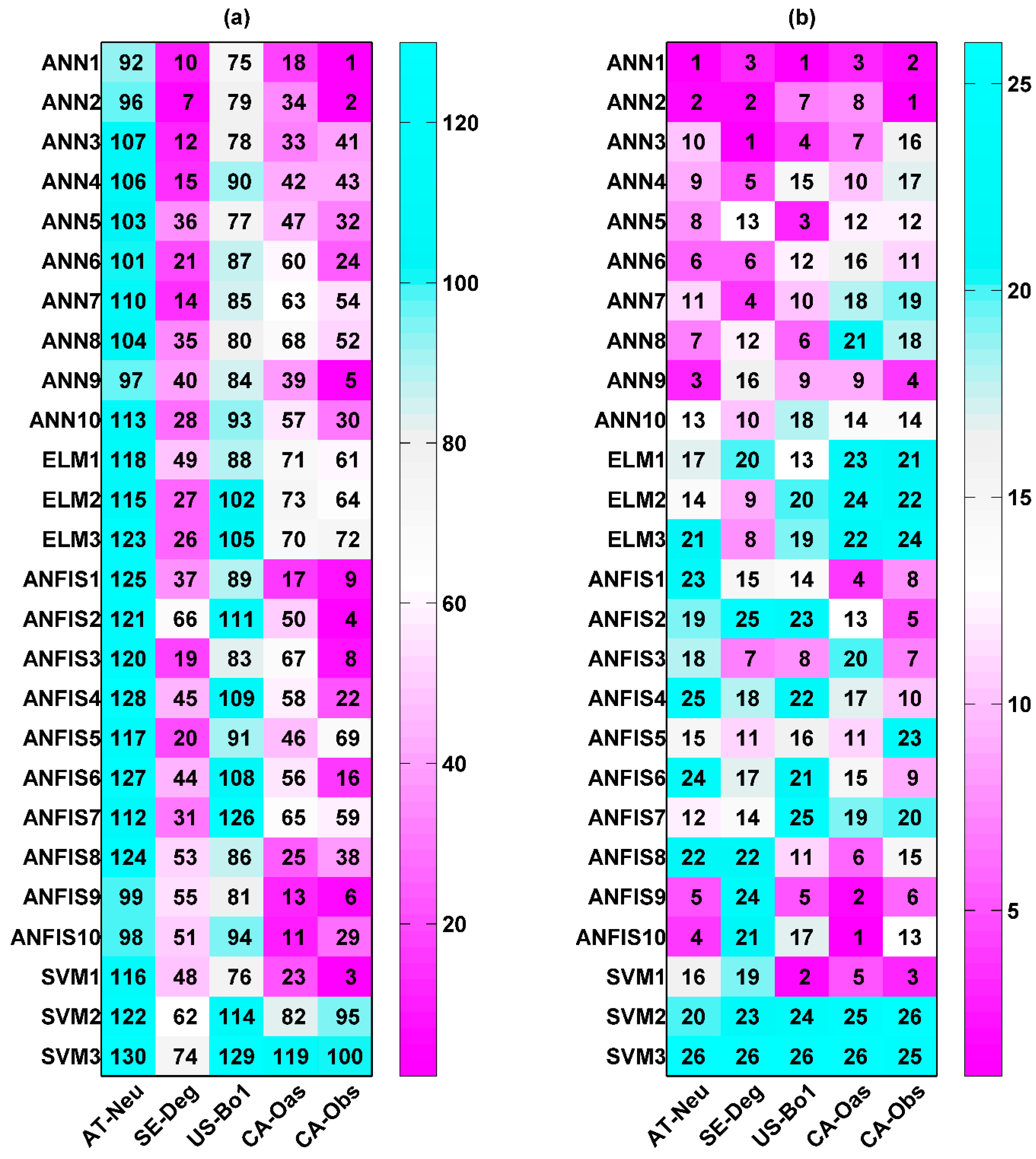

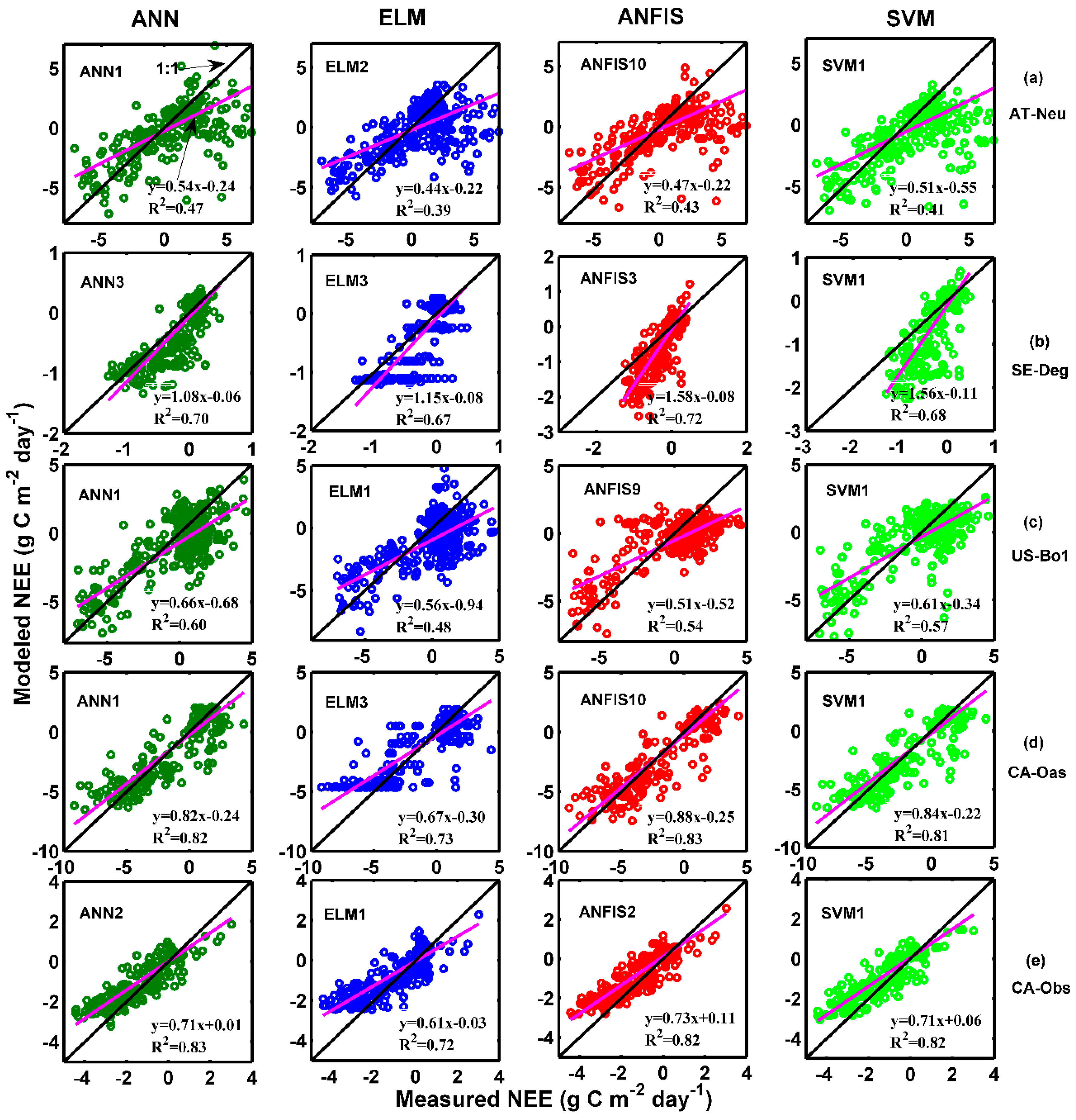

3.1. Evaluation of Model Performance for the GPP Flux in Different Ecosystems

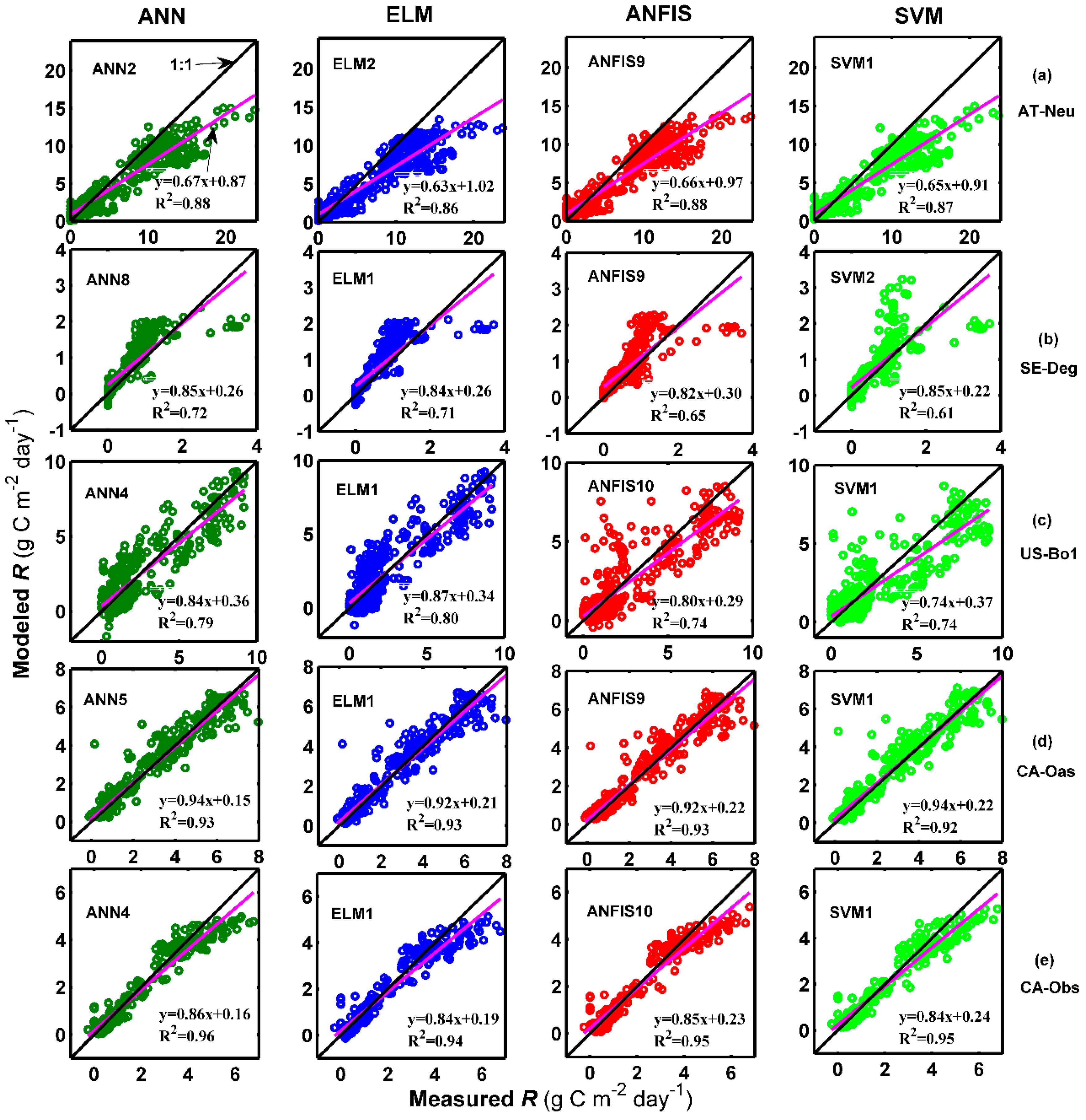

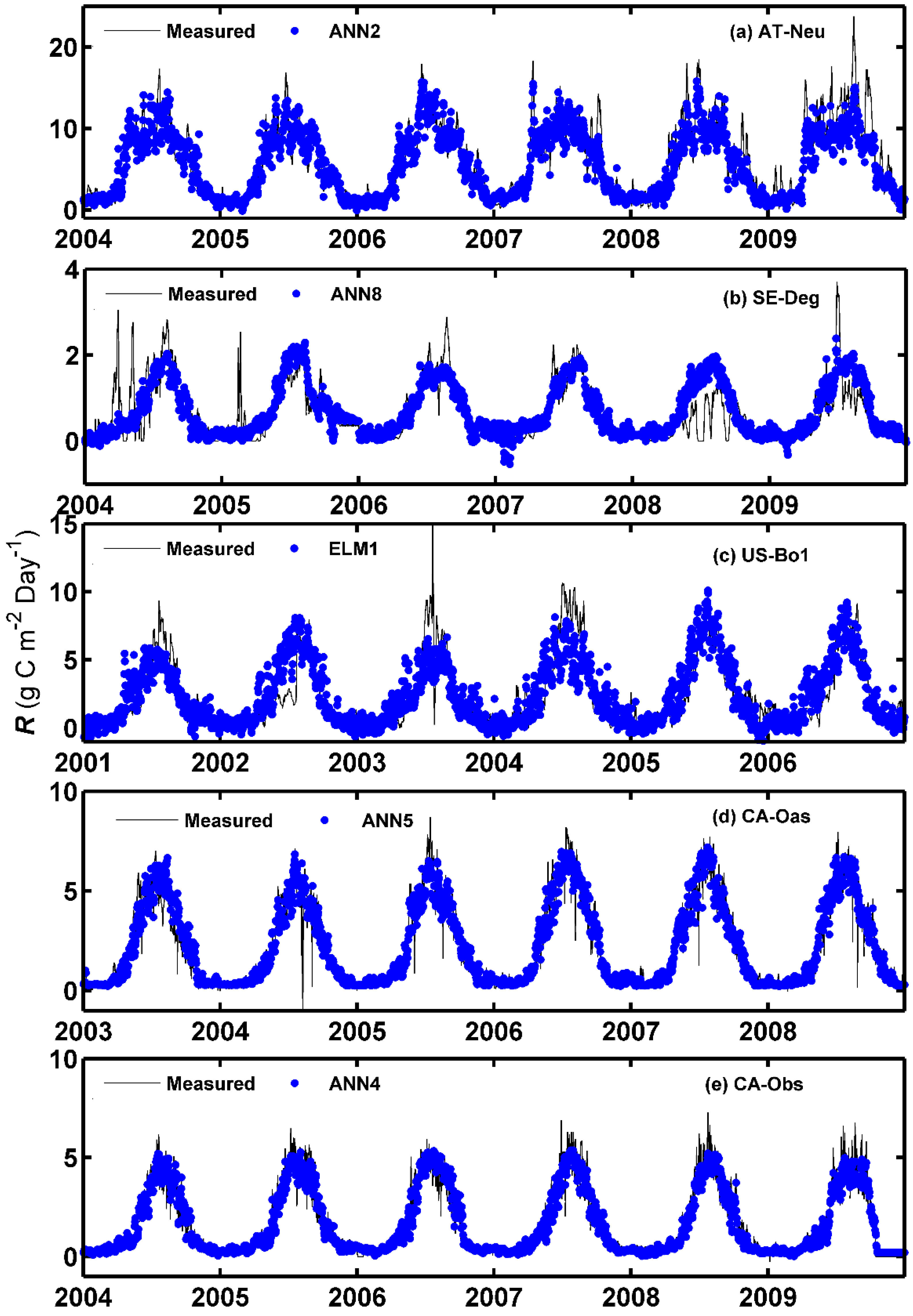

3.2. Evaluation of Model Performance for the R Flux in Different Ecosystems

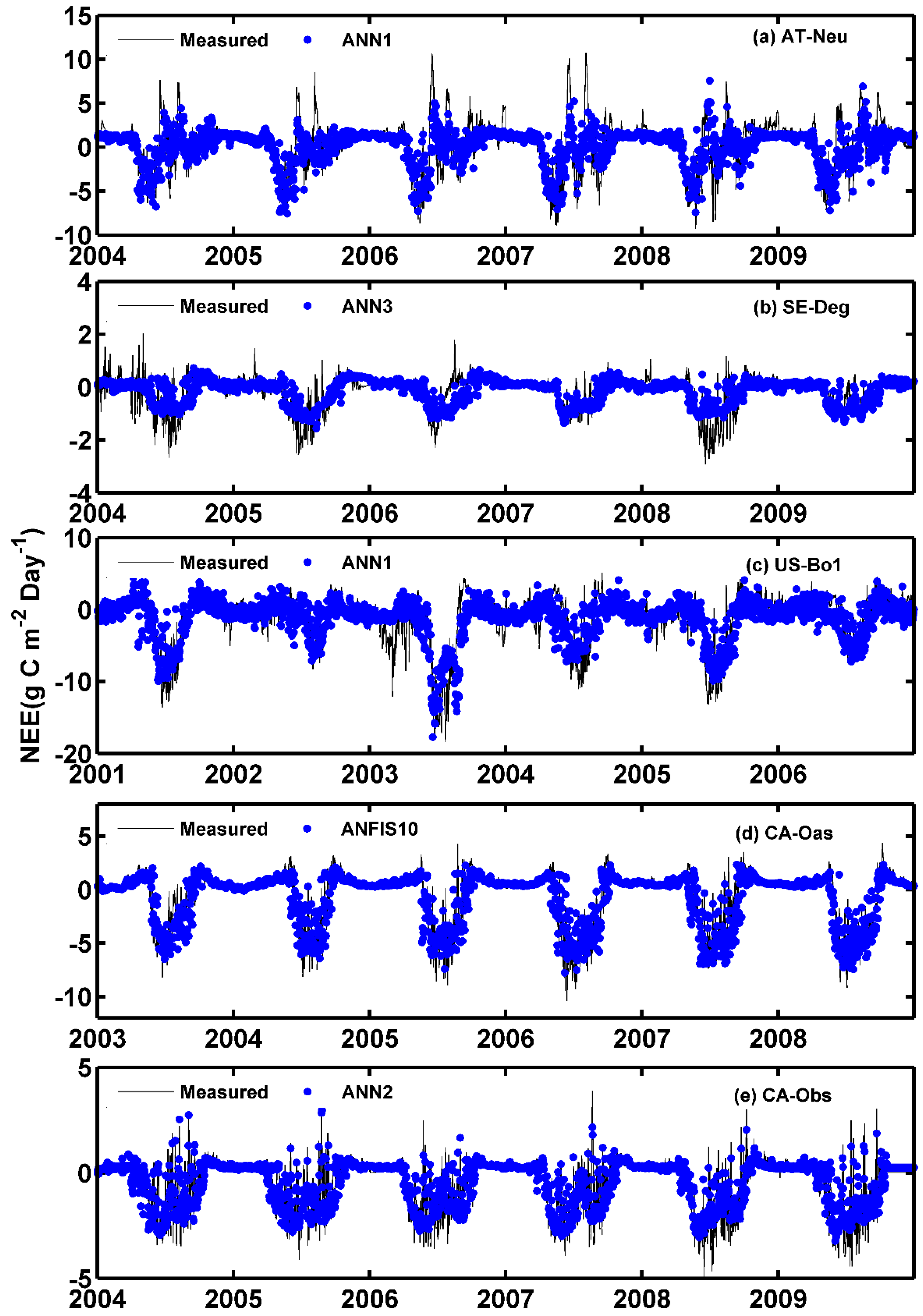

3.3. Evaluation of Model Performance for the NEE Flux in Different Ecosystems

4. Discussion

4.1. Modeling Capability of Machine Learning Approaches and Their Comparison

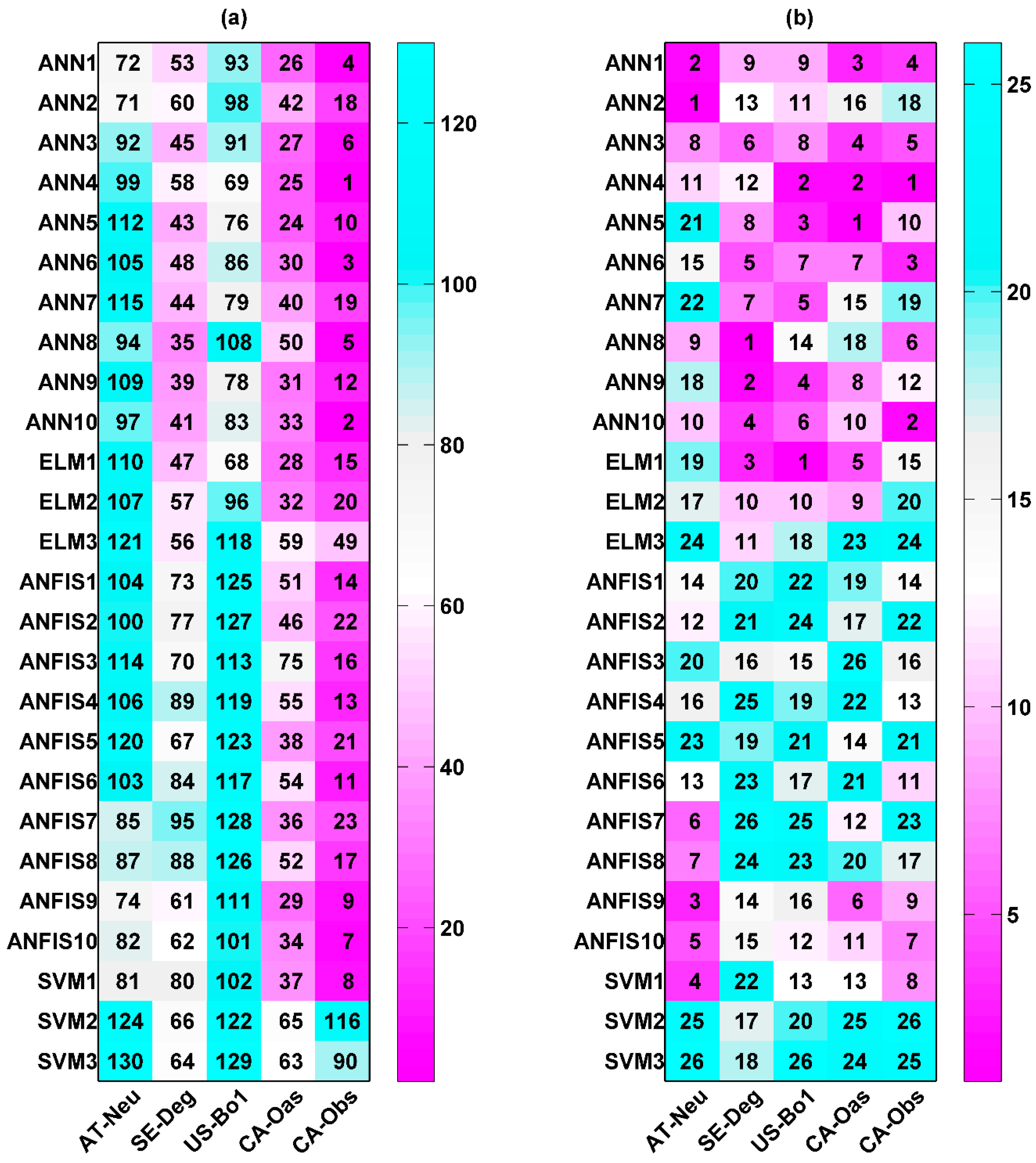

4.2. Effects of Different Inner Parameters on Their Corresponding Approaches

4.3. Difference in Forecasting Accuracy among Various Fluxes and Sites

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Green, J.K.; Konings, A.G.; Alemohammad, S.H.; Berry, J.; Entekhabi, D.; Kolassa, J.; Lee, J.-E.; Gentine, P. Regionally strong feedbacks between the atmosphere and terrestrial biosphere. Nat. Geosci. 2017, 10, 410–414.

- Schimel, D.; Pavlick, R.; Fisher, J.B.; Asner, G.P.; Saatchi, S.; Townsend, P.; Miller, C.; Frankenberg, C.; Hibbard, K.; Cox, P. Observing terrestrial ecosystems and the carbon cycle from space. Glob. Chang. Biol. 2015, 21, 1762–1776.

- Stoy, P.C.; Dietze, M.C.; Richardson, A.D.; Vargas, R.; Barr, A.G.; Anderson, R.S.; Arain, M.A.; Baker, I.T.; Black, T.A.; Chen, J.M.; et al. Evaluating the agreement between measurements and models of net ecosystem exchange at different times and timescales using wavelet coherence: An example using data from the North American Carbon Program Site-Level Interim Synthesis. Biogeosciences 2013, 10, 6893–6909.

- Frank, D.; Reichstein, M.; Bahn, M.; Frank, D.; Mahecha, M.D.; Smith, P.; Thonicke, K.; Velde, M.; Vicca, S.; Babst, F. Effects of climate extremes on the terrestrial carbon cycle: Concepts, processes and potential future impacts. Glob. Chang. Biol. 2015, 21, 2861–2880.

- Zhao, F.; Zeng, N.; Asrar, G.; Friedlingstein, P.; Ito, A.; Jain, A.; Kalnay, E.; Kato, E.; Koven, C.D.; Poulter, B.; et al. Role of CO2, climate and land use in regulating the seasonal amplitude increase of carbon fluxes in terrestrial ecosystems: A multimodel analysis. Biogeosciences 2016, 13, 5121–5137.

- Stassen, P. Carbon cycle: Global warming then and now. Nat. Geosci. 2016, 9, 268–269.

- Cramer, W.; Bondeau, A.; Woodward, F.I.; Prentice, I.C.; Betts, R.A.; Brovkin, V.; Cox, P.M.; Fisher, V.; Foley, J.A.; Friend, A.D. Global response of terrestrial ecosystem structure and function to CO2 and climate change: Results from six dynamic global vegetation models. Glob. Chang. Biol. 2001, 7, 357–373.

- Arneth, A.; Harrison, S.P.; Zaehle, S.; Tsigaridis, K.; Menon, S.; Bartlein, P.J.; Feichter, J.; Korhola, A.; Kulmala, M.; O’Donnell, D. Terrestrial biogeochemical feedbacks in the climate system. Nat. Geosci. 2010, 3, 525–532.

- Luo, Y. Terrestrial carbon–cycle feedback to climate warming. Annu. Rev. Ecol. Evol. Syst. 2007, 38, 683–712.

- Schwalm, C.R.; Huntzinger, D.N.; Fisher, J.B.; Michalak, A.M.; Bowman, K.; Ciais, P.; Cook, R.; El-Masri, B.; Hayes, D.; Huang, M. Toward “optimal” integration of terrestrial biosphere models. Geophys. Res. Lett. 2015, 42, 4418–4428.

- Zscheischler, J.; Michalak, A.M.; Schwalm, C.; Mahecha, M.D.; Huntzinger, D.N.; Reichstein, M.; Berthier, G.; Ciais, P.; Cook, R.B.; El-Masri, B.; et al. Impact of large-scale climate extremes on biospheric carbon fluxes: An intercomparison based on MsTMIP data. Glob. Biogeochem. Cycles 2014, 28, 585–600.

- Bauerle, W.L.; Daniels, A.B.; Barnard, D.M. Carbon and water flux responses to physiology by environment interactions: A sensitivity analysis of variation in climate on photosynthetic and stomatal parameters. Clim. Dynam. 2014, 42, 2539–2554.

- Walker, A.P.; Zaehle, S.; Medlyn, B.E.; De Kauwe, M.G.; Asao, S.; Hickler, T.; Parton, W.; Ricciuto, D.M.; Wang, Y.-P.; Wårlind, D.; et al. Predicting long-term carbon sequestration in response to CO2 enrichment: How and why do current ecosystem models differ? Glob. Biogeochem. Cycles 2015, 29, 476–495.

- Raczka, B.M.; Davis, K.J.; Huntzinger, D.; Neilson, R.P.; Poulter, B.; Richardson, A.D.; Xiao, J.; Baker, I.; Ciais, P.; Keenan, T.F. Evaluation of continental carbon cycle simulations with North American flux tower observations. Ecol. Monogr. 2013, 83, 531–556.

- Schindler, D.E.; Hilborn, R. Prediction, precaution, and policy under global change. Science 2015, 347, 953–954.

- Ye, H.; Beamish, R.J.; Glaser, S.M.; Grant, S.C.H.; Hsieh, C.-h.; Richards, L.J.; Schnute, J.T.; Sugihara, G. Equation-free mechanistic ecosystem forecasting using empirical dynamic modeling. Proc. Nat. Acad. Sci. USA 2015, 112, E1569–E1576.

- Crisci, C.; Ghattas, B.; Perera, G. A review of supervised machine learning algorithms and their applications to ecological data. Ecol. Model. 2012, 240, 113–122.

- Olden, J.D.; Lawler, J.J.; Poff, N.L. Machine learning methods without tears: A primer for ecologists. Q. Rev. Biol. 2008, 83, 171–193.

- Michener, W.K.; Jones, M.B. Ecoinformatics: Supporting ecology as a data-intensive science. Trends Ecol. Evol. 2012, 27, 85–93.

- Vahedi, A.A. Artificial neural network application in comparison with modeling allometric equations for predicting above-ground biomass in the Hyrcanian mixed-beech forests of Iran. Biomass Bioenerg. 2016, 88, 66–76.

- Dengel, S.; Zona, D.; Sachs, T.; Aurela, M.; Jammet, M.; Parmentier, F.-J.W.; Oechel, W.; Vesala, T. Testing the applicability of neural networks as a gap-filling method using CH4 flux data from high latitude wetlands. Biogeosciences 2013, 10, 8185–8200.

- Saigusa, N.; Ichii, K.; Murakami, H.; Hirata, R.; Asanuma, J.; Den, H.; Han, S.J.; Ide, R.; Li, S.G.; Ohta, T. Impact of meteorological anomalies in the 2003 summer on gross primary productivity in East Asia. Biogeosciences 2010, 7, 641–655.

- Verrelst, J.; Muñoz, J.; Alonso, L.; Delegido, J.; Rivera, J.P.; Camps-Valls, G.; Moreno, J. Machine learning regression algorithms for biophysical parameter retrieval: Opportunities for Sentinel-2 and -3. Remote Sens. Environ. 2012, 118, 127–139.

- Huntzinger, D.N.; Post, W.M.; Wei, Y.; Michalak, A.M.; West, T.O.; Jacobson, A.R.; Baker, I.T.; Chen, J.M.; Davis, K.J.; Hayes, D.J.; et al. North American Carbon Program (NACP) regional interim synthesis: Terrestrial biospheric model intercomparison. Ecol. Model. 2012, 232, 144–157.

- Beer, C.; Reichstein, M.; Tomelleri, E.; Ciais, P.; Jung, M.; Carvalhais, N.; Rödenbeck, C.; Arain, M.A.; Baldocchi, D.; Bonan, G.B. Terrestrial gross carbon dioxide uptake: Global distribution and covariation with climate. Science 2010, 329, 834–838.

- Papale, D.; Black, T.A.; Carvalhais, N.; Cescatti, A.; Chen, J.; Jung, M.; Kiely, G.; Lasslop, G.; Mahecha, M.D.; Margolis, H.; et al. Effect of spatial sampling from European flux towers for estimating carbon and water fluxes with artificial neural networks. J. Geophys. Res. Biogeosci. 2015, 120, 1941–1957.

- Dou, X.; Chen, B.; Black, T.; Jassal, R.; Che, M. Impact of nitrogen fertilization on forest carbon sequestration and water loss in a chronosequence of three Douglas-fir stands in the Pacific Northwest. Forests 2015, 6, 1897–1921.

- Evrendilek, F. Quantifying biosphere–atmosphere exchange of CO2 using eddy covariance, wavelet denoising, neural networks, and multiple regression models. Agric. For. Meteorol. 2013, 171, 1–8.

- Maier, H.R.; Dandy, G.C. Neural network based modelling of environmental variables: A systematic approach. Math. Comput. Model. 2001, 33, 669–682.

- Raghavendra, N.S.; Deka, P.C. Support vector machine applications in the field of hydrology: A review. Appl. Soft Comput. 2014, 19, 372–386.

- Şahin, M.; Kaya, Y.; Uyar, M.; Yıldırım, S. Application of extreme learning machine for estimating solar radiation from satellite data. Int. J. Energy Res. 2014, 38, 205–212.

- Olatomiwa, L.; Mekhilef, S.; Shamshirband, S.; Petković, D. Adaptive neuro-fuzzy approach for solar radiation prediction in Nigeria. Renew. Sust. Energy Rev. 2015, 51, 1784–1791.

- Mohammadi, K.; Shamshirband, S.; Yee, P.L.; Petković, D.; Zamani, M.; Ch, S. Predicting the wind power density based upon extreme learning machine. Energy 2015, 86, 232–239.

- Osório, G.J.; Matias, J.C.O.; Catalão, J.P.S. Short-term wind power forecasting using adaptive neuro-fuzzy inference system combined with evolutionary particle swarm optimization, wavelet transform and mutual information. Renew. Energy 2015, 75, 301–307.

- Shamshirband, S.; Mohammadi, K.; Tong, C.W.; Petković, D.; Porcu, E.; Mostafaeipour, A.; Ch, S.; Sedaghat, A. Application of extreme learning machine for estimation of wind speed distribution. Clim. Dynam. 2015, 46, 1893–1907.

- Yaseen, Z.M.; Jaafar, O.; Deo, R.C.; Kisi, O.; Adamowski, J.; Quilty, J.; El-Shafie, A. Stream-flow forecasting using extreme learning machines: A case study in a semi-arid region in Iraq. J. Hydrol. 2016, 542, 603–614.

- Kisi, O. Streamflow forecasting and estimation using least square support vector regression and adaptive neuro-fuzzy embedded fuzzy c-means clustering. Water Resour. Manag. 2015, 29, 5109–5127.

- Abdullah, S.S.; Malek, M.A.; Abdullah, N.S.; Kisi, O.; Yap, K.S. Extreme learning machines: A new approach for prediction of reference evapotranspiration. J. Hydrol. 2015, 527, 184–195.

- Gavili, S.; Sanikhani, H.; Kisi, O.; Mahmoudi, M.H. Evaluation of several soft computing methods in monthly evapotranspiration modelling. Meteorol. Appl. 2018, 25, 128–138.

- Tezel, G.; Buyukyildiz, M. Monthly evaporation forecasting using artificial neural networks and support vector machines. Theor. Appl. Climatol. 2016, 124, 69–80.

- Moosavi, V.; Vafakhah, M.; Shirmohammadi, B.; Behnia, N. A wavelet-ANFIS hybrid model for groundwater level forecasting for different prediction periods. Water Resour. Manag. 2013, 27, 1301–1321.

- Zha, T.; Barr, A.G.; van der Kamp, G.; Black, T.A.; McCaughey, J.H.; Flanagan, L.B. Interannual variation of evapotranspiration from forest and grassland ecosystems in Western Canada in relation to drought. Agric. For. Meteorol. 2010, 150, 1476–1484.

- Wohlfahrt, G.; Hammerle, A.; Haslwanter, A.; Bahn, M.; Tappeiner, U.; Cernusca, A. Seasonal and inter-annual variability of the net ecosystem CO2 exchange of a temperate mountain grassland: Effects of climate and management. J. Geophys. Res.-Atmos. 2008, 113, D8.

- Lund, M.; Lafleur, P.M.; Roulet, N.T.; Lindroth, A.; Christensen, T.R.; Aurela, M.; Chojnicki, B.H.; Flanagan, L.B.; Humphreys, E.R.; Laurila, T. Variability in exchange of CO2 across 12 northern peatland and tundra sites. Glob. Chang. Biol. 2010, 16, 2436–2448.

- Meyers, T.P.; Hollinger, S.E. An assessment of storage terms in the surface energy balance of maize and soybean. Agric. For. Meteorol. 2004, 125, 105–115.

- Griffis, T.J.; Black, T.A.; Morgenstern, K.; Barr, A.G.; Nesic, Z.; Drewitt, G.B.; Gaumont-Guay, D.; McCaughey, J.H. Ecophysiological controls on the carbon balances of three southern boreal forests. Agric. For. Meteorol. 2003, 117, 53–71.

- Krishnan, P.; Black, T.A.; Barr, A.G.; Grant, N.J.; Gaumont-Guay, D.; Nesic, Z. Factors controlling the interannual variability in the carbon balance of a southern boreal black spruce forest. J. Geophys. Res. 2008, 113, D09109.

- Baldocchi, D. Measuring fluxes of trace gases and energy between ecosystems and the atmosphere–the state and future of the eddy covariance method. Glob. Chang. Biol. 2014, 20, 3600–3609.

- Moffat, A.M.; Papale, D.; Reichstein, M.; Hollinger, D.Y.; Richardson, A.D.; Barr, A.G.; Beckstein, C.; Braswell, B.H.; Churkina, G.; Desai, A.R.; et al. Comprehensive comparison of gap-filling techniques for eddy covariance net carbon fluxes. Agric. For. Meteorol. 2007, 147, 209–232.

- Yaseen, Z.M.; Ebtehaj, I.; Bonakdari, H.; Deo, R.C.; Mehr, A.D.; Mohtar, W.H.M.W.; Diop, L.; El-Shafie, A.; Singh, V.P. Novel approach for streamflow forecasting using a hybrid ANFIS-FFA model. J. Hydrol. 2017, 554, 263–276.

- Seo, Y.; Kim, S.; Kisi, O.; Singh, V.P. Daily water level forecasting using wavelet decomposition and artificial intelligence techniques. J. Hydrol. 2015, 520, 224–243.

- Lohani, A.K.; Goel, N.K.; Bhatia, K.K.S. Improving real time flood forecasting using fuzzy inference system. J. Hydrol. 2014, 509, 25–41.

- Takagi, T.; Sugeno, M. Fuzzy identification of systems and its applications to modeling and control. IEEE Trans. Syst. Man Cybern. 1985, 15, 116–132.

- Huang, G.-B.; Zhu, Q.-Y.; Siew, C.-K. Extreme learning machine: Theory and applications. Neurocomputing 2006, 70, 489–501.

- Huang, G.-B.; Wang, D.H.; Lan, Y. Extreme learning machines: A survey. Int. J. Mach. Learn. Cybern. 2011, 2, 107–122.

- Ding, S.; Xu, X.; Nie, R. Extreme learning machine and its applications. Neural Comput. Appl. 2014, 25, 549–556.

- Pirdashti, M.; Curteanu, S.; Kamangar, M.H.; Hassim, M.H.; Khatami, M.A. Artificial neural networks: Applications in chemical engineering. Rev. Chem. Eng. 2013, 29, 205–239.

- Shahin, M.A.; Jaksa, M.B.; Maier, H.R. State of the art of artificial neural networks in geotechnical engineering. EJGE 2008, 8, 1–26.

- Kamp, R.; Savenije, H. Hydrological model coupling with ANNs. Hydrol. Earth Syst. Sc. 2007, 11, 1869–1881.

- Dawson, C.W.; Wilby, R.L. Hydrological modelling using artificial neural networks. Prog. Phys. Geogr. 2001, 25, 80–108.

- Funahashi, K.-I. On the approximate realization of continuous mappings by neural networks. Neural Netw. 1989, 2, 183–192.

- Cybenko, G. Approximation by superpositions of a sigmoidal function. MCSS 1989, 2, 303–314.

- Abrahart, R.J.; Anctil, F.; Coulibaly, P.; Dawson, C.W.; Mount, N.J.; See, L.M.; Shamseldin, A.Y.; Solomatine, D.P.; Toth, E.; Wilby, R.L. Two decades of anarchy? Emerging themes and outstanding challenges for neural network river forecasting. Prog. Phys. Geogr. 2012, 36, 480–513.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Vapnik, V.N. An overview of statistical learning theory. IEEE Trans. Neural Netw. 1999, 10, 988–999.

- Smola, A.J.; Schölkopf, B. A tutorial on support vector regression. Stat. Comput. 2004, 14, 199–222.

- Ding, Y.; Song, X.; Zen, Y. Forecasting financial condition of Chinese listed companies based on support vector machine. Expert Syst. Appl. 2008, 34, 3081–3089.

- Mountrakis, G.; Im, J.; Ogole, C. Support vector machines in remote sensing: A review. ISPRS J. Photogramm. 2011, 66, 247–259.

- Min, J.H.; Lee, Y.-C. Bankruptcy prediction using support vector machine with optimal choice of kernel function parameters. Expert Syst. Appl. 2005, 28, 603–614.

- Chang, C.-C.; Lin, C.-J. LIBSVM: A library for support vector machines. ACM Trans. Intell. Syst. Technol. 2011, 2, 27.

- Wen, X.; Zhao, Z.; Deng, X.; Xiang, W.; Tian, D.; Yan, W.; Zhou, X.; Peng, C. Applying an artificial neural network to simulate and predict Chinese fir (Cunninghamia lanceolata) plantation carbon flux in subtropical China. Ecol. Model. 2014, 294, 19–26.

- Evrendilek, F. Assessing neural networks with wavelet denoising and regression models in predicting diel dynamics of eddy covariance-measured latent and sensible heat fluxes and evapotranspiration. Neural Comput. Appl. 2012, 24, 327–337.

- Zounemat-Kermani, M.; Kişi, Ö.; Adamowski, J.; Ramezani-Charmahineh, A. Evaluation of data driven models for river suspended sediment concentration modeling. J. Hydrol. 2016, 535, 457–472.

- Tabari, H.; Martinez, C.; Ezani, A.; Talaee, P.H. Applicability of support vector machines and adaptive neurofuzzy inference system for modeling potato crop evapotranspiration. Irrig. Sci. 2013, 31, 575–588.

- Mitchell, S.R.; Emanuel, R.E.; McGlynn, B.L. Land–atmosphere carbon and water flux relationships to vapor pressure deficit, soil moisture, and stream flow. Agric. For. Meteorol. 2015, 208, 108–117.

- van der Molen, M.K.; Dolman, A.J.; Ciais, P.; Eglin, T.; Gobron, N.; Law, B.E.; Meir, P.; Peters, W.; Phillips, O.L.; Reichstein, M.; et al. Drought and ecosystem carbon cycling. Agric. For. Meteorol. 2011, 151, 765–773.

- Petrie, M.D.; Brunsell, N.A.; Vargas, R.; Collins, S.L.; Flanagan, L.B.; Hanan, N.P.; Litvak, M.E.; Suyker, A.E. The sensitivity of carbon exchanges in Great Plains grasslands to precipitation variability. J. Geophys. Res.-Biogeosci. 2016, 121, 280–294.

- Rowland, L.; Lobo-do-Vale, R.L.; Christoffersen, B.O.; Melem, E.A.; Kruijt, B.; Vasconcelos, S.S.; Domingues, T.; Binks, O.J.; Oliveira, A.A.; Metcalfe, D.; et al. After more than a decade of soil moisture deficit, tropical rainforest trees maintain photosynthetic capacity, despite increased leaf respiration. Glob. Chang. Biol. 2015, 21, 4662–4672.

- Mystakidis, S.; Seneviratne, S.I.; Gruber, N.; Davin, E.L. Hydrological and biogeochemical constraints on terrestrial carbon cycle feedbacks. Environ. Res. Lett. 2017, 12, 014009.

- Loescher, H.W.; Law, B.E.; Mahrt, L.; Hollinger, D.Y.; Campbell, J.; Wofsy, S.C. Uncertainties in, and interpretation of, carbon flux estimates using the eddy covariance technique. J. Geophys. Res.-Atmos. 2006, 111.

- Franssen, H.J.H.; Stöckli, R.; Lehner, I.; Rotenberg, E.; Seneviratne, S.I. Energy balance closure of eddy-covariance data: A multisite analysis for European FLUXNET stations. Agric. For. Meteorol. 2010, 150, 1553–1567.

- Reichstein, M.; Falge, E.; Baldocchi, D.; Papale, D.; Aubinet, M.; Berbigier, P.; Bernhofer, C.; Buchmann, N.; Gilmanov, T.; Granier, A.; et al. On the separation of net ecosystem exchange into assimilation and ecosystem respiration: Review and improved algorithm. Glob. Chang. Biol. 2005, 11, 1424–1439.

- Soloway, A.D.; Amiro, B.D.; Dunn, A.L.; Wofsy, S.C. Carbon neutral or a sink? Uncertainty caused by gap-filling long-term flux measurements for an old-growth boreal black spruce forest. Agric. For. Meteorol. 2017, 233, 110–121.

- Ainsworth, E.A.; Rogers, A. The response of photosynthesis and stomatal conductance to rising [CO2]: Mechanisms and environmental interactions. Plant Cell Environ. 2007, 30, 258–270.

- Xue, W.; Lindner, S.; Dubbert, M.; Otieno, D.; Ko, J.; Muraoka, H.; Werner, C.; Tenhunen, J. Supplement understanding of the relative importance of biophysical factors in determination of photosynthetic capacity and photosynthetic productivity in rice ecosystems. Agric. For. Meteorol. 2017, 232, 550–565.

- Flanagan, L.B.; Sharp, E.J.; Gamon, J.A. Application of the photosynthetic light-use efficiency model in a northern Great Plains grassland. Remote Sens. Environ. 2015, 168, 239–251.

- Stoy, P.; Richardson, A.; Baldocchi, D.; Katul, G.; Stanovick, J.; Mahecha, M.; Reichstein, M.; Detto, M.; Law, B.; Wohlfahrt, G. Biosphere-atmosphere exchange of CO2 in relation to climate: A cross-biome analysis across multiple time scales. Biogeosciences 2009, 6, 2297–2312.

- Zhu, L.; Johnson, D.A.; Wang, W.; Ma, L.; Rong, Y. Grazing effects on carbon fluxes in a northern China grassland. J. Arid Environ. 2015, 114, 41–48.

- Texeira, M.; Oyarzabal, M.; Pineiro, G.; Baeza, S.; Paruelo, J.M. Land cover and precipitation controls over long-term trends in carbon gains in the grassland biome of South America. Ecosphere 2015, 6, 1–21.

- Zhou, X.; Sherry, R.A.; An, Y.; Wallace, L.L.; Luo, Y. Main and interactive effects of warming, clipping, and doubled precipitation on soil CO2 efflux in a grassland ecosystem. Glob. Biogeochem. Cycles 2006, 20.

- Buysse, P.; Bodson, B.; Debacq, A.; De Ligne, A.; Heinesch, B.; Manise, T.; Moureaux, C.; Aubinet, M. Carbon budget measurement over 12 years at a crop production site in the silty-loam region in Belgium. Agric. For. Meteorol. 2017, 246, 241–255.

- Aubinet, M.; Moureaux, C.; Bodson, B.; Dufranne, D.; Heinesch, B.; Suleau, M.; Vancutsem, F.; Vilret, A. Carbon sequestration by a crop over a 4-year sugar beet/winter wheat/seed potato/winter wheat rotation cycle. Agric. For. Meteorol. 2009, 149, 407–418.

- Verma, S.B.; Dobermann, A.; Cassman, K.G.; Walters, D.T.; Knops, J.M.; Arkebauer, T.J.; Suyker, A.E.; Burba, G.G.; Amos, B.; Yang, H.; et al. Annual carbon dioxide exchange in irrigated and rainfed maize-based agroecosystems. Agric. For. Meteorol. 2005, 131, 77–96.

| Site | Latitude (°N) | Longitude (°E) | Elevation (m) | MAT (°C) | TAP (mm) | Vegetation | Period | Reference |
|---|---|---|---|---|---|---|---|---|
| AT-Neu | 47.12 | 11.32 | 970 | 6.68 | 669 | GRA | 2004–2009 | Wohlfahrt et al. [43] |
| SE-Deg | 64.18 | 19.55 | 270 | 2.90 | 436 | WET | 2004–2009 | Lund et al. [44] |
| US-Bo1 | 40.01 | −88.29 | 219 | 11.52 | 785 | CRO | 2001–2006 | Meyers and Hollinger [45] |
| CA-Oas | 53.63 | −106.20 | 601 | 1.82 | 343 | DBF | 2003–2008 | Griffis et al. [46] |
| CA-Obs | 53.99 | −105.12 | 629 | 1.00 | 373 | ENF | 2004–2009 | Krishnan et al. [47] |

| Stand | Variable | Xmean | Xmax | Xmin | Xsd | Xku | Xsk | CCGPP | CCR | CCNEE |
|---|---|---|---|---|---|---|---|---|---|---|
| AT-Neu | Ta | 6.68 | 22.96 | −17.63 | 8.19 | 2.24 | −0.29 | 0.80 | 0.88 | −0.23 |
| | Rn | 4.24 | 16.51 | −6.44 | 5.33 | 1.97 | 0.16 | 0.81 | 0.77 | −0.43 |
| | Rh | 79.80 | 99.98 | 44.36 | 10.98 | 2.52 | −0.46 | −0.39 | −0.35 | 0.24 |
| | Ts | 8.71 | 21.28 | −2.56 | 6.86 | 1.50 | 0.01 | 0.81 | 0.88 | −0.24 |
| SE-Deg | Ta | 2.90 | 28.40 | −26.79 | 9.43 | 2.90 | −0.08 | 0.77 | 0.74 | −0.54 |
| | Rn | 2.43 | 14.72 | −12.74 | 4.33 | 2.41 | 0.54 | 0.67 | 0.50 | −0.62 |
| | Rh | 81.04 | 100.00 | 37.50 | 13.10 | 2.56 | −0.62 | −0.45 | −0.28 | 0.48 |
| | Ts | 4.98 | 16.75 | −0.31 | 4.99 | 1.89 | 0.61 | 0.79 | 0.73 | −0.59 |
| US-Bo1 | Ta | 11.52 | 29.21 | −20.46 | 10.31 | 2.09 | −0.33 | 0.52 | 0.72 | −0.28 |
| | Rn | 6.37 | 23.14 | −4.31 | 6.12 | 2.13 | 0.58 | 0.38 | 0.48 | −0.24 |
| | Rh | 74.80 | 100.00 | 17.98 | 17.46 | 3.60 | −0.97 | −0.01 | −0.02 | 0.01 |
| | Ts | 12.30 | 30.63 | −5.35 | 9.29 | 1.65 | 0.03 | 0.58 | 0.76 | −0.36 |
| CA-Oas | Ta | 1.82 | 26.46 | −35.04 | 13.02 | 2.22 | −0.40 | 0.70 | 0.82 | −0.50 |
| | Rn | 5.19 | 18.94 | −4.67 | 5.66 | 2.02 | 0.45 | 0.69 | 0.68 | −0.60 |
| | Rh | 69.88 | 98.78 | 21.83 | 16.15 | 2.62 | −0.52 | −0.18 | −0.21 | 0.13 |
| | Ts | 4.73 | 17.31 | −5.81 | 5.81 | 1.69 | 0.30 | 0.83 | 0.93 | −0.64 |
| CA-Obs | Ta | 1.00 | 26.37 | −35.31 | 13.15 | 2.15 | −0.34 | 0.83 | 0.81 | −0.58 |
| | Rn | 6.71 | 21.90 | −4.28 | 6.17 | 2.05 | 0.48 | 0.72 | 0.56 | −0.71 |
| | Rh | 72.35 | 100.00 | 24.56 | 16.69 | 2.46 | −0.43 | −0.31 | −0.16 | 0.42 |
| | Ts | 3.19 | 15.41 | −9.18 | 4.99 | 1.99 | 0.58 | 0.87 | 0.94 | −0.48 |
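
The descriptors in the table above (mean, maximum, minimum, standard deviation, kurtosis, skewness, and correlation with each flux) can be reproduced for any input series along the following lines. This is only a sketch: it assumes the sample (n − 1) form of the standard deviation, non-excess kurtosis (a normal distribution scores 3), and Pearson correlation for the CC columns. The function names `describe` and `pearson` are illustrative, not taken from the study.

```python
import math


def describe(values):
    """Summary statistics for one input variable (for example daily Ta)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two values")
    mean = sum(values) / n
    # Central moments in population form.
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    m4 = sum((v - mean) ** 4 for v in values) / n
    return {
        "mean": mean,
        "max": max(values),
        "min": min(values),
        "sd": math.sqrt(m2 * n / (n - 1)),  # assumption: sample standard deviation
        "ku": m4 / m2 ** 2,                 # assumption: non-excess kurtosis
        "sk": m3 / m2 ** 1.5,               # moment-based skewness
    }


def pearson(x, y):
    """Pearson correlation between an input variable and a flux series."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("series must have equal length of at least two")
    mx = sum(x) / n
    my = sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Illustrative values only (not data from the study).
stats = describe([1.0, 2.0, 3.0, 4.0, 5.0])
cc = pearson([1.0, 2.0, 3.0], [2.0, 4.0, 6.0])
print(stats, cc)
```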

| Stand | Model | Training R2 | Training IA | Training RMSE | Training MAE | Validation R2 | Validation IA | Validation RMSE | Validation MAE | Testing R2 | Testing IA | Testing RMSE | Testing MAE |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| AT-Neu | ANN1 | 0.8312 | 0.9529 | 2.2268 | 1.4728 | 0.8529 | 0.9468 | 2.5424 | 1.9219 | 0.8519 | 0.9432 | 2.6885 | 1.9724 |
| | ELM1 | 0.7760 | 0.9340 | 2.5641 | 1.7764 | 0.8225 | 0.9278 | 2.8483 | 2.1407 | 0.8201 | 0.9222 | 3.0660 | 2.3044 |
| | ANFIS10 | 0.8363 | 0.9540 | 2.1918 | 1.4487 | 0.8422 | 0.9398 | 2.6682 | 1.9318 | 0.8486 | 0.9412 | 2.7268 | 1.9568 |
| | SVM1 | 0.8377 | 0.9556 | 2.2129 | 1.3228 | 0.8349 | 0.9423 | 2.6402 | 1.9385 | 0.8433 | 0.9434 | 2.7004 | 1.9075 |
| SE-Deg | ANN6 | 0.7951 | 0.9418 | 0.5184 | 0.3462 | 0.8303 | 0.9382 | 0.5019 | 0.3544 | 0.9140 | 0.9373 | 0.5116 | 0.3679 |
| | ELM2 | 0.7952 | 0.9405 | 0.5175 | 0.3545 | 0.8204 | 0.9352 | 0.5352 | 0.3571 | 0.9059 | 0.9340 | 0.5389 | 0.3642 |
| | ANFIS10 | 0.8277 | 0.9512 | 0.4747 | 0.3181 | 0.8314 | 0.9297 | 0.5674 | 0.3803 | 0.8884 | 0.9158 | 0.6206 | 0.4122 |
| | SVM1 | 0.8743 | 0.9661 | 0.4068 | 0.2307 | 0.8310 | 0.9172 | 0.6543 | 0.4174 | 0.8483 | 0.9029 | 0.6782 | 0.4093 |
| US-Bo1 | ANN9 | 0.6058 | 0.8653 | 3.6494 | 2.5147 | 0.7233 | 0.9074 | 3.1101 | 2.2727 | 0.7128 | 0.9127 | 2.5075 | 1.9127 |
| | ELM1 | 0.4532 | 0.7804 | 4.2979 | 2.8972 | 0.8078 | 0.9459 | 2.6739 | 1.9537 | 0.7248 | 0.9017 | 2.6946 | 2.0094 |
| | ANFIS5 | 0.7113 | 0.9102 | 3.1227 | 2.0373 | 0.7606 | 0.9323 | 2.9541 | 2.0404 | 0.6582 | 0.8919 | 2.6830 | 1.8824 |
| | SVM1 | 0.6854 | 0.9024 | 3.2784 | 1.8555 | 0.7028 | 0.9099 | 3.2500 | 1.9768 | 0.6744 | 0.8984 | 2.6043 | 1.6394 |
| CA-Oas | ANN1 | 0.9160 | 0.9778 | 1.2361 | 0.6850 | 0.9292 | 0.9775 | 1.2908 | 0.7781 | 0.9153 | 0.9771 | 1.3444 | 0.7653 |
| | ELM1 | 0.8663 | 0.9630 | 1.5586 | 1.0494 | 0.8804 | 0.9663 | 1.5508 | 1.0379 | 0.8899 | 0.9707 | 1.4929 | 0.9629 |
| | ANFIS10 | 0.9124 | 0.9766 | 1.2618 | 0.6763 | 0.9329 | 0.9793 | 1.2326 | 0.7107 | 0.9189 | 0.9783 | 1.3044 | 0.6921 |
| | SVM1 | 0.9205 | 0.9792 | 1.2044 | 0.5942 | 0.9217 | 0.9750 | 1.3680 | 0.7567 | 0.9156 | 0.9772 | 1.3528 | 0.7324 |
| CA-Obs | ANN1 | 0.9491 | 0.9868 | 0.5500 | 0.3633 | 0.9565 | 0.9779 | 0.7722 | 0.5063 | 0.9622 | 0.9836 | 0.6548 | 0.4220 |
| | ELM2 | 0.9281 | 0.9810 | 0.6534 | 0.4868 | 0.9301 | 0.9710 | 0.8724 | 0.6255 | 0.9329 | 0.9741 | 0.8115 | 0.5682 |
| | ANFIS9 | 0.9484 | 0.9866 | 0.5535 | 0.3584 | 0.9531 | 0.9771 | 0.7875 | 0.5131 | 0.9595 | 0.9816 | 0.6898 | 0.4477 |
| | SVM1 | 0.9553 | 0.9884 | 0.5156 | 0.3091 | 0.9556 | 0.9778 | 0.7750 | 0.4936 | 0.9574 | 0.9818 | 0.6915 | 0.4290 |

| Stand | Model | Training R2 | Training IA | Training RMSE | Training MAE | Validation R2 | Validation IA | Validation RMSE | Validation MAE | Testing R2 | Testing IA | Testing RMSE | Testing MAE |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| AT-Neu | ANN2 | 0.9035 | 0.9742 | 1.2858 | 0.9130 | 0.8853 | 0.9552 | 1.7460 | 1.2169 | 0.8834 | 0.9155 | 2.7290 | 1.9584 |
| | ELM2 | 0.8708 | 0.9644 | 1.4878 | 1.0620 | 0.8807 | 0.9505 | 1.8119 | 1.2977 | 0.8590 | 0.8958 | 2.9793 | 2.1264 |
| | ANFIS9 | 0.8942 | 0.9714 | 1.3464 | 0.9579 | 0.8629 | 0.9543 | 1.8124 | 1.2480 | 0.8797 | 0.9122 | 2.7619 | 1.9803 |
| | SVM1 | 0.8988 | 0.9722 | 1.3205 | 0.8715 | 0.8702 | 0.9515 | 1.8184 | 1.2737 | 0.8708 | 0.9043 | 2.8800 | 2.0479 |
| SE-Deg | ANN8 | 0.7031 | 0.9093 | 0.3765 | 0.2421 | 0.3091 | 0.6450 | 0.5947 | 0.3966 | 0.7212 | 0.9016 | 0.4011 | 0.2724 |
| | ELM1 | 0.7028 | 0.9075 | 0.3763 | 0.2422 | 0.3078 | 0.6424 | 0.5948 | 0.3984 | 0.7096 | 0.8968 | 0.4107 | 0.2819 |
| | ANFIS9 | 0.7631 | 0.9294 | 0.3359 | 0.2123 | 0.3141 | 0.6478 | 0.5912 | 0.3806 | 0.6516 | 0.8709 | 0.4612 | 0.3253 |
| | SVM2 | 0.6525 | 0.8906 | 0.4142 | 0.2561 | 0.3679 | 0.6915 | 0.5484 | 0.3562 | 0.6098 | 0.8666 | 0.4776 | 0.2830 |
| US-Bo1 | ANN4 | 0.6497 | 0.8828 | 1.5390 | 1.0309 | 0.8241 | 0.9519 | 1.0657 | 0.8351 | 0.7927 | 0.9419 | 1.1644 | 0.9524 |
| | ELM1 | 0.6546 | 0.8852 | 1.5278 | 1.0300 | 0.8524 | 0.9545 | 1.1009 | 0.8443 | 0.7956 | 0.9437 | 1.1653 | 0.9155 |
| | ANFIS10 | 0.7939 | 0.9397 | 1.1800 | 0.7493 | 0.8338 | 0.9443 | 1.2617 | 0.9314 | 0.7415 | 0.9239 | 1.3215 | 0.9503 |
| | SVM1 | 0.8126 | 0.9454 | 1.1276 | 0.6244 | 0.7209 | 0.9169 | 1.3433 | 0.9443 | 0.7413 | 0.9175 | 1.3272 | 0.9492 |
| CA-Oas | ANN5 | 0.9114 | 0.9765 | 0.6203 | 0.3824 | 0.9397 | 0.9845 | 0.5256 | 0.3594 | 0.9337 | 0.9827 | 0.5580 | 0.3580 |
| | ELM1 | 0.9118 | 0.9765 | 0.6187 | 0.3740 | 0.9431 | 0.9852 | 0.5076 | 0.3340 | 0.9303 | 0.9815 | 0.5722 | 0.3595 |
| | ANFIS9 | 0.9238 | 0.9799 | 0.5751 | 0.3534 | 0.9287 | 0.9815 | 0.5714 | 0.3420 | 0.9299 | 0.9813 | 0.5741 | 0.3512 |
| | SVM1 | 0.9264 | 0.9808 | 0.5657 | 0.3039 | 0.9458 | 0.9858 | 0.5042 | 0.3319 | 0.9246 | 0.9800 | 0.6019 | 0.3701 |
| CA-Obs | ANN4 | 0.9330 | 0.9824 | 0.4371 | 0.2818 | 0.9392 | 0.9791 | 0.4889 | 0.3273 | 0.9554 | 0.9845 | 0.4280 | 0.2944 |
| | ELM1 | 0.9297 | 0.9815 | 0.4477 | 0.2874 | 0.9411 | 0.9798 | 0.4775 | 0.3061 | 0.9422 | 0.9800 | 0.4821 | 0.3289 |
| | ANFIS10 | 0.9391 | 0.9841 | 0.4167 | 0.2619 | 0.9418 | 0.9785 | 0.4902 | 0.3084 | 0.9498 | 0.9825 | 0.4522 | 0.3199 |
| | SVM1 | 0.9414 | 0.9847 | 0.4089 | 0.2334 | 0.9425 | 0.9791 | 0.4850 | 0.3102 | 0.9471 | 0.9809 | 0.4692 | 0.3385 |

| Stand | Model | Training R2 | Training IA | Training RMSE | Training MAE | Validation R2 | Validation IA | Validation RMSE | Validation MAE | Testing R2 | Testing IA | Testing RMSE | Testing MAE |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| AT-Neu | ANN1 | 0.5032 | 0.8130 | 2.0021 | 1.2738 | 0.4827 | 0.8100 | 2.0669 | 1.4847 | 0.4679 | 0.8057 | 1.9879 | 1.2727 |
| | ELM2 | 0.3843 | 0.7389 | 2.2282 | 1.4976 | 0.4798 | 0.7825 | 2.0855 | 1.6120 | 0.3862 | 0.7613 | 2.1270 | 1.4395 |
| | ANFIS10 | 0.4851 | 0.8038 | 2.0377 | 1.2949 | 0.6456 | 0.8543 | 1.7610 | 1.3050 | 0.4324 | 0.7789 | 2.0406 | 1.2892 |
| | SVM1 | 0.4781 | 0.8074 | 2.0784 | 1.1627 | 0.5855 | 0.8401 | 1.8886 | 1.3730 | 0.4099 | 0.7731 | 2.2073 | 1.3812 |
| SE-Deg | ANN3 | 0.5493 | 0.8344 | 0.4267 | 0.2828 | 0.7219 | 0.8018 | 0.5597 | 0.3734 | 0.7018 | 0.8917 | 0.2638 | 0.1834 |
| | ELM3 | 0.5437 | 0.8355 | 0.4278 | 0.2787 | 0.7171 | 0.8385 | 0.5307 | 0.3482 | 0.6747 | 0.8667 | 0.3080 | 0.2028 |
| | ANFIS3 | 0.7387 | 0.9203 | 0.3237 | 0.2123 | 0.7137 | 0.9131 | 0.4634 | 0.3016 | 0.7238 | 0.8147 | 0.4362 | 0.2693 |
| | SVM1 | 0.7619 | 0.9296 | 0.3091 | 0.1784 | 0.7067 | 0.9088 | 0.4686 | 0.2963 | 0.6800 | 0.7910 | 0.4685 | 0.2736 |
| US-Bo1 | ANN1 | 0.5440 | 0.8411 | 2.6355 | 1.8241 | 0.6539 | 0.8699 | 2.3804 | 1.7979 | 0.5999 | 0.8579 | 1.6549 | 1.3337 |
| | ELM1 | 0.3628 | 0.7179 | 3.1072 | 2.2364 | 0.6812 | 0.8863 | 2.2871 | 1.7946 | 0.4836 | 0.7931 | 1.9581 | 1.6110 |
| | ANFIS9 | 0.5789 | 0.8530 | 2.5261 | 1.7290 | 0.2906 | 0.6814 | 3.4383 | 2.2186 | 0.5387 | 0.8179 | 1.6927 | 1.3535 |
| | SVM1 | 0.5572 | 0.8497 | 2.6103 | 1.5559 | 0.6013 | 0.8676 | 2.5591 | 1.7161 | 0.5679 | 0.8523 | 1.6019 | 1.1772 |
| CA-Oas | ANN1 | 0.7755 | 0.9313 | 1.2105 | 0.7227 | 0.7561 | 0.9267 | 1.2592 | 0.7873 | 0.8163 | 0.9471 | 1.1252 | 0.6872 |
| | ELM3 | 0.6380 | 0.8797 | 1.5348 | 1.0086 | 0.6994 | 0.9011 | 1.3719 | 0.9026 | 0.7293 | 0.9087 | 1.3745 | 0.8726 |
| | ANFIS10 | 0.7935 | 0.9397 | 1.1591 | 0.6545 | 0.8044 | 0.9394 | 1.2147 | 0.7318 | 0.8345 | 0.9540 | 1.0826 | 0.6343 |
| | SVM1 | 0.7867 | 0.9369 | 1.1783 | 0.6314 | 0.7765 | 0.9343 | 1.2225 | 0.7280 | 0.8125 | 0.9474 | 1.1390 | 0.6518 |
| CA-Obs | ANN2 | 0.8083 | 0.9441 | 0.5080 | 0.3407 | 0.8496 | 0.9389 | 0.6095 | 0.4113 | 0.8292 | 0.9306 | 0.6165 | 0.4407 |
| | ELM1 | 0.6754 | 0.8956 | 0.6610 | 0.4944 | 0.7611 | 0.8831 | 0.7850 | 0.5716 | 0.7216 | 0.8827 | 0.7651 | 0.5640 |
| | ANFIS2 | 0.8108 | 0.9454 | 0.5045 | 0.3345 | 0.8584 | 0.9369 | 0.6169 | 0.4184 | 0.8224 | 0.9237 | 0.6560 | 0.5027 |
| | SVM1 | 0.8130 | 0.9457 | 0.5019 | 0.3139 | 0.8406 | 0.9320 | 0.6358 | 0.4313 | 0.8175 | 0.9255 | 0.6426 | 0.4746 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Dou, X.; Yang, Y. Comprehensive Evaluation of Machine Learning Techniques for Estimating the Responses of Carbon Fluxes to Climatic Forces in Different Terrestrial Ecosystems. Atmosphere 2018, 9, 83. https://doi.org/10.3390/atmos9030083

Dou, Xianming, and Yongguo Yang. 2018. "Comprehensive Evaluation of Machine Learning Techniques for Estimating the Responses of Carbon Fluxes to Climatic Forces in Different Terrestrial Ecosystems" Atmosphere 9, no. 3: 83. https://doi.org/10.3390/atmos9030083