WPCB-Tree: A Novel Flash-Aware B-Tree Index Using a Write Pattern Converter

Abstract

1. Introduction

2. Background and Related Works

2.1. Flash Memory

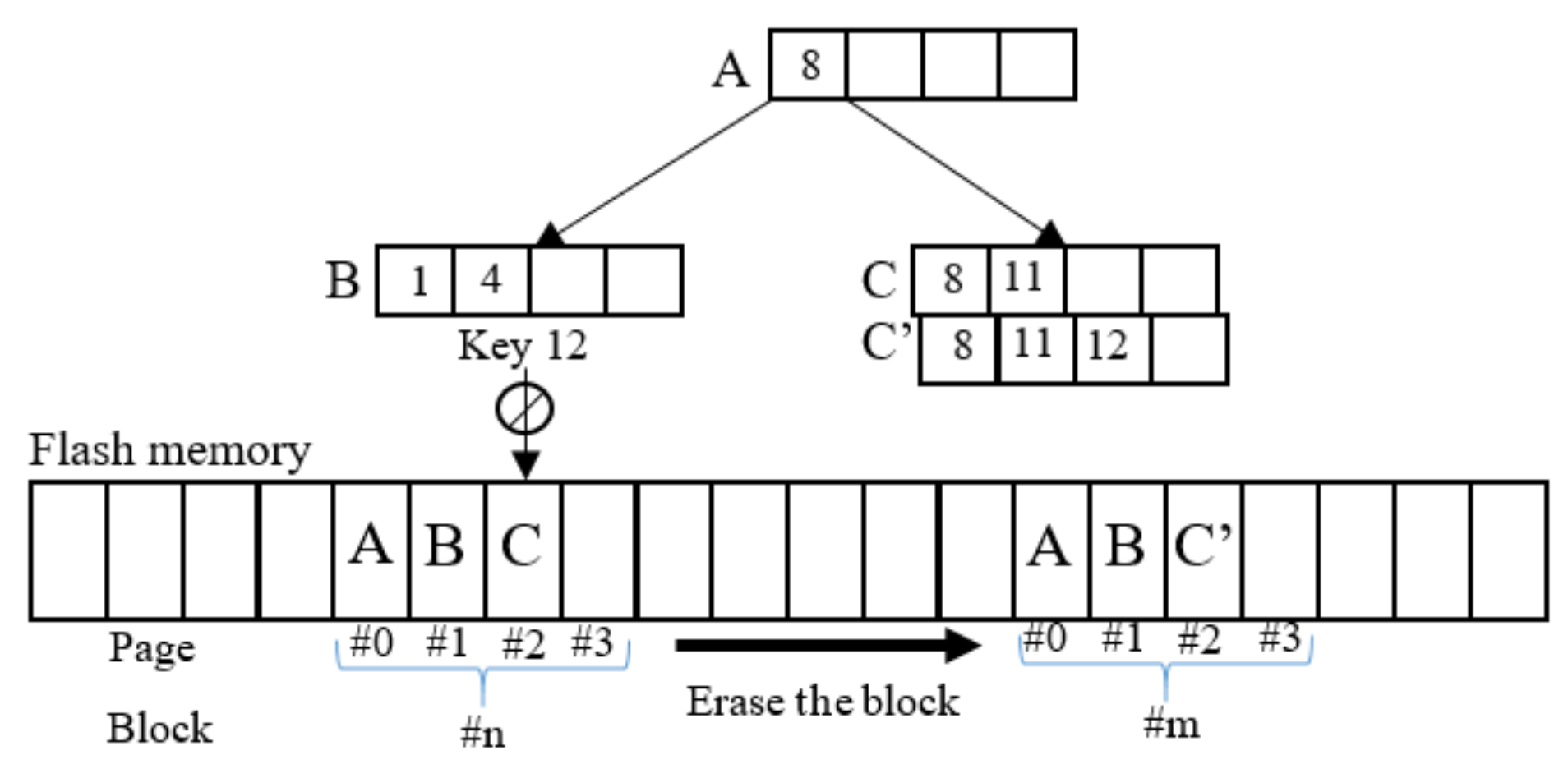

2.2. B-Tree on Flash Memory

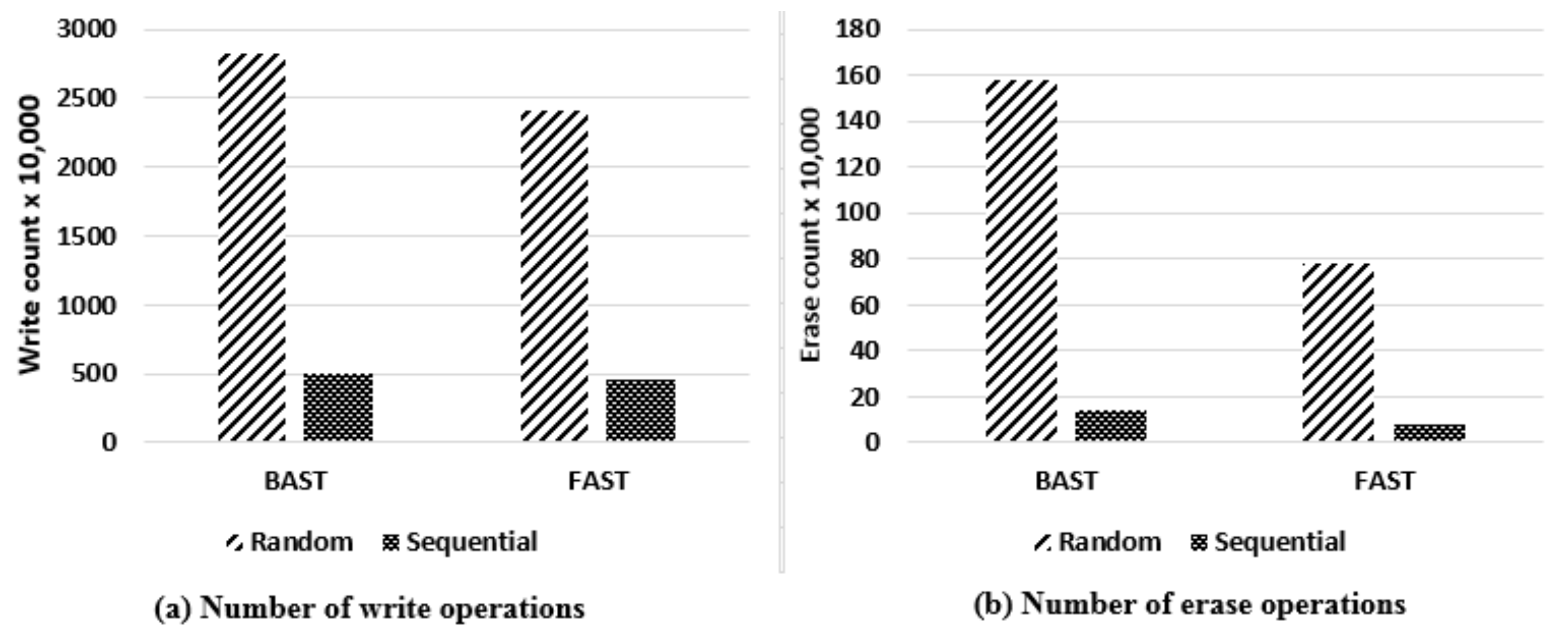

2.3. The Limitation of Random Write Performance

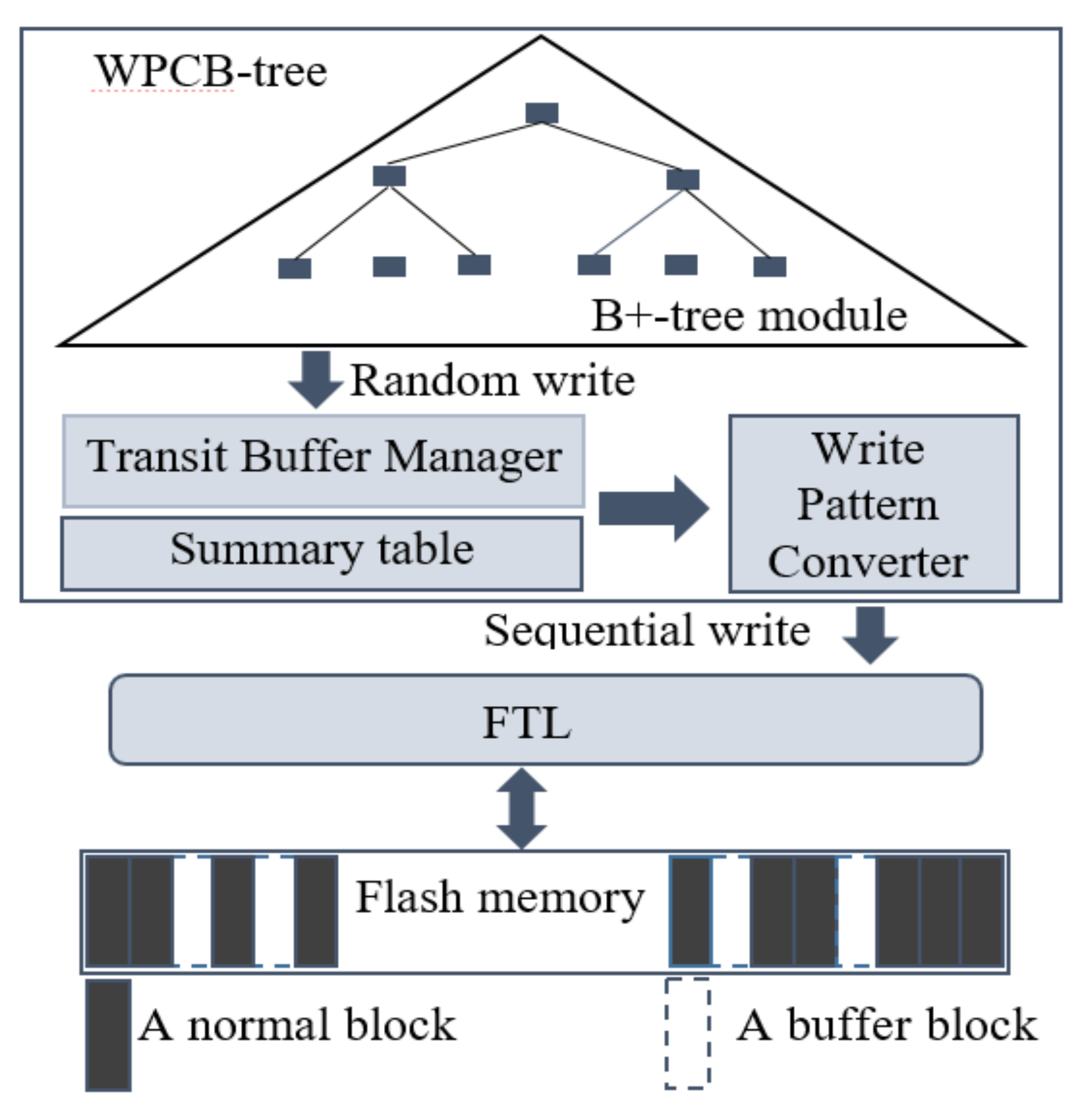

3. WPCB-Tree: A B-Tree Using a Write Pattern Converter

3.1. Design of the WPCB-Tree

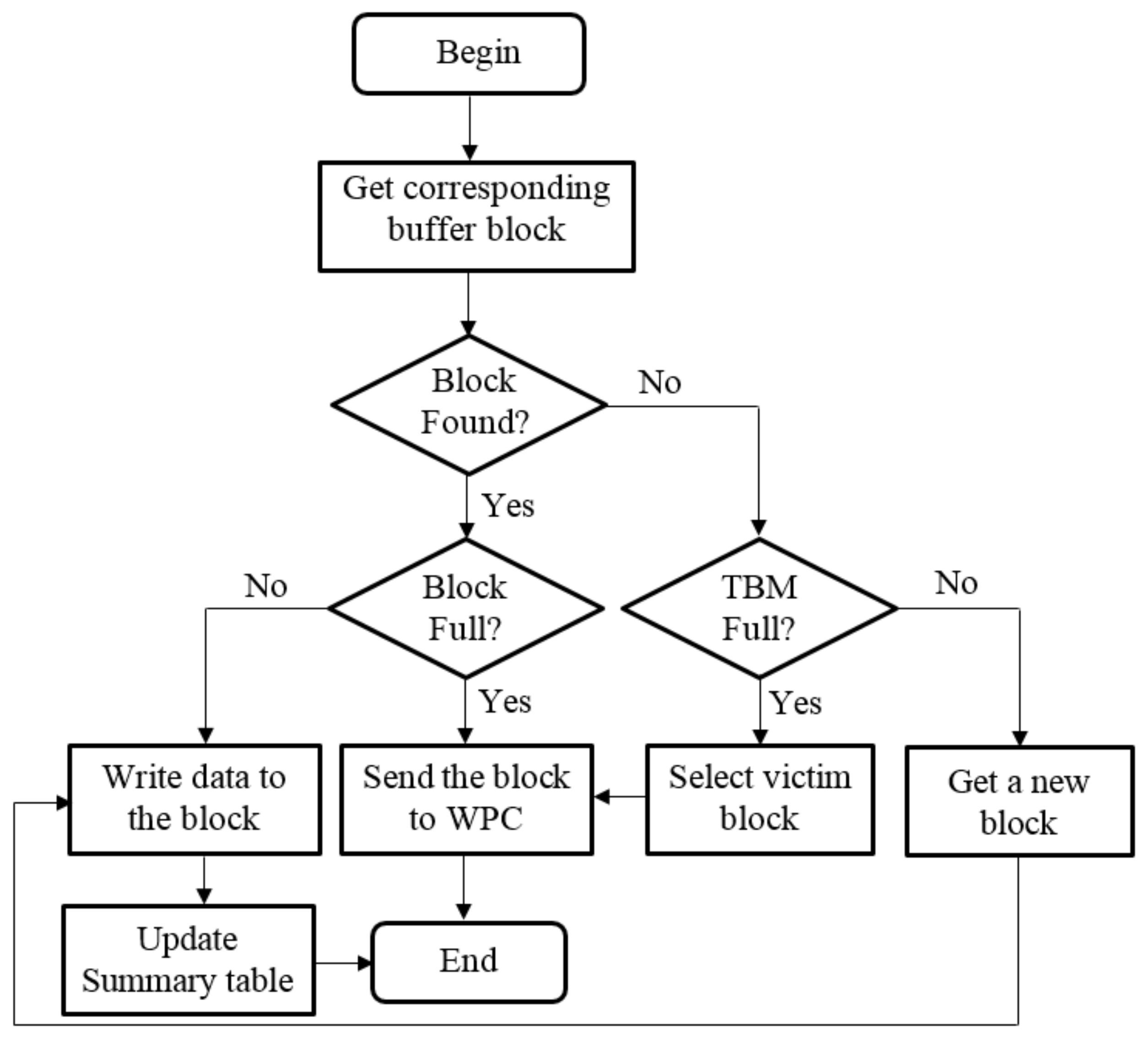

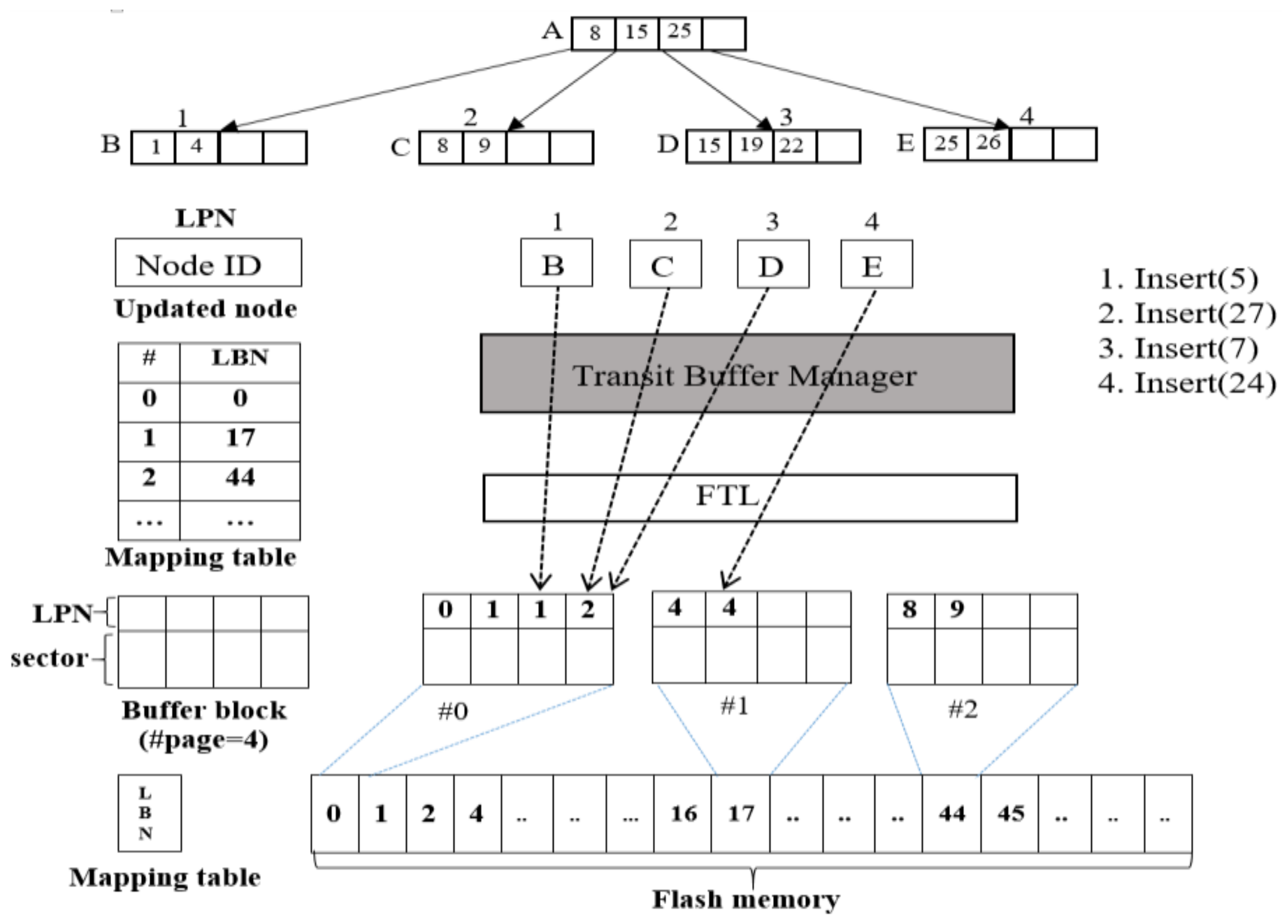

3.2. Transit Buffer Manager

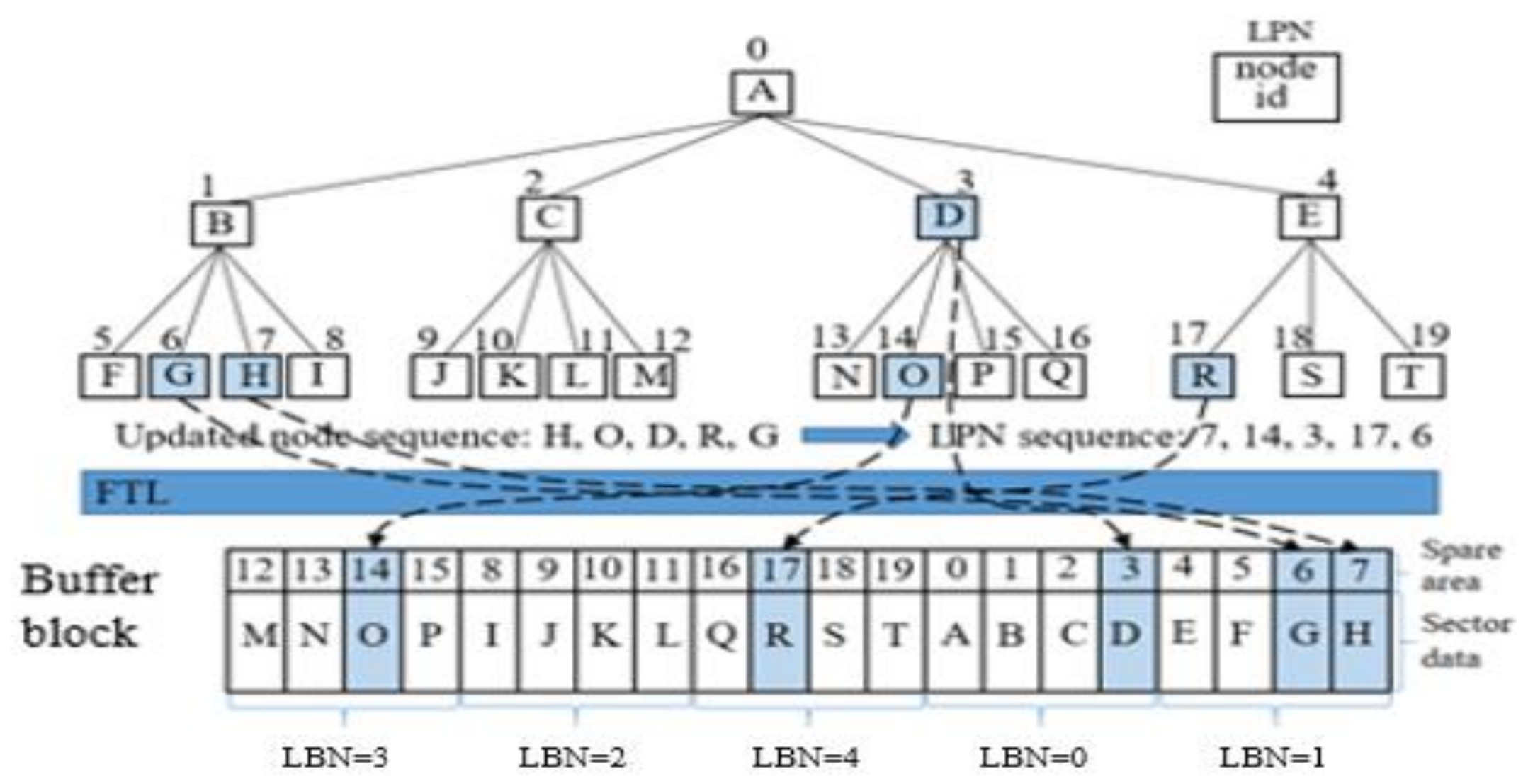

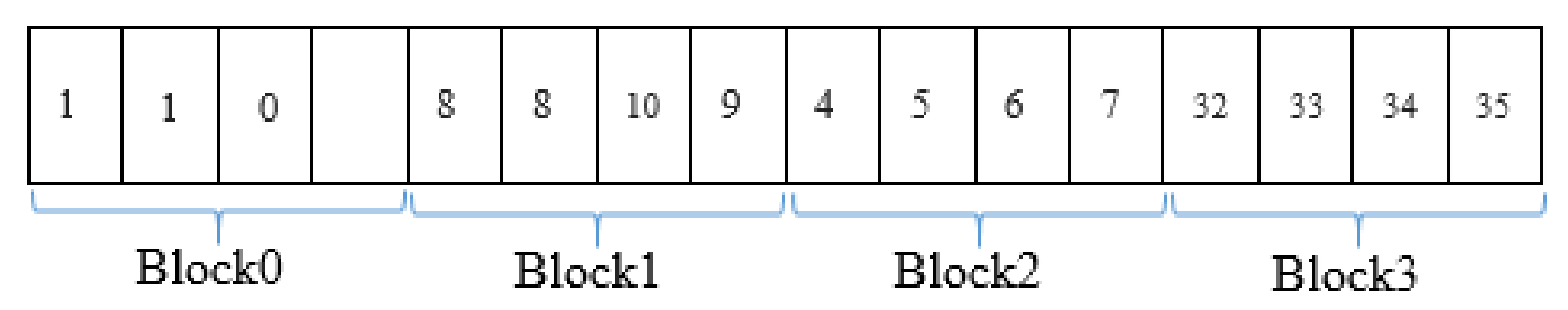

3.3. Write Pattern Converter

4. WPCB-Tree Operations

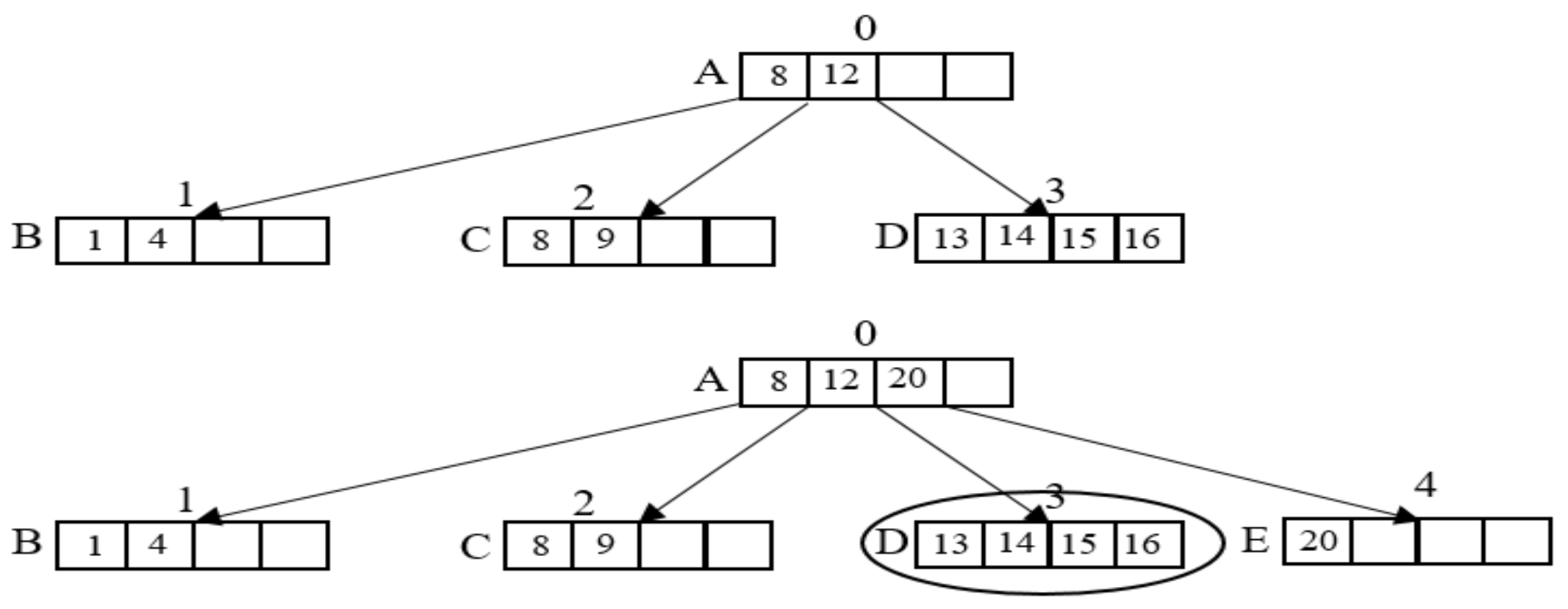

4.1. Insert Operation

| Algorithm 1 Insertion algorithm |
|---|
| Input: key k |
| 1 insert k into node on B-tree |
| 2 b <- getCorrBufferBlock() |
| 3 if exist(b) then |
| 4 if isfull(b) then |
| 5 s <- convertWritePattern(b) |
| 6 write(s) |
| 7 erase(b) |
| 8 endif |
| 9 else |
| 10 if isfull(TBM) then |
| 11 b <- victim block |
| 12 s <- convertWritePattern(b) |
| 13 write(s) |
| 14 erase(b) |
| 15 else |
| 16 b <- free block in TBM |
| 17 endif |
| 18 endif |
| 19 append(node, b, TBM) |
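
The control flow of Algorithm 1 can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the class `TransitBufferManager`, the function `buffer_node_write`, the mapping from a node to its buffer block (`node_id // nodes_per_data_block`), the FIFO victim choice, and the behavior of `convert_write_pattern` (keep the newest copy of each node, ordered by node id) are all hypothetical. Line 1 of the algorithm (the B-tree insertion itself) is assumed to have already produced the modified node.

```python
from collections import OrderedDict


def convert_write_pattern(pages):
    """Assumed converter: keep the newest copy of each node, ordered by node id,
    so the result can be written out as one sequential run."""
    latest = {}
    for node_id, payload in pages:
        latest[node_id] = payload  # later entries overwrite older versions
    return sorted(latest.items())


class TransitBufferManager:
    """Hypothetical TBM: a bounded set of append-only buffer blocks."""

    def __init__(self, capacity=2, pages_per_block=2):
        self.capacity = capacity                # assumed number of buffer blocks
        self.pages_per_block = pages_per_block  # assumed pages per buffer block
        self.blocks = OrderedDict()             # block id -> appended pages, oldest block first
        self.flushed = []                       # log of sequential writes (stands in for write(s))

    def is_full(self):
        return len(self.blocks) >= self.capacity


def buffer_node_write(tbm, node_id, payload, nodes_per_data_block=4):
    block_id = node_id // nodes_per_data_block  # assumed node-to-block mapping
    b = tbm.blocks.get(block_id)                # line 2: corresponding buffer block
    if b is not None:                           # line 3
        if len(b) >= tbm.pages_per_block:       # lines 4-7: block full, convert, write, erase
            tbm.flushed.append((block_id, convert_write_pattern(b)))
            b.clear()
    else:
        if tbm.is_full():                       # lines 10-14: evict a victim block
            victim_id, victim = tbm.blocks.popitem(last=False)  # assumed FIFO policy
            tbm.flushed.append((victim_id, convert_write_pattern(victim)))
        b = tbm.blocks[block_id] = []           # erased victim or free block (line 16)
    b.append((node_id, payload))                # line 19: append the node to the block


tbm = TransitBufferManager(capacity=2, pages_per_block=2)
for node_id, payload in [(0, "a"), (1, "b"), (0, "c"), (4, "d"), (8, "e")]:
    buffer_node_write(tbm, node_id, payload)
print(tbm.flushed)
```

In this run the third write finds its buffer block full and triggers a conversion, and the fifth write finds the TBM full and evicts the oldest block. Every node update is appended to a buffer block, and data leaves the buffer only as an ordered batch.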

4.2. Delete Operation

4.3. Search Operation

5. System Analysis

6. Performance Evaluation

6.1. Simulation Methodology

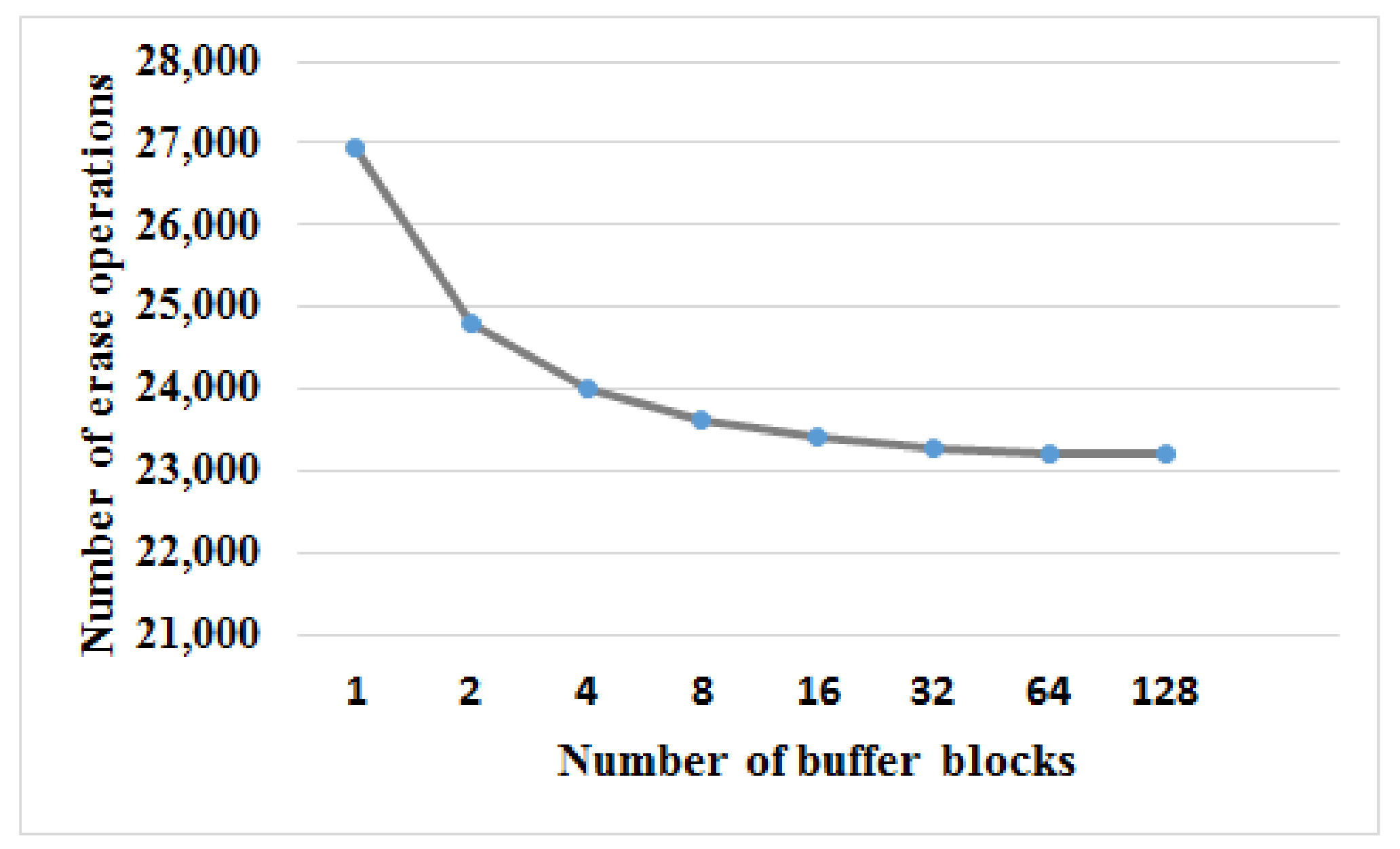

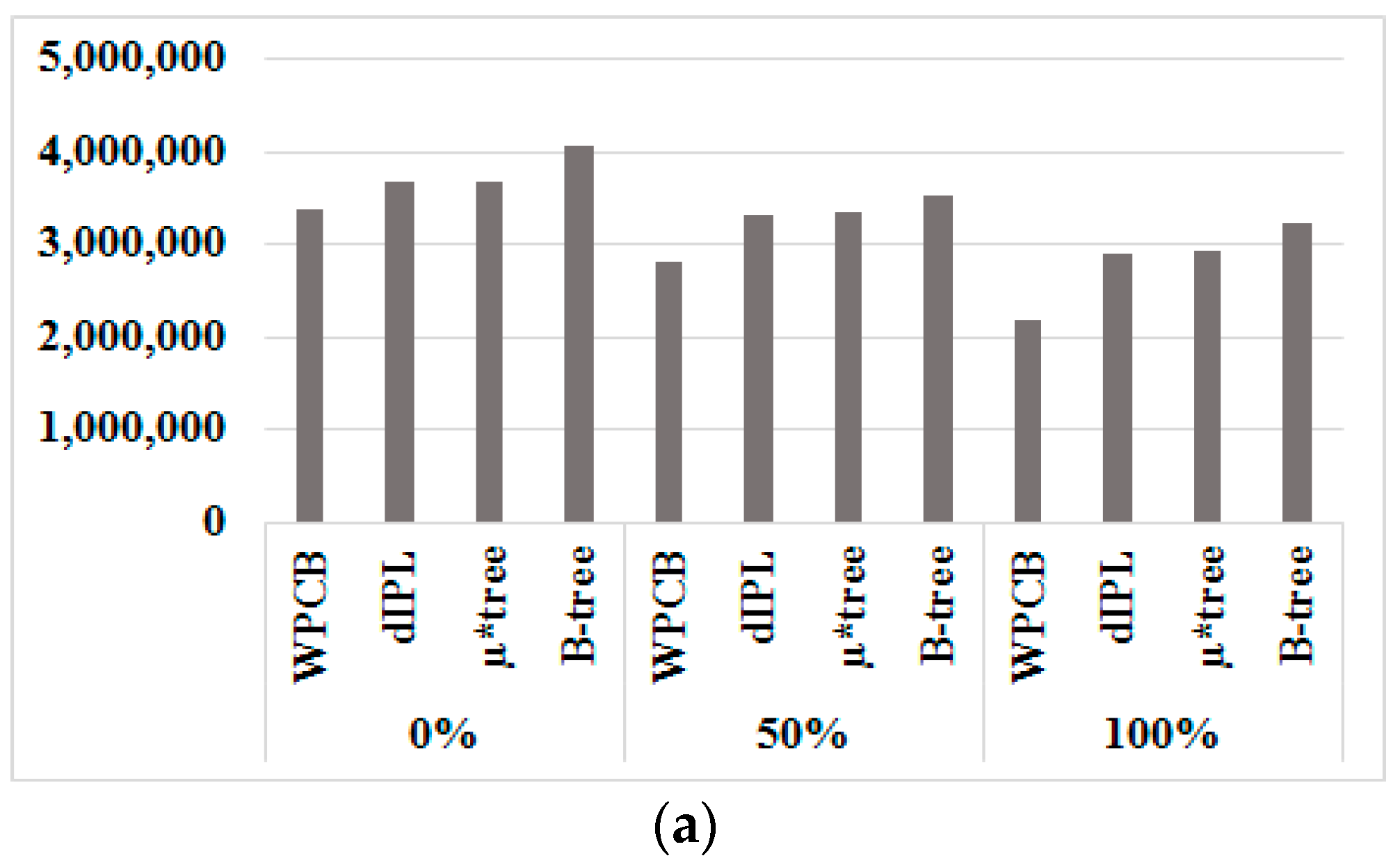

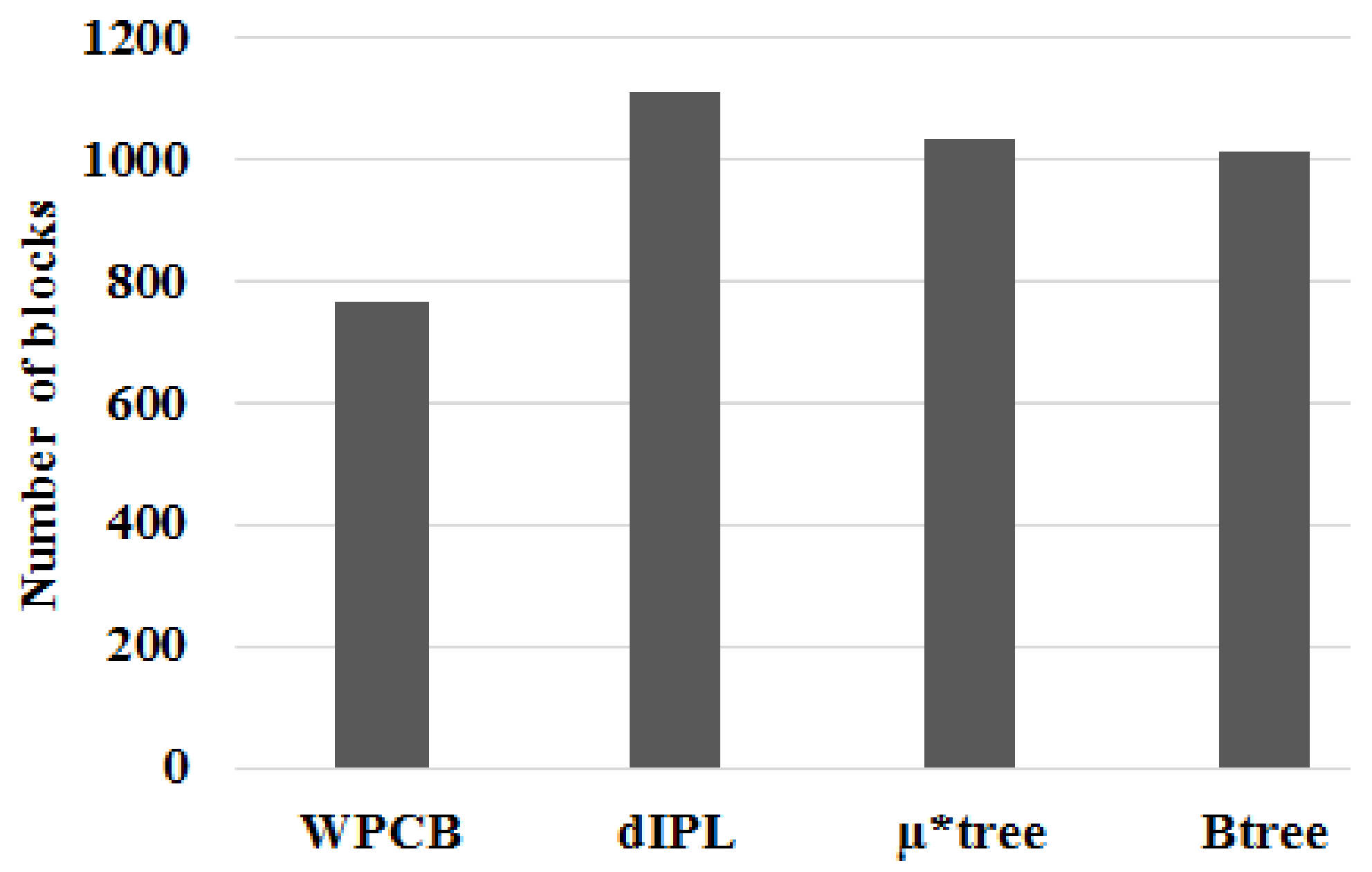

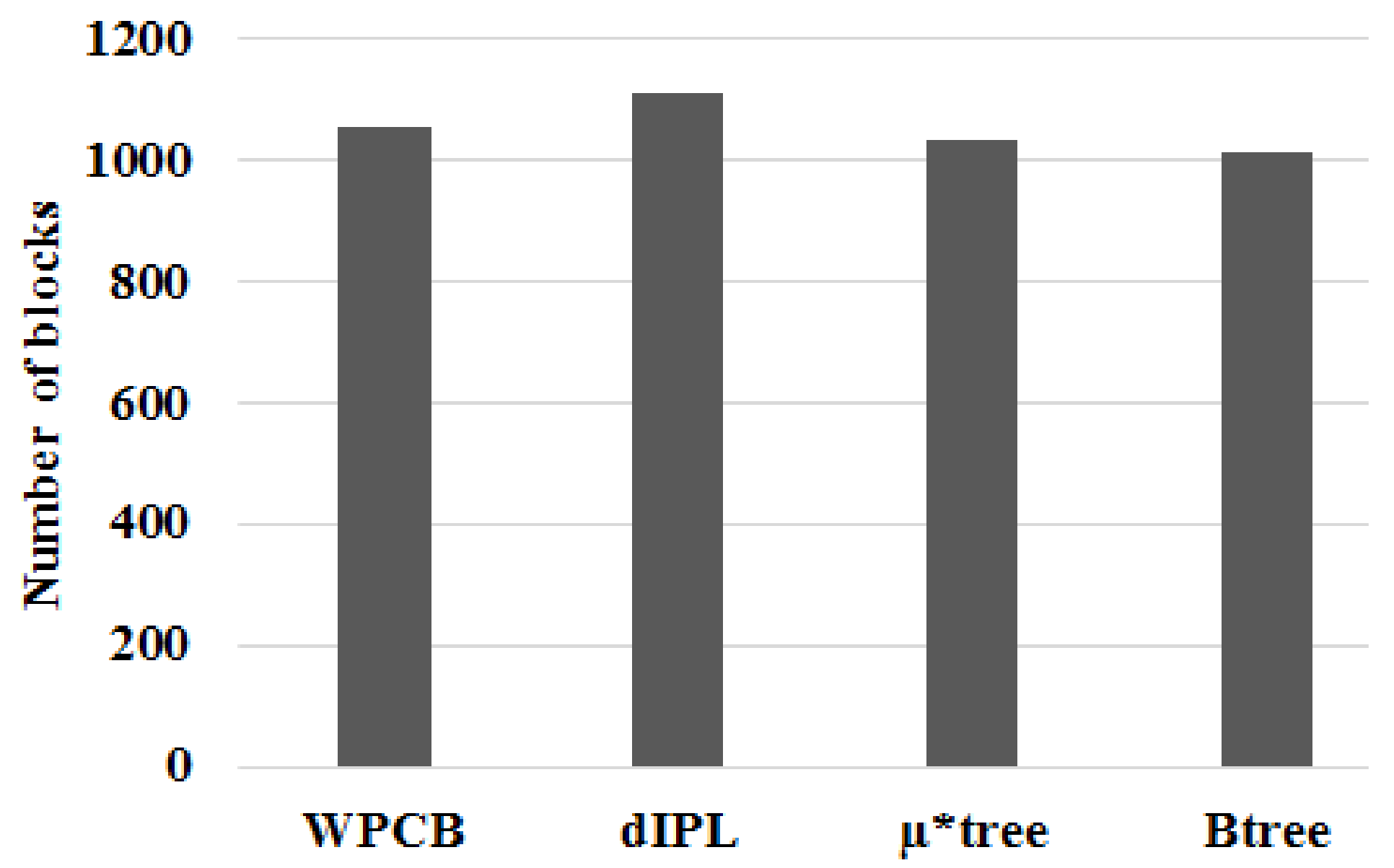

6.2. The Performance of the WPCB-Tree with Respect to Flash Operations

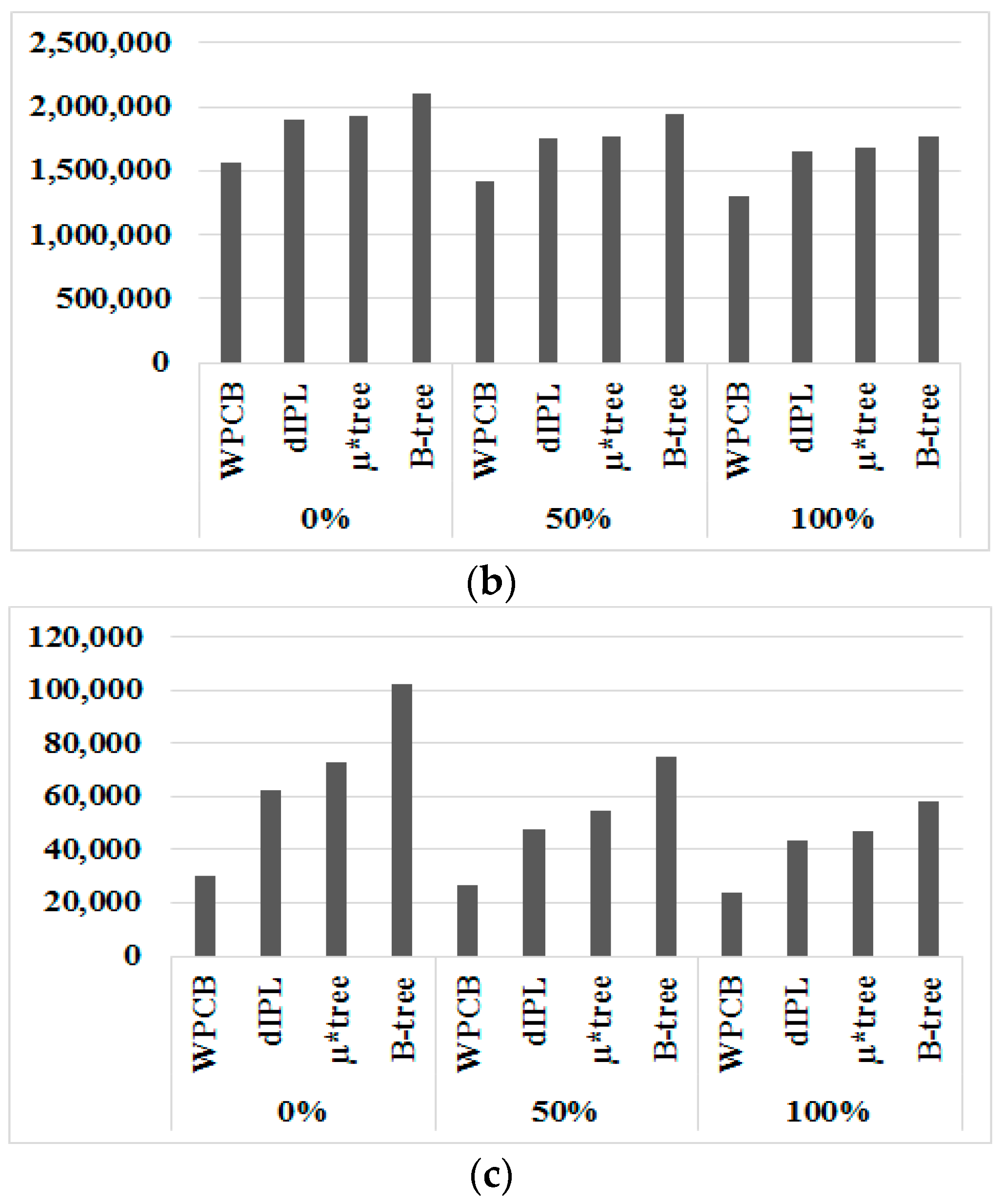

6.3. The Performance of the WPCB-Tree with Respect to Time Consumption

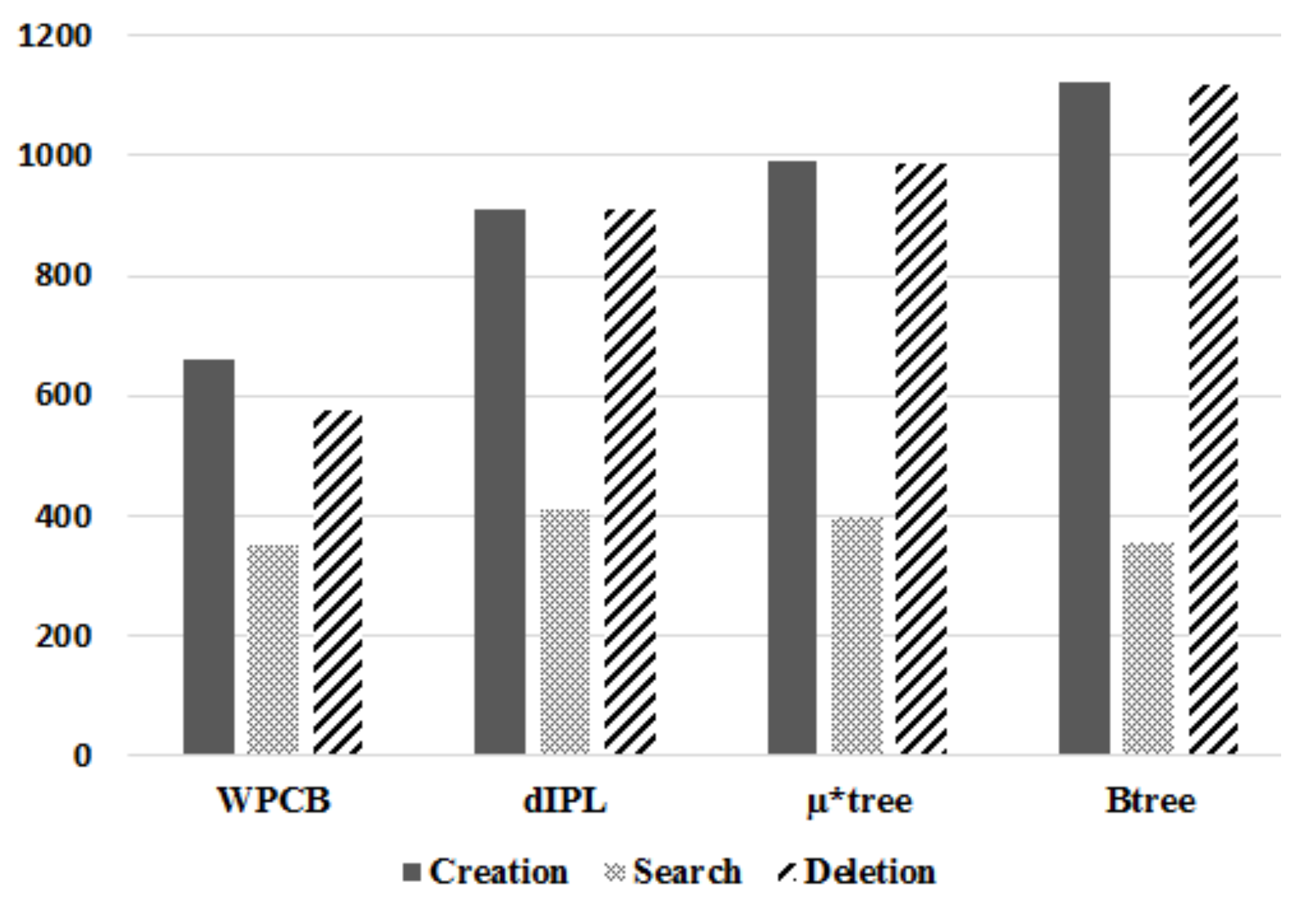

6.4. Page Utilization

7. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Random Write | Random Read | Sequential Write | Sequential Read |
|---|---|---|---|
| 400 µs | 25 µs | 200 µs | 25 ns |
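
To make the gap between the two write figures concrete, the short calculation below compares the time to write a batch of pages randomly and sequentially. It is a rough sketch that assumes each figure in the table above is a per-page access time. The batch size of 64 pages and the function names are illustrative assumptions, and the cost of converting the write pattern is ignored.

```python
# Per-page write latencies in microseconds, taken from the table above.
RANDOM_WRITE_US = 400
SEQUENTIAL_WRITE_US = 200


def write_time_us(pages, per_page_us):
    """Total time to write `pages` pages at a fixed per-page latency."""
    return pages * per_page_us


def compare_writes(pages):
    """Return (random time, sequential time, speed-up factor) for a batch."""
    random_us = write_time_us(pages, RANDOM_WRITE_US)
    sequential_us = write_time_us(pages, SEQUENTIAL_WRITE_US)
    return random_us, sequential_us, random_us / sequential_us


print(compare_writes(64))  # (25600, 12800, 2.0)
```

Under these assumptions, turning random writes into sequential ones halves the raw write time, whatever the batch size.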

| Name | Resource of Buffer | Number of Nodes per Page | Write Operations | Read Operations | Split Operations | Merge Operations | Risk of Data Loss | Page Occupancy |
|---|---|---|---|---|---|---|---|---|
| BFTL | Main memory | Many | Reduced | Increased | Normal | Normal | Yes | Low |
| IBSF | Main memory | One | Reduced | Normal | Normal | Normal | Yes | Normal |
| LA-tree | Flash memory | One | Reduced | Increased | Normal | Normal | No | Normal |
| MB-tree | Main memory | One | Reduced | Normal | Normal | Normal | Yes | Normal |
| µ-tree | No | Many | Normal | Normal | Frequently | Normal | No | High |
| µ*-tree | No | Many | Normal | Normal | Frequently | Normal | No | High |
| IPL | Flash memory | One | Increased | Increased | Normal | Frequently | No | High |
| dIPL | Flash memory | One | Increased | Increased | Normal | Frequently | No | High |
| WPCB | Flash memory | One | Increased | Normal | Reduced | No | No | Reduced |

| Symbols | Definitions |
|---|---|
| $n$ | number of records |
| $h$ | height of B-trees |
| $O_r$ | cost of a flash read operation |
| $O_w$ | cost of a flash random write operation |
| $O_{sw}$ | cost of a flash sequential write operation |
| $m$ | maximum entries one node can have |
| $N_s$ | number of split operations |
| $N_m$ | number of merge operations |
| $N_r$ | number of rotation operations |
| $M$ | number of block merge operations |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ho, V.P.; Park, D.-J. WPCB-Tree: A Novel Flash-Aware B-Tree Index Using a Write Pattern Converter. Symmetry 2018, 10, 18. https://doi.org/10.3390/sym10010018