Multimedia System for Real-Time Photorealistic Nonground Modeling of 3D Dynamic Environment for Remote Control System

Abstract

1. Introduction

2. Related Works

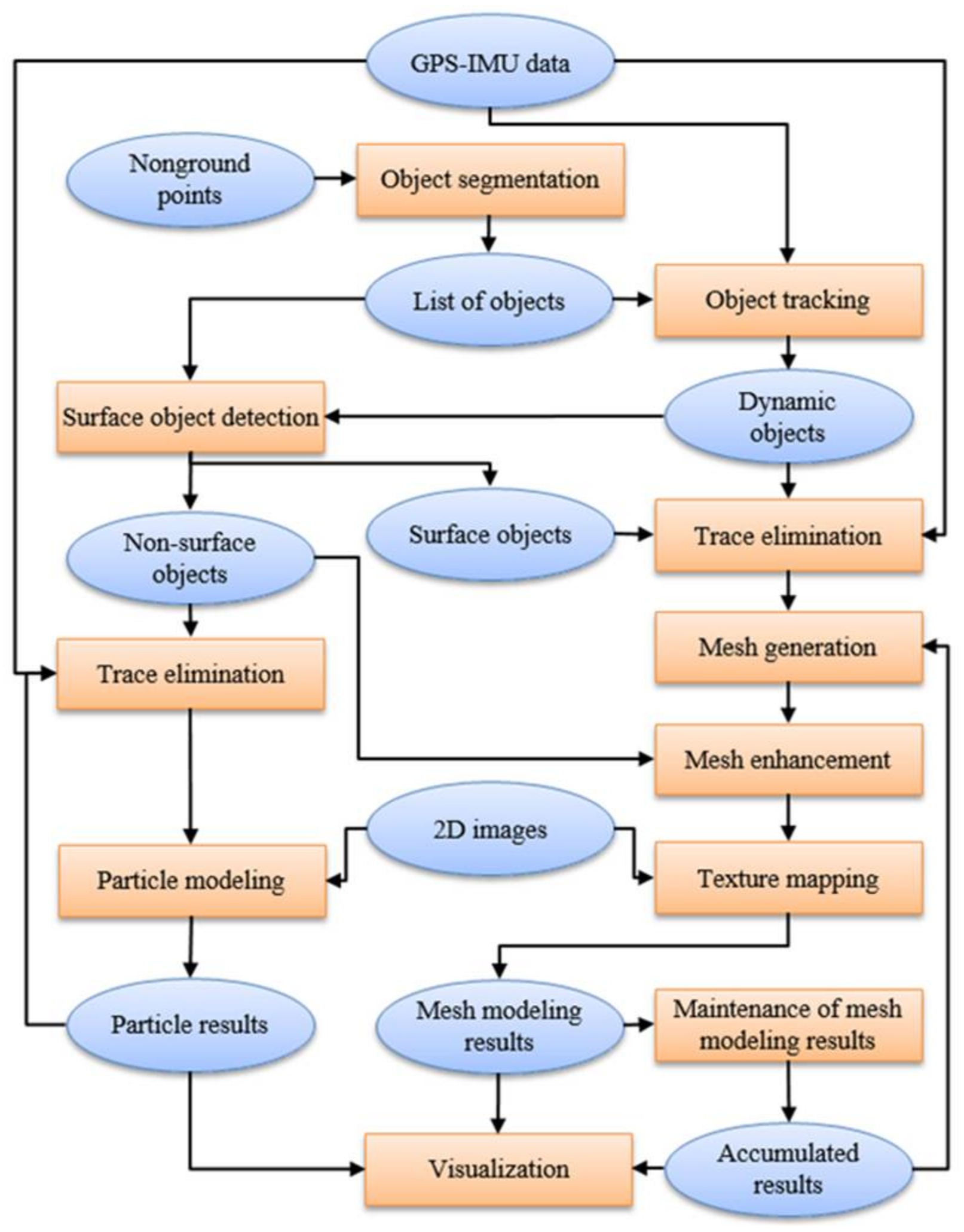

3. Proposed Method

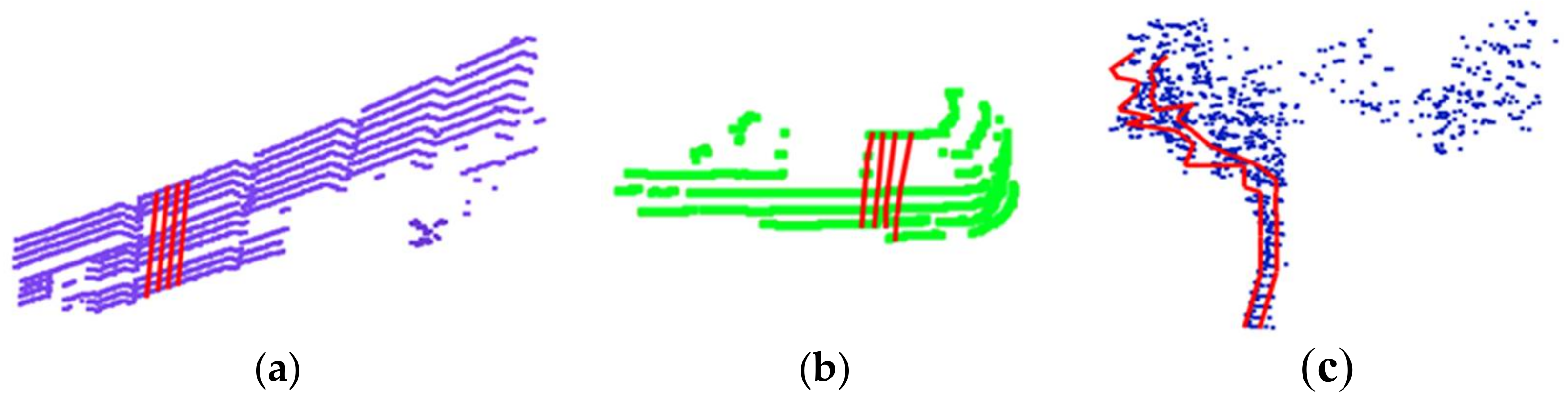

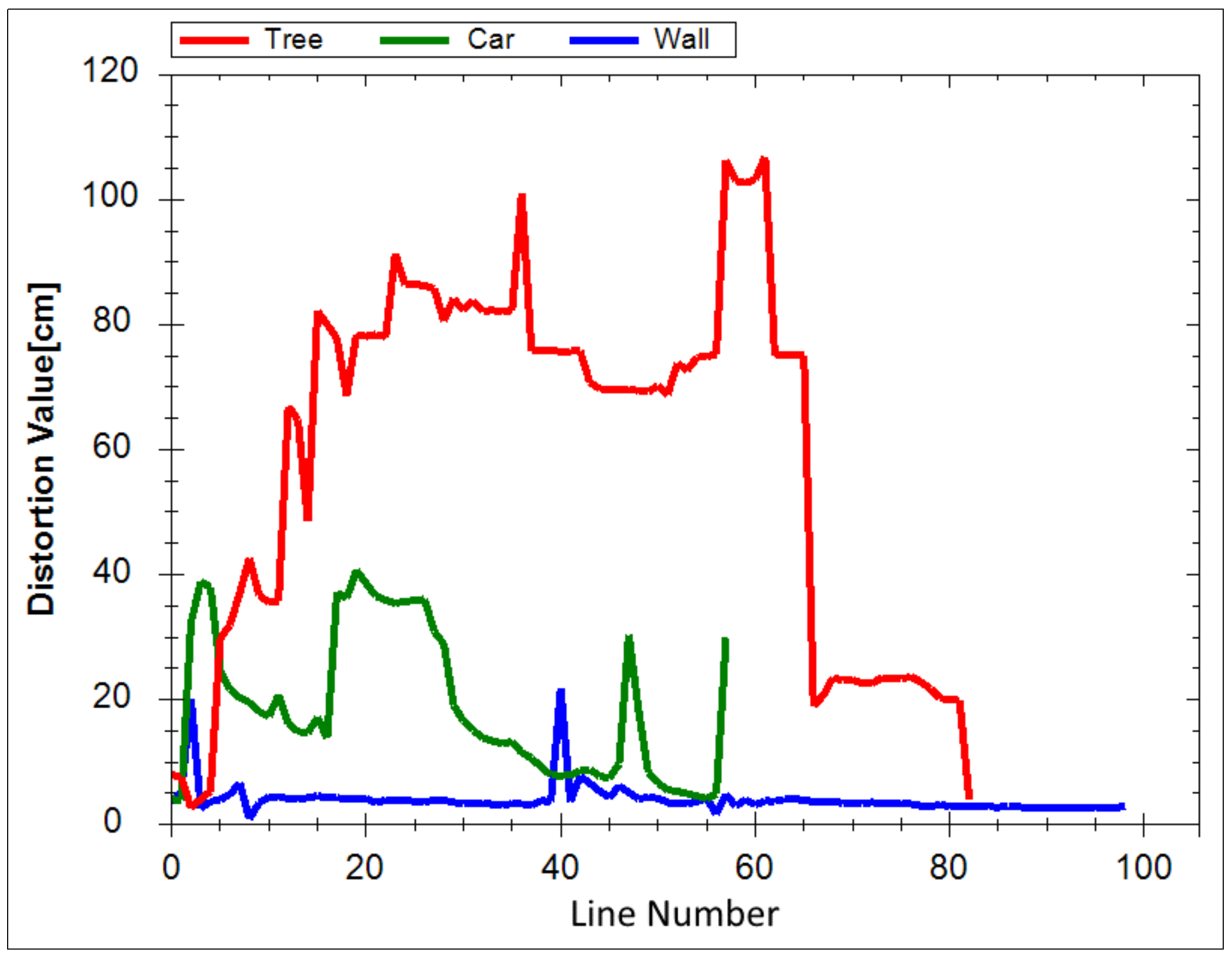

3.1. Surface Object Detection

| Algorithm 1. Surface object detection. |
|---|
| Function CHECK_SURFACE_OBJECT(Object obj) |
| h ← obj.Get_Height() |
| w ← obj.Get_Width() |
| If obj.Get_Object_Type() = Dynamic then |
| If h < dSmall AND w < dSmall then |
| Return 0 //non-surface object |
| Else |
| Return 2 //large dynamic object |
| End |
| Else if h < dSmall AND w < dSmall then |
| Return 0 //non-surface object |
| End |
| ns ← 0 |
| For i = 0..n − 1 do |
| d ← obj.Calculate_Distortion() |
| If d < dmax then |
| ns ← ns + 1 |
| End |
| End |
| r ← ns/n |
| If r > rmin then |
| Return 1 //surface object |
| Else |
| Return 0 //non-surface object |
| End |
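The decision logic of Algorithm 1 can be sketched in Python as follows. This is a minimal illustration rather than the authors' implementation: the `SegmentedObject` container, the `SurfaceClass` enum, and the idea of passing the n distortion samples as a precomputed list are assumptions made for the example, and the threshold values in the usage note are arbitrary placeholders for dSmall, dmax, and rmin.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Sequence


class SurfaceClass(IntEnum):
    """Return codes used by Algorithm 1."""
    NON_SURFACE = 0
    SURFACE = 1
    LARGE_DYNAMIC = 2


@dataclass
class SegmentedObject:
    """Hypothetical container for one segmented nonground object."""
    height: float
    width: float
    is_dynamic: bool
    # Assumption: one distortion value per sample, i.e. the n values that
    # the pseudocode obtains from Calculate_Distortion().
    distortions: Sequence[float]


def check_surface_object(obj: SegmentedObject, d_small: float,
                         d_max: float, r_min: float) -> SurfaceClass:
    """Classify an object following the branches of Algorithm 1."""
    is_small = obj.height < d_small and obj.width < d_small

    if obj.is_dynamic:
        # Small dynamic objects are non-surface; large ones get code 2.
        return SurfaceClass.NON_SURFACE if is_small else SurfaceClass.LARGE_DYNAMIC
    if is_small:
        return SurfaceClass.NON_SURFACE

    n = len(obj.distortions)
    if n == 0:
        # Assumption: with no samples there is no evidence of a surface.
        return SurfaceClass.NON_SURFACE

    # ns counts the samples whose distortion is below d_max.
    ns = sum(1 for d in obj.distortions if d < d_max)
    r = ns / n
    return SurfaceClass.SURFACE if r > r_min else SurfaceClass.NON_SURFACE
```

For example, with the placeholder thresholds `d_small=0.5`, `d_max=0.1`, and `r_min=0.7`, a static object of size 3.0 × 5.0 whose distortion samples are `[0.01, 0.02, 0.5, 0.01]` has three of four samples below `d_max`, so r = 0.75 and the object is classified as a surface object.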

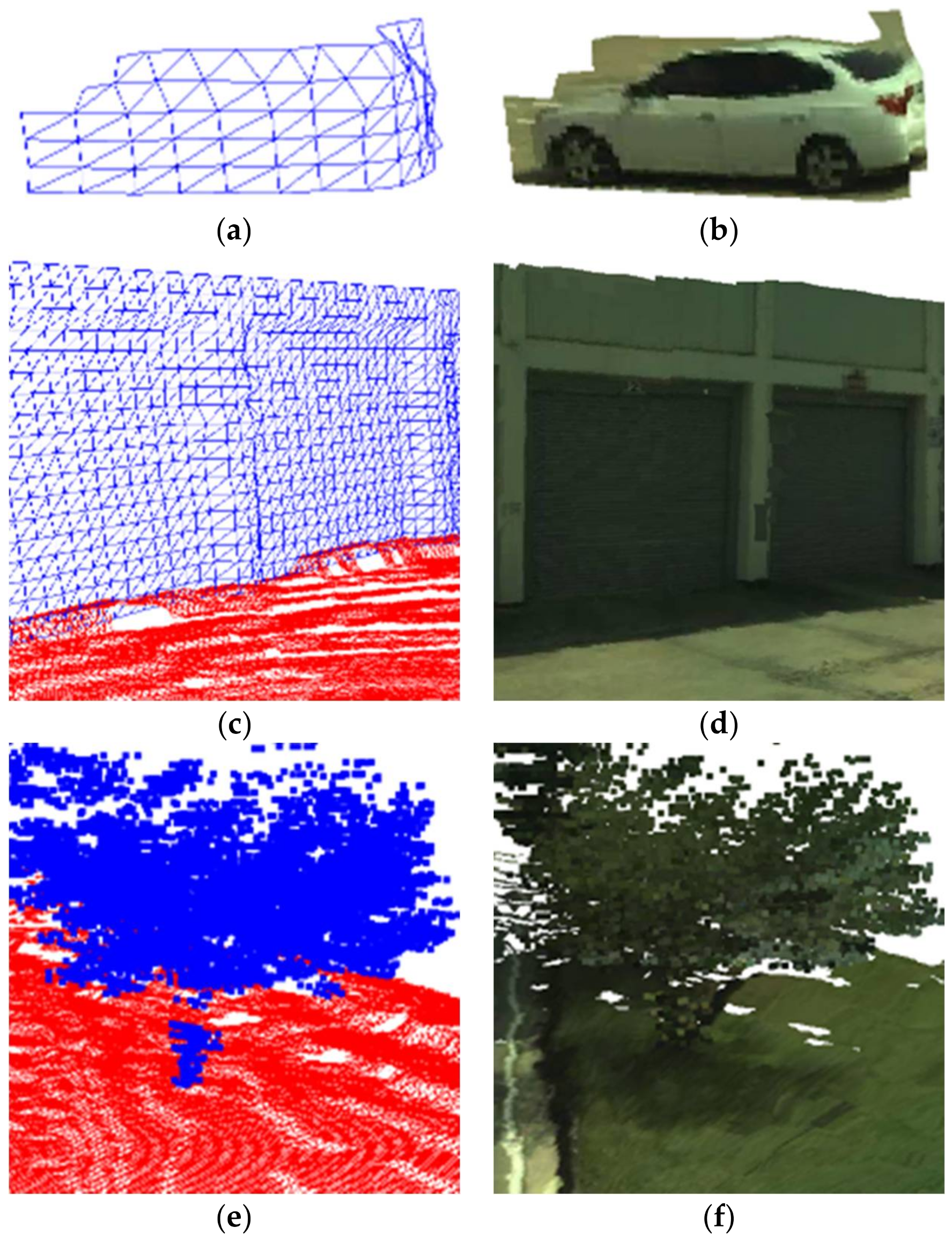

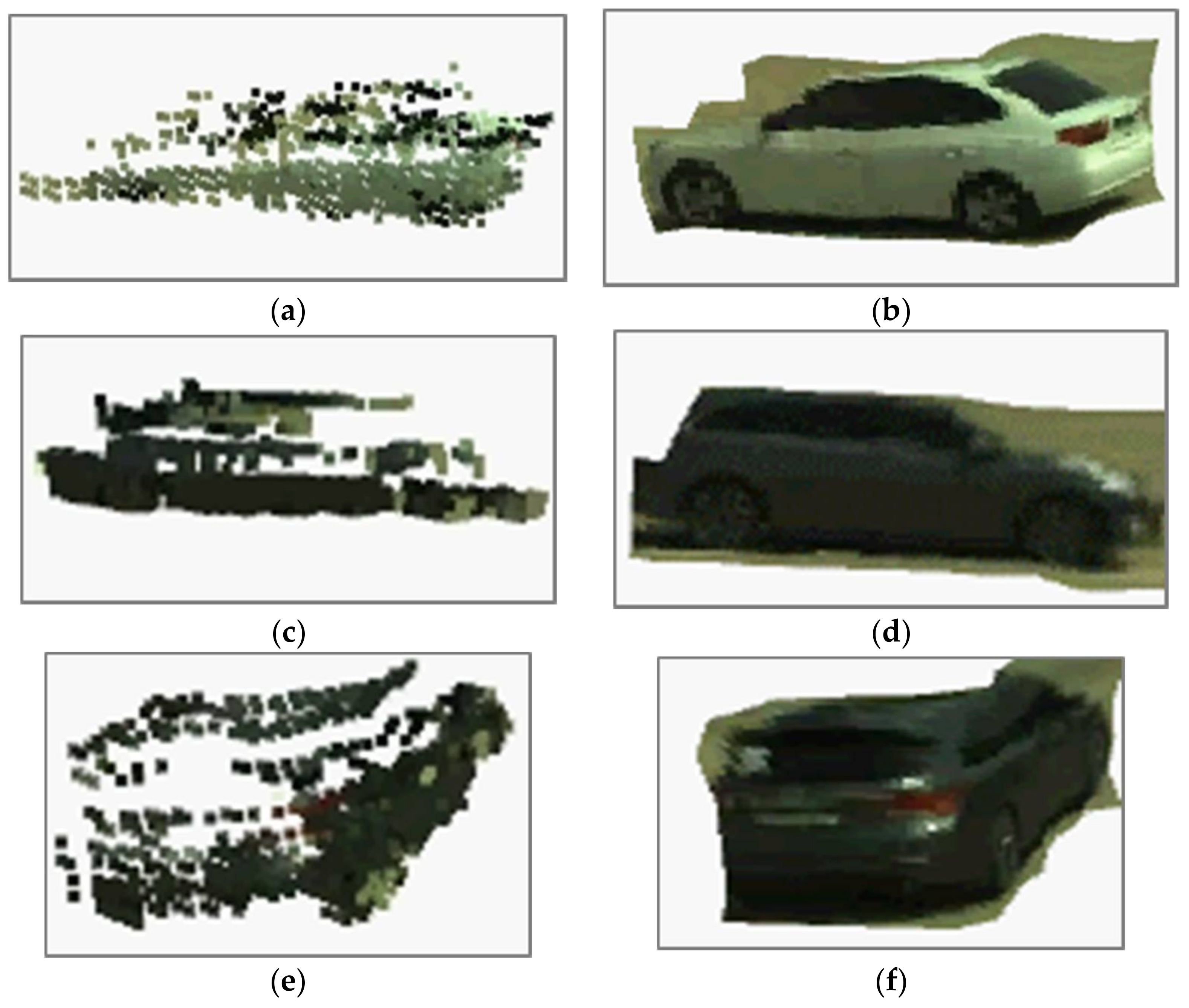

3.2. 3D Mesh Generation for Nonground Surfaces

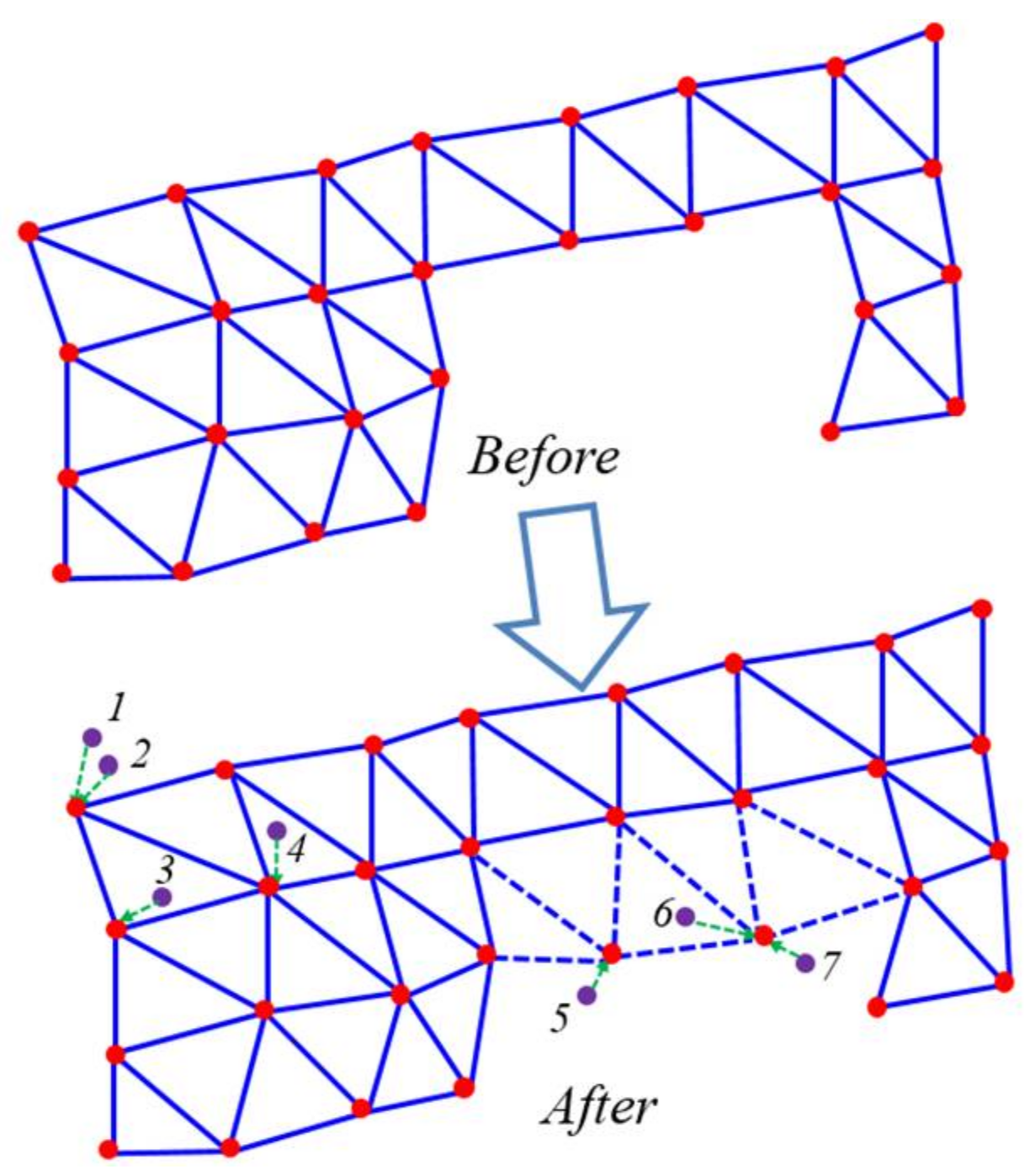

3.3. Mesh Enhancement

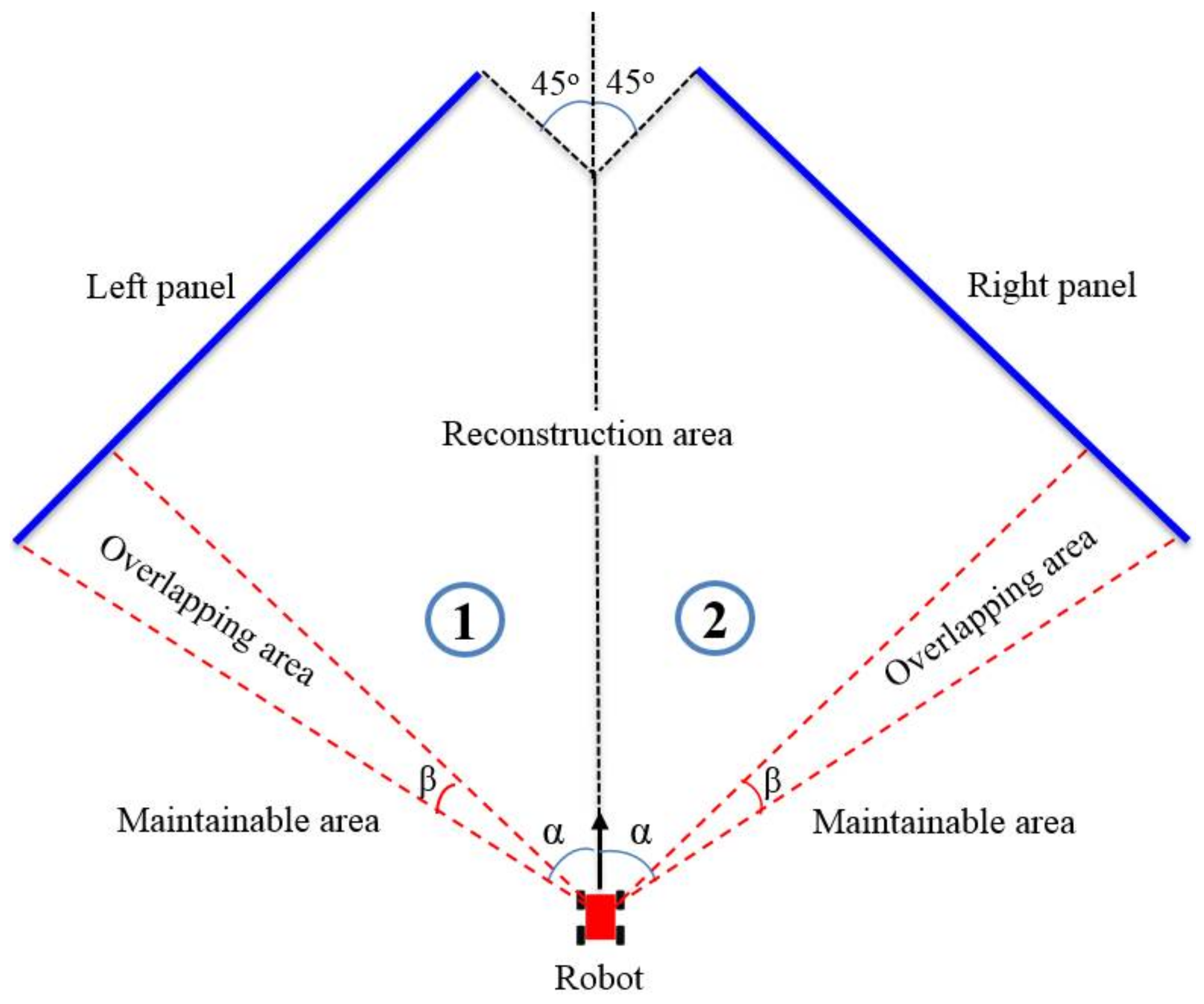

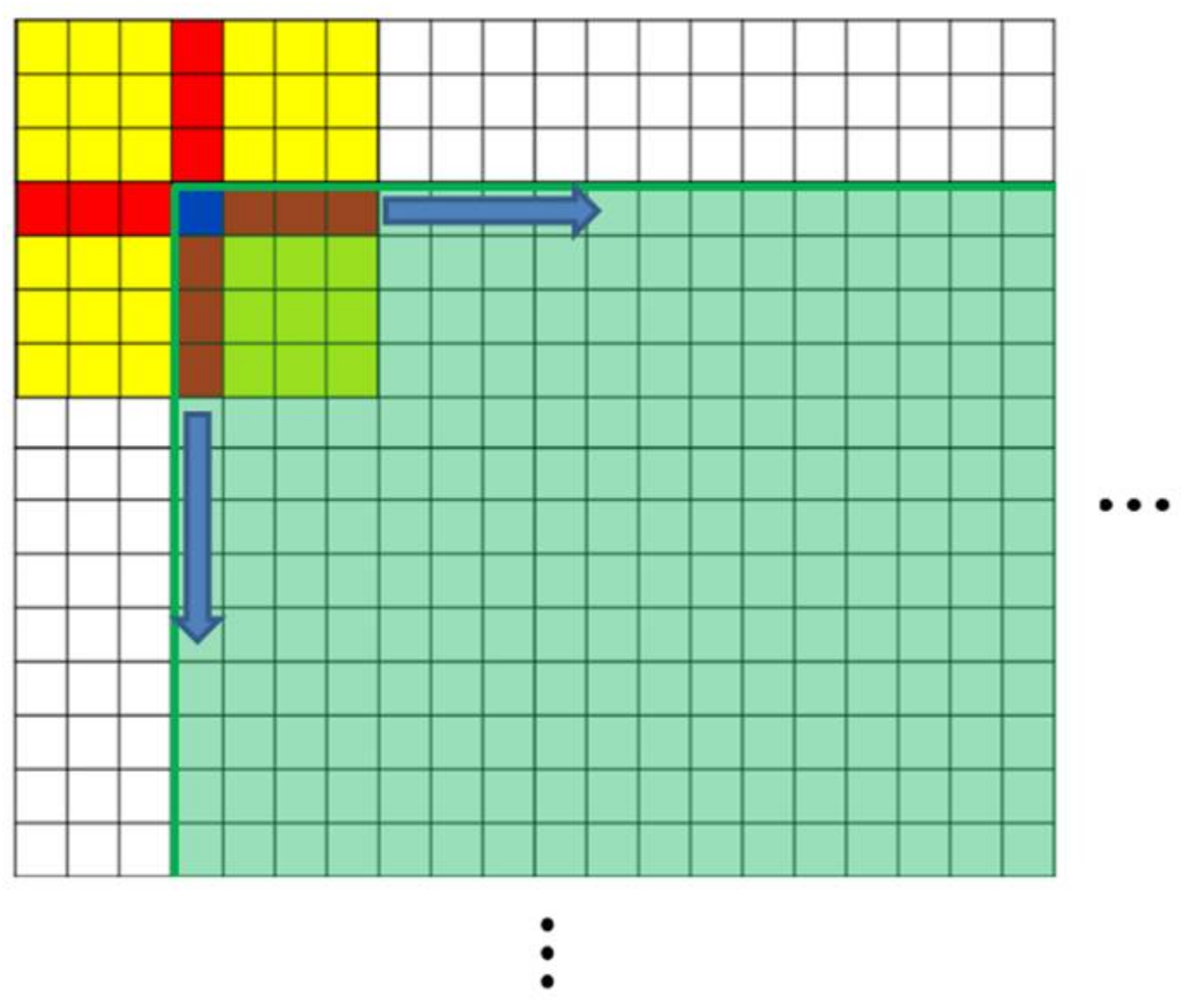

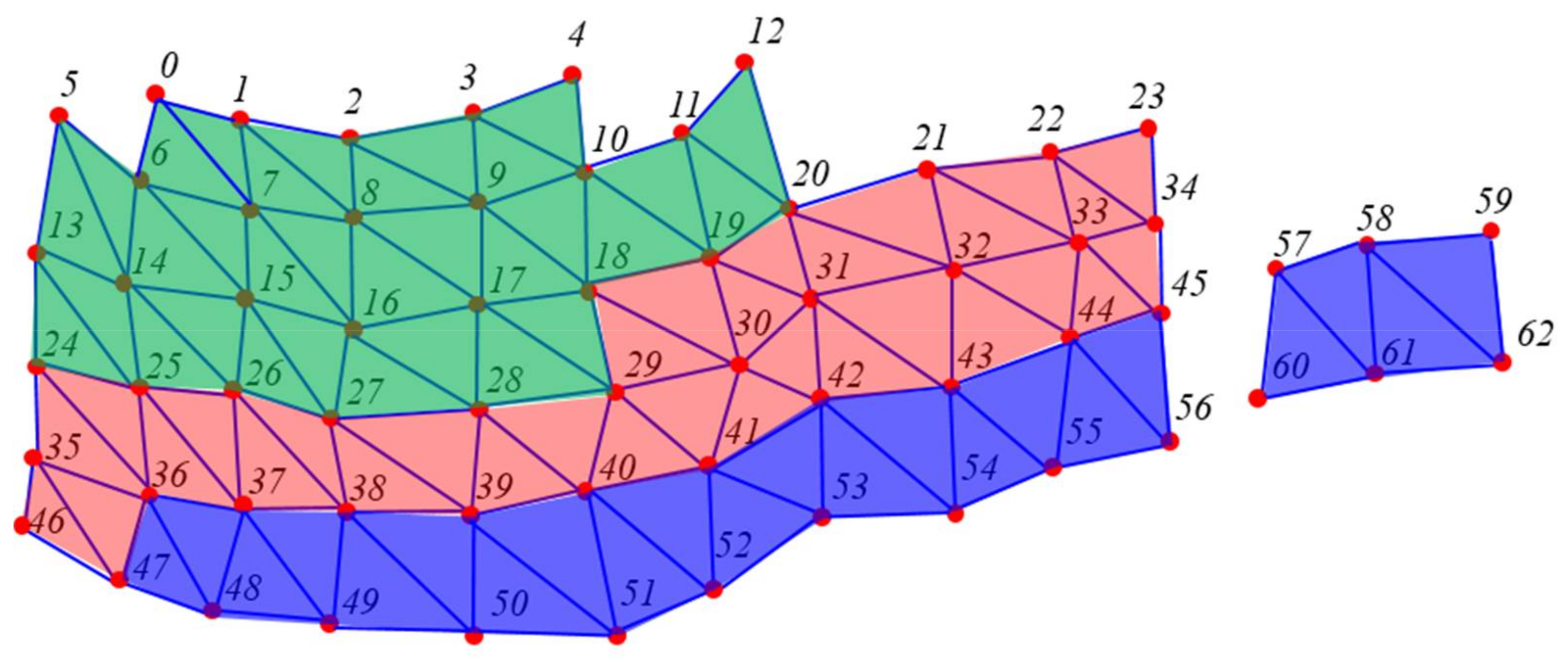

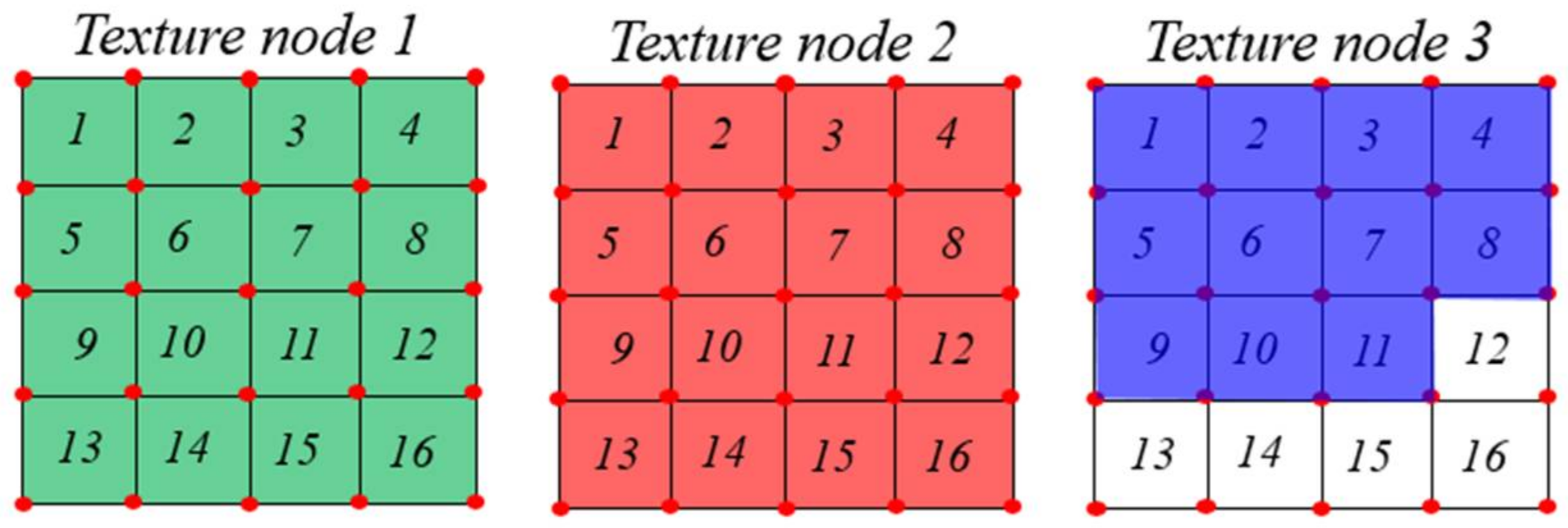

3.4. Texture Mapping

| Algorithm 2. Calculate UV for texture mapping. |
|---|
| Procedure CALCULATE_UV() |
| For i = 0..k×k − 1 do |
| row ← ⌊i/k⌋ |
| col ← i − row × k |
| u[i×4] ← col/k |
| v[i×4] ← (row + 1)/k |
| u[i×4 + 1] ← (col + 1)/k |
| v[i×4 + 1] ← (row + 1)/k |
| u[i×4 + 2] ← (col + 1)/k |
| v[i×4 + 2] ← row/k |
| u[i×4 + 3] ← col/k |
| v[i×4 + 3] ← row/k |
| End |
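A short Python sketch of Algorithm 2 is given below. It assumes that the texture is divided into a k × k grid of cells, that the loop visits cell indices 0 to k×k − 1, and that `row` is obtained by integer division; the function name `calculate_uv` and the flat-list return format are choices made for this example only.

```python
from typing import List, Tuple


def calculate_uv(k: int) -> Tuple[List[float], List[float]]:
    """Compute the UV coordinates of the four corners of every grid cell.

    Each cell i contributes four consecutive entries (indices 4*i .. 4*i+3)
    in the same corner order as Algorithm 2.
    """
    if k <= 0:
        raise ValueError("k must be a positive integer")

    u = [0.0] * (4 * k * k)
    v = [0.0] * (4 * k * k)

    for i in range(k * k):
        # Integer division gives the row; the remainder is the column.
        row, col = divmod(i, k)
        base = 4 * i

        u[base], v[base] = col / k, (row + 1) / k                # corner 0
        u[base + 1], v[base + 1] = (col + 1) / k, (row + 1) / k  # corner 1
        u[base + 2], v[base + 2] = (col + 1) / k, row / k        # corner 2
        u[base + 3], v[base + 3] = col / k, row / k              # corner 3

    return u, v
```

With `k = 2`, the first cell (row 0, column 0) receives the corners (0, 0.5), (0.5, 0.5), (0.5, 0), and (0, 0), and every coordinate stays inside the unit square, as expected for normalized texture coordinates.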

3.5. Maintenance of Mesh Modeling Results

4. Experiments and Analysis

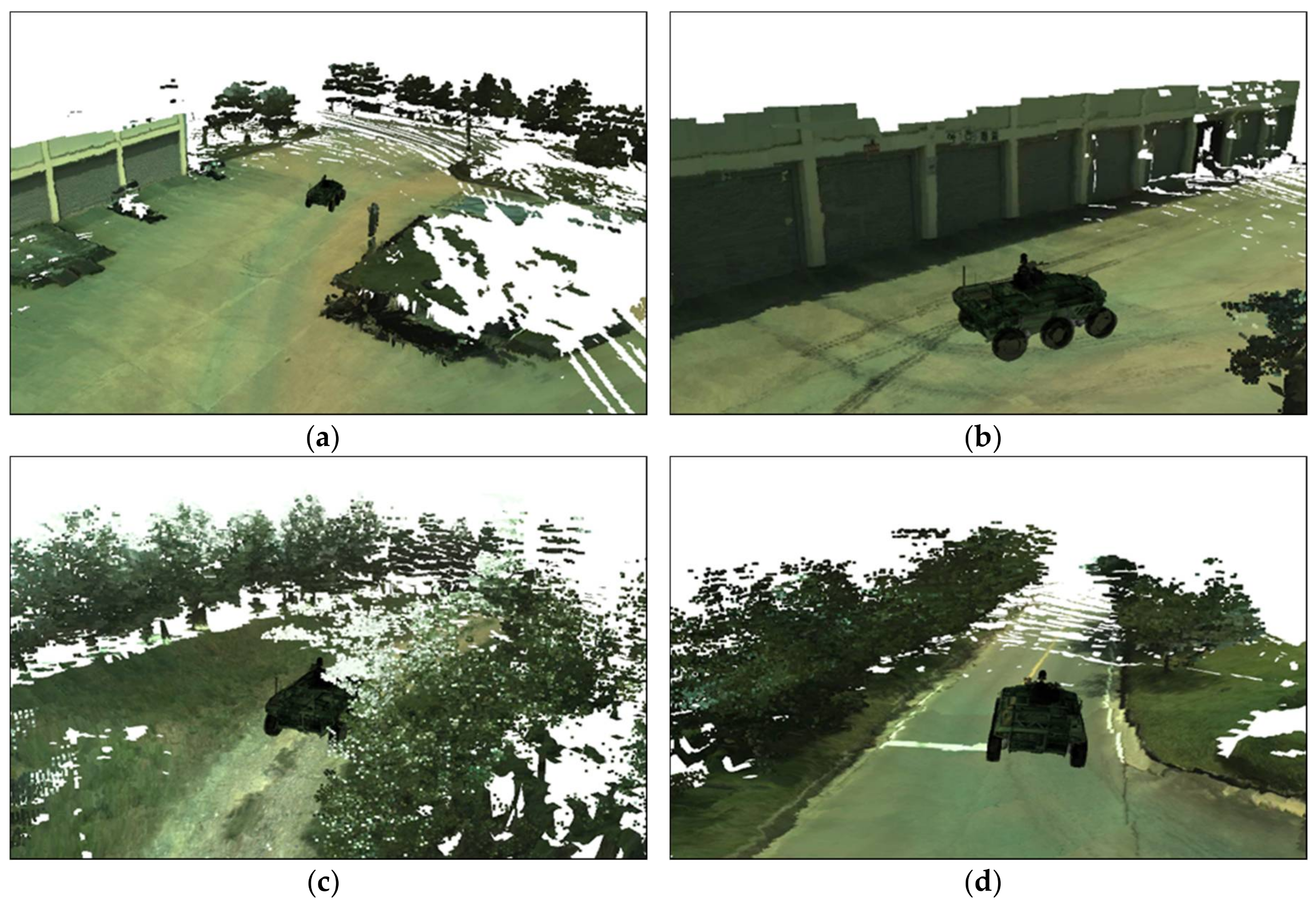

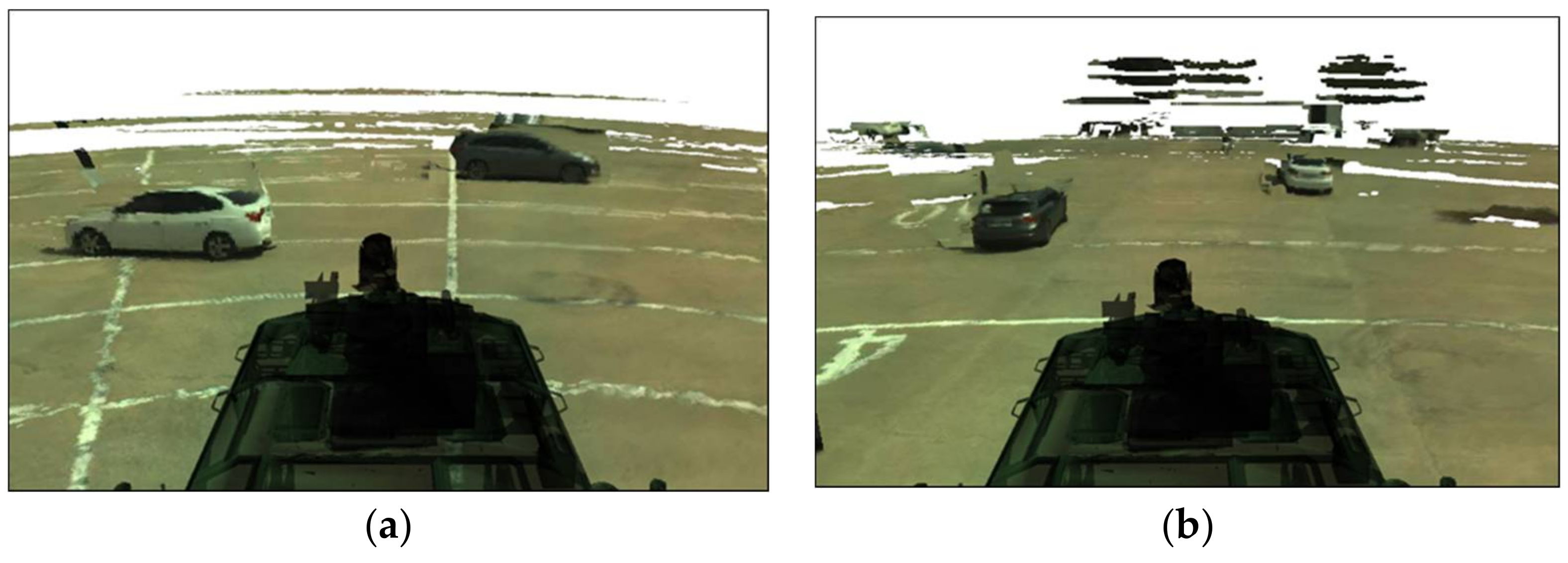

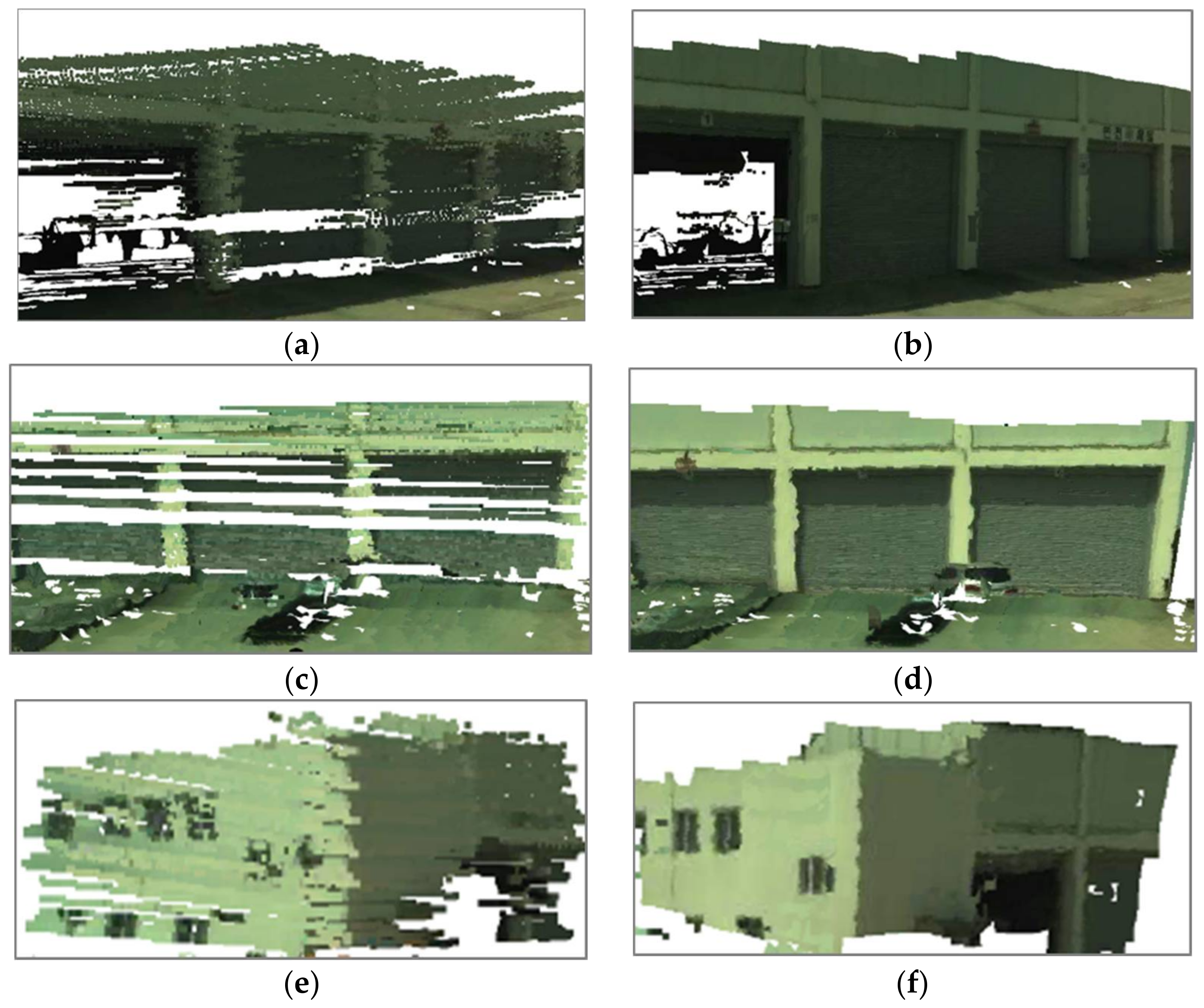

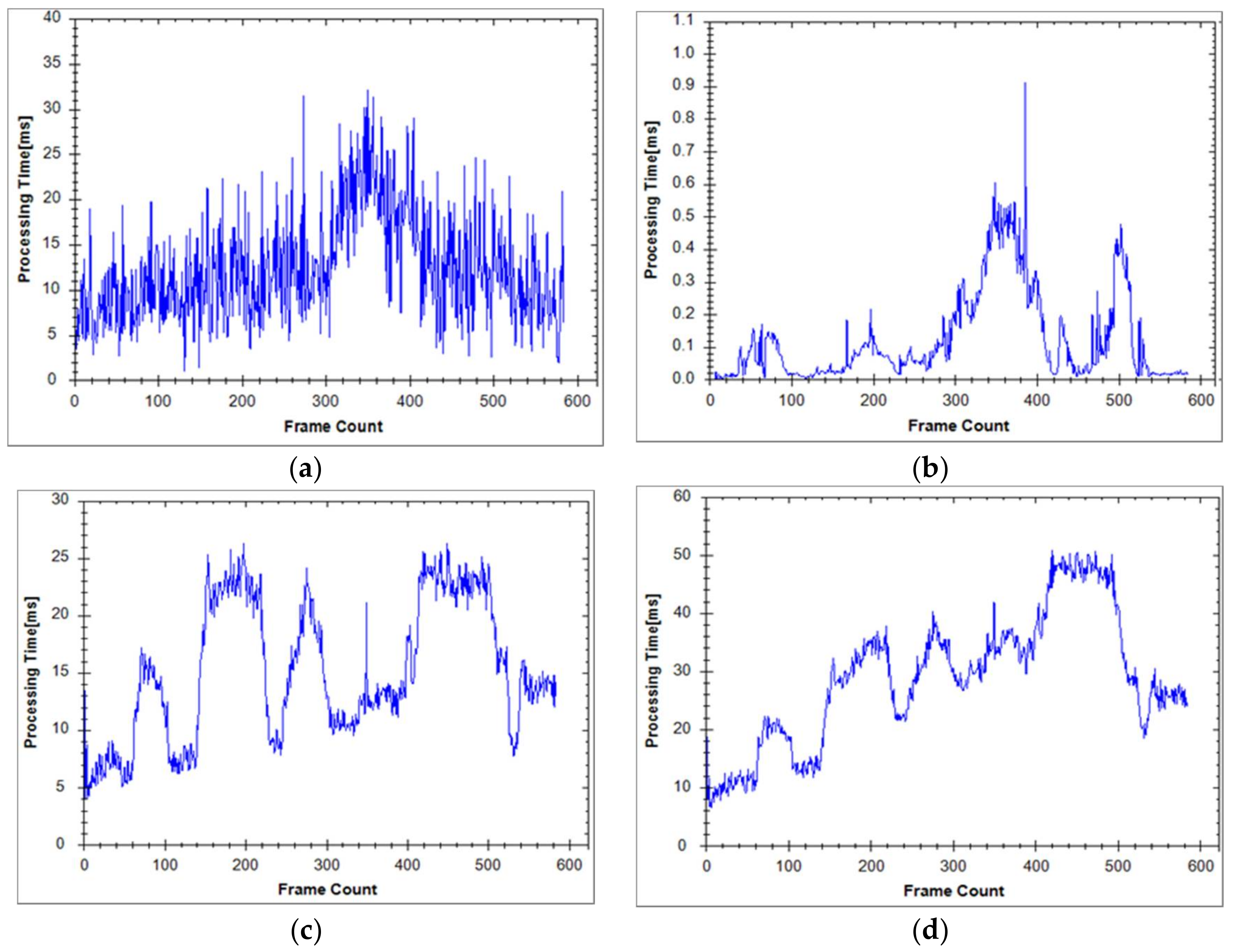

4.1. Experimental Results

4.2. Experimental Analysis

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

| Step | Processing Time (ms) |
|---|---|
| Ground segmentation | 3.69 |
| Object segmentation | 4.56 |
| Object tracking | 0.34 |
| Surface object detection | 12.34 |
| Ground modeling | 43.7 |
| Nonground modeling | 28.98 |
| Total time | 93.61 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Chu, P.M.; Cho, S.; Sim, S.; Kwak, K.; Cho, K. Multimedia System for Real-Time Photorealistic Nonground Modeling of 3D Dynamic Environment for Remote Control System. Symmetry 2018, 10, 83. https://doi.org/10.3390/sym10040083