Digital images play an important role in our daily life. However, powerful image editing software makes it easy to tamper with images. Therefore, the authenticity of an image cannot be taken for granted and needs to be verified, which is the goal of digital image forensic techniques.

Two main types of authentication methods in digital image forensics have been explored in the literature: active methods and passive methods.

In active methods, watermarking and steganography techniques are mainly used to embed digital authentication information into the original image. This authentication information can serve as evidence in a forensic investigation and can indicate whether the image has been tampered with. However, these techniques are restricted because the authentication information must be embedded either at the time of recording or later by an authorized person, which requires special cameras or subsequent processing of the digital image. Furthermore, some watermarks may degrade the quality of the original image. Due to these restrictions, researchers have tended to develop passive techniques for digital image forensics.

Copy–move forgery, in which a region of an image is copied and pasted into another location of the same image, has become one of the most common tampering operations, especially given the many easy-to-use photo editing programs. Because the duplicated regions come from the same image, they share the same noise components, textures, color patterns, homogeneity conditions and internal structures.

Many methods have been proposed for copy–move forgery detection (CMFD); they are either block based or key-point based.

1.1. Block Based Algorithm

First, an image is divided into overlapping sub-blocks. Then, features are extracted from each block and compared with those of other blocks to find the most similar blocks. Several researchers have developed block-based techniques to detect copy–move forgery. The first block-based method for detecting copy–move forgery was introduced by Fridrich et al. [14], who used discrete cosine transform (DCT) based features. The main drawbacks of their algorithm are its high computational complexity and its lack of robustness against post-processing operations such as rotation, scaling and blurring.
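To make the generic block-based pipeline concrete, the following is a minimal pure-Python sketch in the spirit of DCT-based block matching; it is not a reproduction of the algorithm in [14]. It divides a grayscale image (a list of pixel rows) into overlapping b × b blocks, quantizes each block's DCT coefficients, sorts the feature vectors lexicographically so that identical ones become adjacent, and counts the shift vectors between matching blocks. The function names, the block size `b`, the quantization step `q`, and the thresholds `min_shift` and `min_count` are illustrative assumptions.

```python
import math
from collections import Counter


def dct2(block):
    """Naive orthonormal 2-D DCT-II of a square block (list of lists)."""
    n = len(block)

    def alpha(k):
        return math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)

    out = [[0.0] * n for _ in range(n)]
    for u in range(n):
        for v in range(n):
            s = 0.0
            for x in range(n):
                for y in range(n):
                    s += (block[x][y]
                          * math.cos((2 * x + 1) * u * math.pi / (2 * n))
                          * math.cos((2 * y + 1) * v * math.pi / (2 * n)))
            out[u][v] = alpha(u) * alpha(v) * s
    return out


def detect_copy_move_blocks(img, b=4, q=2.0, min_shift=2, min_count=3):
    """Hypothetical block-based CMFD sketch: overlapping blocks -> quantized
    DCT features -> lexicographic sort -> shift vectors shared by many pairs."""
    h, w = len(img), len(img[0])
    rows = []
    for i in range(h - b + 1):
        for j in range(w - b + 1):
            block = [row[j:j + b] for row in img[i:i + b]]
            feat = tuple(round(c / q) for r in dct2(block) for c in r)
            rows.append((feat, i, j))
    rows.sort()  # lexicographic sort: identical features become neighbours
    shifts = Counter()
    for (f1, i1, j1), (f2, i2, j2) in zip(rows, rows[1:]):
        # ties are broken by (i, j), so each shift is counted in one direction
        if f1 == f2 and abs(i2 - i1) + abs(j2 - j1) >= min_shift:
            shifts[(i2 - i1, j2 - j1)] += 1
    # a shift vector shared by many block pairs suggests a copied region
    return {s: c for s, c in shifts.items() if c >= min_count}
```

A copied region shows up as a single shift vector shared by many block pairs. A practical implementation would use a fast DCT and would also have to deal with flat regions, where many unrelated blocks match trivially.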

In [15], the ridgelet transform was applied to the divided blocks to extract features, and Hu moments of these features were computed to produce feature vectors. The Euclidean distance between feature vectors was used as the similarity measure. In [16], a copy–move forgery detection algorithm based on the discrete wavelet transform (DWT) was proposed to extract features from the input image while reducing the feature dimension.

A common limitation of block-based methods is the direct use of quantized or frequency-based features from the divided blocks for matching, which makes the feature vectors high-dimensional and the dimension reduction step mandatory. To overcome these issues, key-point based methods offer an alternative approach to copy–move tampering detection, as discussed in the next subsection.

1.2. Key Point Based Algorithm

Key-point based methods rely on extracting local interest points (key-points) from high-entropy local regions, without dividing the image into sub-blocks. Good key-points should be efficient to compute, capable of identifying distinct locations in the image, and reliably detectable under geometric transformations, illumination changes, noise and other distortions.

The main advantage of key-point based methods is their high detection rate in duplicated regions that exhibit rich structure; however, they still struggle to reduce false matches in flat regions such as sky and ocean.

Huang et al. [17] proposed a scale-invariant feature transform (SIFT) based method to detect duplicated regions under scaling and rotation. They used the best bin first (BBF) search to find nearest neighbors with high probability, and the matched key-points (inliers) were returned as a possible duplicated region. To increase detection accuracy, a nearest neighbor distance ratio (NNDR) test is applied to the matched key-points.
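The NNDR test can be sketched as follows. This minimal pure-Python version assumes descriptors are plain numeric tuples and compares every key-point against all other key-points of the same image by brute force, instead of the BBF search used in [17]; the function name and the threshold `ratio = 0.6` are assumptions.

```python
import math


def nndr_matches(descriptors, ratio=0.6):
    """Hypothetical NNDR matcher within one image: accept i -> j only if the
    closest other descriptor j is clearly closer than the second closest."""
    matches = []
    for i, d in enumerate(descriptors):
        # distances to every other key-point of the same image (self excluded)
        dists = sorted(
            (math.dist(d, e), j) for j, e in enumerate(descriptors) if j != i
        )
        if len(dists) < 2:
            continue
        (d1, j1), (d2, _) = dists[0], dists[1]
        if d2 > 0 and d1 / d2 < ratio:
            matches.append((i, j1))
    return matches
```

For example, `nndr_matches([(0, 0), (0.1, 0), (10, 0), (0, 10), (10, 10)])` returns `[(0, 1), (1, 0)]`: only the two near-identical descriptors match each other. A key-point with two or more near-identical copies has d1 ≈ d2 and is rejected by this plain ratio test, which is the multiple-copy case that motivates generalized tests such as G2NN.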

Amerini et al. [18] proposed detecting multiple duplicated regions based on SIFT features and employed a generalized nearest neighbor (G2NN) test to improve the matching between key-points. Agglomerative hierarchical linkage (AHL) clustering was then used to group similar key-points into clusters, repeatedly merging the closest pair of clusters into a single cluster, so that the clusters represent the cloned regions. Finally, the affine transformation parameters between the duplicated regions are estimated.
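The clustering step can be illustrated with a minimal agglomerative sketch that starts with one cluster per matched key-point location and repeatedly merges the closest pair of clusters until that pair is farther apart than a cutoff. The single-linkage criterion, the `cutoff` parameter and the function name are assumptions made for this example; [18] may use a different linkage criterion and stopping rule.

```python
import math


def agglomerative_clusters(points, cutoff):
    """Hypothetical single-linkage agglomerative clustering of (x, y)
    key-point locations: merge the closest pair of clusters until the
    closest pair is farther apart than `cutoff`."""
    clusters = [[p] for p in points]

    def linkage(a, b):
        # single linkage: distance between the closest members of two clusters
        return min(math.dist(p, q) for p in a for q in b)

    while len(clusters) > 1:
        best = None
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                d = linkage(clusters[i], clusters[j])
                if best is None or d < best[0]:
                    best = (d, i, j)
        d, i, j = best
        if d > cutoff:
            break  # remaining clusters are too far apart to merge
        clusters[i].extend(clusters[j])
        del clusters[j]  # j > i, so index i is unaffected
    return clusters
```

With points `(0, 0), (1, 0), (0, 1), (20, 20), (21, 20), (50, 0)` and `cutoff=2`, the sketch yields three clusters of sizes 3, 2 and 1. In a CMFD pipeline, two clusters linked by many key-point matches would be candidates for a pair of cloned regions.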

Battiato et al. [19] proposed a SIFT-based framework for detecting duplicated regions. The feature vectors are hashed and stored in a hash table, which is used to compare the hash codes of corresponding feature vectors after image alignment. The hash alignment process is also used to estimate the geometric transformation parameters.

All the above techniques rely on SIFT key-points, which are invariant to rotation, illumination and scale changes. Consequently, they also inherit SIFT's main limitation: a lack of key-points in flat or smooth image areas, where little structure is present. This motivated researchers to use other key-point detectors to overcome this limitation, such as Harris corners, Hessian and Laplacian of Gaussian (LoG) detectors.
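For reference, the standard Harris corner measure is computed from the structure tensor M of the image gradients I_x and I_y, accumulated over a window W with weights w(u, v):

```latex
M = \sum_{(u, v) \in W} w(u, v)
\begin{bmatrix}
I_x^2 & I_x I_y \\
I_x I_y & I_y^2
\end{bmatrix},
\qquad
R = \det(M) - k\,\operatorname{tr}(M)^2 = \lambda_1 \lambda_2 - k\,(\lambda_1 + \lambda_2)^2
```

Here λ1 and λ2 are the eigenvalues of M, and k is an empirical constant commonly chosen around 0.04 to 0.06. R is large and positive at corners, negative along edges, and small in magnitude in flat regions; key-points are usually taken as local maxima of R above a threshold.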

Liu et al. [20] applied the Harris detector to localize key-points and then employed adaptive non-maximal suppression (ANMS) to identify more key-points in flat image regions, addressing the main drawback of the SIFT algorithm. A DAISY descriptor, built on orientation gradient maps of the image region around each key-point, was used to perform dense image matching.
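ANMS can be sketched as follows, using the commonly cited formulation in which each key-point's suppression radius is its distance to the nearest key-point whose response is sufficiently larger (by the factor `c_robust`, assumed here to be 0.9), and the `n_keep` points with the largest radii are retained. This brute-force O(n²) version assumes positive detector responses; the function name and parameters are illustrative, not the implementation of [20].

```python
import math


def anms(points, scores, n_keep, c_robust=0.9):
    """Hypothetical adaptive non-maximal suppression: give each key-point a
    radius equal to its distance to the nearest sufficiently stronger
    key-point, then keep the n_keep points with the largest radii."""
    radii = []
    for i, (p, s) in enumerate(zip(points, scores)):
        r = math.inf  # the strongest points are never suppressed
        for q, t in zip(points, scores):
            if s < c_robust * t:
                r = min(r, math.dist(p, q))
        radii.append((r, i))
    # largest radius first; ties broken by original index for determinism
    radii.sort(key=lambda ri: (-ri[0], ri[1]))
    return [i for _, i in radii[:n_keep]]
```

For example, with points `(0, 0), (1, 0), (0, 1), (1, 1), (30, 30)` and responses `100, 80, 60, 40, 10`, `anms(points, scores, 2)` returns `[0, 4]`: the weak but isolated point survives while the stronger, clustered points are suppressed, which is how ANMS spreads key-points into less textured areas.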

Ling et al. [21] proposed a near-duplicate image detection framework based on Hessian-affine key-points and polar mapping. In the final detection stage, the NNDR test is used to match feature vectors.

Kakar et al. [22] proposed an algorithm in which local interest points are detected by a modified scale Laplacian of Gaussian and Harris corner detector, which makes the features invariant to geometric transformations. In addition, the feature descriptor was built on MPEG-7 signature tools.

The literature shows that each of the CMFD approaches discussed above has strengths and weaknesses with respect to geometric changes in the copied regions. Therefore, an efficient CMFD method should be robust to geometric changes such as rotation and scaling. These issues have been studied extensively in the past few years.

Rotation is considered the most difficult geometric transformation to handle in CMFD. Three key approaches have been introduced to achieve rotation-invariant CMFD: polar mapping, circular blocking and image moments such as Zernike moments.

In [23], a log-polar transformation of the divided image blocks was employed. Then, the fast Fourier transform (FFT) was applied to build descriptors over orientations ranging from 0° to 180°. These feature descriptors were stored in matrices and sorted lexicographically. To improve the detection decision, a counting Bloom filter was used to detect descriptors with identical hash values.
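Why polar mapping helps with rotation can be shown with a small sketch: if a block is resampled on a (log-)polar grid, rotating the block about its centre becomes a circular shift along the angle axis, and the magnitude of the discrete Fourier transform along that axis does not change under circular shifts. The sketch assumes the polar samples are already available (the resampling step is omitted), uses a naive O(n²) DFT, and has hypothetical function names; it illustrates the principle only and does not reproduce the 0°–180° descriptor construction or the counting Bloom filter of [23].

```python
import cmath
import math


def dft_magnitude(seq):
    """|DFT| of a sequence; unchanged by circular shifts of the sequence."""
    n = len(seq)
    return [abs(sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(seq)))
            for k in range(n)]


def rotation_invariant_descriptor(polar_block):
    """polar_block[r][a]: samples of a block on a (log-)polar grid, with rows
    as radii and columns as angles. A rotation of the block about its centre
    becomes a circular shift of every row, so each row's DFT magnitude is
    unaffected."""
    return [dft_magnitude(row) for row in polar_block]
```

With 8 angular samples, rotating the block by 3 angular steps (135°) circularly shifts each row by 3 positions and leaves the descriptor unchanged up to floating-point error.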

Shao et al. [24] proposed a duplicated region detection method based on circular window expansion and phase correlation. The algorithm starts by calculating the Fourier transform of the polar expansion of pairs of overlapping circular windows, and then applies an adaptive process to obtain a correlation matrix. After the rotation angle of the forged region is estimated, a search procedure is applied to locate the duplicated regions. The algorithm was robust to rotation, illumination changes, blurring and JPEG compression.
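Phase correlation estimates a shift from the phase of the normalized cross-power spectrum. If two angular profiles of circular windows are sampled at N equally spaced angles, a circular shift of s samples corresponds to a rotation of 360·s/N degrees. The following is a 1-D pure-Python sketch of that idea under these assumptions, with hypothetical function names; it is not the full procedure of [24].

```python
import cmath
import math


def dft(seq, inverse=False):
    """Naive DFT (or inverse DFT, scaled by 1/n) of a sequence."""
    n = len(seq)
    sign = 1 if inverse else -1
    out = [sum(x * cmath.exp(sign * 2j * math.pi * k * t / n)
               for t, x in enumerate(seq)) for k in range(n)]
    return [v / n for v in out] if inverse else out


def phase_correlation_shift(a, b):
    """Return the circular shift s such that b[t] == a[t - s] (mod len(a)),
    estimated from the peak of the phase correlation surface."""
    fa, fb = dft(a), dft(b)
    cross = []
    for x, y in zip(fa, fb):
        c = y * x.conjugate()
        # keep only the phase; skip frequencies with (near) zero energy
        cross.append(c / abs(c) if abs(c) > 1e-12 else 0j)
    corr = dft(cross, inverse=True)
    return max(range(len(corr)), key=lambda k: corr[k].real)
```

For example, if `b` is a 12-sample profile `a` circularly shifted by 4 samples, `phase_correlation_shift(a, b)` returns 4, which corresponds to an estimated rotation of 4 × 360/12 = 120°.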

In [25], Zernike moments are extracted from overlapping blocks and their magnitudes are used as the feature representation. Locality sensitive hashing (LSH) is employed for block matching, and falsely matched block pairs are removed by inspecting the phase differences of the corresponding Zernike moments.
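The phase-difference check follows from how Zernike moments behave under rotation. With the standard definition of the Zernike moment of order n and repetition m over the unit disk, where R_{nm} is the radial polynomial, rotating the image by an angle α multiplies each moment by a phase factor:

```latex
Z_{nm} = \frac{n+1}{\pi} \iint_{x^2 + y^2 \le 1} f(x, y)\, V_{nm}^{*}(\rho, \theta)\, dx\, dy,
\qquad V_{nm}(\rho, \theta) = R_{nm}(\rho)\, e^{i m \theta}

f^{r}(\rho, \theta) = f(\rho, \theta - \alpha)
\;\Longrightarrow\;
Z_{nm}^{r} = Z_{nm}\, e^{-i m \alpha},
\qquad |Z_{nm}^{r}| = |Z_{nm}|,
\qquad \arg Z_{nm}^{r} - \arg Z_{nm} \equiv -m\alpha \pmod{2\pi}
```

The magnitudes are therefore rotation invariant, while for a genuinely matching pair of blocks the phase differences of all moments should imply the same rotation angle α; a falsely matched pair generally does not satisfy this consistency.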

In this research, we propose an efficient copy–move forgery detection algorithm that can detect and locate multiple duplicated regions under various geometric transformations and post-processing operations.

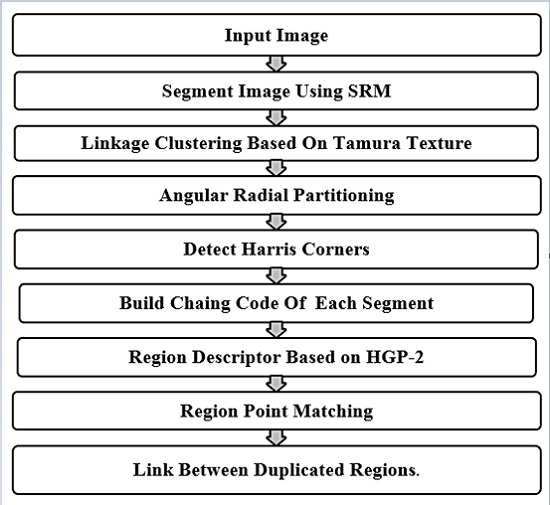

The main advantage of key-point based CMFD methods is their invariance to rotation and scaling, but they still struggle to reduce false matches in flat regions. To improve the efficiency and capability of region duplication detection, we propose a rotation-robust method for detecting duplicated regions using Harris corner points and angular radial partitioning (ARP). The proposed method uses statistical region merging (SRM) segmentation as a preprocessing step to detect smooth and patterned regions. Next, to reduce the time complexity of the detection algorithm, linkage clustering based on Tamura texture features of the detected image regions is employed. Then, Harris corner points are detected in the angular radial partitions (ARPs) of a circular region for each detected image region to obtain scale- and rotation-invariant feature points. The matching procedure includes two main steps: (1) matching chain codes to find regions with similar internal structure; and (2) matching the patches around the feature points using the Hölder estimation regularity based descriptor (HGP-2) to find regions with similar texture. Finally, the matched key-points between similar regions are linked, and the duplicated regions are highlighted in blue to show the forgery.
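The angular radial partitioning idea can be illustrated with a small sketch that counts points (for example, Harris corners) falling into each partition of a circular region. It assumes equal-width rings and equal angular sectors and uses plain counts per partition; the function name, `n_rings` and `n_sectors` are hypothetical, and the per-partition features used in the proposed method may differ.

```python
import math


def arp_histogram(points, center, radius, n_rings=3, n_sectors=8):
    """Hypothetical ARP sketch: count (x, y) points in each of n_rings
    equal-width rings times n_sectors equal angular sectors of a circular
    region. Returns hist[ring][sector]."""
    cx, cy = center
    hist = [[0] * n_sectors for _ in range(n_rings)]
    for x, y in points:
        dx, dy = x - cx, y - cy
        rho = math.hypot(dx, dy)
        if rho >= radius:
            continue  # outside the circular region
        theta = math.atan2(dy, dx) % (2 * math.pi)  # angle in [0, 2*pi)
        ring = int(rho / radius * n_rings)
        sector = int(theta / (2 * math.pi) * n_sectors) % n_sectors
        hist[ring][sector] += 1
    return hist
```

Rotating the region about its centre by s × 360/n_sectors degrees circularly shifts every ring's row by s sectors, so rotation invariance can be obtained by comparing rows up to circular shifts (or through their DFT magnitudes). For other rotation angles, points close to sector boundaries may move into a neighbouring sector.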

The rest of this paper is organized as follows. Section 2 explains the proposed algorithm. Section 3 presents the experimental results. Comparisons and discussions are given in Section 4. Finally, conclusions are drawn in Section 5.