The Development of Improved Incremental Models Using Local Granular Networks with Error Compensation

Abstract

1. Introduction

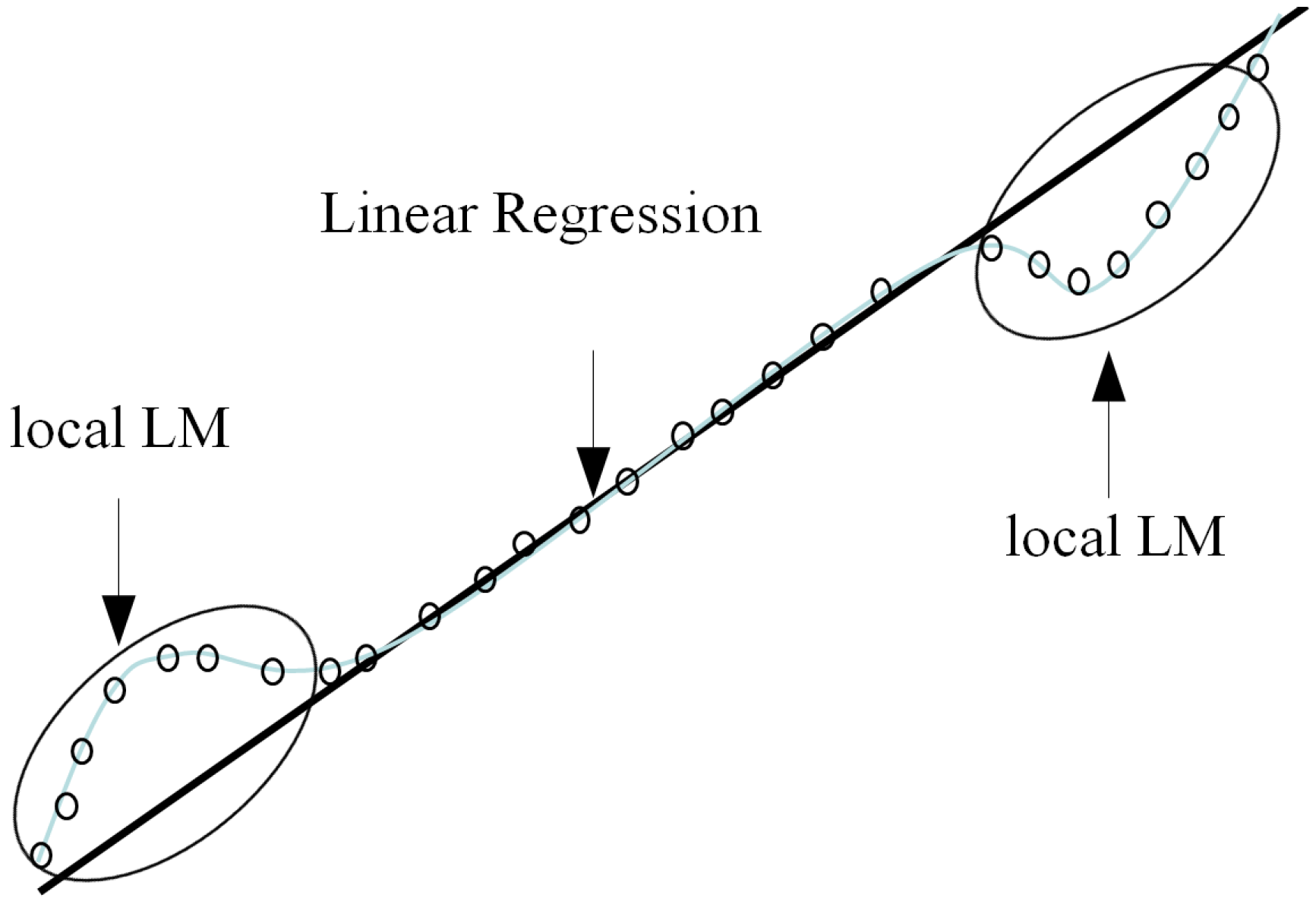

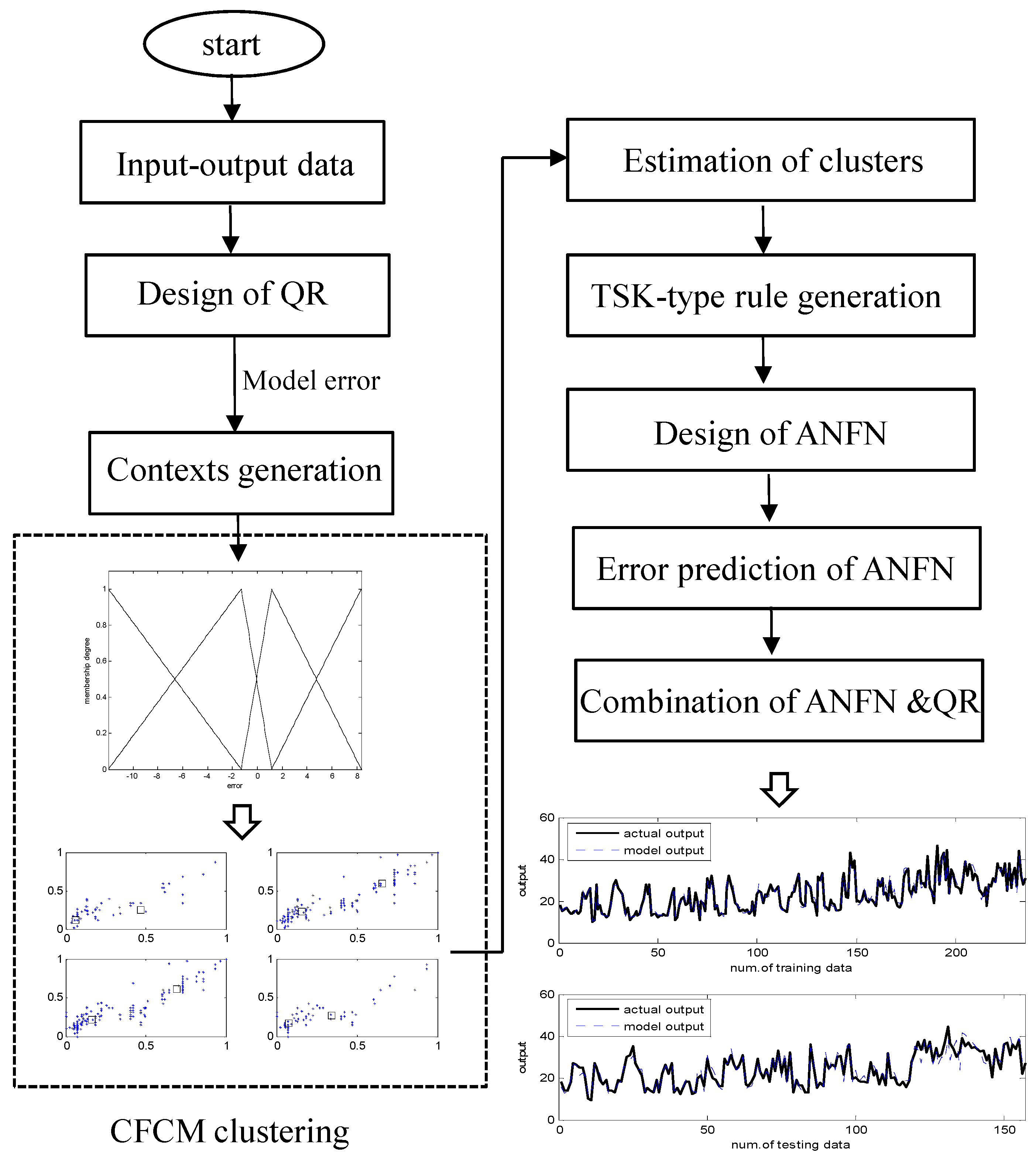

2. Incremental Model Based on LR and Local LM

2.1. The Description of IM

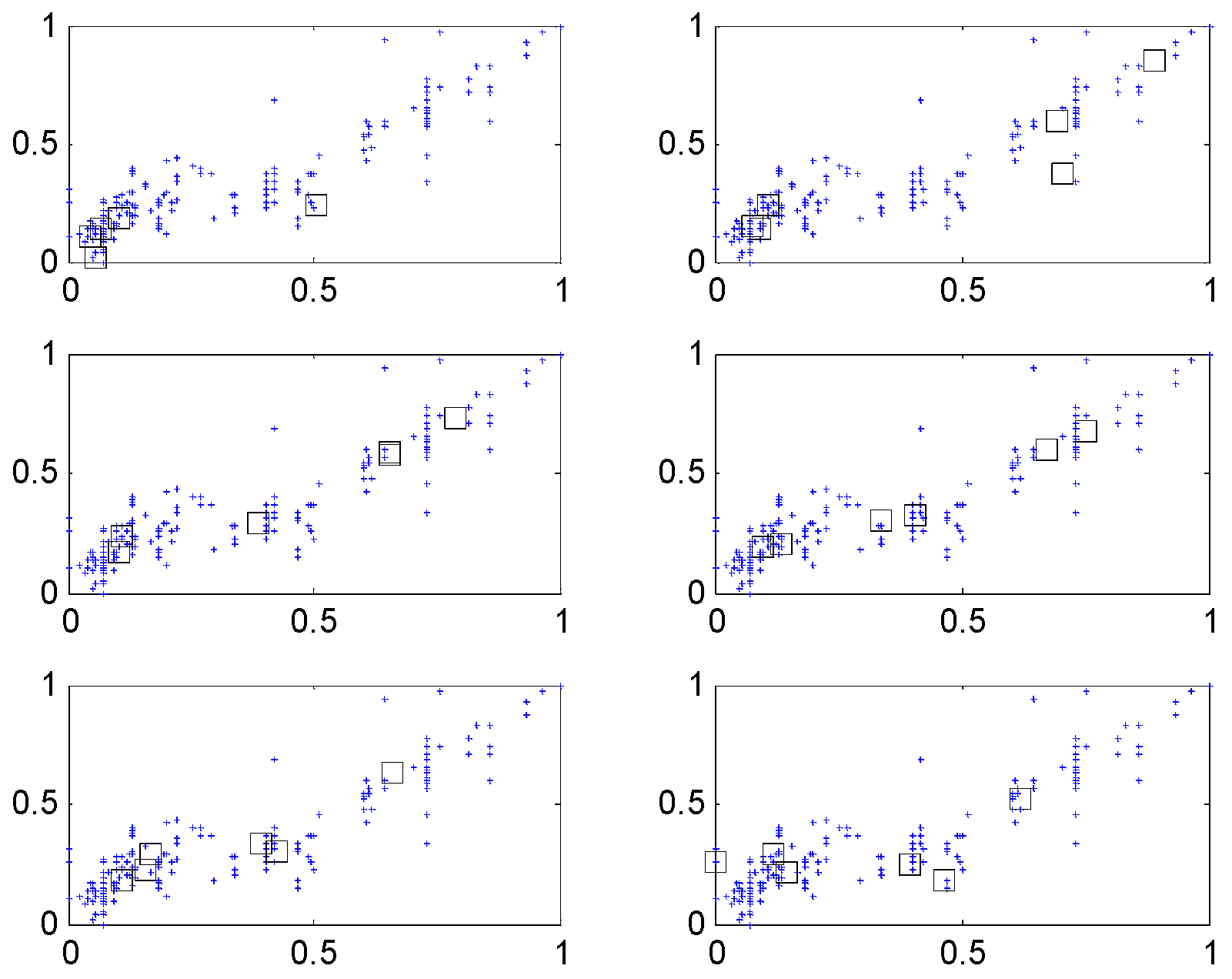

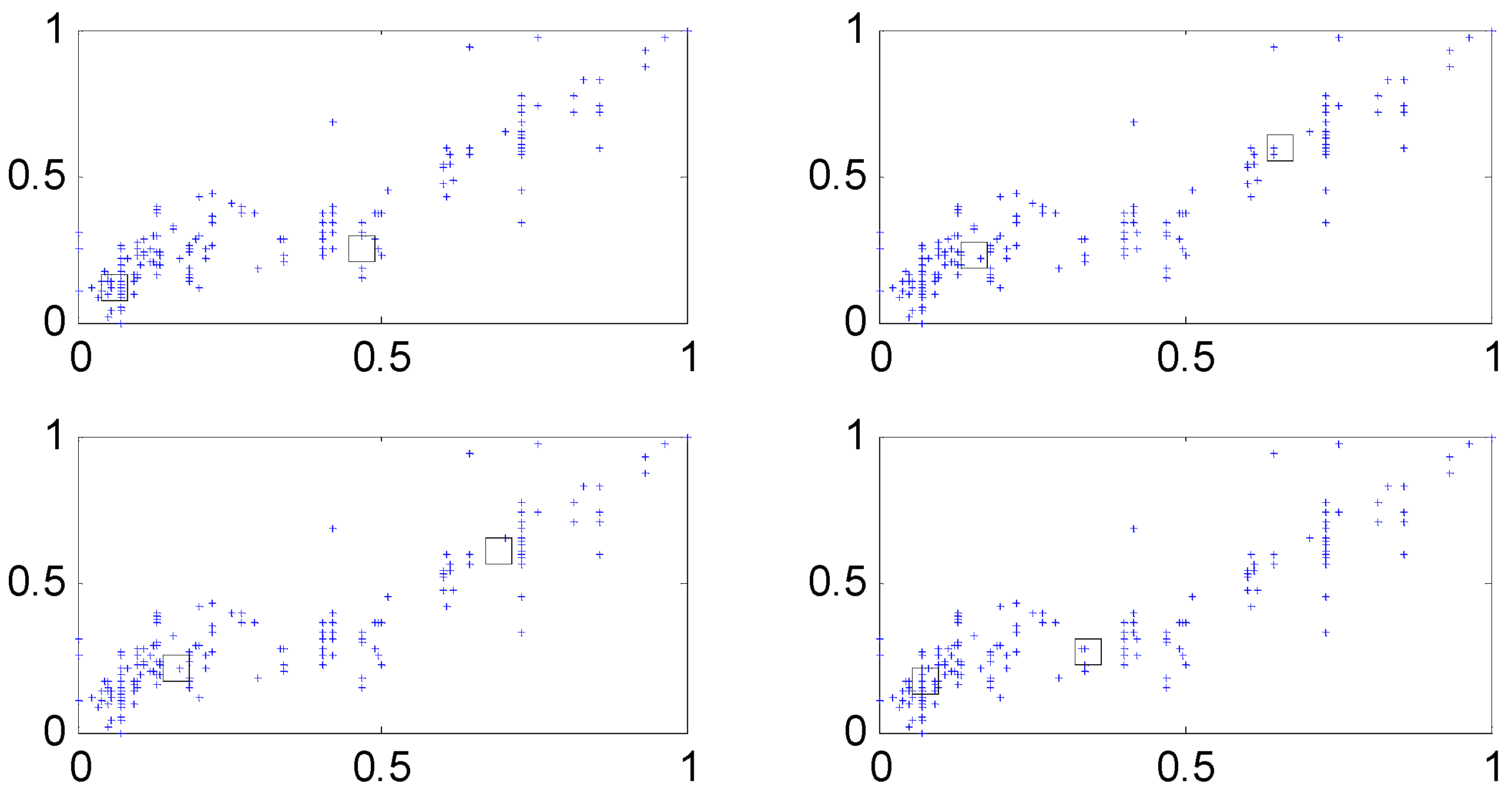

2.2. CFCM Clustering

2.3. The Design Procedure of IM

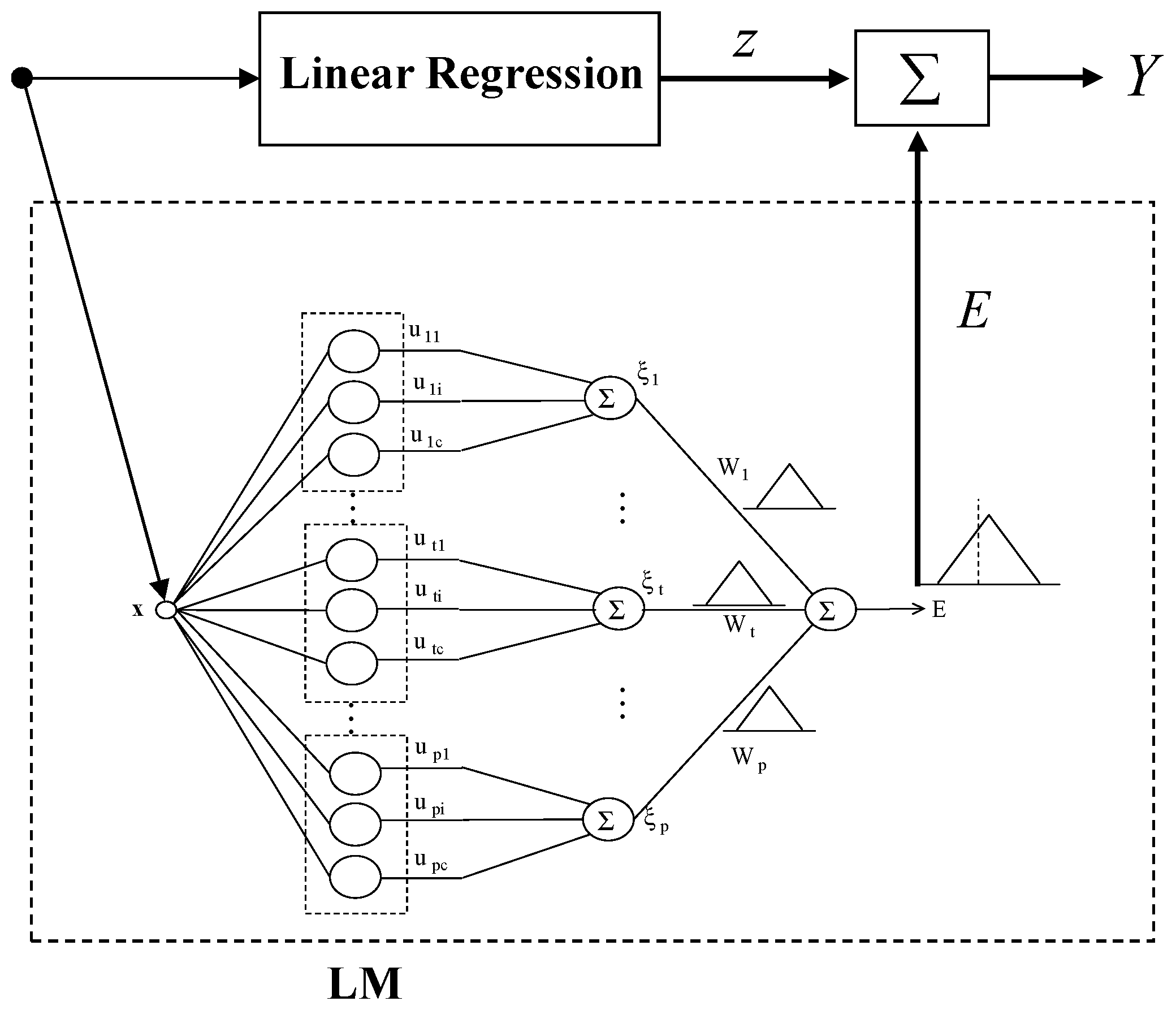

- Step 1: Construct LR from the input-output data pairs. LR fits the data with a linear model. After the regression, we obtain the input-error pairs $(\mathbf{x}_k, e_k)$, where $e_k$ is the difference between the target output and the LR output.

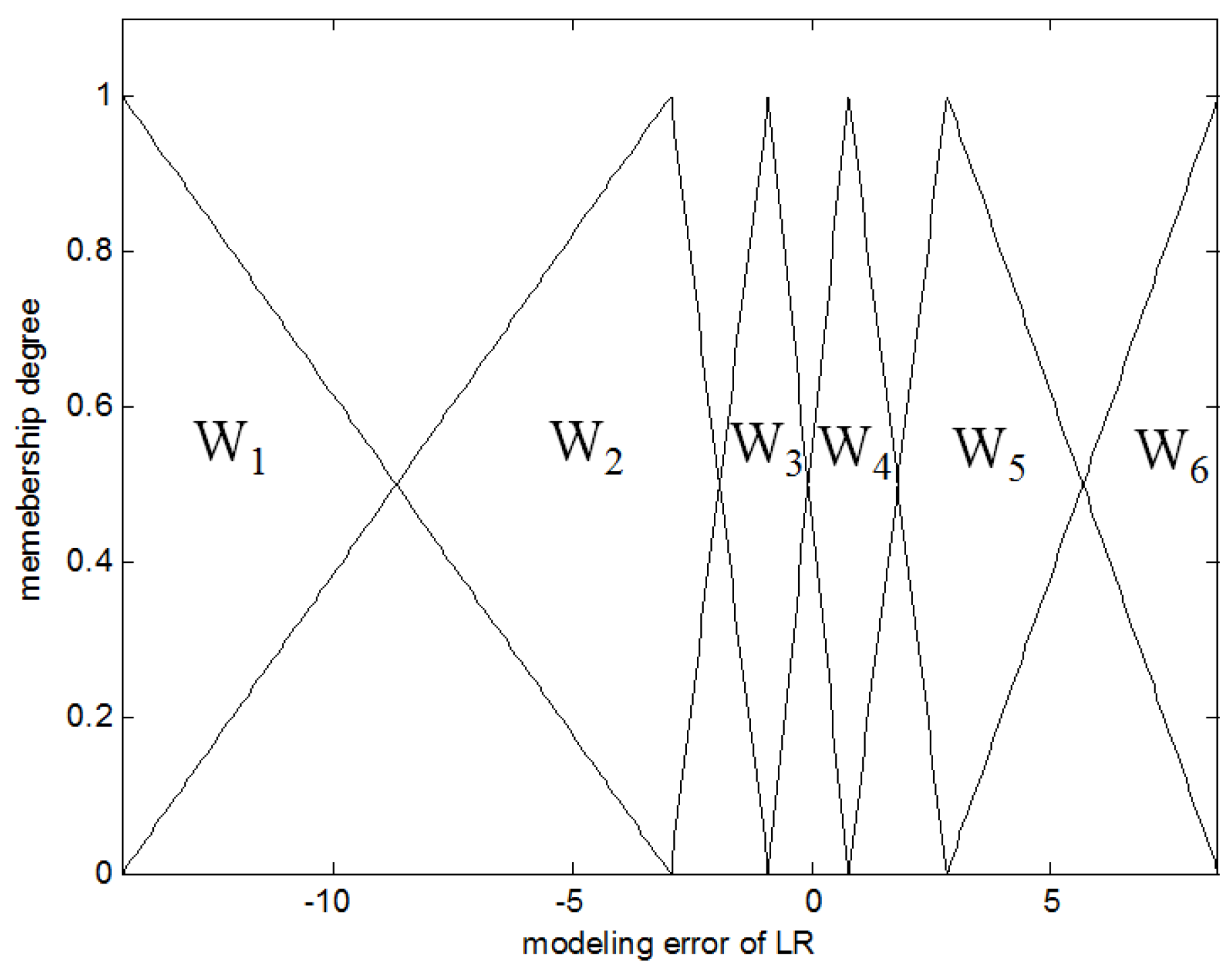

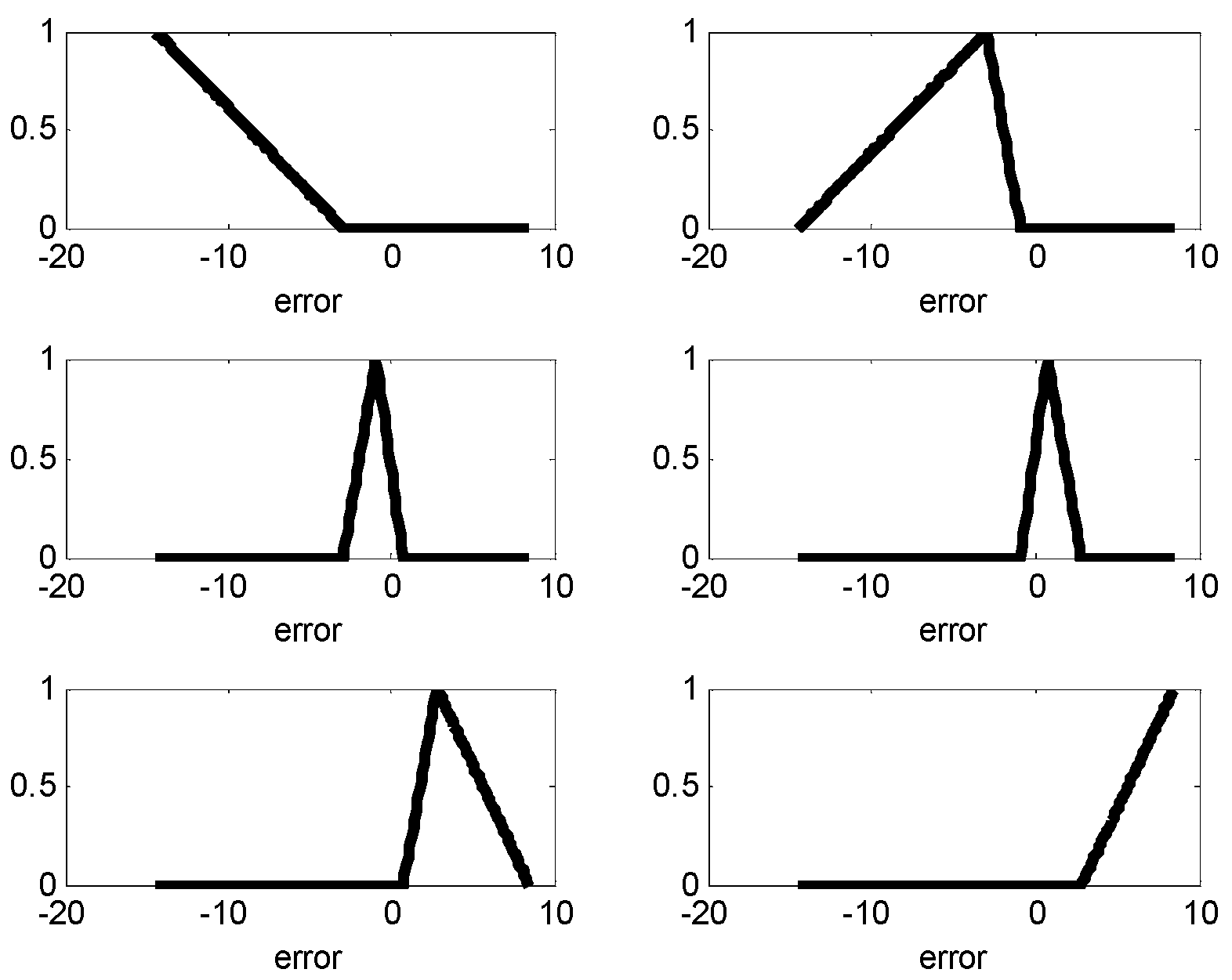

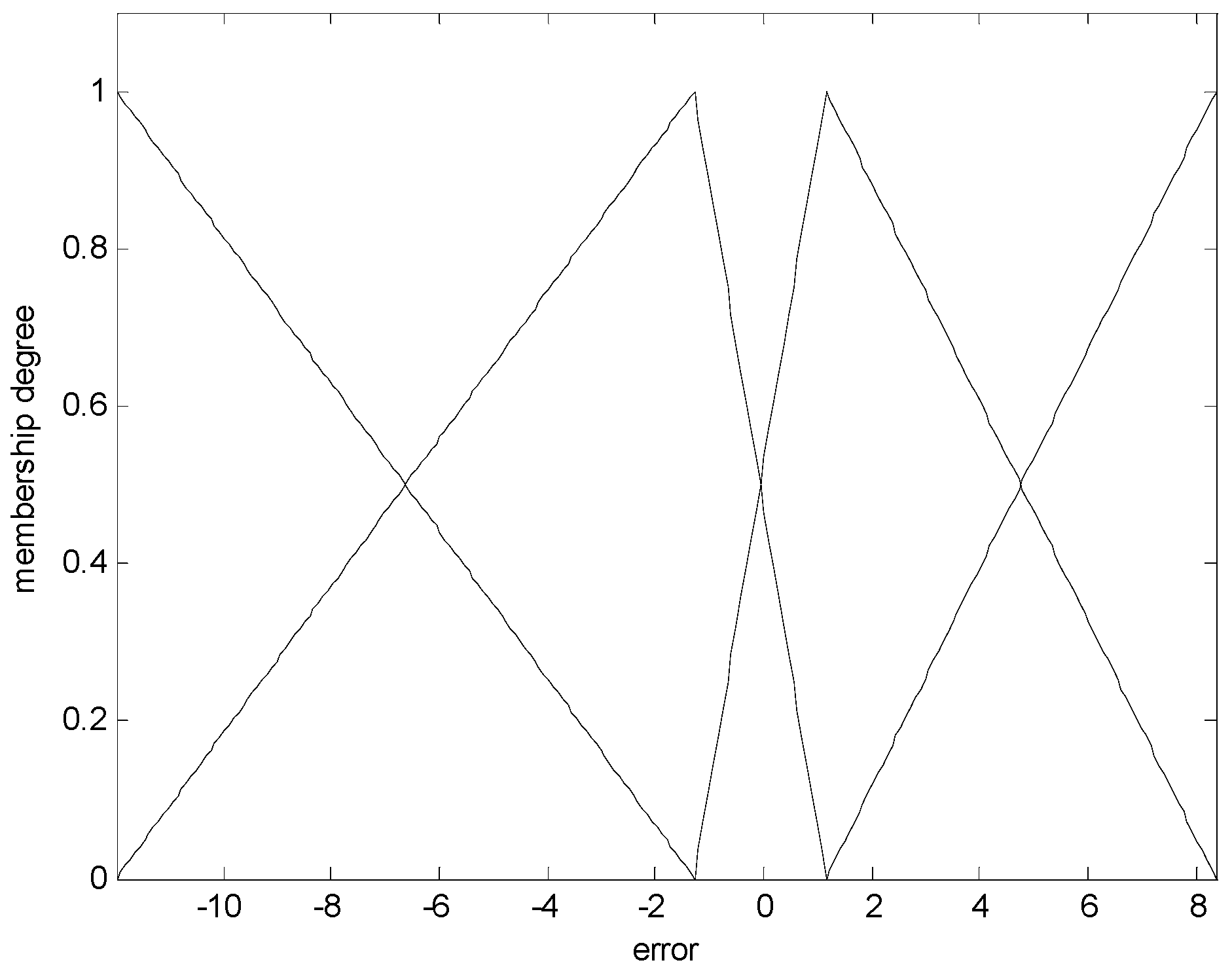

- Step 2: Generate the contexts in the error space.

- Step 3: Estimate cluster centers by CFCM clustering.

- Step 4: The final output of LM is expressed as:

- Step 5: Obtain the model output by combining the outputs of LR and LM.
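Steps 1 to 3 above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the contexts are taken to be evenly spaced triangular fuzzy sets with 1/2 overlap, the CFCM update is the usual conditional fuzzy c-means rule in which the memberships of sample k sum to its context membership f_k rather than to 1, and all function names, the synthetic data, and the parameter values are hypothetical. Steps 4 and 5 are not shown because the LM output expression is not given in this text.

```python
import numpy as np

def fit_linear_regression(X, y):
    """Step 1: least-squares fit of a linear model; returns coefficients and errors."""
    A = np.hstack([X, np.ones((X.shape[0], 1))])    # append a bias column
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    errors = y - A @ coef                             # e_k = y_k - LR(x_k)
    return coef, errors

def triangular_contexts(errors, n_contexts):
    """Step 2: triangular fuzzy sets over the error range (assumes n_contexts >= 2)."""
    peaks = np.linspace(errors.min(), errors.max(), n_contexts)
    width = peaks[1] - peaks[0]
    # F[t, k] is the membership of error e_k in context t
    F = np.clip(1.0 - np.abs(errors[None, :] - peaks[:, None]) / width, 0.0, 1.0)
    return peaks, F

def cfcm(X, f, n_clusters, m=2.0, n_iter=50, seed=0):
    """Step 3: conditional FCM in the input space, conditioned on memberships f."""
    rng = np.random.default_rng(seed)
    V = X[rng.choice(X.shape[0], n_clusters, replace=False)]   # initial centers
    for _ in range(n_iter):
        # d[i, k]: distance from center i to sample k (small offset avoids 0/0)
        d = np.linalg.norm(X[None, :, :] - V[:, None, :], axis=2) + 1e-12
        # ratio[i, j, k] = (d_ik / d_jk) ** (2 / (m - 1))
        ratio = (d[:, None, :] / d[None, :, :]) ** (2.0 / (m - 1.0))
        U = f[None, :] / ratio.sum(axis=1)            # columns sum to f_k
        W = U ** m
        V = (W @ X) / (W.sum(axis=1, keepdims=True) + 1e-12)
    return V, U

# Synthetic data, for illustration only
rng = np.random.default_rng(1)
X = rng.uniform(0.0, 1.0, size=(200, 2))
y = 2.0 * X[:, 0] - X[:, 1] + 0.3 * np.sin(6.0 * X[:, 0])

coef, errors = fit_linear_regression(X, y)
peaks, F = triangular_contexts(errors, n_contexts=3)
centers = [cfcm(X, F[t], n_clusters=2)[0] for t in range(F.shape[0])]
```

Each entry of `centers` holds the cluster centers found in the input space for one error context, so the total number of local rules in this sketch is the number of contexts times the number of clusters per context.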

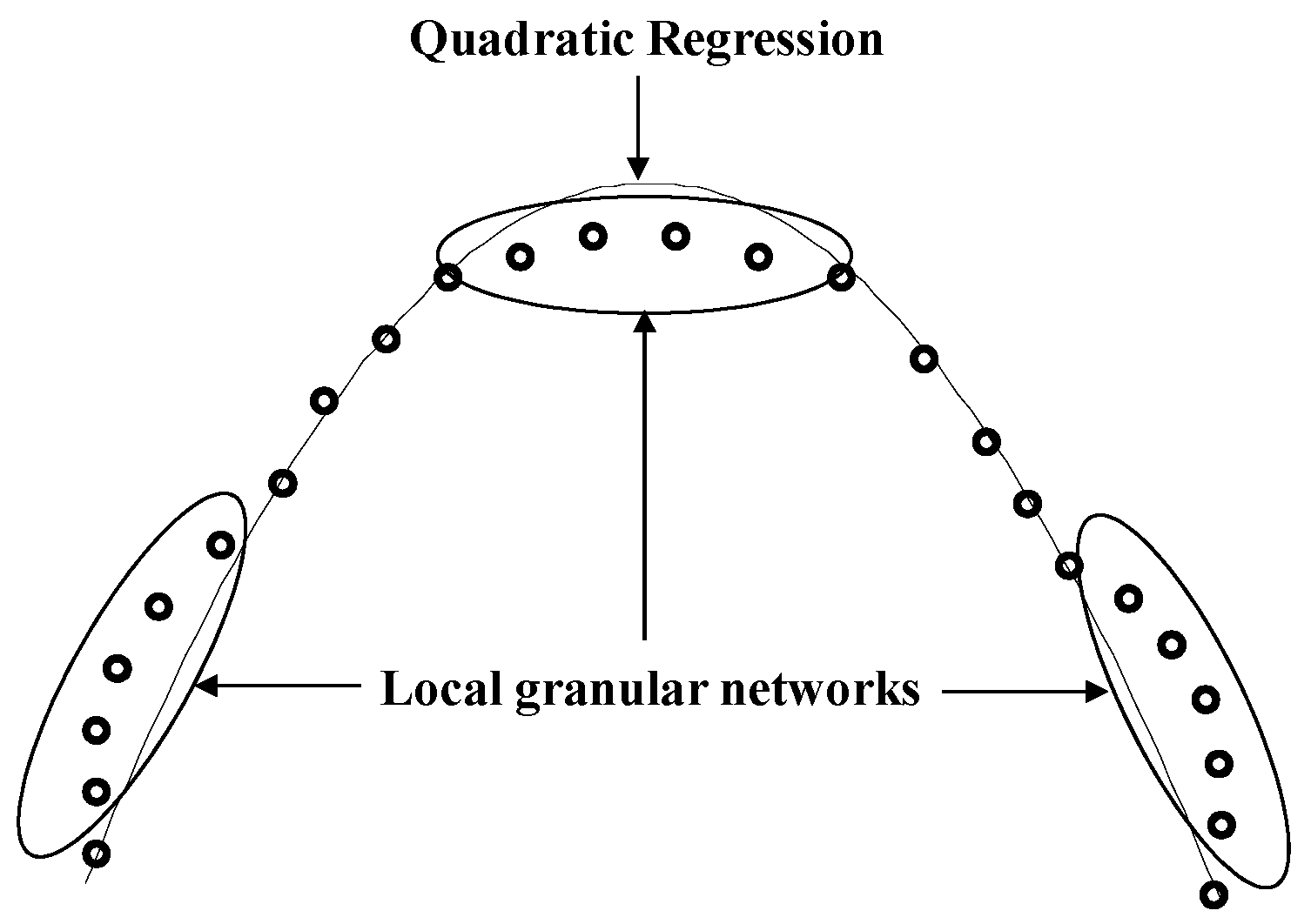

3. Improved Incremental Models Using Local Granular Networks

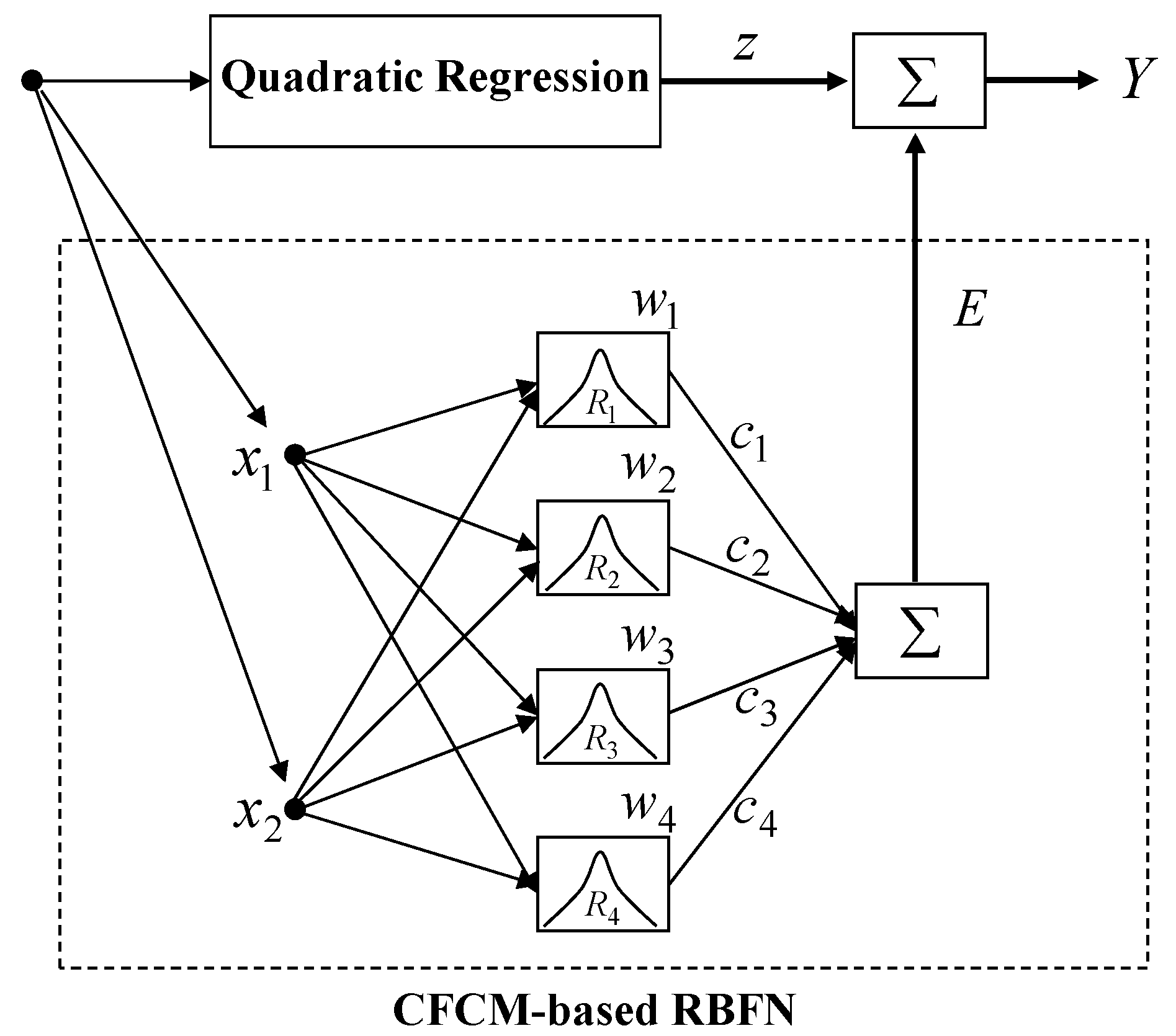

3.1. Incremental RBFN

- Randomly divide the data into training and testing sets.

- Normalize the input data between zero and one.

- Design QR as the global model and obtain the modeling error.

- Set the number of contexts and clusters.

- Generate the contexts in the error space.

- Design the local RBFN using CFCM clustering to compensate for the error.

- Estimate the output-layer weights in one pass using the LSE method, or use the BP algorithm to adjust the initial weights and the centers estimated by CFCM.

- Obtain the output of the local RBFN.
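The procedure above can be sketched end to end as follows, using the one-pass LSE option for the output weights. Several parts are assumptions made for illustration: QR is taken to mean a second-order polynomial regression, the RBF units are Gaussian with a shared width `sigma`, and the centers are simply sampled from the training inputs as a stand-in for the CFCM-estimated centers. The data, the function names, and the values of 9 centers and `sigma=0.3` are hypothetical. The BP variant is not shown.

```python
import numpy as np

def quadratic_features(X):
    """Bias, linear, and all second-order terms x_i * x_j with i <= j."""
    n, d = X.shape
    cols = [np.ones(n)] + [X[:, i] for i in range(d)]
    cols += [X[:, i] * X[:, j] for i in range(d) for j in range(i, d)]
    return np.column_stack(cols)

def rbf_design(X, centers, sigma):
    """Gaussian activation of every sample for every center, shape (N, c)."""
    d2 = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    return np.exp(-d2 / (2.0 * sigma ** 2))

def rmse(a, b):
    return float(np.sqrt(np.mean((a - b) ** 2)))

# Synthetic data, for illustration only
rng = np.random.default_rng(0)
X = rng.uniform(-3.0, 3.0, size=(300, 2))
y = np.sin(X[:, 0]) * X[:, 1] + 0.5 * X[:, 0] ** 2

# Random split into training and testing indices
idx = rng.permutation(len(X))
trn, chk = idx[:180], idx[180:]

# Normalize inputs to [0, 1] using training-set minima and maxima
# (testing inputs may therefore fall slightly outside that range)
lo, hi = X[trn].min(axis=0), X[trn].max(axis=0)
Xn = (X - lo) / (hi - lo)

# Global model: quadratic regression fitted on the training set
Q = quadratic_features(Xn)
beta, *_ = np.linalg.lstsq(Q[trn], y[trn], rcond=None)
err = y - Q @ beta                      # modeling error of the global model

# Local RBFN fitted to the error; centers are a stand-in for CFCM centers
centers = Xn[rng.choice(trn, size=9, replace=False)]
Phi = rbf_design(Xn, centers, sigma=0.3)
w, *_ = np.linalg.lstsq(Phi[trn], err[trn], rcond=None)   # one-pass LSE

# Final output: global prediction plus local error compensation
y_hat = Q @ beta + Phi @ w

rmse_qr_trn = rmse(y[trn], Q[trn] @ beta)
rmse_trn = rmse(y[trn], y_hat[trn])
rmse_chk = rmse(y[chk], y_hat[chk])
```

Because the output weights are a least-squares fit to the training error, the combined model cannot have a higher training RMSE than the global model alone; the testing RMSE carries no such guarantee and is what indicates whether the compensation generalizes.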

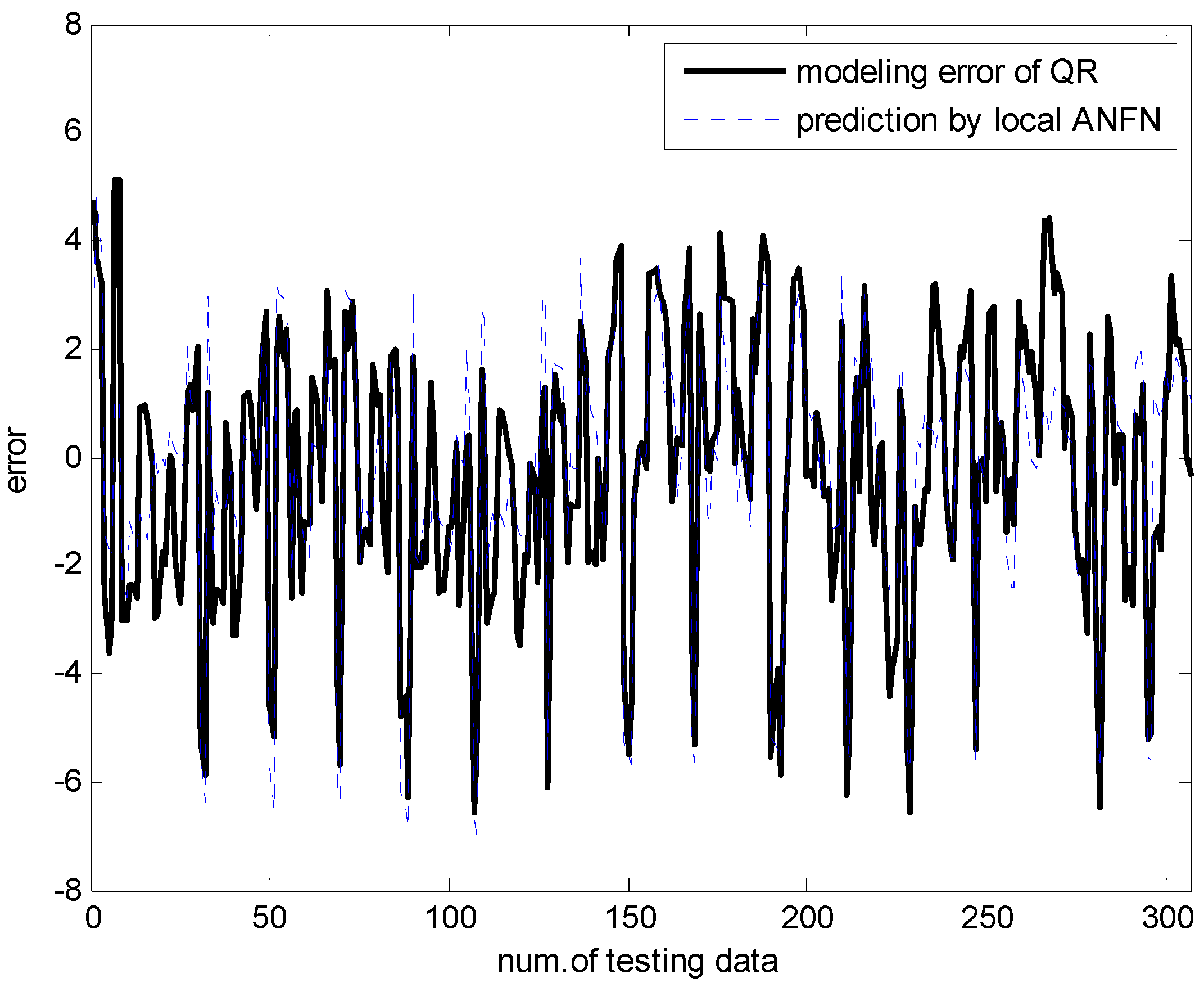

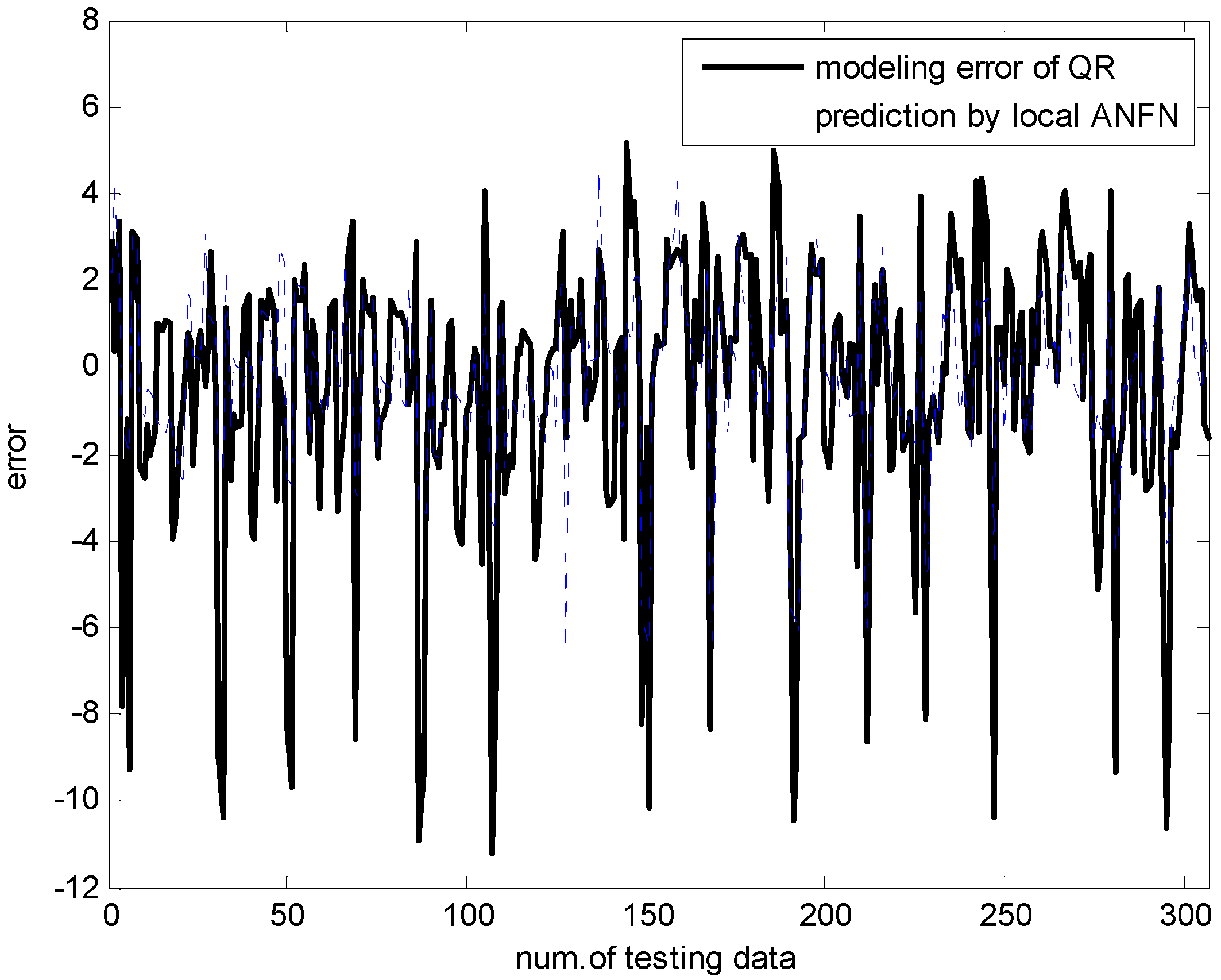

3.2. Incremental ANFN

4. Experimental Results

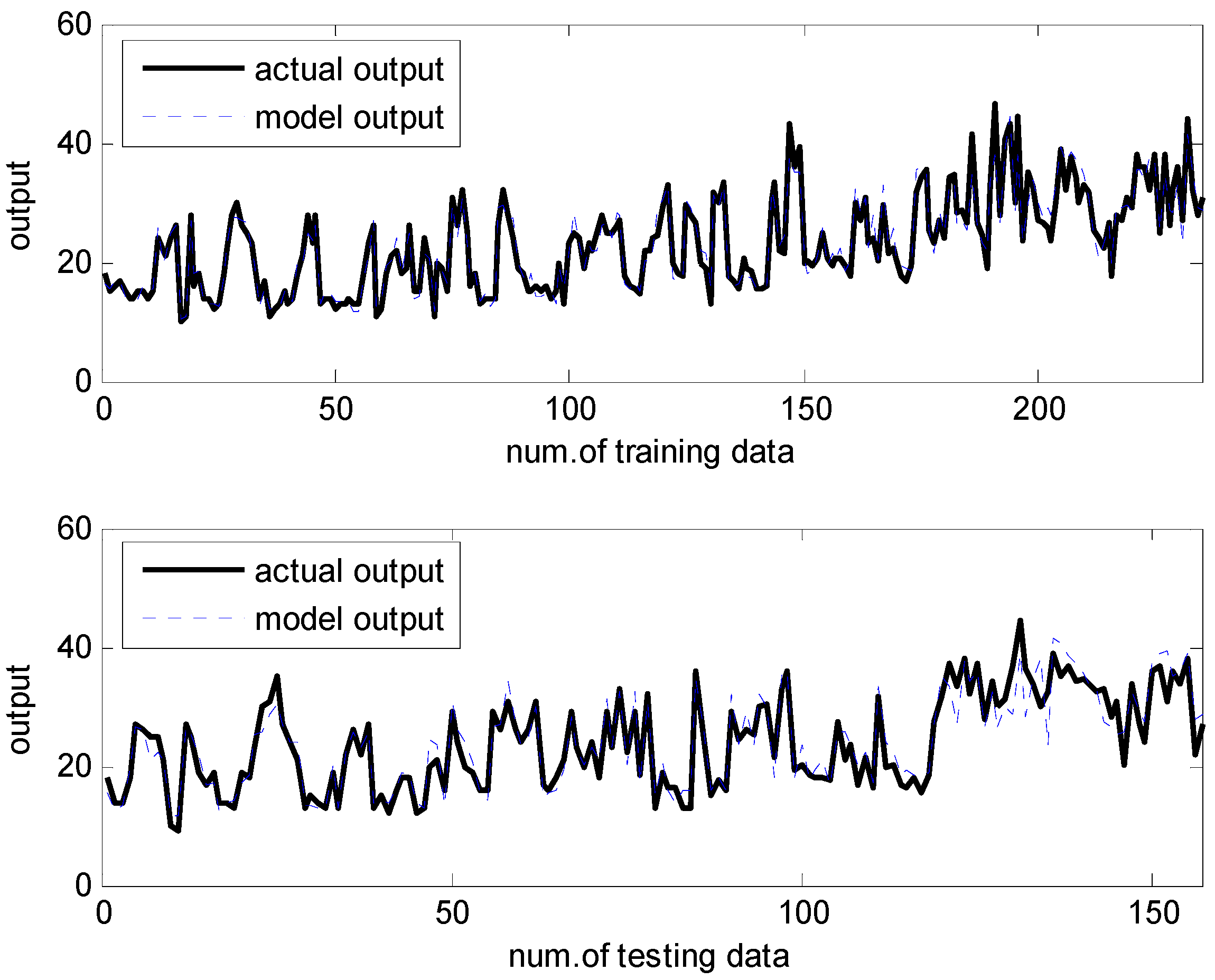

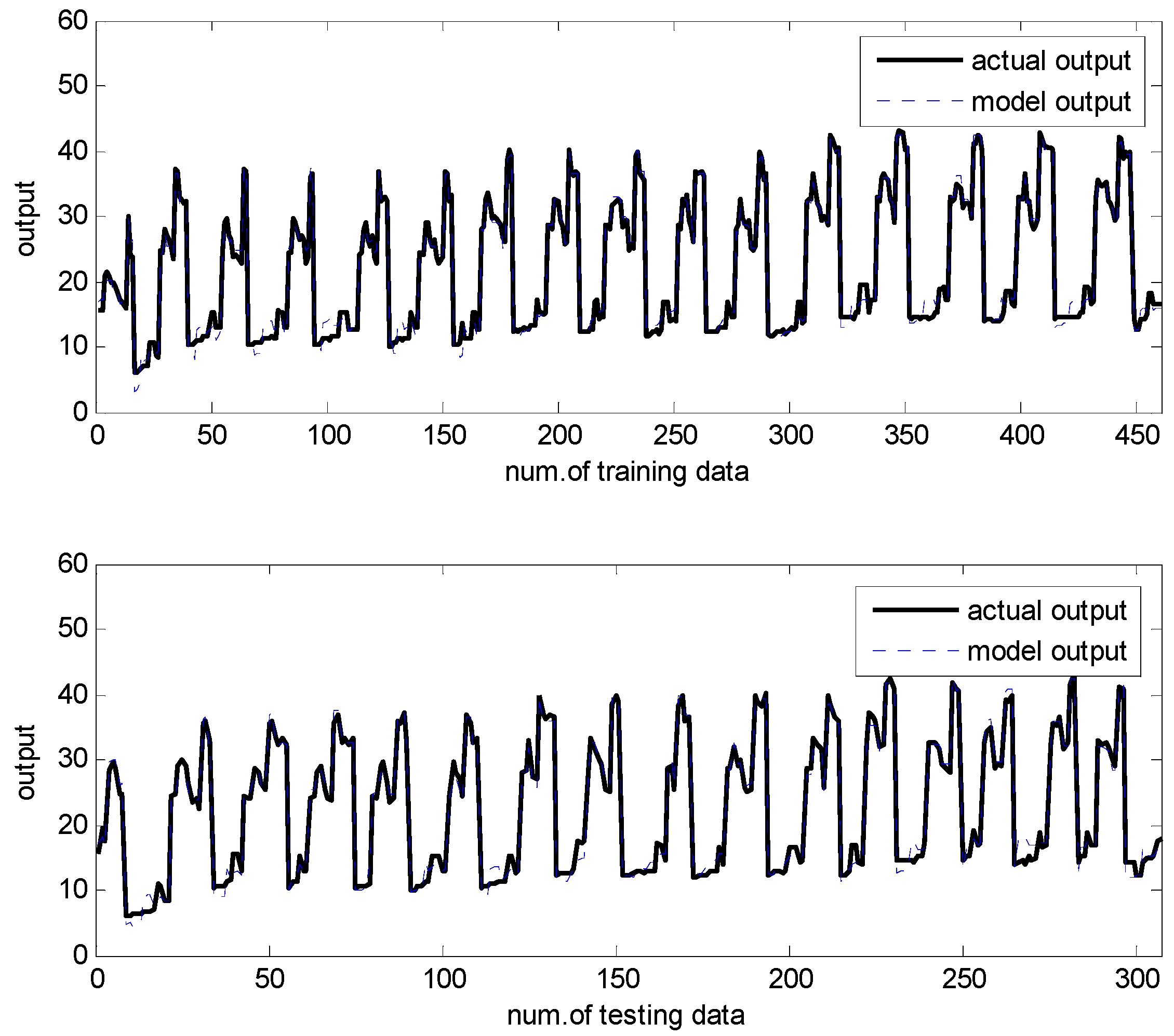

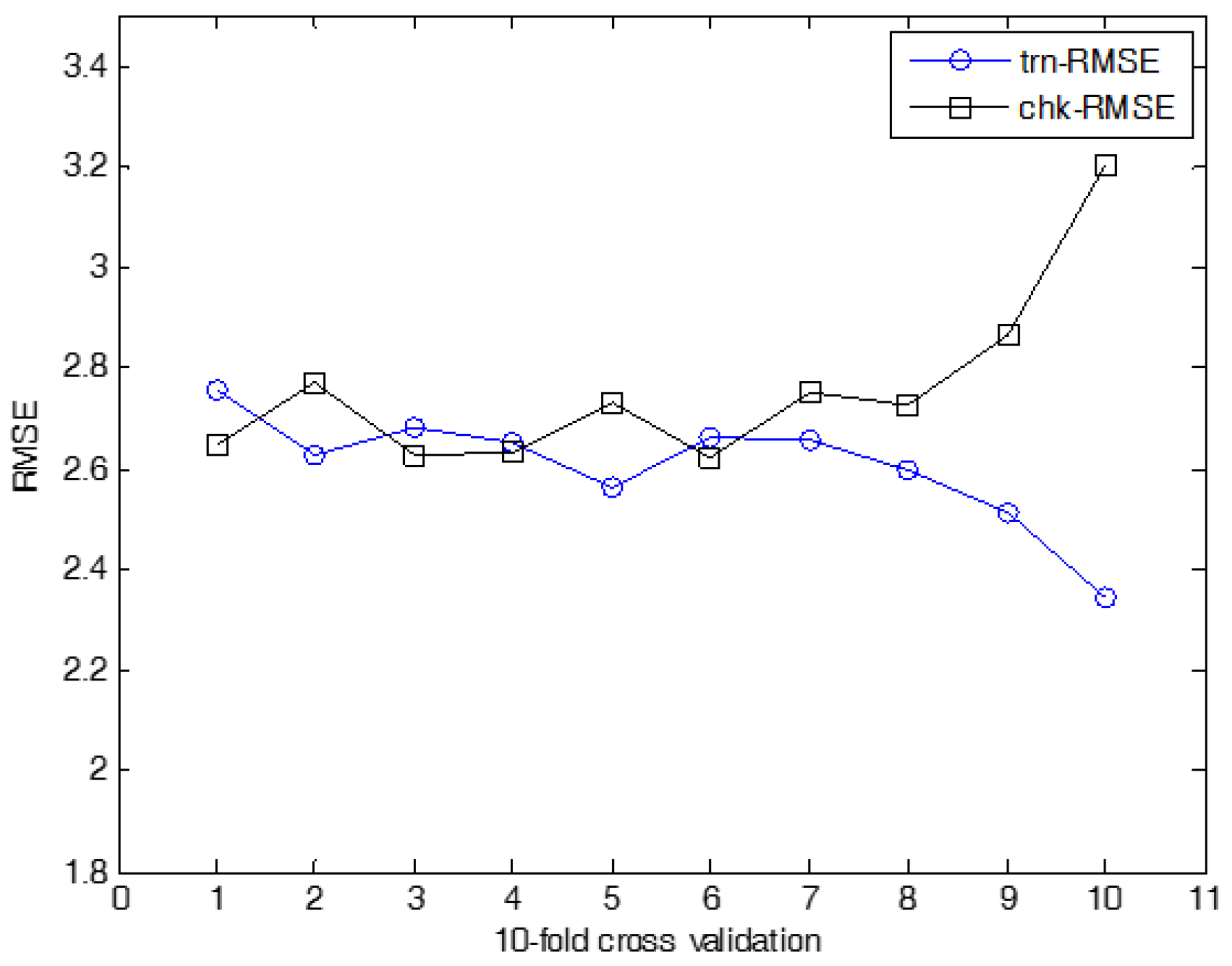

4.1. Automobile MPG Dataset

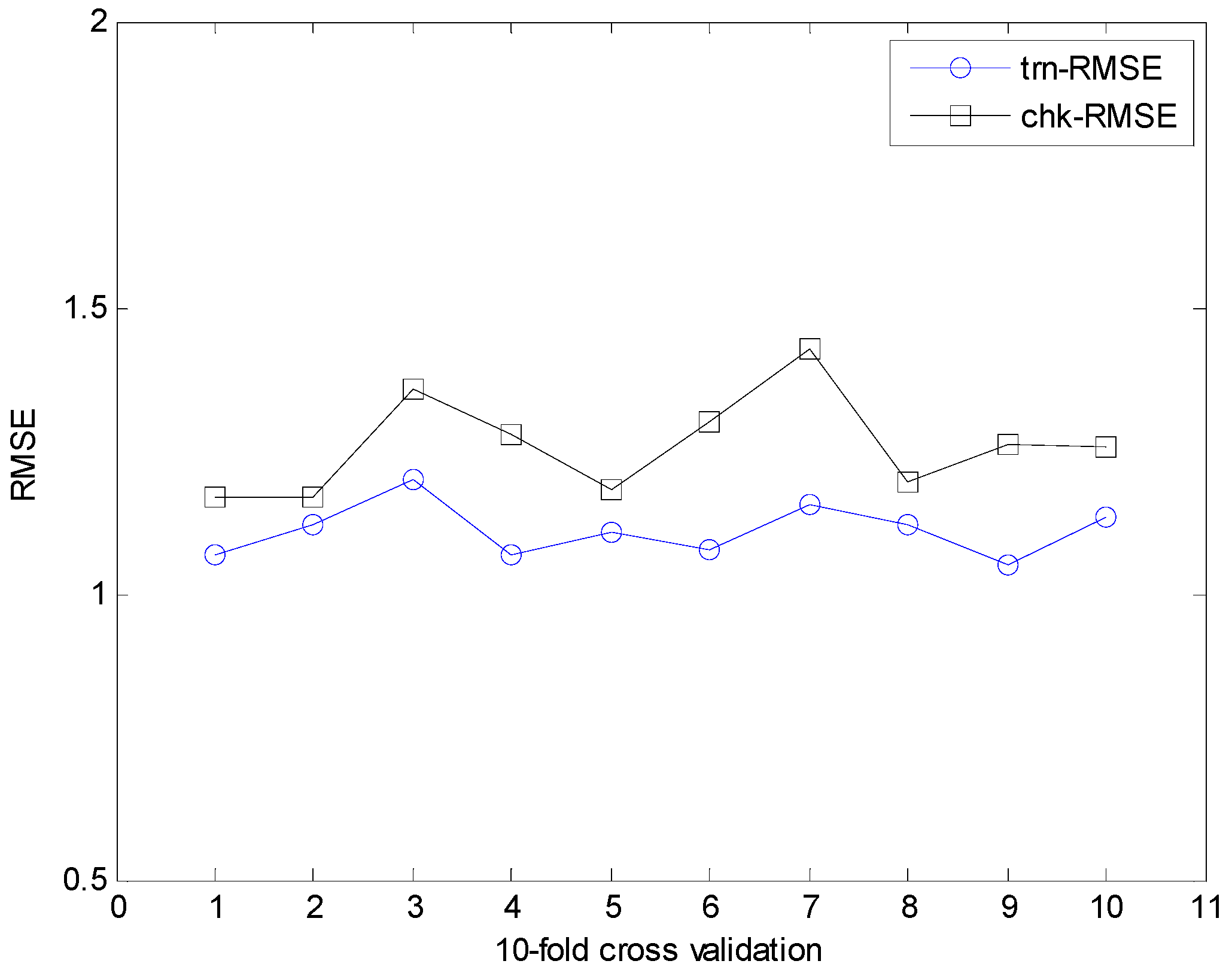

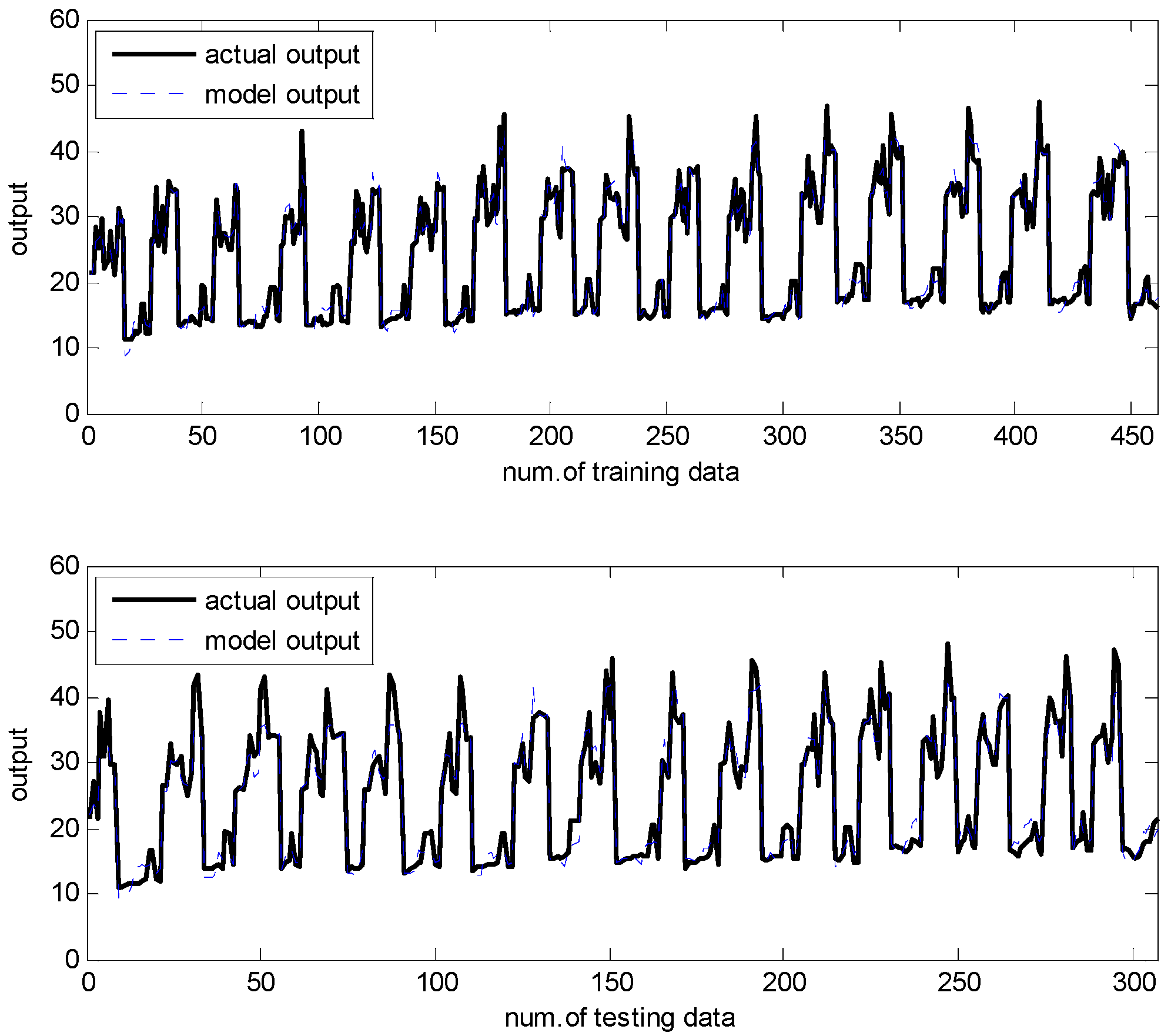

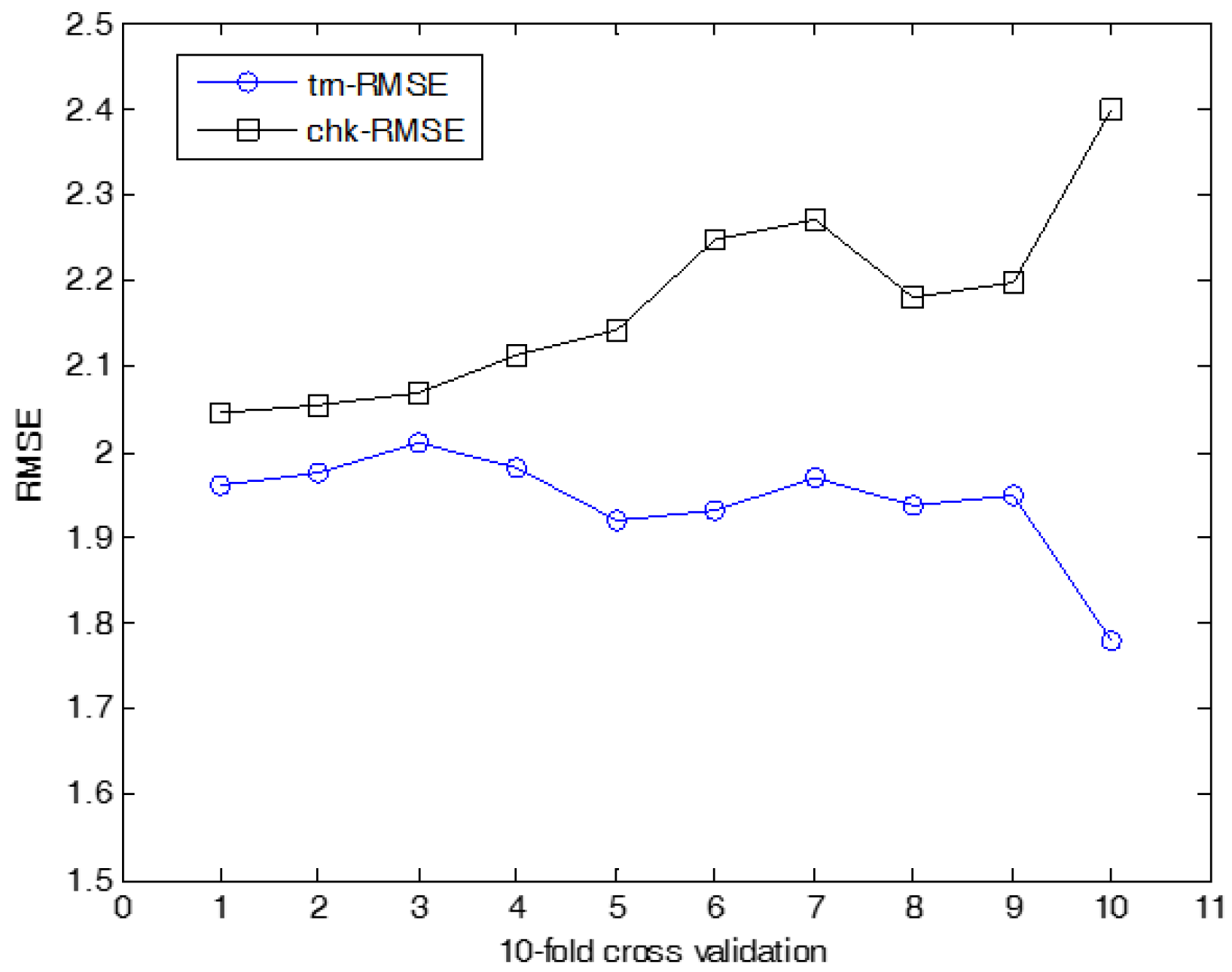

4.2. Energy Efficiency Data

4.3. Boston Housing Data and Computer Hardware Datasets

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Jang, J.S.R.; Sun, C.T.; Mizutani, E. Neuro-Fuzzy and Soft Computing: A Computational Approach to Learning and Machine Intelligence; Prentice Hall: New Delhi, India, 1997.

2. Pedrycz, W.; Gomide, F. Fuzzy Systems Engineering: Toward Human-Centric Computing; IEEE Press: New York, NY, USA, 2007.

3. Bezdek, J.C. Pattern Recognition with Fuzzy Objective Function Algorithms; Plenum Press: New York, NY, USA, 1981.

4. Chiu, S.L. Fuzzy model identification based on cluster estimation. J. Intell. Fuzzy Syst. 1994, 2, 267–278.

5. Gustafson, D.E.; Kessel, W.C. Fuzzy clustering with a fuzzy covariance matrix. In Proceedings of the Decision and Control Including 17th Symposium on Adaptive Processes, San Diego, CA, USA, 10–12 January 1978; pp. 761–766.

6. Abonyi, J.; Babuska, R.; Szeifert, F. Modified Gath-Geva fuzzy clustering for identification of Takagi-Sugeno fuzzy models. IEEE Trans. Syst. Man Cybern. Part B 2002, 32, 612–621.

7. Wang, C.; Yu, J.; Yang, M.S. Analysis of parameter selection for Gustafson-Kessel fuzzy clustering using Jacobian matrix. IEEE Trans. Fuzzy Syst. 2015, 23, 2329–2342.

8. Lin, K.P. A novel evolutionary kernel intuitionistic fuzzy c-means clustering algorithm. IEEE Trans. Fuzzy Syst. 2014, 22, 1074–1087.

9. Pedrycz, W.; Izakian, H. Cluster-centric fuzzy modeling. IEEE Trans. Fuzzy Syst. 2014, 22, 1585–1597.

10. Pedrycz, W. Conditional fuzzy C-Means. Pattern Recognit. Lett. 1996, 17, 625–632.

11. Pedrycz, W. Conditional fuzzy clustering in the design of radial basis function neural networks. IEEE Trans. Neural Netw. 1998, 9, 601–612.

12. Pedrycz, W.; Vasilakos, A.V. Linguistic models and linguistic modeling. IEEE Trans. Syst. Man Cybern. 1999, 29, 745–757.

13. Pedrycz, W.; Kwak, K.C. Linguistic models as a framework of user-centric system modeling. IEEE Trans. Syst. Man Cybern. Part A 2006, 36, 727–745.

14. Pedrycz, W.; Kwak, K.C. The development of incremental models. IEEE Trans. Fuzzy Syst. 2007, 15, 507–518.

15. Reyes-Galaviz, O.F.; Pedrycz, W. Granular fuzzy models: Analysis, design, and evaluation. Int. J. Approx. Reason. 2015, 64, 1–19.

16. Li, J.; Pedrycz, W.; Wang, X. A rule-based development of incremental models. Int. J. Approx. Reason. 2015, 64, 20–38.

17. Mamdani, E.H.; Assilian, S. An experiment in linguistic synthesis with a fuzzy logic controller. Int. J. Man Mach. Stud. 1975, 7, 1–13.

18. Kwak, K.C.; Kim, S.S. Development of quantum-based adaptive neuro-fuzzy networks. IEEE Trans. Syst. Man Cybern. Part B 2010, 40, 91–100.

19. Kwak, K.C. A design of incremental granular model using context-based interval type-2 fuzzy c-means clustering algorithm. IEICE Trans. Inf. Syst. 2016, E99-D, 309–312.

20. UCI Machine Learning Repository. Available online: https://archive.ics.uci.edu/ml/datasets (accessed on 2 November 2017).

21. Lee, M.W.; Kwak, K.C.; Pedrycz, W. An expansion of local granular models in the design of incremental model. In Proceedings of the 2016 IEEE International Conference on Fuzzy Systems (FUZZ-IEEE), Vancouver, BC, Canada, 24–29 July 2016; pp. 1664–1670.

22. Lee, M.W.; Kwak, K.C. An incremental radial basis function networks based on information granules and its application. Comput. Intell. Neurosci. 2016, 2016, 3207627.

23. Tsanas, A.; Xifara, A. Accurate quantitative estimation of energy performance of residential buildings using statistical machine learning tools. Energy Build. 2012, 49, 560–567.

| Methods | Learning | No. of Rules | trn-RMSE | chk-RMSE |
|---|---|---|---|---|
| LR | | - | 3.38 | 3.47 |
| LM [12] | | 36 | 2.80 | 3.32 |
| RBFN (CFCM) [11] | | 36 | 2.34 | 3.18 |
| IM [14] | | 36 | 2.41 | 3.10 |
| IRBFN (LR) [21] | LSE | 36 | 2.03 | 3.04 |
| IRBFN (LR) [21] | BP | 36 | 2.62 | 2.92 |
| IANFN (LR) [21] | | 8 | 2.10 | 2.74 |
| IIM: Proposed IRBFN | LSE | 9 | 1.74 | 2.63 |
| IIM: Proposed IRBFN | BP | 9 | 2.36 | 2.87 |
| IIM: Proposed IANFN | | 8 | 2.05 | 2.46 |
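The trn-RMSE and chk-RMSE columns report root-mean-square error, presumably on the training data and on the held-out checking data respectively. The text here does not restate the formula, so the following assumes the standard definition, with $N$ samples, target outputs $y_k$, and model outputs $\hat{y}_k$ (symbols chosen for illustration):

```latex
\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{k=1}^{N}\left(y_k - \hat{y}_k\right)^{2}}
```

RMSE is expressed in the same units as the output variable, so values are comparable across methods within one table but not across tables built on different datasets.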

| Methods | Learning | No. of Rules | trn-RMSE | chk-RMSE |
|---|---|---|---|---|
| LR | | - | 2.94 | 2.91 |
| LM [12] | | 36 | 3.70 | 4.02 |
| RBFN (CFCM) [11] | | 36 | 2.77 | 3.11 |
| IM [14] | | 36 | 2.46 | 2.80 |
| IRBFN (LR) [21] | LSE | 36 | 2.28 | 2.83 |
| IRBFN (LR) [21] | BP | 36 | 2.35 | 2.73 |
| IANFN (LR) [21] | | 8 | 1.05 | 1.32 |
| IIM: Proposed IRBFN | LSE | 9 | 2.26 | 2.38 |
| IIM: Proposed IRBFN | BP | 9 | 2.12 | 2.19 |
| IIM: Proposed IANFN | | 8 | 1.11 | 1.26 |

| Methods | Learning | No. of Rules | trn-RMSE | chk-RMSE |
|---|---|---|---|---|
| LR | | - | 3.18 | 3.21 |
| LM [12] | | 36 | 3.87 | 4.30 |
| RBFN (CFCM) [11] | | 36 | 2.87 | 3.39 |
| IM [14] | | 36 | 2.66 | 3.10 |
| IRBFN (LR) [21] | LSE | 36 | 2.46 | 3.10 |
| IRBFN (LR) [21] | BP | 36 | 2.56 | 3.09 |
| IANFN (LR) [21] | | 8 | 1.93 | 2.38 |
| IIM: Proposed IRBFN | LSE | 9 | 2.61 | 2.76 |
| IIM: Proposed IRBFN | BP | 9 | 2.46 | 2.64 |
| IIM: Proposed IANFN | | 8 | 1.858 | 2.153 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Yeom, C.-U.; Kwak, K.-C. The Development of Improved Incremental Models Using Local Granular Networks with Error Compensation. Symmetry 2017, 9, 266. https://doi.org/10.3390/sym9110266
