Face Liveness Detection Based on Skin Blood Flow Analysis

Abstract

1. Introduction

2. Related Work

- Motion-Based Methods: Motion-based methods aim to detect the natural responses of live faces, such as eye blinking [10,11], head rotation [12], and mouth movements [13]. Although these methods can successfully detect printed photo attacks, they are ineffective against replayed video attacks, which also present natural responses. Furthermore, because facial motions are limited by the human physiological rhythm, these methods require multiple frames (usually >3 s of video) to estimate them [14].

- Image Quality Analysis-Based Methods: Image quality analysis-based methods [15,16] capture the image quality differences between live and spoofing face images. Spoofing mediums (e.g., paper and screens) usually degrade the quality of spoofing face images, and printed photos and replayed videos displayed on a monitor can be detected using color space analysis [17]. These methods therefore extract chromatic moment features to distinguish a live face image from a spoofing face image. They usually assess image quality over the whole image and are highly generalizable. However, image quality might be more discriminative in small, local areas of face images.

- Texture-Based Methods: Texture-based methods [9,18] assume that different spoofing mediums produce distinct surface reflections and shape deformations, which lead to texture differences between live and spoofing face images. These methods detect face spoofing by extracting texture features from a single face image and can thus provide a quick response. However, the texture features may generalize poorly to various facial expressions, poses, and spoofing schemes when the training data are collected from few subjects and under limited conditions. Therefore, combining texture features and image quality features may improve the performance of face spoof detection.

- Depth-Based Methods: Depth-based methods [12,19] estimate the depth information of a face to discriminate a live 3D face from a spoofing face presented on 2D planar media. The defocusing technique [20], near-infrared sensors [21], and light field cameras [22] are representative examples of these methods. Depth features can be used to effectively detect printed photo and video replay attacks. On the other hand, few studies have developed analysis methods that estimate the 3D depth information of a face. One optical flow field-based approach analyzes the difference in the optical flow field between a planar object and a 3D face [12]. Another study exploits geometric invariants computed from a set of facial landmarks to detect replay attacks [19]. However, to estimate the depth information, these methods generally require multiple frames or a depth-measuring device, which might increase the cost of the system.

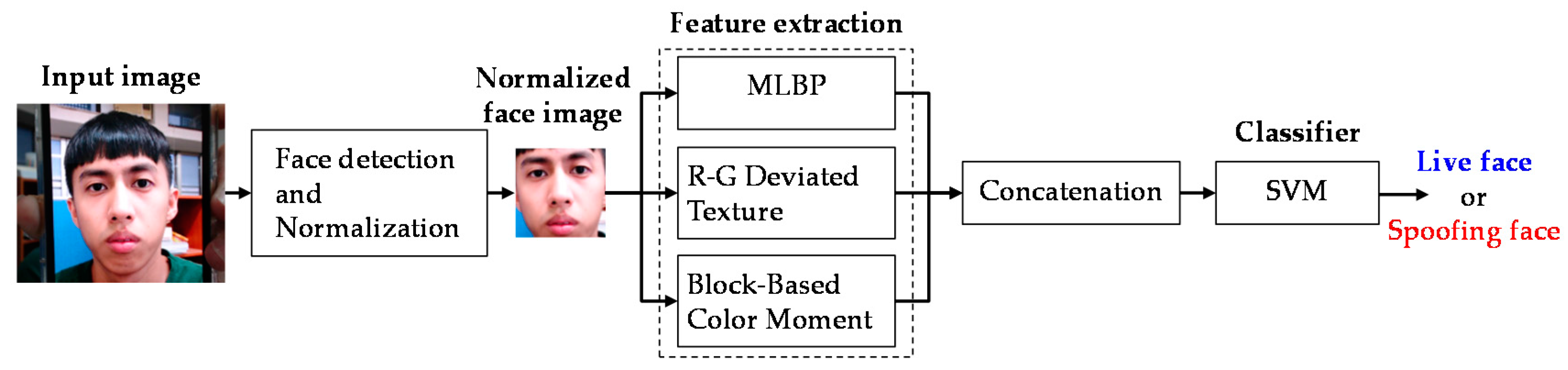

- The first feature highlights the distinct properties in red and green channels between live and spoofing face images. This feature can reveal skin blood flow differences between live and spoofing face images. This skin-related texture feature is extracted by the local binary pattern (LBP) operator in red and green channels and can detect both shape and color distortion. In other words, it combines the advantages of texture- and image quality analysis-based methods.

- The second feature is a block-based color moment that estimates the color distribution in local regions of face images. Because it is computed per block, this feature preserves the local color distribution and provides more spatial information than a color moment determined from the whole image. This local information helps discriminate between live and spoofing face images.
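The two features described above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the basic 3×3 LBP operator, the 2×2 block grid, the three moments (mean, standard deviation, cube root of the third central moment), and all function names are assumptions chosen for illustration, and the sketch omits the multi-scale and red–green deviation steps named in Section 3.

```python
import math
from typing import List, Sequence

# One color channel as rows of 0-255 intensities (hypothetical representation).
Channel = Sequence[Sequence[int]]

# Offsets of the 8 neighbours in a 3x3 window, clockwise from top-left.
_NEIGHBOURS = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_histogram(channel: Channel) -> List[float]:
    """Normalized 256-bin histogram of basic 3x3 LBP codes for one channel."""
    h, w = len(channel), len(channel[0])
    hist = [0] * 256
    for y in range(1, h - 1):          # border pixels are skipped
        for x in range(1, w - 1):
            center = channel[y][x]
            code = 0
            for bit, (dy, dx) in enumerate(_NEIGHBOURS):
                if channel[y + dy][x + dx] >= center:
                    code |= 1 << bit
            hist[code] += 1
    total = sum(hist) or 1             # avoid division by zero on tiny inputs
    return [count / total for count in hist]


def color_moments(values: Sequence[float]) -> List[float]:
    """Mean, standard deviation, and cube root of the third central moment."""
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / n
    third = sum((v - mean) ** 3 for v in values) / n
    skew = math.copysign(abs(third) ** (1 / 3), third)
    return [mean, math.sqrt(var), skew]


def block_color_moments(channel: Channel, grid: int = 2) -> List[float]:
    """Color moments of each cell in a grid x grid partition of the channel.

    Assumes the channel has at least `grid` rows and `grid` columns.
    """
    h, w = len(channel), len(channel[0])
    feats: List[float] = []
    for by in range(grid):
        for bx in range(grid):
            rows = range(by * h // grid, (by + 1) * h // grid)
            cols = range(bx * w // grid, (bx + 1) * w // grid)
            feats.extend(color_moments([channel[y][x] for y in rows for x in cols]))
    return feats


def liveness_features(red: Channel, green: Channel, grid: int = 2) -> List[float]:
    """Concatenate texture and block color features from the red and green channels."""
    return (lbp_histogram(red) + lbp_histogram(green)
            + block_color_moments(red, grid) + block_color_moments(green, grid))
```

With a 2×2 grid, `liveness_features` returns 2 × 256 histogram bins plus 2 × 4 × 3 block moments; such a vector could then be passed to a classifier such as the linear SVM listed in the comparison table.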

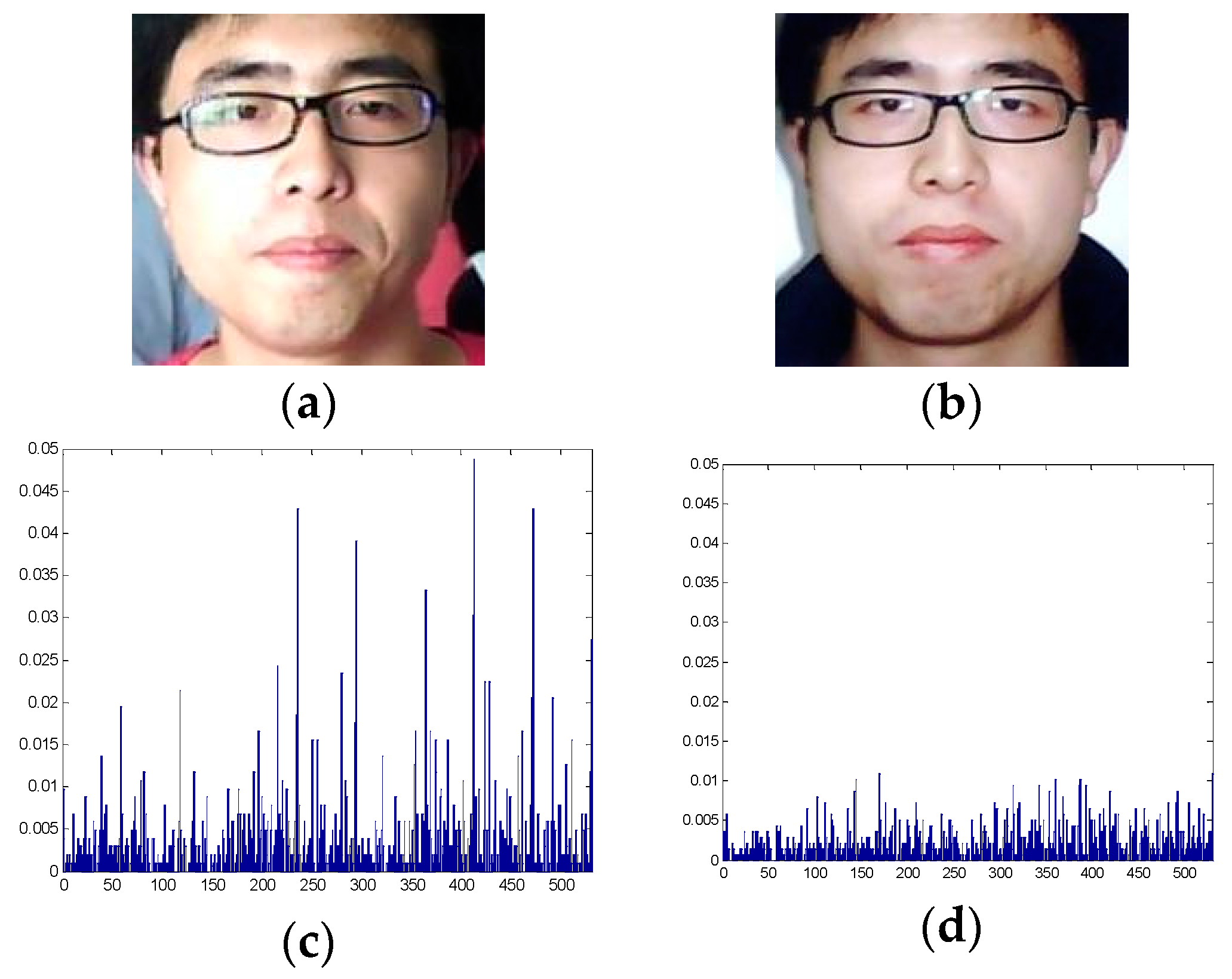

3. Face Liveness Detection

3.1. Multi-Scale Local Binary Pattern

3.2. Red–Green Deviated Texture

3.3. Block-Based Color Moment

4. Empirical Work

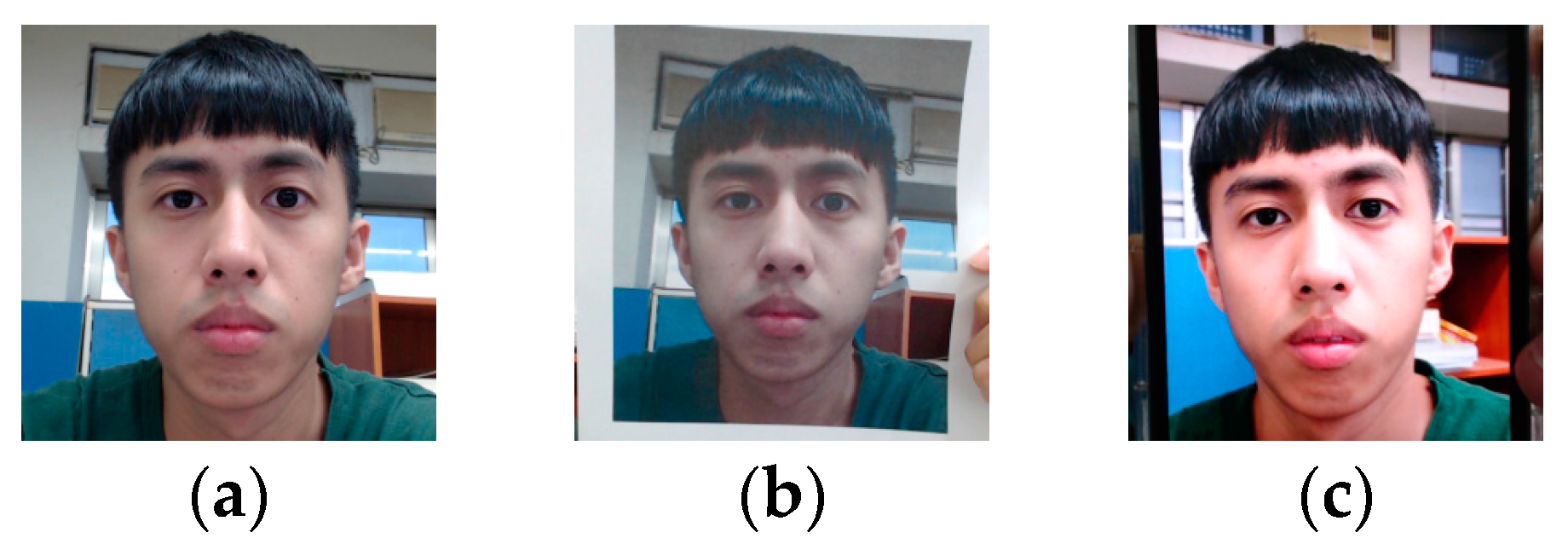

4.1. Face Spoofing Database

4.1.1. NUAA Photograph Imposter Database

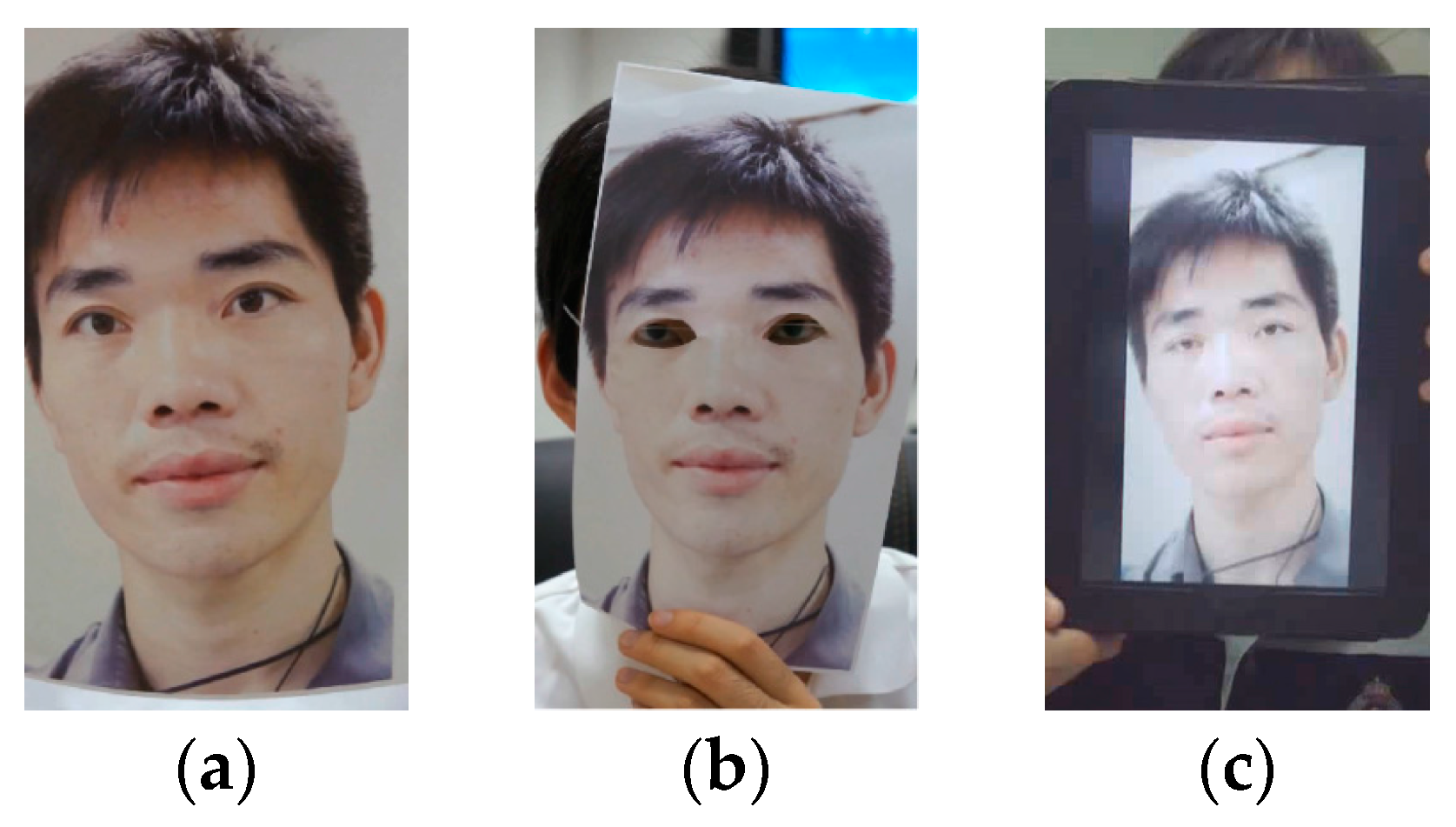

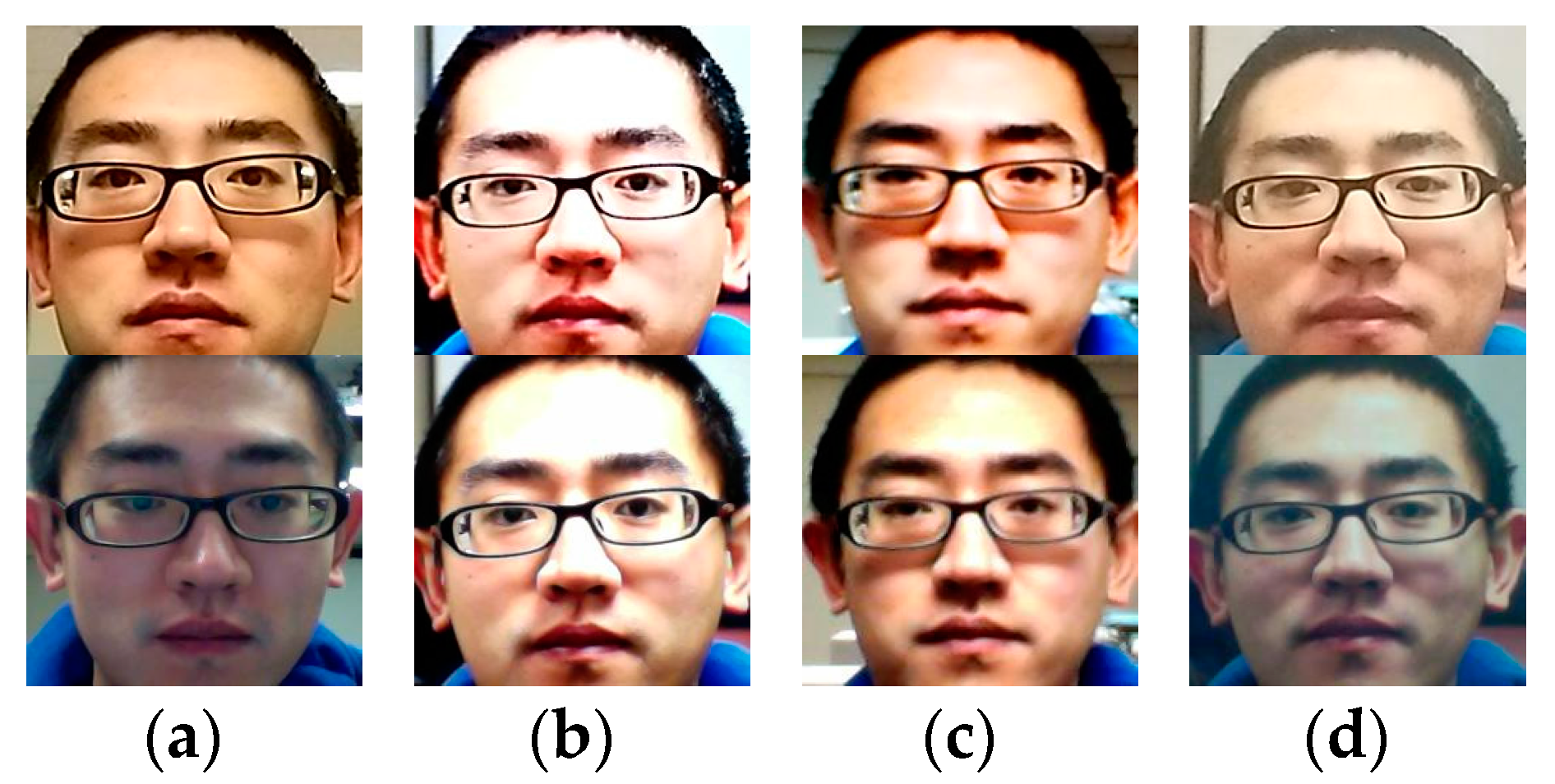

4.1.2. CASIA Face Anti-Spoofing Database

4.1.3. Idiap Replay-Attack

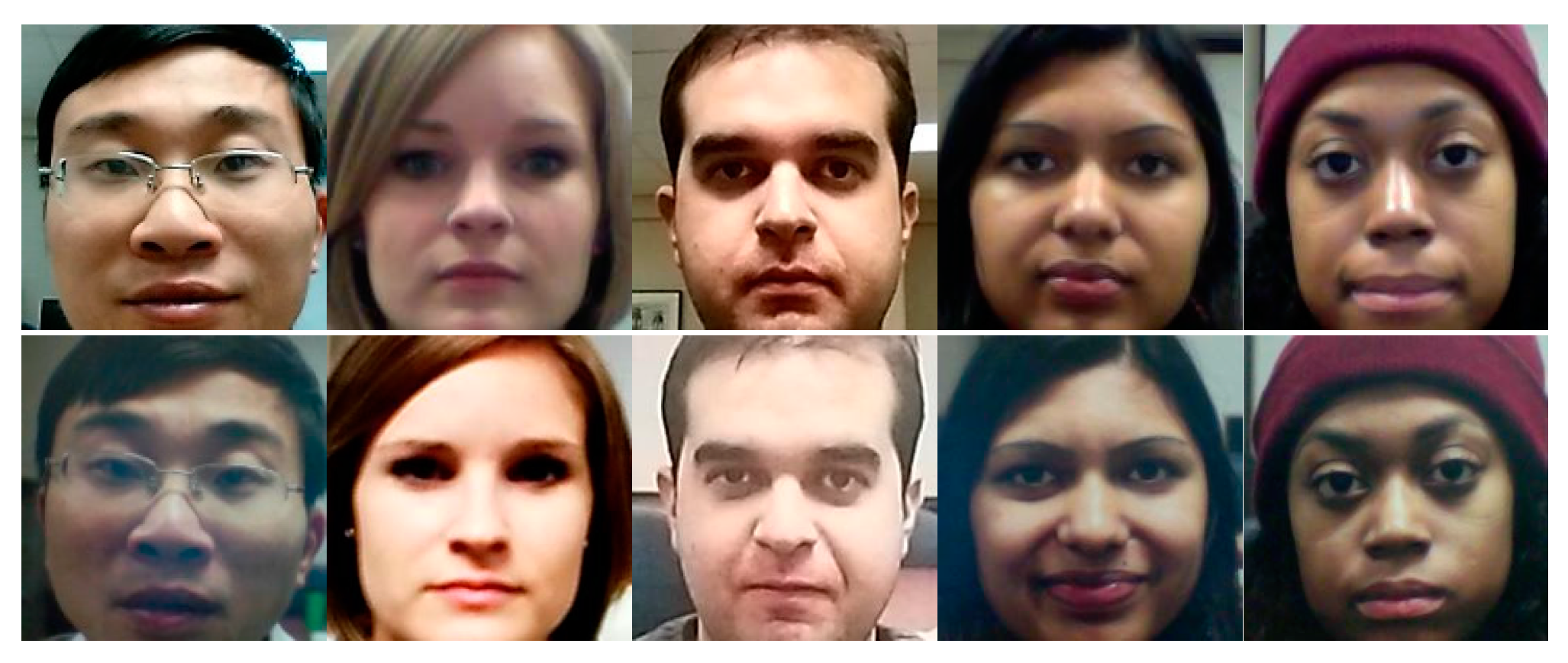

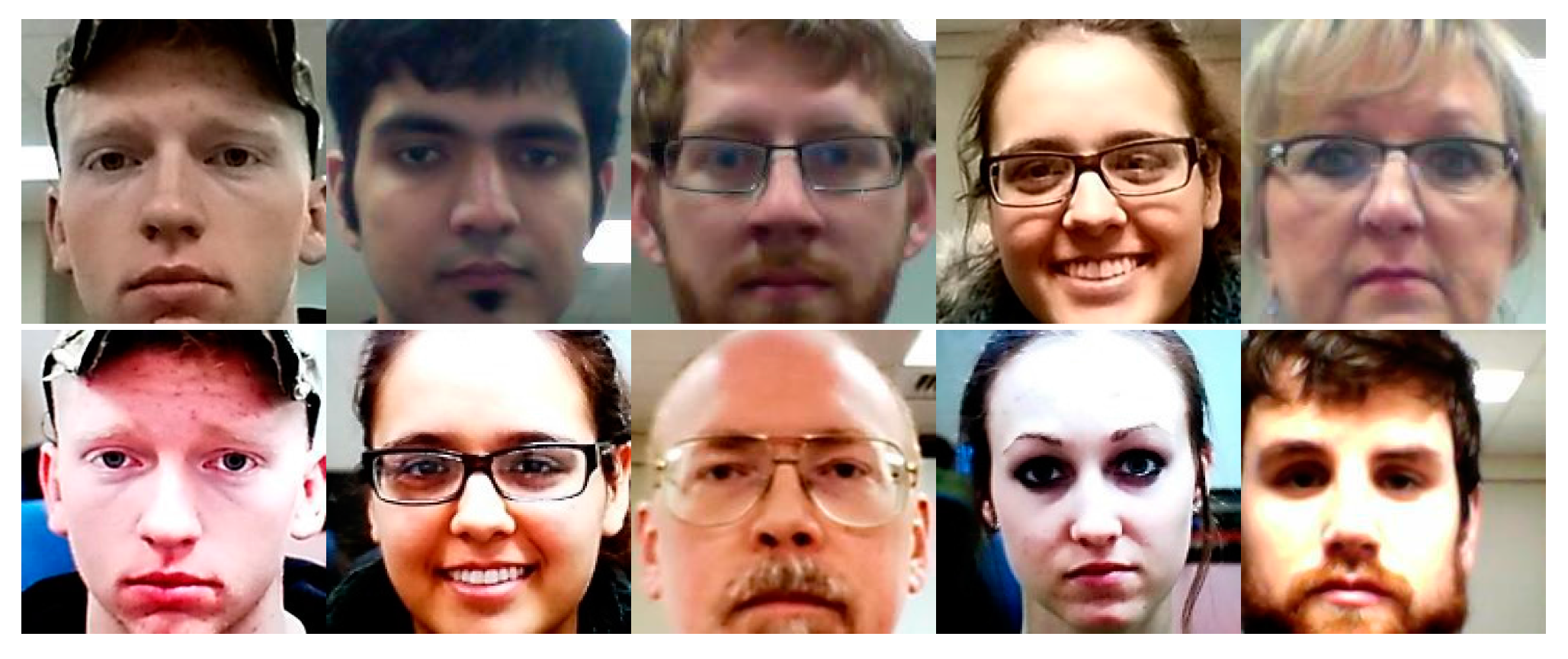

4.1.4. MSU Mobile Face Spoofing Database

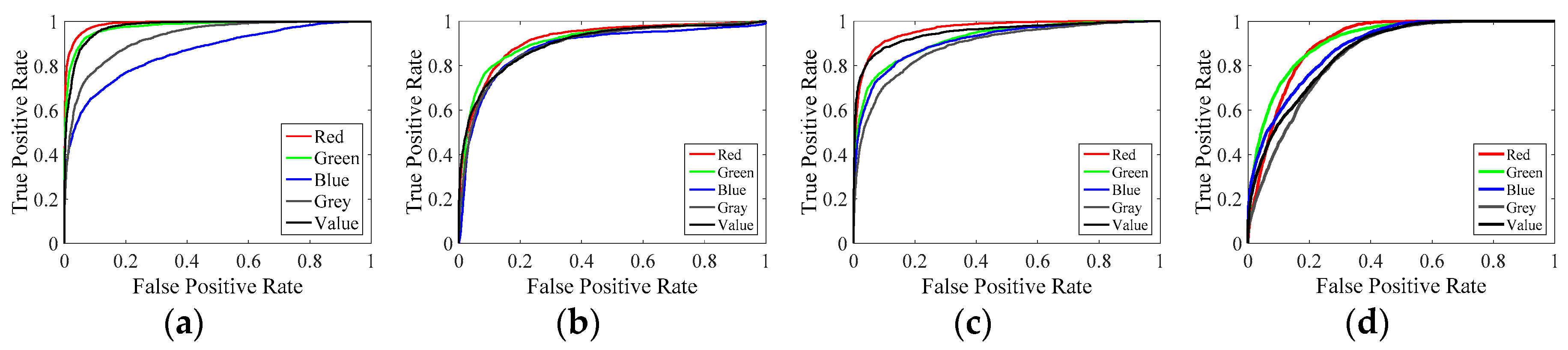

4.2. Effects of Different Color Channels

4.3. Effects of Different Features

5. Performance Evaluation

5.1. Performance Index

5.2. Comparison with Other Methods in NUAA Database

5.3. Comparison with Other Methods in CASIA Database

5.4. Comparison with Other Methods in Idiap Database

5.5. Comparison with Other Methods in MSU Database

5.6. Computational Complexity Analysis

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Jain, A.K.; Prabhakar, S.; Hong, L.; Pankanti, S. Filterbank-based fingerprint matching. IEEE Trans. Image Process. 2000, 9, 846–859.

- Ramachandra, R.; Busch, C. Presentation attack detection methods for face recognition systems: A comprehensive survey. ACM Comput. Surv. (CSUR) 2017, 50, 8.

- Soldera, J.; Schu, G.; Schardosim, L.R.; Beltrao, E.T. Facial biometrics and applications. IEEE Instrum. Meas. Mag. 2017, 20, 4–10.

- Arashloo, S.R.; Kittler, J.; Christmas, W. An Anomaly Detection Approach to Face Spoofing Detection: A New Formulation and Evaluation Protocol. IEEE Access 2017, 5, 13868–13882.

- Vezzetti, E.; Marcolin, F.; Tornincasa, S.; Ulrich, L.; Dagnes, N. 3D geometry-based automatic landmark localization in presence of facial occlusions. Multimed. Tools Appl. 2017, 1–29.

- Marcolin, F.; Vezzetti, E. Novel descriptors for geometrical 3D face analysis. Multimed. Tools Appl. 2017, 76, 13805–13834.

- Huang, K.-K.; Dai, D.-Q.; Ren, C.-X.; Yu, Y.-F.; Lai, Z.-R. Fusing landmark-based features at kernel level for face recognition. Pattern Recognit. 2017, 63, 406–415.

- Smith, D.F.; Wiliem, A.; Lovell, B.C. Face recognition on consumer devices: Reflections on replay attacks. IEEE Trans. Inf. Forensics Secur. 2015, 10, 736–745.

- Chingovska, I.; Anjos, A.; Marcel, S. On the Effectiveness of Local Binary Patterns in Face Anti-Spoofing. In Proceedings of the 2012 BIOSIG International Conference of the Biometrics Special Interest Group (BIOSIG), Darmstadt, Germany, 6–7 September 2012; pp. 1–7.

- Pan, G.; Sun, L.; Wu, Z.; Lao, S. Eyeblink-Based Anti-Spoofing in Face Recognition from a Generic Webcamera. In Proceedings of the IEEE 11th International Conference on Computer Vision (ICCV 2007), Rio de Janeiro, Brazil, 14–21 October 2007; pp. 1–8.

- Sun, L.; Pan, G.; Wu, Z.; Lao, S. Blinking-based live face detection using conditional random fields. Adv. Biom. 2007, 252–260.

- Bao, W.; Li, H.; Li, N.; Jiang, W. A Liveness Detection Method for Face Recognition Based on Optical Flow Field. In Proceedings of the International Conference on Image Analysis and Signal Processing (IASP), Taizhou, China, 11–12 April 2009; pp. 233–236.

- Kollreider, K.; Fronthaler, H.; Faraj, M.I.; Bigun, J. Real-time face detection and motion analysis with application in “liveness” assessment. IEEE Trans. Inf. Forensics Secur. 2007, 2, 548–558.

- Akhtar, Z.; Foresti, G.L. Face spoof attack recognition using discriminative image patches. J. Electr. Comput. Eng. 2016, 2016.

- Galbally, J.; Marcel, S.; Fierrez, J. Image quality assessment for fake biometric detection: Application to iris, fingerprint, and face recognition. IEEE Trans. Image Process. 2014, 23, 710–724.

- Wen, D.; Han, H.; Jain, A.K. Face spoof detection with image distortion analysis. IEEE Trans. Inf. Forensics Secur. 2015, 10, 746–761.

- Lai, C.; Tai, C. A Smart Spoofing Face Detector by Display Features Analysis. Sensors 2016, 16, 1136.

- Määttä, J.; Hadid, A.; Pietikäinen, M. Face Spoofing Detection from Single Images Using Micro-Texture Analysis. In Proceedings of the 2011 International Joint Conference on Biometrics (IJCB), Washington, DC, USA, 12–14 October 2011; pp. 1–7.

- De Marsico, M.; Nappi, M.; Riccio, D.; Dugelay, J.-L. Moving Face Spoofing Detection via 3D Projective Invariants. In Proceedings of the 5th IAPR International Conference on Biometrics (ICB), New Delhi, India, 29 March–1 April 2012; pp. 73–78.

- Kim, S.; Ban, Y.; Lee, S. Face Liveness Detection Using Defocus. Sensors 2015, 15, 1537–1563.

- Zhang, Z.; Yi, D.; Lei, Z.; Li, S.Z. Face Liveness Detection by Learning Multispectral Reflectance Distributions. In Proceedings of the IEEE International Conference on Automatic Face & Gesture Recognition and Workshops (FG 2011), Santa Barbara, CA, USA, 21–25 March 2011; pp. 436–441.

- Kim, S.; Ban, Y.; Lee, S. Face Liveness Detection Using a Light Field Camera. Sensors 2014, 14, 22471–22499.

- Tan, X.; Li, Y.; Liu, J.; Jiang, L. Face liveness detection from a single image with sparse low rank bilinear discriminative model. Comput. Vis. ECCV 2010, 2010, 504–517.

- Zhang, Z.; Yan, J.; Liu, S.; Lei, Z.; Yi, D.; Li, S.Z. A Face Antispoofing Database with Diverse Attacks. In Proceedings of the 5th IAPR International Conference on Biometrics (ICB), New Delhi, India, 29 March–1 April 2012; pp. 26–31.

- Viola, P.; Jones, M.J. Robust real-time face detection. Int. J. Comput. Vis. 2004, 57, 137–154.

- Ojala, T.; Pietikainen, M.; Harwood, D. Performance evaluation of texture measures with classification based on Kullback discrimination of distributions. In Proceedings of the IEEE 12th International Conference on Pattern Recognition, Jerusalem, Israel, 9–13 October 1994; pp. 582–585.

- Ojala, T.; Pietikainen, M.; Maenpaa, T. Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 971–987.

- Lin, K.-Y.; Chen, D.-Y.; Tsai, W.-J. Face-Based Heart Rate Signal Decomposition and Evaluation Using Multiple Linear Regression. IEEE Sens. J. 2016, 16, 1351–1360.

- Barkan, O.; Weill, J.; Wolf, L.; Aronowitz, H. Fast High Dimensional Vector Multiplication Face Recognition. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 1960–1967.

- Stricker, M.A.; Orengo, M. Similarity of Color Images. In Proceedings of the IS&T/SPIE’s Symposium on Electronic Imaging: Science & Technology, San Jose, CA, USA, 5–10 February 1995; pp. 381–392.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Chang, C.-C.; Lin, C.-J. LIBSVM: A library for support vector machines. ACM Trans. Intell. Syst. Technol. (TIST) 2011, 2, 27.

- Hsu, C.-W.; Chang, C.-C.; Lin, C.-J. A Practical Guide to Support Vector Classification; National Taiwan University: Taipei, Taiwan, 2003.

- Menotti, D.; Chiachia, G.; Pinto, A.; Schwartz, W.R.; Pedrini, H.; Falcao, A.X.; Rocha, A. Deep representations for iris, face, and fingerprint spoofing detection. IEEE Trans. Inf. Forensics Secur. 2015, 10, 864–879.

- Kim, W.; Suh, S.; Han, J.-J. Face liveness detection from a single image via diffusion speed model. IEEE Trans. Image Process. 2015, 24, 2456–2465.

- Liao, S.; Law, M.W.; Chung, A.C. Dominant local binary patterns for texture classification. IEEE Trans. Image Process. 2009, 18, 1107–1118.

- Burges, C.J. A tutorial on support vector machines for pattern recognition. Data Min. Knowl. Discov. 1998, 2, 121–167.

| Feature | NUAA Accuracy (%) | NUAA AUC (%) | CASIA Accuracy (%) | CASIA AUC (%) | Idiap Accuracy (%) | Idiap AUC (%) | MSU Accuracy (%) | MSU AUC (%) |
|---|---|---|---|---|---|---|---|---|
| (i) | 80.04 | 91.68 | 82.90 | 90.52 | 86.04 | 90.12 | 75.99 | 86.82 |
| (ii) | 85.60 | 92.46 | 80.72 | 86.08 | 87.13 | 92.79 | 85.15 | 91.17 |
| (iii) | 72.48 | 90.97 | 85.66 | 89.27 | 84.76 | 92.28 | 78.19 | 86.64 |
| (iv) | 73.60 | 91.34 | 89.03 | 91.85 | 91.56 | 93.78 | 79.19 | 87.72 |
| (v) | 95.45 | 99.29 | 90.72 | 95.13 | 93.74 | 97.46 | 82.13 | 90.44 |
| (vi) | 95.52 | 99.34 | 91.70 | 95.35 | 95.52 | 98.73 | 88.45 | 93.89 |
| (vii) | 98.56 | 99.85 | 88.59 | 94.00 | 92.59 | 97.13 | 86.31 | 92.57 |
| (viii) | 92.16 | 99.43 | 90.02 | 94.27 | 92.01 | 97.08 | 88.68 | 94.47 |
| (ix) | 96.69 | 99.96 | 93.24 | 96.57 | 96.55 | 99.34 | 90.06 | 95.71 |
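For readers unfamiliar with the two indices reported in this table, the following Python sketch shows one common way to compute accuracy and AUC from classifier scores. It is an illustration under stated assumptions, not the evaluation code used in the paper: the function names, the convention that label 1 means live and 0 means spoof, and the default decision threshold of 0 are all hypothetical.

```python
from typing import Sequence


def accuracy(scores: Sequence[float], labels: Sequence[int],
             threshold: float = 0.0) -> float:
    """Fraction of samples whose thresholded score matches the label.

    Assumes label 1 = live, 0 = spoof, and score >= threshold means 'live'.
    """
    correct = sum((s >= threshold) == bool(l) for s, l in zip(scores, labels))
    return correct / len(labels)


def auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Area under the ROC curve via the pairwise ranking statistic.

    Equals the probability that a random live sample scores higher than
    a random spoof sample, counting ties as one half.
    """
    live = [s for s, l in zip(scores, labels) if l]
    spoof = [s for s, l in zip(scores, labels) if not l]
    wins = sum((p > n) + 0.5 * (p == n) for p in live for n in spoof)
    return wins / (len(live) * len(spoof))
```

Accuracy depends on the chosen threshold, whereas AUC summarizes ranking quality over all thresholds, which is why the two columns can rank the feature sets differently.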

| Method | Classifier | NUAA Accuracy | NUAA AUC | CASIA EER | Idiap HTER | MSU EER |
|---|---|---|---|---|---|---|
| Määttä et al. [18] | Nonlinear SVM | 92.70% | 99.00% | N/A | N/A | N/A |
| Tan et al. [23] | Sparse nonlinear logistic regression | 84.50% | 95.00% | N/A | N/A | N/A |
| Kim et al. [35] | Linear SVM | 98.45% | N/A | N/A | 12.50% | N/A |
| Pinto et al. [34] | Linear SVM | N/A | N/A | 14.00% | N/A | N/A |
| Wen et al. [16] | Ensemble SVM | N/A | N/A | 12.90% | 7.41% | 8.58% |
| Proposed method | Linear SVM | 96.69% | 99.96% | 7.01% | 4.92% | 10.20% |
| Proposed method 1 | Nonlinear SVM | N/A | N/A | N/A | N/A | 7.23% |
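The EER and HTER columns can be made concrete with a short Python sketch. It follows a common convention in which the equal error rate threshold is found on a development set and the half total error rate is then measured on a separate test set at that threshold; whether the paper follows exactly this procedure is an assumption, as are the function names and the label convention (1 = live, 0 = spoof).

```python
from typing import Sequence, Tuple


def far_frr(scores: Sequence[float], labels: Sequence[int],
            threshold: float) -> Tuple[float, float]:
    """False acceptance and false rejection rates at a threshold.

    FAR: fraction of spoof samples accepted as live (score >= threshold).
    FRR: fraction of live samples rejected (score < threshold).
    """
    live = [s for s, l in zip(scores, labels) if l]
    spoof = [s for s, l in zip(scores, labels) if not l]
    far = sum(s >= threshold for s in spoof) / len(spoof)
    frr = sum(s < threshold for s in live) / len(live)
    return far, frr


def eer(scores: Sequence[float], labels: Sequence[int]) -> Tuple[float, float]:
    """Approximate equal error rate and the threshold that attains it.

    Sweeps the observed scores as candidate thresholds and keeps the one
    where FAR and FRR are closest; the EER is reported as their mean.
    """
    best = None
    for t in sorted(set(scores)):
        far, frr = far_frr(scores, labels, t)
        candidate = (abs(far - frr), (far + frr) / 2, t)
        if best is None or candidate < best:
            best = candidate
    return best[1], best[2]


def hter(scores: Sequence[float], labels: Sequence[int],
         threshold: float) -> float:
    """Half total error rate: the mean of FAR and FRR at a fixed threshold."""
    far, frr = far_frr(scores, labels, threshold)
    return (far + frr) / 2
```

A typical use would be `_, t = eer(dev_scores, dev_labels)` followed by `hter(test_scores, test_labels, t)`, where the four input lists are hypothetical score and label arrays for the two subsets.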

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wang, S.-Y.; Yang, S.-H.; Chen, Y.-P.; Huang, J.-W. Face Liveness Detection Based on Skin Blood Flow Analysis. Symmetry 2017, 9, 305. https://doi.org/10.3390/sym9120305
