1. Introduction

With the development of various virtual reality devices and related technologies, an environment where general users can easily enjoy virtual reality content is being formed. As a result, content that enables users to feel an experience that is similar to reality is continuously needed, and various research and technical development related to virtual reality are being carried out to satisfy these needs. To provide a visual experience with three-dimensional (3D) effects, Sutherland [1] studied the HMD (Head Mounted Display) system in the 1960s. Since then, input processing techniques based on virtual reality began to be researched and developed to control physical events in a virtual space while satisfying the users’ five senses, including auditory and tactile senses.

As the hardware performance of smart phones is increasing and low-priced mobile virtual reality HMDs are being propagated, a wide variety of mobile platform virtual reality content is being produced, and many related studies are being conducted. The popularization of mobile HMD is especially providing an environment where anyone can experience immersive virtual reality content anywhere. However, mobile HMD requires the attachment of the mobile device inside the HMD, unlike personal computer (PC) or console platforms such as Oculus Rift.

For this reason, the touch input method of mobile devices cannot be used. Because of this limitation, mobile virtual reality content generally relies on simple gaze-based input or on a connected game pad. Recently, dedicated controllers that interconnect with mobile HMDs, such as Samsung Rink, are being developed, and studies [2] are being conducted to develop hardware systems that can process inputs by direct touch of the mobile screen. To provide a virtual reality environment with a greater sense of immersion, hardware devices such as Leap Motion and data gloves have been developed, which reflect the finger movements and motions of users in the virtual environment in real time. As the above discussion suggests, the choice of virtual reality technology and devices is an important factor in experiencing immersive content. However, it is also important to check whether the input processing technique induces motion sickness or dizziness while the user is experiencing the content. Nevertheless, compared with the many studies on input processing techniques and interaction methods that aim to improve immersion, few studies analyze how interaction methods affect the physical and psychological responses of users.

This study was conducted to design user-oriented interaction that improves immersion in interactive 3D content for the virtual reality environment, and to systematically analyze the effects of that interaction on the actual immersion of users. For this purpose, interactive 3D content was designed for a comparative experiment on the suitability and immersion of the proposed input processing technique and interaction. As the core contribution of this study, an interaction method is proposed that can increase immersion beyond gaze, which is the main input method of existing mobile virtual reality content. To this end, a hand interaction is introduced that combines Leap Motion with gaze, because gaze is where a user's interaction with objects in the virtual space begins. Lastly, the physical and psychological factors of users that are influenced by input processing through the proposed hand interaction are evaluated through a questionnaire, and the input processing techniques and interactions that can increase users’ immersion are analyzed.

Section 2 analyzes various input processing techniques and the psychological factors of users that are required to develop content based on virtual reality. Section 3 describes the production process for the immersive 3D interactive content proposed in this study. Section 4 describes the core technique of hand interaction using gaze and Leap Motion. Section 5 describes the experiment and analysis process for the proposed method. Finally, Section 6 outlines the conclusion and presents future research directions.

2. Related Works

In the 1960s, studies on virtual reality were conducted to satisfy the visual sense of users through such devices as head-mounted virtual reality systems [1]. Since then, many researchers have tried to improve the realism of the virtual reality environment and the immersion of users, which has led to studies on haptic systems and other devices that satisfy various senses, such as the tactile sense, by improving the physical responses of the virtual world [3,4]. With the development of mobile devices, many application studies using mobile devices were conducted in the virtual reality arena. Lopes et al. [5] proposed a mobile force feedback system based on electrical muscle stimulation. Yano et al. [6] conducted research on a handheld haptic device that can touch objects with its fingers. In addition, GyroTab, which gives feedback of a mobile torque based on a gyroscope, was proposed [7]. Another example is POKE [8], a mobile haptic device that interacts through an air pump and silicon membranes. These studies were conducted to provide a tactile sense as well as vision in mobile virtual reality, but they were not developed into systems that can be easily accessed and used by anyone.

For virtual reality content in a mobile environment, how to provide input processing in a limited environment is as important as the design of hardware devices that satisfy the five senses of users. Unlike the PC platform, mobile virtual reality embedded in an HMD restricts the user's input environment because touch, the phone's only built-in input function, is unavailable. For this reason, many researchers designed interfaces that can process magnetic input for mobile HMDs such as Google Cardboard. Representative magnetic input devices include Abracadabra [9], Nenya [10], MagiTact [11], and MagGetz [12]. They processed interactions through magnetic objects that the user wears or holds. Later, Smus et al. [13] proposed a wireless, unpowered, and inexpensive magnetic input processing method for mobile virtual reality (VR) that provides physical feedback using only the smartphone, without a calibration process. Gugenheimer et al. [2] proposed the FaceTouch interface, which processes interaction in the virtual reality environment through a touch-sensitive surface attached to the mobile HMD. These approaches are also difficult to use because a separate device must be attached to process input.

Hands are body parts that are often used to interact with objects in both virtual and real environments. For this reason, controllers are frequently used to indirectly replace the movement of hands in the interaction process of virtual reality content. For more direct control, however, studies are being conducted to accurately capture the movements of hands, including joints, and use them for interaction. For instance, Metcalf et al. [14] conducted a study to capture and control the movements of hands and fingers through optical motion capture using a surface marker. Zhao et al. [15] also proposed a high-fidelity measurement method of 3D hand joint information by combining a motion capture system based on an optical marker and a Kinect camera from Microsoft.

Stollenwerk et al. [16] proposed a marker-based optical hand motion capture method, tested whether colored markers are detected correctly under various lighting conditions, and applied the detected hand and finger movements to keyboard performance. Oikonomidis et al. [17] proposed a method of tracking the 3D movements of hands based on the depth information detected by the Kinect. Arkenbout et al. [18] researched an immersive hand motion control method incorporating the Kinect-based Nimble VR system using a Fifth Dimension Technologies (5DT) data glove and a Kalman filter. Furthermore, studies [19,20,21,22] on various approaches for analyzing motion by capturing the human hand have been carried out, including a study on articulated hand motion and graphic presentation of data generated from the interaction between objects in certain time intervals [23]. These studies enable users to interact more directly in a virtual environment, but this research has not yet been developed into a complete VR system. In particular, for mobile platform VR, if VR sickness is taken into account, many factors other than hand motion detection, such as frames per second (FPS) and the refresh rate, must be considered together. Therefore, for hand motion capture research to be used in a VR application, these technical factors and compatibility with other VR systems such as the HMD should be considered in a comprehensive way.

Recently, studies are being conducted using Leap Motion as a technology for expressing free motions in 3D space by capturing the finger movements and motions of users. One study investigated a method of receiving a signature or certificate by detecting hand gestures using Leap Motion, recognizing the tip of the detected finger, and writing along the movement of the fingertip point [24]. In another study, hand gestures were divided into four motions (circle, swipe, screen tap, and key tap), and the possibility of accurate recognition through matching with predefined templates was tested [25]. Hand gestures were also used with Leap Motion for the training of surgical experiments [26]. Recently, an interface using Leap Motion was designed, and user reactions were analyzed to use hand motions as interaction for playing a virtual reality game [27]. However, there are still few cases of applying Leap Motion to virtual reality content, and in particular, almost no studies have been conducted to design an interaction applied to mobile virtual reality. More importantly, research on user psychology is also required to analyze whether a proposed interaction method improves user immersion or causes VR sickness. In relation to this, studies were conducted to analyze whether the cue conflict of the head-mounted virtual reality display causes motion sickness [28,29] and to analyze the effect of unstable positions on motion sickness [30,31]. However, few studies have been conducted on the effects of the input processing technique and interaction method of virtual reality on the psychology of users.

Considering this situation, this study designs interactive content based on mobile platform virtual reality, and proposes a hand interaction method using gaze and Leap Motion to improve user immersion. Furthermore, experiments evaluating the suitability of the designed content and proposed interaction method for the virtual reality environment are conducted, and the results analyzed in terms of various factors, such as immersion and VR sickness.

4. Gaze-Based Hand Interaction

User interaction is required for the smooth progress of the immersive mobile virtual reality content. The interaction elements of the proposed content consist of a process of selecting one among the five received cards, and a process of selecting the virtual object that is created when five cards of the same type are collected. An interaction method that can enhance immersion while not interfering with the object control must be designed because the content progresses quickly within a limited time. Gaze-based hand interaction is proposed for this purpose in this study.

Hayhoe et al. [32] showed that people rely on gaze first when controlling virtual objects in a virtual space. Therefore, the user’s gaze must be considered before designing the interaction using hands. Input processing is then designed by recognizing the hand motions and gestures of users on the basis of gaze.

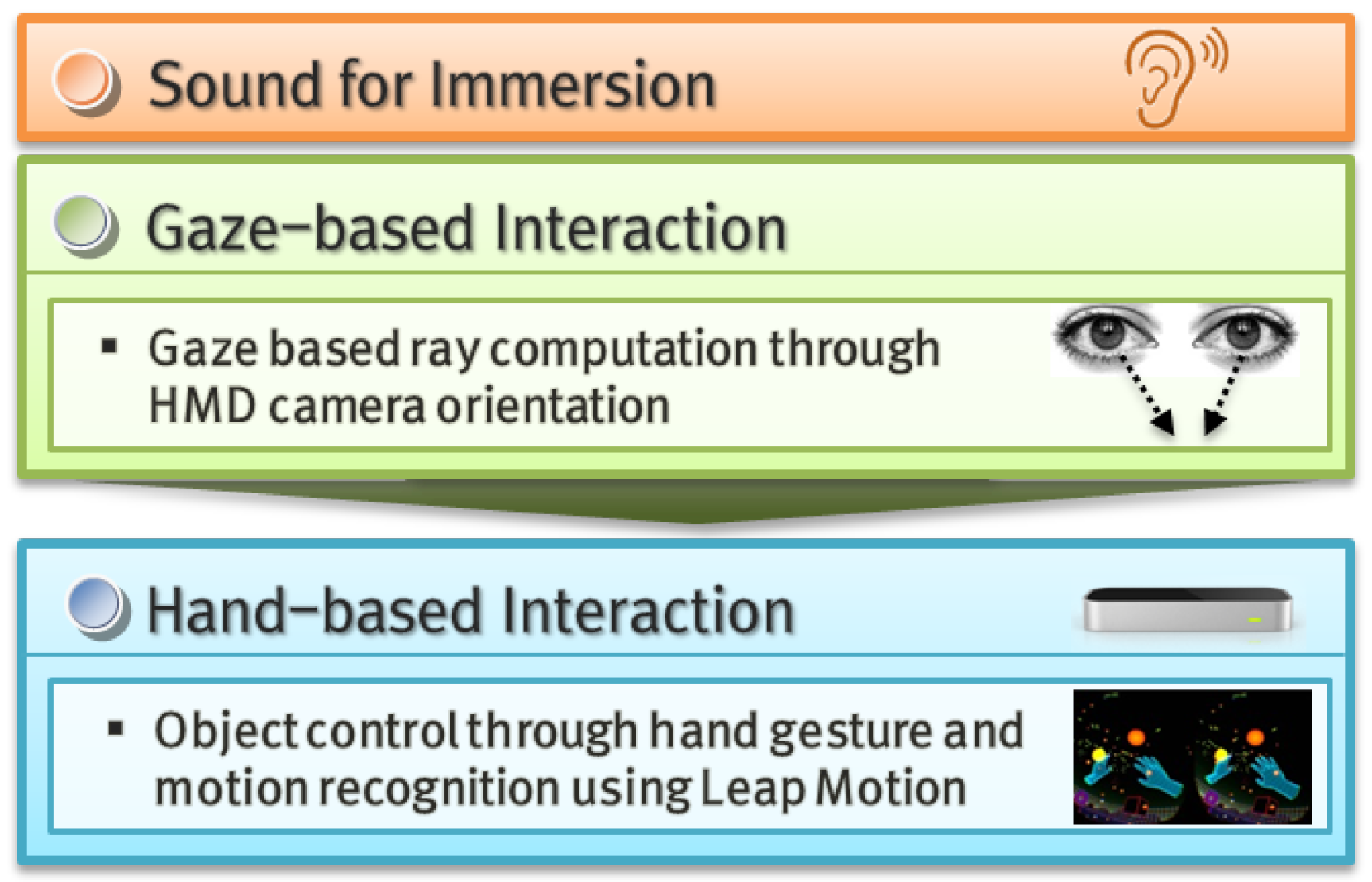

Figure 2 shows an overview of the gaze-based hand interaction.

For users who are wearing a mobile HMD, the viewpoint and direction of the camera in the virtual space correspond to the user’s gaze. When the user’s head moves, the mobile sensor and HMD track it and update the changed information on the screen. In this study, the interactive content is configured so that the user’s gaze is not dispersed and the user can concentrate on the screen where their cards are displayed, because a user who looks away from the screen can miss the fast-moving flow of the content, which is paced by sound. The raycasting method is used so that the gaze of the user can accurately select the desired card. A ray is cast in the camera direction corresponding to the gaze. Whether a virtual object is selected is then determined through collision detection between this ray and the object.
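The gaze raycast described above can be sketched in a few lines. This is a minimal illustration and not the authors' Unity implementation: each card is approximated by a bounding sphere, and the names `Sphere`, `ray_sphere_distance`, and `pick_gazed_object`, as well as the card layout, are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Sphere:
    """Hypothetical bounding sphere standing in for a card or virtual object."""
    name: str
    center: tuple
    radius: float


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def ray_sphere_distance(origin, direction, sphere):
    """Distance along a unit-length ray to the first hit, or None on a miss."""
    oc = tuple(o - c for o, c in zip(origin, sphere.center))
    b = sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - sphere.radius ** 2
    disc = b * b - c
    if disc < 0:
        return None  # the ray passes beside the sphere
    root = math.sqrt(disc)
    t = -b - root
    if t < 0:
        t = -b + root  # ray origin lies inside the sphere
    return t if t >= 0 else None


def pick_gazed_object(camera_pos, camera_forward, objects):
    """Return the nearest object hit by the gaze ray, or None."""
    direction = normalize(camera_forward)
    best = None
    for obj in objects:
        t = ray_sphere_distance(camera_pos, direction, obj)
        if t is not None and (best is None or t < best[0]):
            best = (t, obj)
    return best[1] if best else None


# Five cards laid out in a row two units in front of the camera (assumed layout).
cards = [Sphere(f"card_{i}", (-1.0 + 0.5 * i, 0.0, 2.0), 0.2) for i in range(5)]
selected = pick_gazed_object((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), cards)
print(selected.name)  # card_2, the card directly ahead
```

In a game engine the same test would normally be delegated to the built-in physics raycast against the cards' colliders; the sphere test here only makes the geometry explicit.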

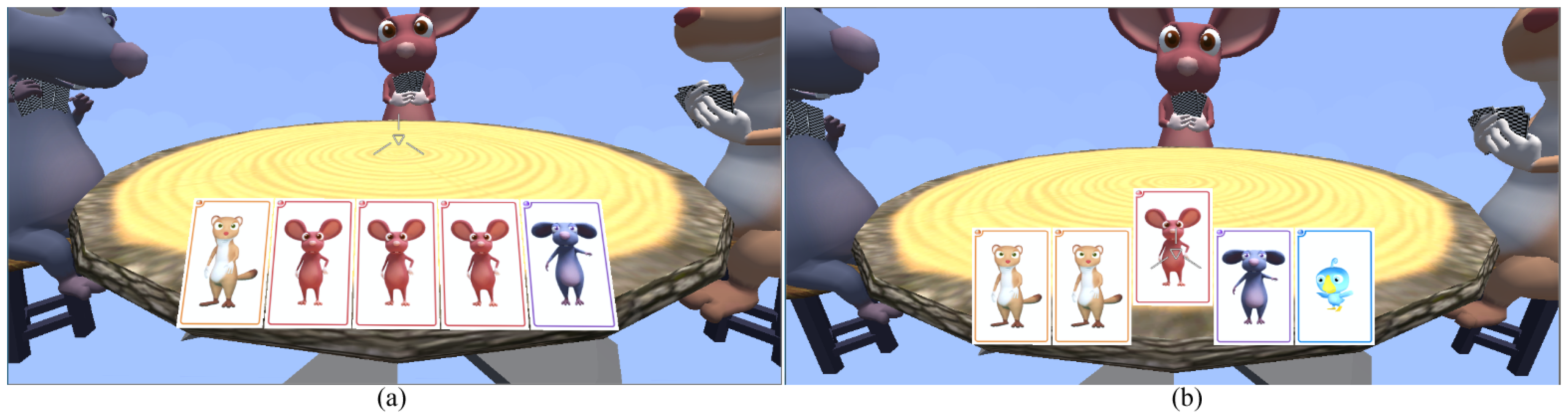

Figure 3 shows the card selection process of the content proposed through the gaze of user.

The user is induced to concentrate on a specific area of the screen (the location of the arrayed cards) using gaze. The hand interaction is then designed to reproduce, in the virtual environment, a behavior similar to selecting a card. In this study, Leap Motion is used as an input processing technique to increase the user’s immersion in the virtual reality content of the mobile environment. The Leap Motion sensor is a hand motion detection sensor that consists of two infrared cameras and an infrared light-emitting diode (LED) light source. This sensor is a small USB peripheral device with a height of 12.7 mm and a width of 80 mm. It can be attached to the HMD device, and hand gestures and motions are recognized and processed by the infrared sensor.

Figure 4 shows the input environment used in this study, in which a Leap Motion device is attached to a mobile HMD. Leap Motion does not provide a software development kit (SDK) for mobile HMDs. Therefore, the mobile virtual reality experiment environment with hand interaction is constructed by producing the mobile virtual reality content in Unity 3D (Unity Technologies, San Francisco, CA, USA), integrating it with the Leap Motion development tool, and remotely sending the divided virtual reality scenes to the mobile phone.

Two hand interactions are proposed. The first is a card selection interaction, which first checks whether the user’s gaze is directed at the card to be selected. The user’s finger is then detected at the “go” timing of the “ready-go” sound, and the selection gesture is a clicking motion with the index finger. The second hand interaction is the process of selecting a virtual object generated at a random location in the virtual space when five identical cards are collected and the content finishes. During the progress of the content, the user’s gaze is concentrated on his/her cards. In this situation, when a card combination is completed by another character, a virtual object is created instantly, and the user responds in the order of gaze, then gesture. In other words, the behavior is recognized when the user first looks at the generated virtual object, then immediately stretches out his/her hand and makes a gesture of holding the object. Algorithm 2 represents the process of these two hand interactions.

Algorithm 2. Hand Interaction Process.

    Array_UserCard[5] ← Array of the user’s five cards.
    procedure Hand-based Card Selection(Array_UserCard)
        range_sound ← A certain time range is saved around the time when “go” sound is played.
        check_gaze ← Checks if the user’s gaze is directed to one element of the Array_UserCard.
        if check_gaze = true then
            Save recognized finger information of 0th hand.
            FingerList OneHand = frame.Hands[0].Fingers.
            OneHand[1].IsExtended ← Activation of the index finger is tested.
            time_hand ← Time when the index finger is detected.
            if OneHand[1].IsExtended = true And time_hand < range_sound then
                Card selection finished.
            end if
        end if
    end procedure

    Obj_Finish ← 3D virtual object that is randomly generated when the content finishes.
    procedure Hand-based Touch Object(Obj_Finish)
        check_gaze ← Checks if the user’s gaze is directed to the Obj_Finish.
        if check_gaze = true then
            cnt_hands ← Number of activated fingers.
            for i=0,4 do
                if frame.Hands[0].Fingers[i].IsExtended = true then
                    cnt_hands++
                end if
            end for
            if cnt_hands = 5 then
                Perceive object touch.
                Record the time from object generation to touch.
            end if
        end if
    end procedure
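The two procedures of Algorithm 2 can be mirrored in a short, library-free sketch. The `Finger` and `Hand` classes below are hypothetical stand-ins for the tracking data (they are not the Leap Motion API), the comparison `time_hand < range_sound` is interpreted as "the finger is detected within a time window around the go sound", and the 0.5 s window is an assumed value.

```python
from dataclasses import dataclass
from typing import List, Optional

INDEX = 1  # finger order assumed: thumb, index, middle, ring, pinky


@dataclass
class Finger:
    is_extended: bool


@dataclass
class Hand:
    fingers: List[Finger]


def card_selected(gaze_on_card: bool, hand: Optional[Hand],
                  time_hand: float, go_time: float, window: float = 0.5) -> bool:
    """First interaction: gaze on a card, index finger extended near the go sound."""
    if not gaze_on_card or hand is None:
        return False
    in_window = abs(time_hand - go_time) <= window  # assumed reading of range_sound
    return hand.fingers[INDEX].is_extended and in_window


def object_touched(gaze_on_object: bool, hand: Optional[Hand],
                   spawn_time: float, now: float) -> Optional[float]:
    """Second interaction: gaze on the finish object and all five fingers extended.

    Returns the time from object generation to touch, or None if not touched.
    """
    if not gaze_on_object or hand is None:
        return None
    extended = sum(1 for finger in hand.fingers if finger.is_extended)
    return now - spawn_time if extended == 5 else None


pointing = Hand([Finger(False), Finger(True), Finger(False), Finger(False), Finger(False)])
open_hand = Hand([Finger(True) for _ in range(5)])

print(card_selected(True, pointing, time_hand=10.2, go_time=10.0))   # True
print(object_touched(True, open_hand, spawn_time=12.0, now=12.5))    # 0.5
```

Checking gaze before any hand data is read keeps the gaze-then-gesture order of the algorithm and avoids reacting to hand motion while the user is looking elsewhere.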

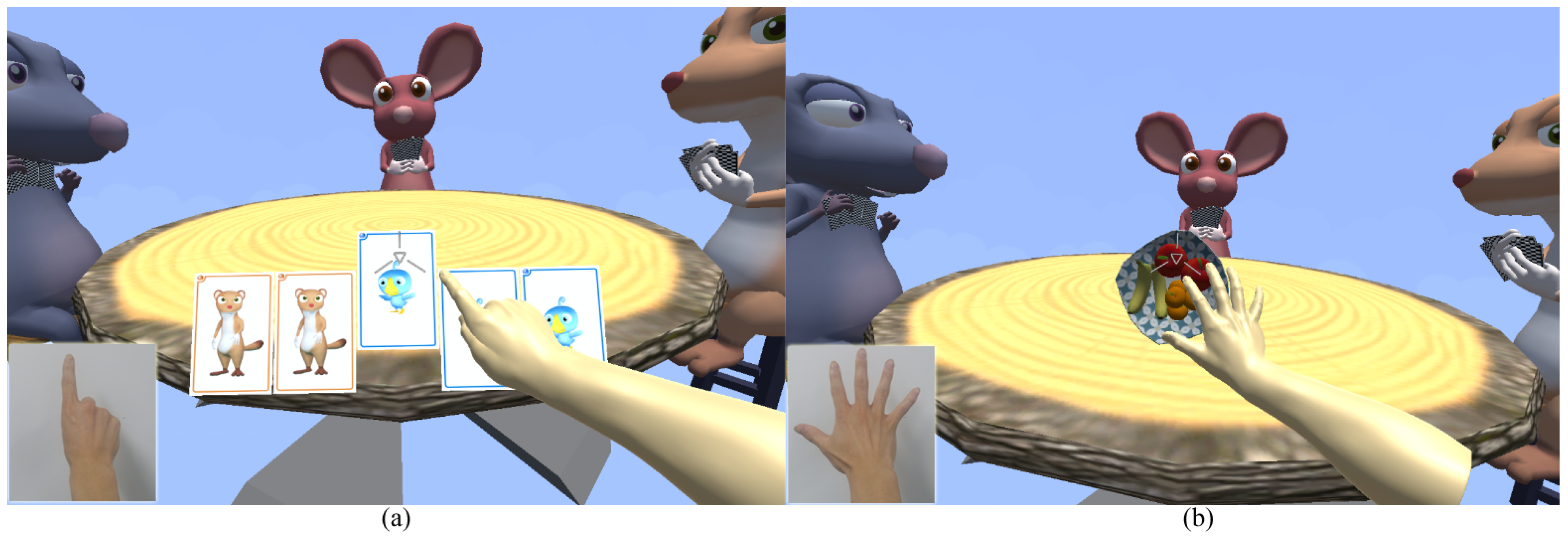

Figure 5 shows the process of perceiving hand motions and controlling the content through the proposed hand interactions.

5. Experimental Results and Analysis

The proposed mobile virtual reality content and virtual reality technique were produced using Unity 3D 5.3.4f1 (Unity Technologies, San Francisco, CA, USA) and a Google virtual reality development tool (gvr-unity-sdk, Google, Mountain View, CA, USA). The hand interface, which is the core input technology of this study, was implemented using Leap Motion SDK v4.1.4 (Leap Motion, Inc., San Francisco, CA, USA). The PC used in this experiment had an Intel® Core™ i7-4790 (Intel Corporation, Santa Clara, CA, USA), 8 GB of random access memory (RAM), and a GeForce GTX 960 GPU (NVIDIA, Santa Clara, CA, USA). Furthermore, a Samsung Galaxy S5 (Suwon, Korea) was used as the mobile phone for this experiment, and the Baofeng Mojing 4 (Beijing, China) HMD was used.

The experimental process consists of checking the production result of the proposed content and the analysis of the physical and psychological effects of the gaze-based hand interaction of this study on users in the virtual reality environment. First, the virtual reality content of the mobile platform was produced in accordance with the plan, and the accurate operation of the interaction process was verified based on the proposed input processing. When the content is started, the main screen is switched to the content screen. On the content screen, four characters (including the user) are deployed in the virtual space, five cards are randomly distributed to each character, and the process of selecting the last finish object by matching the cards of one type is implemented. In this process, an interaction method was designed by which the user selects cards using his/her hands based on his/her gaze and touch of the last virtual object.

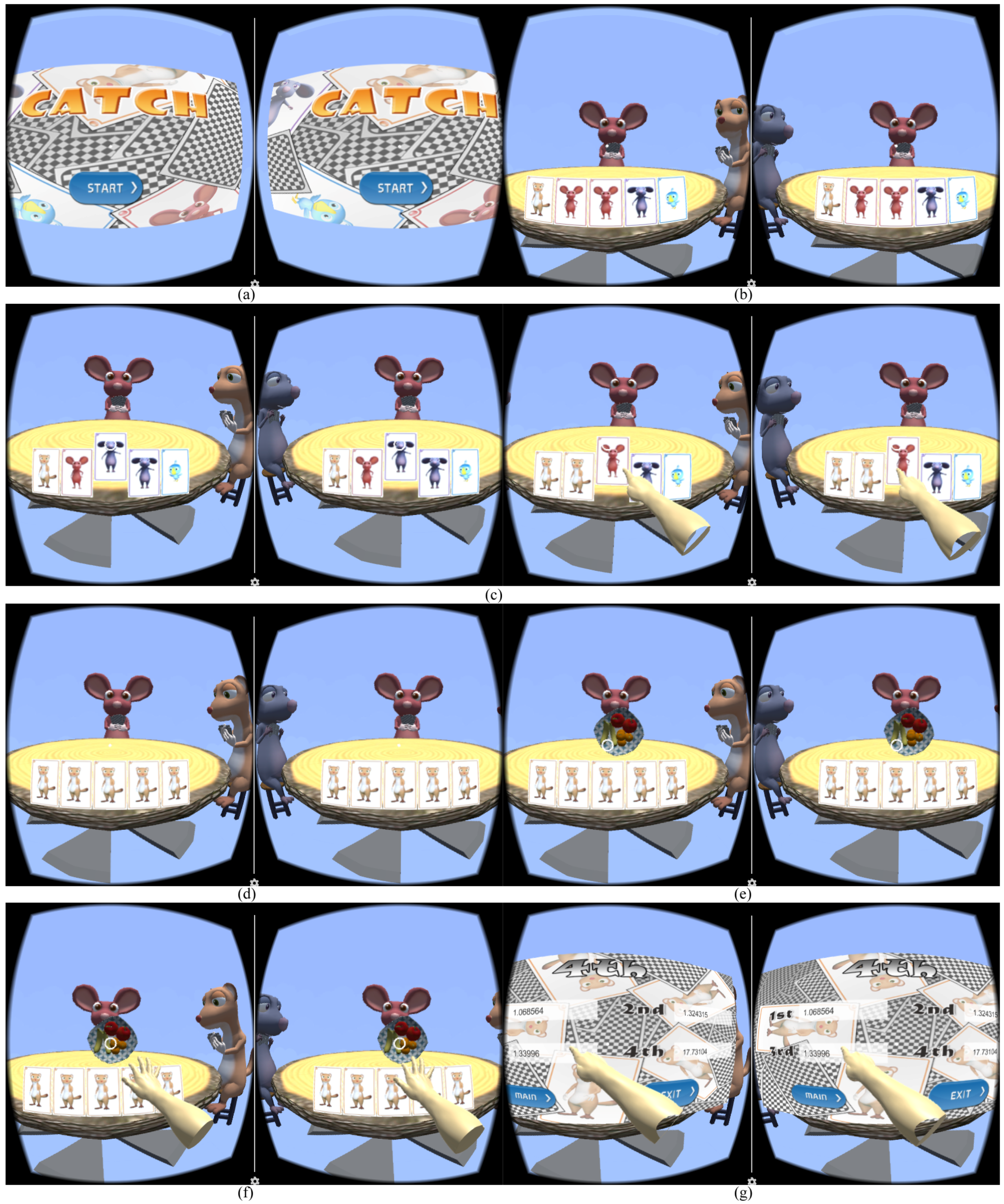

Figure 6 shows this process, and the progress of accurate interaction can be checked in the smooth flow of the proposed interactive content.

Next, the effects of the proposed hand interaction on the psychological factors of actual users are tested and analyzed. For this experiment, 50 participants in their 20s to 40s were randomly chosen, and they experienced the produced content before the results were analyzed through a questionnaire. The first part of this experiment examines the suitability of the proposed virtual reality content. If the card-type content proposed in this study is not suitable as virtual reality content, the subsequent interface comparison has no significance. Therefore, a questionnaire was collected to check this.

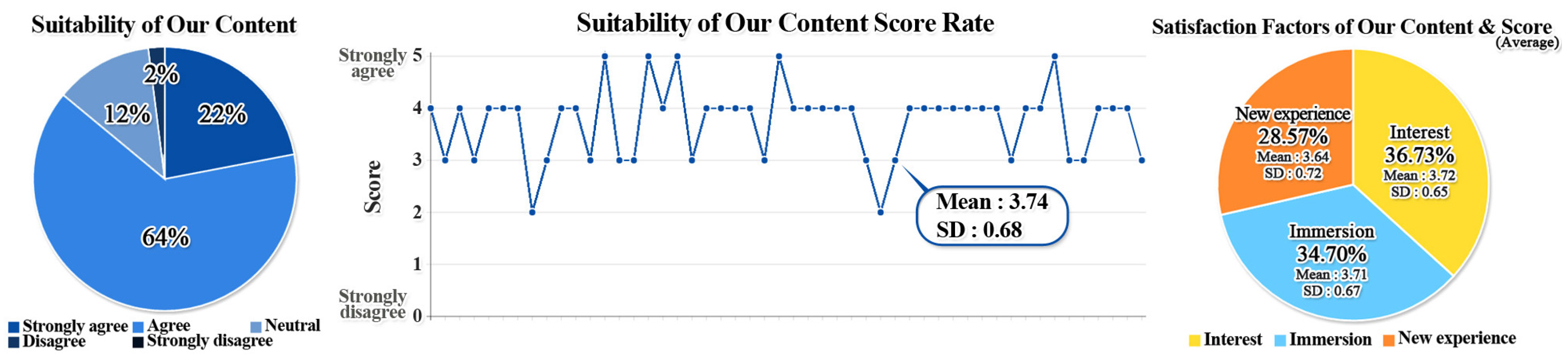

Figure 7 shows the result: 86% of all participants replied that the proposed content was suitable for testing the virtual reality environment. Participants were also asked to give a satisfaction score between 1 and 5 so that the responses could be analyzed numerically. The respondents gave a mean satisfaction score of 3.74 out of 5.0 (standard deviation (SD): 0.68) for the experience of the virtual reality environment of the proposed content. When the reasons for the positive evaluations were analyzed, participants reported that the content is an interesting topic for virtual reality and provides a high level of immersion and a new experience. Thus, the proposed content was judged suitable for experimenting with and analyzing mobile virtual reality content in line with the intended purpose.

The second is a comparative experiment for the proposed interaction. This study proposed an interaction that combines hand gesture and motion with the gaze interaction method that is mainly used in mobile virtual reality content. Therefore, an experiment was conducted to analyze whether the proposed interaction can give high immersion and satisfaction to users in comparison with the conventional general interaction method, which uses gaze only. Four experimental groups were constructed for this experiment because the experience of users may vary according to the order of the interaction experiences. In the description of the content, the method of using the gaze only is defined as “G”, and gaze-based hand interaction is defined as “H”. The first experimental group experienced G first, followed by H, and the second experimental group experienced H first, followed by G, in the reverse order. The third and fourth experimental groups experienced only G or H, respectively, to obtain objective data. The participants in all experimental groups were asked to evaluate their interaction experiences on a scale of 1 to 5.

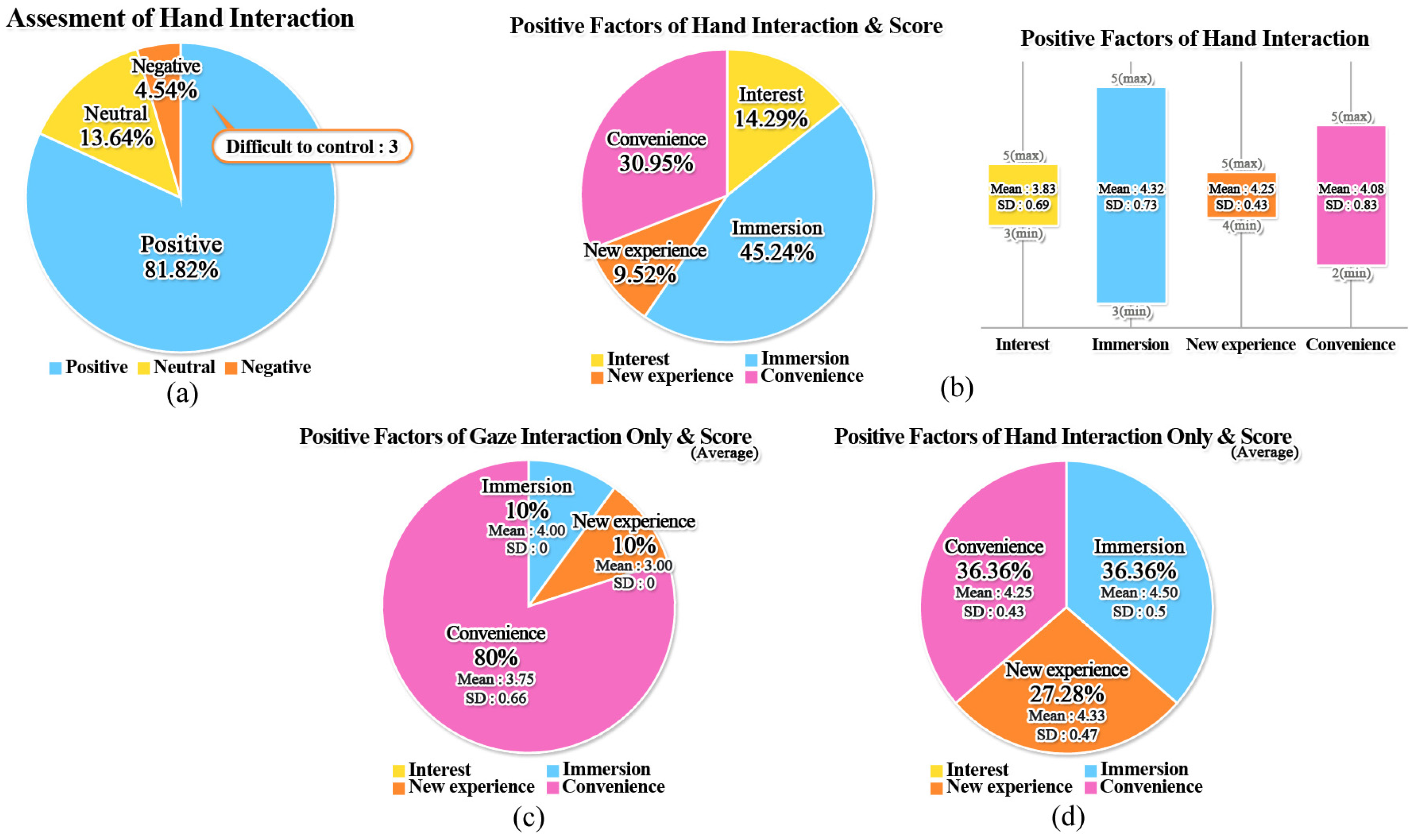

Figure 8 shows the results of the four experimental groups. First, 80% of the participants who experienced the interactions in the order of G and H replied that the hand interaction was more satisfactory. Their satisfaction scores showed an average difference of 0.85 between G and H (Figure 8a).

Figure 8b shows the results of the experimental group who experienced the interactions in the reverse order, and 83.34% of the experimental group was more satisfied with the gaze-based hand interaction. Their satisfaction score difference between G and H was 1.25. There was a slight difference in satisfaction depending on which interaction was experienced first between the gaze and the hand. In particular, participants who experienced the hand interaction first were more satisfied with the gaze-based hand interaction than with the gaze interaction only.

We conducted a Wilcoxon test of the alternative hypothesis that H provides more advanced interaction than G. First, the significance probability (p-value) was approximately 0.0016162 for the participants who experienced the gaze-only interaction first (Figure 8a). Since this is smaller than the significance level (0.05), the null hypothesis was rejected. Next, the significance probability was approximately 0.0111225 for the participants who experienced the proposed gaze-based hand interaction first (Figure 8b). This is also smaller than the significance level, so the null hypothesis was again rejected. That is, both tests rejected the null hypothesis, supporting the alternative hypothesis. Finally, when the significance probability was computed by combining these two groups, the statistical test results also indicated that the proposed hand interaction gave stronger satisfaction and immersion to users than the method that uses gaze only, with p-value at 6.4767297.
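To make the test procedure concrete, the following is a minimal sketch of a one-sided Wilcoxon signed-rank test on paired scores, written with the standard library only. It assumes the paired (signed-rank) variant because each participant in the first two groups rated both G and H; the source does not state which variant or software was used. The scores are invented for illustration and are not the study's data, and the helper names are hypothetical.

```python
from itertools import product


def average_ranks(values):
    """Rank values in ascending order, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def wilcoxon_signed_rank_greater(h_scores, g_scores):
    """Exact one-sided test that H scores exceed G scores; returns (W_plus, p)."""
    diffs = [h - g for h, g in zip(h_scores, g_scores) if h != g]  # drop zero differences
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    # Under the null hypothesis every sign pattern is equally likely,
    # so the p-value is the share of patterns with a rank sum >= W_plus.
    hits = 0
    total = 0
    for signs in product((0, 1), repeat=len(ranks)):
        total += 1
        if sum(r for r, s in zip(ranks, signs) if s) >= w_plus:
            hits += 1
    return w_plus, hits / total


# Invented 1-to-5 satisfaction scores for ten hypothetical participants.
g = [3, 4, 3, 2, 4, 3, 3, 4, 3, 2]
h = [4, 5, 4, 4, 4, 4, 5, 5, 3, 4]
w_plus, p_value = wilcoxon_signed_rank_greater(h, g)
print(w_plus, p_value)  # 36.0 0.00390625
print(p_value < 0.05)   # True: the null hypothesis would be rejected
```

The exhaustive enumeration is only practical for small groups such as these; for larger samples a statistics package with a normal approximation would be the usual choice.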

Next, the responses of the experimental groups who used only one interaction were analyzed. As shown in Figure 8c, more than 90% of the participants using the gaze-only method were satisfied with the interaction and recorded an average score of 3.8 (SD: 0.60). Participants using the gaze-based hand interaction only scored an average of 4.0 (SD: 0.91) points and more than 83.34% of them gave positive responses (Figure 8d). Although the difference is small, the proposed hand interaction was found to result in better satisfaction and immersion for participants in the same conditions and situations.

The overall analysis results showed that users are familiar with, and reasonably satisfied by, the gaze interaction method, which is mainly used in existing mobile platform virtual reality content. Nevertheless, hand interaction can provide greater immersion and satisfaction if it is combined with gaze in a context that fits the purpose of the content.

The last is a detailed analytical experiment on psychological factors. For the analysis of positive factors, the psychological factors of users for the proposed gaze-based hand interaction were subdivided into four items: improved immersion, inducement of interest, provision of new experience, and convenient control. Furthermore, four items (VR sickness, fatigue, difficult operation, and inconvenience) were analyzed as negative factors. The eight psychological factors presented here were drawn from existing studies on immersion in VR [33,34].

The results of the aforementioned experiment showed that the gaze-based hand interaction was more satisfactory. Therefore, the experiment involving the detailed psychological factors was conducted with the proposed hand interaction. As shown in Figure 8a,b,d, among the participants in the experimental groups experiencing the proposed hand interaction, those who gave relatively or objectively positive responses were asked to select one of the four positive factors and record a score. In addition, participants who gave negative responses were also asked to select one of the negative factors and record a score. However, only 4.55% of the respondents gave negative responses below average, so accurate analysis results could not be derived from them.

Figure 9 shows that 45.24% of the participants who gave positive responses, which constituted 81.28% of all respondents, replied that hand interaction improves their immersion and helps them to accurately control the virtual objects. The scores were also generally high, at 3.8 or higher out of 5.0. Therefore, the proposed hand interaction was found to have the greatest influence on the provision of an experiential environment with high immersion in the virtual reality environment, although it is also helpful for the inducement of interest and convenient control.

The analysis of the negative factors showed that many participants selected the difficulty of manipulation. The information on the participants who gave negative answers revealed that they had either considerable or no experience with virtual reality content. Their negative responses seem to be caused by the inconvenience of the new operation method due to their familiarity with the existing gaze method or their lack of experience with interaction. For reference, the questionnaire results of participants who only experienced G or H were analyzed (Figure 8c,d). Most participants who experienced only gaze selected convenience, as expected. Participants who experienced only the hand interaction selected various items, such as immersion, convenience, and novelty. Their satisfaction score was also found to be higher by at least 0.5. This suggests that, if the gaze and the hand are combined appropriately in line with the situation, the interaction can provide users with various experiences and high satisfaction.

This study analyzed whether the proposed hand interaction can lead to higher immersion than gaze alone, which is mainly used in mobile platform virtual reality content, and also whether it can cause VR sickness. As a result of various experiments, VR sickness was not found to be a problem. For the virtual reality content of the mobile platform in general, the FPS and the polygon count of all objects comprising the content should be considered carefully. In mobile VR, the recommended number of polygons is 50 to 100 thousand (k) and the recommended FPS is 75 or more. If these recommendations are not followed, the user may experience sickness due to the delay and distortion of the screen. In the proposed virtual reality content, the number of polygons ranged from 41.4 to 80.0 k with an average of 56.2 k depending on the camera position, and the FPS ranged from 82.1 to 84.0 with an average of 82.8. Thus, users experienced no hardware problems. Therefore, if the technical performance condition is satisfied in the VR environment, the proposed hand interaction will not cause VR sickness (Table 1).
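The budget check described in this paragraph amounts to two comparisons, sketched below. The thresholds are the recommendations quoted above (at most 100 k polygons, at least 75 FPS); the function name and the idea of checking the reported extremes rather than per-frame samples are assumptions made for illustration.

```python
MAX_POLYGONS = 100_000  # upper end of the recommended 50 to 100 k range
MIN_FPS = 75.0          # recommended minimum frame rate


def within_budget(max_polygons_seen: float, min_fps_seen: float) -> bool:
    """True if the worst-case polygon count and frame rate meet the recommendations."""
    return max_polygons_seen <= MAX_POLYGONS and min_fps_seen >= MIN_FPS


# Worst-case values reported for the proposed content: 80.0 k polygons, 82.1 FPS.
print(within_budget(80_000, 82.1))  # True
```

Checking the worst case rather than the average matters here, because a single camera position that exceeds the budget can be enough to produce visible delay.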

The simulator sickness questionnaire (SSQ) experiment was conducted to analyze the VR sickness of the proposed hand interaction more systematically and statistically [35,36]. In SSQ, the sickness that users can feel from the simulator was deduced in 16 items through various experiments. Participants were asked to select one of four severity levels between none and severe for such items as general discomfort and fatigue. In this study, the sickness was analyzed using raw data excluding weight for the absolute analysis of the proposed interaction [37]. Based on the aforementioned four experimental groups, the questions were designed to compare the values when only gaze was used and after experiencing the gaze-based hand interaction.
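As an illustration of the unweighted scoring, the sketch below sums one participant's 16 responses (0 = none to 3 = severe) into raw nausea, oculomotor, and disorientation subscores. The item-to-subscale mapping follows the commonly used SSQ layout, in which some items count toward two subscales; that the authors used exactly this mapping, and that their raw total is the plain sum of the 16 items, are assumptions. The sample responses are invented.

```python
# Item numbers (1-based) in the commonly used 16-item SSQ order:
# 1 general discomfort, 2 fatigue, 3 headache, 4 eyestrain, 5 difficulty focusing,
# 6 increased salivation, 7 sweating, 8 nausea, 9 difficulty concentrating,
# 10 fullness of head, 11 blurred vision, 12 dizzy (eyes open),
# 13 dizzy (eyes closed), 14 vertigo, 15 stomach awareness, 16 burping
NAUSEA = (1, 6, 7, 8, 9, 15, 16)
OCULOMOTOR = (1, 2, 3, 4, 5, 9, 11)
DISORIENTATION = (5, 8, 10, 11, 12, 13, 14)


def ssq_raw_scores(responses):
    """Return unweighted SSQ sums for one participant's 16 responses (each 0 to 3)."""
    if len(responses) != 16 or any(r not in (0, 1, 2, 3) for r in responses):
        raise ValueError("expected 16 responses, each between 0 and 3")

    def subtotal(items):
        return sum(responses[i - 1] for i in items)

    total = sum(responses)
    return {
        "nausea": subtotal(NAUSEA),
        "oculomotor": subtotal(OCULOMOTOR),
        "disorientation": subtotal(DISORIENTATION),
        "total": total,               # assumed: plain sum over the 16 items
        "mean_per_item": total / 16,
    }


# Invented participant: slight general discomfort and slight eyestrain only.
sample = [1, 0, 0, 1] + [0] * 12
print(ssq_raw_scores(sample))
```

The standard SSQ multiplies each subscore by a fixed weight; leaving the weights out, as this study does, keeps the values on the original 0 to 3 response scale so that the two interactions can be compared directly.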

Table 2 lists the results of the SSQ experiment. The values for hand interaction were slightly higher than those for the method using gaze only. However, most participants felt almost no VR sickness with either interaction (total mean, with the mean for each of the 16 items in parentheses: 1.05 (0.07) and 1.57 (0.1), slight or less). Among the detailed factors of nausea, oculomotor, and disorientation, the highest values (0.67, 1.0) were obtained for oculomotor in both interactions, but they were not at a level that can cause problems in the user’s interaction.

Consequently, various new interactions that combine hand motion and movement with gaze should be researched to provide users with satisfying and diverse experiences as well as immersive interactions in the virtual reality content of the mobile environment. The current situation, in which virtual reality input processing techniques are mostly limited to PC and console platforms and cannot be extended to the mobile environment, must be improved so that users can conveniently and easily experience virtual reality content. More specifically, we need to think about how to combine hand gestures and motions with the interaction that handles the movements and controls of objects based on the user’s gaze in order to increase immersion while reducing the VR sickness of users.

6. Conclusions

This study proposed gaze-based hand interaction considering various psychological factors, such as immersion and VR sickness, in the process of producing mobile platform virtual reality content. To this end, card-type interactive content that induces tension and concentration in users was produced as content suitable for the virtual reality environment of the mobile platform. Then, an environment was provided in which users can receive and concentrate on scenes with 3D effects using a mobile HMD regardless of place. A gaze-based hand interaction method was designed to improve the immersion of users in the process of controlling 3D objects and taking actions in the virtual space. Furthermore, the interaction method using gaze only and the interaction method to which the hand interface was added were applied separately to the same content, and the experimental results were derived after asking general users to experience them. Given that the proposed interactive content was found suitable as virtual reality content, the method of combining gaze with hand interaction improved user satisfaction and immersion compared to the interaction method using gaze only. When the detailed psychological factors were analyzed, 45.24% of respondents, the largest share among the items of improved immersion, inducement of interest, convenient control, and new experience, answered that hand interaction provided interaction with high immersion. Thus, it was found that presenting an environment that enables more direct control in the 3D virtual space is helpful for improving the immersion of content when producing virtual reality content of the mobile platform. Finally, it was verified that VR sickness due to hand interaction will not occur if the system requirements, such as FPS and the polygon count, are observed when producing virtual reality content in the mobile environment. The results of the survey experiment through SSQ showed that all respondents felt almost no VR sickness.

The conventional input processing of the mobile platform is providing an interface that mainly uses gaze, due to the limited environment. In this study, interaction combining the conventional gaze method with hand gestures and motions was proposed to improve the immersion of content, and the performance of the proposed method was evaluated. Even though hand interaction is very helpful when analyzed from the aspect of immersion improvement, a perfect development environment for the mobile platform has not yet been provided, due to the problem of installing separate devices. Therefore, it is important to design an interaction method in line with the degree of immersion that the content to be produced desires. In the future, the efficiency of various input processing techniques will be analyzed by experimenting with immersion, motion sickness, etc. through the interaction design as well as using various input devices that support the production of mobile platform virtual reality content.