A Study on Immersion and VR Sickness in Walking Interaction for Immersive Virtual Reality Applications

Abstract

1. Introduction

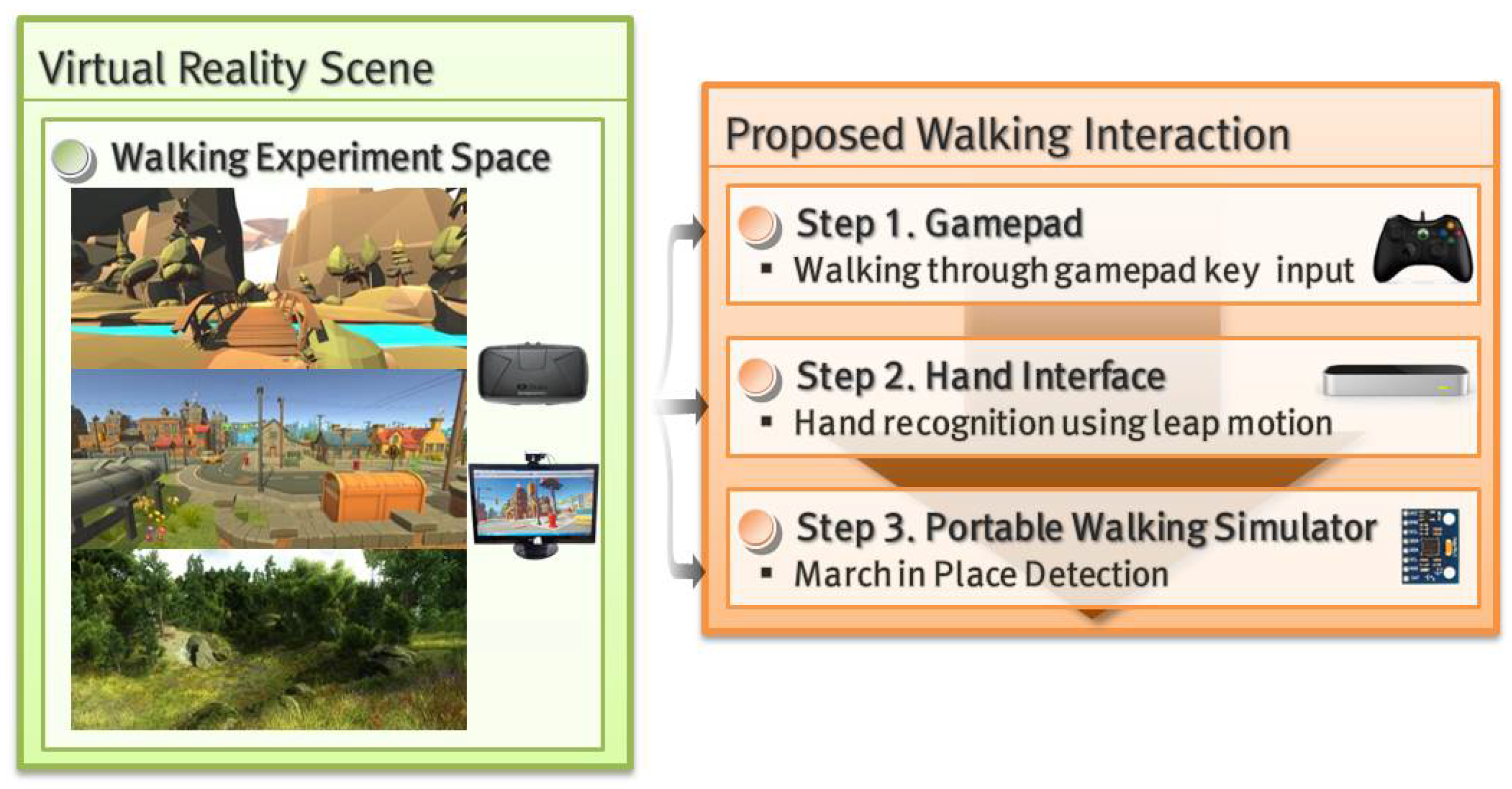

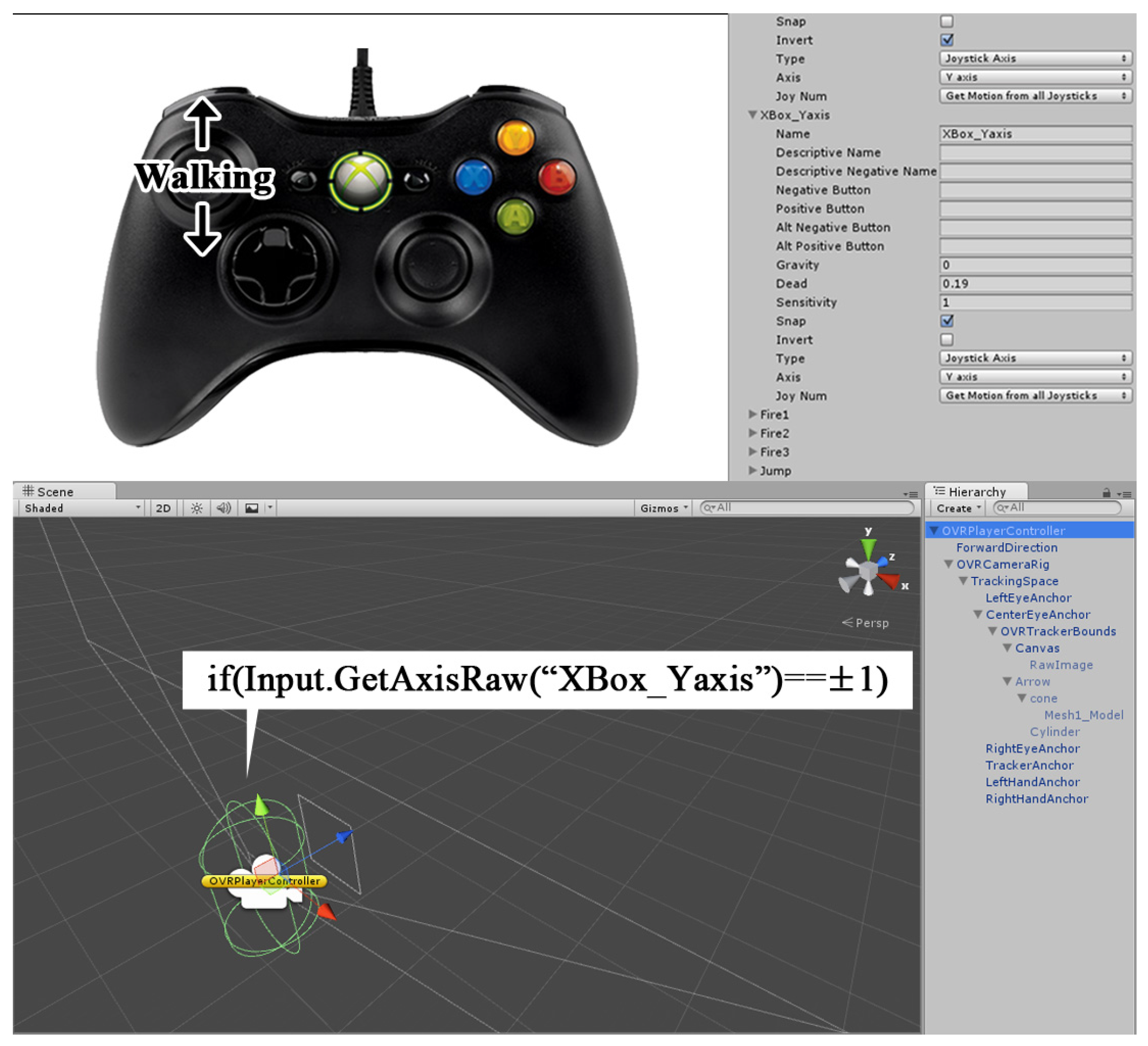

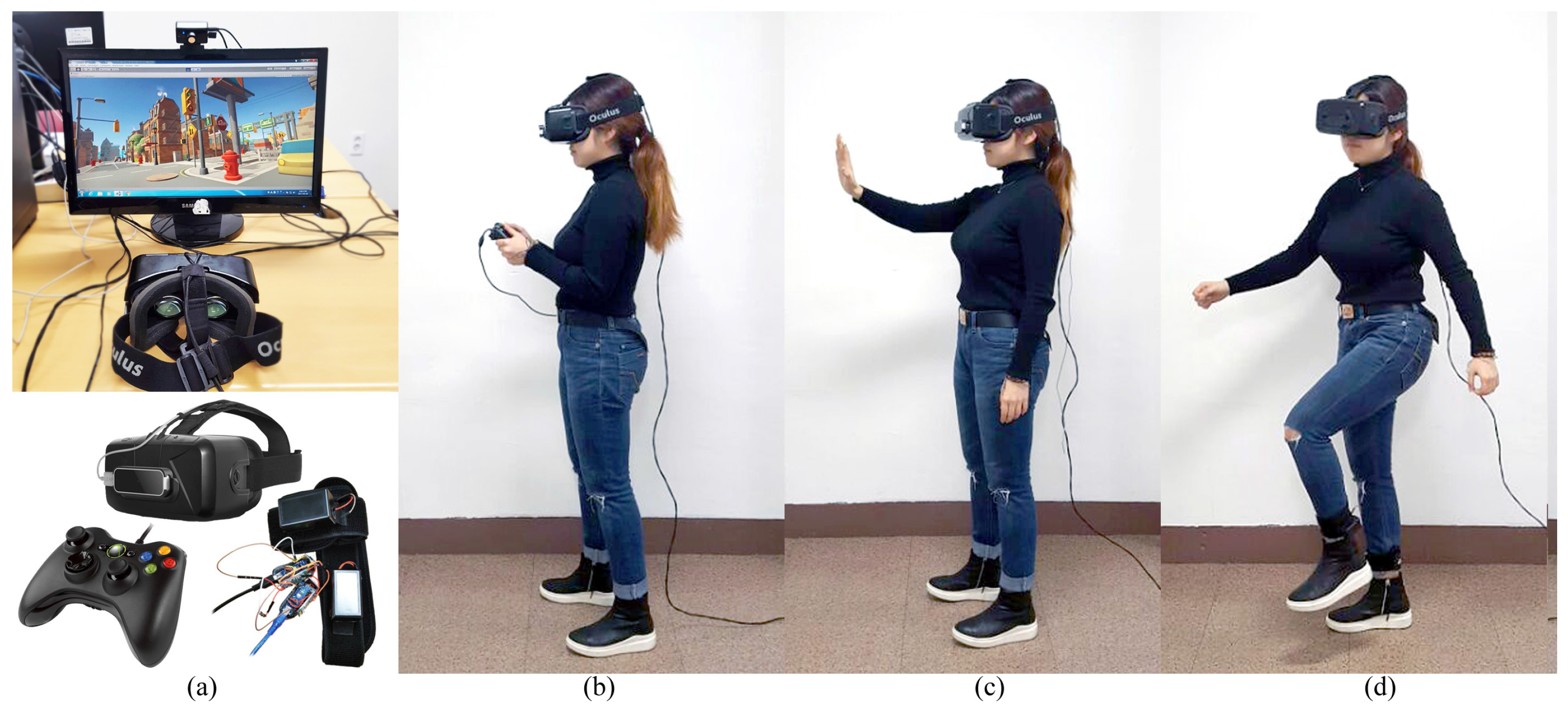

- Design interactions using a gamepad, which is the most popular input device in VR applications, including games.

- Design a hand interface based on Leap Motion that controls movement through hand gestures alone, expressing the user's intention more directly than a gamepad or keyboard.

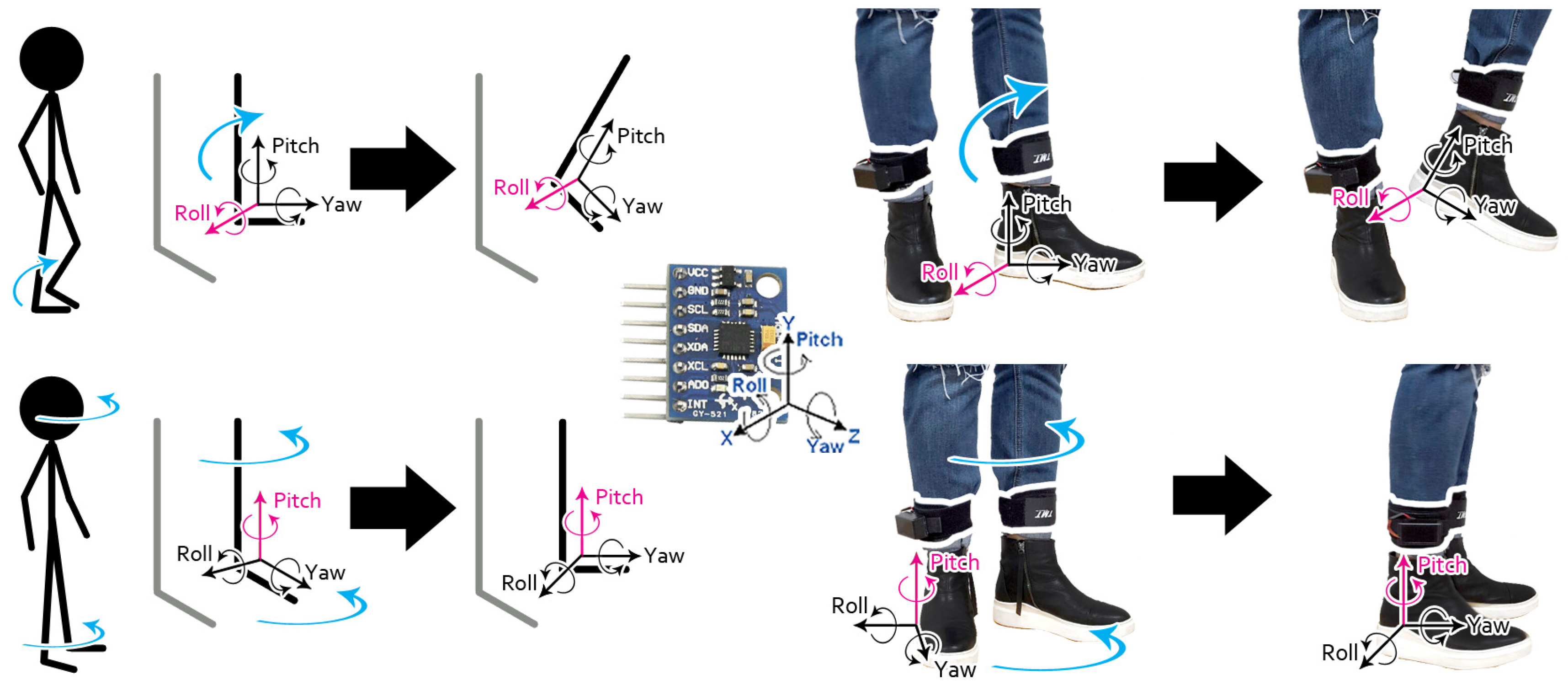

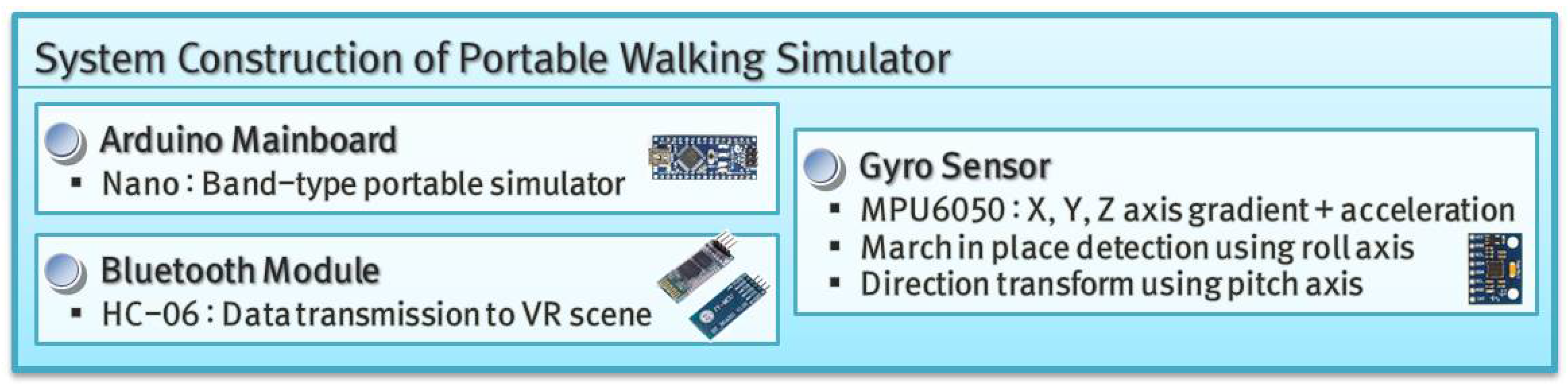

- Design a walking interaction using an Arduino-based portable walking simulator [16] that detects a march-in-place gesture and turns it into walking motion in VR applications.
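One simple way to turn a march-in-place signal into locomotion is to count steps with two thresholds (hysteresis) and let the recent step rate set the forward speed. The sketch below illustrates that idea only. The sensor model (one normalized foot-lift reading per sample), the class name `MarchInPlaceDetector`, the thresholds, and the stride length are all hypothetical assumptions, not the detection logic or values of the simulator in [16].

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class MarchInPlaceDetector:
    """Hypothetical march-in-place step detector (not the device logic of [16]).

    Assumes one normalized foot-lift reading per sample:
    0.0 means the foot is on the ground, 1.0 means it is fully raised.
    """
    lift_threshold: float = 0.6    # above this, the foot counts as raised (assumed)
    plant_threshold: float = 0.3   # below this, the foot counts as planted (assumed)
    stride_length_m: float = 0.7   # virtual distance per detected step (assumed)
    raised: bool = False
    step_times: List[float] = field(default_factory=list)

    def update(self, t: float, reading: float) -> bool:
        """Feed one sample taken at time t (s); return True when a step completes.

        The gap between the two thresholds keeps noise around a single
        threshold from being counted as extra steps.
        """
        if not self.raised and reading > self.lift_threshold:
            self.raised = True             # foot has left the ground
        elif self.raised and reading < self.plant_threshold:
            self.raised = False            # foot planted again: one full step
            self.step_times.append(t)
            return True
        return False

    def speed(self, now: float, window_s: float = 2.0) -> float:
        """Forward speed (m/s) from the steps detected in the last window_s seconds."""
        recent = [s for s in self.step_times if now - s <= window_s]
        return len(recent) * self.stride_length_m / window_s
```

In use, each new sensor sample is passed to `update`, and `speed(now)` is queried once per rendered frame to move the virtual camera forward; when the user stops marching, old steps fall out of the window and the speed decays to zero.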

2. Previous Works

3. Walking Interaction in Virtual Reality Applications

3.1. Interaction Overview

3.2. Gamepad

3.3. Hand Interface Based on Leap Motion

Algorithm 1. Walking interaction of hand interface based on Leap Motion.
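The following is a hypothetical sketch of how hand gestures can be mapped to walking commands; it is not a reconstruction of Algorithm 1. The `HandSample` type, the gestures (open palm pushed forward to walk, lateral offset to turn, fist to stop), the dead zone, and all speed limits are assumptions for illustration, and no Leap Motion SDK calls are used.

```python
from dataclasses import dataclass
from typing import Tuple

# All constants are illustrative assumptions, not values from Algorithm 1.
DEAD_ZONE_MM = 20.0     # ignore small, unintended hand drift around the rest pose
MAX_OFFSET_MM = 100.0   # offset at which the output saturates
MAX_SPEED_MPS = 1.5     # maximum forward walking speed
MAX_TURN_DPS = 60.0     # maximum turn rate in degrees per second


@dataclass
class HandSample:
    """Hypothetical per-frame hand features; not a Leap Motion SDK type."""
    forward_offset_mm: float   # palm displacement away from the user vs. a calibrated rest pose
    lateral_offset_mm: float   # palm displacement to the right (+) or left (-)
    is_open: bool              # True for an open palm, False for a closed fist


def _scaled(offset_mm: float) -> float:
    """Map an offset to [-1, 1], returning 0 inside the dead zone."""
    magnitude = abs(offset_mm)
    if magnitude <= DEAD_ZONE_MM:
        return 0.0
    ratio = min((magnitude - DEAD_ZONE_MM) / (MAX_OFFSET_MM - DEAD_ZONE_MM), 1.0)
    return ratio if offset_mm > 0 else -ratio


def hand_to_locomotion(sample: HandSample) -> Tuple[float, float]:
    """Return (forward speed in m/s, turn rate in deg/s) for one hand sample."""
    if not sample.is_open:
        return 0.0, 0.0                                  # a fist acts as a stop gesture
    speed = max(_scaled(sample.forward_offset_mm), 0.0) * MAX_SPEED_MPS  # forward only
    turn = _scaled(sample.lateral_offset_mm) * MAX_TURN_DPS
    return speed, turn
```

The dead zone is the main design choice here: without it, the natural tremor of a raised hand would produce constant small drifts in the virtual viewpoint, which is undesirable when VR sickness is a concern.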

3.4. Walking Simulator Using March-in-Place

4. Experimental Results and Analysis

4.1. Environment

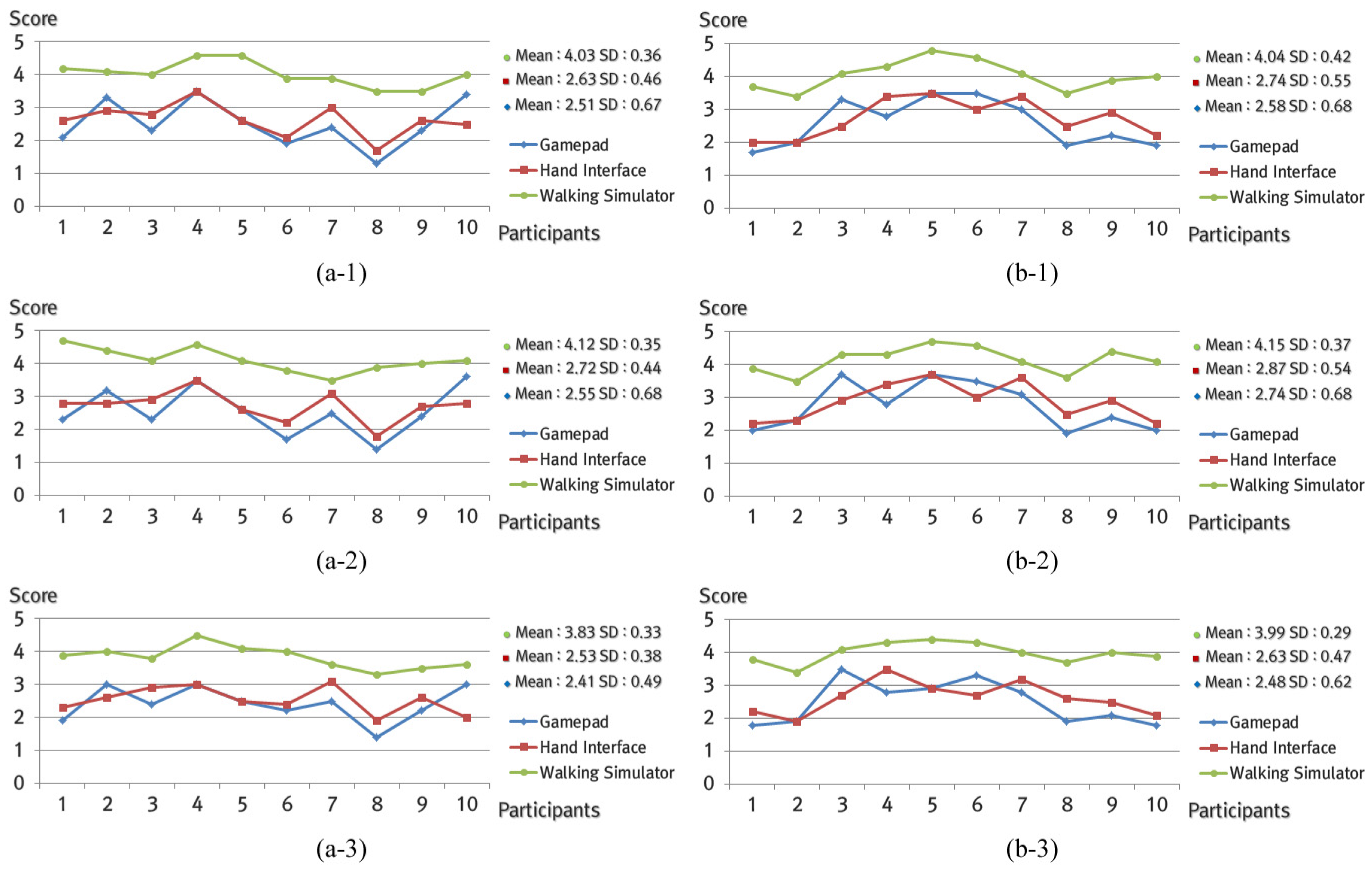

4.2. Immersion

4.3. Presence

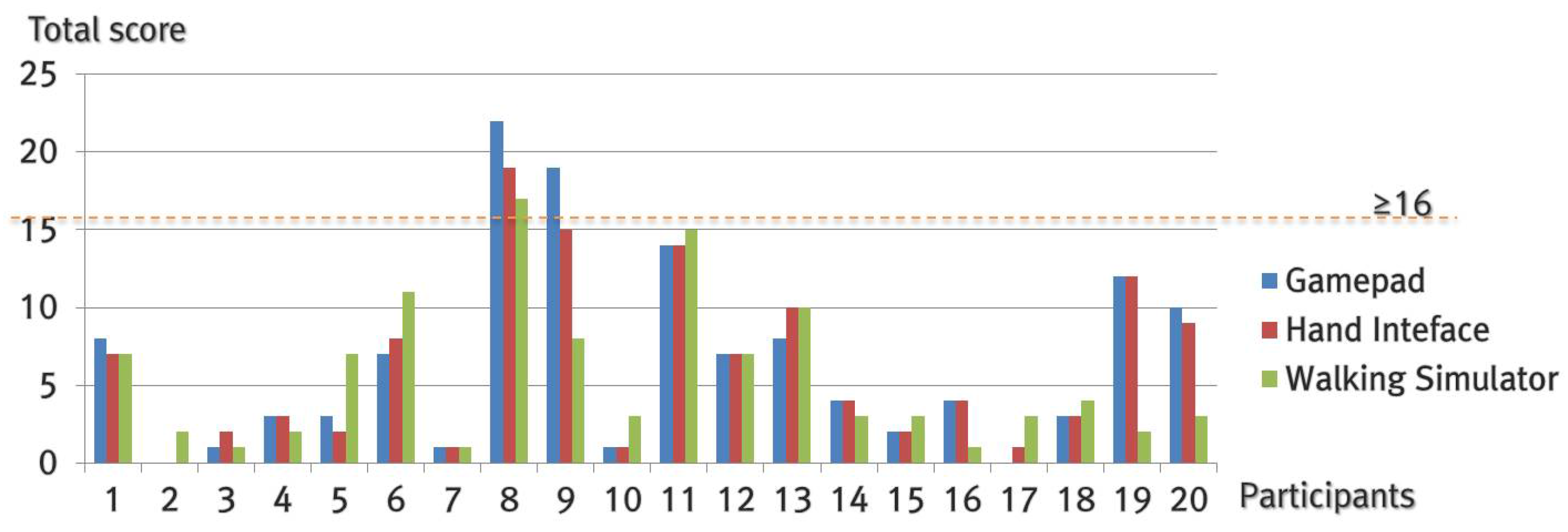

4.4. VR Sickness

5. Limitation and Discussion

6. Conclusions

Supplementary Materials

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Slater, M. Presence and the sixth sense. Presence Teleoper. Virtual Environ. 2002, 11, 435–439.

- Sanchez-Vives, M.V.; Slater, M. From presence to consciousness through virtual reality. Nat. Rev. Neurosci. 2005, 6, 332–339.

- Slater, M.; Sanchez-Vives, M.V. Enhancing Our Lives with Immersive Virtual Reality. Front. Robot. AI 2016, 3.

- Tanaka, N.; Takagi, H. Virtual Reality Environment Design of Managing Both Presence and Virtual Reality Sickness. J. Physiol. Anthropol. Appl. Hum. Sci. 2004, 23, 313–317.

- Sutherland, I.E. A Head-mounted Three Dimensional Display. In Proceedings of the Fall Joint Computer Conference (AFIPS’68, Part I), San Francisco, CA, USA, 9–11 December 1968; ACM: New York, NY, USA, 1968; pp. 757–764.

- Schissler, C.; Nicholls, A.; Mehra, R. Efficient HRTF-based Spatial Audio for Area and Volumetric Sources. IEEE Trans. Vis. Comput. Graph. 2016, 22, 1356–1366.

- Serafin, S.; Turchet, L.; Nordahl, R.; Dimitrov, S.; Berrezag, A.; Hayward, V. Identification of virtual grounds using virtual reality haptic shoes and sound synthesis. In Proceedings of the Eurohaptics 2010 Special Symposium, 1st ed.; Nijholt, A., Dijk, E.O., Lemmens, P.M.C., Luitjens, S., Eds.; University of Twente: Enschede, The Netherlands, 2010; Volume 10-01, pp. 61–70.

- Seinfeld, S.; Bergstrom, I.; Pomes, A.; Arroyo-Palacios, J.; Vico, F.; Slater, M.; Sanchez-Vives, M.V. Influence of Music on Anxiety Induced by Fear of Heights in Virtual Reality. Front. Psychol. 2016, 6, 1969.

- Zhao, W.; Chai, J.; Xu, Y.Q. Combining Marker-based Mocap and RGB-D Camera for Acquiring High-fidelity Hand Motion Data. In Proceedings of the ACM SIGGRAPH/Eurographics Symposium on Computer Animation; Eurographics Association (SCA’12), Aire-la-Ville, Switzerland, 11–13 July 2012; pp. 33–42.

- Tompson, J.; Stein, M.; LeCun, Y.; Perlin, K. Real-Time Continuous Pose Recovery of Human Hands Using Convolutional Networks. ACM Trans. Graph. 2014, 33, 169.

- Nordahl, R.; Berrezag, A.; Dimitrov, S.; Turchet, L.; Hayward, V.; Serafin, S. Preliminary Experiment Combining Virtual Reality Haptic Shoes and Audio Synthesis. In Proceedings of the 2010 International Conference on Haptics—Generating and Perceiving Tangible Sensations: Part II (EuroHaptics’10), Amsterdam, The Netherlands, 8–10 July 2010; Springer: Berlin/Heidelberg, Germany, 2010; pp. 123–129.

- Cheng, L.P.; Roumen, T.; Rantzsch, H.; Köhler, S.; Schmidt, P.; Kovacs, R.; Jasper, J.; Kemper, J.; Baudisch, P. TurkDeck: Physical Virtual Reality Based on People. In Proceedings of the 28th Annual ACM Symposium on User Interface Software & Technology (UIST’15), Charlotte, NC, USA, 11–15 November 2015; ACM: New York, NY, USA, 2015; pp. 417–426.

- Slater, M.; Sanchez-Vives, M.V. Transcending the Self in Immersive Virtual Reality. IEEE Comput. 2014, 47, 24–30.

- Kokkinara, E.; Kilteni, K.; Blom, K.J.; Slater, M. First Person Perspective of Seated Participants over a Walking Virtual Body Leads to Illusory Agency Over the Walking. Sci. Rep. 2016, 6, 28879.

- Antley, A.; Slater, M. The Effect on Lower Spine Muscle Activation of Walking on a Narrow Beam in Virtual Reality. IEEE Trans. Vis. Comput. Graph. 2011, 17, 255–259.

- Lee, J.; Jeong, K.; Kim, J. MAVE: Maze-based immersive virtual environment for new presence and experience. Comput. Anim. Virtual Worlds 2017.

- Hoffman, H.G. Physically touching virtual objects using tactile augmentation enhances the realism of virtual environments. In Proceedings of the IEEE 1998 Virtual Reality Annual International Symposium, Atlanta, GA, USA, 14–18 March 1998; IEEE Computer Society: Washington, DC, USA, 1998; pp. 59–63.

- Carvalheiro, C.; Nóbrega, R.; da Silva, H.; Rodrigues, R. User Redirection and Direct Haptics in Virtual Environments. In Proceedings of the 2016 ACM on Multimedia Conference (MM’16), Amsterdam, The Netherlands, 15–19 October 2016; ACM: New York, NY, USA, 2016; pp. 1146–1155.

- Burdea, G.C. Haptics issues in virtual environments. In Proceedings of the Computer Graphics International 2000, Geneva, Switzerland, 19–24 June 2000; IEEE Computer Society: Washington, DC, USA, 2000; pp. 295–302.

- Schorr, S.B.; Okamura, A. Three-dimensional skin deformation as force substitution: Wearable device design and performance during haptic exploration of virtual environments. IEEE Trans. Haptics 2017.

- Usoh, M.; Arthur, K.; Whitton, M.C.; Bastos, R.; Steed, A.; Slater, M.; Brooks, F.P., Jr. Walking > Walking-in-place > Flying, in Virtual Environments. In Proceedings of the 26th Annual Conference on Computer Graphics and Interactive Techniques (SIGGRAPH’99), Los Angeles, CA, USA, 8–13 August 1999; ACM Press/Addison-Wesley Publishing Co.: New York, NY, USA, 1999; pp. 359–364.

- Hayward, V.; Astley, O.R.; Cruz-Hernandez, M.; Grant, D.; Robles-De-La-Torre, G. Haptic interfaces and devices. Sens. Rev. 2004, 24, 16–29.

- Yano, H.; Miyamoto, Y.; Iwata, H. Haptic Interface for Perceiving Remote Object Using a Laser Range Finder. In Proceedings of the World Haptics 2009—Third Joint EuroHaptics Conference and Symposium on Haptic Interfaces for Virtual Environment and Teleoperator Systems (WHC’09), Salt Lake City, UT, USA, 18–20 March 2009; IEEE Computer Society: Washington, DC, USA, 2009; pp. 196–201.

- Metcalf, C.D.; Notley, S.V.; Chappell, P.H.; Burridge, J.H.; Yule, V.T. Validation and Application of a Computational Model for Wrist and Hand Movements Using Surface Markers. IEEE Trans. Biomed. Eng. 2008, 55, 1199–1210.

- Stollenwerk, K.; Vögele, A.; Sarlette, R.; Krüger, B.; Hinkenjann, A.; Klein, R. Evaluation of Markers for Optical Hand Motion Capture. In Proceedings of the 13. Workshop Virtuelle Realität und Augmented Reality der GI-Fachgruppe VR/AR, Bielefeld, Germany, 8–9 September 2016; Shaker Verlag: Aachen, Germany, 2016.

- Visell, Y.; Cooperstock, J.R.; Giordano, B.L.; Franinovic, K.; Law, A.; McAdams, S.; Jathal, K.; Fontana, F. A Vibrotactile Device for Display of Virtual Ground Materials in Walking. In Proceedings of the 6th International Conference on Haptics: Perception, Devices and Scenarios (EuroHaptics’08), Madrid, Spain, 11–13 June 2008; Springer: Berlin/Heidelberg, Germany, 2008; pp. 420–426.

- Sreng, J.; Bergez, F.; Legarrec, J.; Lécuyer, A.; Andriot, C. Using an Event-based Approach to Improve the Multimodal Rendering of 6DOF Virtual Contact. In Proceedings of the 2007 ACM Symposium on Virtual Reality Software and Technology (VRST’07), Newport Beach, CA, USA, 5–7 November 2007; ACM: New York, NY, USA, 2007; pp. 165–173.

- Cheng, L.P.; Lühne, P.; Lopes, P.; Sterz, C.; Baudisch, P. Haptic Turk: A Motion Platform Based on People. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI’14), Toronto, ON, Canada, 26 April–1 May 2014; ACM: New York, NY, USA, 2014; pp. 3463–3472.

- Vasylevska, K.; Kaufmann, H.; Bolas, M.; Suma, E.A. Flexible spaces: Dynamic layout generation for infinite walking in virtual environments. In Proceedings of the 2013 IEEE Symposium on 3D User Interfaces (3DUI), Orlando, FL, USA, 16–17 March 2013; IEEE Computer Society: Washington, DC, USA, 2013; pp. 39–42.

- Slater, M.; Usoh, M.; Steed, A. Taking steps: The influence of a walking technique on presence in virtual reality. ACM Trans. Comput.-Hum. Interact. 1995, 2, 201–219.

- Pfurtscheller, G.; Leeb, R.; Keinrath, C.; Friedman, D.; Neuper, C.; Guger, C.; Slater, M. Walking from thought. Brain Res. 2006, 1071, 145–152.

- Stoolfeather-Games. Low-Poly Landscape. 2016. Available online: http://www.stoolfeather.com/low-poly-series-landscape.html (accessed on 17 January 2017).

- Manufactura-K4. Cartoon Town. 2014. Available online: https://www.assetstore.unity3d.com/en/#!/content/17254 (accessed on 17 January 2017).

- Zatylny, P. Realistic Nature Environment. 2016. Available online: https://www.assetstore.unity3d.com/kr/#!/content/58429 (accessed on 17 January 2017).

- Wilcoxon, F. Individual Comparisons by Ranking Methods. Biom. Bull. 1945, 1, 80–83.

- Witmer, B.G.; Jerome, C.J.; Singer, M.J. The Factor Structure of the Presence Questionnaire. Presence Teleoper. Virtual Environ. 2005, 14, 298–312.

- Witmer, B.G.; Singer, M.J. Presence Questionnaire. 2004. Available online: http://w3.uqo.ca/cyberpsy/docs/qaires/pres/PQ_va.pdf (accessed on 20 March 2017).

- Kennedy, R.S.; Lane, N.E.; Berbaum, K.S.; Lilienthal, M.G. Simulator Sickness Questionnaire: An Enhanced Method for Quantifying Simulator Sickness. Int. J. Aviat. Psychol. 1993, 3, 203–220.

- Kennedy, R.S.; Lane, N.E.; Berbaum, K.S.; Lilienthal, M.G. Simulator Sickness Questionnaire. 2004. Available online: http://w3.uqo.ca/cyberpsy/docs/qaires/ssq/SSQ_va.pdf (accessed on 18 February 2017).

- Bouchard, S.; St-Jacques, J.; Renaud, P.; Wiederhold, B.K. Side effects of immersions in virtual reality for people suffering from anxiety disorders. J. Cyberther. Rehabil. 2009, 2, 127–137.

| Environment | Frames per second (fps), minimum | Frames per second (fps), maximum | Frames per second (fps), mean | Polygons (millions), minimum | Polygons (millions), maximum | Polygons (millions), mean |
|---|---|---|---|---|---|---|
| Low-poly Landscape | 83.1 | 108.3 | 88.7 | 0.10693 | 1.1015 | 0.60543 |
| Cartoon Town | 77.3 | 124.4 | 98.7 | 0.24053 | 0.6252 | 0.43125 |
| Nature Environment | 117.1 | 144.0 | 122.3 | 0.84647 | 2.1570 | 1.15134 |

| Compared pair | Significance probability (p-value) |
|---|---|
| Gamepad vs. hand interface | |
| Gamepad vs. walking simulator | |
| Hand interface vs. walking simulator | |
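The reference list cites Wilcoxon (1945), which suggests that the pairwise p-values above come from a Wilcoxon test. Assuming paired per-participant scores (each participant using every interaction method), the signed-rank variant applies. The following is a minimal pure-Python sketch of that test using the large-sample normal approximation without tie or continuity correction; the function name is hypothetical, and for small samples a library routine such as `scipy.stats.wilcoxon` with exact p-values is preferable.

```python
import math
from typing import Sequence, Tuple


def wilcoxon_signed_rank(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Two-sided Wilcoxon signed-rank test for paired samples (normal approximation).

    Zero differences are dropped and tied |differences| receive average ranks.
    Returns (W, p), where W is the smaller of the positive and negative rank sums.
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    if n == 0:
        return 0.0, 1.0                      # no non-zero differences: no evidence of a shift
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:                             # assign average ranks to runs of tied |d|
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1           # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    w = min(w_plus, w_minus)
    mean = n * (n + 1) / 4                   # expected rank sum under H0
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))     # two-sided: 2 * (1 - Phi(|z|))
    return w, p
```

For the table above, the test would be called once per compared pair (for example, gamepad scores against hand-interface scores from the same participants), giving three p-values.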

| Subscale | Method | Mean, Low Poly Landscape | Mean, Cartoon Town | Mean, Nature Environment | SD, Low Poly Landscape | SD, Cartoon Town | SD, Nature Environment |
|---|---|---|---|---|---|---|---|
| Total | G | 111.5 (5.87) | 112.2 (5.91) | 111.1 (5.85) | 3.44 | 3.79 | 3.70 |
| | H | 114.6 (6.03) | 115.3 (6.07) | 114.2 (6.01) | 3.35 | 3.47 | 3.52 |
| | S | 118.3 (6.23) | 119.3 (6.28) | 117.9 (6.20) | 5.33 | 5.16 | 5.09 |
| Realism | G | 39.0 (5.57) | 39.7 (5.67) | 38.6 (5.51) | 1.84 | 2.00 | 1.85 |
| | H | 41.5 (5.93) | 42.2 (6.03) | 41.1 (5.87) | 1.50 | 1.33 | 1.51 |
| | S | 44.7 (6.39) | 45.4 (6.49) | 44.0 (6.29) | 2.24 | 2.15 | 1.61 |
| Possibility to act | G | 25.5 (6.38) | 25.5 (6.38) | 25.5 (6.38) | 1.02 | 1.02 | 1.02 |
| | H | 25.2 (6.30) | 25.2 (6.30) | 25.2 (6.30) | 0.98 | 0.98 | 0.98 |
| | S | 25.1 (6.28) | 25.1 (6.28) | 25.1 (6.28) | 1.51 | 1.51 | 1.51 |
| Quality of interface | G | 17.4 (5.80) | 17.4 (5.80) | 17.4 (5.80) | 1.36 | 1.36 | 1.36 |
| | H | 17.8 (5.93) | 17.8 (5.93) | 17.8 (5.93) | 1.25 | 1.25 | 1.25 |
| | S | 18.0 (6.00) | 18.0 (6.00) | 18.0 (6.00) | 1.48 | 1.48 | 1.48 |
| Possibility to examine | G | 18.2 (6.07) | 18.2 (6.07) | 18.2 (6.07) | 1.24 | 1.24 | 1.24 |
| | H | 18.2 (6.07) | 18.2 (6.07) | 18.2 (6.07) | 1.25 | 1.25 | 1.25 |
| | S | 18.3 (6.10) | 18.6 (6.20) | 18.6 (6.20) | 1.27 | 1.11 | 1.17 |
| Self-evaluation of performance | G | 11.4 (5.70) | 11.4 (5.70) | 11.4 (5.70) | 1.02 | 1.02 | 1.02 |
| | H | 11.9 (5.95) | 11.9 (5.95) | 11.9 (5.95) | 0.94 | 0.94 | 0.94 |
| | S | 12.2 (6.10) | 12.2 (6.10) | 12.2 (6.10) | 1.17 | 1.17 | 1.17 |

G, H, and S denote the gamepad, hand interface, and walking simulator, respectively. Means are raw scores; the values in parentheses are the raw score divided by the number of items in the subscale.
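The totals in the presence table are the sums of the five subscale scores, and each value in parentheses is a raw score divided by its item count. The sketch below makes that relationship explicit. The item counts (7, 4, 3, 3, 2; 19 in total) are inferred from the ratios in the table (for example, 39.0 / 5.57 ≈ 7) rather than stated in this text, and `pq_summary` is a hypothetical helper name.

```python
from typing import Dict, Tuple

# Items per Presence Questionnaire subscale, inferred from the ratio between each raw
# mean and its per-item value in the table above (assumption, e.g. 39.0 / 5.57 ~ 7).
PQ_ITEMS: Dict[str, int] = {
    "Realism": 7,
    "Possibility to act": 4,
    "Quality of interface": 3,
    "Possibility to examine": 3,
    "Self-evaluation of performance": 2,
}


def pq_summary(raw_scores: Dict[str, float]) -> Dict[str, Tuple[float, float]]:
    """Return {subscale: (raw score, per-item mean)}, plus a 'Total' entry.

    The total is the sum of the five subscale scores, spread over all 19 items.
    """
    summary = {name: (raw, raw / PQ_ITEMS[name]) for name, raw in raw_scores.items()}
    total = sum(raw_scores.values())
    summary["Total"] = (total, total / sum(PQ_ITEMS.values()))
    return summary
```

Feeding in the gamepad scores for Low Poly Landscape (39.0, 25.5, 17.4, 18.2, 11.4) reproduces the table's total of 111.5 and its per-item value of 5.87.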

| Measure | Method | Mean | Standard deviation (SD) | Minimum | Maximum |
|---|---|---|---|---|---|
| Original SSQ (weighted) | | | | | |
| Total | G | 31.98 | 28.67 | 0 | 89.76 |
| | H | 31.04 | 25.23 | 0 | 82.28 |
| | S | 28.80 | 22.03 | 0 | 74.80 |
| Nausea | G | 13.36 | 14.90 | 0 | 38.16 |
| | H | 12.40 | 13.86 | 0 | 38.16 |
| | S | 12.89 | 13.92 | 0 | 57.24 |
| Oculomotor | G | 26.90 | 24.15 | 0 | 68.22 |
| | H | 25.39 | 19.95 | 0 | 60.64 |
| | S | 24.64 | 17.76 | 0 | 60.64 |
| Disorientation | G | 50.11 | 51.22 | 0 | 167.04 |
| | H | 50.81 | 47.87 | 0 | 167.04 |
| | S | 43.15 | 37.33 | 0 | 125.28 |
| Alternative SSQ (raw) | | | | | |
| Total | G | 6.45 | 6.10 | 0 | 22 |
| | H | 6.20 | 5.32 | 0 | 19 |
| | S | 5.50 | 4.57 | 0 | 17 |
| Nausea | G | 1.40 | 1.56 | 0 | 4 |
| | H | 1.30 | 1.45 | 0 | 4 |
| | S | 1.30 | 1.49 | 0 | 6 |
| Oculomotor | G | 3.55 | 3.19 | 0 | 10 |
| | H | 3.35 | 2.63 | 0 | 8 |
| | S | 3.25 | 2.34 | 0 | 8 |
| Disorientation | G | 3.60 | 3.68 | 0 | 12 |
| | H | 3.65 | 3.44 | 0 | 13 |
| | S | 3.10 | 2.68 | 0 | 9 |
| After 180 s | G | 7.15 | 7.44 | 0 | 26 |
| | H | 6.75 | 6.58 | 0 | 22 |
| | S | 5.90 | 5.10 | 1 | 21 |
| After 300 s | G | 8.05 | 7.59 | 1 | 32 |
| | H | 7.60 | 6.98 | 1 | 28 |
| | S | 6.40 | 5.44 | 1 | 26 |
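The weighted rows of the SSQ table follow from the raw subscale scores through the fixed conversion weights of Kennedy et al. (1993): 9.54 for nausea, 7.58 for oculomotor, 13.92 for disorientation, and 3.74 for the total. Because the conversion is linear, applying it to the mean raw scores of the gamepad (G) rows reproduces the reported weighted means within rounding, for example 1.40 × 9.54 ≈ 13.36 and (1.40 + 3.55 + 3.60) × 3.74 ≈ 31.98. The function name below is a hypothetical illustration.

```python
from typing import Dict

# Conversion weights of the original Simulator Sickness Questionnaire (Kennedy et al., 1993).
NAUSEA_WEIGHT = 9.54
OCULOMOTOR_WEIGHT = 7.58
DISORIENTATION_WEIGHT = 13.92
TOTAL_WEIGHT = 3.74


def weighted_ssq(nausea_raw: float, oculomotor_raw: float,
                 disorientation_raw: float) -> Dict[str, float]:
    """Convert raw SSQ subscale sums (symptoms rated 0-3) into weighted scores.

    The weighted total uses the sum of the three raw subscale scores, so
    symptoms that belong to two subscales contribute twice.
    """
    return {
        "Nausea": nausea_raw * NAUSEA_WEIGHT,
        "Oculomotor": oculomotor_raw * OCULOMOTOR_WEIGHT,
        "Disorientation": disorientation_raw * DISORIENTATION_WEIGHT,
        "Total": (nausea_raw + oculomotor_raw + disorientation_raw) * TOTAL_WEIGHT,
    }
```

Because the weighted total is built from the three overlapping subscale sums, it cannot be obtained by scaling the alternative raw total (6.45 × 3.74 ≈ 24.1, not 31.98).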

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lee, J.; Kim, M.; Kim, J. A Study on Immersion and VR Sickness in Walking Interaction for Immersive Virtual Reality Applications. Symmetry 2017, 9, 78. https://doi.org/10.3390/sym9050078
