A Case Study on Iteratively Assessing and Enhancing Wearable User Interface Prototypes

Abstract

1. Introduction

- Advanced wearable UI prototypes—We design and introduce four wearable UI prototypes including a joystick-embedded UI, a potentiometer-embedded UI, a motion-gesture UI and an infrared (IR) based contactless UI to cover both contact and contactless modalities (Section 3).

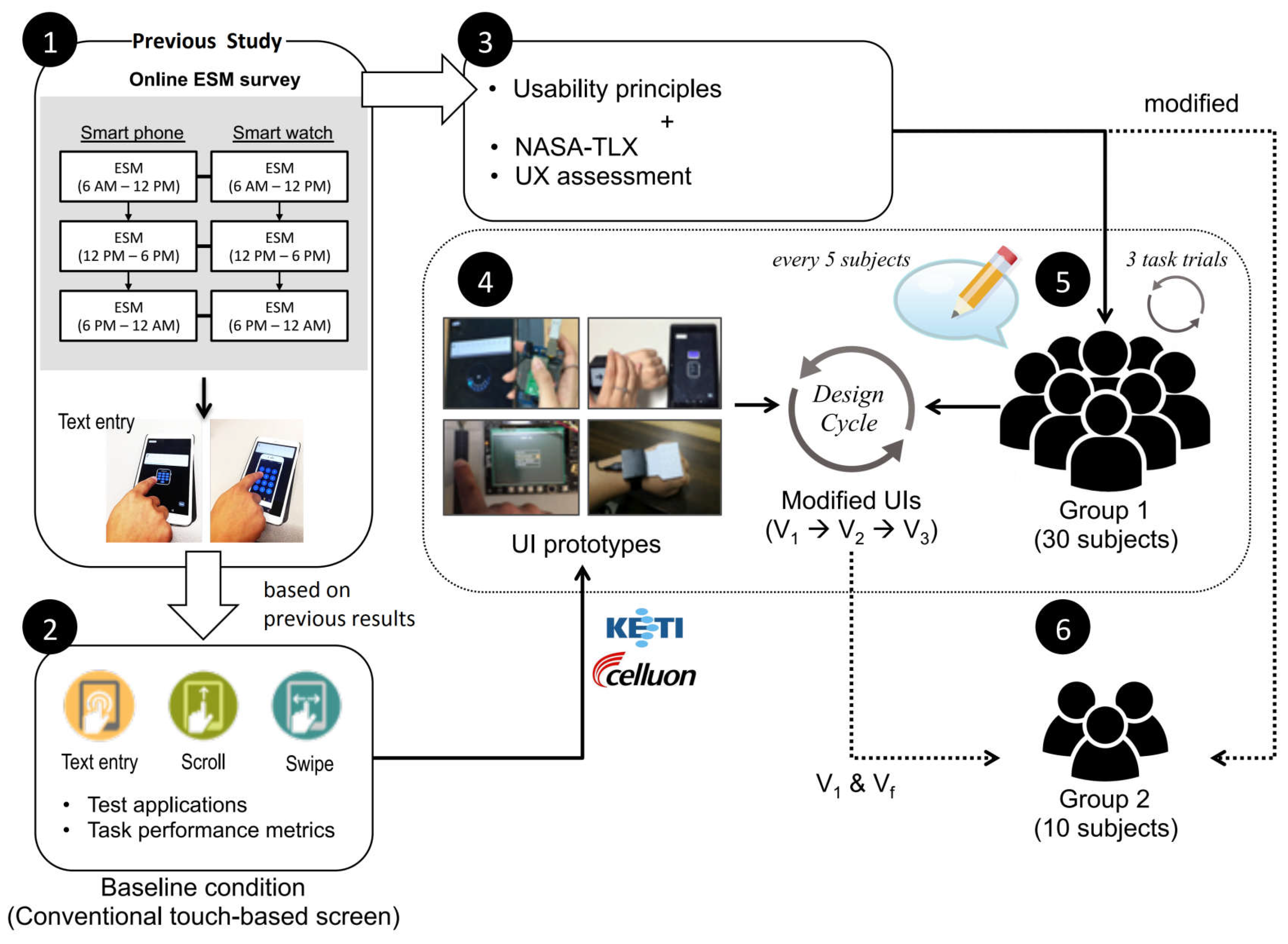

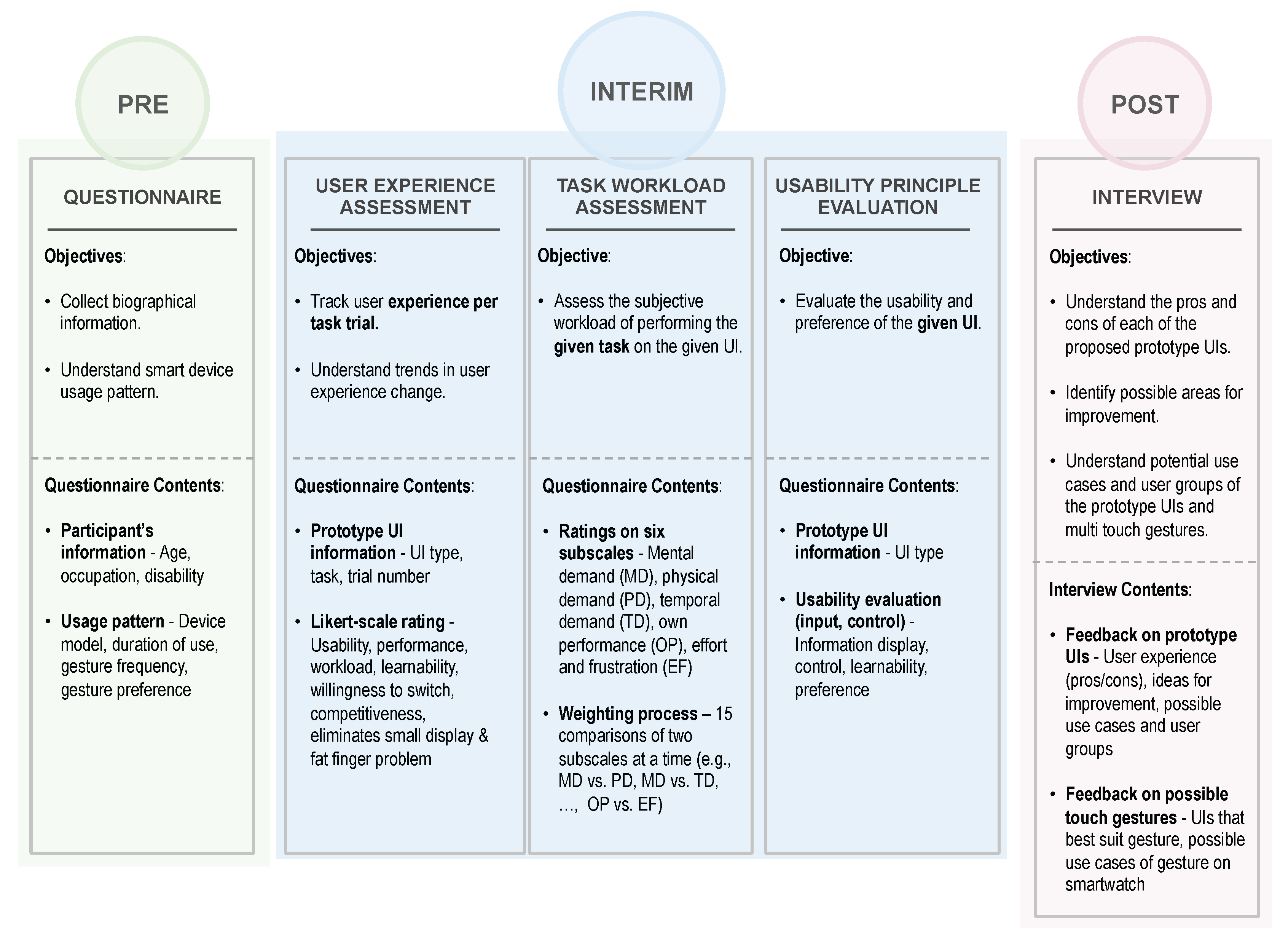

- Conceptual test framework—We present a conceptual framework for testing and evaluating futuristic wearable devices. The presented test framework is designed to iteratively test, evaluate, and improve the usability of target prototypes (Section 4).

- Design implications and recommendations—We identify strengths and weaknesses of each prototype to suggest design implications for a more usable UI and recommend user-interaction methods for future work (Section 5.3).

2. Related Work

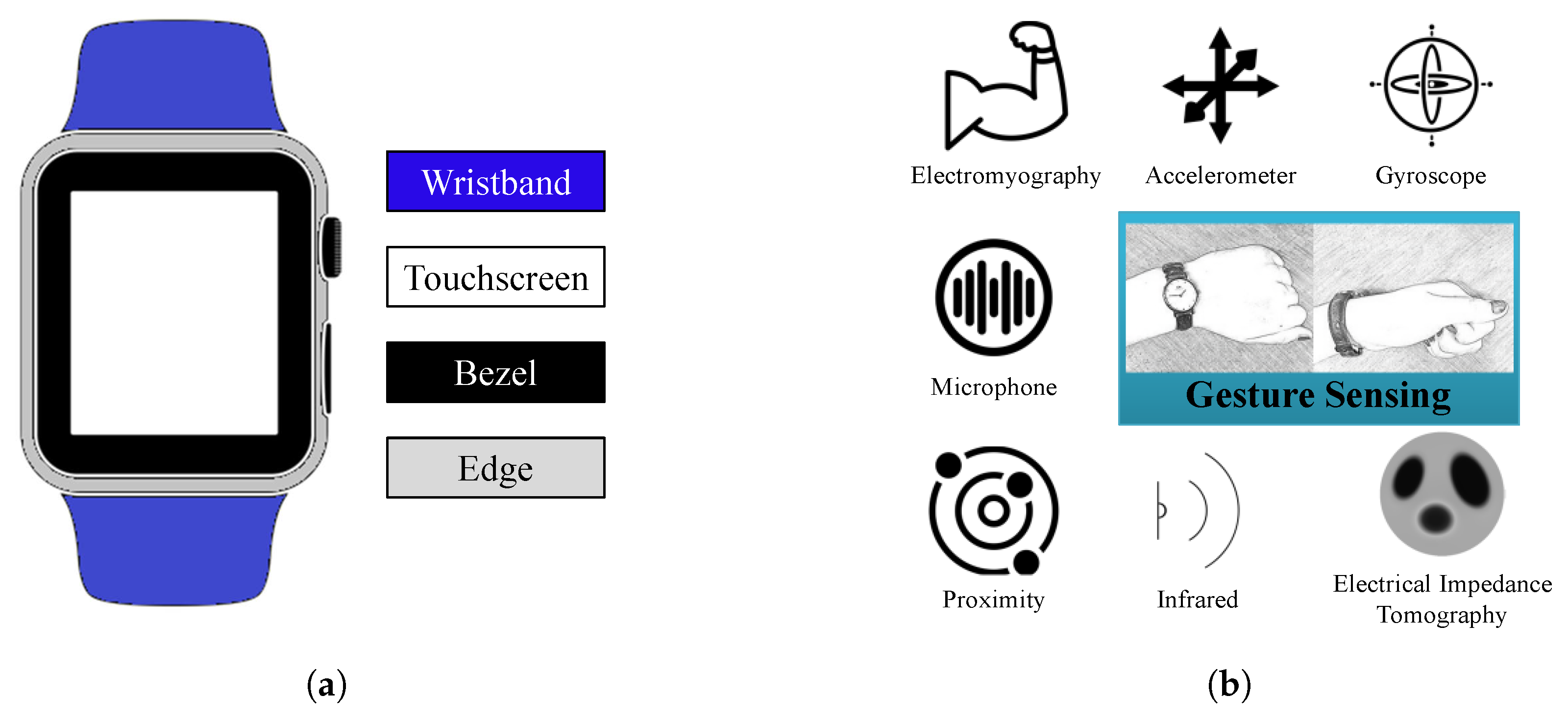

2.1. Contact-Based Interaction

2.2. Contactless Interaction

3. Design and Development of Advanced Wearable UI Prototypes

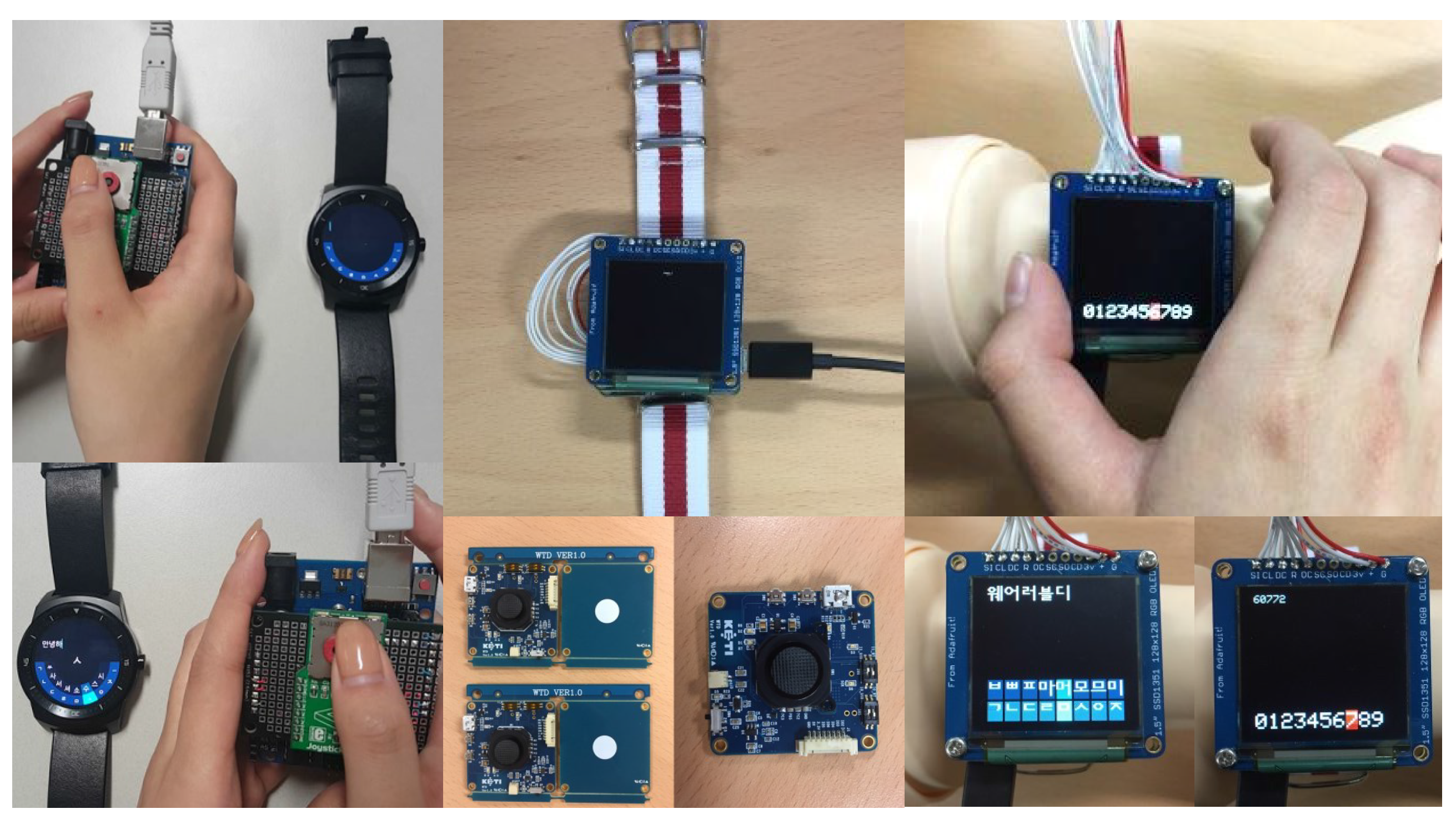

3.1. Joystick-Embedded UI

3.2. Potentiometer-Embedded UI

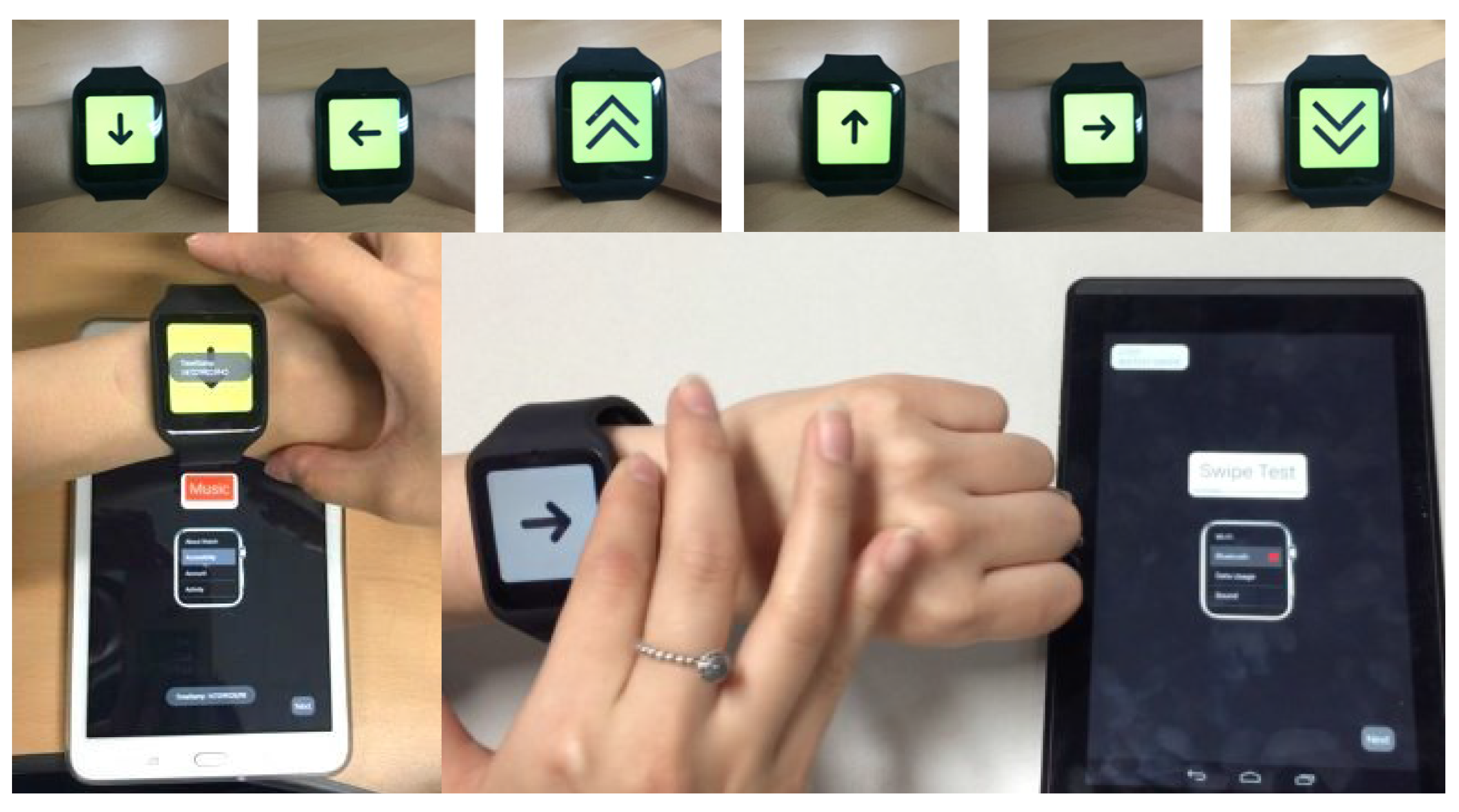

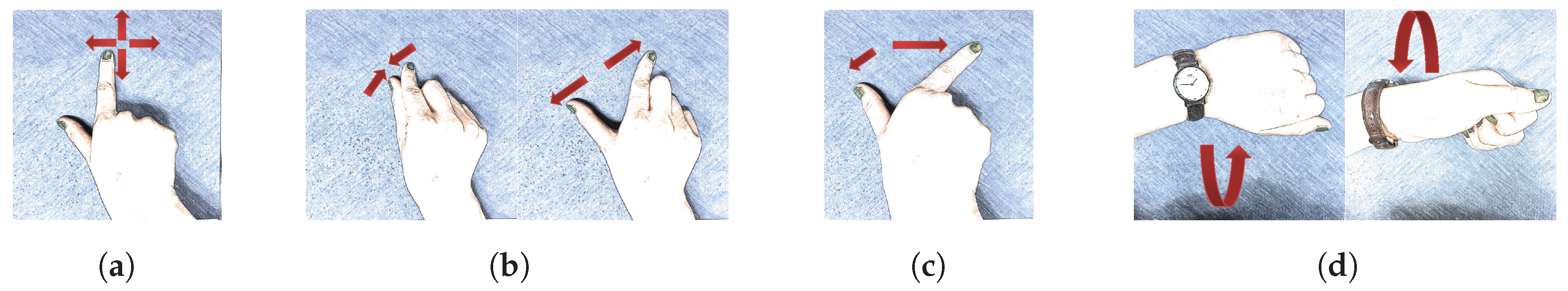

3.3. Motion-Gesture Based UI

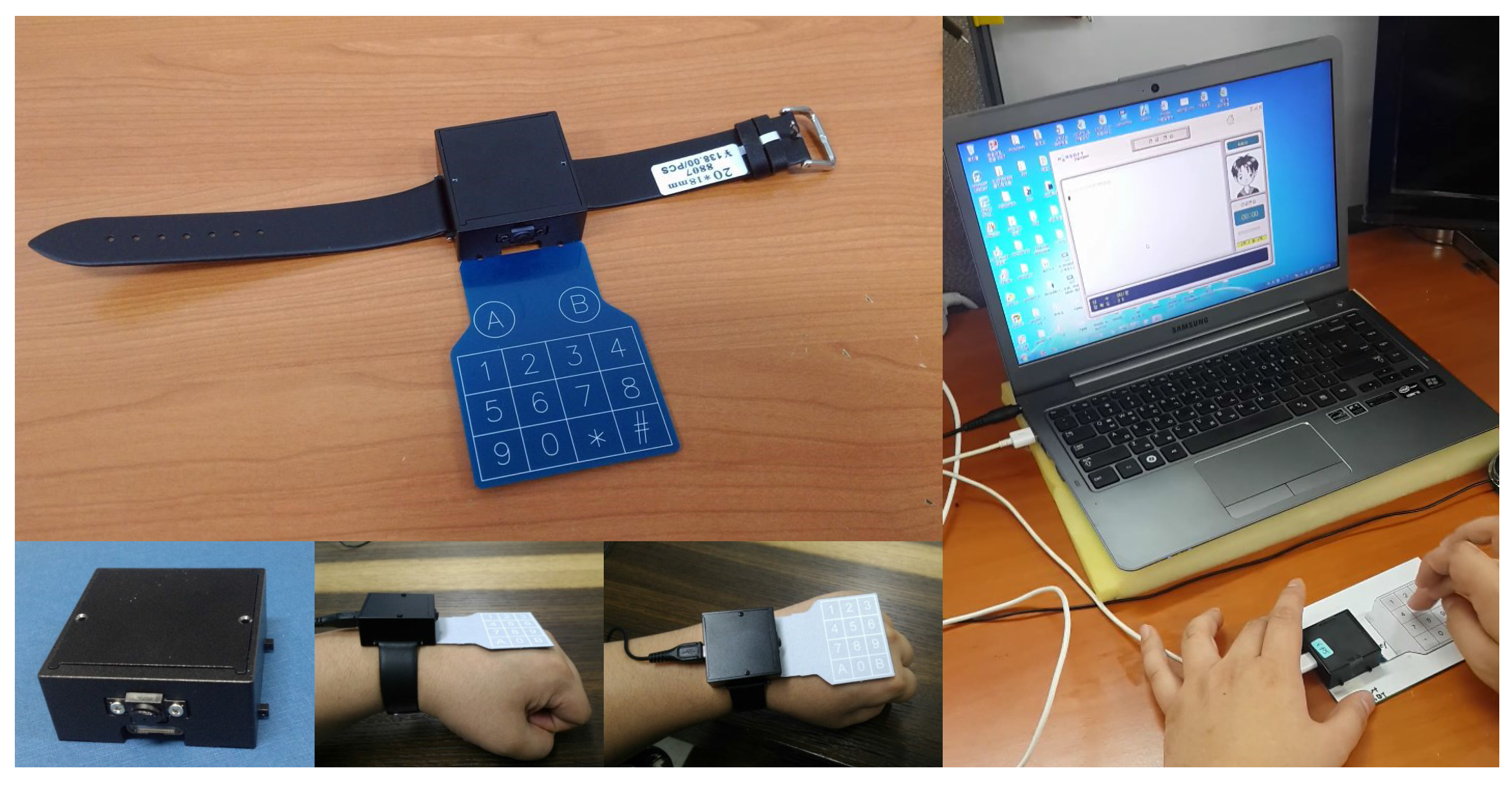

3.4. IR-Based UI

4. A Case Study with UI Prototypes

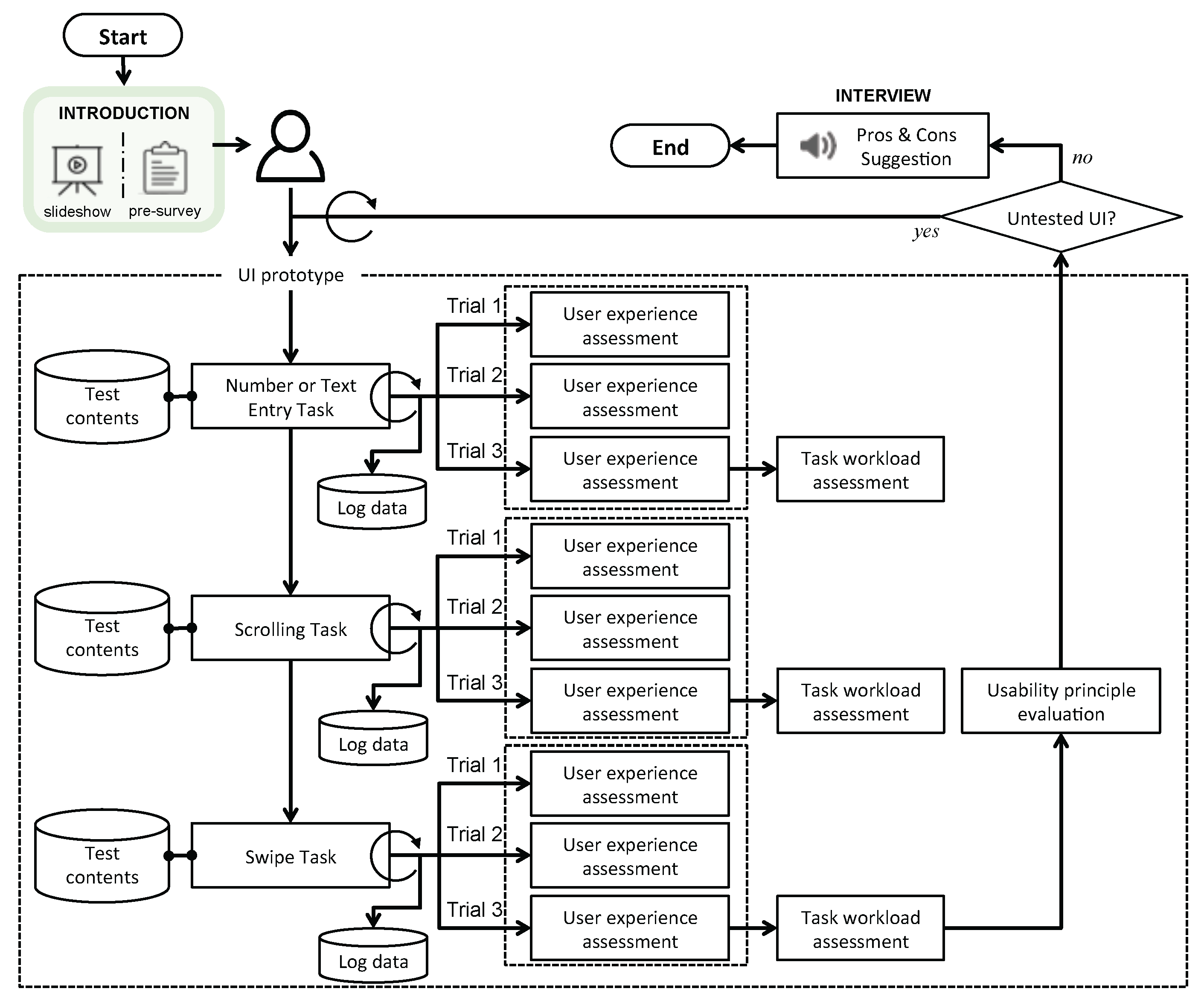

4.1. Test Framework

4.2. Experimental Section

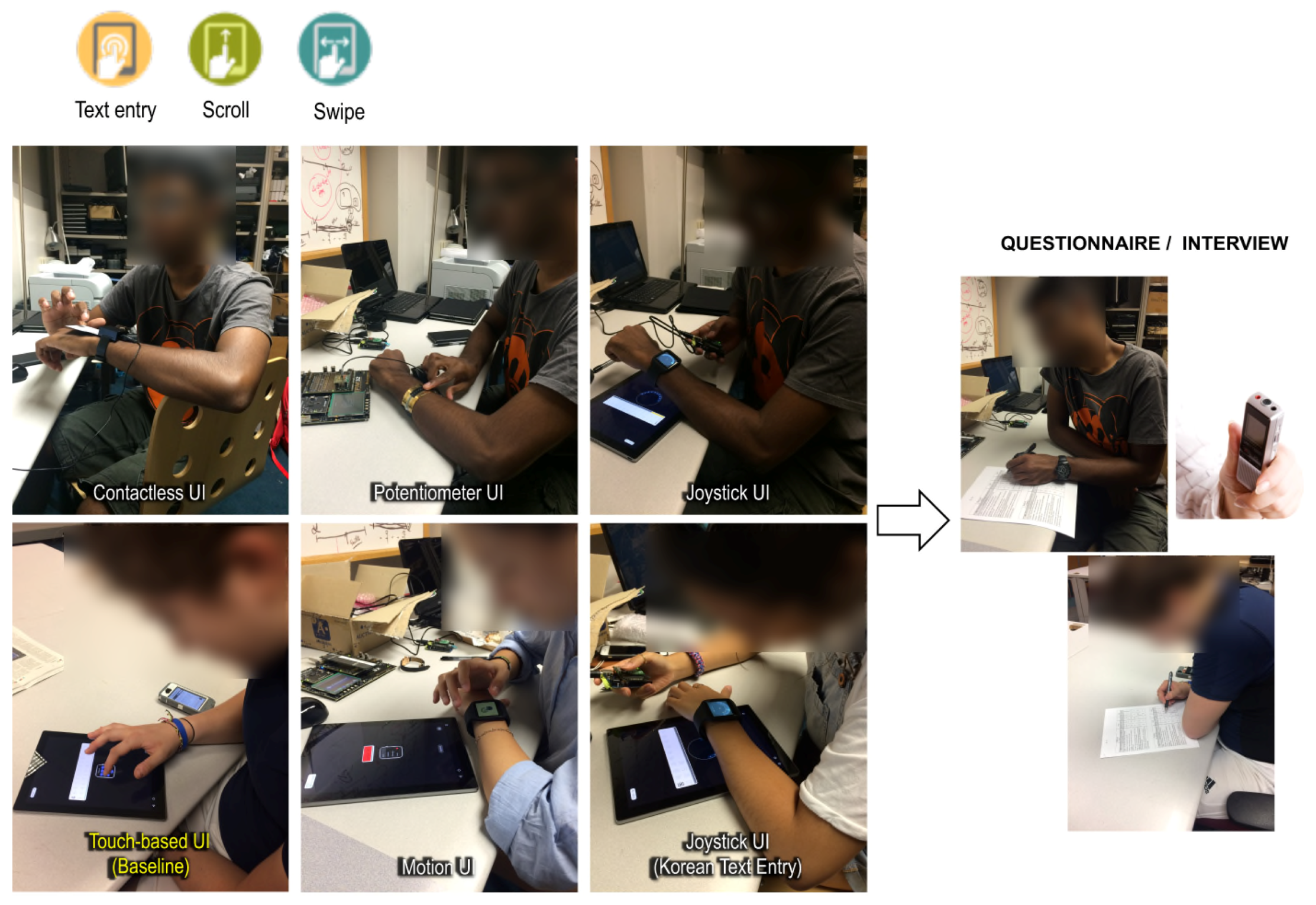

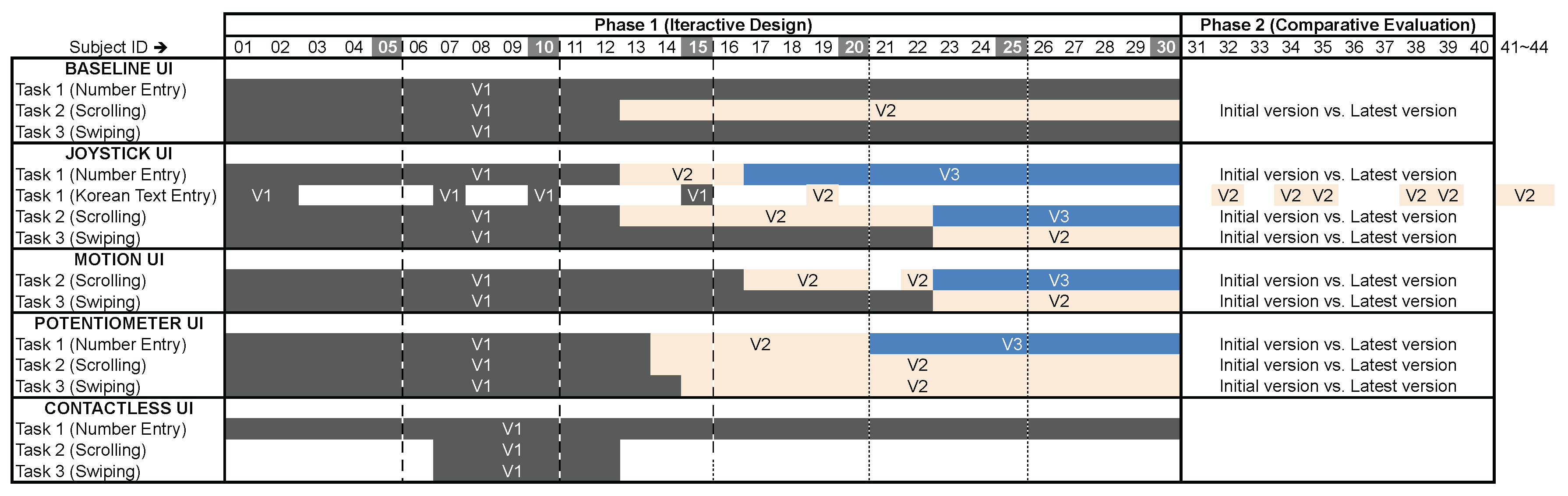

- In the first phase of the experiment (➎ in Figure 6), participants used the test applications to perform the series of interactive tasks with the given prototypes three times. Then we collected participant comments, refined the prototypes based on these usability test results and again had study participants test and evaluate the newer versions. This process was performed for each experiment session of more than five participants (M = 13, SD = 7 per UI version).

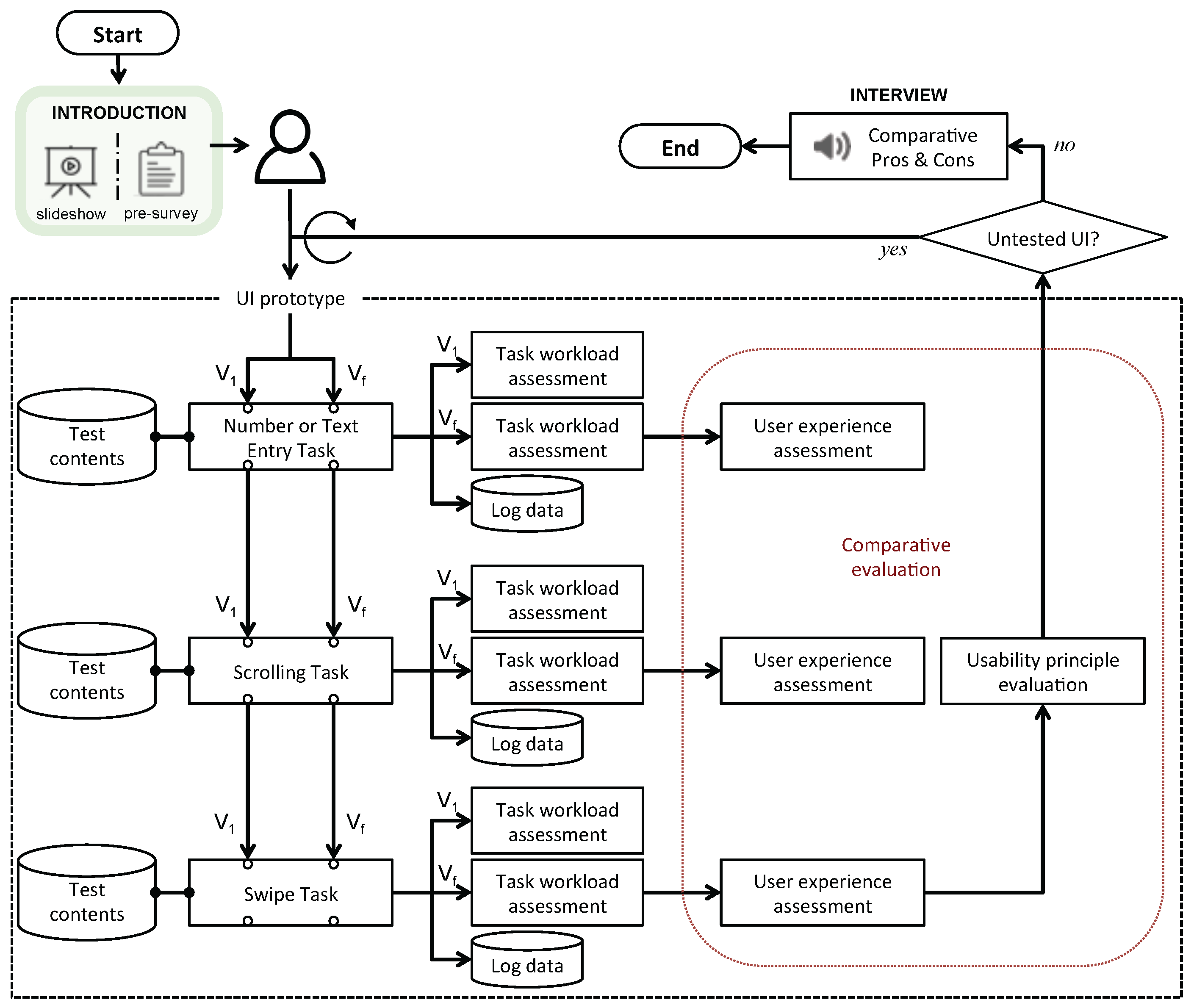

- In the second phase of the experiment (➏ in Figure 6), we recruited a smaller population of new human subjects. In this phase, the study participants were asked to perform comparative evaluations of the initial and final versions of given prototypes, aiming to demonstrate the improvements to the UI and of the UX.

4.3. Study Design

- Complete three interactive tasks by using the provided tablet and UI prototypes for smartwatches. The tasks will involve number entry, scrolling and swiping. The UI prototypes are a touchscreen-based baseline on the tablet, joystick-embedded, potentiometer-embedded, motion-gesture based and contactless IR-based UIs. The expected duration of this study, including the questionnaire sessions following the task execution, will take approximately 2.5 h in total.

- Fill out a variety of questionnaires following these conditions:

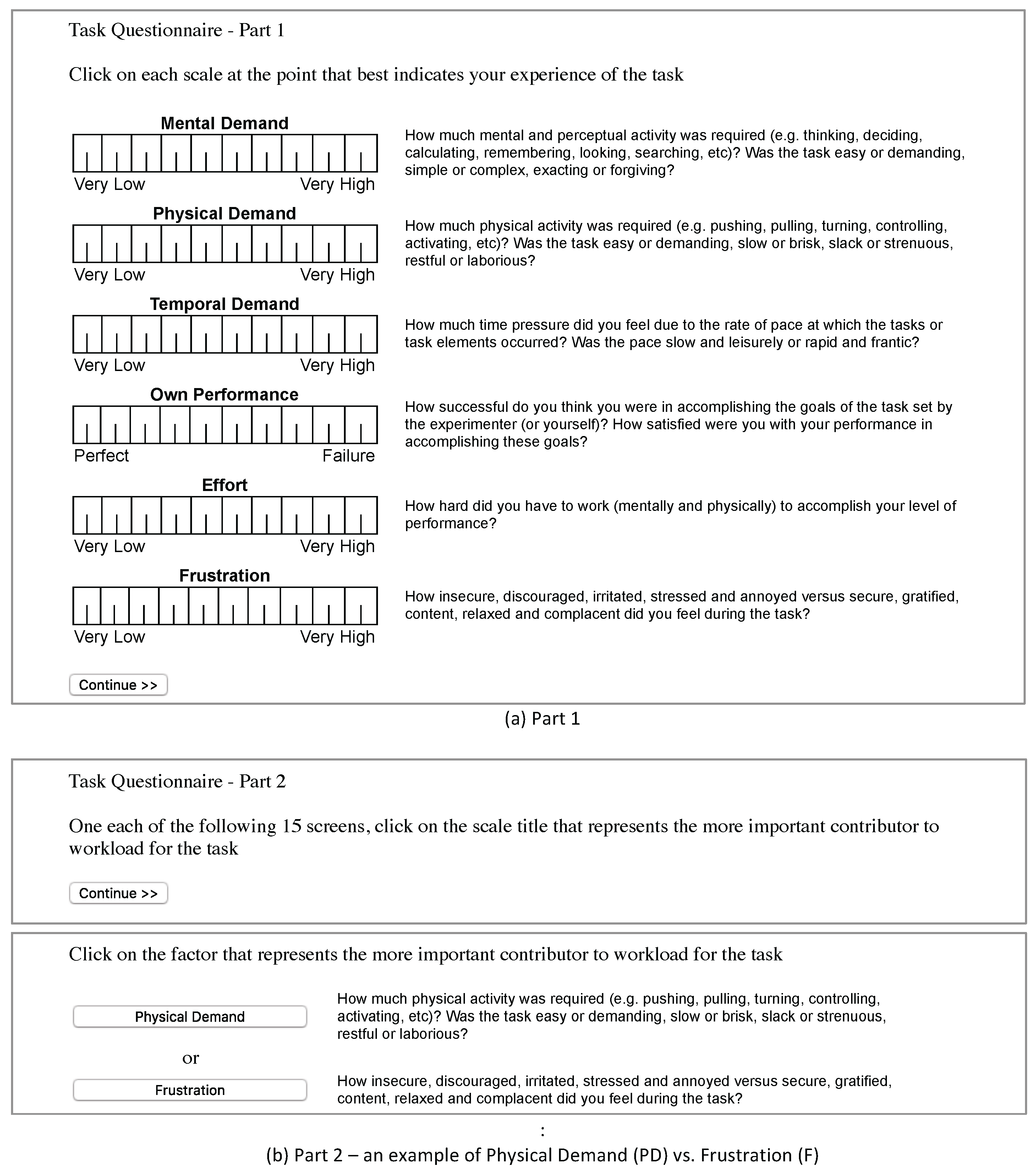

- The main user experience survey after completing each task trial. The expected duration of this survey is no longer than 30 s.

- The NASA task load index survey after completing a task three times. The expected duration of this survey is no longer than five minutes.

- The usability principle evaluation after completing all tasks for the UI prototype. The expected duration of this survey is no longer than two minutes.

- Participate in a post-experiment interview about your experience with the different UI prototypes and the multi-touch gestures. The expected duration of the interview session will be no longer than 45 min. We will be taking an audio recording of the interview for evaluation purposes with your permission.

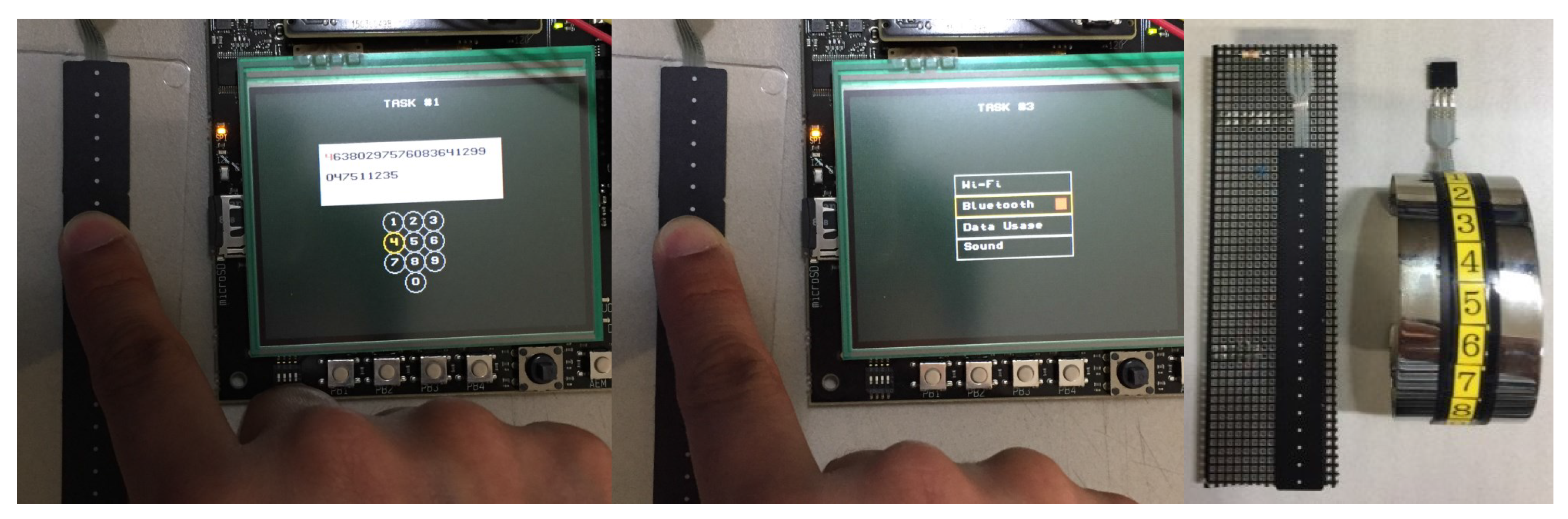

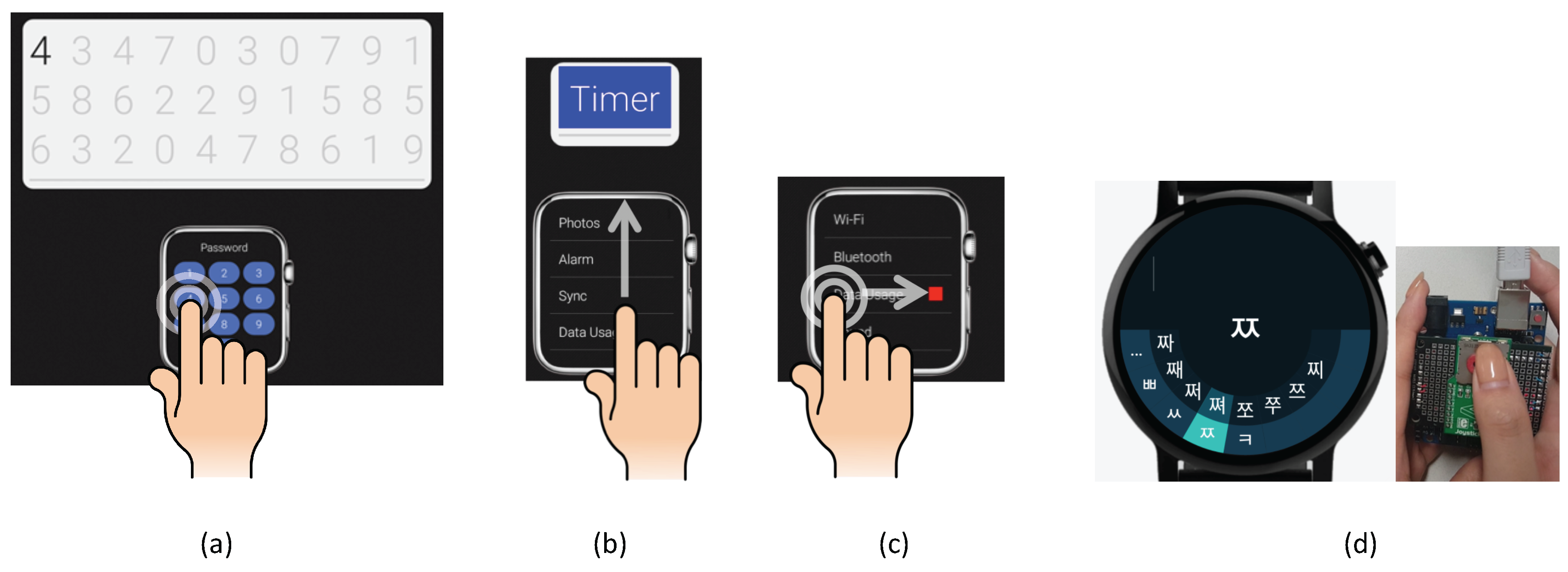

- For the Number Entry Task, we presented our participants with a tablet (baseline UI, joystick-embedded UI; See Figure 10a), an EFM32 Wonder Gecko (potentiometer-embedded UI), and a custom-built mockup (contactless IR-based UI) that provided a simulated proxy of a number keypad for smartwatches. For the number keypad, we adopted the exact size and layout of either a smartwatch or a smartphone, taken from the best-selling models since their releases (i.e., iPhone 6, Apple Watch 38 mm). At the top of the screen, the numbers from 0 to 9 were displayed one at a time. Each number was displayed three times in a random sequence, for a total of 30 numbers. Participants were asked to use their index fingertip to enter the number they saw on the number keypad shown on the tablet. For subjects who completed all tasks, we collected the number of errors and the area of contact (cm²) between the fingertip and touch panel.

- For the Scrolling Task, participants saw the simulated proxies similar to those described above, but these displayed a list that consisted of 48 items taken from the menu, function and app list of a mobile device (e.g., Figure 10b). For each trial, the order of the items in the list was randomized. Target items (items participants were asked to select) appeared on the 12th, 20th, 28th, 36th and 44th lines. At the top of the screen, a target item (e.g., “Timer” in Figure 10b) was displayed and participants were asked to search for and then tap the same item from the provided list by scrolling through the list with the tip of their index finger (See Figure 10b). If a participant succeeded in tapping the correct target from the list, a new target showed up at the top. The target item appeared three times at each of the 12th, 20th, 28th, 36th and 44th rows, while the order of the target item location was randomized. The total number of scrolls, number of wrong target selections and task completion time (time between touch interaction with target items shown on the top and from within the lists) were collected as performance measures.

- For the Swiping Task, our participants used the same proxies used for the scrolling tasks, but the given task here was to swipe rows (from left to right) indicated by the red box (See Figure 10c). Our test applications registered that swiping was successfully conducted when participants maintained contact with the touch screen for longer than half the length of the row. After a trial of swiping, the red box moved to another row in random sequence, with the red box appearing three times per row. The number of swiping errors, number of target selection errors and task completion time were collected as performance measures.

- The Text Entry Task was designed for the joystick-based UI prototype and given only to 15 Korean participants as an additional task after all task trials for number entry, scrolling and/or swiping were completed. At the top of a tablet screen, our test application displayed one of four Korean proverbs at a time. Our participants were then asked to manipulate the joystick input device to move around the circular menus for vowels or consonants in order to compose one Korean character and then confirm the composed character, as shown in Figure 10d. The total number of error clicks and execution time (ms) were collected as performance measures.
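The trial structure described for the Number Entry, Scrolling and Swiping Tasks can be sketched in a few lines. This is only an illustrative sketch, not the authors' test application: the function names, the pixel-based coordinates and the use of Python's `random` module are all assumptions made here for illustration.

```python
import random


def number_entry_sequence(repeats=3, rng=None):
    """Digits 0-9, each shown `repeats` times, in random order (30 by default)."""
    if rng is None:
        rng = random.Random()
    sequence = [digit for digit in range(10) for _ in range(repeats)]
    rng.shuffle(sequence)
    return sequence


def scroll_target_rows(rows=(12, 20, 28, 36, 44), repeats=3, rng=None):
    """Target row positions, each used `repeats` times, in random order."""
    if rng is None:
        rng = random.Random()
    schedule = [row for row in rows for _ in range(repeats)]
    rng.shuffle(schedule)
    return schedule


def is_successful_swipe(start_x, end_x, row_width):
    """A left-to-right swipe counts once contact covers more than half the row.

    Coordinates are hypothetical pixel positions along the row.
    """
    return (end_x - start_x) > row_width / 2


if __name__ == "__main__":
    print(number_entry_sequence(rng=random.Random(0)))
    print(scroll_target_rows(rng=random.Random(0)))
    print(is_successful_swipe(start_x=10, end_x=200, row_width=300))
```

Passing a seeded `random.Random` instance makes a trial order reproducible, which may be convenient when the same sequence has to be replayed across UI versions.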

4.4. UI/UX Assessment

- Usability—I think the interface is usable for performing the task.

- Performance—I think I performed the task well.

- Workload—I think it was easy to perform the task.

- Adaptability—I think I’m getting used to using the interface to perform the task.

- Eliminates the SDFF problem—I think the interface allows me to explore the entire touch screen.

- Willing to Switch—I think I might consider using the UI prototype to perform the task.

- Competitiveness—I think the interface is fairly competitive with traditional smartwatch interfaces.

4.5. Usability Principle Evaluation

5. Results

5.1. Iterative Design (Phase 1)

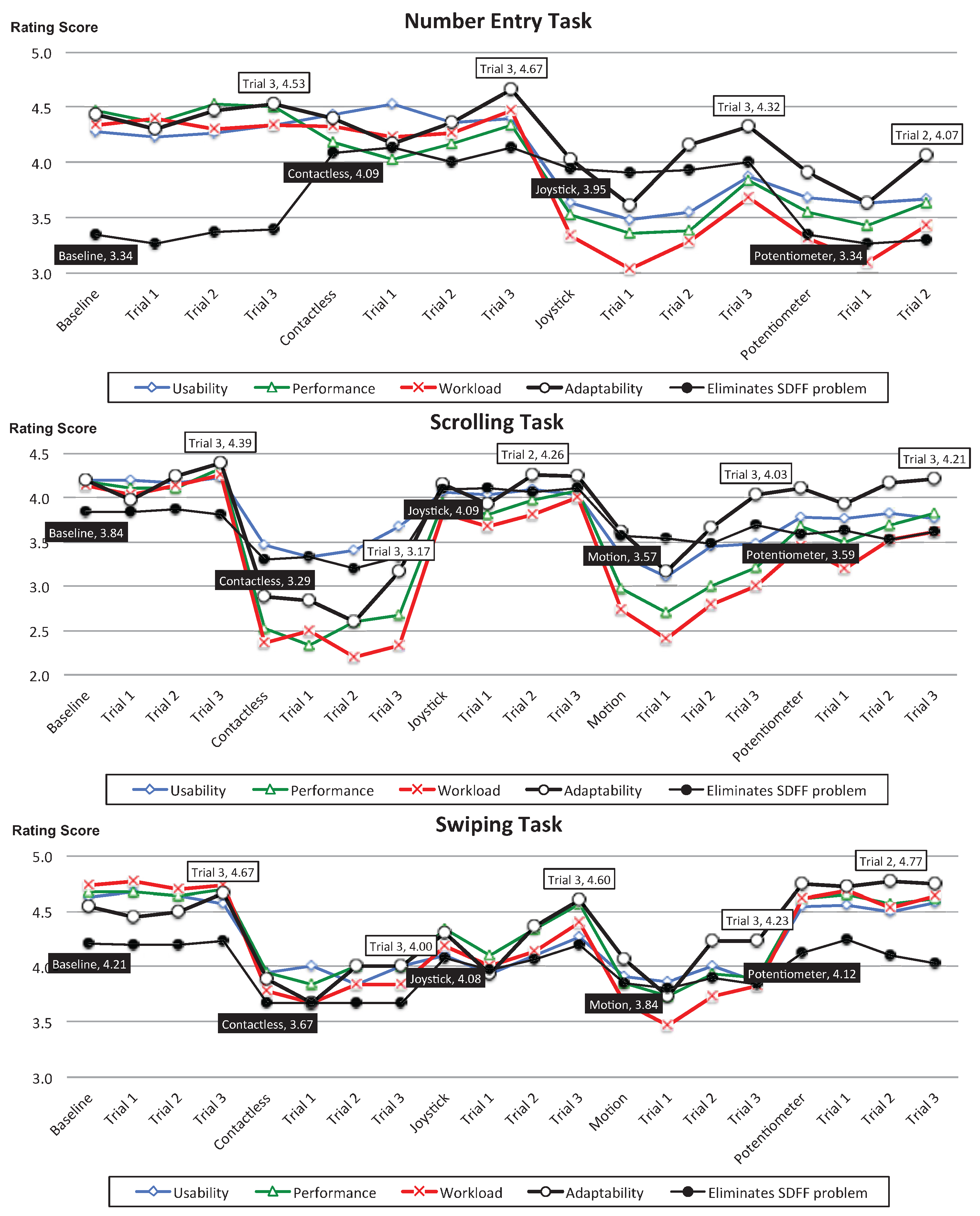

- Finding and Discussion 1: The Adaptability results show that our participants thought they were getting used to using the interface to perform the task over repeated trials (F(2, 1127) = 24.9, p < 0.001, ηp² = 0.042). We saw this across all UI types, including the conventional touch-based screen UI, as well as across all three given tasks. However, we saw the most salient increase in the Number Entry Task using Contactless, Joystick and Potentiometer UIs; the Scrolling Task using the Contactless and Motion UIs; and the Swiping Task using the Joystick and Motion UIs.

- Finding and Discussion 2: The results in the Eliminates SDFF Problem category show that the Contactless UI was the best for the Number Entry Task; the Joystick UI was the best for the Scrolling Task; and the Baseline UI was the best for the Swiping Task. The issue of SDFF was the most problematic when using the Baseline UI, especially for performing the Number Entry Task (F(3, 117) = 4.10, p = 0.008, ηp² = 0.095 after the third and final trial; e.g., p = 0.028 vs. Contactless UI, p = 0.097 vs. Joystick UI in the post hoc test). In addition, the results of this UX element suggest the need for improvements to the Potentiometer UI for performing the Number Entry Task and to the Contactless UI for performing the Scrolling Task.

- Finding and Discussion 3: The results across all UX elements show that the touch-based screen is still indispensable for future wearable devices, since the existing touch-based approach can offer our end-users reliable usability and assure task performance without necessarily being fully replaced by any novel UI. Our results also suggest that integrating the proposed UIs with touch-based screens could significantly improve end-users’ experience of exploring various gestural tasks, especially by mitigating the SDFF issue with conventional touch-based screens.
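The effect sizes quoted next to the F statistics above (0.042 and 0.095) are numerically consistent with partial eta squared, which can be recovered from an F value and its degrees of freedom. The short sketch below shows that standard relationship; the function name is an assumption introduced here, and the source does not state how the values were actually computed.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F statistic: F*df1 / (F*df1 + df2)."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


if __name__ == "__main__":
    # F(2, 1127) = 24.9 (Adaptability over repeated trials)
    print(round(partial_eta_squared(24.9, 2, 1127), 3))  # 0.042
    # F(3, 117) = 4.10 (Eliminates SDFF Problem, Number Entry Task)
    print(round(partial_eta_squared(4.10, 3, 117), 3))   # 0.095
```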

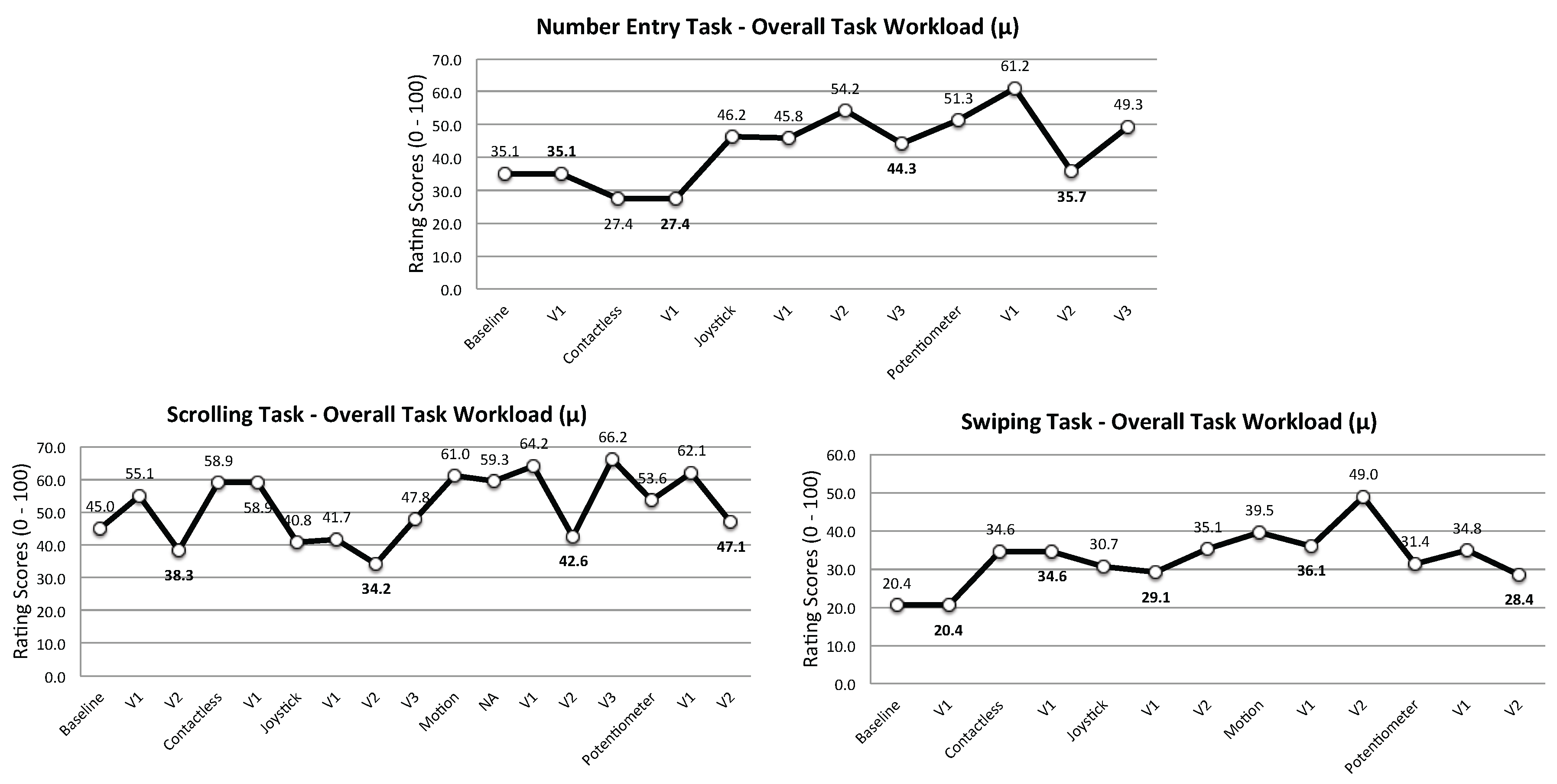

- Finding and Discussion. In performing the Number Entry Task, the Contactless UI was the best for reducing our participants’ overall task workloads. The Joystick UI was the best for the Scrolling Task, while the Baseline UI was the best for the Swiping Task. This confirms our findings from the UX assessment in the previous section. In general, the later versions of UIs significantly reduced our participants’ task workloads across all three tasks, as compared to their initial versions (p = 0.010 between the initial and the latest version; and also F(2, 368) = 4.48, p = 0.012 among all three UI versions across all tasks and all UI types). However, some results also showed that the initial or middle versions could be more effective at reducing task workload than the latest versions. For example, V2 of the Potentiometer UI and of the Joystick UI were better than their V3s for the Number Entry Task and for the Scrolling Task, respectively, and V1 of the Motion UI and of the Joystick UI were better than their V2s for the Swiping Task.

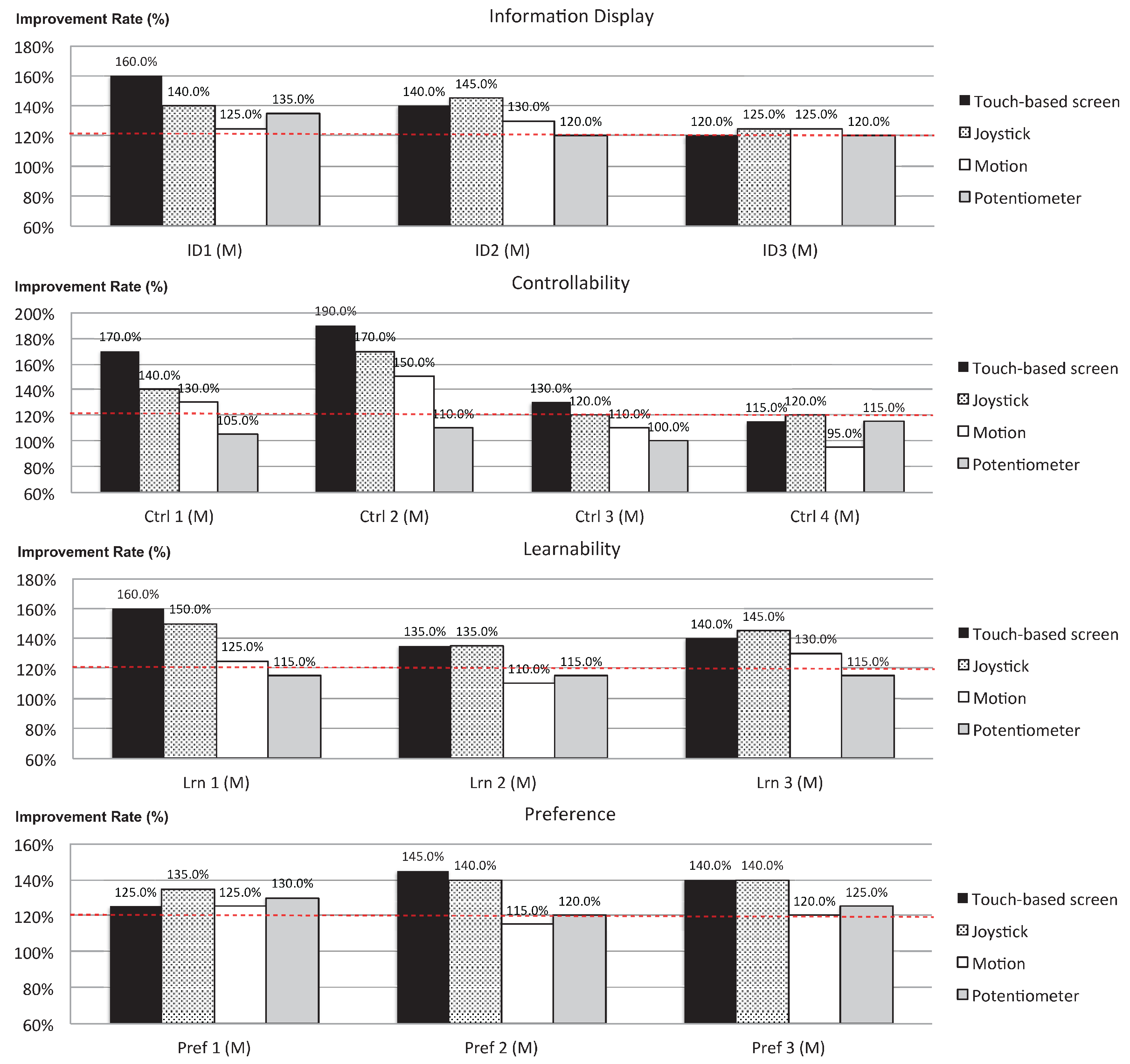

- Finding and Discussion 1. For almost all usability principles, the Contactless UI, the Joystick UI and the Potentiometer UI demonstrated their usability regardless of task type (see Boxes 1, 4 and 10, respectively). In particular, our participants rated these UIs as consistently usable on Information Display, which is associated with the SDFF issue in the UX assessment (e.g., ID1 scores, M = 4.48, SD = 0.68, p = 0.232 for Contactless UI; M = 4.20, SD = 0.85, p = 0.832 for Joystick UI; M = 4.03, SD = 1.03, p = 0.388 for Potentiometer UI) and Learnability, which is associated with Adaptability in the UX assessment (e.g., Lrn1 scores, M = 4.65, SD = 0.58, p = 0.149 for Contactless UI; M = 4.60, SD = 0.56, p = 0.209 for Joystick UI; M = 4.27, SD = 0.74, p = 0.631 for Potentiometer UI), across the multiple versions produced through our iterative design process.

- Finding and Discussion 2. Our participants found the Controllability of the later versions of the Contactless UI to be worse than that of the initial version (e.g., 2.22 point difference for Ctrl, F(1, 38) = 41.6, p < 0.001; 1.66 point difference for Pref, F(1, 38) = 17.3, p < 0.001), which decreased their preference for it (see Box 2 and Box 3). Specifically, they still liked the look and the feel of the Contactless UI and felt that completing the task with the UI was not intolerable (Pref and Ctrl). However, they did not find the UI to be sufficiently comfortable or satisfactory (Pref and Pref). In particular, they thought that the UI did not react to their inputs precisely and accurately (Ctrl), and they found it difficult to reach a target function and to undo or redo a task whenever they made an error (Ctrl and Ctrl, respectively).

- Finding and Discussion 3. Interestingly, we observed similar patterns in other proposed UIs, especially when we presented UIs whose versions for both Scrolling and Swiping tasks were upgraded at the same time (see Box 5, 6 to 9). In addition, we found that the results in Preference were highly correlated with those in Controllability (e.g., Motion UI’s NA_V2_V1, r = 0.85 at the 0.05 level of significance; Motion UI’s NA_V3_V2, r = 0.89 at the 0.01 level of significance, see Box 2 & 3 and 7 & 8), which implies that user preference for wearable devices is influenced by Controllability, perhaps more so than other aspects like Information Display or Learnability.
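The correlation reported between Preference and Controllability is a Pearson coefficient r. As a minimal sketch of how such a coefficient is obtained from paired score differences, the snippet below implements the textbook formula. The sample values are purely hypothetical placeholders and are not data from the study.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient for two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return covariance / (spread_x * spread_y)


if __name__ == "__main__":
    # Hypothetical version-to-version score differences (illustration only).
    controllability_diff = [0.5, 1.0, 1.5, 2.0, 2.5]
    preference_diff = [0.4, 1.1, 1.3, 2.2, 2.4]
    print(pearson_r(controllability_diff, preference_diff))
```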

5.2. Comparative Evaluation (Phase 2)

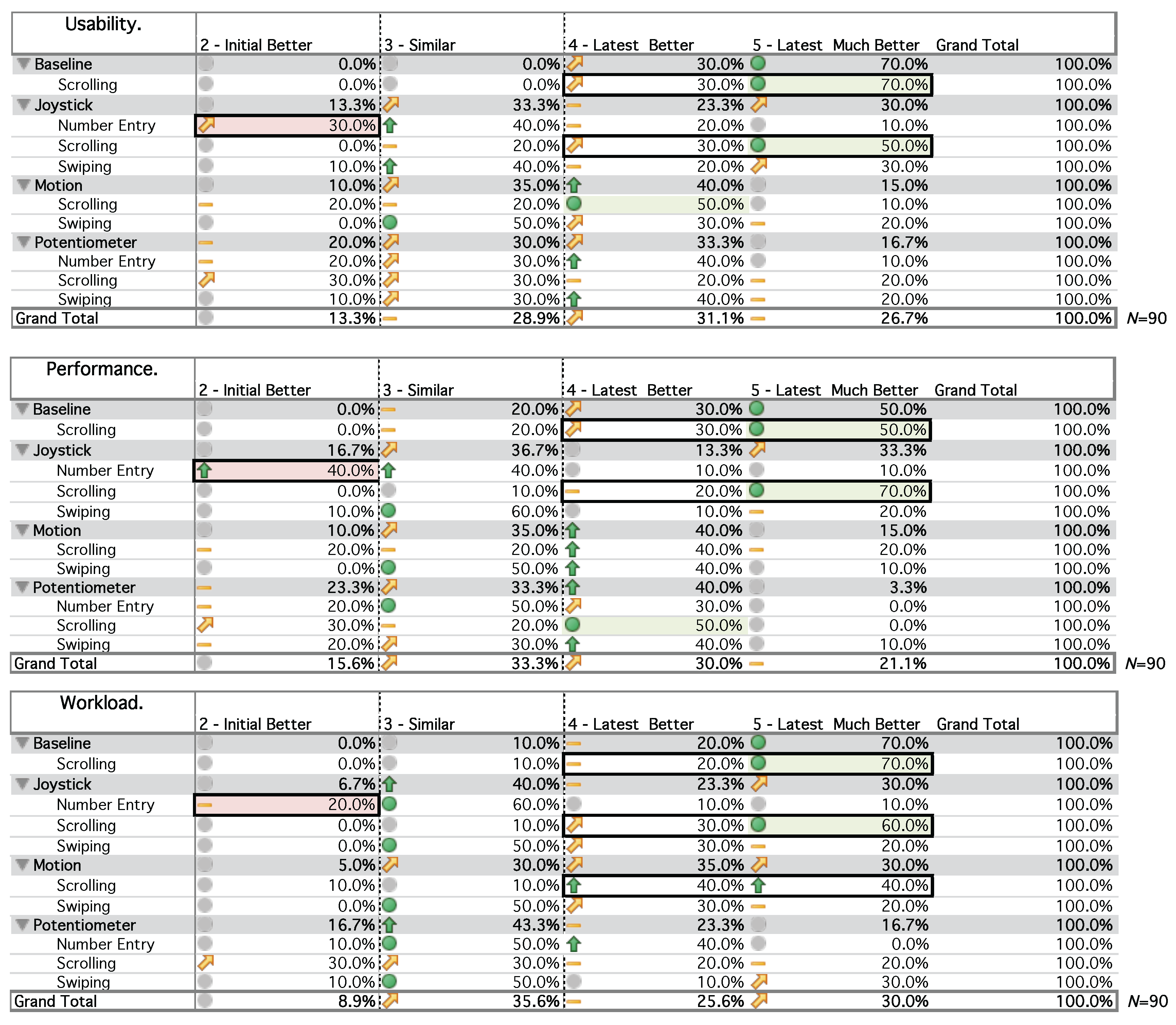

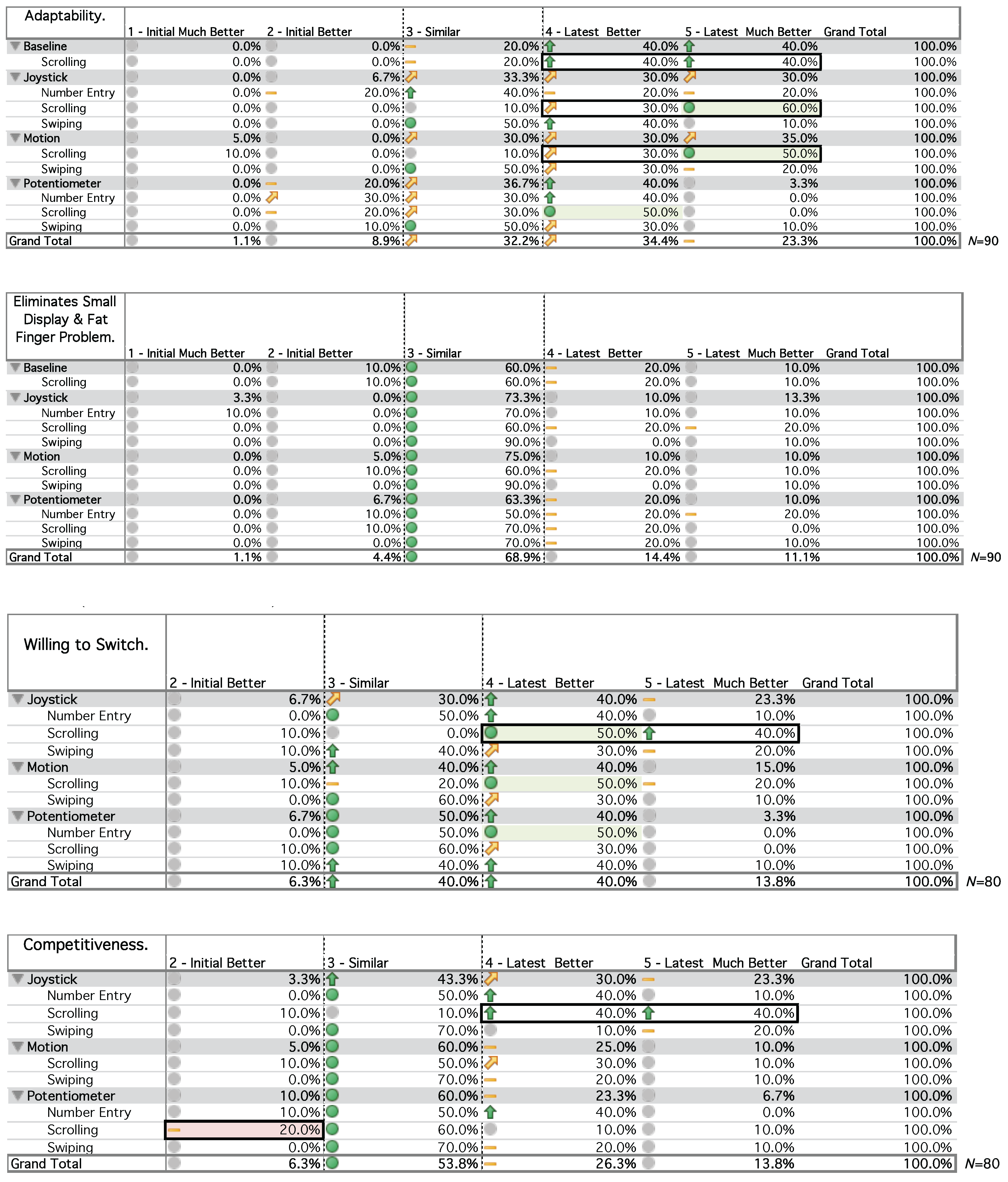

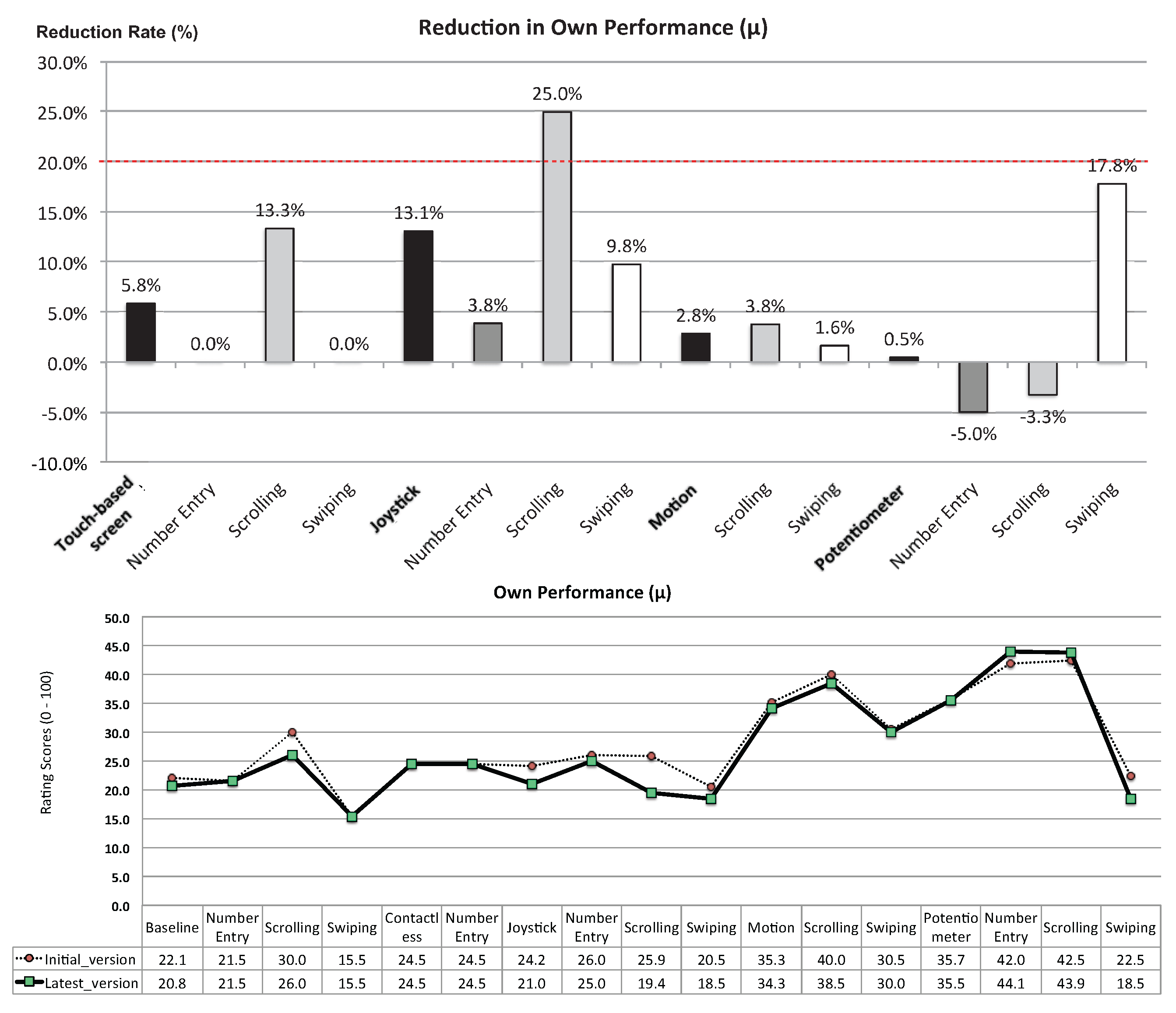

- Finding and Discussion 1. The overall rating results across all three tasks confirmed that our participants’ experience of using our UI prototypes significantly improved from the initial versions through our iterative design (a 19.7% improvement on average across all seven UX aspects; t(79) = 6.87, p < 0.001). The only exception was that our participants did not think they performed the Number Entry Task well with the latest versions of the Joystick UI (see the 1st chart of Figure 18). Their reaction time during the Number Entry Task increased with progressive versions of the Joystick UI, even as their task performance accuracy was consistently good across various versions (95.4% on average). One of the biggest changes between the initial and latest versions of the UI was its platform, i.e., from a simulated smartwatch on a tablet screen to a real smartwatch (Sony SmartWatch 3). We hypothesized that the changes in form factor could explain the UX reduction for the latest versions of the Joystick UI. However, we did not see a similar UX reduction for the Scrolling and Swiping Tasks. In fact, our participants’ ratings of the latest Joystick UI were significantly higher than the 120% reference level across all UX aspects we examined (see the blue lines with square markers in the 2nd and 3rd charts in Figure 18; a 22.7% improvement on average across all seven UX aspects; t(29) = 4.96, p < 0.001). Our post hoc analysis of this reversal effect suggests, as a hypothesis, that the distance and the orientation between the screen and the Joystick UI module could be an important UI/UX factor, especially during key entry tasks, which may require more continuous cognitive engagement and attention than scrolling/swiping tasks.

- Finding and Discussion 2. The rating results also confirmed that our iterative design process raised our participants’ ratings to 120% or more of their initial levels across most of our UIs and on most UX aspects. However, when using the latest versions of the Motion UI and the Potentiometer UI, our participants’ rating scores did not reach the 20% improvement reference, even though their experience improved compared to their initial experience. For example, we found that the Motion UI for the Swiping Task might need some improvement to better help end-users explore the entire touch screen. In addition, we found that our participants’ experience improved more slowly when performing either the Number Entry Task or the Scrolling Task with a Potentiometer UI installed in a wrist-worn mockup (i.e., the latest version), rather than on a flat board surface (i.e., the initial version). Figure 19 and Figure 20 show the proportions of participant rating results for each scale category, represented per UI (cells filled in grey) and then per UI × Task pair. On the left of each cell, we include a graphical icon according to the range of its value: a green circle icon if value ≥ 50%, an upwards green arrow icon if 40% ≤ value < 50%, a yellow arrow icon if 30% ≤ value < 40%, a flat yellow icon if 20% ≤ value < 30% and a grey circle icon if value < 20%.

- Finding and Discussion 3. Our participants evaluated the latest versions of the proposed UIs as more usable or much more usable for performing the given tasks than the initial UI versions, e.g., the Joystick UI (53.3% voted for the latest vs. 13.3% for the initial; t(29) = 3.63, p = 0.001), the Motion UI (55.0% vs. 10.0%; t(19) = 3.04, p = 0.007) and the Potentiometer UI (50.0% vs. 20.0%; t(29) = 2.54, p = 0.017) (see the grey rows of Figure 19 and Figure 20). For example, more than 80% of participants voted for the latest versions of the Joystick UI and Baseline UI for the Scrolling Task (see the two boxes with thick borders in the right-hand side of Figure 19 and Figure 20). In particular, the latest version of the Joystick UI was assessed as significantly superior to its initial version on most aspects, including Performance (t(29) = 3.07, p = 0.005), Workload (t(29) = 4.32, p < 0.001), Adaptability (t(29) = 4.81, p < 0.001), Willing to Switch (t(29) = 4.94, p < 0.001), and Competitiveness (t(29) = 4.63, p < 0.001). For the Scrolling Task, the latest version of the Motion UI was also highly appreciated as it helped our participants more easily adapt to using the UI to perform the task (see the boxes with thickened borders in the right-hand side of Figure 19 and Figure 20). The Potentiometer UI, on the other hand, was evaluated as not much improved from its initial version for this particular task on Competitiveness.

- Finding and Discussion 4. The detailed results also suggest the hypothetical implication that the comparative usability of different versions of a UI prototype designed for smartwatches could be more dominantly evaluated in terms of Performance and Workload, prior to other UX aspects, such as Adaptability or Eliminating the SDFF Problem. For example, our participants thought that both the initial version and the latest version of the Joystick UI were similar in their usability for the Number Entry Task (t(9) = 0.32, p = 0.758). Similar patterns were also demonstrated in the Performance results, where only 20% of our participants voted for the latest version of the Joystick UI for the Number Entry Task (t(9) = −0.318, p = 0.758). Additionally, our participant responses showed that they felt similar degrees of workload when performing the Number Entry Task with the different versions of the Joystick UI (t(9) = 0.36, p = 0.726; 60% voted similar and 20% identical each for the initial version and for the latest version of the UI). This finding suggests a need for a more structured assessment of task workload and task performance measurement, as in the following sessions.
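The icon legend described for the cells of Figure 19 and Figure 20 (under Finding and Discussion 2) amounts to a simple threshold lookup. The following sketch encodes those thresholds; the function name and the returned label strings are assumptions chosen for illustration.

```python
def rating_icon(value_pct):
    """Map a cell's percentage of votes to the icon described in the legend."""
    if value_pct >= 50:
        return "green circle"
    if value_pct >= 40:
        return "upward green arrow"
    if value_pct >= 30:
        return "yellow arrow"
    if value_pct >= 20:
        return "flat yellow"
    return "grey circle"


if __name__ == "__main__":
    # Vote shares quoted in the text for the Joystick UI (latest vs. initial).
    print(rating_icon(53.3))  # green circle
    print(rating_icon(13.3))  # grey circle
```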

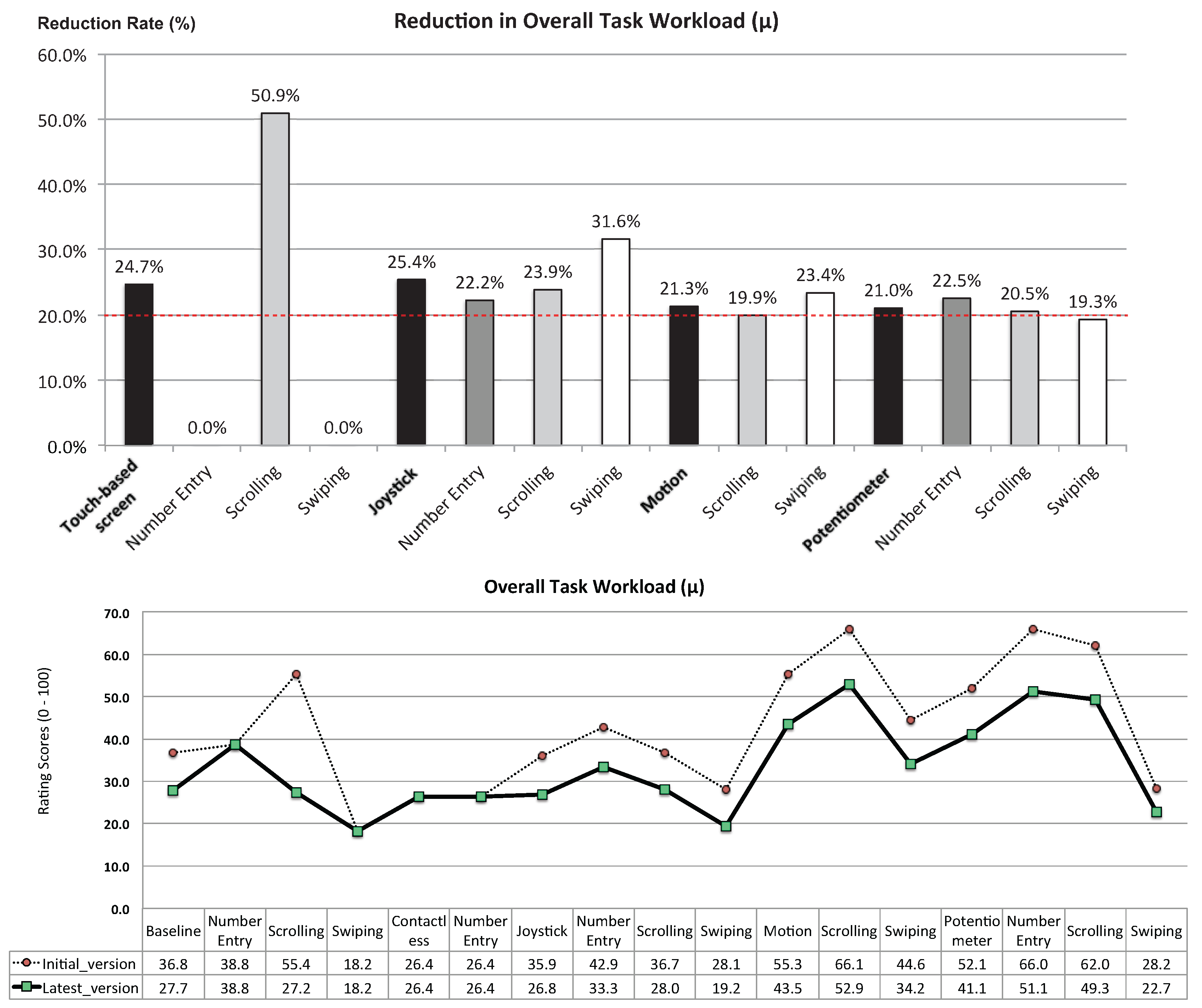

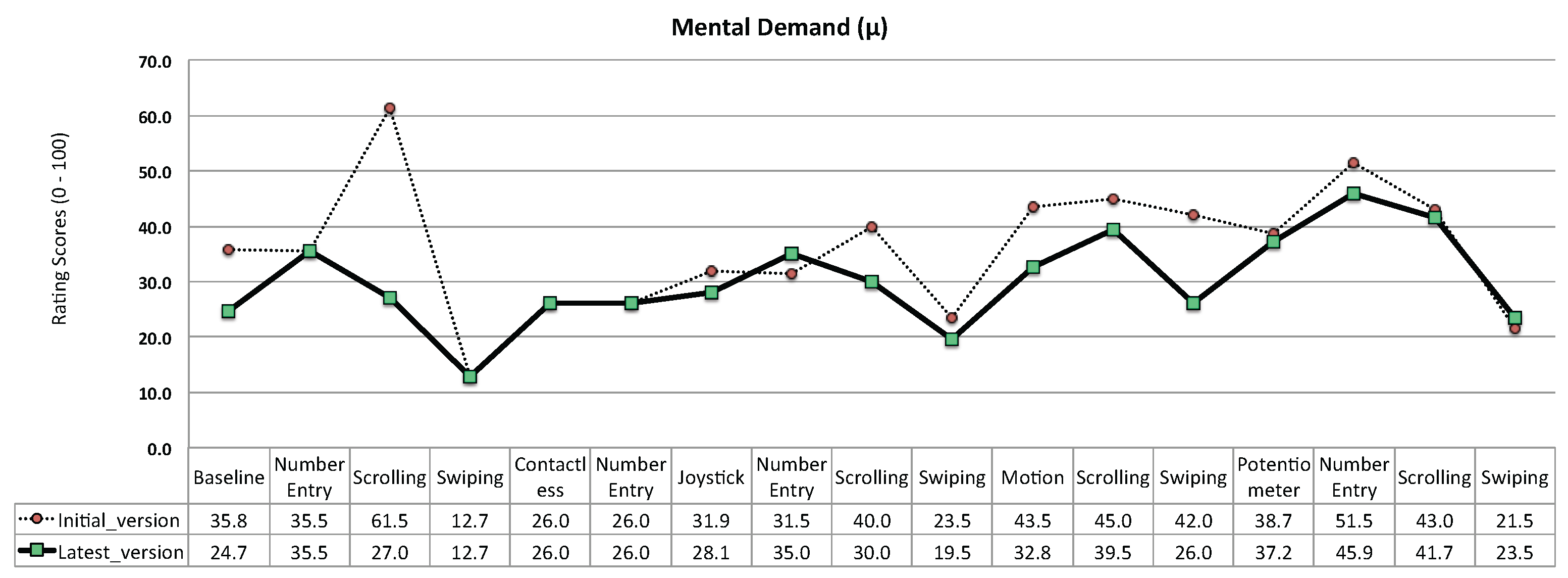

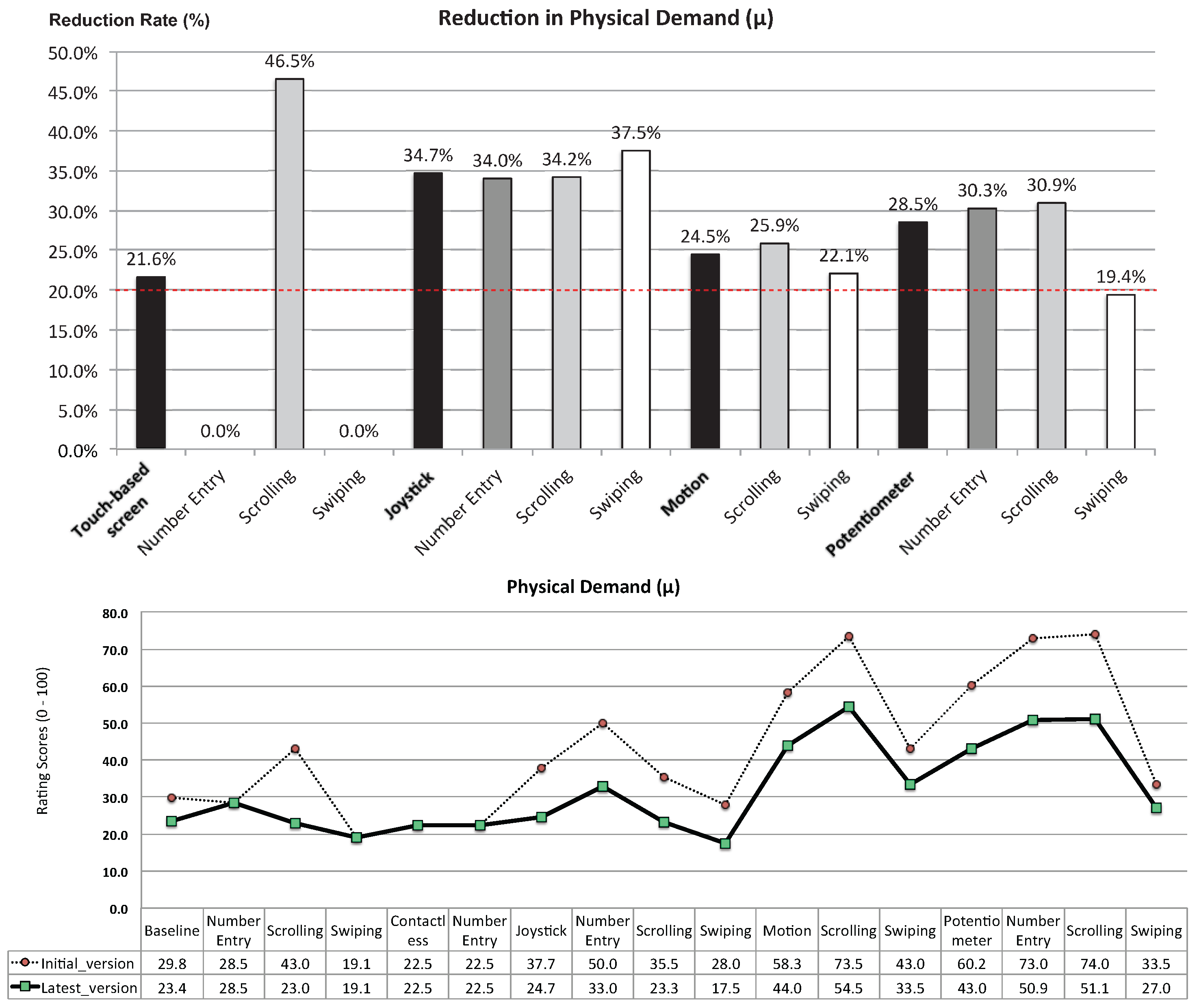

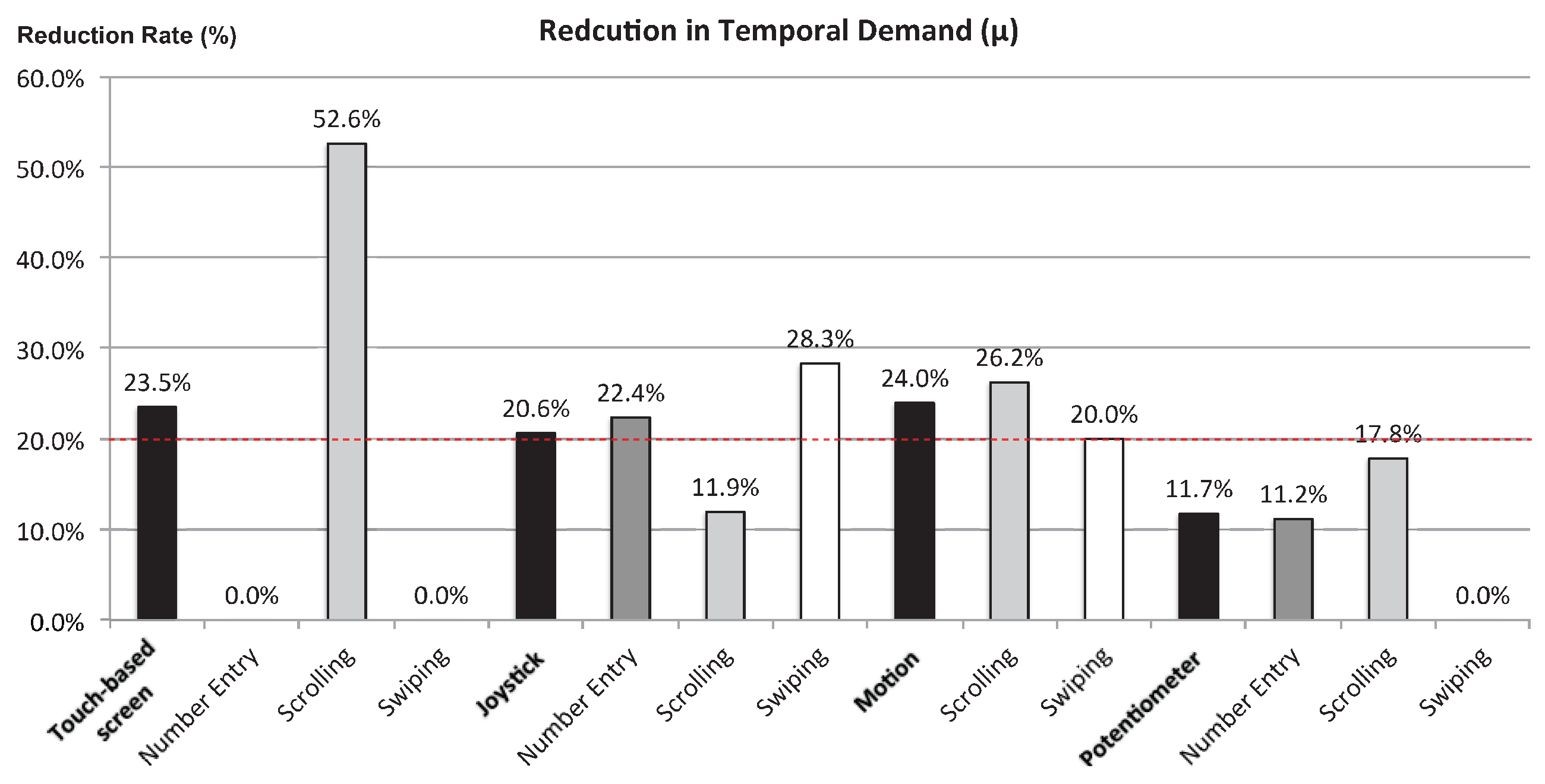

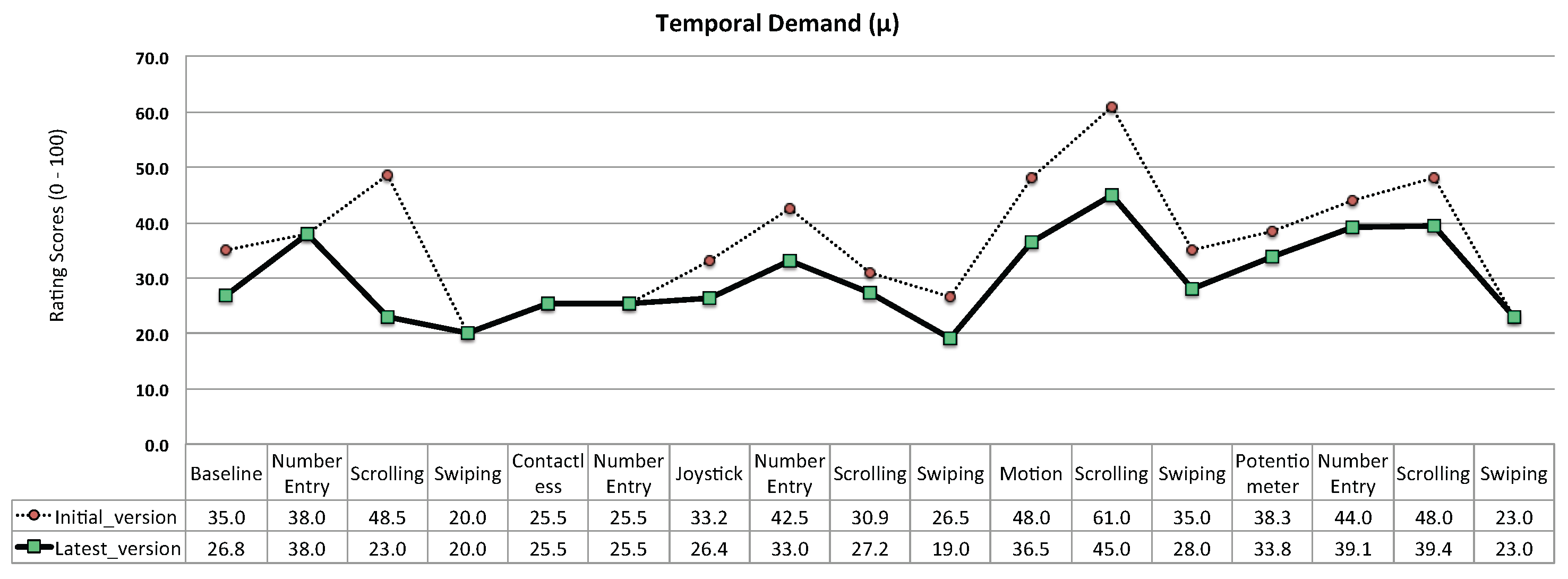

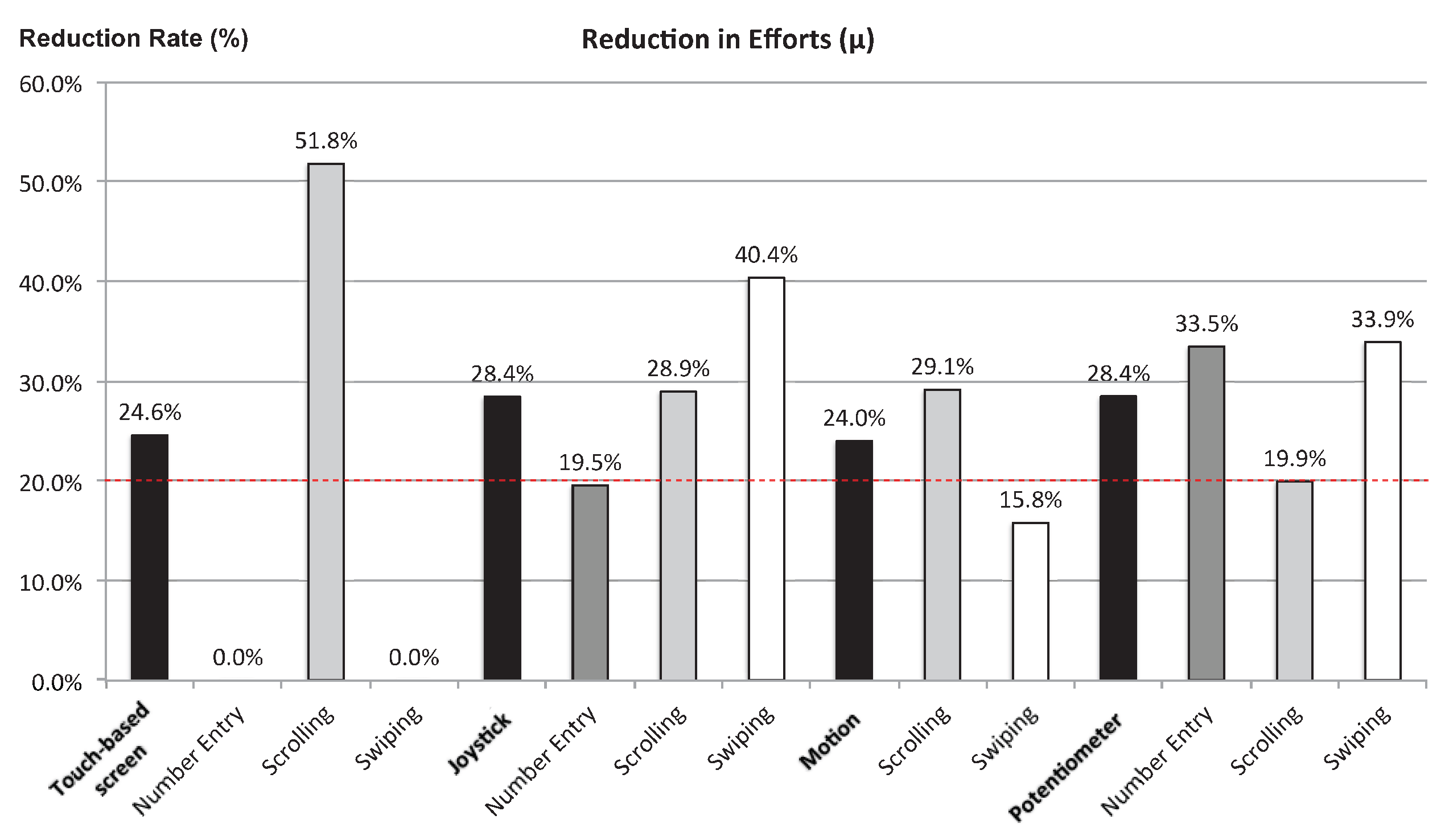

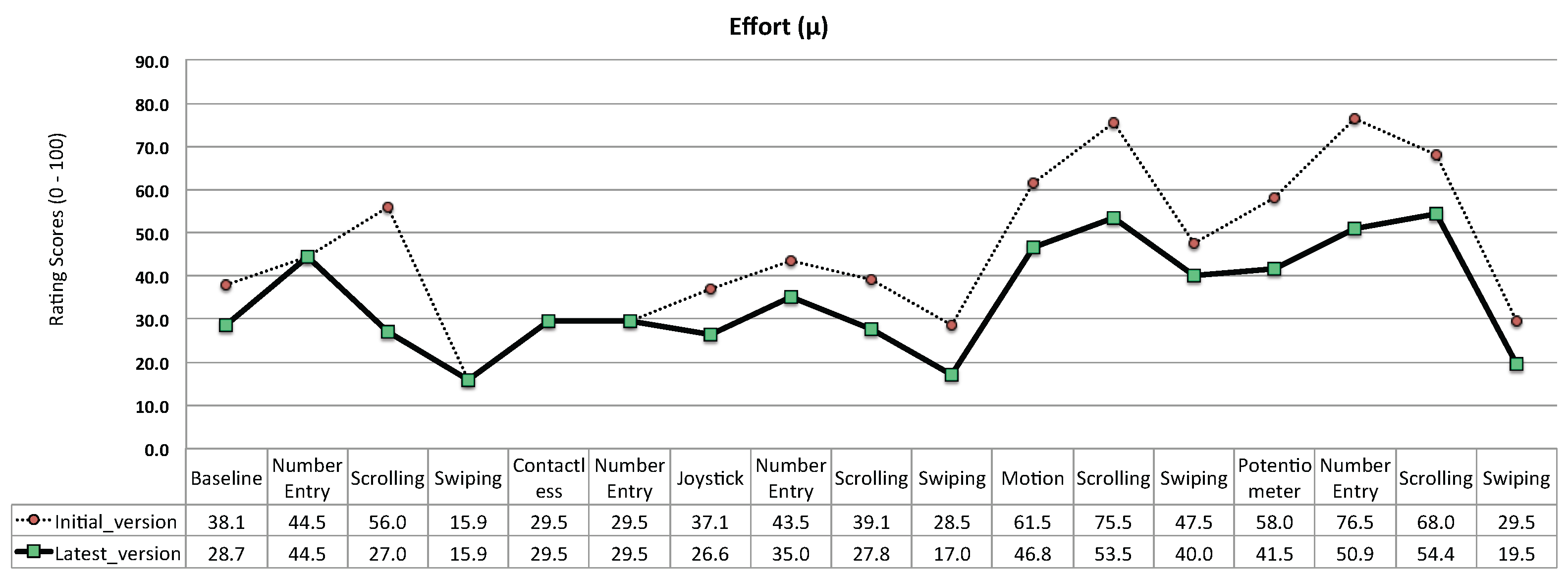

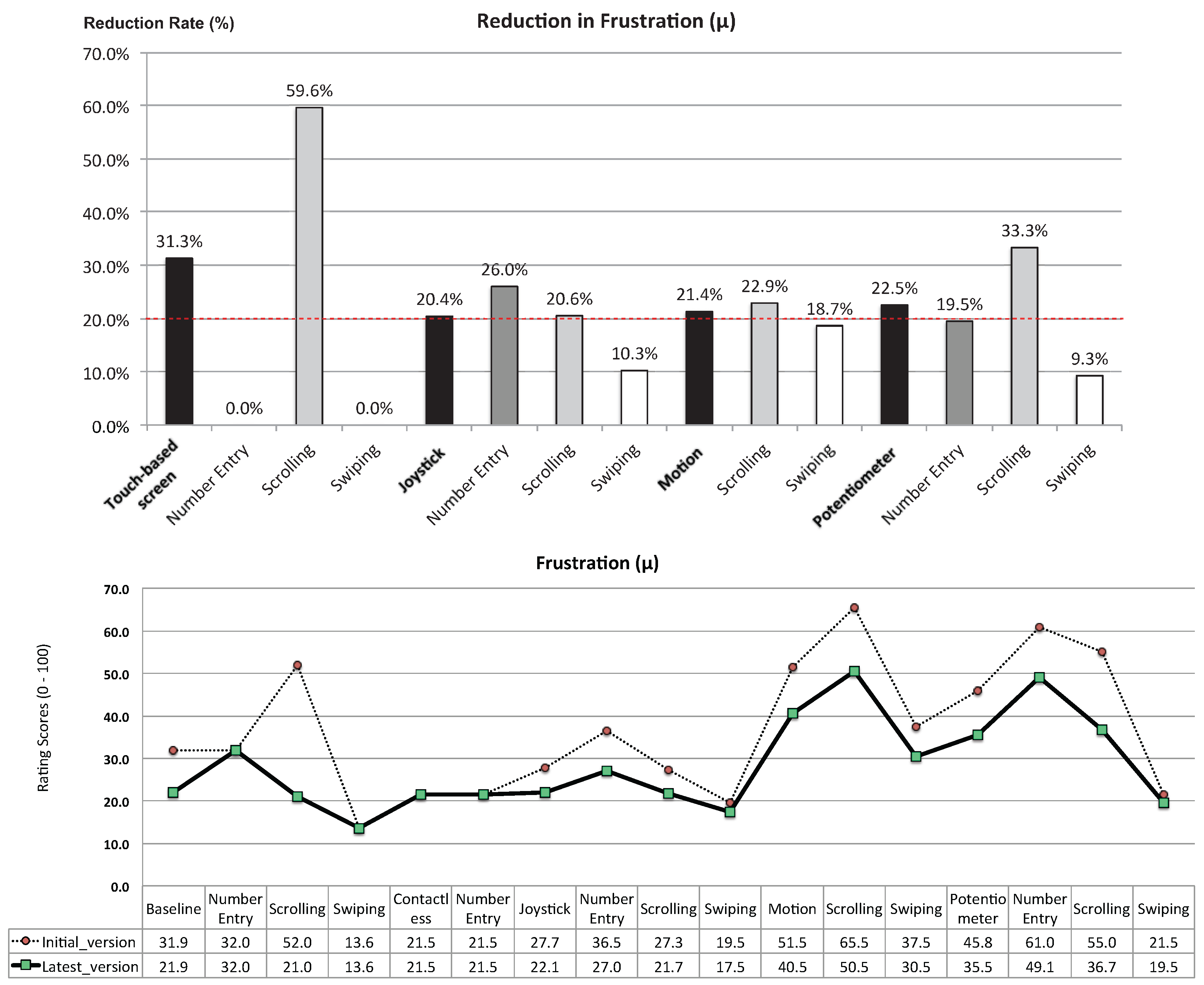

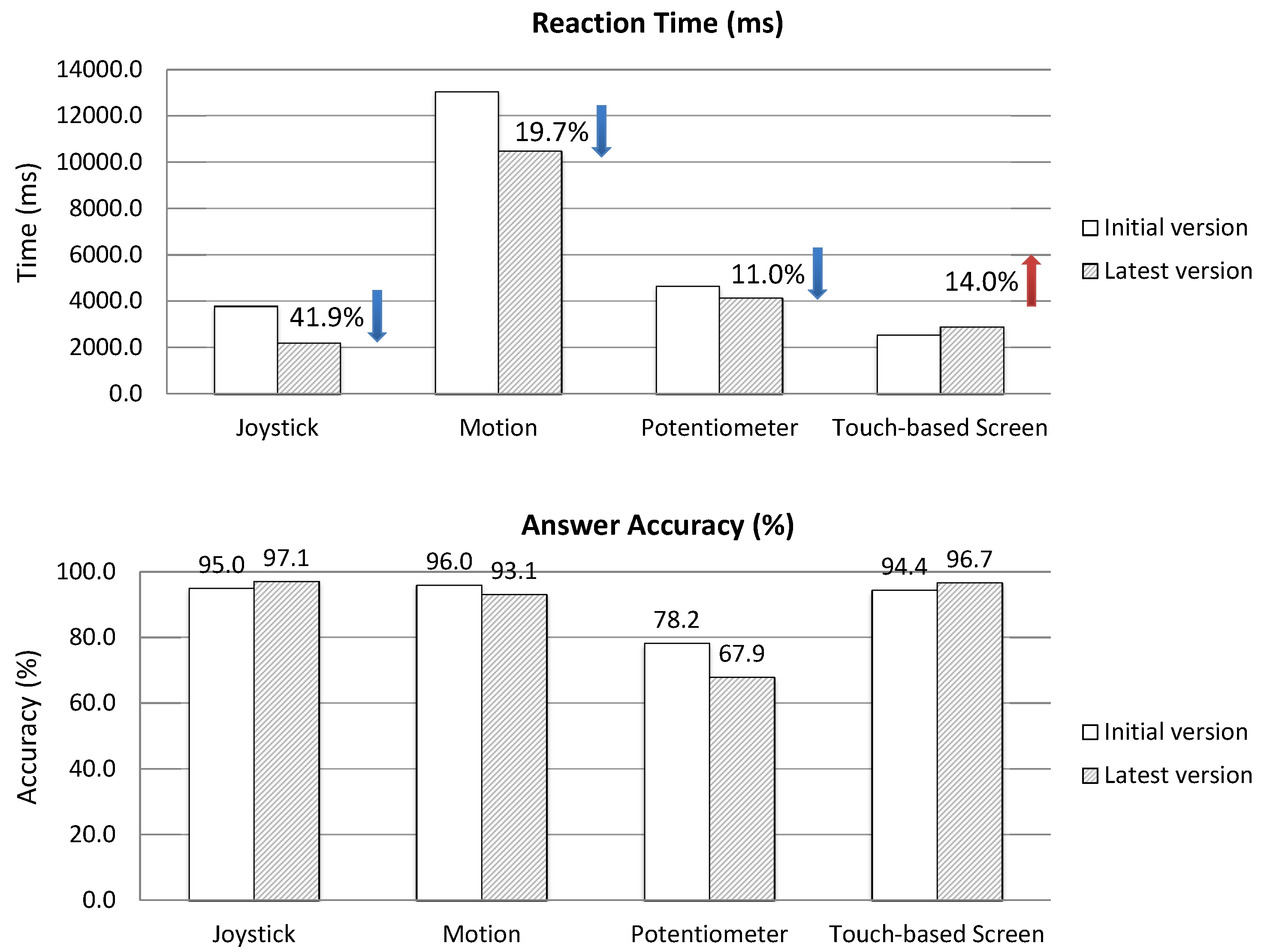

- Finding and Discussion 1. The results of the Task Workload Assessment showed that our participants’ overall task workload when using the latest versions of the provided UIs was significantly lower than when using their initial versions, by approximately 12.2 points on average (SD difference = 3.86) across all UI and task combinations, which corresponds to a 25.6% task workload reduction (t(178) = 3.17, p = 0.002).
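The relationship between the 12.2 point difference and the 25.6% reduction can be checked with simple arithmetic. The sketch below assumes that the percentage is expressed relative to the mean workload of the initial versions; under that assumption it back-calculates the implied mean scores, which are not reported in the text and should be read as derived estimates only.

```python
def percent_reduction(initial, latest):
    """Reduction of `latest` relative to `initial`, in percent."""
    return (initial - latest) / initial * 100.0


def implied_initial_mean(point_difference, reduction_pct):
    """Initial mean implied by an absolute difference and a percent reduction."""
    return point_difference / (reduction_pct / 100.0)


if __name__ == "__main__":
    initial = implied_initial_mean(12.2, 25.6)   # derived, not reported
    latest = initial - 12.2
    print(round(initial, 1))                               # 47.7
    print(round(latest, 1))                                # 35.5
    print(round(percent_reduction(initial, latest), 1))    # 25.6
```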

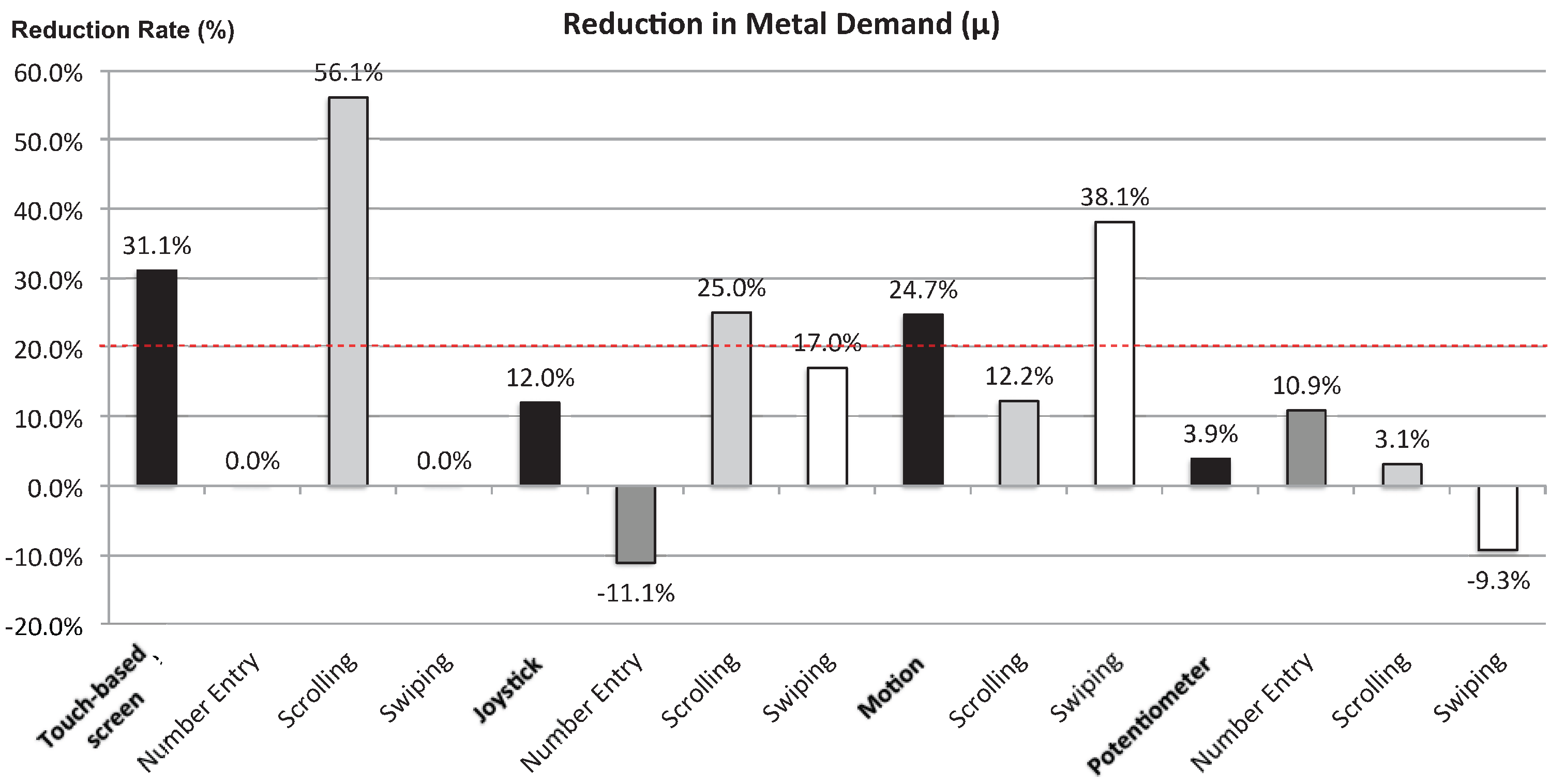

- Finding and Discussion 2. The overall results strongly suggest that users can best perform the Number Entry Task by using the latest version of the Contactless UI, the Scrolling Task by using the latest version of the Joystick UI and the Swiping Task by using a touch-based screen. These findings were clearly evident across most specific workload aspects (Mental Demand, Physical Demand, Temporal Demand, Effort and Frustration).

- Finding and Discussion 3. Interestingly, if a developer aims to help end-users evaluate their performance on the Number Entry Task as satisfactory, a touch-based screen is slightly more effective than the Contactless UI (i.e., Own Performance aspect). In addition, the Potentiometer UI and the Joystick UI were revealed to be the best alternatives to touch-based screens in the Swiping Task, if the goal is to reduce mental and perceptual workload, respectively.

- Finding and Discussion 1. In terms of quantitative evaluation, the latest versions of the UIs significantly reduced participants’ reaction time while maintaining their answer accuracy as shown in Figure 29 (e.g., Reaction Time and Answer Accuracy for the Joystick UI, t(58) = 2.17, p = 0.034 and t(58) = −0.848, p = 0.400, respectively). The improvement in reaction time corresponds to an approximately 41.9% reduction for the Joystick UI, a 19.7% reduction for the Motion UI, and an 11.0% reduction for the Potentiometer UI. However, there was also a reversal for the touch-based screen, with a 14.0% increase in the Baseline UI. Both qualitative and quantitative results in the usability principle evaluation confirm that our iterative design process significantly improved the usability of our proposed UIs, as compared to the initial versions across all three tasks given in our experiment (see the horizontal dashed lines in red in Figure 28).

- Finding and Discussion 2. The results also identified the aspects of usability principles that showed steady or marginal improvement (i.e., the Controllability of the Motion UI and the Potentiometer UI)—specifically, Ctrl & Ctrl of the Motion UI and Ctrl & Ctrl of the Potentiometer UI. Interestingly, in the case of the Potentiometer UI, the latest versions, which were incorporated into a wrist-worn mockup, resulted in steady improvement (M = 3.35, SD = 0.667, t(9) = 1.64, p = 0.135, across all aspects of usability principles). This confirms the results we observed in our previous UX assessment section. This also suggests the hypothetical implication that improvement in the usability of a UI can be slower if the UI increasingly requires users to control the UI with both hands.
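For readers who want to reproduce comparisons of the kind reported above for reaction time and answer accuracy, a pooled-variance two-sample t statistic is one common choice. This is an assumption: the source does not say which t-test variant was used, and the reported 58 degrees of freedom would merely be consistent with, for instance, two groups of 30 observations. The function name and the sample values are hypothetical.

```python
import math


def pooled_t(sample_a, sample_b):
    """Student's two-sample t statistic with pooled variance, plus its df."""
    n_a, n_b = len(sample_a), len(sample_b)
    mean_a = sum(sample_a) / n_a
    mean_b = sum(sample_b) / n_b
    var_a = sum((x - mean_a) ** 2 for x in sample_a) / (n_a - 1)
    var_b = sum((x - mean_b) ** 2 for x in sample_b) / (n_b - 1)
    df = n_a + n_b - 2
    pooled_var = ((n_a - 1) * var_a + (n_b - 1) * var_b) / df
    t_value = (mean_a - mean_b) / math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return t_value, df


if __name__ == "__main__":
    # Hypothetical reaction times in seconds (illustration only).
    initial_version = [2.4, 2.9, 3.1, 2.7, 3.3]
    latest_version = [1.9, 2.2, 2.0, 2.5, 2.1]
    print(pooled_t(initial_version, latest_version))
```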

5.3. Design Implications and Recommendations

- The Joystick UI module, which provides appealing tactile feedback, can be integrated for tasks requiring a naturalistic exploration of an information space larger than the screen area, such as menu scrolling or map navigation. Overall, participants appreciated the intuitiveness and simplicity of the Joystick UI, which enable an easily accessible eyes-free interaction by presenting explicit tactile feedback. Two potential applications of the Joystick UI, playing games and providing enhanced accessibility for handicapped users, were elicited by multiple participants. Subject 19 deemed this availability of tactile feedback useful for people with a medical disability who may have difficulties performing tasks that require high hand-eye coordination. Moreover, 12 subjects found the naturalistic exploration with the Joystick UI (i.e., four directional movements) potentially useful for playing games. However, participants expressed conflicting preferences for the operating speed and controllability of the Joystick UI. For example, Subject 20 suggested, “... make the scrolling speed just a little bit faster. It just needs to not stay on one item for as long as it does”. In contrast, Subject 12 complimented, “... it’s easy to control how fast and how slow you want to go”. Therefore, an option for personalizing operating speed and controllability is a possible remedy for this issue.

- The Potentiometer UI module, which can be installed on a smartwatch’s strap or bezel, is recommended for tasks that end-users want to perform less conspicuously and as the best alternative extension to touch-based interaction. Five participants valued the Potentiometer UI for providing discreet watch interaction, which is a unique feature not mentioned for other UIs. For example, Subject 4 liked the privacy secured by the wristband, “... you could just [interact with the smartwatch] casually and people would not even know what you’re doing”. Other participants found that the Potentiometer UI can help maximize use of the touchscreen. Subject 1 valued the Potentiometer UI for mitigating the SDFF problem by using extra space on the wrist strap. Subject 5 described this advantage as “just an extension of using your screen” and envisioned a use scenario for the Potentiometer UI, “It will be useful when you needed software that needed more space on the screen”. However, using different pressure levels in the Potentiometer UI was viewed as problematic and demanding by many participants. Subject 7 expressed frustration, “The different pressure was kind of hard to remember [for the numbers]. I found it confusing”. Subject 4 also elaborated on this shortcoming, “... I did not like how you had to put a certain amount of pressure on it because that’s too much effort”. Therefore, the Potentiometer UI can be made more usable by reducing pressure levels to a single level, complementing it with a double-tap and providing visual feedback on the pressure being exerted.

- The Motion-gesture UI module, which allows wrist gestural input, will be effective for simple tasks requiring less precision or attention. Examples of such tasks include performing a quick confirmation task while jogging, typing on a keyboard, or using a mouse, where end-users do not necessarily want to move their fingers to touch the screen or where other proxies force them to disengage from their primary task. Eighteen participants found the Motion-gesture UI very suitable for hands-free/multitasking/active uses (e.g., running, driving). Subject 2 stated that “... I think that would just be easy when you only have one hand available”. Subject 20 also found the Motion-gesture UI handy, “Maybe your one hand is full so you need to just shake [the watch] to move ...” Other participants emphasized use case scenarios for handicapped/disabled users where more sensitive and haptic feedback could make the Motion-gesture UI easier for people with limited mobility. Subject 19 noted, “... this could actually be useful for blind people, because [the motion] provides non-visual feedback through the vibrations”. Subject 35 also found the Motion-gesture UI more feasible for disabled users who can benefit from using alternative and sensitive modalities. However, participants reported that the flicking motion is physically demanding and socially awkward. Subject 12 complained, “I think just having to scroll through so many times was why my arms were getting so tired”. Subject 4 summarized his/her frustration, “... it seems exhausting”. Therefore, the Motion-gesture UI would be more usable and effective when less-demanding micro-gestures are registered and recognized responsively.

- The Contactless UI module, which offers a reconfigurable smartphone-like UI based on the projection of a virtual keyboard layout with an IR light and an embedded sensor, has great potential for tasks requiring end-users’ continuous engagement in cognition and attention, such as number or text entry tasks. Overall, many participants appreciated that the Contactless UI provides an enlarged interaction space while providing a UI/UX that is intuitive and similar to that of the touchscreen baseline. Ten participants in our case study were impressed that the Contactless UI offers a familiar and continuous smartphone experience on a smartwatch. For example, Subject 6 commented, “It does seem pretty easy, since it’s basically just like a [touch screen] just not visible”. Participants noted that the Contactless UI has the advantage of providing a larger user interaction space that can allow for higher accuracy. For example, Subject 16 expressed, “... so if there’s something that’s kind of hard to see on the screen, then you could project it and make it easier for people who can’t see something that small”. On the other hand, multiple participants expressed concerns about integrating the projection module and providing feedback on user interaction. Subject 18 commented on the current prototype, “I guess get the projections working—it’s hard to say since that’s not working”. Subject 33 saw a need for feedback, “I think feedback is necessary because I know there were a lot of errors for mine, but I wasn’t sure where the errors were”. Therefore, the Contactless UI still has room for improvement in integrating both visual and haptic feedback.

- There are other types of wearable UI/UX that we did not cover in this paper but that are worth considering for future work. For example, a wearable device may employ a minimalist interface or may not provide any physical apparatus for user interaction at all. In this scenario, the device attempts to automatically infer the intentions and predict the next possible actions of users based on previous behavior patterns. To include such intelligence or context-awareness, we need to quantify and learn from personal big data generated via user interaction. In this respect, an interesting direction is to study how voice-based virtual assistants (e.g., Cortana, Siri, Google Assistant, Alexa and Bixby) can be integrated and made to cooperate to overcome the limitations of current wearable UIs for a better user experience.

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| UI | User Interface |
| UX | User Experience |
| SDFF | Small Display and Fat Finger |
| SPP | Serial Port Profile |
| API | Application Programming Interface |
| IR | Infrared Radiation |
| ESM | Experience Sampling Method |
| ANOVA | Analysis of Variance |
| HSD | Honest Significant Difference |


- Xia, H.; Grossman, T.; Fitzmaurice, G. NanoStylus: Enhancing Input on Ultra-Small Displays with a Finger-Mounted Stylus. In Proceedings of the 28th Annual ACM Symposium on User Interface Software & Technology, Charlotte, NC, USA, 11–15 November 2015; pp. 447–456. [Google Scholar]

- Funk, M.; Sahami, A.; Henze, N.; Schmidt, A. Using a Touch-sensitive Wristband for Text Entry on Smart Watches. In Proceedings of the Extended Abstracts of the 32nd Annual ACM Conference on Human Factors in Computing Systems, Toronto, ON, Canada, 26 April–1 May 2014; pp. 2305–2310. [Google Scholar]

- Ahn, Y.; Hwang, S.; Yoon, H.; Gim, J.; Ryu, J.H. BandSense: Pressure-sensitive Multi-touch Interaction on a Wristband. In Proceedings of the 33rd Annual ACM Conference Extended Abstracts on Human Factors in Computing Systems, Seoul, Korea, 18–23 April 2015; pp. 251–254. [Google Scholar]

- Yoon, H.; Lee, J.E.; Park, S.H.; Lee, K.T. Position and Force Sensitive N-ary User Interface Framework for Wrist-worn Wearables. In Proceedings of the Joint 8th International Conference on Soft Computing and Intelligent Systems and 17th International Symposium on Advanced Intelligent Systems, Sapporo, Japan, 25–28 August 2016; pp. 671–675. [Google Scholar]

- Perrault, S.T.; Lecolinet, E.; Eagan, J.; Guiard, Y. WatchIt: Simple Gestures and Eyes-free Interaction for Wristwatches and Bracelets. In Proceedings of the 31st Annual ACM Conference on Human Factors in Computing Systems, Paris, France, 27 April–2 May 2013; pp. 1451–1460. [Google Scholar]

- Yu, S.B.; Jung, D.H.; Park, Y.S.; Kim, H.S. Design and Implementation of Force Sensor based Password Generation. In Proceedings of the 2016 Asia Workshop on Information and Communication Engineering, Cheonan-si, Korea, 25 August 2016; pp. 61–64. [Google Scholar]

- Grossman, T.; Chen, X.A.; Fitzmaurice, G. Typing on Glasses: Adapting Text Entry to Smart Eyewear. In Proceedings of the 17th International Conference on Human-Computer Interaction with Mobile Devices and Services, Copenhagen, Denmark, 24–27 August 2015; pp. 144–152. [Google Scholar]

- Kubo, Y.; Shizuki, B.; Tanaka, J. B2B-Swipe: Swipe Gesture for Rectangular Smartwatches from a Bezel to a Bezel. In Proceedings of the 34th Annual ACM Conference on Human Factors in Computing Systems, Santa Clara, CA, USA, 7–12 May 2016; pp. 3852–3856. [Google Scholar]

- Oakley, I.; Lee, D. Interaction on the Edge: Offset Sensing for Small Devices. In Proceedings of the 32nd Annual ACM Conference on Human Factors in Computing Systems, Toronto, ON, Canada, 26 April–1 May 2014; pp. 169–178. [Google Scholar]

- Darbar, R.; Sen, P.K.; Samanta, D. PressTact: Side Pressure-Based Input for Smartwatch Interaction. In Proceedings of the 34th Annual ACM Conference Extended Abstracts on Human Factors in Computing Systems, Santa Clara, CA, USA, 7–12 May 2016; pp. 2431–2438. [Google Scholar]

- Arefin Shimon, S.S.; Lutton, C.; Xu, Z.; Morrison-Smith, S.; Boucher, C.; Ruiz, J. Exploring Non-touchscreen Gestures for Smartwatches. In Proceedings of the 34th Annual ACM Conference on Human Factors in Computing Systems, Santa Clara, CA, USA, 7–12 May 2016; pp. 3822–3833. [Google Scholar]

- Kerber, F.; Löchtefeld, M.; Krüger, A.; McIntosh, J.; McNeill, C.; Fraser, M. Understanding Same-Side Interactions with Wrist-Worn Devices. In Proceedings of the 9th Nordic Conference on Human-Computer Interaction, Gothenburg, Sweden, 23–27 October 2016; pp. 1–10. [Google Scholar]

- Paudyal, P.; Banerjee, A.; Gupta, S.K. SCEPTRE: A Pervasive, Non-Invasive, and Programmable Gesture Recognition Technology. In Proceedings of the 21st International Conference on Intelligent User Interfaces, Sonoma, CA, USA, 7–10 March 2016; pp. 282–293. [Google Scholar]

- Laput, G.; Xiao, R.; Harrison, C. ViBand: High-Fidelity Bio-Acoustic Sensing Using Commodity Smartwatch Accelerometers. In Proceedings of the 29th Annual Symposium on User Interface Software and Technology, Tokyo, Japan, 16–19 October 2016; pp. 321–333. [Google Scholar]

- Lee, S.C.; Li, B.; Starner, T. AirTouch: Synchronizing In-air Hand Gesture and On-body Tactile Feedback to Augment Mobile Gesture Interaction. In Proceedings of the 15th Annual International Symposium on Wearable Computers, San Francisco, CA, USA, 12–15 June 2011; pp. 3–10. [Google Scholar]

- Withana, A.; Peiris, R.; Samarasekara, N.; Nanayakkara, S. zSense: Enabling Shallow Depth Gesture Recognition for Greater Input Expressivity on Smart Wearables. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, Seoul, Korea, 18–23 April 2015; pp. 3661–3670. [Google Scholar]

- Gong, J.; Yang, X.D.; Irani, P. WristWhirl: One-handed Continuous Smartwatch Input using Wrist Gestures. In Proceedings of the 29th Annual Symposium on User Interface Software and Technology, Tokyo, Japan, 16–19 October 2016; pp. 861–872. [Google Scholar]

- Guo, A.; Paek, T. Exploring tilt for no-touch, wrist-only interactions on smartwatches. In Proceedings of the 18th International Conference on Human-Computer Interaction with Mobile Devices and Services, Florence, Italy, 6–9 September 2016; pp. 17–28. [Google Scholar]

- Zhang, Y.; Harrison, C. Tomo: Wearable, Low-Cost Electrical Impedance Tomography for Hand Gesture Recognition. In Proceedings of the 28th Annual ACM Symposium on User Interface Software and Technology, Charlotte, NC, USA, 11–15 November 2015; pp. 167–173. [Google Scholar]

- Wen, H.; Ramos Rojas, J.; Dey, A.K. Serendipity: Finger Gesture Recognition using an Off-the-Shelf Smartwatch. In Proceedings of the 34th Annual ACM Conference on Human Factors in Computing Systems, Santa Clara, CA, USA, 7–12 May 2016; pp. 3847–3851. [Google Scholar]

- Paudyal, P.; Lee, J.; Banerjee, A.; Gupta, S.K. DyFAV: Dynamic Feature Selection and Voting for Real-time Recognition of Fingerspelled Alphabet Using Wearables. In Proceedings of the 22nd International Conference on Intelligent User Interfaces, Limassol, Cyprus, 13–16 March 2017; pp. 457–467. [Google Scholar]

- Zhang, C.; Bedri, A.; Reyes, G.; Bercik, B.; Inan, O.T.; Starner, T.E.; Abowd, G.D. TapSkin: Recognizing On-Skin Input for Smartwatches. In Proceedings of the 2016 ACM on Interactive Surfaces and Spaces, Niagara Falls, ON, Canada, 6–9 November 2016; pp. 13–22. [Google Scholar]

- Laput, G.; Xiao, R.; Chen, X.A.; Hudson, S.E.; Harrison, C. Skin buttons: Cheap, small, low-powered and clickable fixed-icon laser projectors. In Proceedings of the 27th Annual ACM Symposium on User Interface Software and Technology, Honolulu, HI, USA, 5–8 October 2014; pp. 389–394. [Google Scholar]

- Ogata, M.; Imai, M. SkinWatch: Skin Gesture Interaction for Smart Watch. In Proceedings of the 6th Augmented Human International Conference, Singapore, 9–11 March 2015; pp. 21–24. [Google Scholar]

- Chun, J.; Kim, S.; Dey, A.K. Usability Evaluation of Smart Watch UI/UX using Experience Sampling Method (ESM). Int. J. Ind. Ergonom. 2017. submitted for publication. [Google Scholar]

- Yoon, H.; Park, S.H.; Lee, K.T. Lightful User Interaction on Smart Wearables. Pers. Ubiquit. Comput. 2016, 20, 973–984. [Google Scholar] [CrossRef]

- Lee, J.E.; Yoon, H.; Park, S.H.; Lee, K.T. Complete as You Go: A Constructive Korean Text Entry Method for Smartwatches. Proceedings of 2016 International Conference on Information and Communication Technology Convergence, Jeju Island, Korea, 19–21 October 2016; pp. 1185–1187. [Google Scholar]

- Xiao, R.; Laput, G.; Harrison, C. Expanding the Input Expressivity of Smartwatches with Mechanical Pan, Twist, Tilt and Click. In Proceedings of the 32nd Annual ACM Conference on Human Factors in Computing Systems, Toronto, ON, Canada, 26 April–1 May 2014; pp. 193–196. [Google Scholar]

| Text Entry Event | Joystick Input | Scroll/Swipe Event | Joystick Input |
|---|---|---|---|
| Selector Navigator Left | NC Left | Scroll Up | NC Up |
| Selector Navigator Right | NC Right | Scroll Down | NC Down |
| Selector Confirmation Step Up | NC Up | Item Selection | Short Click |
| Character Completion | Short Click | Swipe Left | NC Left |
| Undo Last Selection | NC Down ×1 | Swipe Right | NC Right |
| Undo All Selection | NC Down ×2 | | |
| Delete Last Character | NC Down ×3 | | |
| Space | Click State Right | | |
| Mode Switch | Long Click | | |
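
The joystick mapping in the table above can be read as a per-mode lookup from a joystick input (plus a repeat count for the "×N" entries) to a UI event. The following is only a minimal sketch of that reading, not the authors' implementation: the mode names `text_entry` and `scroll_swipe`, the function `resolve_event`, and the idea of passing a repeat count are all assumptions introduced for illustration, while the input and event labels are copied from the table.

```python
from typing import Dict, Optional, Tuple

# Keys are (joystick input, repeat count); values are event names from the table.
# The repeat count models the "x1/x2/x3" entries and is an illustrative assumption.
Mapping = Dict[Tuple[str, int], str]

TEXT_ENTRY: Mapping = {
    ("NC Left", 1): "Selector Navigator Left",
    ("NC Right", 1): "Selector Navigator Right",
    ("NC Up", 1): "Selector Confirmation Step Up",
    ("Short Click", 1): "Character Completion",
    ("NC Down", 1): "Undo Last Selection",
    ("NC Down", 2): "Undo All Selection",
    ("NC Down", 3): "Delete Last Character",
    ("Click State Right", 1): "Space",
    ("Long Click", 1): "Mode Switch",
}

SCROLL_SWIPE: Mapping = {
    ("NC Up", 1): "Scroll Up",
    ("NC Down", 1): "Scroll Down",
    ("Short Click", 1): "Item Selection",
    ("NC Left", 1): "Swipe Left",
    ("NC Right", 1): "Swipe Right",
}

# Hypothetical mode names; the table only distinguishes the two column groups.
MAPPINGS: Dict[str, Mapping] = {
    "text_entry": TEXT_ENTRY,
    "scroll_swipe": SCROLL_SWIPE,
}


def resolve_event(mode: str, joystick_input: str, repeat: int = 1) -> Optional[str]:
    """Return the event mapped to an input in the given mode, or None if unmapped."""
    return MAPPINGS[mode].get((joystick_input, repeat))


if __name__ == "__main__":
    print(resolve_event("text_entry", "NC Down", 3))
    print(resolve_event("scroll_swipe", "Short Click"))
```

Keeping one dictionary per mode makes it visible that the same physical input (for example NC Up) resolves to different events depending on the active task.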

| Usability Principle | Question Item |
|---|---|
| Information Display | ID: Information displayed on the device is well organized and specific enough to understand the meaning. |
| | ID: Visual information (icons, symbols, UI elements) is easily identified. |
| | ID: Texts displayed on the screen are clear and readable. |
| | ID: Vibrations of the device are clearly presented and thus easily identifiable. |
| Control | Ctrl: I can easily complete the task using the prototype UI. |
| | Ctrl: I can easily find and reach a target function (an app or interface element) whenever I want. |
| | Ctrl: The device reacts to my input precisely and accurately. |
| | Ctrl: The device provides feedback that allows me to follow the status of the device or the result of the task that I’ve conducted. |
| | Ctrl: I am able to easily undo or redo the same task whenever I make an error. |
| Learnability | Lrn: It is easy to remember task procedures and repeat them. |
| | Lrn: I can easily predict interaction results and the device actually provides result that I’m expecting from it. |
| | Lrn: The device is intuitively designed so that I can easily figure out how to use the device without reading through its manual. |
| Preference | P: I like the look and the feel of the prototype UI. |
| | P: Using the prototype UI is comfortable. |
| | P: Overall, I’m satisfied with the prototype UI. |

| Prototype UI | Task | Initial Version | Latest Version | |
|---|---|---|---|---|
| Baseline UI | Number Entry | Same | Same | - |
| | | NASA-TLX | | |
| | Scrolling | Random order | Alphabetical order | UXA |
| | | NASA-TLX | NASA-TLX | |
| | Swiping | Same | Same | - |
| | | NASA-TLX | | |
| | Usability Principle Evaluation | | | |
| Joystick UI | Number Entry | On tablet | On watch with feedback | UXA |
| | | NASA-TLX | NASA-TLX | |
| | Scrolling | Random order | Alphabetical order; list loop-around | UXA |
| | | NASA-TLX | NASA-TLX | |
| | Swiping | No selector | Show selector | UXA |
| | | NASA-TLX | NASA-TLX | |
| | Usability Principle Evaluation | | | |
| Potentiometer UI | Number Entry | Different level pressures for #s | Level 1 pressure; different number areas; on wristlet | UXA |
| | | NASA-TLX | NASA-TLX | |
| | Scrolling | Random order | Alphabetical order; on wristlet | UXA |
| | | NASA-TLX | NASA-TLX | |
| | Swiping | Level 2 pressure level | Level 1 pressure level; on wristlet | UXA |
| | | NASA-TLX | NASA-TLX | |
| | Usability Principle Evaluation | | | |
| Motion UI | Scrolling | Random order; flick for multi-scroll | Alphabetical order; flick for auto-scroll | UXA |
| | | NASA-TLX | NASA-TLX | |
| | Swiping | No selector | Show selector | UXA |
| | | NASA-TLX | NASA-TLX | |
| | Usability Principle Evaluation | | | |
| Contactless UI | Number Entry | Same | Same | UXA |
| | | NASA-TLX | | |
| | Usability Principle Evaluation | | | |

| Analysis | Phase 1 Experiment (Iterative Design) | Phase 2 Experiment (Comparative Evaluation) | Korean Text Entry |
|---|---|---|---|
| UX assessment | 1130 | 90 | 42 |
| Task workload assessment | 372 | 220 | 15 |
| Usability principle evaluation | 160 | 40 | 15 |
| Response time (ms) | 1133 | 196 | 45 |
| Response accuracy rate (%) | 1139 | 197 | 45 |

| Task | Frequently | Never or Rarely |
|---|---|---|
| Text Entry Task | 88.6% | 2.3% |
| Scrolling Task | 93.2% | 2.3% |
| Swiping Task | 79.5% (least) | 11.4% (most) |

| Task | Smartphone M | Smartphone SD | Smartwatch M | Smartwatch SD |
|---|---|---|---|---|
| Text Entry Task | 1.45 (highest) | 0.70 | 2.55 (lowest) | 0.76 |
| Scrolling Task | 1.89 | 0.58 | 1.70 | 0.70 |
| Swiping Task | 2.66 | 0.68 | 1.75 | 0.72 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Yoon, H.; Park, S.-H.; Lee, K.-T.; Park, J.W.; Dey, A.K.; Kim, S. A Case Study on Iteratively Assessing and Enhancing Wearable User Interface Prototypes. Symmetry 2017, 9, 114. https://doi.org/10.3390/sym9070114