Norm Penalized Joint-Optimization NLMS Algorithms for Broadband Sparse Adaptive Channel Estimation

Abstract

1. Introduction

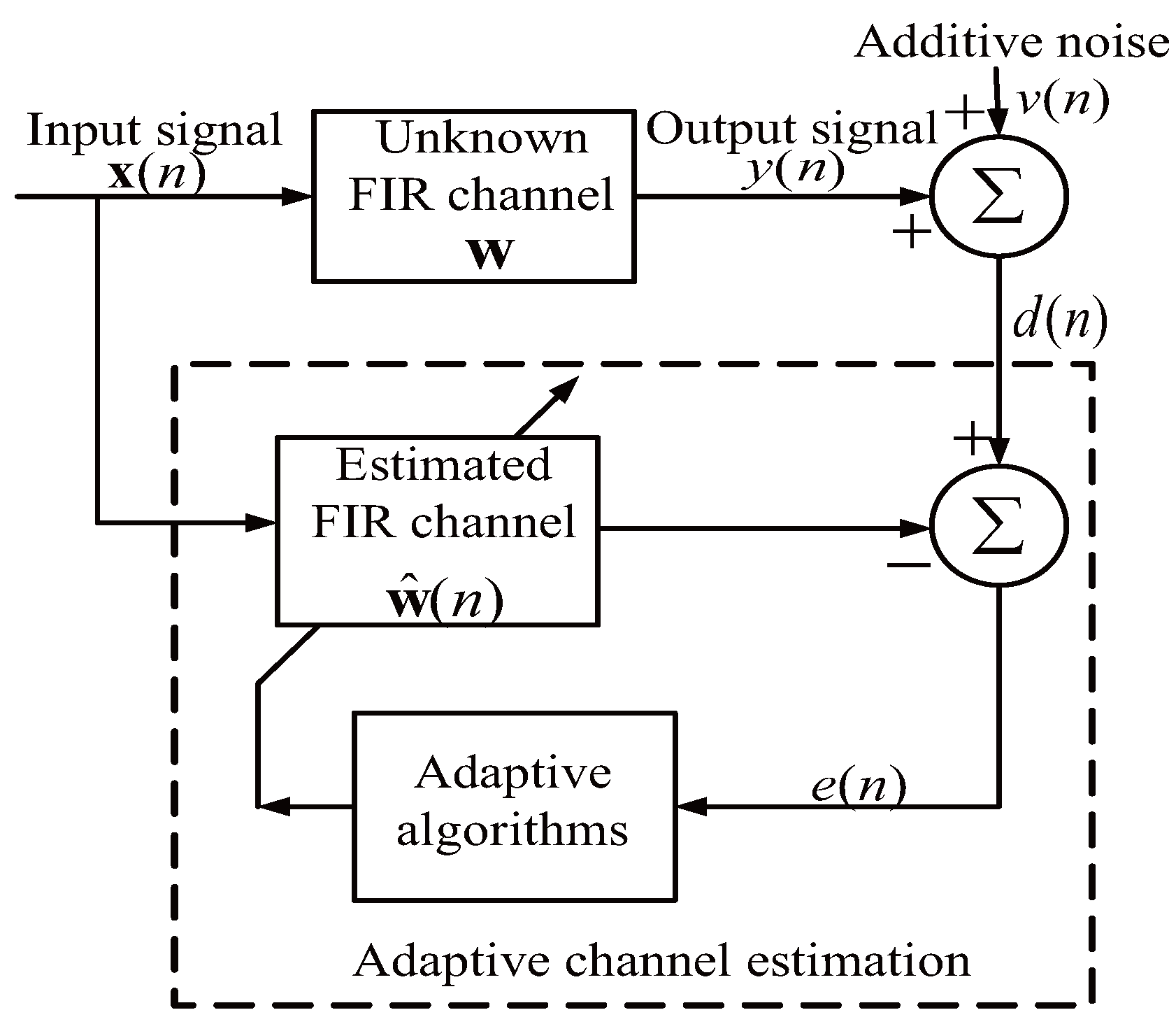

2. NLMS-Based Sparse Adaptive Channel Estimation Algorithm

3. Proposed Sparse Joint-Optimization NLMS Algorithms

- (1) Two optimized algorithms with zero attractors, ZAJO-NLMS and RZAJO-NLMS, have been proposed for sparse channel estimation in the context of the state variable model.

- (2) The proposed ZAJO-NLMS and RZAJO-NLMS algorithms are realized by combining the joint-optimization method with zero-attraction techniques to minimize the channel estimation misalignment.

- (3)

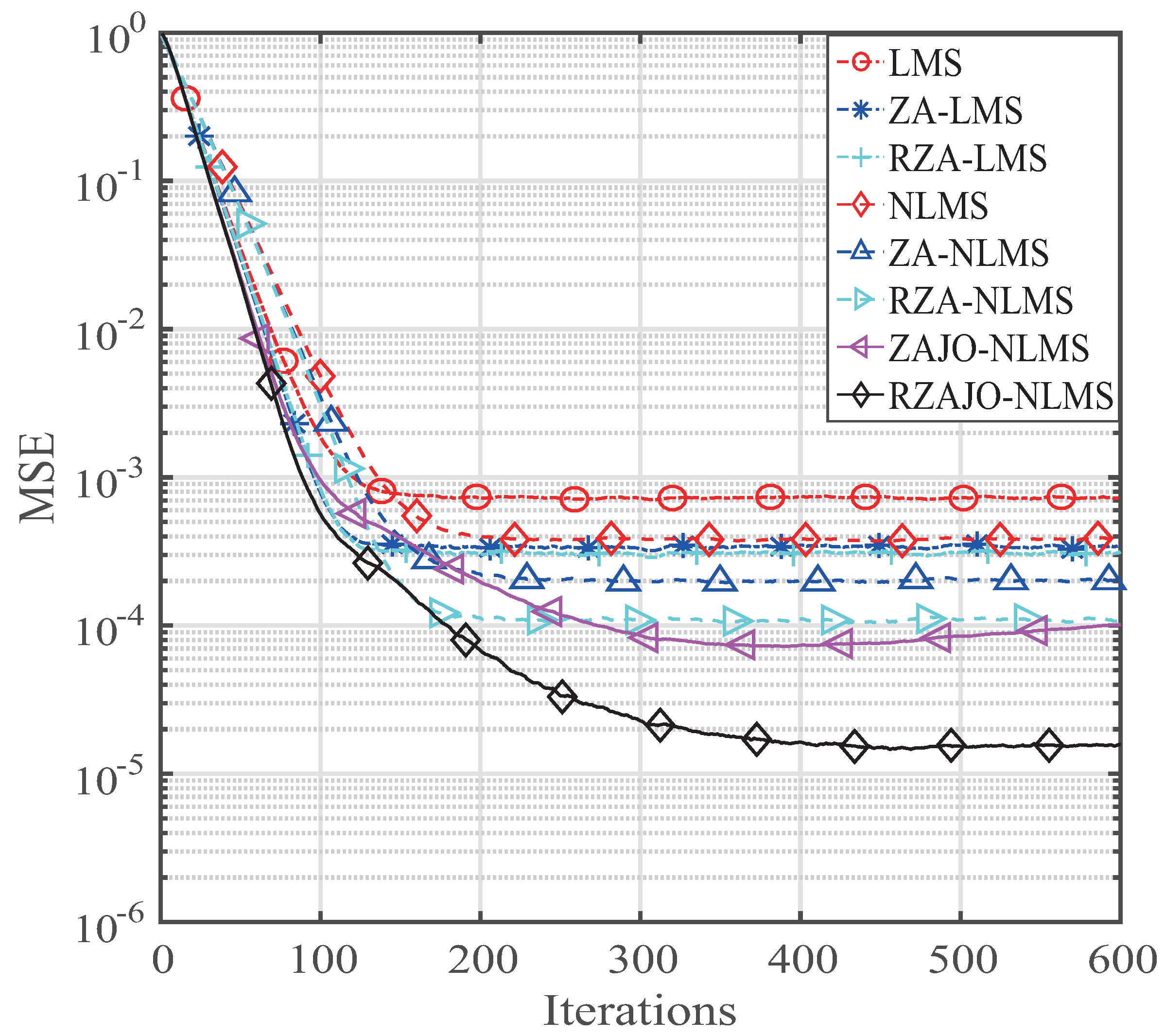

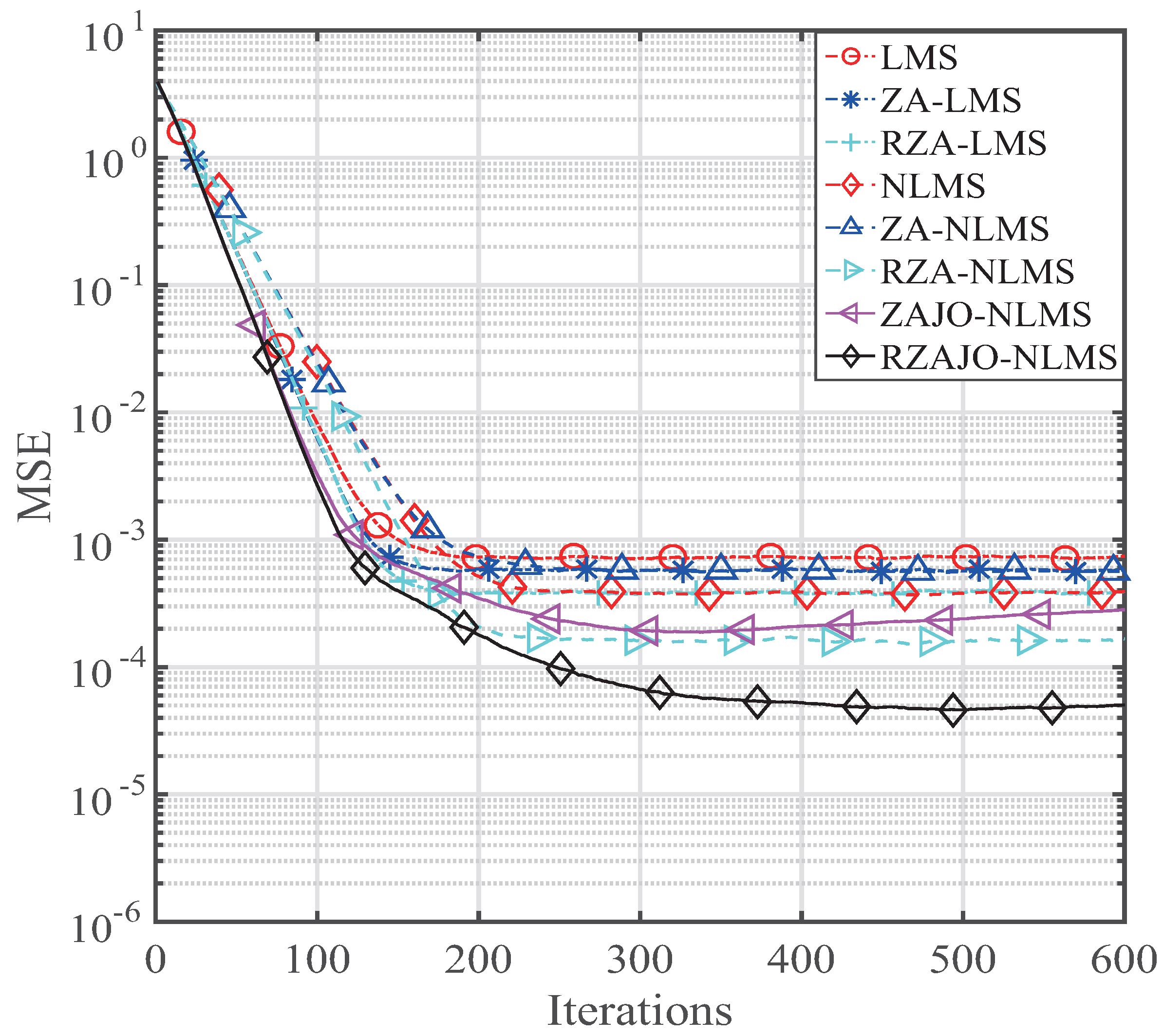

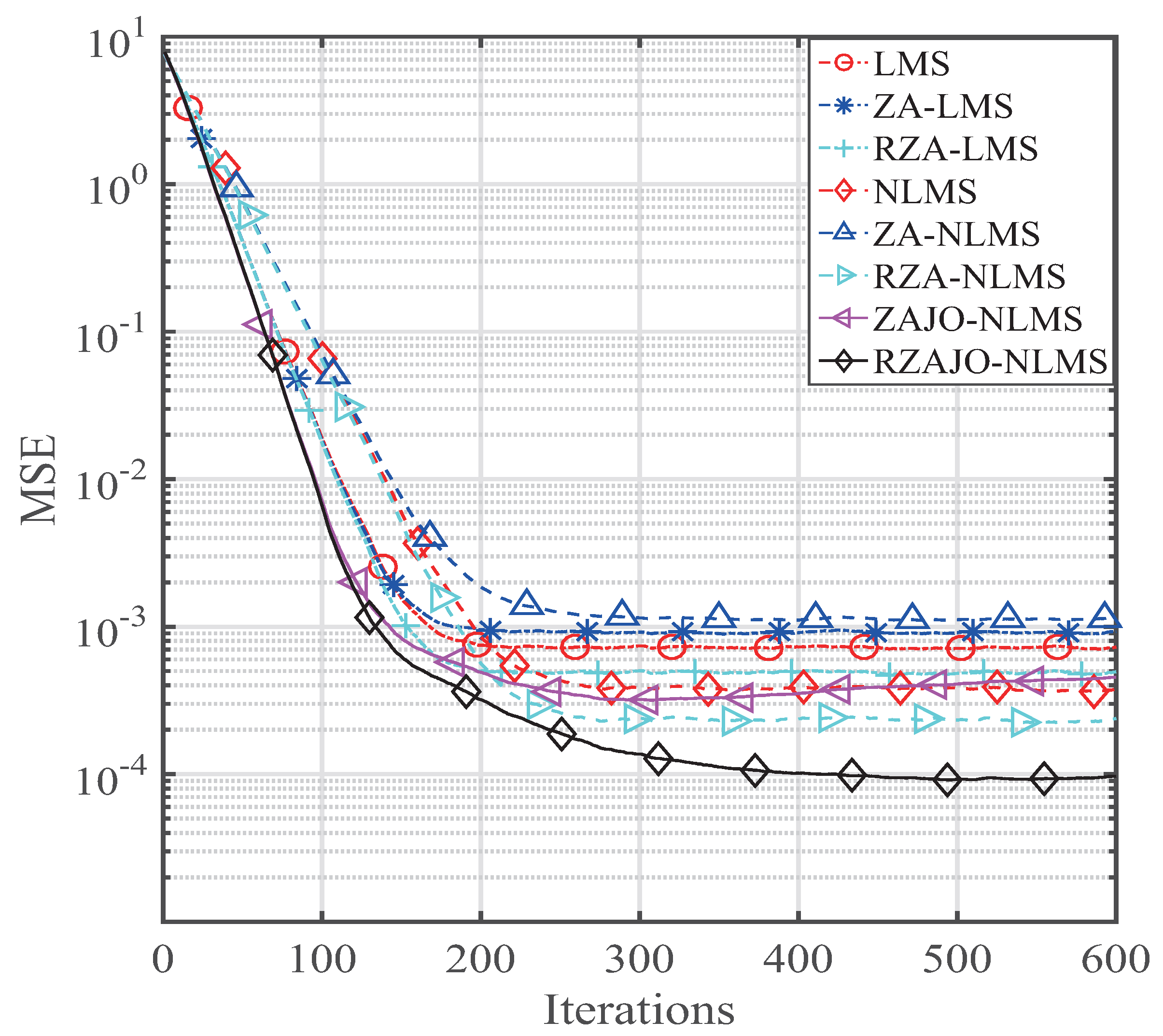

- The behaviors of the proposed ZAJO-NLMS and RZAJO-NLMS algorithms are evaluated for estimating sparse channels.

- (4) Owing to the joint optimization, which effectively adjusts both the step size and the regularization parameter, the ZAJO-NLMS and RZAJO-NLMS algorithms achieve both faster convergence and lower misalignment than the ZA-NLMS and RZA-NLMS algorithms. In future work, we will develop an algorithm that optimizes the zero-attractor terms.
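The joint optimization and zero attraction described in the list above can be written out as follows. This is a reconstruction under stated assumptions, not the paper's exact derivation. We assume the random-walk state variable model used in the JO-NLMS literature, with an $L$-tap channel $\mathbf{h}(n)$, process noise variance $\sigma_w^2$, measurement noise variance $\sigma_v^2$, and misalignment $m(n) = E\{\|\mathbf{h}(n) - \hat{\mathbf{h}}(n)\|^2\}$. The attractor strength $\rho$, the reweighting constant $\varepsilon$, and the placement of the attractor term are also our assumptions.

$$
\mathbf{h}(n) = \mathbf{h}(n-1) + \mathbf{w}(n), \qquad
y(n) = \mathbf{x}^{T}(n)\,\mathbf{h}(n) + v(n)
$$

$$
e(n) = y(n) - \mathbf{x}^{T}(n)\,\hat{\mathbf{h}}(n-1), \qquad
\delta(n) = \frac{L\sigma_v^2}{m(n-1) + L\sigma_w^2}
$$

$$
\hat{\mathbf{h}}(n) = \hat{\mathbf{h}}(n-1) + \frac{\mathbf{x}(n)\,e(n)}{\mathbf{x}^{T}(n)\mathbf{x}(n) + \delta(n)} - \rho\,\mathbf{z}(n)
$$

$$
m(n) = \left[1 - \frac{\mathbf{x}^{T}(n)\mathbf{x}(n)}{L\left(\mathbf{x}^{T}(n)\mathbf{x}(n) + \delta(n)\right)}\right]\left[m(n-1) + L\sigma_w^2\right]
$$

$$
\mathbf{z}(n) = \operatorname{sgn}\big(\hat{\mathbf{h}}(n-1)\big) \;\text{(ZA)}, \qquad
\mathbf{z}(n) = \frac{\operatorname{sgn}\big(\hat{\mathbf{h}}(n-1)\big)}{1 + \varepsilon\,|\hat{\mathbf{h}}(n-1)|} \;\text{(RZA, element-wise)}
$$

In this sketch the step size and the regularization are set jointly. The a priori misalignment covariance is approximated by $\frac{m(n-1)+L\sigma_w^2}{L}\mathbf{I}$. The gain that minimizes $m(n)$ under this approximation then corresponds to a unit step size combined with the regularization $\delta(n)$ above. The $m(n)$ recursion follows from the same approximation, with the zero-attractor term neglected.

When $m(n-1)$ is large, $\delta(n)$ is small and the filter adapts quickly. As the misalignment shrinks, $\delta(n)$ grows and adaptation slows, which trades convergence speed against steady-state misalignment.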

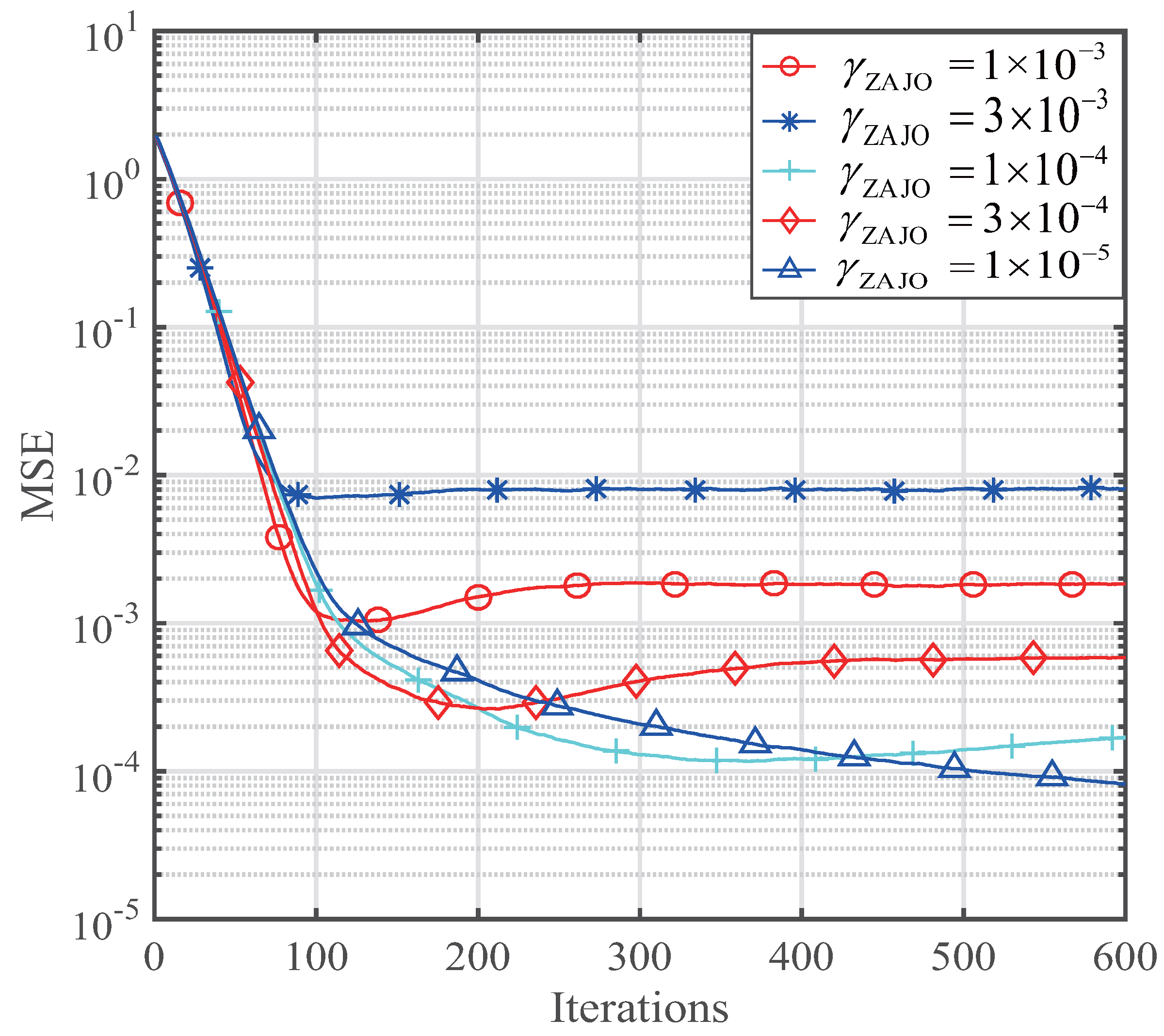
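Below is a minimal, dependency-free Python sketch of a zero-attracting NLMS update whose step size and regularization are set jointly. It makes several assumptions:

- It uses the random-walk model with a scalar misalignment estimate.
- The regularization is $\delta(n) = L\sigma_v^2/(m(n-1)+L\sigma_w^2)$ with a unit step size.
- A ZA (sign) or RZA (reweighted sign) attractor is applied at every iteration.

The helper names `attractor`, `zajo_nlms`, `simulate`, and `misalignment_db` are hypothetical, as are the test channel and all parameter values (`rho`, `eps`, `sigma_w2`, `m0`). They are illustrative choices, not the paper's settings.

```python
import math
import random


def attractor(w, kind, eps):
    """Hypothetical helper: zero-attractor direction for one coefficient.

    'za'  -> sgn(w)                    (l1-norm, ZA-style attractor)
    'rza' -> sgn(w) / (1 + eps * |w|)  (reweighted l1, RZA-style attractor)
    """
    s = (w > 0) - (w < 0)  # sign of w as -1, 0 or +1
    if kind == "za":
        return float(s)
    if kind == "rza":
        return s / (1.0 + eps * abs(w))
    raise ValueError("kind must be 'za' or 'rza'")


def zajo_nlms(x_seq, d_seq, L, sigma_v2, sigma_w2, rho, kind="za", eps=10.0, m0=1.0):
    """Sketch of a zero-attracting NLMS with jointly set step size/regularization.

    Assumes the random-walk model h(n) = h(n-1) + w(n) and a scalar
    approximation of the misalignment covariance; returns the final estimate.
    """
    h_hat = [0.0] * L
    buf = [0.0] * L  # regressor [x(n), x(n-1), ..., x(n-L+1)]
    m = m0           # running estimate of E||h(n) - h_hat(n)||^2
    for xn, dn in zip(x_seq, d_seq):
        buf = [xn] + buf[:-1]
        e = dn - sum(b * h for b, h in zip(buf, h_hat))  # a priori error
        energy = sum(b * b for b in buf)                  # x^T(n) x(n)
        q = m + L * sigma_w2                              # predicted misalignment
        delta = L * sigma_v2 / q                          # joint regularization, unit step
        gain = e / (energy + delta)
        # Gradient step plus zero attraction; the attractor is evaluated at h_hat(n-1).
        h_hat = [h + gain * b - rho * attractor(h, kind, eps)
                 for h, b in zip(h_hat, buf)]
        m = (1.0 - energy / (L * (energy + delta))) * q  # misalignment recursion
    return h_hat


def simulate(h, n_samples, noise_std, seed):
    """Hypothetical test setup: white Gaussian input through FIR channel h plus noise."""
    rng = random.Random(seed)
    L = len(h)
    x = [rng.gauss(0.0, 1.0) for _ in range(n_samples)]
    d, buf = [], [0.0] * L
    for xn in x:
        buf = [xn] + buf[:-1]
        d.append(sum(b * c for b, c in zip(buf, h)) + rng.gauss(0.0, noise_std))
    return x, d


def misalignment_db(h, h_hat):
    """Normalized misalignment 10*log10(||h - h_hat||^2 / ||h||^2)."""
    num = sum((a - b) ** 2 for a, b in zip(h, h_hat))
    return 10.0 * math.log10(num / sum(a * a for a in h))


if __name__ == "__main__":
    h = [0.0] * 16
    h[2], h[9] = 1.0, -0.5  # illustrative sparse channel with 2 active taps
    x, d = simulate(h, 3000, noise_std=0.01, seed=0)
    for kind in ("za", "rza"):
        est = zajo_nlms(x, d, len(h), sigma_v2=1e-4, sigma_w2=1e-6, rho=1e-5, kind=kind)
        print(kind, f"{misalignment_db(h, est):.1f} dB")
```

Running the script prints the final normalized misalignment for each attractor type. No particular value is claimed here, because it depends on the seed and parameters. Plain lists keep the sketch free of dependencies; a NumPy version would vectorize the inner products.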

4. Results and Discussion

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wang, Y.; Li, Y. Norm Penalized Joint-Optimization NLMS Algorithms for Broadband Sparse Adaptive Channel Estimation. Symmetry 2017, 9, 133. https://doi.org/10.3390/sym9080133
